AwesomeLLM4SE

A Survey on Large Language Models for Software Engineering

Stars: 108

README:

Title: A Survey on Large Language Models for Software Engineering

Authors: Quanjun Zhang, Chunrong Fang, Yang Xie, Yaxin Zhang, Yun Yang, Weisong Sun, Shengcheng Yu, Zhenyu Chen

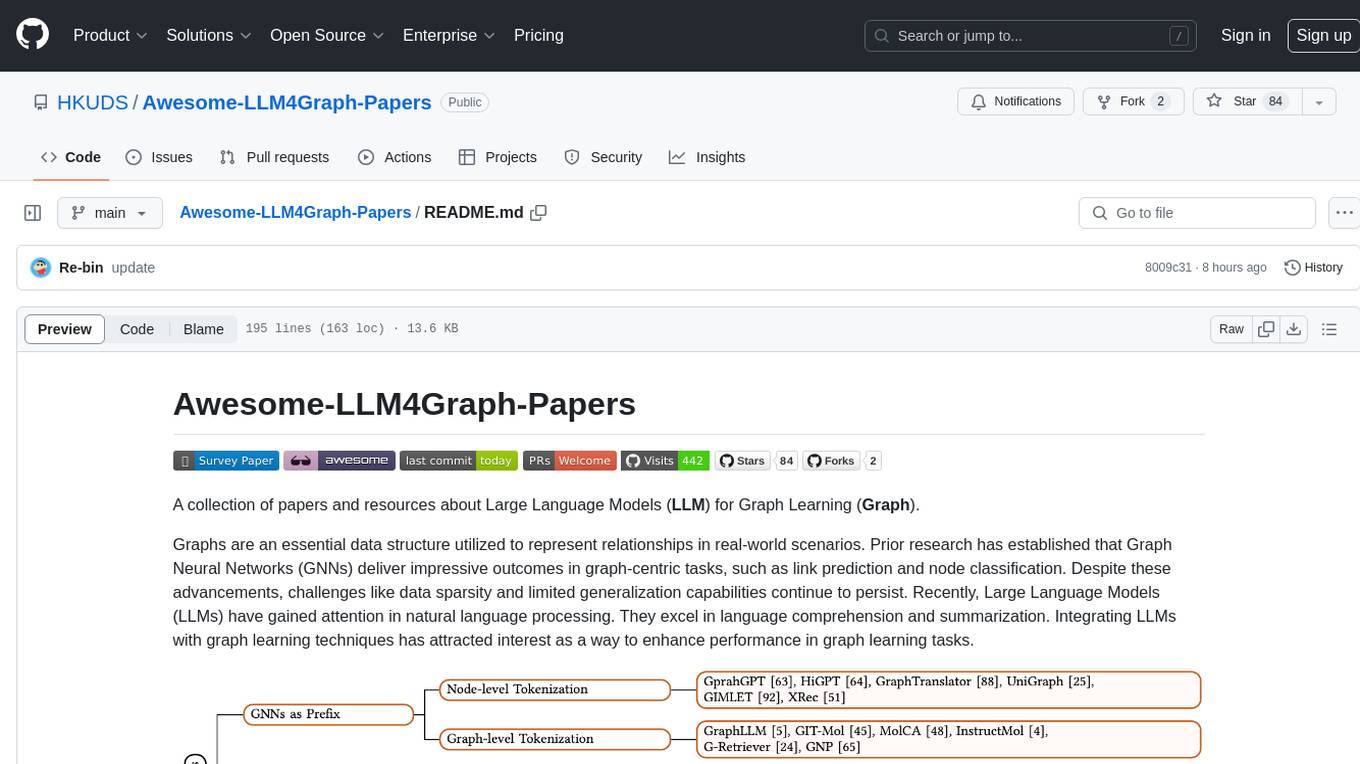

A collection of academic publications and methodologies covering the classification of pre-training tasks and downstream tasks for Code Large Language Models, and the application of Large Language Models in the field of Software Engineering (LLM4SE).

We welcome all researchers to contribute to this repository and to help advance knowledge of Large Language Models for Software Engineering. If you know of any related references, please let us know via a GitHub issue or pull request.

@article{zhang2023survey,

title={A Survey on Large Language Models for Software Engineering},

author={Zhang, Quanjun and Fang, Chunrong and Xie, Yang and Zhang, Yaxin and Yang, Yun and Sun, Weisong and Yu, Shengcheng and Chen, Zhenyu},

journal={arXiv preprint arXiv:2312.15223},

year={2023}

}

- 🔥Effi-Code: Unleashing Code Efficiency in Language Models[2024-arXiv]

- 🔥Agent-as-a-Judge: Evaluate Agents with Agents[2024-arXiv]

- 🔥Unraveling the Potential of Large Language Models in Code Translation: How Far Are We?[2024-arXiv]

- 🔥Generalized Adversarial Code-Suggestions: Exploiting Contexts of LLM-based Code-Completion[2024-arXiv]

- 🔥A Model Is Not Built By A Single Prompt: LLM-Based Domain Modeling With Question Decomposition[2024-arXiv]

- 🔥Test smells in LLM-Generated Unit Tests[2024-arXiv]

- 🔥AutoComply: Automating Requirement Compliance in Automotive Integration Testing[2024-CSE]

- 🔥TableAnalyst: an LLM-agent for tabular data analysis-Implementation and evaluation on tasks of varying complexity[2024-CSE]

- Using Learning from Answer Sets for Robust Question Answering with LLM[2024-LPNMR]

- Mitigating Gender Bias in Code Large Language Models via Model Editing[2024-arXiv]

- 🔥Exploring and Lifting the Robustness of LLM-powered Automated Program Repair with Metamorphic Testing[2024-arXiv]

- 🔥Checker Bug Detection and Repair in Deep Learning Libraries[2024-arXiv]

- 🔥Exploring the Effectiveness of LLMs in Automated Logging Statement Generation: An Empirical Study[2024-TSE]

- 🔥zsLLMCode: An Effective Approach for Functional Code Embedding via LLM with Zero-Shot Learning[2024-arXiv]

- 🔥Unlocking Memorization in Large Language Models with Dynamic Soft Prompting[2024-arXiv]

- 🔥PromSec: Prompt Optimization for Secure Generation of Functional Source Code with Large Language Models (LLMs)[2024-arXiv]

- 🔥Program Slicing in the Era of Large Language Models[2024-arXiv]

- 🔥PeriGuru: A Peripheral Robotic Mobile App Operation Assistant based on GUI Image Understanding and Prompting with LLM[2024-arXiv]

- Statically Contextualizing Large Language Models with Typed Holes[2024-arXiv]

- Agent-DocEdit: Language-Instructed LLM Agent for Content-Rich Document Editing[2024-COLM]

- CodeGraph: Enhancing Graph Reasoning of LLMs with Code[2024-arXiv]

- DataSculpt: Crafting Data Landscapes for LLM Post-Training through Multi-objective Partitioning[2024-arXiv]

- Co-Learning: Code Learning for Multi-Agent Reinforcement Collaborative Framework with Conversational Natural Language Interfaces[2024-arXiv]

- Enhancing Source Code Security with LLMs: Demystifying The Challenges and Generating Reliable Repairs[2024-arXiv]

- Codec Does Matter: Exploring the Semantic Shortcoming of Codec for Audio Language Model[2024-arXiv]

- 👏 Citation

- 📖 Contents

- 🤖LLMs of Code

- 💻SE with LLMs

- 📋Software Requirements & Design

- Ambiguity detection

- Class Diagram Derivation

- GUI Layouts

- Requirement Classification

- Requirement Completeness Detection

- Requirement Elicitation

- Requirement Engineering

- Requirement Prioritization

- Requirement Summarization

- Requirement Traceability

- Requirements Quality Assurance

- Software Modeling

- Specification Generation

- Specifications Repair

- Use Case Generation

- 🛠️Software Development

- API Documentation Smells

- API Inference

- API recommendation

- Code Comment Completion

- Code Completion

- Code Compression

- Code Editing

- Code Generation

- Code Representation

- Code Search

- Code Summarization

- Code Translation

- Code Understanding

- Continuous Development Optimization

- Data Augmentation

- Identifier Normalization

- Microservice Recommendation

- Neural Architecture search

- Program Synthesis

- SO Post Title Generation

- Type Inference

- Unified Development

- Code recommendation

- Control flow graph generation

- Data analysis

- Method name generation

- Project Planning

- SO Question Answering

- 🧪Software Testing

- Formal verification

- Invariant Prediction

- proof generation

- Resource leak detection

- taint analysis

- Actionable Warning Identification

- Adversarial Attack

- API Misuse Detection

- API Testing

- Assertion Generation

- Binary Code Similarity Detection

- Code Execution

- Decompilation

- Failure-Inducing Testing

- Fault Localization

- Fuzzing

- GUI Testing

- Indirect Call Analysis

- Mutation Testing

- NLP Testing

- Penetration Testing

- Program Analysis

- Program Reduction

- Property-based Testing

- Simulation Testing

- Static Analysis

- Static Warning Validating

- Test Generation

- Test Suite Minimization

- Vulnerability Detection

- Vulnerable Dependency Alert Detection

- Theorem Proving

- 📱Software Maintenance

- Android permissions

- APP Review Analysis

- Bug Report Detection

- Bug Reproduction

- Bug Triaging

- Code Clone Detection

- Code Coverage Prediction

- Code Evolution

- Code Porting

- Code Refactoring

- Code Review

- Code Smells

- Commit Message Generation

- Compiler Optimization

- Debugging

- Exception Handling Recommendation

- Flaky Test Prediction

- Incident Management

- Issue Labeling

- Log Analysis

- Log Anomaly Detection

- Malware Tracker

- Mobile app crash detection

- Outage Understanding

- Patch Correctness Assessment

- Privacy Policy

- Program Repair

- Report Severity Prediction

- Sentiment analysis

- Tag Recommendation

- Technical Debt Management

- Test Update

- Traceability Link Recovery

- Vulnerability Repair

- 📈Software Management

- 📋Software Requirements & Design

- 🧩Integration

- 📱Related Surveys

- CuBERT: Learning and evaluating contextual embedding of source code [2020-ICML] GitHub

- CodeBERT: CodeBERT: A Pre-Trained Model for Programming and Natural Languages [2020-EMNLP] GitHub

- GraphCodeBERT: GraphCodeBERT: Pre-training Code Representations with Data Flow [2021-ICLR] GitHub

- SOBertBase: Stack Over-Flowing with Results: The Case for Domain-Specific Pre-Training Over One-Size-Fits-All Models [2023-arXiv]

- CodeSage: Code Representation Learning At Scale [2024-ICLR] GitHub

- CoLSBERT: Scaling Laws Behind Code Understanding Model [2024-arXiv] GitHub

- PyMT5: PyMT5: multi-mode translation of natural language and Python code with transformers [2020-EMNLP] GitHub

- CodeT5: CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation [2021-EMNLP] GitHub

- PLBART: Unified Pre-training for Program Understanding and Generation [2021-NAACL] GitHub

- T5-Learning: Studying the usage of text-to-text transfer transformer to support code-related tasks [2021-ICSE] GitHub

- CodeRL: CodeRL: Mastering Code Generation through Pretrained Models and Deep Reinforcement Learning [2022-NeurIPS] GitHub

- CoditT5: CoditT5: Pretraining for Source Code and Natural Language Editing [2022-ASE] GitHub

- JuPyT5: Training and Evaluating a Jupyter Notebook Data Science Assistant [2022-arXiv] GitHub

- SPT-Code: Sequence-to-Sequence Pre-Training for Learning Source Code Representations [2022-ICSE] GitHub

- UnixCoder: UniXcoder: Unified Cross-Modal Pre-training for Code Representation [2022-ACL] GitHub

- AlphaCode: Competition-Level Code Generation with AlphaCode [2022-Science]

- ERNIE-Code: ERNIE-Code: Beyond English-Centric Cross-lingual Pretraining for Programming Languages [2023-ACL] GitHub

- CodeT5+: CodeT5+: Open Code Large Language Models for Code Understanding and Generation [2023-EMNLP] GitHub

- PPOCoder: Execution-based Code Generation using Deep Reinforcement Learning [2023-TMLR] GitHub

- RLTF: RLTF: Reinforcement Learning from Unit Test Feedback [2023-TMLR] GitHub

- CCT5: CCT5: A Code-Change-Oriented Pre-Trained Model [2023-FSE] GitHub

- B-Coder: B-Coder: Value-Based Deep Reinforcement Learning for Program Synthesis [2024-ICLR]

- AST-T5: AST-T5: Structure-Aware Pretraining for Code Generation and Understanding [2024-ICML] GitHub

- GrammarT5: GrammarT5: Grammar-Integrated Pretrained Encoder-Decoder Neural Model for Code [2024-ICSE] GitHub

- GPT-C: IntelliCode Compose: Code Generation using Transformer [2020-FSE]

- Codex: Evaluating large language models trained on code [2021-arXiv]

- CodeGPT: CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation [2021-NeurIPS] GitHub

- PaLM-Coder: PaLM: Scaling Language Modeling with Pathways [2022-JMLR]

- PanGu-Coder: PanGu-Coder: Program Synthesis with Function-Level Language Modeling [2022-arXiv]

- PolyCoder: A Systematic Evaluation of Large Language Models of Code [2022-ICLR] GitHub

- PyCodeGPT: CERT: Continual Pre-Training on Sketches for Library-Oriented Code Generation [2022-IJCAI] GitHub

- BLOOM: BLOOM: A 176B-Parameter Open-Access Multilingual Language Model [2022-arXiv] GitHub

- CodeShell: CodeShell Technical Report [2023-arXiv]

- PanGu-Coder2: PanGu-Coder2: LLM with Reinforcement Learning [2023-arXiv]

- Code Llama: Code llama: Open foundation models for code [2023-arXiv] GitHub

- CodeFuse: CodeFuse-13B: A Pretrained Multi-lingual Code Large Language Model [2023-ICSE] GitHub

- CodeGen: CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis [2023-ICLR] GitHub

- CodeGen2: CodeGen2: Lessons for Training LLMs on Programming and Natural Languages [2023-ICLR] GitHub

- InCoder: InCoder: A Generative Model for Code Infilling and Synthesis [2023-ICLR] GitHub

- SantaCoder: SantaCoder: don’t reach for the stars! [2023-ICLR] GitHub

- StarCoder: StarCoder: may the source be with you [2023-TMLR] GitHub

- CodeGeeX: CodeGeeX: A Pre-Trained Model for Code Generation with Multilingual Evaluations on HumanEval-X [2024-KDD] GitHub

- Lemur: Lemur: Harmonizing Natural Language and Code for Language Agents [2024-ICLR] GitHub

- Magicoder: Magicoder: Empowering Code Generation with OSS-Instruct [2024-ICML] GitHub

- OctoCoder: Octopack: Instruction tuning code large language models [2024-ICLR] GitHub

- WizardCoder: WizardCoder: Empowering Code Large Language Models with Evol-Instruct [2024-ICLR] GitHub

- AlchemistCoder: AlchemistCoder: Harmonizing and Eliciting Code Capability by Hindsight Tuning on Multi-source Data [2024-arXiv] GitHub

- AutoCoder: AutoCoder: Enhancing Code Large Language Model with AIEV-Instruct [2024-arXiv] GitHub

- CodeGemma: CodeGemma: Open Code Models Based on Gemma [2024-arXiv] GitHub

- DeepSeek-Coder: DeepSeek-Coder: When the Large Language Model Meets Programming -- The Rise of Code Intelligence [2024-arXiv] GitHub

- DeepSeek-Coder-V2: DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models in Code Intelligence [2024-arXiv] GitHub

- DolphCoder: DolphCoder: Echo-Locating Code Large Language Models with Diverse and Multi-Objective Instruction Tuning [2024-ACL] GitHub

- Granite: Granite Code Models: A Family of Open Foundation Models for Code Intelligence [2024-arXiv] GitHub

- InverseCoder: InverseCoder: Unleashing the Power of Instruction-Tuned Code LLMs with Inverse-Instruct [2024-arXiv] GitHub

- NT-Java: Narrow Transformer: Starcoder-Based Java-LM For Desktop [2024-arXiv] GitHub

- StarCoder2: StarCoder 2 and The Stack v2: The Next Generation [2024-arXiv] GitHub

- StepCoder: [2024-ACL] GitHub

- UniCoder: UniCoder: Scaling Code Large Language Model via Universal Code [2024-ACL] GitHub

- WaveCoder: WaveCoder: Widespread And Versatile Enhanced Code LLM [2024-ACL] GitHub

- XFT: XFT: Unlocking the Power of Code Instruction Tuning by Simply Merging Upcycled Mixture-of-Experts [2024-ACL] GitHub

- Automated Handling of Anaphoric Ambiguity in Requirements: A Multi-Solution Study [2022-ICSE]

- Automated requirement contradiction detection through formal logic and LLMs [2024-AUSE]

- Identification of intra-domain ambiguity using transformer-based machine learning [2022-ICSE@NLBSE]

- TABASCO: A transformer based contextualization toolkit [2022-SCP]

- ChatGPT: A Study on its Utility for Ubiquitous Software Engineering Tasks [2023-arXiv]

- LLM-based Class Diagram Derivation from User Stories with Chain-of-Thought Promptings [2024-COMPSAC]

- A hybrid approach to extract conceptual diagram from software requirements [2024-SCP]

- Data-driven prototyping via natural-language-based GUI retrieval [2023-AUSE]

- Evaluating a Large Language Model on Searching for GUI Layouts [2023-EICS]

- Which AI Technique Is Better to Classify Requirements? An Experiment with SVM, LSTM, and ChatGPT [2023-arXiv]

- Improving Requirements Classification Models Based on Explainable Requirements Concerns [2023-REW]

- NoRBERT: Transfer Learning for Requirements Classification [2020-RE]

- Non Functional Requirements Identification and Classification Using Transfer Learning Model [2023-IEEE Access]

- PRCBERT: Prompt Learning for Requirement Classification using BERT-based Pretrained Language Models [2022-ASE]

- Pre-trained Model-based NFR Classification: Overcoming Limited Data Challenges [2023-IEEE Access]

- BERT-Based Approach for Greening Software Requirements Engineering Through Non-Functional Requirements [2023-IEEE Access]

- Improving requirements completeness: Automated assistance through large language models [2024-RE]

- Combining Prompts with Examples to Enhance LLM-Based Requirement Elicitation [2024-COMPSAC]

- Advancing Requirements Engineering through Generative AI: Assessing the Role of LLMs [2024-Generative AI]

- Lessons from the Use of Natural Language Inference (NLI) in Requirements Engineering Tasks [2024-arXiv]

- Enhancing Legal Compliance and Regulation Analysis with Large Language Models [2024-arXiv]

- MARE: Multi-Agents Collaboration Framework for Requirements Engineering [2024-arXiv]

- Multilingual Crowd-Based Requirements Engineering Using Large Language Models [2024-SBES]

- Requirements Engineering using Generative AI: Prompts and Prompting Patterns [2024-Generative AI]

- From Specifications to Prompts: On the Future of Generative LLMs in Requirements Engineering [2024-IEEE Software]

- Prioritizing Software Requirements Using Large Language Models [2024-arXiv]

- A Transformer-based Approach for Abstractive Summarization of Requirements from Obligations in Software Engineering Contracts [2023-RE]

- Natural Language Processing for Requirements Traceability [2024-arXiv]

- Traceability Transformed: Generating More Accurate Links with Pre-Trained BERT Models [2021-ICSE]

- Leveraging LLMs for the Quality Assurance of Software Requirements [2024-RE]

- Leveraging Transformer-based Language Models to Automate Requirements Satisfaction Assessment [2023-arXiv]

- Supporting High-Level to Low-Level Requirements Coverage Reviewing with Large Language Models [2024-MSR]

- ChatGPT as a tool for User Story Quality Evaluation: Trustworthy Out of the Box? [2023-XP]

- Towards using Few-Shot Prompt Learning for Automating Model Completion [2022-ICSE@NIER]

- Model Generation from Requirements with LLMs: an Exploratory Study [2024-arXiv]

- Leveraging Large Language Models for Software Model Completion: Results from Industrial and Public Datasets [2024-arXiv]

- How LLMs Aid in UML Modeling: An Exploratory Study with Novice Analysts [2024-arXiv]

- Natural Language Processing-based Requirements Modeling: A Case Study on Problem Frames [2023-APSEC]

- SpecGen: Automated Generation of Formal Program Specifications via Large Language Models [2024-arXiv]

- Large Language Models Based Automatic Synthesis of Software Specifications [2023-arXiv]

- Impact of Large Language Models on Generating Software Specifications [2023-arXiv]

- Automated Repair of Declarative Software Specifications in the Era of Large Language Models [2023-arXiv]

- Experimenting a New Programming Practice with LLMs [2024-arXiv]

- Automatic Detection of Five API Documentation Smells: Practitioners’ Perspectives [2021-SANER]

- Adaptive Intellect Unleashed: The Feasibility of Knowledge Transfer in Large Language Models [2023-arXiv]

- Gorilla: Large language model connected with massive APIs [2023-arXiv]

- Measuring and Mitigating Constraint Violations of In-Context Learning for Utterance-to-API Semantic Parsing [2023-arXiv]

- Pop Quiz! Do Pre-trained Code Models Possess Knowledge of Correct API Names? [2023-arXiv]

- APIGen: Generative API Method Recommendation [2024-SANER]

- Let's Chat to Find the APIs: Connecting Human, LLM and Knowledge Graph through AI Chain [2023-ASE]

- PTM-APIRec: Leveraging Pre-trained Models of Source Code in API Recommendation [2023-TOSEM]

- CLEAR: Contrastive Learning for API Recommendation [2022-ICSE]

- Automatic recognizing relevant fragments of APIs using API references [2024-AUSE]

- ToolCoder: Teach Code Generation Models to use API search tools [2023-arXiv]

- Semantic Compression With Large Language Models [2023-SNAMS]

- On the validity of pre-trained transformers for natural language processing in the software engineering domain [2022-TSE]

- Unprecedented Code Change Automation: The Fusion of LLMs and Transformation by Example [2024-FSE]

- GrACE: Generation using Associated Code Edits [2023-FSE]

- CodeEditor: Learning to Edit Source Code with Pre-trained Models [2023-TOSEM]

- Automated Code Editing with Search-Generate-Modify [2024-ICSE]

- Coffee: Boost Your Code LLMs by Fixing Bugs with Feedback [2023-arXiv]

- 🔥Effi-Code: Unleashing Code Efficiency in Language Models[2024-arXiv]

- 🔥Agent-as-a-Judge: Evaluate Agents with Agents[2024-arXiv]

- 🔥AutoAPIEval: A Framework for Automated Evaluation of LLMs in API-Oriented Code Generation[2024-arXiv]

- 🔥AutoSafeCoder: A Multi-Agent Framework for Securing LLM Code Generation through Static Analysis and Fuzz Testing[2024-arXiv]

- 🔥A Model Is Not Built By A Single Prompt: LLM-Based Domain Modeling With Question Decomposition[2024-arXiv]

- 🔥RethinkMCTS: Refining Erroneous Thoughts in Monte Carlo Tree Search for Code Generation[2024-arXiv]

- 🔥Python Symbolic Execution with LLM-powered Code Generation[2024-arXiv]

- LLMs in Web Development: Evaluating LLM-Generated PHP Code Unveiling[2024-SAFECOMP]

- Multi-Programming Language Ensemble for Code Generation in Large Language Model[2024-arXiv]

- Planning In Natural Language Improves LLM Search For Code Generation[2024-arXiv]

- Large language model evaluation for high‐performance computing software development[2024-CCP]

- STREAM: Embodied Reasoning through Code Generation[2024-ICML]

- Environment Curriculum Generation via Large Language Models[2024-CoRL]

- Evaluation Metrics in LLM Code Generation[2024-TSD]

- Benchmarking LLM Code Generation for Audio Programming with Visual Dataflow Languages[2024-arXiv]

- An Empirical Study on Self-correcting Large Language Models for Data Science Code Generation[2024-arXiv]

- NL2ProcessOps: Towards LLM-Guided Code Generation for Process Execution[2024-arXiv]

- Balancing Security and Correctness in Code Generation: An Empirical Study on Commercial Large Language Models[2024-IEEE TETCI]

- Insights from Benchmarking Frontier Language Models on Web App Code Generation[2024-arXiv]

- Towards Efficient Fine-tuning of Pre-trained Code Models: An Experimental Study and Beyond [2023-ISSTA]

- Dataflow-Guided Retrieval Augmentation for Repository-Level Code Completion [2024-ACL]

- An Empirical Study on the Usage of BERT Models for Code Completion [2021-MSR]

- An Empirical Study on the Usage of Transformer Models for Code Completion [2021-TSE]

- R2C2-Coder: Enhancing and Benchmarking Real-world Repository-level Code Completion Abilities of Code Large Language Models [2024-arXiv]

- A Static Evaluation of Code Completion by Large Language Models [2023-ACL]

- CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion [2023-NeurIPS]

- Large Language Models of Code Fail at Completing Code with Potential Bugs [2024-NeurIPS]

- Piloting Copilot and Codex: Hot Temperature, Cold Prompts, or Black Magic? [2022-arXiv]

- De-Hallucinator: Iterative Grounding for LLM-Based Code Completion [2024-arXiv]

- Evaluation of LLMs on Syntax-Aware Code Fill-in-the-Middle Tasks [2024-ICML]

- Codefill: Multi-token code completion by jointly learning from structure and naming sequences [2022-ICSE]

- ZS4C: Zero-Shot Synthesis of Compilable Code for Incomplete Code Snippets using ChatGPT [2024-arXiv]

- Automatic detection and analysis of technical debts in peer-review documentation of r packages [2022-SANER]

- Toward less hidden cost of code completion with acceptance and ranking models [2021-ICSME]

- Enhancing LLM-Based Coding Tools through Native Integration of IDE-Derived Static Context [2024-arXiv]

- RepoBench: Benchmarking Repository-Level Code Auto-Completion Systems [2023-ICLR]

- GraphCoder: Enhancing Repository-Level Code Completion via Code Context Graph-based Retrieval and Language Model [2025-arXiv]

- STALL+: Boosting LLM-based Repository-level Code Completion with Static Analysis [2024-arXiv]

- CCTEST: Testing and Repairing Code Completion Systems [2023-ICSE]

- Contextual API Completion for Unseen Repositories Using LLMs [2024-arXiv]

- Learning Deep Semantics for Test Completion [2023-ICSE]

- Evaluating and improving transformers pre-trained on asts for code completion [2023-SANER]

- RepoHyper: Better Context Retrieval Is All You Need for Repository-Level Code Completion [2024-arXiv]

- Making the most of small Software Engineering datasets with modern machine learning [2021-TSE]

- From copilot to pilot: Towards AI supported software development [2023-arXiv]

- When Neural Code Completion Models Size up the Situation: Attaining Cheaper and Faster Completion through Dynamic Model Inference [2024-ICSE]

- Prompt-based Code Completion via Multi-Retrieval Augmented Generation [2024-arXiv]

- Domain Adaptive Code Completion via Language Models and Decoupled Domain Databases [2023-ASE]

- Enriching Source Code with Contextual Data for Code Completion Models: An Empirical Study [2023-MSR]

- RLCoder: Reinforcement Learning for Repository-Level Code Completion [2024-ICSE]

- Repoformer: Selective Retrieval for Repository-Level Code Completion [2024-ICML]

- A systematic evaluation of large language models of code [2022-PLDI]

- Hierarchical Context Pruning: Optimizing Real-World Code Completion with Repository-Level Pretrained Code LLMs [2024-arXiv]

- LLM-Cloud Complete: Leveraging Cloud Computing for Efficient Large Language Model-based Code Completion [2024-JAIGS]

- A Closer Look at Different Difficulty Levels Code Generation Abilities of ChatGPT [2023-ASE]

- Enhancing Code Intelligence Tasks with ChatGPT [2023-arXiv]

- ExploitGen: Template-augmented exploit code generation based on CodeBERT [2024-JSS]

- A syntax-guided multi-task learning approach for Turducken-style code generation [2023-EMSE]

- CoLadder: Supporting Programmers with Hierarchical Code Generation in Multi-Level Abstraction [2023-arXiv]

- Evaluating the Code Quality of AI-Assisted Code Generation Tools: An Empirical Study on GitHub Copilot, Amazon CodeWhisperer, and ChatGPT [2023-arXiv]

- CoderEval: A Benchmark of Pragmatic Code Generation with Generative Pre-trained Models [2023-ICSE]

- CERT: Continual Pre-training on Sketches for Library-oriented Code Generation [2022-IJCAI]

- When language model meets private library [2022-EMNLP]

- Private-Library-Oriented Code Generation with Large Language Models [2023-arXiv]

- Self-taught optimizer (stop): Recursively self-improving code generation [2024-COLM]

- Coder reviewer reranking for code generation [2023-ICML]

- Planning with Large Language Models for Code Generation [2023-ICLR]

- CodeAgent: Enhancing Code Generation with Tool-Integrated Agent Systems for Real-World Repo-level Coding Challenges [2024-ACL]

- A Lightweight Framework for Adaptive Retrieval In Code Completion With Critique Model [2024-arXiv]

- Self-Edit: Fault-Aware Code Editor for Code Generation [2023-ACL]

- Outline, then details: Syntactically guided coarse-to-fine code generation [2023-ICML]

- Self-Infilling Code Generation [2024-ICML]

- Can ChatGPT replace StackOverflow? A Study on Robustness and Reliability of Large Language Model Code Generation [2023-arXiv]

- Can LLM Replace Stack Overflow? A Study on Robustness and Reliability of Large Language Model Code Generation [2024-AAAI]

- CodeBERTScore: Evaluating Code Generation with Pretrained Models of Code [2023-EMNLP]

- Hot or Cold? Adaptive Temperature Sampling for Code Generation with Large Language Models [2024-AAAI]

- Sketch Then Generate: Providing Incremental User Feedback and Guiding LLM Code Generation through Language-Oriented Code Sketches [2024-arXiv]

- Two Birds with One Stone: Boosting Code Generation and Code Search via a Generative Adversarial Network [2023-OOPSLA]

- Natural Language to Code: How Far Are We? [2023-FSE]

- Structured Code Representations Enable Data-Efficient Adaptation of Code Language Models [2024-arXiv]

- API2Vec++: Boosting API Sequence Representation for Malware Detection and Classification [2024-TSE]

- Representation Learning for Stack Overflow Posts: How Far are We? [2024-TOSEM]

- VarGAN: Adversarial Learning of Variable Semantic Representations [2024-TSE]

- ContraBERT: Enhancing Code Pre-trained Models via Contrastive Learning [2023-ICSE]

- Model-Agnostic Syntactical Information for Pre-Trained Programming Language Models [2023-MSR]

- One Adapter for All Programming Languages? Adapter Tuning for Code Search and Summarization [2023-ICSE]

- Two Birds with One Stone: Boosting Code Generation and Code Search via a Generative Adversarial Network [2023-OOPSLA]

- An Empirical Study on Code Search Pre-trained Models: Academic Progresses vs. Industry Requirements [2024-Internetware]

- Rapid: Zero-shot Domain Adaptation for Code Search with Pre-trained Models [2024-TOSEM]

- LLM Agents Improve Semantic Code Search [2024-arXiv]

- Generation-Augmented Query Expansion For Code Retrieval [2022-arXiv]

- MCodeSearcher: Multi-View Contrastive Learning for Code Search [2024-Internetware]

- Do Pre-trained Language Models Indeed Understand Software Engineering Tasks? [2022-TSE]

- Rewriting the Code: A Simple Method for Large Language Model Augmented Code Search [2024-ACL]

- CodeRetriever: A Large Scale Contrastive Pre-Training Method for Code Search [2022-EMNLP]

- Self-Supervised Query Reformulation for Code Search [2023-FSE]

- On Contrastive Learning of Semantic Similarity for Code to Code Search [2023-arXiv]

- On the Effectiveness of Transfer Learning for Code Search [2022-TSE]

- Cross-modal contrastive learning for code search [2022-ICSME]

- CoCoSoDa: Effective Contrastive Learning for Code Search [2023-ICSE]

- Improving code search with multi-modal momentum contrastive learning [2023-ICPC]

- CodeCSE: A Simple Multilingual Model for Code and Comment Sentence Embeddings [2024-arXiv]

- You Augment Me: Exploring ChatGPT-based Data Augmentation for Semantic Code Search [2023-ICSME]

- Natural Language to Code: How Far Are We? [2023-FSE]

- CCT-Code: Cross-Consistency Training for Multilingual Clone Detection and Code Search [2023-arXiv]

- Distilled GPT for Source Code Summarization [2024-AUSE]

- One Adapter for All Programming Languages? Adapter Tuning for Code Search and Summarization [2023-ICSE]

- Automatic Semantic Augmentation of Language Model Prompts (for Code Summarization) [2024-ICSE]

- Extending Source Code Pre-Trained Language Models to Summarise Decompiled Binaries [2023-SANER]

- Exploring Distributional Shifts in Large Language Models for Code Analysis [2023-EMNLP]

- On the transferability of pre-trained language models for low-resource programming languages [2022-ICPC]

- A Comparative Analysis of Large Language Models for Code Documentation Generation [2024-arXiv]

- Dialog summarization for software collaborative platform via tuning pre-trained models [2023-JSS]

- ESALE: Enhancing Code-Summary Alignment Learning for Source Code Summarization [2024-TSE]

- Constructing effective in-context demonstration for code intelligence tasks: An empirical study [2023-arXiv]

- Large Language Models are Few-Shot Summarizers: Multi-Intent Comment Generation via In-Context Learning [2024-ICSE]

- Assemble foundation models for automatic code summarization [2022-SANER]

- Analyzing the performance of large language models on code summarization [2024-arXiv]

- Binary Code Summarization: Benchmarking ChatGPT/GPT-4 and Other Large Language Models [2023-arXiv]

- SimLLM: Measuring Semantic Similarity in Code Summaries Using a Large Language Model-Based Approach [2024-FSE]

- Identifying Inaccurate Descriptions in LLM-generated Code Comments via Test Execution [2024-arXiv]

- Code Summarization without Direct Access to Code - Towards Exploring Federated LLMs for Software Engineering [2024-EASE]

- Cross-Modal Retrieval-enhanced code Summarization based on joint learning for retrieval and generation [2024-IST]

- Do Machines and Humans Focus on Similar Code? Exploring Explainability of Large Language Models in Code Summarization [2024-ICPC]

- MALSIGHT: Exploring Malicious Source Code and Benign Pseudocode for Iterative Binary Malware Summarization [2024-arXiv]

- CSA-Trans: Code Structure Aware Transformer for AST [2024-arXiv]

- Exploring the Efficacy of Large Language Models (GPT-4) in Binary Reverse Engineering [2024-arXiv]

- DocuMint: Docstring Generation for Python using Small Language Models [2024-arXiv]

- Achieving High-Level Software Component Summarization via Hierarchical Chain-of-Thought Prompting and Static Code Analysis [2023-ICoDSE]

- Multilingual Adapter-based Knowledge Aggregation on Code Summarization for Low-Resource Languages [2023-arXiv]

- Analysis of ChatGPT on Source Code [2023-arXiv]

- Bash comment generation via data augmentation and semantic-aware CodeBERT [2024-AUSE]

- SoTaNa: The Open-Source Software Development Assistant [2023-arXiv]

- Natural Language Outlines for Code: Literate Programming in the LLM Era [2024-arXiv]

- Semantic Similarity Loss for Neural Source Code Summarization [2023-JSEP]

- Context-aware Code Summary Generation [2024-arXiv]

- Automatic Code Summarization via ChatGPT: How Far Are We? [2023-arXiv]

- A Prompt Learning Framework for Source Code Summarization [2023-TOSEM]

- Source Code Summarization in the Era of Large Language Models [2024-ICSE]

- Large Language Models for Code Summarization [2024-arXiv]

- Enhancing Trust in LLM-Generated Code Summaries with Calibrated Confidence Scores [2024-arXiv]

- Generating Variable Explanations via Zero-shot Prompt Learning [2023-ASE]

- Natural Is The Best: Model-Agnostic Code Simplification for Pre-trained Large Language Models [2024-arXiv]

- SparseCoder: Identifier-Aware Sparse Transformer for File-Level Code Summarization [2024-SANER]

- Automatic smart contract comment generation via large language models and in-context learning [2024-IST]

- Prompt Engineering or Fine Tuning: An Empirical Assessment of Large Language Models in Automated Software Engineering Tasks [2023-arXiv]

- 🔥Unraveling the Potential of Large Language Models in Code Translation: How Far Are We?[2024-arXiv]

- 🔥Context-aware Code Segmentation for C-to-Rust Translation using Large Language Models[2024-arXiv]

- Learning Transfers over Several Programming Languages [2023-arXiv]

- Enhancing Code Translation in Language Models with Few-Shot Learning via Retrieval-Augmented Generation [2024-arXiv]

- CodeTF: One-stop Transformer Library for State-of-the-art Code LLM [2023-arXiv]

- LASSI: An LLM-based Automated Self-Correcting Pipeline for Translating Parallel Scientific Codes [2024-arXiv]

- Towards Translating Real-World Code with LLMs: A Study of Translating to Rust [2024-arXiv]

- Program Translation via Code Distillation [2023-EMNLP]

- CoTran: An LLM-based Code Translator using Reinforcement Learning with Feedback from Compiler and Symbolic Execution [2023-arXiv]

- Few-shot code translation via task-adapted prompt learning [2024-JSS]

- Exploring the Impact of the Output Format on the Evaluation of Large Language Models for Code Translation [2024-Forge]

- SpecTra: Enhancing the Code Translation Ability of Language Models by Generating Multi-Modal Specifications [2024-arXiv]

- SteloCoder: a Decoder-Only LLM for Multi-Language to Python Code Translation [2023-arXiv]

- Lost in Translation: A Study of Bugs Introduced by Large Language Models while Translating Code [2024-ICSE]

- Understanding the effectiveness of large language models in code translation [2023-ICSE]

- SUT: Active Defects Probing for Transcompiler Models [2023-EMNLP]

- Explain-then-Translate: An Analysis on Improving Program Translation with Self-generated Explanations [2023-EMNLP]

- TransMap: Pinpointing Mistakes in Neural Code Translation [2023-FSE]

- An interpretable error correction method for enhancing code-to-code translation [2024-ICLR]

- CodeTransOcean: A comprehensive multilingual benchmark for code translation [2023-arXiv]

- Assessing and Improving Syntactic Adversarial Robustness of Pre-trained Models for Code Translation [2023-ICSE]

- Exploring and unleashing the power of large language models in automated code translation [2024-FSE]

- VERT: Verified Equivalent Rust Transpilation with Few-Shot Learning [2024-arXiv]

- Rectifier: Code Translation with Corrector via LLMs [2024-arXiv]

- Multilingual Code Snippets Training for Program Translation [2022-AAAI]

- On the Evaluation of Neural Code Translation: Taxonomy and Benchmark [2023-ASE]

- GALLa: Graph Aligned Large Language Models for Improved Source Code Understanding [2024-arXiv]

- BinBert: Binary Code Understanding with a Fine-tunable and Execution-aware Transformer [2024-TDSC]

- SEMCODER: Training Code Language Models with Comprehensive Semantics [2024-arXiv]

- PAC Prediction Sets for Large Language Models of Code [2023-ICML]

- The Scope of ChatGPT in Software Engineering: A Thorough Investigation [2023-arXiv]

- ART: Automatic multi-step reasoning and tool-use for large language models [2023-arXiv]

- Better Context Makes Better Code Language Models: A Case Study on Function Call Argument Completion [2023-AAAI]

- Benchmarking Language Models for Code Syntax Understanding [2022-EMNLP]

- ShellGPT: Generative Pre-trained Transformer Model for Shell Language Understanding [2023-ISSRE]

- Language Agnostic Code Embeddings [2024-NAACL]

- Understanding Programs by Exploiting (Fuzzing) Test Cases [2023-arXiv]

- Can Machines Read Coding Manuals Yet? -- A Benchmark for Building Better Language Models for Code Understanding [2022-AAAI]

- Using an LLM to Help With Code Understanding [2024-ICSE]

- Optimizing Continuous Development By Detecting and Preventing Unnecessary Content Generation [2023-ASE]

- A Transformer-based Approach for Augmenting Software Engineering Chatbots Datasets [2024-ESEM]

- PERFGEN: A Synthesis and Evaluation Framework for Performance Data using Generative AI [2024-COMPSAC]

- BEQAIN: An Effective and Efficient Identifier Normalization Approach with BERT and the Question Answering System [2022-TSE]

- MicroRec: Leveraging Large Language Models for Microservice Recommendation [2024-MSR]

- LLMatic: Neural Architecture Search via Large Language Models and Quality-Diversity Optimization [2023-GECCO]

- Program synthesis with large language models [2021-arXiv]

- HYSYNTH: Context-Free LLM Approximation for Guiding Program Synthesis [2024-arXiv]

- Natural Language Commanding via Program Synthesis [2023-arXiv]

- Function-constrained Program Synthesis [2023-arXiv]

- Jigsaw: Large Language Models meet Program Synthesis [2022-ICSE]

- Less is More: Summary of Long Instructions is Better for Program Synthesis [2022-EMNLP]

- Guiding enumerative program synthesis with large language models [2024-CAV]

- Fully Autonomous Programming with Large Language Models [2023-GECCO]

- Exploring the Robustness of Large Language Models for Solving Programming Problems [2023-arXiv]

- Evaluating ChatGPT and GPT-4 for Visual Programming [2023-ICER]

- Enhancing Program Synthesis with Large Language Models Using Many-Objective Grammar-Guided Genetic Programming [2024-Algorithms]

- Synergistic Utilization of LLMs for Program Synthesis [2024-GECCO]

- Generating Data for Symbolic Language with Large Language Models [2023-EMNLP]

- Good things come in three: Generating SO Post Titles with Pre-Trained Models, Self Improvement and Post Ranking [2024-ESEM]

- Automatic bi-modal question title generation for Stack Overflow with prompt learning [2024-EMSE]

- Learning to Predict User-Defined Types [2022-TSE]

- ChatDev: Communicative agents for software development [2023-ACL]

- Improving code example recommendations on informal documentation using BERT and query-aware LSH: A comparative study [2023-arXiv]

- GraphPyRec: A novel graph-based approach for fine-grained Python code recommendation [2024-SCP]

- AI Chain on Large Language Model for Unsupervised Control Flow Graph Generation for Statically-Typed Partial Code [2023-arXiv]

- Is GPT-4 a Good Data Analyst? [2023-EMNLP]

- Automating Method Naming with Context-Aware Prompt-Tuning [2023-ICPC]

- AutoScrum: Automating Project Planning Using Large Language Models [2023-arXiv]

- Time to separate from StackOverflow and match with ChatGPT for encryption [2024-JSS]

- Is Stack Overflow Obsolete? An Empirical Study of the Characteristics of ChatGPT Answers to Stack Overflow Questions [2024-CHI]

- A New Era in Software Security: Towards Self-Healing Software via Large Language Models and Formal Verification [2023-arXiv]

- PropertyGPT: LLM-driven Formal Verification of Smart Contracts through Retrieval-Augmented Property Generation [2024-arXiv]

- The FormAI Dataset: Generative AI in Software Security Through the Lens of Formal Verification [2023-PROMISE]

- Enchanting Program Specification Synthesis by Large Language Models Using Static Analysis and Program Verification [2024-CAV]

- Can Large Language Models Reason about Program Invariants? [2023-ICML]

- Selene: Pioneering Automated Proof in Software Verification [2024-ACL]

- Boosting Static Resource Leak Detection via LLM-based Resource-Oriented Intention Inference [2023-arXiv]

- Harnessing the Power of LLM to Support Binary Taint Analysis [2023-arXiv]

- Pre-trained Model-based Actionable Warning Identification: A Feasibility Study [2024-arXiv]

- An LLM-Assisted Easy-to-Trigger Backdoor Attack on Code Completion Models: Injecting Disguised Vulnerabilities against Strong Detection [2024-USENIX Security]

- CodeBERT-Attack: Adversarial attack against source code deep learning models via pre-trained model [2023-JSEP]

- ChatGPT as an Attack Tool: Stealthy Textual Backdoor Attack via Blackbox Generative Model Trigger [2024-NAACL]

- Exploring Automatic Cryptographic API Misuse Detection in the Era of LLMs [2024-arXiv]

- KAT: Dependency-aware Automated API Testing with Large Language Models [2024-ICST]

- Exploring Automated Assertion Generation via Large Language Models [2024-TOSEM]

- AssertionBench: A Benchmark to Evaluate Large-Language Models for Assertion Generation [2024-arXiv]

- TOGA: A Neural Method for Test Oracle Generation [2022-ICSE]

- Can Large Language Models Transform Natural Language Intent into Formal Method Postconditions? [2024-FSE]

- Beyond Code Generation: Assessing Code LLM Maturity with Postconditions [2024-arXiv]

- An Empirical Study on Focal Methods in Deep-Learning-Based Approaches for Assertion Generation [2024-ICSE]

- TOGLL: Correct and Strong Test Oracle Generation with LLMs [2024-arXiv]

- ChIRAAG: ChatGPT Informed Rapid and Automated Assertion Generation [2024-arXiv]

- Retrieval-Based Prompt Selection for Code-Related Few-Shot Learning [2023-ICSE]

- AssertionBench: A Benchmark to Evaluate Large-Language Models for Assertion Generation [2024-NeurIPS]

- Generating Accurate Assert Statements for Unit Test Cases using Pretrained Transformers [2022-AST]

- Chat-like Asserts Prediction with the Support of Large Language Model [2024-arXiv]

- Practical Binary Code Similarity Detection with BERT-based Transferable Similarity Learning [2022-ACSAC]

- CRABS-former: CRoss-Architecture Binary Code Similarity Detection based on Transformer [2024-Internetware]

- jTrans: Jump-Aware Transformer for Binary Code Similarity Detection [2022-ISSTA]

- Order Matters: Semantic-Aware Neural Networks for Binary Code Similarity Detection [2020-AAAI]

- SelfPiCo: Self-Guided Partial Code Execution with LLMs [2024-ISSTA]

- SLaDe: A Portable Small Language Model Decompiler for Optimized Assembly [2024-CGO]

- DeGPT: Optimizing Decompiler Output with LLM [2024-NDSS]

- Nova+: Generative Language Models for Binaries [2023-arXiv]

- How Far Have We Gone in Binary Code Understanding Using Large Language Models [2024-ICSME]

- WaDec: Decompile WebAssembly Using Large Language Model [2024-arXiv]

- Refining Decompiled C Code with Large Language Models [2023-arXiv]

- LmPa: Improving Decompilation by Synergy of Large Language Model and Program Analysis [2023-arXiv]

- LLM4Decompile: Decompiling Binary Code with Large Language Models [2024-arXiv]

- Nuances are the Key: Unlocking ChatGPT to Find Failure-Inducing Tests with Differential Prompting [2023-ASE]

- 🔥Enhancing Fault Localization Through Ordered Code Analysis with LLM Agents and Self-Reflection[2024-arXiv]

- LLM Fault Localisation within Evolutionary Computation Based Automated Program Repair [2024-GECCO]

- Supporting Cross-language Cross-project Bug Localization Using Pre-trained Language Models [2024-arXiv]

- Too Few Bug Reports? Exploring Data Augmentation for Improved Changeset-based Bug Localization [2023-arXiv]

- Fast Changeset-based Bug Localization with BERT [2022-ICSE]

- Pre-training Code Representation with Semantic Flow Graph for Effective Bug Localization [2023-FSE]

- Impact of Large Language Models of Code on Fault Localization [2024-arXiv]

- A Quantitative and Qualitative Evaluation of LLM-based Explainable Fault Localization [2024-FSE]

- Enhancing Bug Localization Using Phase-Based Approach [2023-IEEE Access]

- AgentFL: Scaling LLM-based Fault Localization to Project-Level Context [2024-arXiv]

- Face It Yourselves: An LLM-Based Two-Stage Strategy to Localize Configuration Errors via Logs [2024-ISSTA]

- Demystifying Faulty Code with LLM: Step-by-Step Reasoning for Explainable Fault Localization [2024-arXiv]

- Demystifying faulty code: Step-by-step reasoning for explainable fault localization [2024-SANER]

- Large Language Models in Fault Localisation [2023-arXiv]

- Better Debugging: Combining Static Analysis and LLMs for Explainable Crashing Fault Localization [2024-arXiv]

- Large language models for test-free fault localization [2024-ICSE]

- TroBo: A Novel Deep Transfer Model for Enhancing Cross-Project Bug Localization [2021-KSEM]

- ConDefects: A Complementary Dataset to Address the Data Leakage Concern for LLM-Based Fault Localization and Program Repair [2024-FSE]

- 🔥AutoSafeCoder: A Multi-Agent Framework for Securing LLM Code Generation through Static Analysis and Fuzz Testing[2024-arXiv]

- 🔥ISC4DGF: Enhancing Directed Grey-box Fuzzing with LLM-Driven Initial Seed Corpus Generation[2024-arXiv]

- FuzzCoder: Byte-level Fuzzing Test via Large Language Model [2024-arXiv]

- ProphetFuzz: Fully Automated Prediction and Fuzzing of High-Risk Option Combinations with Only Documentation via Large Language Model [2024-arXiv]

- SearchGEM5: Towards Reliable gem5 with Search Based Software Testing and Large Language Models [2023-SSBSE]

- Large Language Models are Zero-Shot Fuzzers: Fuzzing Deep-Learning Libraries via Large Language Models [2023-ISSTA]

- Large Language Models are Edge-Case Fuzzers: Testing Deep Learning Libraries via FuzzGPT [2023-ICSE]

- Large Language Models are Edge-Case Generators: Crafting Unusual Programs for Fuzzing Deep Learning Libraries [2023-ICSE]

- CovRL: Fuzzing JavaScript Engines with Coverage-Guided Reinforcement Learning for LLM-based Mutation [2024-arXiv]

- Fuzzing JavaScript Interpreters with Coverage-Guided Reinforcement Learning for LLM-based Mutation [2024-ISSTA]

- Augmenting Greybox Fuzzing with Generative AI [2023-arXiv]

- Large Language Model guided Protocol Fuzzing [2023-NDSS]

- Fuzzing BusyBox: Leveraging LLM and Crash Reuse for Embedded Bug Unearthing [2024-USENIX Security]

- Large Language Models for Fuzzing Parsers [2023-Fuzzing]

- LLM4Fuzz: Guided fuzzing of smart contracts with large language models [2024-arXiv]

- LLMIF: Augmented large language model for fuzzing IoT devices [2024-SP]

- Fuzz4All: Universal fuzzing with large language models [2024-ICSE]

- KernelGPT: Enhanced kernel fuzzing via large language models [2023-arXiv]

- White-box Compiler Fuzzing Empowered by Large Language Models [2023-arXiv]

- LLAMAFUZZ: Large Language Model Enhanced Greybox Fuzzing [2024-arXiv]

- Understanding Large Language Model Based Fuzz Driver Generation [2023-arXiv]

- How Effective Are They? Exploring Large Language Model Based Fuzz Driver Generation [2024-ISSTA]

- Vision-driven Automated Mobile GUI Testing via Multimodal Large Language Model [2024-arXiv]

- Fill in the Blank: Context-aware Automated Text Input Generation for Mobile GUI Testing [2022-ICSE]

- Make LLM a Testing Expert: Bringing Human-like Interaction to Mobile GUI Testing via Functionality-aware Decisions [2023-ICSE]

- Autonomous Large Language Model Agents Enabling Intent-Driven Mobile GUI Testing [2023-arXiv]

- Intent-Driven Mobile GUI Testing with Autonomous Large Language Model Agents [2024-ICST]

- Guardian: A Runtime Framework for LLM-based UI Exploration [2024-ISSTA]

- Semantic-Enhanced Indirect Call Analysis with Large Language Models [2024-arXiv]

- µBert: Mutation Testing using Pre-Trained Language Models [2022-ICST]

- On the Coupling between Vulnerabilities and LLM-generated Mutants: A Study on Vul4J dataset [2024-ICST]

- LLM-guided formal verification coupled with mutation testing [2024-DATE]

- Automated Bug Generation in the era of Large Language Models [2023-arXiv]

- Contextual Predictive Mutation Testing [2023-FSE]

- Efficient Mutation Testing via Pre-Trained Language Models [2023-arXiv]

- Mutation-based consistency testing for evaluating the code understanding capability of LLMs [2024-FSE]

- VULGEN: Realistic Vulnerability Generation Via Pattern Mining and Deep Learning [2023-ICSE]

- Learning Realistic Mutations: Bug Creation for Neural Bug Detectors [2022-ICST]

- Large Language Models for Equivalent Mutant Detection: How Far are We? [2024-ISSTA]

- LLMorpheus: Mutation Testing using Large Language Models [2024-arXiv]

- An Exploratory Study on Using Large Language Models for Mutation Testing [2024-arXiv]

- Machine Translation Testing via Pathological Invariance [2020-FSE]

- Structure-Invariant Testing for Machine Translation [2020-ICSE]

- DialTest: automated testing for recurrent-neural-network-driven dialogue systems [2021-ISSTA]

- QATest: A uniform fuzzing framework for question answering systems [2022-ASE]

- Improving Machine Translation Systems via Isotopic Replacement [2022-ICSE]

- MTTM: Metamorphic testing for textual content moderation software [2023-ICSE]

- Automated testing and improvement of named entity recognition systems [2023-FSE]

- PentestGPT: An LLM-empowered Automatic Penetration Testing Tool [2024-USENIX Security]

- Getting pwn'd by AI: Penetration Testing with Large Language Models [2023-FSE]

- CIPHER: Cybersecurity Intelligent Penetration-testing Helper for Ethical Researcher [2024-arXiv]

- PTGroup: An Automated Penetration Testing Framework Using LLMs and Multiple Prompt Chains [2024-ICIC]

- CFStra: Enhancing Configurable Program Analysis Through LLM-Driven Strategy Selection Based on Code Features [2024-TASE]

- LPR: Large Language Models-Aided Program Reduction [2024-ISSTA]

- Can Large Language Models Write Good Property-Based Tests? [2023-arXiv]

- DiaVio: LLM-Empowered Diagnosis of Safety Violations in ADS Simulation Testing [2024-ISSTA]

- Interleaving Static Analysis and LLM Prompting [2024-SOAP]

- Large Language Models for Code Analysis: Do LLMs Really Do Their Job? [2024-USENIX Security]

- E&V: Prompting Large Language Models to Perform Static Analysis by Pseudo-code Execution and Verification [2023-arXiv]

- Assisting Static Analysis with Large Language Models: A ChatGPT Experiment [2023-FSE]

- Enhancing Static Analysis for Practical Bug Detection: An LLM-Integrated Approach [2024-OOPSLA]

- SkipAnalyzer: An Embodied Agent for Code Analysis with Large Language Models [2023-arXiv]

- Automatically Inspecting Thousands of Static Bug Warnings with Large Language Model: How Far Are We? [2024-TOSEM]

- Analyzing source code vulnerabilities in the D2A dataset with ML ensembles and C-BERT [2024-EMSE]

- 🔥Test smells in LLM-Generated Unit Tests[2024-arXiv]

- 🔥Rethinking the Influence of Source Code on Test Case Generation[2024-arXiv]

- Generating Test Scenarios from NL Requirements using Retrieval-Augmented LLMs: An Industrial Study [2024-arXiv]

- ChatGPT is a Remarkable Tool—For Experts [2023-Data Intelligence]

- Large Language Models for Mobile GUI Text Input Generation: An Empirical Study [2024-arXiv]

- Effective Test Generation Using Pre-trained Large Language Models and Mutation Testing [2023-IST]

- Leveraging Large Language Models for Enhancing the Understandability of Generated Unit Tests [2024-ICSE]

- Mokav: Execution-driven Differential Testing with LLMs [2024-arXiv]

- TestART: Improving LLM-based Unit Test via Co-evolution of Automated Generation and Repair Iteration [2024-arXiv]

- An initial investigation of ChatGPT unit test generation capability [2023-SAST]

- Exploring Fuzzing as Data Augmentation for Neural Test Generation [2024-arXiv]

- Harnessing the Power of LLMs: Automating Unit Test Generation for High-Performance Computing [2024-arXiv]

- Navigating Confidentiality in Test Automation: A Case Study in LLM Driven Test Data Generation [2024-SANER]

- ChatGPT and Human Synergy in Black-Box Testing: A Comparative Analysis [2024-arXiv]

- CODAMOSA: Escaping Coverage Plateaus in Test Generation with Pre-trained Large Language Models [2023-ICSE]

- DLLens: Testing Deep Learning Libraries via LLM-aided Synthesis [2024-arXiv]

- Large Language Models as Test Case Generators: Performance Evaluation and Enhancement [2024-arXiv]

- Leveraging Large Language Models for Automated Web-Form-Test Generation: An Empirical Study [2024-arXiv]

- LLM-Powered Test Case Generation for Detecting Tricky Bugs [2024-arXiv]

- A System for Automated Unit Test Generation Using Large Language Models and Assessment of Generated Test Suites [2024-arXiv]

- Code Agents are State of the Art Software Testers [2024-arXiv]

- Test Code Generation for Telecom Software Systems using Two-Stage Generative Model [2024-arXiv]

- CasModaTest: A Cascaded and Model-agnostic Self-directed Framework for Unit Test Generation [2024-arXiv]

- Large-scale, Independent and Comprehensive study of the power of LLMs for test case generation [2024-arXiv]

- CoverUp: Coverage-Guided LLM-Based Test Generation [2024-arXiv]

- Automatic Generation of Test Cases based on Bug Reports: a Feasibility Study with Large Language Models [2024-ICSE]

- CAT-LM: Training Language Models on Aligned Code And Tests [2023-ASE]

- Code-aware prompting: A study of coverage guided test generation in regression setting using LLM [2024-FSE]

- Adaptive test generation using a large language model [2023-arXiv]

- An Empirical Evaluation of Using Large Language Models for Automated Unit Test Generation [2024-TSE]

- Domain Adaptation for Deep Unit Test Case Generation [2023-arXiv]

- Exploring the effectiveness of large language models in generating unit tests [2023-arXiv]

- Reinforcement Learning from Automatic Feedback for High-Quality Unit Test Generation [2023-arXiv]

- ChatGPT vs SBST: A Comparative Assessment of Unit Test Suite Generation [2024-TSE]

- Unit Test Case Generation with Transformers and Focal Context [2020-arXiv]

- HITS: High-coverage LLM-based Unit Test Generation via Method Slicing [2024-ASE]

- Optimizing Search-Based Unit Test Generation with Large Language Models: An Empirical Study [2024-Internetware]

- ChatUniTest: a ChatGPT-based automated unit test generation tool [2023-arXiv]

- The Program Testing Ability of Large Language Models for Code [2023-arXiv]

- An Empirical Study of Unit Test Generation with Large Language Models [2024-ASE]

- Enhancing LLM-based Test Generation for Hard-to-Cover Branches via Program Analysis [2024-arXiv]

- No More Manual Tests? Evaluating and Improving ChatGPT for Unit Test Generation [2023-arXiv]

- Evaluating and Improving ChatGPT for Unit Test Generation [2024-FSE]

- Algo: Synthesizing algorithmic programs with generated oracle verifiers [2023-NeurIPS]

- How well does LLM generate security tests? [2023-arXiv]

- An LLM-based Readability Measurement for Unit Tests' Context-aware Inputs [2024-arXiv]

- A3Test: Assertion Augmented Automated Test Case Generation [2024-IST]

- LLM4Fin: Fully Automating LLM-Powered Test Case Generation for FinTech Software Acceptance Testing [2024-ISSTA]

- LTM: Scalable and Black-box Similarity-based Test Suite Minimization based on Language Models [2023-arXiv]

- 🔥VulnLLMEval: A Framework for Evaluating Large Language Models in Software Vulnerability Detection and Patching[2024-arXiv]

- 🔥Code Vulnerability Detection: A Comparative Analysis of Emerging Large Language Models[2024-arXiv]

- FLAG: Finding Line Anomalies (in code) with Generative AI [2023-arXiv]

- Vulnerability Detection and Monitoring Using LLM [2023-WIECON-ECE]

- Low Level Source Code Vulnerability Detection Using Advanced BERT Language Model [2022-Canadian AI]

- LLM-based Vulnerability Sourcing from Unstructured Data [2024-EuroS&PW]

- Transformer-based vulnerability detection in code at EditTime: Zero-shot, few-shot, or fine-tuning? [2023-arXiv]

- DiverseVul: A new vulnerable source code dataset for deep learning based vulnerability detection [2023-RAID]

- Bridge and Hint: Extending Pre-trained Language Models for Long-Range Code [2024-arXiv]

- LLM-Enhanced Static Analysis for Precise Identification of Vulnerable OSS Versions [2024-arXiv]

- VulCatch: Enhancing Binary Vulnerability Detection through CodeT5 Decompilation and KAN Advanced Feature Extraction [2024-arXiv]

- Exploring RAG-based Vulnerability Augmentation with LLMs [2024-arXiv]

- Vulnerability Detection with Code Language Models: How Far Are We? [2024-arXiv]

- Optimizing software vulnerability detection using RoBERTa and machine learning [2024-AUSE]

- Large Language Models for Secure Code Assessment: A Multi-Language Empirical Study [2024-arXiv]

- Generalization-Enhanced Code Vulnerability Detection via Multi-Task Instruction Fine-Tuning [2024-ACL]

- Vul-RAG: Enhancing LLM-based Vulnerability Detection via Knowledge-level RAG [2024-arXiv]

- LineVul: A Transformer-based Line-Level Vulnerability Prediction [2021-MSR]

- How Far Have We Gone in Vulnerability Detection Using Large Language Models [2023-arXiv]

- BERT- and TF-IDF-based feature extraction for long-lived bug prediction in FLOSS: a comparative study [2023-IST]

- SCoPE: Evaluating LLMs for Software Vulnerability Detection [2024-arXiv]

- The EarlyBIRD Catches the Bug: On Exploiting Early Layers of Encoder Models for More Efficient Code Classification [2023-FSE]

- VulBERTa: Simplified source code pre-training for vulnerability detection [2022-IJCNN]

- DFEPT: Data Flow Embedding for Enhancing Pre-Trained Model Based Vulnerability Detection [2024-Internetware]

- A Study of Using Multimodal LLMs for Non-Crash Functional Bug Detection in Android Apps [2024-arXiv]

- Understanding the Effectiveness of Large Language Models in Detecting Security Vulnerabilities [2023-arXiv]

- A Qualitative Study on Using ChatGPT for Software Security: Perception vs. Practicality [2024-arXiv]

- Detecting Phishing Sites Using ChatGPT [2023-arXiv]

- Bug In the Code Stack: Can LLMs Find Bugs in Large Python Code Stacks [2024-arXiv]

- LLM-Assisted Static Analysis for Detecting Security Vulnerabilities [2024-arXiv]

- VulDetectBench: Evaluating the Deep Capability of Vulnerability Detection with Large Language Models [2024-arXiv]

- EaTVul: ChatGPT-based Evasion Attack Against Software Vulnerability Detection [2024-arXiv]

- GRACE: Empowering LLM-based software vulnerability detection with graph structure and in-context learning [2024-JSS]

- Evaluating Large Language Models in Detecting Test Smells [2024-arXiv]

- Harnessing the Power of LLMs in Source Code Vulnerability Detection [2024-arXiv]

- Multi-role Consensus through LLMs Discussions for Vulnerability Detection [2024-arXiv]

- Towards Effectively Detecting and Explaining Vulnerabilities Using Large Language Models [2024-arXiv]

- LLbezpeky: Leveraging large language models for vulnerability detection [2024-arXiv]

- Can Large Language Models Find And Fix Vulnerable Software? [2023-arXiv]

- Chain-of-Thought Prompting of Large Language Models for Discovering and Fixing Software Vulnerabilities [2024-arXiv]

- Automated Software Vulnerability Static Code Analysis Using Generative Pre-Trained Transformer Models [2024-arXiv]

- XGV-BERT: Leveraging Contextualized Language Model and Graph Neural Network for Efficient Software Vulnerability Detection [2023-arXiv]

- ALPINE: An adaptive language-agnostic pruning method for language models for code [2024-arXiv]

- Finetuning Large Language Models for Vulnerability Detection [2024-arXiv]

- A Comprehensive Study of the Capabilities of Large Language Models for Vulnerability Detection [2024-arXiv]

- Dataflow Analysis-Inspired Deep Learning for Efficient Vulnerability Detection [2024-ICSE]

- An Empirical Study of Deep Learning Models for Vulnerability Detection [2023-ICSE]

- DexBERT: Effective, Task-Agnostic and Fine-grained Representation Learning of Android Bytecode [2023-TSE]

- GPTScan: Detecting Logic Vulnerabilities in Smart Contracts by Combining GPT with Program Analysis [2024-ICSE]

- LLM4Vuln: A Unified Evaluation Framework for Decoupling and Enhancing LLMs' Vulnerability Reasoning [2024-arXiv]

- Using large language models to better detect and handle software vulnerabilities and cyber security threats [2024-arXiv]

- Harnessing Large Language Models for Software Vulnerability Detection: A Comprehensive Benchmarking Study [2024-arXiv]

- CSGVD: a deep learning approach combining sequence and graph embedding for source code vulnerability detection [2023-JSS]

- Just-in-Time Security Patch Detection -- LLM At the Rescue for Data Augmentation [2023-arXiv]

- Transformer-based language models for software vulnerability detection [2022-ACSAC]

- Can Large Language Models Identify And Reason About Security Vulnerabilities? Not Yet [2023-arXiv]

- Bridging the Gap: A Study of AI-based Vulnerability Management between Industry and Academia [2024-arXiv]

- Code Structure-Aware through Line-level Semantic Learning for Code Vulnerability Detection [2024-arXiv]

- M2CVD: Multi-Model Collaboration for Code Vulnerability Detection [2024-arXiv]

- VulEval: Towards Repository-Level Evaluation of Software Vulnerability Detection [2024-arXiv]

- Natural Language Generation and Understanding of Big Code for AI-Assisted Programming: A Review [2023-Entropy]

- Peculiar: Smart Contract Vulnerability Detection Based on Crucial Data Flow Graph and Pre-training Techniques [2022-ISSRE]

- DLAP: A Deep Learning Augmented Large Language Model Prompting Framework for Software Vulnerability Detection [2024-arXiv]

- Security Vulnerability Detection with Multitask Self-Instructed Fine-Tuning of Large Language Models [2024-arXiv]

- Multitask-based Evaluation of Open-Source LLM on Software Vulnerability [2024-arXiv]

- Pros and Cons! Evaluating ChatGPT on Software Vulnerability [2024-arXiv]

- Security Code Review by LLMs: A Deep Dive into Responses [2024-arXiv]

- Enhancing Deep Learning-based Vulnerability Detection by Building Behavior Graph Model [2023-ICSE]

- Prompt-Enhanced Software Vulnerability Detection Using ChatGPT [2023-arXiv]

- Coding-PTMs: How to Find Optimal Code Pre-trained Models for Code Embedding in Vulnerability Detection? [2024-arXiv]

- Comparison of Static Application Security Testing Tools and Large Language Models for Repo-level Vulnerability Detection [2024-arXiv]

- Large Language Model for Vulnerability Detection and Repair: Literature Review and Roadmap [2024-arXiv]

- Large language model for vulnerability detection: Emerging results and future directions [2024-ICSE]

- An exploratory study on just-in-time multi-programming-language bug prediction [2024-IST]

- Assessing the Effectiveness of Vulnerability Detection via Prompt Tuning: An Empirical Study [2023-APSEC]

- Detecting Common Weakness Enumeration Through Training the Core Building Blocks of Similar Languages Based on the CodeBERT Model [2023-APSEC]

- Improving long-tail vulnerability detection through data augmentation based on large language models [2024-ICSME]

- Large Language Models can Connect the Dots: Exploring Model Optimization Bugs with Domain Knowledge-aware Prompts [2024-ISSTA]

- Silent Vulnerable Dependency Alert Prediction with Vulnerability Key Aspect Explanation [2023-ICSE]

- LLM-Enhanced Theorem Proving with Term Explanation and Tactic Parameter Repair [2024-Internetware]

- Large Language Model vs. Stack Overflow in Addressing Android Permission Related Challenges [2024-MSR]

- T-FREX: A Transformer-based Feature Extraction Method from Mobile App Reviews [2024-SANER]

- Where is Your App Frustrating Users? [2022-ICSE]

- Duplicate bug report detection by using sentence embedding and fine-tuning [2021-ICSME]

- Can LLMs Demystify Bug Reports? [2023-arXiv]

- Refining GPT-3 Embeddings with a Siamese Structure for Technical Post Duplicate Detection [2024-SANER]

- Cupid: Leveraging ChatGPT for More Accurate Duplicate Bug Report Detection [2023-arXiv]

- Few-shot learning for sentence pair classification and its applications in software engineering [2023-arXiv]

- 🔥PyBugHive: A Comprehensive Database of Manually Validated, Reproducible Python Bugs[2024-IEEE Access]

- Prompting Is All You Need: Automated Android Bug Replay with Large Language Models [2023-ICSE]

- CrashTranslator: Automatically Reproducing Mobile Application Crashes Directly from Stack Trace [2024-ICSE]

- Evaluating Diverse Large Language Models for Automatic and General Bug Reproduction [2023-arXiv]

- Large Language Models are Few-shot Testers: Exploring LLM-based General Bug Reproduction [2023-ICSE]

- Neighborhood contrastive learning-based graph neural network for bug triaging [2024-SCP]

- A Comparative Study of Transformer-based Neural Text Representation Techniques on Bug Triaging [2023-ASE]

- A Light Bug Triage Framework for Applying Large Pre-trained Language Model [2022-ASE]

- GPTCloneBench: A comprehensive benchmark of semantic clones and cross-language clones using GPT-3 model and SemanticCloneBench [2023-ICSME]

- Using a Nearest-Neighbour, BERT-Based Approach for Scalable Clone Detection [2022-ICSME]

- Towards Understanding the Capability of Large Language Models on Code Clone Detection: A Survey [2023-arXiv]

- AdaCCD: Adaptive Semantic Contrasts Discovery Based Cross Lingual Adaptation for Code Clone Detection [2024-AAAI]

- Investigating the Efficacy of Large Language Models for Code Clone Detection [2024-ICPC]

- Large Language Models for cross-language code clone detection [2024-arXiv]

- Utilization of Pre-trained Language Model for Adapter-based Knowledge Transfer in Software Engineering [2023-EMSE]

- An exploratory study on code attention in BERT [2022-ICPC]

- Assessing the Code Clone Detection Capability of Large Language Models [2024-ICCQ]

- Interpreting CodeBERT for Semantic Code Clone Detection [2023-APSEC]

- Predicting Code Coverage without Execution [2023-arXiv]

- Multilingual Code Co-Evolution Using Large Language Models [2023-FSE]

- Enabling Memory Safety of C Programs using LLMs [2024-arXiv]

- Hybrid API Migration: A Marriage of Small API Mapping Models and Large Language Models [2023-Internetware]

- RefBERT: A Two-Stage Pre-trained Framework for Automatic Rename Refactoring [2023-ISSTA]

- Next-Generation Refactoring: Combining LLM Insights and IDE Capabilities for Extract Method [2024-ICSME]

- Refactoring Programs Using Large Language Models with Few-Shot Examples [2023-APSEC]

- Refactoring to Pythonic Idioms: A Hybrid Knowledge-Driven Approach Leveraging Large Language Models [2024-FSE]

- 🔥Fine-tuning Large Language Models to Improve Accuracy and Comprehensibility of Automated Code Review[2024-TOSEM]

- Can LLMs Replace Manual Annotation of Software Engineering Artifacts? [2024-arXiv]

- Improving the learning of code review successive tasks with cross-task knowledge distillation [2024-FSE]

- A GPT-based Code Review System for Programming Language Learning [2024-arXiv]

- Exploring the Capabilities of LLMs for Code Change Related Tasks [2024-arXiv]

- Incivility Detection in Open Source Code Review and Issue Discussions [2024-JSS]

- Augmenting commit classification by using fine-grained source code changes and a pre-trained deep neural language model [2021-IST]

- Exploring the Potential of ChatGPT in Automated Code Refinement: An Empirical Study [2023-ICSE]

- Automated Summarization of Stack Overflow Posts [2023-ICSE]

- Evaluating Language Models for Generating and Judging Programming Feedback [2024-arXiv]

- AUGER: automatically generating review comments with pre-training models [2022-FSE]

- Automating code review activities by large-scale pre-training [2022-FSE]

- Improving Code Refinement for Code Review Via Input Reconstruction and Ensemble Learning [2023-APSEC]

- LLaMA-Reviewer: Advancing code review automation with large language models through parameter-efficient fine-tuning [2023-ISSRE]

- LLM Critics Help Catch LLM Bugs [2024-arXiv]

- Fine-tuning and prompt engineering for large language models-based code review automation [2024-IST]

- AI-powered Code Review with LLMs: Early Results [2024-arXiv]

- A Multi-Step Learning Approach to Assist Code Review [2023-SANER]

- Code Review Automation: Strengths and Weaknesses of the State of the Art [2024-TSE]

- Using Pre-Trained Models to Boost Code Review Automation [2022-ICSE]

- AI-Assisted Assessment of Coding Practices in Modern Code Review [2024-arXiv]

- Explaining Explanation: An Empirical Study on Explanation in Code Reviews [2023-arXiv]

- Aspect-based API review classification: How far can pre-trained transformer model go? [2022-SANER]

- Automatic Code Review by Learning the Structure Information of Code Graph [2023-Sensors]

- The Right Prompts for the Job: Repair Code-Review Defects with Large Language Model [2023-arXiv]

- Pre-trained Model Based Feature Envy Detection [2023-MSR]

- CommitBERT: Commit message generation using pre-trained programming language model [2021-nlp4prog]

- Only diff is Not Enough: Generating Commit Messages Leveraging Reasoning and Action of Large Language Model [2024-FSE]

- Commit Messages in the Age of Large Language Models [2024-arXiv]

- Automated Commit Message Generation with Large Language Models: An Empirical Study and Beyond [2024-arXiv]

- Automatic Commit Message Generation: A Critical Review and Directions for Future Work [2024-TSE]

- Large Language Models for Compiler Optimization [2023-arXiv]

- Meta Large Language Model Compiler: Foundation Models of Compiler Optimization [2024-arXiv]

- ViC: Virtual Compiler Is All You Need For Assembly Code Search [2024-arXiv]

- Priority Sampling of Large Language Models for Compilers [2024-arXiv]

- Should AI Optimize Your Code? A Comparative Study of Current Large Language Models Versus Classical Optimizing Compilers [2024-arXiv]

- Learning Performance-Improving Code Edits [2023-ICLR]

- Isolating Compiler Bugs by Generating Effective Witness Programs With Large Language Models [2024-TSE]

- Iterative or Innovative? A Problem-Oriented Perspective for Code Optimization [2024-arXiv]

- Explainable Automated Debugging via Large Language Model-driven Scientific Debugging [2023-arXiv]

- Programming Assistant for Exception Handling with CodeBERT [2024-ICSE]

- Flakify: a black-box, language model-based predictor for flaky tests [2022-TSE]

- Recommending Root-Cause and Mitigation Steps for Cloud Incidents using Large Language Models [2023-ICSE]

- Xpert: Empowering Incident Management with Query Recommendations via Large Language Models [2024-ICSE]

- Impact of data quality for automatic issue classification using pre-trained language models [2024-JSS]

- Leveraging GPT-like LLMs to Automate Issue Labeling [2024-MSR]

- GLOSS: Guiding Large Language Models to Answer Questions from System Logs [2024-SANER]

- ULog: Unsupervised Log Parsing with Large Language Models through Log Contrastive Units [2024-arXiv]

- LILAC: Log Parsing using LLMs with Adaptive Parsing Cache [2024-FSE]

- Log Parsing with Prompt-based Few-shot Learning [2023-ICSE]

- Log Parsing: How Far Can ChatGPT Go? [2023-ASE]

- Exploring the Effectiveness of LLMs in Automated Logging Generation: An Empirical Study [2023-arXiv]

- Interpretable Online Log Analysis Using Large Language Models with Prompt Strategies [2024-ICPC]

- LogPrompt: Prompt Engineering Towards Zero-Shot and Interpretable Log Analysis [2023-arXiv]

- KnowLog: Knowledge Enhanced Pre-trained Language Model for Log Understanding [2024-ICSE]

- LLMParser: An Exploratory Study on Using Large Language Models for Log Parsing [2024-ICSE]

- Using deep learning to generate complete log statements [2022-ICSE]

- Log statements generation via deep learning: Widening the support provided to developers [2024-JSS]

- The Effectiveness of Compact Fine-Tuned LLMs for Log Parsing [2024-ICSME]

- An Assessment of ChatGPT on Log Data [2023-AIGC]

- LogStamp: Automatic Online Log Parsing Based on Sequence Labelling [2022-SIGMETRICS]

- Log Parsing with Self-Generated In-Context Learning and Self-Correction [2024-arXiv]

- Stronger, Faster, and Cheaper Log Parsing with LLMs [2024-arXiv]

- UniLog: Automatic Logging via LLM and In-Context Learning [2024-ICSE]

- Log Parsing with Generalization Ability under New Log Types [2023-FSE]

- A Comparative Study on Large Language Models for Log Parsing [2024-ESEM]

- Log Sequence Anomaly Detection based on Template and Parameter Parsing via BERT [2024-TDSC]

- LogBERT: Log Anomaly Detection via BERT [2021-IJCNN]

- Anomaly Detection on Unstable Logs with GPT Models [2024-arXiv]

- Parameter-Efficient Log Anomaly Detection based on Pre-training model and LORA [2023-ISSRE]