LLM-eval-survey

The official GitHub page for the survey paper "A Survey on Evaluation of Large Language Models".

Stars: 1510

LLM-eval-survey is a collection of papers and resources related to evaluations on large language models. It includes a survey on the evaluation of large language models, covering various aspects such as natural language processing, robustness, ethics, biases, trustworthiness, social science, natural science, engineering, medical applications, agent applications, and other applications. The repository provides a comprehensive overview of different evaluation tasks and benchmarks for large language models.

README:

Yupeng Chang*1   Xu Wang*1   Jindong Wang#2   Yuan Wu#1   Kaijie Zhu3   Hao Chen4   Linyi Yang5   Xiaoyuan Yi2   Cunxiang Wang5   Yidong Wang6   Wei Ye6   Yue Zhang5   Yi Chang1   Philip S. Yu7   Qiang Yang8   Xing Xie2

1 Jilin University,

2 Microsoft Research,

3 Institute of Automation, CAS,

4 Carnegie Mellon University,

5 Westlake University,

6 Peking University,

7 University of Illinois,

8 Hong Kong University of Science and Technology

(*: Co-first authors, #: Co-corresponding authors)

The papers are organized according to our survey: A Survey on Evaluation of Large Language Models.

NOTE: As we cannot update the arXiv paper in real time, please refer to this repo for the latest updates; the paper may be updated later. We also welcome pull requests and issues that help us improve this survey. Your contributions will be credited in the acknowledgements.

Related projects:

- Prompt benchmark for large language models: [PromptBench: robustness evaluation of LLMs]

- Evaluation of large language models: [LLM-eval]

Table of Contents

- [12/07/2023] The second version of the paper is released on arXiv, along with a Chinese blog.

- [07/07/2023] The first version of the paper is released on arXiv: A Survey on Evaluation of Large Language Models.

- A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. Yejin Bang et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- Holistic Evaluation of Language Models. Percy Liang et al. arXiv 2022. [paper]

- Can ChatGPT forecast stock price movements? return predictability and large language models. Alejandro Lopez-Lira et al. SSRN 2023. [paper]

- Is ChatGPT a general-purpose natural language processing task solver? Chengwei Qin et al. arXiv 2023. [paper]

- Is ChatGPT a Good Sentiment Analyzer? A Preliminary Study. Zengzhi Wang et al. arXiv 2023. [paper]

- Sentiment analysis in the era of large language models: A reality check. Wenxuan Zhang et al. arXiv 2023. [paper]

- Holistic evaluation of language models. Percy Liang et al. arXiv 2022. [paper]

- Leveraging large language models for topic classification in the domain of public affairs. Alejandro Peña et al. arXiv 2023. [paper]

- Large language models can rate news outlet credibility. Kai-Cheng Yang et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- Can Large Language Models Infer and Disagree like Humans? Noah Lee et al. arXiv 2023. [paper]

- Is ChatGPT a general-purpose natural language processing task solver? Chengwei Qin et al. arXiv 2023. [paper]

- Do LLMs Understand Social Knowledge? Evaluating the Sociability of Large Language Models with SocKET Benchmark. Minje Choi et al. arXiv 2023. [paper]

- The two word test: A semantic benchmark for large language models. Nicholas Riccardi et al. arXiv 2023. [paper]

- EvEval: A Comprehensive Evaluation of Event Semantics for Large Language Models. Zhengwei Tao et al. arXiv 2023. [paper]

- A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. Yejin Bang et al. arXiv 2023. [paper]

- ChatGPT is a knowledgeable but inexperienced solver: An investigation of commonsense problem in large language models. Ning Bian et al. arXiv 2023. [paper]

- Chain-of-Thought Hub: A continuous effort to measure large language models' reasoning performance. Yao Fu et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- Large Language Models Are Not Abstract Reasoners, Gaël Gendron et al. arXiv 2023. [paper]

- Can large language models reason about medical questions? Valentin Liévin et al. arXiv 2023. [paper]

- Evaluating the Logical Reasoning Ability of ChatGPT and GPT-4. Hanmeng Liu et al. arXiv 2023. [paper]

- Mathematical Capabilities of ChatGPT. Simon Frieder et al. arXiv 2023. [paper]

- Human-like problem-solving abilities in large language models using ChatGPT. Graziella Orrù et al. Front. Artif. Intell. 2023. [paper]

- Is ChatGPT a general-purpose natural language processing task solver? Chengwei Qin et al. arXiv 2023. [paper]

- Testing the general deductive reasoning capacity of large language models using OOD examples. Abulhair Saparov et al. arXiv 2023. [paper]

- MindGames: Targeting Theory of Mind in Large Language Models with Dynamic Epistemic Modal Logic. Damien Sileo et al. arXiv 2023. [paper]

- Reasoning or Reciting? Exploring the Capabilities and Limitations of Language Models Through Counterfactual Tasks. Zhaofeng Wu et al. arXiv 2023. [paper]

- Are large language models really good logical reasoners? a comprehensive evaluation from deductive, inductive and abductive views. Fangzhi Xu et al. arXiv 2023. [paper]

- Efficiently Measuring the Cognitive Ability of LLMs: An Adaptive Testing Perspective. Yan Zhuang et al. arXiv 2023. [paper]

- Autoformalization with Large Language Models. Yuhuai Wu et al. NeurIPS 2022. [paper]

- Evaluating and Improving Tool-Augmented Computation-Intensive Math Reasoning. Beichen Zhang et al. arXiv 2023. [paper]

- StructGPT: A General Framework for Large Language Model to Reason over Structured Data. Jinhao Jiang et al. arXiv 2023. [paper]

- Unifying Large Language Models and Knowledge Graphs: A Roadmap. Shirui Pan et al. arXiv 2023. [paper]

- A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. Yejin Bang et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- Holistic Evaluation of Language Models. Percy Liang et al. arXiv 2022. [paper]

- ChatGPT vs Human-authored text: Insights into controllable text summarization and sentence style transfer. Dongqi Pu et al. arXiv 2023. [paper]

- Is ChatGPT a general-purpose natural language processing task solver? Chengwei Qin et al. arXiv 2023. [paper]

- A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. Yejin Bang et al. arXiv 2023. [paper]

- LLM-Eval: Unified Multi-Dimensional Automatic Evaluation for Open-Domain Conversations with Large Language Models. Yen-Ting Lin et al. arXiv 2023. [paper]

- Is ChatGPT a general-purpose natural language processing task solver? Chengwei Qin et al. arXiv 2023. [paper]

- LMSYS-Chat-1M: A Large-Scale Real-World LLM Conversation Dataset. Lianmin Zheng et al. arXiv 2023. [paper]

- A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. Yejin Bang et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- Translating Radiology Reports into Plain Language using ChatGPT and GPT-4 with Prompt Learning: Promising Results, Limitations, and Potential. Qing Lyu et al. arXiv 2023. [paper]

- Document-Level Machine Translation with Large Language Models. Longyue Wang et al. arXiv 2023. [paper]

- Case Study of Improving English-Arabic Translation Using the Transformer Model. Donia Gamal et al. IJICIS 2023. [paper]

- Taqyim: Evaluating Arabic NLP Tasks Using ChatGPT Models. Zaid Alyafeai et al. arXiv 2023. [paper]

- Benchmarking Foundation Models with Language-Model-as-an-Examiner. Yushi Bai et al. arXiv 2023. [paper]

- A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. Yejin Bang et al. arXiv 2023. [paper]

- ChatGPT is a knowledgeable but inexperienced solver: An investigation of commonsense problem in large language models. Ning Bian et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- Holistic Evaluation of Language Models. Percy Liang et al. arXiv 2022. [paper]

- Is ChatGPT a general-purpose natural language processing task solver? Chengwei Qin et al. arXiv 2023. [paper]

- Exploring the use of large language models for reference-free text quality evaluation: A preliminary empirical study. Yi Chen et al. arXiv 2023. [paper]

- INSTRUCTEVAL: Towards Holistic Evaluation of Instruction-Tuned Large Language Models. Yew Ken Chia et al. arXiv 2023. [paper]

- ChatGPT vs Human-authored Text: Insights into Controllable Text Summarization and Sentence Style Transfer. Dongqi Pu et al. arXiv 2023. [paper]

- Benchmarking Arabic AI with large language models. Ahmed Abdelali et al. arXiv 2023. [paper]

- MEGA: Multilingual Evaluation of Generative AI. Kabir Ahuja et al. arXiv 2023. [paper]

- A Multitask, Multilingual, Multimodal Evaluation of ChatGPT on Reasoning, Hallucination, and Interactivity. Yejin Bang et al. arXiv 2023. [paper]

- ChatGPT beyond English: Towards a comprehensive evaluation of large language models in multilingual learning. Viet Dac Lai et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- M3Exam: A Multilingual, Multimodal, Multilevel Benchmark for Examining Large Language Models. Wenxuan Zhang et al. arXiv 2023. [paper]

- Measuring Massive Multitask Chinese Understanding. Hui Zeng et al. arXiv 2023. [paper]

- CMMLU: Measuring massive multitask language understanding in Chinese. Haonan Li et al. arXiv 2023. [paper]

- TrueTeacher: Learning Factual Consistency Evaluation with Large Language Models. Zorik Gekhman et al. arXiv 2023. [paper]

- TRUE: Re-evaluating Factual Consistency Evaluation. Or Honovich et al. arXiv 2022. [paper]

- SelfCheckGPT: Zero-Resource Black-Box Hallucination Detection for Generative Large Language Models. Potsawee Manakul et al. arXiv 2023. [paper]

- FActScore: Fine-grained Atomic Evaluation of Factual Precision in Long Form Text Generation. Sewon Min et al. arXiv 2023. [paper]

- Measuring and Modifying Factual Knowledge in Large Language Models. Pouya Pezeshkpour. arXiv 2023. [paper]

- Evaluating Open-QA Evaluation. Cunxiang Wang et al. arXiv 2023. [paper]

- A Survey on Out-of-Distribution Evaluation of Neural NLP Models. Xinzhe Li et al. arXiv 2023. [paper]

- Mitigating Hallucination in Large Multi-Modal Models via Robust Instruction Tuning. Fuxiao Liu et al. arXiv 2023. [paper]

- Generalizing to Unseen Domains: A Survey on Domain Generalization. Jindong Wang et al. TKDE 2022. [paper]

- On the Robustness of ChatGPT: An Adversarial and Out-of-distribution Perspective. Jindong Wang et al. arXiv 2023. [paper]

- GLUE-X: Evaluating Natural Language Understanding Models from an Out-of-Distribution Generalization Perspective. Linyi Yang et al. arXiv 2022. [paper]

- On Evaluating Adversarial Robustness of Large Vision-Language Models. Yunqing Zhao et al. arXiv 2023. [paper]

- PromptBench: Towards Evaluating the Robustness of Large Language Models on Adversarial Prompts. Kaijie Zhu et al. arXiv 2023. [paper]

- On Robustness of Prompt-based Semantic Parsing with Large Pre-trained Language Model: An Empirical Study on Codex. Terry Yue Zhuo et al. arXiv 2023. [paper]

- Assessing Cross-Cultural Alignment between ChatGPT and Human Societies: An Empirical Study. Yong Cao et al. C3NLP@EACL 2023. [paper]

- Toxicity in ChatGPT: Analyzing persona-assigned language models. Ameet Deshpande et al. arXiv 2023. [paper]

- BOLD: Dataset and Metrics for Measuring Biases in Open-Ended Language Generation. Jwala Dhamala et al. FAccT 2021. [paper]

- Should ChatGPT be Biased? Challenges and Risks of Bias in Large Language Models. Emilio Ferrara. arXiv 2023. [paper]

- RealToxicityPrompts: Evaluating Neural Toxic Degeneration in Language Models. Samuel Gehman et al. EMNLP 2020. [paper]

- The political ideology of conversational AI: Converging evidence on ChatGPT's pro-environmental, left-libertarian orientation. Jochen Hartmann et al. arXiv 2023. [paper]

- Aligning AI With Shared Human Values. Dan Hendrycks et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- BBQ: A hand-built bias benchmark for question answering. Alicia Parrish et al. ACL 2022. [paper]

- The Self-Perception and Political Biases of ChatGPT. Jérôme Rutinowski et al. arXiv 2023. [paper]

- Societal Biases in Language Generation: Progress and Challenges. Emily Sheng et al. ACL-IJCNLP 2021. [paper]

- Moral Mimicry: Large Language Models Produce Moral Rationalizations Tailored to Political Identity. Gabriel Simmons et al. arXiv 2022. [paper]

- Large Language Models are not Fair Evaluators. Peiyi Wang et al. arXiv 2023. [paper]

- Exploring AI Ethics of ChatGPT: A Diagnostic Analysis. Terry Yue Zhuo et al. arXiv 2023. [paper]

- CHBias: Bias Evaluation and Mitigation of Chinese Conversational Language Models. Jiaxu Zhao et al. ACL 2023. [paper]

- Human-Like Intuitive Behavior and Reasoning Biases Emerged in Language Models -- and Disappeared in GPT-4. Thilo Hagendorff et al. arXiv 2023. [paper]

- DecodingTrust: A Comprehensive Assessment of Trustworthiness in GPT Models. Boxin Wang et al. arXiv 2023. [paper]

- Mitigating Hallucination in Large Multi-Modal Models via Robust Instruction Tuning. Fuxiao Liu et al. arXiv 2023. [paper]

- Evaluating Object Hallucination in Large Vision-Language Models. Yifan Li et al. arXiv 2023. [paper]

- A Survey of Hallucination in Large Foundation Models. Vipula Rawte et al. arXiv 2023. [paper]

- Siren's Song in the AI Ocean: A Survey on Hallucination in Large Language Models. Yue Zhang et al. arXiv 2023. [paper]

- Beyond Factuality: A Comprehensive Evaluation of Large Language Models as Knowledge Generators. Liang Chen et al. EMNLP 2023. [paper]

- Ask Again, Then Fail: Large Language Models' Vacillations in Judgement. Qiming Xie et al. arXiv 2023. [paper]

- How ready are pre-trained abstractive models and LLMs for legal case judgement summarization. Aniket Deroy et al. arXiv 2023. [paper]

- Baby steps in evaluating the capacities of large language models. Michael C. Frank. Nature Reviews Psychology 2023. [paper]

- Large Language Models as Tax Attorneys: A Case Study in Legal Capabilities Emergence. John J. Nay et al. arXiv 2023. [paper]

- Large language models can be used to estimate the ideologies of politicians in a zero-shot learning setting. Patrick Y. Wu et al. arXiv 2023. [paper]

- Can large language models transform computational social science? Caleb Ziems et al. arXiv 2023. [paper]

- Have LLMs Advanced Enough? A Challenging Problem Solving Benchmark for Large Language Models. Daman Arora et al. arXiv 2023. [paper]

- Sparks of Artificial General Intelligence: Early experiments with GPT-4. Sébastien Bubeck et al. arXiv 2023. [paper]

- Evaluating Language Models for Mathematics through Interactions. Katherine M. Collins et al. arXiv 2023. [paper]

- Investigating the effectiveness of ChatGPT in mathematical reasoning and problem solving: Evidence from the Vietnamese national high school graduation examination. Xuan-Quy Dao et al. arXiv 2023. [paper]

- A Systematic Study and Comprehensive Evaluation of ChatGPT on Benchmark Datasets, Laskar et al. ACL 2023 (Findings). [paper]

- CMATH: Can Your Language Model Pass Chinese Elementary School Math Test? Tianwen Wei et al. arXiv 2023. [paper]

- An empirical study on challenging math problem solving with GPT-4. Yiran Wu et al. arXiv 2023. [paper]

- How well do Large Language Models perform in Arithmetic Tasks? Zheng Yuan et al. arXiv 2023. [paper]

- MetaMath: Bootstrap Your Own Mathematical Questions for Large Language Models. Longhui Yu et al. arXiv 2023. [paper]

- Have LLMs Advanced Enough? A Challenging Problem Solving Benchmark for Large Language Models. Daman Arora et al. arXiv 2023. [paper]

- Do Large Language Models Understand Chemistry? A Conversation with ChatGPT. Castro Nascimento CM et al. JCIM 2023. [paper]

- What indeed can GPT models do in chemistry? A comprehensive benchmark on eight tasks. Taicheng Guo et al. arXiv 2023. [paper][GitHub]

- Sparks of Artificial General Intelligence: Early Experiments with GPT-4. Sébastien Bubeck et al. arXiv 2023. [paper]

- Is your code generated by ChatGPT really correct? Rigorous evaluation of large language models for code generation. Jiawei Liu et al. arXiv 2023. [paper]

- Understanding the Capabilities of Large Language Models for Automated Planning. Vishal Pallagani et al. arXiv 2023. [paper]

- ChatGPT: A study on its utility for ubiquitous software engineering tasks. Giriprasad Sridhara et al. arXiv 2023. [paper]

- Large language models still can't plan (A benchmark for LLMs on planning and reasoning about change). Karthik Valmeekam et al. arXiv 2022. [paper]

- On the Planning Abilities of Large Language Models – A Critical Investigation. Karthik Valmeekam et al. arXiv 2023. [paper]

- Efficiently Measuring the Cognitive Ability of LLMs: An Adaptive Testing Perspective. Yan Zhuang et al. arXiv 2023. [paper]

- The promise and peril of using a large language model to obtain clinical information: ChatGPT performs strongly as a fertility counseling tool with limitation. Joseph Chervenak M.D. et al. Fertility and Sterility 2023. [paper]

- Analysis of large-language model versus human performance for genetics questions. Dat Duong et al. European Journal of Human Genetics 2023. [paper]

- Evaluation of AI Chatbots for Patient-Specific EHR Questions. Alaleh Hamidi et al. arXiv 2023. [paper]

- Evaluating Large Language Models on a Highly-specialized Topic, Radiation Oncology Physics. Jason Holmes et al. arXiv 2023. [paper]

- Evaluation of ChatGPT on Biomedical Tasks: A Zero-Shot Comparison with Fine-Tuned Generative Transformers. Israt Jahan et al. arXiv 2023. [paper]

- Assessing the Accuracy and Reliability of AI-Generated Medical Responses: An Evaluation of the Chat-GPT Model. Douglas Johnson et al. Research Square 2023. [paper]

- Assessing the Accuracy of Responses by the Language Model ChatGPT to Questions Regarding Bariatric Surgery. Jamil S. Samaan et al. Obesity Surgery 2023. [paper]

- Trialling a Large Language Model (ChatGPT) in General Practice With the Applied Knowledge Test: Observational Study Demonstrating Opportunities and Limitations in Primary Care. Arun James Thirunavukarasu et al. JMIR Med Educ. 2023. [paper]

- CARE-MI: Chinese Benchmark for Misinformation Evaluation in Maternity and Infant Care. Tong Xiang et al. arXiv 2023. [paper]

- How Does ChatGPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. Aidan Gilson et al. JMIR Med Educ. 2023. [paper]

- Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. Tiffany H. Kung et al. PLOS Digit Health. 2023. [paper]

- Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. Marco Cascella et al. Journal of Medical Systems 2023. [paper]

- covLLM: Large Language Models for COVID-19 Biomedical Literature. Yousuf A. Khan et al. arXiv 2023. [paper]

- Evaluating the use of large language model in identifying top research questions in gastroenterology. Adi Lahat et al. Scientific reports 2023. [paper]

- Translating Radiology Reports into Plain Language using ChatGPT and GPT-4 with Prompt Learning: Promising Results, Limitations, and Potential. Qing Lyu et al. arXiv 2023. [paper]

- ChatGPT goes to the operating room: Evaluating GPT-4 performance and its potential in surgical education and training in the era of large language models. Namkee Oh et al. Ann Surg Treat Res. 2023. [paper]

- Can LLMs like GPT-4 outperform traditional AI tools in dementia diagnosis? Maybe, but not today. Zhuo Wang et al. arXiv 2023. [paper]

- Language Is Not All You Need: Aligning Perception with Language Models. Shaohan Huang et al. arXiv 2023. [paper]

- MRKL Systems: A modular, neuro-symbolic architecture that combines large language models, external knowledge sources and discrete reasoning. Ehud Karpas et al. [paper]

- The Unsurprising Effectiveness of Pre-Trained Vision Models for Control. Simone Parisi et al. ICML 2022. [paper]

- Tool Learning with Foundation Models. Yujia Qin et al. arXiv 2023. [paper]

- ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs. Yujia Qin et al. arXiv 2023. [paper]

- Toolformer: Language Models Can Teach Themselves to Use Tools. Timo Schick et al. arXiv 2023. [paper]

- HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face. Yongliang Shen et al. arXiv 2023. [paper]

- Can Large Language Models Provide Feedback to Students? A Case Study on ChatGPT. Wei Dai et al. ICALT 2023. [paper]

- Can ChatGPT pass high school exams on English Language Comprehension? Joost de Winter. ResearchGate. [paper]

- Exploring the Responses of Large Language Models to Beginner Programmers' Help Requests. Arto Hellas et al. arXiv 2023. [paper]

- Is ChatGPT a Good Teacher Coach? Measuring Zero-Shot Performance For Scoring and Providing Actionable Insights on Classroom Instruction. Rose E. Wang et al. arXiv 2023. [paper]

- CMATH: Can Your Language Model Pass Chinese Elementary School Math Test? Tianwen Wei et al. arXiv 2023. [paper]

- Uncovering ChatGPT's Capabilities in Recommender Systems. Sunhao Dai et al. arXiv 2023. [paper]

- Recommender Systems in the Era of Large Language Models (LLMs). Wenqi Fan et al. ResearchGate. [paper]

- Exploring the Upper Limits of Text-Based Collaborative Filtering Using Large Language Models: Discoveries and Insights. Ruyu Li et al. arXiv 2023. [paper]

- Is ChatGPT Good at Search? Investigating Large Language Models as Re-Ranking Agent. Weiwei Sun et al. arXiv 2023. [paper]

- ChatGPT vs. Google: A Comparative Study of Search Performance and User Experience. Ruiyun Xu et al. arXiv 2023. [paper]

- Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited. Zheng Yuan et al. arXiv 2023. [paper]

- Is ChatGPT Fair for Recommendation? Evaluating Fairness in Large Language Model Recommendation. Jizhi Zhang et al. arXiv 2023. [paper]

- Zero-shot recommendation as language modeling. Damien Sileo et al. ECIR 2022. [paper]

- ChatGPT is fun, but it is not funny! Humor is still challenging Large Language Models. Sophie Jentzsch et al. arXiv 2023. [paper]

- Leveraging Word Guessing Games to Assess the Intelligence of Large Language Models. Tian Liang et al. arXiv 2023. [paper]

- Personality Traits in Large Language Models. Mustafa Safdari et al. arXiv 2023. [paper]

- Have Large Language Models Developed a Personality?: Applicability of Self-Assessment Tests in Measuring Personality in LLMs. Xiaoyang Song et al. arXiv 2023. [paper]

- Emotional Intelligence of Large Language Models. Xuena Wang et al. arXiv 2023. [paper]

- ChatGPT and Other Large Language Models as Evolutionary Engines for Online Interactive Collaborative Game Design. Pier Luca Lanzi et al. arXiv 2023. [paper]

- An Evaluation of Log Parsing with ChatGPT. Van-Hoang Le et al. arXiv 2023. [paper]

- PandaLM: An Automatic Evaluation Benchmark for LLM Instruction Tuning Optimization. Yidong Wang et al. arXiv 2023. [paper]

The paper lists several popular benchmarks. For better summarization, these benchmarks are divided into two categories: general language task benchmarks and specific downstream task benchmarks.

NOTE: We may have missed some benchmarks. Your suggestions are very welcome!

| Benchmark | Focus | Domain | Evaluation Criteria |

|---|---|---|---|

| SOCKET [paper] | Social knowledge | Specific downstream task | Social language understanding |

| MME [paper] | Multimodal LLMs | Multi-modal task | Ability of perception and cognition |

| Xiezhi [paper][GitHub] | Comprehensive domain knowledge | General language task | Overall performance across multiple benchmarks |

| Choice-75 [paper][GitHub] | Script learning | Specific downstream task | Overall performance of LLMs |

| CUAD [paper] | Legal contract review | Specific downstream task | Legal contract understanding |

| TRUSTGPT [paper] | Ethics | Specific downstream task | Toxicity, bias, and value-alignment |

| MMLU [paper] | Text models | General language task | Multitask accuracy |

| MATH [paper] | Mathematical problem | Specific downstream task | Mathematical ability |

| APPS [paper] | Coding challenge competence | Specific downstream task | Code generation ability |

| CELLO [paper][GitHub] | Complex instructions | Specific downstream task | Count limit, answer format, task-prescribed phrases and input-dependent query |

| C-Eval [paper][GitHub] | Chinese evaluation | General language task | 52 Exams in a Chinese context |

| EmotionBench [paper] | Empathy ability | Specific downstream task | Emotional changes |

| OpenLLM [Link] | Chatbots | General language task | Leaderboard rankings |

| DynaBench [paper] | Dynamic evaluation | General language task | NLI, QA, sentiment, and hate speech |

| Chatbot Arena [Link] | Chat assistants | General language task | Crowdsourcing and Elo rating system |

| AlpacaEval [GitHub] | Automated evaluation | General language task | Metrics, robustness, and diversity |

| CMMLU [paper][GitHub] | Chinese multi-tasking | Specific downstream task | Multi-task language understanding capabilities |

| HELM [paper][Link] | Holistic evaluation | General language task | Multi-metric |

| API-Bank [paper] | Tool-augmented | Specific downstream task | API call, response, and planning |

| M3KE [paper] | Multi-task | Specific downstream task | Multi-task accuracy |

| MMBench [paper][GitHub] | Large vision-language models (LVLMs) | Multi-modal task | Multifaceted capabilities of VLMs |

| SEED-Bench [paper][GitHub] | Multi-modal Large Language Models | Multi-modal task | Generative understanding of MLLMs |

| ARB [paper] | Advanced reasoning ability | Specific downstream task | Multidomain advanced reasoning ability |

| BIG-bench [paper][GitHub] | Capabilities and limitations of LMs | General language task | Model performance and calibration |

| MultiMedQA [paper] | Medical QA | Specific downstream task | Accuracy and human evaluation |

| CVALUES [paper] [GitHub] | Safety and responsibility | Specific downstream task | Alignment ability of LLMs |

| LVLM-eHub [paper] | LVLMs | Multi-modal task | Multimodal capabilities of LVLMs |

| ToolBench [GitHub] | Software tools | Specific downstream task | Execution success rate |

| FRESHQA [paper] [GitHub] | Dynamic QA | Specific downstream task | Correctness and hallucination |

| CMB [paper] [Link] | Chinese comprehensive medicine | Specific downstream task | Expert evaluation and automatic evaluation |

| PandaLM [paper] [GitHub] | Instruction tuning | General language task | Winrate judged by PandaLM |

| MINT [paper] [GitHub] | Multi-turn interaction, tools and language feedback | Specific downstream task | Success rate with k-turn budget SRk |

| Dialogue CoT [paper] [GitHub] | In-depth dialogue | Specific downstream task | Helpfulness and acceptness of LLMs |

| BOSS [paper] [GitHub] | OOD robustness in NLP | General language task | OOD robustness |

| MM-Vet [paper] [GitHub] | Complicated multi-modal tasks | Multi-modal task | Integrated vision-language capabilities |

| LAMM [paper] [GitHub] | Multi-modal point clouds | Multi-modal task | Task-specific metrics |

| GLUE-X [paper] [GitHub] | OOD robustness for NLU tasks | General language task | OOD robustness |

| CONNER [paper][GitHub] | Knowledge-oriented evaluation | Knowledge-intensive task | Intrinsic and extrinsic metrics |

| KoLA [paper] | Knowledge-oriented evaluation | General language task | Self-contrast metrics |

| AGIEval [paper] | Human-centered foundational models | General language task | General |

| PromptBench [paper] [GitHub] | Adversarial prompt resilience | General language task | Adversarial robustness |

| MT-Bench [paper] | Multi-turn conversation | General language task | Winrate judged by GPT-4 |

| M3Exam [paper] [GitHub] | Multilingual, multimodal and multilevel | Specific downstream task | Task-specific metrics |

| GAOKAO-Bench [paper] | Chinese Gaokao examination | Specific downstream task | Accuracy and scoring rate |

| SafetyBench [paper] [GitHub] | Safety | Specific downstream task | Safety abilities of LLMs |

| LLMEval² [paper] [Link] | LLM Evaluator | General language task | Accuracy, Macro-F1 and Kappa Correlation Coefficient |

| FinanceBench [paper] [GitHub] | Finance Question and Answering | Specific downstream task | Accuracy compared with human annotated labels |

| LLMBox [paper] [GitHub] | Comprehensive model evaluation | General language task | Flexible and efficient evaluation on 59+ tasks |

| SciSafeEval [paper] [GitHub] [Huggingface] | Safety | Specific downstream task | Safety abilities of LLMs in Scientific Tasks |

We welcome contributions to LLM-eval-survey! If you'd like to contribute, please follow these steps:

- Fork the repository.

- Create a new branch with your changes.

- Submit a pull request with a clear description of your changes.

You can also open an issue if you have anything to add or comment on.

If you find this project useful in your research or work, please consider citing it:

@article{chang2023survey,

title={A Survey on Evaluation of Large Language Models},

author={Chang, Yupeng and Wang, Xu and Wang, Jindong and Wu, Yuan and Zhu, Kaijie and Chen, Hao and Yang, Linyi and Yi, Xiaoyuan and Wang, Cunxiang and Wang, Yidong and Ye, Wei and Zhang, Yue and Chang, Yi and Yu, Philip S. and Yang, Qiang and Xie, Xing},

journal={arXiv preprint arXiv:2307.03109},

year={2023}

}

- Tahmid Rahman (@tahmedge) for PR#1.

- Hao Zhao for suggestions on new benchmarks.

- Chenhui Zhang for suggestions on robustness, ethics, and trustworthiness.

- Damien Sileo (@sileod) for PR#2.

- Peiyi Wang (@Wangpeiyi9979) for issue#3.

- Zengzhi Wang for sentiment analysis.

- Kenneth Leung (@kennethleungty) for multiple PRs (#4, #5, #6).

- @Aml-Hassan-Abd-El-hamid for PR#7.

- @taichengguo for issue#9.


RLHF-Reward-Modeling

This repository, RLHF-Reward-Modeling, is dedicated to training reward models for DRL-based RLHF (PPO), Iterative SFT, and iterative DPO. It provides state-of-the-art performance in reward models with a base model size of up to 13B. The installation instructions involve setting up the environment and aligning the handbook. Dataset preparation requires preprocessing conversations into a standard format. The code can be run with Gemma-2b-it, and evaluation results can be obtained using provided datasets. The to-do list includes various reward models like Bradley-Terry, preference model, regression-based reward model, and multi-objective reward model. The repository is part of iterative rejection sampling fine-tuning and iterative DPO.

Efficient-LLMs-Survey

This repository provides a systematic and comprehensive review of research on efficient LLMs. We organize the literature in a taxonomy of three main categories, covering distinct yet interconnected topics from **model-centric**, **data-centric**, and **framework-centric** perspectives. We hope our survey and this GitHub repository can serve as valuable resources to help researchers and practitioners gain a systematic understanding of the research developments in efficient LLMs and inspire them to contribute to this important and exciting field.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

awesome-ai4db-paper

The 'awesome-ai4db-paper' repository is a curated paper list focusing on AI for database (AI4DB) theory, frameworks, resources, and tools for data engineers. It includes a collection of research papers related to learning-based query optimization, training data set preparation, cardinality estimation, query-driven approaches, data-driven techniques, hybrid methods, pretraining models, plan hints, cost models, SQL embedding, join order optimization, query rewriting, end-to-end systems, text-to-SQL conversion, traditional database technologies, storage solutions, learning-based index design, and a learning-based configuration advisor. The repository aims to provide a comprehensive resource for individuals interested in AI applications in the field of database management.

Awesome-explainable-AI

This repository contains frontier research on explainable AI (XAI), a hot topic in the field of artificial intelligence. It includes trends, use cases, survey papers, books, open courses, papers, and Python libraries related to XAI. The repository aims to organize and categorize publications on XAI, provide evaluation methods, and list various Python libraries for explainable AI.

Awesome-LLMs-on-device

Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

AwesomeLLM4APR

Awesome LLM for APR is a repository dedicated to exploring the capabilities of Large Language Models (LLMs) in Automated Program Repair (APR). It provides a comprehensive collection of research papers, tools, and resources related to using LLMs for various scenarios such as repairing semantic bugs, security vulnerabilities, syntax errors, programming problems, static warnings, self-debugging, type errors, web UI tests, smart contracts, hardware bugs, performance bugs, API misuses, crash bugs, test case repairs, formal proofs, GitHub issues, code reviews, motion planners, human studies, and patch correctness assessments. The repository serves as a valuable reference for researchers and practitioners interested in leveraging LLMs for automated program repair.

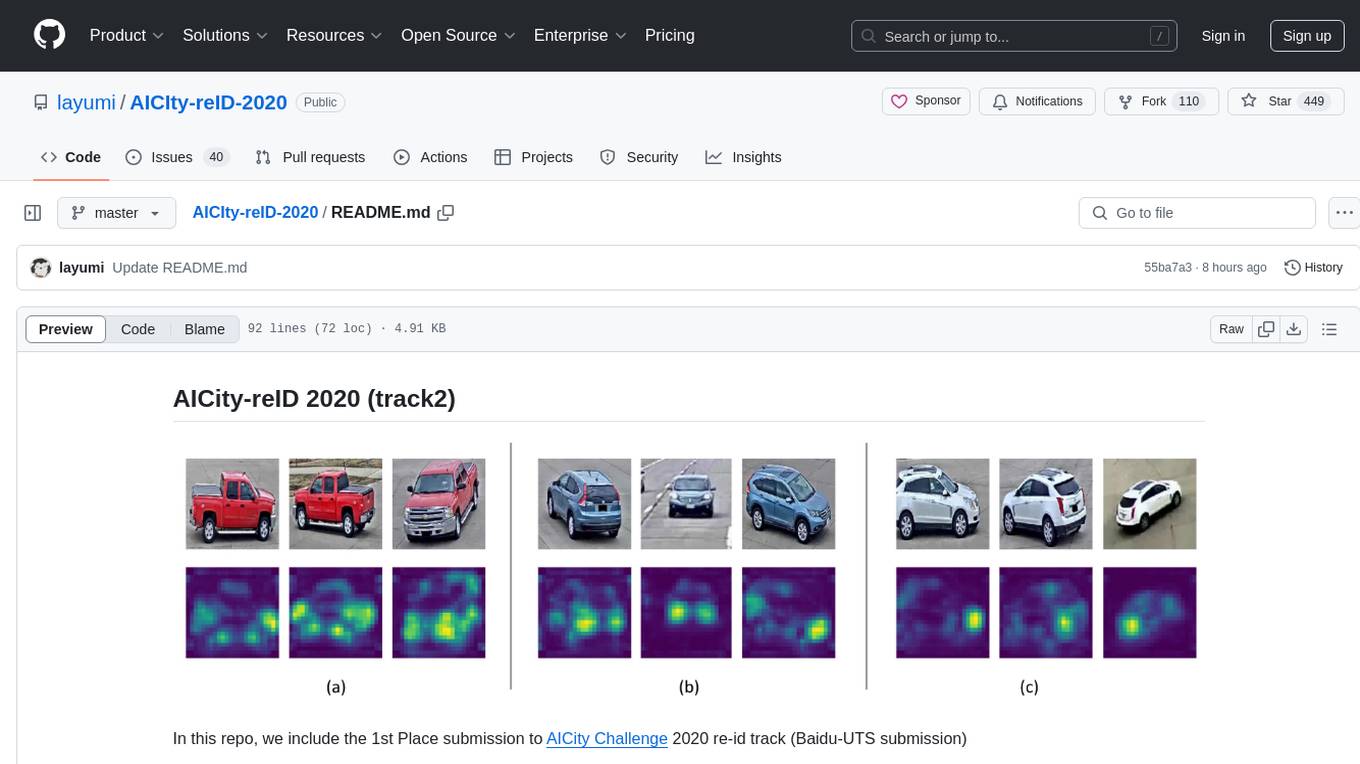

AICIty-reID-2020

AICIty-reID 2020 is a repository containing the 1st Place submission to the AICity Challenge 2020 re-id track by Baidu-UTS. It includes models trained on PaddlePaddle and PyTorch, with performance metrics and trained models provided. Users can extract features, perform camera and direction prediction, and access related repositories for drone-based building re-id, vehicle re-ID, person re-ID baseline, and person/vehicle generation. Citations are also provided for research purposes.

For similar tasks

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

adata

AData is a free and open-source A-share database that focuses on transaction-related data. It provides comprehensive data on stocks, including basic information, market data, and sentiment analysis. AData is designed to be easy to use and integrate with other applications, making it a valuable tool for quantitative trading and AI training.

hezar

Hezar is an all-in-one AI library designed specifically for the Persian community. It brings together various AI models and tools, making it easy to use AI with just a few lines of code. The library seamlessly integrates with Hugging Face Hub, offering a developer-friendly interface and task-based model interface. In addition to models, Hezar provides tools like word embeddings, tokenizers, feature extractors, and more. It also includes supplementary ML tools for deployment, benchmarking, and optimization.

text-embeddings-inference

Text Embeddings Inference (TEI) is a toolkit for deploying and serving open source text embeddings and sequence classification models. TEI enables high-performance extraction for popular models like FlagEmbedding, Ember, GTE, and E5. It implements features such as no model graph compilation step, Metal support for local execution on Macs, small docker images with fast boot times, token-based dynamic batching, optimized transformers code for inference using Flash Attention, Candle, and cuBLASLt, Safetensors weight loading, and production-ready features like distributed tracing with Open Telemetry and Prometheus metrics.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes an HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

spark-nlp

Spark NLP is a state-of-the-art Natural Language Processing library built on top of Apache Spark. It provides simple, performant, and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. Spark NLP comes with 36000+ pretrained pipelines and models in 200+ languages. It offers tasks such as Tokenization, Word Segmentation, Part-of-Speech Tagging, Named Entity Recognition, Dependency Parsing, Spell Checking, Text Classification, Sentiment Analysis, Token Classification, Machine Translation, Summarization, Question Answering, Table Question Answering, Text Generation, Image Classification, Image to Text (captioning), Automatic Speech Recognition, Zero-Shot Learning, and many more NLP tasks. Spark NLP is the only open-source NLP library in production that offers state-of-the-art transformers such as BERT, CamemBERT, ALBERT, ELECTRA, XLNet, DistilBERT, RoBERTa, DeBERTa, XLM-RoBERTa, Longformer, ELMO, Universal Sentence Encoder, Llama-2, M2M100, BART, Instructor, E5, Google T5, MarianMT, OpenAI GPT2, Vision Transformers (ViT), OpenAI Whisper, and many more, not only to Python and R but also to the JVM ecosystem (Java, Scala, and Kotlin), at scale by extending Apache Spark natively.

scikit-llm

Scikit-LLM is a tool that seamlessly integrates powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. It allows users to leverage large language models for various text analysis applications within the familiar scikit-learn framework. The tool simplifies the process of incorporating advanced language processing capabilities into machine learning pipelines, enabling users to benefit from the latest advancements in natural language processing.

For similar jobs

LLM-and-Law

This repository is dedicated to summarizing papers on large language models in the field of law. It includes applications of large language models in legal tasks, legal agents, legal problems of large language models, data resources for large language models in law, law LLMs, and evaluation of large language models in the legal domain.

start-llms

This repository is a comprehensive guide for individuals looking to start and improve their skills in Large Language Models (LLMs) without an advanced background in the field. It provides free resources, online courses, books, articles, and practical tips to become an expert in machine learning. The guide covers topics such as terminology, transformers, prompting, retrieval augmented generation (RAG), and more. It also includes recommendations for podcasts, YouTube videos, and communities to stay updated with the latest news in AI and LLMs.

aiverify

AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognised principles through standardised tests. It offers a new API Connector feature to bypass size limitations, test various AI frameworks, and configure connection settings for batch requests. The toolkit operates within an enterprise environment, conducting technical tests on common supervised learning models for tabular and image datasets. It does not define AI ethical standards or guarantee complete safety from risks or biases.

Awesome-LLM-Watermark

This repository contains a collection of research papers related to watermarking techniques for text and images, specifically focusing on large language models (LLMs). The papers cover various aspects of watermarking LLM-generated content, including robustness, statistical understanding, topic-based watermarks, quality-detection trade-offs, dual watermarks, watermark collision, and more. Researchers have explored different methods and frameworks for watermarking LLMs to protect intellectual property, detect machine-generated text, improve generation quality, and evaluate watermarking techniques. The repository serves as a valuable resource for those interested in the field of watermarking for LLMs.

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets to challenge and enhance language models, ensuring peak performance in various linguistic tasks. The tool offers more than 60 distinct types of tests with just one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMs for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.

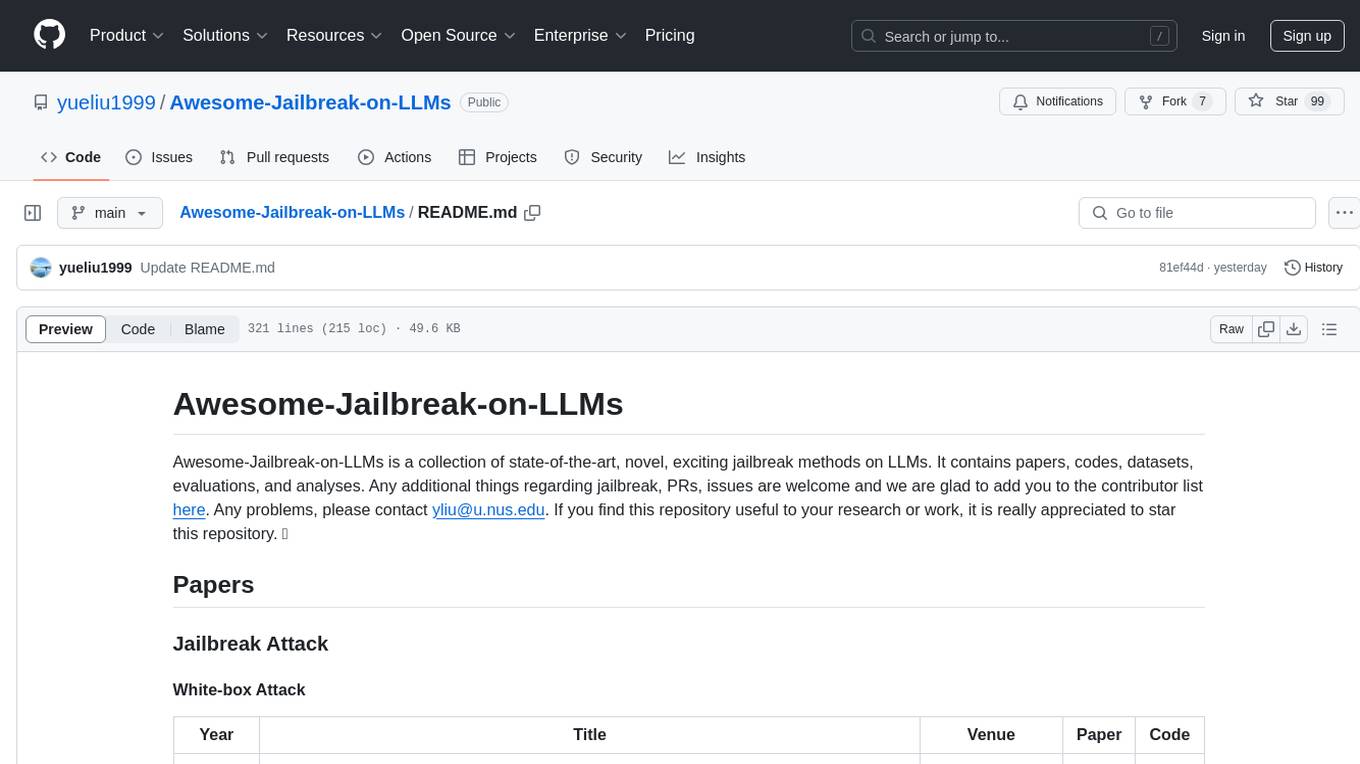

Awesome-Jailbreak-on-LLMs

Awesome-Jailbreak-on-LLMs is a collection of state-of-the-art, novel, and exciting jailbreak methods on Large Language Models (LLMs). The repository contains papers, codes, datasets, evaluations, and analyses related to jailbreak attacks on LLMs. It serves as a comprehensive resource for researchers and practitioners interested in exploring various jailbreak techniques and defenses in the context of LLMs. Contributions such as additional jailbreak-related content, pull requests, and issue reports are welcome, and contributors are acknowledged. If you find this repository useful for your research or work, consider starring it to show appreciation.