Recommendation-Systems-without-Explicit-ID-Features-A-Literature-Review

Paper List of Pre-trained Foundation Recommender Models

Stars: 261

This repository is a collection of papers and resources related to recommendation systems, focusing on foundation models, transferable recommender systems, large language models, and multimodal recommender systems. It explores questions such as the necessity of ID embeddings, the shift from matching to generating paradigms, and the future of multimodal recommender systems. The papers cover various aspects of recommendation systems, including pretraining, user representation, dataset benchmarks, and evaluation methods. The repository aims to provide insights and advancements in the field of recommendation systems through literature reviews, surveys, and empirical studies.

README:

Feel free to open an issue or submit a pull request!

Keywords: recommender system, pretraining, large language model, multimodal recommender system, transferable recommender system, foundation recommender models, universal user representation, one-model-fit-all, ID features, ID embeddings

These papers attempt to address the following questions:

(1) Can recommender systems have their own foundation models similar to those used in NLP and CV?

(2) Are ID embeddings necessary for recommender models? Can we replace or abandon them?

(3) Will recommender systems shift from a matching paradigm to a generating paradigm?

(4) How can LLMs be utilized to enhance recommender systems?

(5) What does the future hold for multimodal recommender systems?

- Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited, SIGIR2023, 2022/09, [paper] [code]

- Exploring the Upper Limits of Text-Based Collaborative Filtering Using Large Language Models: Discoveries and Insights, arxiv 2023/05, [paper]

- Exploring Adapter-based Transfer Learning for Recommender Systems: Empirical Studies and Practical Insights, WSDM2024, [paper] [code]

- The Elephant in the Room: Rethinking the Usage of Pre-trained Language Model in Sequential Recommendation, arxiv2024/04, [paper]

- NineRec: A Benchmark Dataset Suite for Evaluating Transferable Recommendation, TPAMI2024, [paper] [link] | Images, Text, Nine downstream datasets

- Tenrec: A Large-scale Multipurpose Benchmark Dataset for Recommender Systems, NeurIPS 2022 [paper]

- PixelRec: An Image Dataset for Benchmarking Recommender Systems with Raw Pixels, SDM 2023/09 [paper] |[link]| Images, Text, Tags, 200 million interactions

- MicroLens: A Content-Driven Micro-Video Recommendation Dataset at Scale [paper] [link] [DeepMind Talk] | Images, Text, Video, Audio, comments, tags, etc.

- MIND: A Large-scale Dataset for News Recommendation, ACL2020, [paper] | Text

- Parameter-Efficient Transfer from Sequential Behaviors for User Modeling and Recommendation, SIGIR 2020 [link]

- MoRec: [link] Netflix: [link] Amazon: [link]

- Exploring Multi-Scenario Multi-Modal CTR Prediction with a Large Scale Dataset, SIGIR 2024/07 [paper] |[link]| Images, Text, Multi-domain, True Negative, 100 million CTR data

- A Survey on Large Language Models for Recommendation, arxiv 2023/05, [paper]

- How Can Recommender Systems Benefit from Large Language Models: A Survey, arxiv 2023/06, [paper]

- Recommender Systems in the Era of Large Language Models, arxiv, 2023/07, [paper]

- A Survey on Evaluation of Large Language Models, arxiv, 2023/07, [paper]

- Self-Supervised Learning for Recommender Systems: A Survey, arxiv, 2023/06, [paper]

- Pre-train, Prompt and Recommendation: A Comprehensive Survey of Language Modelling Paradigm Adaptations in Recommender Systems, 2022/09, [paper]

- User Modeling in the Era of Large Language Models: Current Research and Future Directions,2023/12, [paper]

- USER MODELING AND USER PROFILING: A COMPREHENSIVE SURVEY,2024/02, [paper]

- Foundation Models for Recommender Systems: A Survey and New Perspectives, 2024/02, [paper]

- Multimodal Pretraining, Adaptation, and Generation for Recommendation: A Survey, 2024/05, [paper]

- Emergent Abilities of Large Language Models, TMLR 2022/08, [paper]

- Exploring the Upper Limits of Text-Based Collaborative Filtering Using Large Language Models: Discoveries and Insights, arxiv 2023/05, [paper]

- Do LLMs Understand User Preferences? Evaluating LLMs On User Rating Prediction, arxiv 2023/05, [paper]

- Scaling Law for Recommendation Models: Towards General-purpose User Representations, AAAI 2023, [paper]

- StackRec: Efficient Training of Very Deep Sequential Recommender Models by Iterative Stacking, SIGIR 2021, [paper]

- A User-Adaptive Layer Selection Framework for Very Deep Sequential Recommender Models, AAAI 2021, [paper]

- A Generic Network Compression Framework for Sequential Recommender Systems, SIGIR 2020, [paper]

- Scaling Law of Large Sequential Recommendation Models, arxiv 2023/11, [paper]

- Actions Speak Louder than Words: Trillion-Parameter Sequential Transducers for Generative Recommendations, arxiv 2024/03, [paper]

- Breaking the Length Barrier: LLM-Enhanced CTR Prediction in Long Textual User Behaviors, SIGIR 2024, [paper]

- M6-Rec: Generative Pretrained Language Models are Open-Ended Recommender Systems,arxiv 2022/05, [paper]

- TALLRec: An Effective and Efficient Tuning Framework to Align Large Language Model with Recommendation, arxiv 2023/04, [paper]

- GPT4Rec: A Generative Framework for Personalized Recommendation and User Interests Interpretation, 2023/04, [paper]

- A Bi-Step Grounding Paradigm for Large Language Models in Recommendation Systems, arxiv, 2023/08, [paper]

- LlamaRec: Two-Stage Recommendation using Large Language Models for Ranking, PGAI@CIKM 2023, [paper] [code]

- Improving Sequential Recommendations with LLMs, arxiv 2024/02, [paper]

Freezing LLM [link]

- CTR-BERT: Cost-effective knowledge distillation for billion-parameter teacher models,arxiv 2022/04, [paper]

- Towards Unified Conversational Recommender Systems via Knowledge-Enhanced Prompt Learning, arxiv 2022/06, [paper]

- Generative Recommendation: Towards Next-generation Recommender Paradigm, arxiv 2023/04, [paper]

- Exploring the Upper Limits of Text-Based Collaborative Filtering Using Large Language Models: Discoveries and Insights, arxiv 2023/05, [paper]

- A First Look at LLM-Powered Generative News Recommendation, arxiv 2023/05, [paper]

- Privacy-Preserving Recommender Systems with Synthetic Query Generation using Differentially Private Large Language Models, arxiv 2023/05,[paper]

- RecAgent: A Novel Simulation Paradigm for Recommender Systems, arxiv 2023/06, [paper]

- Zero-Shot Next-Item Recommendation using Large Pretrained Language Models, arxiv 2023/04, [paper]

- Can ChatGPT Make Fair Recommendation? A Fairness Evaluation Benchmark for Recommendation with Large Language Model, RecSys 2023

- Leveraging Large Language Models for Sequential Recommendation, RecSys 2023/09, [paper]

- LLMRec: Large Language Models with Graph Augmentation for Recommendation, WSDM 2024 Oral, [paper] [code]

- Are ID Embeddings Necessary? Whitening Pre-trained Text Embeddings for Effective Sequential Recommendation, arxiv 2024/02 , [paper]

- Large Language Models are Zero-Shot Rankers for Recommender Systems, arxiv 2023/05, [paper]

- Recommendation as Language Processing (RLP): A Unified Pretrain, Personalized Prompt & Predict Paradigm (P5), arxiv 2022/03, [paper]

- Language Models as Recommender Systems: Evaluations and Limitations, NeurIPS Workshop ICBINB 2021/10, [paper]

- Prompt Learning for News Recommendation, SIGIR 2023/04, [paper]

- LLM-Rec: Personalized Recommendation via Prompting Large Language Models, arxiv,2023/07 [paper]

ChatGPT [link]

- Is ChatGPT a Good Recommender? A Preliminary Study, arxiv 2023/04, [paper]

- Is ChatGPT Good at Search? Investigating Large Language Models as Re-Ranking Agent, arxiv 2023/04, [paper]

- Chat-REC: Towards Interactive and Explainable LLMs-Augmented Recommender System, arxiv 2023/04,[paper]

- Recommendation as Instruction Following: A Large Language Model Empowered Recommendation Approach, arxiv 2023/05, [paper]

- Leveraging Large Language Models in Conversational Recommender Systems, arxiv 2023/05, [paper]

- Uncovering ChatGPT’s Capabilities in Recommender Systems, arxiv 2023/05, [paper][code]

- Sparks of Artificial General Recommender (AGR): Early Experiments with ChatGPT, arxiv 2023/05, [paper]

- Is ChatGPT Fair for Recommendation? Evaluating Fairness in Large Language Model Recommendation, arxiv 2023/05,[paper] [code]

- PALR: Personalization Aware LLMs for Recommendation, arxiv 2023/05, [paper]

- Privacy-Preserving Recommender Systems with Synthetic Query Generation using Differentially Private Large Language Models, arxiv 2023/05, [paper]

- Rethinking the Evaluation for Conversational Recommendation in the Era of Large Language Models, arxiv 2023/05, [paper]

- CTRL: Connect Tabular and Language Model for CTR Prediction, arxiv 2023/06, [paper]

- VBPR: Visual Bayesian Personalized Ranking from Implicit Feedback, AAAI2016, [paper]

- Adversarial Training Towards Robust Multimedia Recommender System, TKDE2019, [paper]

- Multi-modal Knowledge Graphs for Recommender Systems, CIKM 2020, [paper]

- Online Distillation-enhanced Multi-modal Transformer for Sequential Recommendation, ACMMM 2023, [paper]

- Self-Supervised Multi-Modal Sequential Recommendation, arxiv2023/02, [paper]

- FMMRec: Fairness-aware Multimodal Recommendation, arxiv2023/10, [paper]

- Self-Supervised Multi-Modal Sequential Recommendation, arxiv 2024/02, [paper]

- ID Embedding as Subtle Features of Content and Structure for Multimodal Recommendation, arxiv2023/10, [paper]

- Enhancing ID and Text Fusion via Alternative Training in Session-based Recommendation, arxiv2023/2, [paper]

- BiVRec: Bidirectional View-based Multimodal Sequential Recommendation,arxiv2023/2, [paper]

- A Large Language Model Enhanced Sequential Recommender for Joint Video and Comment Recommendation, arxiv2024/2, [paper]

- An Empirical Study of Training ID-Agnostic Multi-modal Sequential Recommenders, arxiv2024/3, [paper]

- Discrete Semantic Tokenization for Deep CTR Prediction, arxiv2024/3, [paper]

- End-to-end training of Multimodal Model and ranking Model, arxiv2023/3, [paper]

- TransRec: Learning Transferable Recommendation from Mixture-of-Modality Feedback, arxiv 2022/06, [paper]

- Towards Universal Sequence Representation Learning for Recommender Systems, KDD2022,2022/06, [paper]

- Learning Vector-Quantized Item Representation for Transferable Sequential Recommenders, WWW 2023, [paper] [code]

- UP5: Unbiased Foundation Model for Fairness-aware Recommendation, arxiv 2023/05, [paper]

- Exploring Adapter-based Transfer Learning for Recommender Systems: Empirical Studies and Practical Insights, arxiv 2023/05, [paper] [code]

- OpenP5: Benchmarking Foundation Models for Recommendation, arxiv 2023/06, [paper]

- Thoroughly Modeling Multi-domain Pre-trained Recommendation as Language, arxiv 2023/10, [paper]

- MISSRec: Pre-training and Transferring Multi-modal Interest-aware Sequence Representation for Recommendation, arxiv 2023/10, [paper]

- Collaborative Word-based Pre-trained Item Representation for Transferable Recommendation, arxiv 2023/11, [paper]

- Universal Multi-modal Multi-domain Pre-trained Recommendation, arxiv 2023/11, [paper]

- Multi-Modality is All You Need for Transferable Recommender Systems, arxiv 2023, [paper]

- TransFR: Transferable Federated Recommendation with Pre-trained Language Models, arxiv 2024/02 ,[paper]

- Rethinking Cross-Domain Sequential Recommendation under Open-World Assumptions, arxiv 2024/02 ,[paper]

- Large Language Models meet Collaborative Filtering: An Efficient All-round LLM-based Recommender System, arxiv 2024/04 ,[paper]

- Language Models Encode Collaborative Signals in Recommendation, arxiv 2024/07 ,[paper]

- Parameter-Efficient Transfer from Sequential Behaviors for User Modeling and Recommendation, SIGIR 2020, [paper], [code]

- One4all User Representation for Recommender Systems in E-commerce, arxiv 2021, [paper]

- Learning Transferable User Representations with Sequential Behaviors via Contrastive Pre-training, ICDM 2021, [paper]

- User-specific Adaptive Fine-tuning for Cross-domain Recommendations, TKDE 2021, [paper]

- Scaling Law for Recommendation Models: Towards General-purpose User Representations, AAAI 2023, [paper]

- U-BERT: Pre-training user representations for improved recommendation, AAAI 2021, [paper]

- One for All, All for One: Learning and Transferring User Embeddings for Cross-Domain Recommendation, WSDM 2022, [paper]

- Field-aware Variational Autoencoders for Billion-scale User Representation Learning,ICDE2022, [paper]

- Learning Large-scale Universal User Representation with Sparse Mixture of Experts, ICML2022workshop, [paper]

- Multi Datasource LTV User Representation (MDLUR), KDD2023, [paper]

- Pivotal Role of Language Modeling in Recommender Systems: Enriching Task-specific and Task-agnostic Representation Learning. arxiv2022/12, [paper]

- USER MODELING AND USER PROFILING: A COMPREHENSIVE SURVEY,2024/02, [paper]

- Generalized User Representations for Transfer Learning, arxiv 2024/03, [paper]

- Bridging Language and Items for Retrieval and Recommendation,arxiv 2024/04, [paper]

- One Person, One Model, One World: Learning Continual User Representation without Forgetting, SIGIR 2021, [paper], [code]

- Tenrec: A Large-scale Multipurpose Benchmark Dataset for Recommender Systems, NeurIPS 2022 [paper]

- STAN: Stage-Adaptive Network for Multi-Task Recommendation by Learning User Lifecycle-Based Representation, RecSys 2023, [paper]

- Task Relation-aware Continual User Representation Learning, KDD2023, [paper]

- ReLLa: Retrieval-enhanced Large Language Models for Lifelong Sequential Behavior Comprehension in Recommendation, arxiv2023/08, [paper]

Generative Recommender Systems [link]

- A Simple Convolutional Generative Network for Next Item Recommendation, WSDM 2018/08, [paper] [code]

- Future Data Helps Training: Modeling Future Contexts for Session-based Recommendation, WWW 2020/04, [paper] [code]

- Recommendation via Collaborative Diffusion Generative Model, KSEM 2022/08, [paper]

- Blurring-Sharpening Process Models for Collaborative Filtering, arxiv 2022/09, [paper]

- Generative Slate Recommendation with Reinforcement Learning, arxiv 2023/01, [paper]

- Recommender Systems with Generative Retrieval, arxiv 2023/04, [paper]

- DiffuRec: A Diffusion Model for Sequential Recommendation, arxiv 2023/04, [paper]

- Diffusion Recommender Model, arxiv 2023/04, [paper]

- A First Look at LLM-Powered Generative News Recommendation, arxiv 2023/05, [paper]

- Recommender Systems with Generative Retrieval, arxiv 2023/05, [paper]

- Generative Retrieval as Dense Retrieval, arxiv 2023/06, [paper]

- RecFusion: A Binomial Diffusion Process for 1D Data for Recommendation, arxiv 2023/06, [paper]

- Generative Sequential Recommendation with GPTRec, SIGIR workshop 2023, [paper]

- FANS: Fast Non-Autoregressive Sequence Generation for Item List Continuation, WWW 2023, [paper]

- Generative Next-Basket Recommendation, RecSys 2023

- Large Language Model Augmented Narrative Driven Recommendations, RecSys 2023, [paper]

- LightLM: A Lightweight Deep and Narrow Language Model for Generative Recommendation, arxiv 2023/10, [paper]

- Xiangyang Li Github [Link]

- nancheng58i Github [Link]

- enoche Github [Link]

- WLiK Github [Link]

- https://github.com/CHIANGEL/Awesome-LLM-for-RecSys

- https://medium.com/@lifengyi_6964/a-large-scale-short-video-recommender-system-dataset-160fdfe81b79

- https://medium.com/@lifengyi_6964/rethinking-the-id-paradigm-in-recommender-systems-the-rise-of-modality-98f449dec016

- https://medium.com/@lifengyi_6964/one-model-for-all-universal-recommender-system-82dab214a07d

- https://zhuanlan.zhihu.com/p/437671278

- https://zhuanlan.zhihu.com/p/675213913

- https://zhuanlan.zhihu.com/p/684805058

- https://zhuanlan.zhihu.com/p/665467596

If you have an innovative idea for building a universal foundation recommendation model but require large-scale dataset and computational resources, consider joining our lab as an intern or visiting scholar. We can provide access to 100 NVIDIA 80G A100 GPUs and a billion-level dataset of user-video/image/text interactions.

The laboratory is hiring research assistants, interns, doctoral students, and postdoctoral researchers. For details, please contact the corresponding author at [email protected].

Alternative AI tools for Recommendation-Systems-without-Explicit-ID-Features-A-Literature-Review

Similar Open Source Tools

Recommendation-Systems-without-Explicit-ID-Features-A-Literature-Review

This repository is a collection of papers and resources related to recommendation systems, focusing on foundation models, transferable recommender systems, large language models, and multimodal recommender systems. It explores questions such as the necessity of ID embeddings, the shift from matching to generating paradigms, and the future of multimodal recommender systems. The papers cover various aspects of recommendation systems, including pretraining, user representation, dataset benchmarks, and evaluation methods. The repository aims to provide insights and advancements in the field of recommendation systems through literature reviews, surveys, and empirical studies.

Awesome-LLM4RS-Papers

This paper list is about Large Language Model-enhanced Recommender System. It also contains some related works. Keywords: recommendation system, large language models

Awesome-LLM-Survey

This repository, Awesome-LLM-Survey, serves as a comprehensive collection of surveys related to Large Language Models (LLM). It covers various aspects of LLM, including instruction tuning, human alignment, LLM agents, hallucination, multi-modal capabilities, and more. Researchers are encouraged to contribute by updating information on their papers to benefit the LLM survey community.

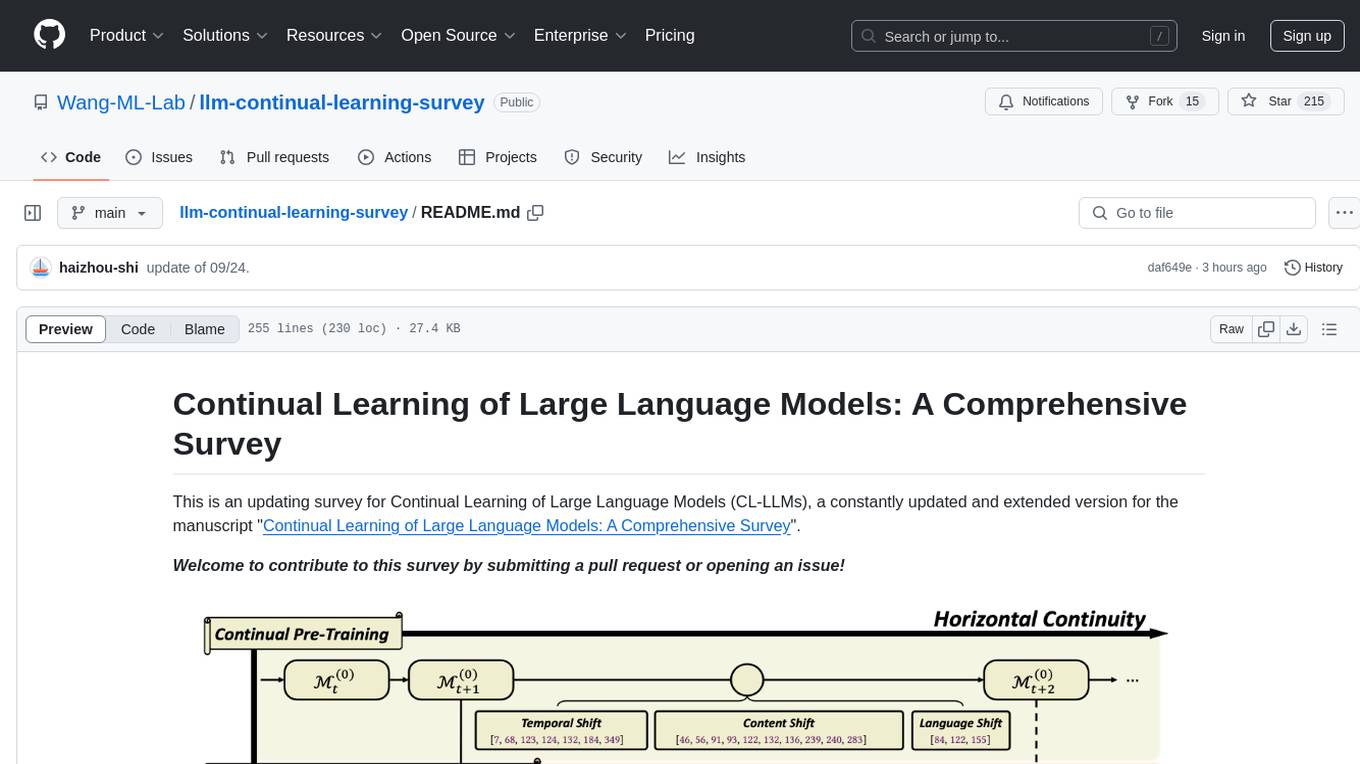

llm-continual-learning-survey

This repository is an updating survey for Continual Learning of Large Language Models (CL-LLMs), providing a comprehensive overview of various aspects related to the continual learning of large language models. It covers topics such as continual pre-training, domain-adaptive pre-training, continual fine-tuning, model refinement, model alignment, multimodal LLMs, and miscellaneous aspects. The survey includes a collection of relevant papers, each focusing on different areas within the field of continual learning of large language models.

Awesome-LLM-in-Social-Science

This repository compiles a list of academic papers that evaluate, align, simulate, and provide surveys or perspectives on the use of Large Language Models (LLMs) in the field of Social Science. The papers cover various aspects of LLM research, including assessing their alignment with human values, evaluating their capabilities in tasks such as opinion formation and moral reasoning, and exploring their potential for simulating social interactions and addressing issues in diverse fields of Social Science. The repository aims to provide a comprehensive resource for researchers and practitioners interested in the intersection of LLMs and Social Science.

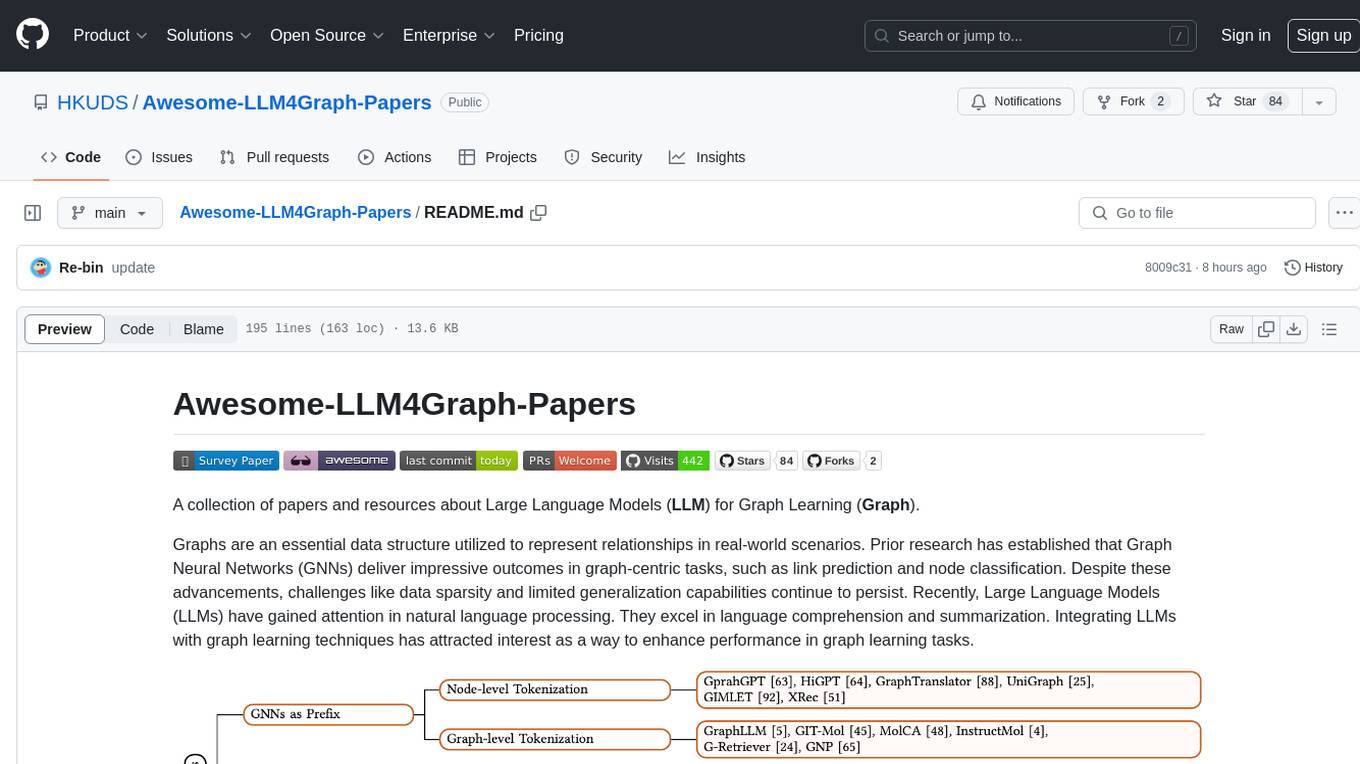

Awesome-LLM4Graph-Papers

A collection of papers and resources about Large Language Models (LLM) for Graph Learning (Graph). Integrating LLMs with graph learning techniques to enhance performance in graph learning tasks. Categorizes approaches based on four primary paradigms and nine secondary-level categories. Valuable for research or practice in self-supervised learning for recommendation systems.

Awesome-LLMs-on-device

Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient long-sequence prompts consumption, and X-Prompt for extensible prompts beyond natural language. Additionally, LLMA accelerators are developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

LightLLM

LightLLM is a lightweight library for linear and logistic regression models. It provides a simple and efficient way to train and deploy machine learning models for regression tasks. The library is designed to be easy to use and integrate into existing projects, making it suitable for both beginners and experienced data scientists. With LightLLM, users can quickly build and evaluate regression models using a variety of algorithms and hyperparameters. The library also supports feature engineering and model interpretation, allowing users to gain insights from their data and make informed decisions based on the model predictions.

Awesome-local-LLM

Awesome-local-LLM is a curated list of platforms, tools, practices, and resources that help run Large Language Models (LLMs) locally. It includes sections on inference platforms, engines, user interfaces, specific models for general purpose, coding, vision, audio, and miscellaneous tasks. The repository also covers tools for coding agents, agent frameworks, retrieval-augmented generation, computer use, browser automation, memory management, testing, evaluation, research, training, and fine-tuning. Additionally, there are tutorials on models, prompt engineering, context engineering, inference, agents, retrieval-augmented generation, and miscellaneous topics, along with a section on communities for LLM enthusiasts.

AI-System-School

AI System School is a curated list of research in machine learning systems, focusing on ML/DL infra, LLM infra, domain-specific infra, ML/LLM conferences, and general resources. It provides resources such as data processing, training systems, video systems, autoML systems, and more. The repository aims to help users navigate the landscape of AI systems and machine learning infrastructure, offering insights into conferences, surveys, books, videos, courses, and blogs related to the field.

Medical_Image_Analysis

The Medical_Image_Analysis repository focuses on X-ray image-based medical report generation using large language models. It provides pre-trained models and benchmarks for CheXpert Plus dataset, context sample retrieval for X-ray report generation, and pre-training on high-definition X-ray images. The goal is to enhance diagnostic accuracy and reduce patient wait times by improving X-ray report generation through advanced AI techniques.

FuseAI

FuseAI is a repository that focuses on knowledge fusion of large language models. It includes FuseChat, a state-of-the-art 7B LLM on MT-Bench, and FuseLLM, which surpasses Llama-2-7B by fusing three open-source foundation LLMs. The repository provides tech reports, releases, and datasets for FuseChat and FuseLLM, showcasing their performance and advancements in the field of chat models and large language models.

long-llms-learning

A repository sharing the panorama of the methodology literature on Transformer architecture upgrades in Large Language Models for handling extensive context windows, with real-time updating the newest published works. It includes a survey on advancing Transformer architecture in long-context large language models, flash-ReRoPE implementation, latest news on data engineering, lightning attention, Kimi AI assistant, chatglm-6b-128k, gpt-4-turbo-preview, benchmarks like InfiniteBench and LongBench, long-LLMs-evals for evaluating methods for enhancing long-context capabilities, and LLMs-learning for learning technologies and applicated tasks about Large Language Models.

gorilla

Gorilla is a tool that enables LLMs to use tools by invoking APIs. Given a natural language query, Gorilla comes up with the semantically- and syntactically- correct API to invoke. With Gorilla, you can use LLMs to invoke 1,600+ (and growing) API calls accurately while reducing hallucination. Gorilla also releases APIBench, the largest collection of APIs, curated and easy to be trained on!

For similar tasks

Recommendation-Systems-without-Explicit-ID-Features-A-Literature-Review

This repository is a collection of papers and resources related to recommendation systems, focusing on foundation models, transferable recommender systems, large language models, and multimodal recommender systems. It explores questions such as the necessity of ID embeddings, the shift from matching to generating paradigms, and the future of multimodal recommender systems. The papers cover various aspects of recommendation systems, including pretraining, user representation, dataset benchmarks, and evaluation methods. The repository aims to provide insights and advancements in the field of recommendation systems through literature reviews, surveys, and empirical studies.

RecAI

RecAI is a project that explores the integration of Large Language Models (LLMs) into recommender systems, addressing the challenges of interactivity, explainability, and controllability. It aims to bridge the gap between general-purpose LLMs and domain-specific recommender systems, providing a holistic perspective on the practical requirements of LLM4Rec. The project investigates various techniques, including Recommender AI agents, selective knowledge injection, fine-tuning language models, evaluation, and LLMs as model explainers, to create more sophisticated, interactive, and user-centric recommender systems.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.