LakeSoul

LakeSoul is an end-to-end, realtime and cloud native Lakehouse framework with fast data ingestion, concurrent update and incremental data analytics on cloud storages for both BI and AI applications.

Stars: 3004

LakeSoul is a cloud-native Lakehouse framework that supports scalable metadata management, ACID transactions, efficient and flexible upsert operation, schema evolution, and unified streaming & batch processing. It supports multiple computing engines like Spark, Flink, Presto, and PyTorch, and computing modes such as batch, stream, MPP, and AI. LakeSoul scales metadata management and achieves ACID control by using PostgreSQL. It provides features like automatic compaction, table lifecycle maintenance, redundant data cleaning, and permission isolation for metadata.

README:

2025.09: LakeSoul has released its newest version, 3.0.0. Check out our release notes.

LakeSoul is a cloud-native Lakehouse framework that supports scalable metadata management, ACID transactions, efficient and flexible upsert operation, schema evolution, and unified streaming & batch processing.

LakeSoul supports multiple computing engines for reading and writing lakehouse table data, including Spark, Flink, Presto, and PyTorch, and supports multiple computing modes such as batch, stream, MPP, and AI. LakeSoul supports storage systems such as HDFS and S3.

LakeSoul was originally created by DMetaSoul and was donated to Linux Foundation AI & Data as a sandbox project in May 2023.

LakeSoul implements incremental upserts at both the row and column level and allows concurrent updates.

LakeSoul uses an LSM-Tree-like structure to support updates on hash-partitioned tables with primary keys, and achieves very high write throughput while providing optimized merge-on-read performance (refer to Performance Benchmarks). LakeSoul scales metadata management and achieves ACID control by using PostgreSQL.
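To make the upsert path more concrete, here is a minimal sketch of the general technique: rows are routed to a hash bucket by primary key, each upsert is appended as a new sorted delta batch, and a read merges the batches so that newer values win column by column. The class `UpsertTable` and all of its methods are hypothetical names for illustration only; this is a toy in-memory model, not LakeSoul's API or its on-disk format.

```python
from typing import Any

Row = dict[str, Any]


class UpsertTable:
    """Toy model (hypothetical): append-only delta batches per hash bucket."""

    def __init__(self, primary_key: str, num_buckets: int = 4) -> None:
        self.primary_key = primary_key
        # One ordered list of delta batches per hash bucket (oldest first).
        self.buckets: list[list[list[Row]]] = [[] for _ in range(num_buckets)]

    def _bucket_of(self, key: Any) -> int:
        return hash(key) % len(self.buckets)

    def upsert(self, rows: list[Row]) -> None:
        # Writes never rewrite existing data: rows are grouped by bucket and
        # appended as new delta batches, each sorted by primary key.
        grouped: dict[int, list[Row]] = {}
        for row in rows:
            grouped.setdefault(self._bucket_of(row[self.primary_key]), []).append(row)
        for bucket, batch in grouped.items():
            batch.sort(key=lambda r: r[self.primary_key])
            self.buckets[bucket].append(batch)

    def read(self) -> list[Row]:
        # Merge on read: replay batches oldest to newest; for each key,
        # newer values overwrite older ones column by column.
        merged: dict[Any, Row] = {}
        for deltas in self.buckets:
            for batch in deltas:
                for row in batch:
                    merged.setdefault(row[self.primary_key], {}).update(row)
        return sorted(merged.values(), key=lambda r: r[self.primary_key])


table = UpsertTable(primary_key="id")
table.upsert([{"id": 1, "name": "a", "score": 10}, {"id": 2, "name": "b", "score": 20}])
table.upsert([{"id": 1, "score": 15}])  # partial (column-level) update of id=1
print(table.read())
```

Because every write is an append, the write path stays cheap; the cost of reconciling versions is deferred to reads and to compaction.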

LakeSoul uses Rust to implement the native metadata layer and IO layer, and provides C/Java/Python interfaces so that multiple computing frameworks, from big data engines to AI frameworks, can connect to it.

LakeSoul supports concurrent batch or streaming reads and writes. Both reads and writes support CDC semantics; together with auto schema evolution and an exactly-once guarantee, this makes it easy to build realtime data warehouses.
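As a rough illustration of what CDC semantics mean for a table with a primary key, the sketch below applies a changelog stream to an in-memory table. The operation codes follow the short strings of Flink's `RowKind` (`+I`, `-U`, `+U`, `-D`); the function `apply_changelog` and the `id` key column are assumptions made for this example and are not part of LakeSoul.

```python
from typing import Any

Row = dict[str, Any]


def apply_changelog(
    table: dict[Any, Row], events: list[tuple[str, Row]], key: str = "id"
) -> dict[Any, Row]:
    """Apply (op, row) change events to a table keyed by primary key."""
    for op, row in events:
        if op in ("+I", "+U"):
            # Insert and update-after are both upserts by primary key.
            table[row[key]] = {**table.get(row[key], {}), **row}
        elif op == "-U":
            # Update-before carries the old image; an upsert sink can skip it.
            continue
        elif op == "-D":
            table.pop(row[key], None)
        else:
            raise ValueError(f"unknown change operation: {op!r}")
    return table


events = [
    ("+I", {"id": 1, "name": "a"}),
    ("-U", {"id": 1, "name": "a"}),
    ("+U", {"id": 1, "name": "b"}),
    ("+I", {"id": 2, "name": "c"}),
    ("-D", {"id": 2, "name": "c"}),
]
state = apply_changelog({}, events)
print(state)
```

In this toy model, replaying the same events against the resulting state leaves it unchanged, which is one reason keyed upserts are convenient when a pipeline may retry writes.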

LakeSoul supports multi-workspace and RBAC. LakeSoul uses Postgres's RBAC and row-level security policies to implement permission isolation for metadata. Together with Hadoop users and groups, physical data isolation can be achieved. LakeSoul's permission isolation is effective for SQL/Java/Python jobs.

LakeSoul supports automatic disaggregated size-tiered multi-level compaction, automatic table life cycle maintenance, automatic data asset statistics, and automatic redundant data cleaning, reducing operation costs and improving usability.
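The idea behind size-tiered compaction can be sketched in a few lines: files of similar size are grouped into tiers, and a tier is merged once it holds enough files. The function `size_tiered_groups` and its `ratio` and `min_files` parameters are hypothetical and show the generic technique only; LakeSoul's actual compaction service and its thresholds may work differently.

```python
def size_tiered_groups(
    file_sizes: list[int], ratio: float = 2.0, min_files: int = 3
) -> list[list[int]]:
    """Group file sizes into tiers and return the tiers worth compacting."""
    tiers: list[list[int]] = []
    for size in sorted(file_sizes):
        # A file joins the current tier if it is at most `ratio` times the
        # smallest file in that tier; otherwise it starts a new tier.
        if tiers and size <= tiers[-1][0] * ratio:
            tiers[-1].append(size)
        else:
            tiers.append([size])
    # Only tiers with enough files are candidates for a merge.
    return [tier for tier in tiers if len(tier) >= min_files]


# Sizes in MB: three small files and three medium files form two tiers;
# the single large file is left alone.
print(size_tiered_groups([1, 1, 2, 10, 12, 15, 100]))
```

Merging only similarly sized files keeps each compaction from repeatedly rewriting one large file just to absorb a few small ones.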

For more detailed feature descriptions, please refer to our doc page: Documentation

Follow the Quick Start to quickly set up a test env.

Tutorials are available on the doc site:

- Check out Examples of Python Data Processing and AI Model Training on LakeSoul to see how LakeSoul connects AI to the Lakehouse to build a unified and modern data infrastructure.

- Check out LakeSoul Flink CDC Whole Database Synchronization Tutorial on how to sync an entire MySQL database into LakeSoul in realtime, with auto table creation, auto DDL sync and an exactly-once guarantee.

- Check out Flink SQL Usage on using Flink SQL to read or write LakeSoul in both batch and streaming mode, with support for Flink Changelog Stream semantics and row-level upsert and delete.

- Check out Multi Stream Merge and Build Wide Table Tutorial on how to merge multiple streams with the same primary key (and different other columns) concurrently without a join.

- Check out Upsert Data and Merge UDF Tutorial on how to upsert data and use Merge UDFs to customize merge logic.

- Check out Snapshot API Usage on how to do snapshot read (time travel), snapshot rollback and cleanup.

- Check out Incremental Query Tutorial on how to do incremental queries in Spark in batch or stream mode.
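The snapshot and incremental query tutorials listed above rest on a simple model: a table is a log of timestamped commits, and a query selects the commits that fall inside a time range. The sketch below illustrates that model only. The `Commit` type, the three helper functions, and the choice of inclusive and exclusive bounds are assumptions for this example; the real APIs are described in the linked docs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    version: int
    timestamp: int           # commit time, e.g. epoch seconds
    files: tuple[str, ...]   # data files added by this commit


def snapshot_read(log: list[Commit], as_of: int) -> list[str]:
    """Time travel: files from every commit made at or before `as_of`."""
    return [f for c in log if c.timestamp <= as_of for f in c.files]


def incremental_read(log: list[Commit], start: int, end: int) -> list[str]:
    """Incremental query: files from commits with start < timestamp <= end."""
    return [f for c in log if start < c.timestamp <= end for f in c.files]


def rollback(log: list[Commit], as_of: int) -> list[Commit]:
    """Snapshot rollback: drop every commit made after `as_of`."""
    return [c for c in log if c.timestamp <= as_of]


log = [
    Commit(1, 100, ("part-a.parquet",)),
    Commit(2, 200, ("part-b.parquet",)),
    Commit(3, 300, ("part-c.parquet", "part-d.parquet")),
]
print(snapshot_read(log, as_of=200))
print(incremental_read(log, start=100, end=300))
print([c.version for c in rollback(log, as_of=100)])
```

A real table would also have to merge upsert deltas and account for compaction when resolving a snapshot; the sketch leaves that out to keep the time-range logic visible.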

Usage documentation is available on the doc site: Usage Doc

- Data Science and AI
  - [x] Native Python Reader (without PySpark)
  - [x] PyTorch Dataset and distributed training
- Meta Management (#23)
  - [x] Multiple Level Partitioning: Multiple range partition and at most one hash partition
  - [x] Concurrent write with auto conflict resolution
  - [x] MVCC with read isolation
  - [x] Write transaction (two-stage commit) through Postgres Transaction
  - [x] Schema Evolution: Column add/delete supported
- Table operations
  - [x] LSM-Tree style upsert for hash partitioned table
  - [x] Merge on read for hash partition with upsert delta file
  - [x] Copy on write update for non hash partitioned table
  - [x] Automatic Disaggregated Compaction Service
- Data Warehousing
  - Spark Integration
    - [x] Table/Dataframe API
    - [x] SQL support with catalog except upsert
    - [x] Query optimization
      - [x] Shuffle/Join elimination for operations on primary key
    - [x] Merge UDF (Merge operator)
    - [x] Merge Into SQL support
      - [x] Merge Into SQL with match on Primary Key (Merge on read)
  - Flink Integration and CDC Ingestion (#57)
    - [x] Table API
      - [x] Batch/Stream Sink
      - [x] Batch/Stream source
      - [x] Stream Source/Sink for ChangeLog Stream Semantics
      - [x] Exactly Once Source and Sink
    - [x] Flink CDC
      - [x] Auto Schema Change (DDL) Sync
      - [x] Auto Table Creation (depends on #78)
      - [x] Support sink multiple source tables with different schemas (#84)
    - [x] Table API
  - Hive Integration
    - [x] Export to Hive partition after compaction
    - [x] Apache Kyuubi (Hive JDBC) Integration
- Realtime Data Warehousing
  - [x] CDC ingestion
  - [x] Time Travel (Snapshot read)
  - [x] Snapshot rollback
  - [x] Automatic global compaction service
  - [x] MPP Engine Integration (depends on #66)
    - [x] Presto
    - [x] Compatibility with Presto Native Execution (with Velox)
    - [x] Apache Doris
- Cloud and Native IO (#66)
  - [x] Object storage IO optimization
  - [x] Native vectorized merge on read
  - [x] Multi-layer storage classes support with local-disk data cache

Please feel free to open an issue or discussion if you have any questions.

Join our Discord server for discussions.

Email us at [email protected].

LakeSoul is open-sourced under the Apache License v2.0.

Similar Open Source Tools

vts

VTS (Vector Transport Service) is an open-source tool developed by Zilliz based on Apache Seatunnel for moving vectors and unstructured data. It addresses data migration needs, supports real-time data streaming and offline import, simplifies unstructured data transformation, and ensures end-to-end data quality. Core capabilities include rich connectors, stream and batch processing, distributed snapshot support, high performance, and real-time monitoring. Future developments include incremental synchronization, advanced data transformation, and enhanced monitoring. VTS supports various connectors for data migration and offers advanced features like Transformers, cluster mode deployment, RESTful API, Docker deployment, and more.

awesome-mcp-servers

A curated list of awesome Model Context Protocol (MCP) servers that enable AI models to securely interact with local and remote resources through standardized server implementations. The list focuses on production-ready and experimental servers extending AI capabilities through file access, database connections, API integrations, and other contextual services.

clearml

ClearML is a suite of tools designed to streamline the machine learning workflow. It includes an experiment manager, MLOps/LLMOps, data management, and model serving capabilities. ClearML is open-source and offers a free tier hosting option. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm. ClearML provides extensive logging capabilities, including source control info, execution environment, hyper-parameters, and experiment outputs. It also offers automation features, such as remote job execution and pipeline creation. ClearML is designed to be easy to integrate, requiring only two lines of code to add to existing scripts. It aims to improve collaboration, visibility, and data transparency within ML teams.

clearml

ClearML is an auto-magical suite of tools designed to streamline AI workflows. It includes modules for experiment management, MLOps/LLMOps, data management, model serving, and more. ClearML offers features like experiment tracking, model serving, orchestration, and automation. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm for remote debugging. ClearML aims to simplify collaboration, automate processes, and enhance visibility in AI projects.

Mooncake

Mooncake is a serving platform for Kimi, a leading LLM service provided by Moonshot AI. It features a KVCache-centric disaggregated architecture that separates prefill and decoding clusters, leveraging underutilized CPU, DRAM, and SSD resources of the GPU cluster. Mooncake's scheduler balances throughput and latency-related SLOs, with a prediction-based early rejection policy for highly overloaded scenarios. It excels in long-context scenarios, achieving up to a 525% increase in throughput while handling 75% more requests under real workloads.

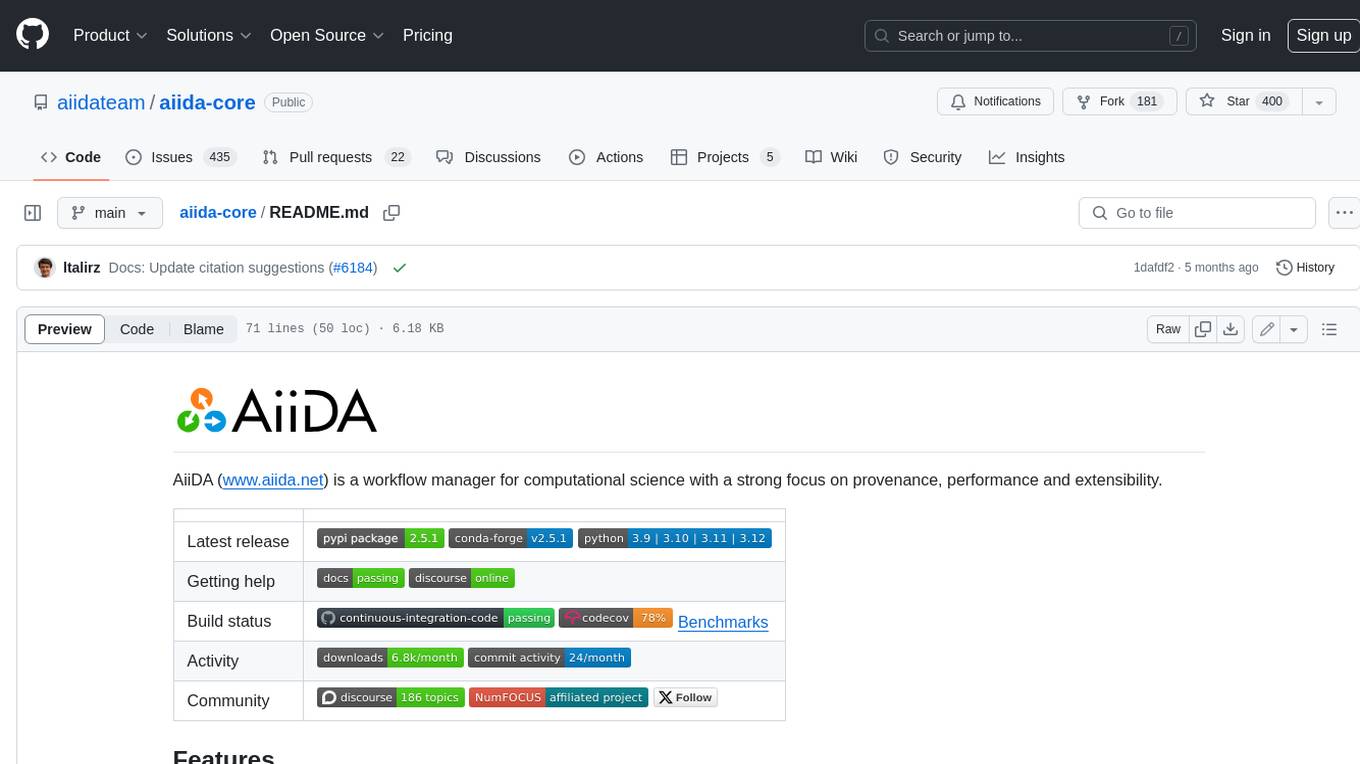

aiida-core

AiiDA (www.aiida.net) is a workflow manager for computational science with a strong focus on provenance, performance and extensibility. **Features** * **Workflows:** Write complex, auto-documenting workflows in python, linked to arbitrary executables on local and remote computers. The event-based workflow engine supports tens of thousands of processes per hour with full checkpointing. * **Data provenance:** Automatically track inputs, outputs & metadata of all calculations in a provenance graph for full reproducibility. Perform fast queries on graphs containing millions of nodes. * **HPC interface:** Move your calculations to a different computer by changing one line of code. AiiDA is compatible with schedulers like SLURM, PBS Pro, torque, SGE or LSF out of the box. * **Plugin interface:** Extend AiiDA with plugins for new simulation codes (input generation & parsing), data types, schedulers, transport modes and more. * **Open Science:** Export subsets of your provenance graph and share them with peers or make them available online for everyone on the Materials Cloud. * **Open source:** AiiDA is released under the MIT open source license

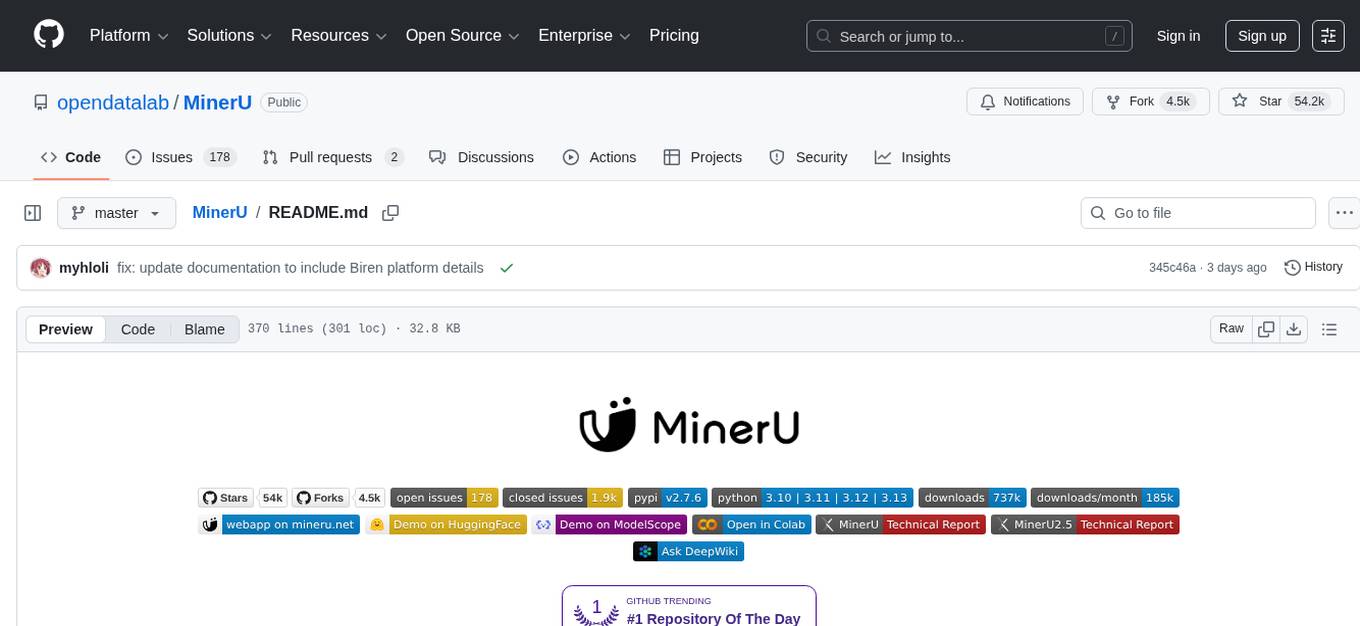

MinerU

MinerU is a tool that converts PDFs into machine-readable formats, allowing for easy extraction into any format. It focuses on solving symbol conversion issues in scientific literature and contributes to technological development. It removes headers, footers, footnotes, and page numbers, preserves document structure, extracts images, tables, and formulas, and supports OCR in 109 languages. MinerU supports various visualization results, runs on CPU/GPU/NPU, and is compatible with Windows, Linux, and Mac platforms.

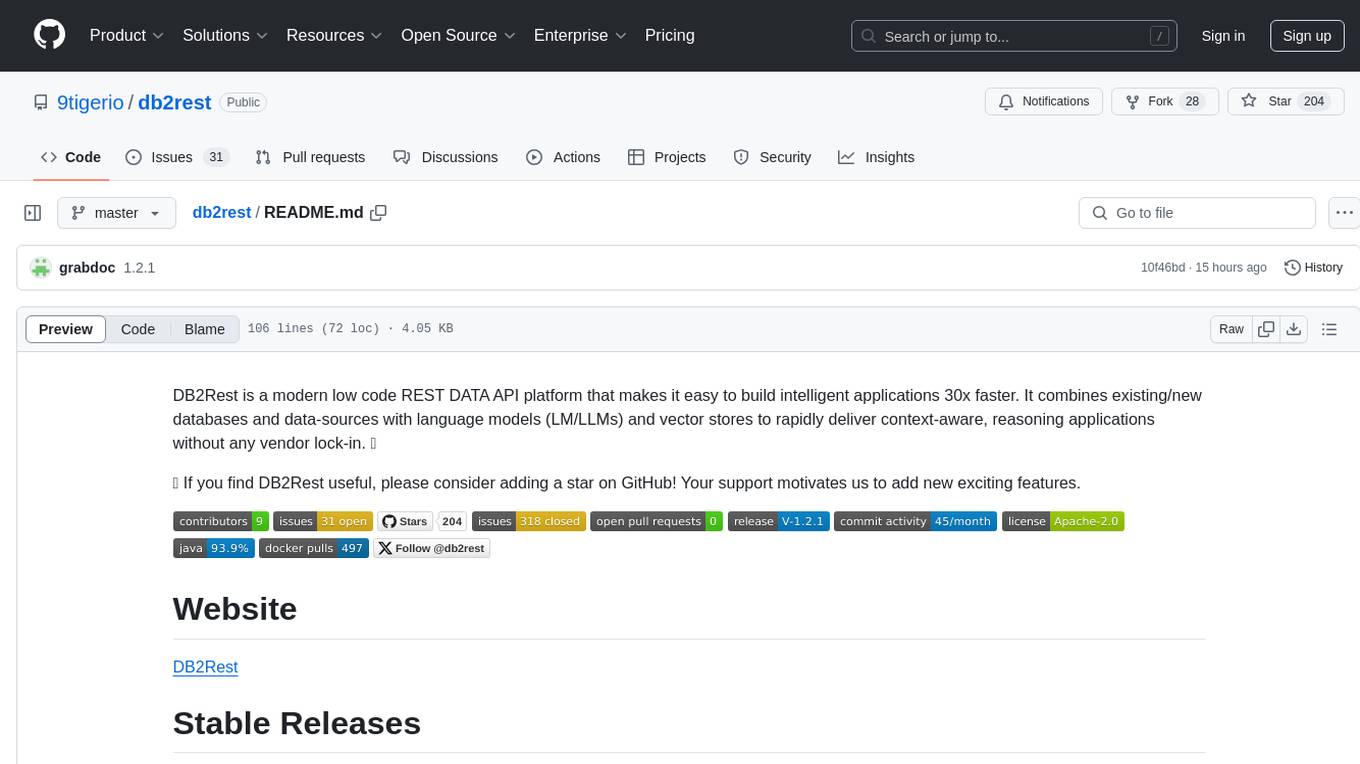

db2rest

DB2Rest is a modern low code REST DATA API platform that enables the rapid development of intelligent applications by combining databases, language models, and vector stores. It facilitates context-aware, reasoning applications without vendor lock-in. The tool accelerates application delivery, fosters faster innovation with AI, serves as a secure database gateway, and simplifies integration. It supports various databases like PostgreSQL, MySQL, MS SQL Server, Oracle, MongoDB, and more, with planned support for additional databases. Users can connect on Discord for support and contact [email protected] for inquiries.

dash-infer

DashInfer is a C++ runtime tool designed to deliver production-level implementations highly optimized for various hardware architectures, including x86 and ARMv9. It supports Continuous Batching and NUMA-Aware capabilities for CPU, and can fully utilize modern server-grade CPUs to host large language models (LLMs) up to 14B in size. With lightweight architecture, high precision, support for mainstream open-source LLMs, post-training quantization, optimized computation kernels, NUMA-aware design, and multi-language API interfaces, DashInfer provides a versatile solution for efficient inference tasks. It supports x86 CPUs with AVX2 instruction set and ARMv9 CPUs with SVE instruction set, along with various data types like FP32, BF16, and InstantQuant. DashInfer also offers single-NUMA and multi-NUMA architectures for model inference, with detailed performance tests and inference accuracy evaluations available. The tool is supported on mainstream Linux server operating systems and provides documentation and examples for easy integration and usage.

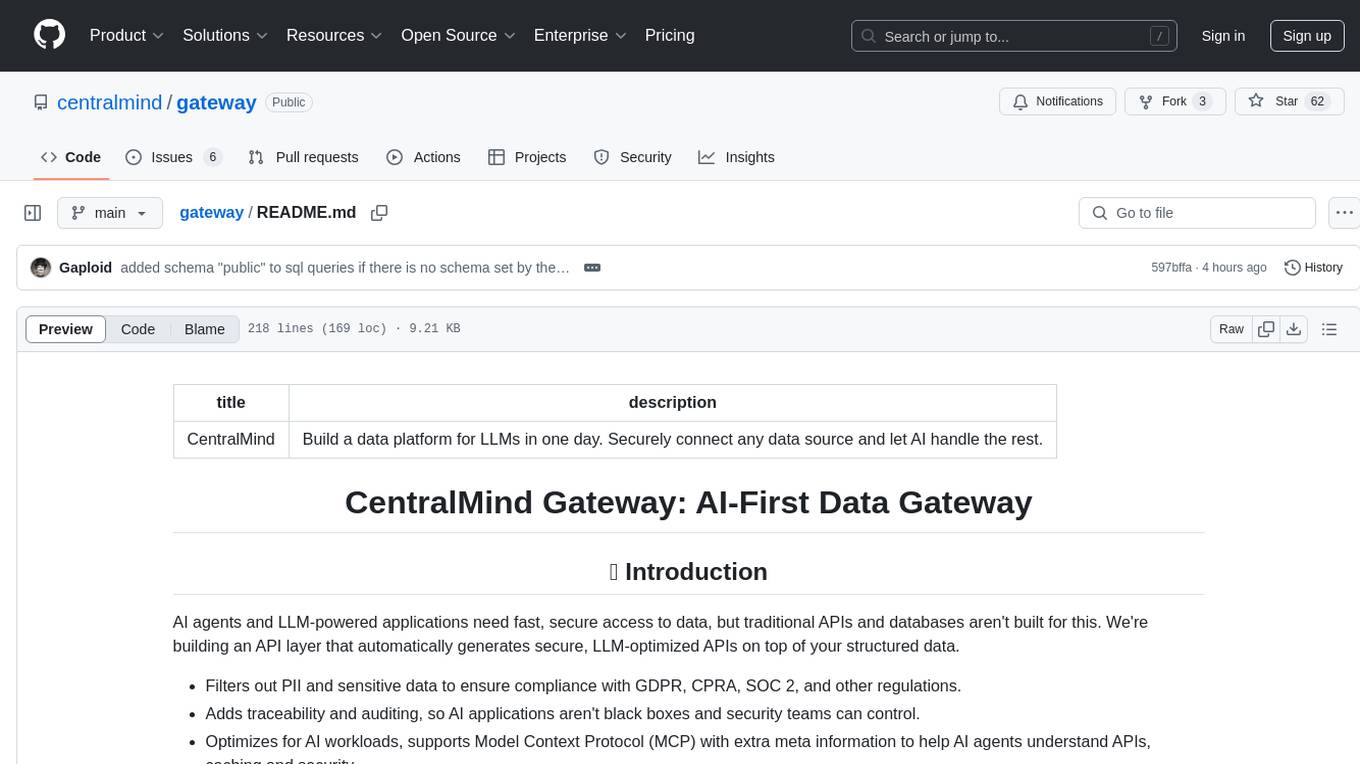

gateway

CentralMind Gateway is an AI-first data gateway that securely connects any data source and automatically generates secure, LLM-optimized APIs. It filters out sensitive data, adds traceability, and optimizes for AI workloads. Suitable for companies deploying AI agents for customer support and analytics.

extractous

Extractous offers a fast and efficient solution for extracting content and metadata from various document types such as PDF, Word, HTML, and many other formats. It is built with Rust, providing high performance, memory safety, and multi-threading capabilities. The tool eliminates the need for external services or APIs, making data processing pipelines faster and more efficient. It supports multiple file formats, including Microsoft Office, OpenOffice, PDF, spreadsheets, web documents, e-books, text files, images, and email formats. Extractous provides a clear and simple API for extracting text and metadata content, with upcoming support for JavaScript/TypeScript. It is free for commercial use under the Apache 2.0 License.

paperless-ai

Paperless-AI is an automated document analyzer tool designed for Paperless-ngx users. It utilizes the OpenAI API and Ollama (Mistral, llama, phi 3, gemma 2) to automatically scan, analyze, and tag documents. The tool offers features such as automatic document scanning, AI-powered document analysis, automatic title and tag assignment, manual mode for analyzing documents, easy setup through a web interface, document processing dashboard, error handling, and Docker support. Users can configure the tool through a web interface and access a debug interface for monitoring and troubleshooting. Paperless-AI aims to streamline document organization and analysis processes for users with access to Paperless-ngx and AI capabilities.

graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

ai-optimizer

The Oracle AI Optimizer and Toolkit provides a streamlined environment for developers and data scientists to explore Generative Artificial Intelligence (GenAI) and Retrieval-Augmented Generation (RAG) capabilities. It integrates Oracle Database 23ai AI VectorSearch and SelectAI to enhance Large Language Models (LLMs) through RAG.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

For similar tasks

bella-openapi

Bella OpenAPI is an API gateway that provides rich AI capabilities, similar to OpenRouter. In addition to chat completion, it offers text embedding, ASR, TTS, image-to-image, and text-to-image capabilities. It integrates metadata management, a unified login service, billing, rate limiting, and resource management. All integrated capabilities have been validated in large-scale production environments for stability and reliability. It offers a Java-friendly technology stack, a convenient cloud-based experience service, and Dockerized deployment.

datachain

DataChain is a Python-based AI-data warehouse for transforming and analyzing unstructured data like images, audio, videos, text, and PDFs. It integrates with external storage to process data efficiently without duplication and manages metadata for easy querying. Use cases include ETL, analytics, versioning, and incremental processing. Key features include multimodal dataset versioning, Python-friendly operations, data enrichment, and processing. The tool allows for generating metadata using AI models, filtering, joining, and grouping datasets, and performing high-performance vectorized operations.

aiometadata

AIOMetadata is a next-generation metadata addon for Stremio that aggregates and enriches movie, series, and anime metadata from multiple sources like TMDB, TVDB, MyAnimeList, AniList, IMDb, and more. It offers rich artwork, custom catalogs, streaming provider integration, dynamic search, user configuration, global caching, advanced ID mapping, and a modern UI. Users can host it or self-host using Docker Compose, configure catalogs, providers, search engines, integrations, and security settings via a UI, and access various API endpoints for managing user config, cache, posters, images, and more. Supported providers include TMDB, TVDB, IMDb, MyAnimeList, AniList, Kitsu, Fanart.tv, MDBList, and more. Development involves backend and frontend setup using Redis for caching and SQLite/PostgreSQL for config storage. The project is licensed under Apache 2.0.

aioreactive

Aioreactive is a Python library that brings ReactiveX functionality to asyncio using async and await. It is built on the Expression functional library and aims to provide a simple, clean, and async-based approach to reactive programming in Python. The library supports Python 3.10+ and focuses on using plain old functions for operators, running on the asyncio event loop, and providing implicit synchronous back-pressure for event processing.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.