AIL-framework

AIL framework - Analysis Information Leak framework. Project moved to https://github.com/ail-project

Stars: 1346

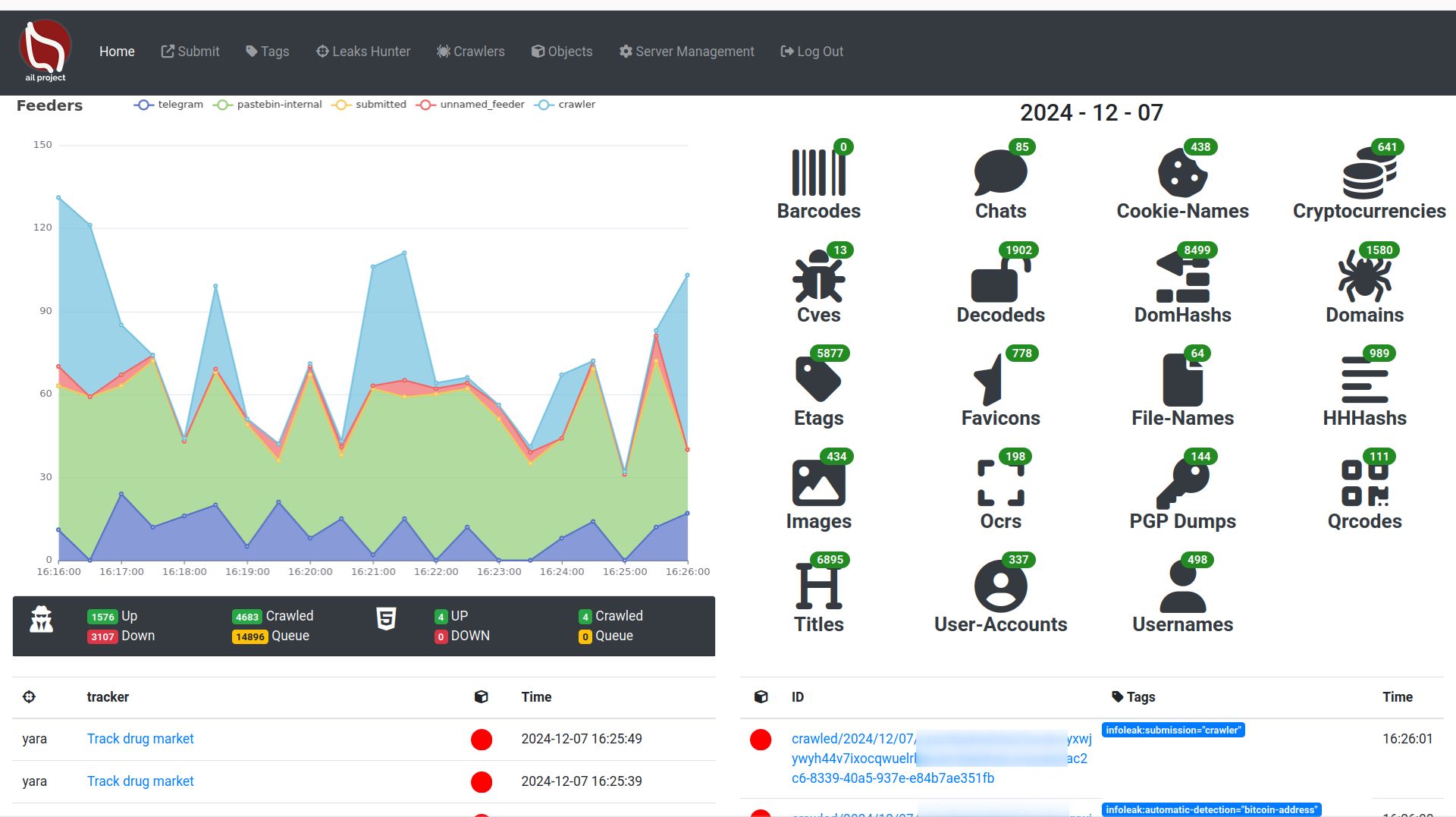

AIL framework is a modular framework to analyze potential information leaks from unstructured data sources like pastes from Pastebin or similar services or unstructured data streams. AIL framework is flexible and can be extended to support other functionalities to mine or process sensitive information (e.g. data leak prevention).

README:


AIL framework - Framework for Analysis of Information Leaks


AIL v5.0 introduces significant improvements and new features:

- Codebase Rewrite: The codebase has undergone a substantial rewrite, resulting in enhanced performance and speed improvements.

- Database Upgrade: The database has been migrated from ARDB to Kvrocks.

- New Correlation Engine: AIL v5.0 introduces a new powerful correlation engine with two new correlation types: CVE and Title.

- Enhanced Logging: The logging system has been improved to provide better troubleshooting capabilities.

- Tagging Support: AIL objects now support tagging, allowing users to categorize and label extracted information for easier analysis and organization.

- Trackers: Improved object filtering; added tracking for PGP and decoded objects.

- UI Content Visualization: The user interface has been upgraded to visualize extracted and tracked information.

- New Crawler Lacus: Improved crawling capabilities.

- Modular Importers and Exporters: New importers (ZMQ, AIL Feeders) and exporters (MISP, Mail, TheHive) follow a modular design, so new ones can be created and customized by extending an abstract class.

- Module Queues: Improved queuing mechanism between detection modules.

- New Objects CVE and Title: Extract and correlate CVE IDs and web page titles.
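The "extending an abstract class" design mentioned for importers and exporters can be illustrated with a minimal sketch. The class and method names below (`AbstractImporter`, `importer`, `run`, `ListImporter`) are hypothetical and chosen for illustration only; they are not AIL's actual API, which is described in the project documentation.

```python
from abc import ABC, abstractmethod
from typing import Iterator, List


class AbstractImporter(ABC):
    """Hypothetical base class for illustration; not AIL's real importer API."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def importer(self) -> Iterator[str]:
        """Yield raw items from the data source."""

    def run(self) -> List[str]:
        # Shared logic lives in the base class: strip whitespace, drop empty items.
        # Subclasses only have to supply the data source.
        return [item.strip() for item in self.importer() if item.strip()]


class ListImporter(AbstractImporter):
    """Toy importer that reads items from an in-memory list."""

    def __init__(self, lines: List[str]) -> None:
        super().__init__(name="list")
        self.lines = lines

    def importer(self) -> Iterator[str]:
        yield from self.lines


items = ListImporter(["  paste one ", "", "paste two"]).run()
print(items)  # ['paste one', 'paste two']
```

A new data source would then only need its own subclass with an `importer` method, while the shared handling stays in one place.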

- Modular architecture to handle streams of unstructured or structured information

- Default support for external ZMQ feeds, such as provided by CIRCL or other providers

- Multiple Importers and feeds support

- Each module can process and reprocess the information already analyzed by AIL

- Detecting and extracting URLs including their geographical location (e.g. IP address location)

- Extracting and validating potential leaks of credit card numbers, credentials, ...

- Extracting and validating leaked email addresses, including DNS MX validation

- Module for extracting Tor .onion addresses for further analysis

- Keeping track of duplicate credentials (and diffing between each duplicate found)

- Extracting and validating potential hostnames (e.g. to feed Passive DNS systems)

- A full-text indexer module to index unstructured information

- Tracking of terms, sets of terms, regexes, typo squatting and YARA rules, and their occurrences

- YARA Retro Hunt

- Many more modules for extracting phone numbers, credentials, and more

- Alerting to MISP to share found leaks within a threat intelligence platform using MISP standard

- Detecting and decoding encoded files (Base64, hex encoded or your own decoding scheme) and storing the files

- Detecting Amazon AWS and Google API keys

- Detecting Bitcoin addresses and Bitcoin private keys

- Detecting private keys, certificates and keys (including SSH, OpenVPN)

- Detecting IBAN bank accounts

- Tagging system with MISP Galaxy and MISP Taxonomies tags

- UI submission

- Create events on MISP and cases on The Hive

- Automatic export on detection with MISP (events) and The Hive (alerts) on selected tags

- Extracted and decoded files can be searched by date range, type of file (mime-type) and encoding discovered

- Correlation engine and graph to visualize relationships between decoded files (hashes), PGP UIDs, domains, usernames, and cryptocurrency addresses

- Crawler for websites, forums and Tor hidden services to crawl and parse output

- Domain availability monitoring to detect when websites and hidden services go up or down

- Browsed hidden services are automatically captured and integrated into the analyzed output, including a blurring screenshot interface (to avoid "burning the eyes" of security analysts with sensitive content)

- Tor hidden services crawling is part of the standard framework; all AIL modules are available to the crawled hidden services

- Crawler scheduler to trigger crawling on demand or at regular intervals for URLs or Tor hidden services
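To make the "extracting and validating" items above more concrete, here is a minimal sketch of two standard checksum validations that apply to candidates such as credit card numbers (Luhn algorithm) and IBANs (ISO 13616 mod-97 check). This is an illustration under stated assumptions, not AIL's own module code; the function names `luhn_valid` and `iban_valid` are hypothetical.

```python
import re


def luhn_valid(candidate: str) -> bool:
    """Return True if the candidate passes the Luhn checksum."""
    digits = re.sub(r"[\s-]", "", candidate)
    if not re.fullmatch(r"[0-9]{13,19}", digits):
        return False
    total = 0
    # Walk from the rightmost digit; double every second digit.
    for position, char in enumerate(reversed(digits)):
        value = int(char)
        if position % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def iban_valid(candidate: str) -> bool:
    """Return True if the candidate passes the IBAN mod-97 check."""
    iban = re.sub(r"\s", "", candidate).upper()
    if not re.fullmatch(r"[A-Z]{2}[0-9]{2}[A-Z0-9]{11,30}", iban):
        return False
    # Move country code and check digits to the end, map A..Z to 10..35.
    rearranged = iban[4:] + iban[:4]
    numeric = "".join(str(int(char, 36)) for char in rearranged)
    return int(numeric) % 97 == 1


print(luhn_valid("4111 1111 1111 1111"))          # True (well-known test number)
print(luhn_valid("4111 1111 1111 1112"))          # False
print(iban_valid("GB82 WEST 1234 5698 7654 32"))  # True (standard example IBAN)
print(iban_valid("GB83 WEST 1234 5698 7654 32"))  # False
```

Checksum validation like this only filters out random digit strings; it does not prove that a card or account actually exists.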

Trackers are user-defined rules or patterns that automatically detect, tag and notify about relevant information collected by AIL.

Tracker types (see the documentation):

- word or set of words

- YARA rules

- Regex

- Typo Squatting
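As a rough illustration of how the word, set-of-words and regex tracker types could match content, consider the sketch below. The function names and the `minimum` threshold for word sets are assumptions made for this example and do not reflect AIL's internal implementation; YARA and typo squatting trackers are not covered here.

```python
import re
from typing import Iterable


def match_word(word: str, text: str) -> bool:
    """Match a single word on word boundaries, case-insensitively."""
    return re.search(rf"\b{re.escape(word)}\b", text, re.IGNORECASE) is not None


def match_word_set(words: Iterable[str], text: str, minimum: int) -> bool:
    """Match when at least `minimum` words of the set occur in the text."""
    hits = sum(1 for word in words if match_word(word, text))
    return hits >= minimum


def match_regex(pattern: str, text: str) -> bool:
    """Match a user-supplied regular expression anywhere in the text."""
    return re.search(pattern, text) is not None


text = "Dump of customer passwords from example.com, contact admin@example.com"

print(match_word("passwords", text))                             # True
print(match_word("pass", text))                                  # False (no word boundary)
print(match_word_set(["dump", "passwords", "leak"], text, 2))    # True
print(match_regex(r"[\w.+-]+@example\.com", text))               # True
```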

To install the AIL framework, run the following commands:

# Clone the repo first

git clone https://github.com/ail-project/ail-framework.git

cd ail-framework

# Fetch the submodules (must be run inside the cloned repository)

git submodule update --init --recursive

# For Debian and Ubuntu based distributions

./installing_deps.sh

# Launch ail

cd ~/ail-framework/

cd bin/

./LAUNCH.sh -l

The default installing_deps.sh is for Debian and Ubuntu based distributions.

Requirement:

- Python 3.8+

For Lacus Crawler and LibreTranslate installation instructions (if you want to use those features), refer to the HOWTO.

To start AIL, use the following commands:

cd bin/

./LAUNCH.sh -l

You can access the AIL framework web interface at the following URL:

https://localhost:7000/

The default credentials for the web interface are located in the DEFAULT_PASSWORD file, which is deleted when you change your password.
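After launching, a quick way to confirm that the web interface is answering is a small reachability check. The sketch below assumes the local instance serves a self-signed certificate, which is why certificate verification is disabled; that is only reasonable for a local test instance. The helper name `is_reachable` is hypothetical.

```python
import ssl
import urllib.error
import urllib.request


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTPS server answers at `url`, whatever the status code."""
    # Assumption: a local self-signed certificate, so verification is turned off.
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=context):
            return True
    except urllib.error.HTTPError:
        # The server replied with an error status, so it is up.
        return True
    except OSError:
        # Covers URLError, refused connections, timeouts and TLS failures.
        return False


if __name__ == "__main__":
    print(is_reachable("https://localhost:7000/"))
```

A `False` result right after `./LAUNCH.sh -l` may simply mean the services are still starting.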

CIRCL organises training on how to use or extend the AIL framework. AIL training materials are available at https://github.com/ail-project/ail-training.

The documentation is available in doc/README.md

The API documentation is available in doc/api.md

HOWTOs are available in HOWTO.md

For information on AIL's compliance with GDPR and privacy considerations, refer to the document "AIL information leaks analysis and the GDPR in the context of collection, analysis and sharing information leaks".

This document provides an overview of how to use AIL lawfully, especially within the scope of the General Data Protection Regulation.

If you use or reference AIL in an academic paper, you can cite it using the following BibTeX:

@inproceedings{mokaddem2018ail,

title={AIL-The design and implementation of an Analysis Information Leak framework},

author={Mokaddem, Sami and Wagener, G{\'e}rard and Dulaunoy, Alexandre},

booktitle={2018 IEEE International Conference on Big Data (Big Data)},

pages={5049--5057},

year={2018},

organization={IEEE}

}


Copyright (C) 2014 Jules Debra

Copyright (c) 2021 Olivier Sagit

Copyright (C) 2014-2024 CIRCL - Computer Incident Response Center Luxembourg (c/o smile, security made in Lëtzebuerg, Groupement d'Intérêt Economique)

Copyright (c) 2014-2024 Raphaël Vinot

Copyright (c) 2014-2024 Alexandre Dulaunoy

Copyright (c) 2016-2024 Sami Mokaddem

Copyright (c) 2018-2024 Thirion Aurélien

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU Affero General Public License as published by

the Free Software Foundation, either version 3 of the License, or

(at your option) any later version.

This program is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU Affero General Public License for more details.

You should have received a copy of the GNU Affero General Public License

along with this program. If not, see <http://www.gnu.org/licenses/>.


Similar Open Source Tools


graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

logfire

Pydantic Logfire is an observability platform that provides a simple and powerful dashboard, Python-centric insights, SQL querying, OpenTelemetry integration, and Pydantic validation analytics. It offers unparalleled visibility into Python applications' behavior and allows querying data using standard SQL. Logfire is an opinionated wrapper around OpenTelemetry, supporting traces, metrics, and logs. The Python SDK for logfire is open source, while the server application for recording and displaying data is closed source.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extendible for customization and private data usage.

nous

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

ExtractThinker

ExtractThinker is a library designed for extracting data from files and documents using Large Language Models (LLMs). It offers ORM-style interaction between files and LLMs, supporting multiple document loaders such as Tesseract OCR, Azure Form Recognizer, AWS Textract, and Google Document AI. Users can customize extraction using contract definitions, process documents asynchronously, handle various document formats efficiently, and split and process documents. The project is inspired by the LangChain ecosystem and focuses on Intelligent Document Processing (IDP) using LLMs to achieve high accuracy in document extraction tasks.

sdialog

SDialog is an MIT-licensed open-source toolkit for building, simulating, and evaluating LLM-based conversational agents end-to-end. It aims to bridge agent construction, user simulation, dialog generation, and evaluation in a single reproducible workflow, enabling the generation of reliable, controllable dialog systems or data at scale. The toolkit standardizes a Dialog schema, offers persona-driven multi-agent simulation with LLMs, provides composable orchestration for precise control over behavior and flow, includes built-in evaluation metrics, and offers mechanistic interpretability. It allows for easy creation of user-defined components and interoperability across various AI platforms.

instructor-php

Instructor for PHP is a library designed for structured data extraction in PHP, powered by Large Language Models (LLMs). It simplifies the process of extracting structured, validated data from unstructured text or chat sequences. Instructor enhances workflow by providing a response model, validation capabilities, and max retries for requests. It supports classes as response models and provides features like partial results, string input, extracting scalar and enum values, and specifying data models using PHP type hints or DocBlock comments. The library allows customization of validation and provides detailed event notifications during request processing. Instructor is compatible with PHP 8.2+ and leverages PHP reflection, Symfony components, and SaloonPHP for communication with LLM API providers.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

SMRY

SMRY.ai is a Next.js application that bypasses paywalls and generates AI-powered summaries by fetching content from multiple sources simultaneously. It provides a distraction-free reader with summary builder, cleans articles, offers multi-source fetching, built-in AI summaries in 14 languages, rich debug context, soft paywall access, smart extraction using Diffbot's AI, multi-source parallel fetching, type-safe error handling, dual caching strategy, intelligent source routing, content parsing pipeline, multilingual summaries, and more. The tool aims to make referencing reporting easier, provide original articles alongside summaries, and offer concise summaries in various languages.

XLearning

XLearning is a scheduling platform for big data and artificial intelligence, supporting various machine learning and deep learning frameworks. It runs on Hadoop Yarn and integrates frameworks like TensorFlow, MXNet, Caffe, Theano, PyTorch, Keras, XGBoost. XLearning offers scalability, compatibility, multiple deep learning framework support, unified data management based on HDFS, visualization display, and compatibility with code at native frameworks. It provides functions for data input/output strategies, container management, TensorBoard service, and resource usage metrics display. XLearning requires JDK >= 1.7 and Maven >= 3.3 for compilation, and deployment on CentOS 7.2 with Java >= 1.7 and Hadoop 2.6, 2.7, 2.8.

clearml

ClearML is a suite of tools designed to streamline the machine learning workflow. It includes an experiment manager, MLOps/LLMOps, data management, and model serving capabilities. ClearML is open-source and offers a free tier hosting option. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm. ClearML provides extensive logging capabilities, including source control info, execution environment, hyper-parameters, and experiment outputs. It also offers automation features, such as remote job execution and pipeline creation. ClearML is designed to be easy to integrate, requiring only two lines of code to add to existing scripts. It aims to improve collaboration, visibility, and data transparency within ML teams.

inngest

Inngest is a platform that offers durable functions to replace queues, state management, and scheduling for developers. It allows writing reliable step functions faster without dealing with infrastructure. Developers can create durable functions using various language SDKs, run a local development server, deploy functions to their infrastructure, sync functions with the Inngest Platform, and securely trigger functions via HTTPS. Inngest Functions support retrying, scheduling, and coordinating operations through triggers, flow control, and steps, enabling developers to build reliable workflows with robust support for various operations.


For similar jobs

Copilot-For-Security

Microsoft Copilot for Security is a generative AI-powered assistant for daily operations in security and IT that empowers teams to protect at the speed and scale of AI.


beelzebub

Beelzebub is an advanced honeypot framework designed to provide a highly secure environment for detecting and analyzing cyber attacks. It offers a low code approach for easy implementation and utilizes virtualization techniques powered by OpenAI Generative Pre-trained Transformer. Key features include OpenAI Generative Pre-trained Transformer acting as Linux virtualization, SSH Honeypot, HTTP Honeypot, TCP Honeypot, Prometheus openmetrics integration, Docker integration, RabbitMQ integration, and kubernetes support. Beelzebub allows easy configuration for different services and ports, enabling users to create custom honeypot scenarios. The roadmap includes developing Beelzebub into a robust PaaS platform. The project welcomes contributions and encourages adherence to the Code of Conduct for a supportive and respectful community.

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

awesome-business-of-cybersecurity

The 'Awesome Business of Cybersecurity' repository is a comprehensive resource exploring the cybersecurity market, focusing on publicly traded companies, industry strategy, and AI capabilities. It provides insights into how cybersecurity companies operate, compete, and evolve across 18 solution categories and beyond. The repository offers structured information on the cybersecurity market snapshot, specialists vs. multiservice cybersecurity companies, cybersecurity stock lists, endpoint protection and threat detection, network security, identity and access management, cloud and application security, data protection and governance, security analytics and threat intelligence, non-US traded cybersecurity companies, cybersecurity ETFs, blogs and newsletters, podcasts, market insights and research, and cybersecurity solutions categories.

mcp-scan

MCP-Scan is a security scanning tool designed to detect common security vulnerabilities in Model Context Protocol (MCP) servers. It can auto-discover various MCP configurations, scan both local and remote servers for security issues like prompt injection attacks, tool poisoning attacks, and toxic flows. The tool operates in two main modes - 'scan' for static scanning of installed servers and 'proxy' for real-time monitoring and guardrailing of MCP connections. It offers features like scanning for specific attacks, enforcing guardrailing policies, auditing MCP traffic, and detecting changes to MCP tools. MCP-Scan does not store or log usage data and can be used to enhance the security of MCP environments.

AI-Infra-Guard

A.I.G (AI-Infra-Guard) is an AI red teaming platform by Tencent Zhuque Lab that integrates capabilities such as AI infra vulnerability scan, MCP Server risk scan, and Jailbreak Evaluation. It aims to provide users with a comprehensive, intelligent, and user-friendly solution for AI security risk self-examination. The platform offers features like AI Infra Scan, AI Tool Protocol Scan, and Jailbreak Evaluation, along with a modern web interface, complete API, multi-language support, cross-platform deployment, and being free and open-source under the MIT license.

HydraDragonPlatform

Hydra Dragon Automatic Malware/Executable Analysis Platform offers dynamic and static analysis for Windows, including open-source XDR projects, ClamAV, YARA-X, machine learning AI, behavioral analysis, Unpacker, Deobfuscator, Decompiler, website signatures, Ghidra, Suricata, Sigma, Kernel based protection, and more. It is a Unified Executable Analysis & Detection Framework.