DataFlow

Easy data preparation with the latest LLM-based operators and pipelines.

Stars: 2936

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance the performance of domain-oriented LLMs in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

README:

🎉 If you like our project, please give us a star ⭐ on GitHub to follow the latest updates.

Beginner-friendly learning resources (continuously updated): [🎬 Video Tutorials] [📚 Written Tutorials]


- [2026-02-02] 🖥️ DataFlow WebUI is now available! Launch the visual pipeline builder with a single command, `dataflow webui`, then build and run DataFlow pipelines through an intuitive web interface. 👉 WebUI Docs

- [2026-01-20] 🌟 Awesome Works Using DataFlow is now live! A new section showcasing open-source projects and research built on DataFlow. Contributions are welcome! 👉 Awesome Works

- [2025-12-19] 🎉 Our DataFlow technical report is now available! Read and cite our work on arXiv: https://arxiv.org/abs/2512.16676

- [2025-11-20] 🤖 Introducing New Data Agents for DataFlow! Try them out and follow the tutorial on Bilibili: https://space.bilibili.com/3546929239689711/lists/6761342?type=season

- [2025-06-28] 🎉 DataFlow is officially released! Our data-centric AI system is now public. Stay tuned for future updates.

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources (PDF, plain-text, low-quality QA), thereby improving the performance of large language models (LLMs) in specific domains through targeted training (Pre-training, Supervised Fine-tuning, RL training) or RAG using knowledge base cleaning. DataFlow has been empirically validated to improve domain-oriented LLMs' performance in fields such as healthcare, finance, and law.

Specifically, we are constructing diverse operators leveraging rule-based methods, deep learning models, LLMs, and LLM APIs. These operators are systematically integrated into distinct pipelines, collectively forming the comprehensive DataFlow system. Additionally, we develop an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

DataFlow adopts a modular operator design philosophy, building flexible data processing pipelines by combining different types of operators. As the basic unit of data processing, an operator can receive structured data input (such as in json/jsonl/csv format) and, after intelligent processing, output high-quality data results. For a detailed guide on using operators, please refer to the Operator Documentation.
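To make the operator idea concrete, here is a minimal sketch in plain Python. It does not use DataFlow's actual API: the names `Operator`, `read_jsonl`, `strip_whitespace`, `drop_short_answers`, and `run_pipeline` are hypothetical and only illustrate how small record-level steps over JSONL input can be chained into a pipeline.

```python
import json
from typing import Callable, Iterable, Iterator

# Hypothetical types: a record is a dict, and an operator maps a stream of
# records to a stream of records. These are NOT DataFlow classes.
Record = dict
Operator = Callable[[Iterable[Record]], Iterator[Record]]


def read_jsonl(text: str) -> Iterator[Record]:
    """Parse JSONL text (one JSON object per line), skipping blank lines."""
    for line in text.splitlines():
        if line.strip():
            yield json.loads(line)


def strip_whitespace(records: Iterable[Record]) -> Iterator[Record]:
    """Processing operator: trim surrounding whitespace from string fields."""
    for rec in records:
        yield {k: v.strip() if isinstance(v, str) else v for k, v in rec.items()}


def drop_short_answers(min_chars: int) -> Operator:
    """Filtering operator factory: keep records whose answer is long enough."""
    def op(records: Iterable[Record]) -> Iterator[Record]:
        for rec in records:
            if len(rec.get("answer", "")) >= min_chars:
                yield rec
    return op


def run_pipeline(records: Iterable[Record], operators: list[Operator]) -> list[Record]:
    """Apply operators in order; each consumes the previous operator's output."""
    for op in operators:
        records = op(records)
    return list(records)


raw = "\n".join([
    '{"question": " What is SFT? ", "answer": " Supervised fine-tuning. "}',
    '{"question": "Hi", "answer": "ok"}',
])
cleaned = run_pipeline(read_jsonl(raw), [strip_whitespace, drop_short_answers(min_chars=5)])
print(cleaned)
```

Because every operator shares the same input and output shape in this sketch, reordering or swapping steps only means editing the list passed to `run_pipeline`.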

In the DataFlow framework, operators are divided into three core categories based on their functional characteristics:

| Operator Type | Quantity | Main Function |

|---|---|---|

| Generic Operators | 80+ | Covers general functions for text evaluation, processing, and synthesis |

| Domain-Specific Operators | 40+ | Specialized processing for specific domains (e.g., medical, financial, legal) |

| Evaluation Operators | 20+ | Comprehensively evaluates data quality from 6 dimensions |

The current pipelines in DataFlow are as follows:

- 📝 Text Pipeline: Mines question-answer pairs from large-scale plain-text data (mostly crawled from the Internet) for use in SFT and RL training.

- 🧠 Reasoning Pipeline: Enhances existing question–answer pairs with (1) extended chain-of-thought, (2) category classification, and (3) difficulty estimation.

- 🗃️ Text2SQL Pipeline: Translates natural language questions into SQL queries, supplemented with explanations, chain-of-thought reasoning, and contextual schema information.

- 📚 Knowledge Base Cleaning Pipeline: Extracts and structures knowledge from unorganized sources like tables, PDFs, and Word documents into usable entries for downstream RAG or QA pair generation.

- 🤖 Agentic RAG Pipeline: Identifies and extracts QA pairs from existing QA datasets or knowledge bases that require external knowledge to answer, for use in downstream training of Agentic RAG tasks.

In this framework, operators are categorized into Fundamental Operators, Generic Operators, Domain-Specific Operators, and Evaluation Operators, etc., supporting data processing and evaluation functionalities. Please refer to the documentation for details.

- DataFlow Agent: An intelligent assistant that performs data analysis, writes custom operators, and automatically orchestrates them into pipelines based on specific task objectives.

Please use the following commands for environment setup and installation👇

conda create -n dataflow python=3.10

conda activate dataflow

pip install open-dataflow

If you want to use your own GPU for local inference, please use:

pip install open-dataflow[vllm]

DataFlow supports Python>=3.10 environments.

After installation, you can use the following command to check if dataflow has been installed correctly:

dataflow -v

If installed correctly, you should see:

open-dataflow codebase version: 1.0.0

Checking for updates...

Local version: 1.0.0

PyPI newest version: 1.0.0

You are using the latest version: 1.0.0.

We also provide a Dockerfile for easy deployment and a pre-built Docker image for immediate use.

You can directly pull and use our pre-built Docker image:

# Pull the pre-built image

docker pull molyheci/dataflow:cu124

# Run the container with GPU support

docker run --gpus all -it molyheci/dataflow:cu124

# Inside the container, verify installation

dataflow -v

Alternatively, you can build the Docker image from the provided Dockerfile:

# Clone the repository (HTTPS)

git clone https://github.com/OpenDCAI/DataFlow.git

# Or use SSH

# git clone git@github.com:OpenDCAI/DataFlow.git

cd DataFlow

# Build the Docker image

docker build -t dataflow:custom .

# Run the container

docker run --gpus all -it dataflow:custom

# Inside the container, verify installation

dataflow -v

Note: The Docker image includes CUDA 12.4.1 support and comes with vLLM pre-installed for GPU acceleration. Make sure you have the NVIDIA Container Toolkit installed to use GPU features.

You can start your first DataFlow translation project directly on Google Colab. By following the provided guidelines, you can seamlessly scale from a simple translation example to more complex DataFlow pipelines.

👉 Start DataFlow with Google Colab

For detailed usage instructions and a getting-started guide, please visit our Documentation.

DataFlow provides a Web-based UI (WebUI) for visual pipeline construction and execution.

After installing the DataFlow main repository, simply run:

dataflow webui

This will automatically download and launch the latest DataFlow-WebUI and open it in your browser (http://localhost:<port>/ if it does not open automatically).

- Chinese: https://wcny4qa9krto.feishu.cn/wiki/F4PDw76uDiOG42k76gGc6FaBnod

- English: https://wcny4qa9krto.feishu.cn/wiki/SYELwZhh9ixcNwkNRnhcLGmWnEg

For detailed experimental settings, please see our DataFlow Technical Report.

From the SlimPajama-627B corpus, we extract a 100B-token subset and apply multiple DataFlow text-pretraining filters. We train a Qwen2.5-0.5B model from scratch for 30B tokens using the Megatron-DeepSpeed framework. The results are as follows:

| Methods | ARC-C | ARC-E | MMLU | HellaSwag | WinoGrande | Gaokao-MathQA | Avg |

|---|---|---|---|---|---|---|---|

| Random-30B | 25.26 | 43.94 | 27.03 | 37.02 | 50.99 | 27.35 | 35.26 |

| Qurating-30B | 25.00 | 43.14 | 27.50 | 37.03 | 50.67 | 26.78 | 35.02 |

| FineWeb-Edu-30B | 26.45 | 45.41 | 27.41 | 38.06 | 50.43 | 25.64 | 35.57 |

| DataFlow-30B | 25.51 | 45.58 | 27.42 | 37.58 | 50.67 | 27.35 | 35.69 |
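As a quick sanity check on how the Avg column is derived, the sketch below recomputes each row's unweighted mean over the six benchmarks in the table above and compares it with the reported value. Treating Avg as a plain arithmetic mean and allowing a 0.01 tolerance for rounding are assumptions, not something the report states here.

```python
# Scores copied from the table above, in column order:
# ARC-C, ARC-E, MMLU, HellaSwag, WinoGrande, Gaokao-MathQA, then the reported Avg.
rows = {
    "Random-30B": ([25.26, 43.94, 27.03, 37.02, 50.99, 27.35], 35.26),
    "Qurating-30B": ([25.00, 43.14, 27.50, 37.03, 50.67, 26.78], 35.02),
    "FineWeb-Edu-30B": ([26.45, 45.41, 27.41, 38.06, 50.43, 25.64], 35.57),
    "DataFlow-30B": ([25.51, 45.58, 27.42, 37.58, 50.67, 27.35], 35.69),
}


def mean(scores: list[float]) -> float:
    """Unweighted arithmetic mean."""
    return sum(scores) / len(scores)


max_gap = 0.0  # largest |recomputed - reported| seen across rows
for name, (scores, reported) in rows.items():
    recomputed = mean(scores)
    max_gap = max(max_gap, abs(recomputed - reported))
    print(f"{name:<16} recomputed={recomputed:.3f} reported={reported:.2f}")

print(f"largest gap: {max_gap:.4f}")
```

The recomputed means agree with the reported column to within rounding, so the differences between methods in this table are on the order of a few tenths of a point.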

To study small-scale SFT data quality, we fine-tune the Qwen2.5-7B base model using LLaMA-Factory on the WizardLM and Alpaca datasets. For each dataset, we compare a randomly sampled set of 5K instances against a set of 5K instances filtered by DataFlow's SFT pipeline. Additionally, we synthesize a 15K-instance dataset, DataFlow-SFT-15K, using DataFlow's Condor Generator and Condor Refiner pipeline, followed by DataFlow's SFT filtering pipeline (excluding the Instagram filter). Benchmarks include comprehensive Math, Code, and Knowledge evaluation suites.

| Methods | math | gsm8k | aime24 | minerva | olympiad | Avg |

|---|---|---|---|---|---|---|

| Alpaca (random) | 54.9 | 77.2 | 13.3 | 14.0 | 27.0 | 37.3 |

| Alpaca (filtered) | 60.3 | 80.0 | 13.3 | 14.7 | 30.7 | 39.8 |

| WizardLM (random) | 61.1 | 84.2 | 6.7 | 18.0 | 29.3 | 39.9 |

| WizardLM (filtered) | 69.7 | 88.8 | 10.0 | 19.9 | 35.4 | 44.8 |

| DataFlow-SFT-15K (random) | 72.6 | 89.6 | 13.3 | 37.9 | 32.9 | 49.3 |

| DataFlow-SFT-15K (filtered) | 73.3 | 90.2 | 13.3 | 36.0 | 35.9 | 49.7 |

| Methods | HumanEval | MBPP | Avg |

|---|---|---|---|

| Alpaca (random) | 71.3 | 75.9 | 73.6 |

| Alpaca (filtered) | 73.8 | 75.7 | 74.8 |

| WizardLM (random) | 75.6 | 82.0 | 78.8 |

| WizardLM (filtered) | 77.4 | 80.4 | 78.9 |

| DataFlow-SFT-15K (random) | 79.9 | 75.9 | 77.9 |

| DataFlow-SFT-15K (filtered) | 82.9 | 74.9 | 78.9 |

| Methods | MMLU | C-EVAL | Avg |

|---|---|---|---|

| Alpaca (random) | 71.8 | 80.0 | 75.9 |

| Alpaca (filtered) | 71.8 | 80.0 | 75.9 |

| WizardLM (random) | 71.8 | 79.2 | 75.5 |

| WizardLM (filtered) | 71.9 | 79.6 | 75.8 |

| DataFlow-SFT-15K (random) | 72.1 | 80.0 | 76.1 |

| DataFlow-SFT-15K (filtered) | 72.2 | 80.4 | 76.3 |
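The random-versus-filtered comparison in the SFT experiments above can be pictured with the following sketch. It is not DataFlow's SFT pipeline: the `quality` field and the top-K selection rule are stand-in assumptions for whatever the real filters compute, and the pool of records is synthetic.

```python
import random

rng = random.Random(0)  # fixed seed so the example is reproducible

# Synthetic pool of instruction records with a hypothetical quality score in [0, 1).
pool = [{"id": i, "quality": rng.random()} for i in range(50_000)]

K = 5_000  # subset size for both arms, matching the 5K setting described above

# Arm 1: uniform random sample of K records.
random_subset = rng.sample(pool, K)

# Arm 2: keep the K records with the highest (hypothetical) quality score.
filtered_subset = sorted(pool, key=lambda r: r["quality"], reverse=True)[:K]


def mean_quality(records: list[dict]) -> float:
    """Average of the hypothetical quality score over a subset."""
    return sum(r["quality"] for r in records) / len(records)


print(f"random   mean quality: {mean_quality(random_subset):.3f}")
print(f"filtered mean quality: {mean_quality(filtered_subset):.3f}")
```

Holding the subset size fixed in both arms is what lets the tables attribute score differences to data selection rather than to data volume.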

We synthesize DataFlow-Chat-15K using DataFlow's conversation-generation pipeline and fine-tune Qwen2.5-7B-Base on it. Baselines include ShareGPT-15K, UltraChat-15K, and their full (non-truncated) versions. We evaluate on domain-specific tasks (TopDial, Light) and general benchmarks (MMLU, AlpacaEval, Arena-Hard).

| Model | TopDial | Light | Avg |

|---|---|---|---|

| Qwen2.5-7B | 7.71 | 7.79 | 7.75 |

| + ShareGPT-15K | 7.75 | 6.72 | 7.24 |

| + UltraChat-15K | 7.72 | 6.83 | 7.28 |

| + DataFlow-Chat-15K | 7.98 | 8.10 | 8.04 |

| Model | MMLU | AlpacaEval | Arena-Hard | Avg |

|---|---|---|---|---|

| Qwen2.5-7B | 71.45 | 7.05 | 0.60 | 26.36 |

| + ShareGPT-15K | 73.09 | 3.70 | 1.30 | 26.03 |

| + UltraChat-15K | 72.97 | 3.97 | 0.80 | 25.91 |

| + DataFlow-Chat-15K | 73.41 | 10.11 | 1.10 | 28.21 |

We adopt the NuminaMath dataset as a high-quality seed dataset. We compare three training sources: (1) a random 10K subset from Open-R1, (2) a random 10K subset from Synthetic-1, and (3) our 10K synthesized DataFlow-Reasoning-10K dataset constructed using DataFlow.

| Setting | Model | gsm8k | math | amc23 | olympiad | gaokao24_mix | minerva | AIME24@32 | AIME25@32 | Avg |

|---|---|---|---|---|---|---|---|---|---|---|

| Baseline | Qwen2.5-32B-Instruct | 95.8 | 73.5 | 70.0 | 38.5 | 42.9 | 26.5 | 16.8 | 11.6 | 46.95 |

| 1 Epoch | + SYNTHETIC-1-10k | 92.9 | 71.8 | 52.5 | 38.4 | 23.1 | 24.3 | 35.6 | 34.0 | 46.6 |

| 1 Epoch | + Open-R1-10k | 91.5 | 72.3 | 65.0 | 38.4 | 20.9 | 24.6 | 43.0 | 33.5 | 48.7 |

| 1 Epoch | + DataFlow-Reasoning-10K | 93.9 | 72.3 | 72.5 | 38.7 | 38.5 | 26.5 | 35.9 | 34.5 | 51.6 |

| 2 Epochs | + SYNTHETIC-1-10k | 94.5 | 78.4 | 75.0 | 45.0 | 24.2 | 28.3 | 48.4 | 37.9 | 54.0 |

| 2 Epochs | + Open-R1-10k | 93.9 | 77.2 | 80.0 | 44.1 | 20.9 | 25.4 | 51.0 | 40.7 | 54.2 |

| 2 Epochs | + DataFlow-Reasoning-10K | 94.4 | 76.6 | 75.0 | 45.2 | 42.9 | 25.7 | 45.4 | 40.0 | 55.7 |

We randomly sample 20k instances from the Ling-Coder-SFT corpus and process them through the DataFlow Code Pipeline. This yields three curated code instruction datasets of different scales, DataFlow-Code-1K, DataFlow-Code-5K, and DataFlow-Code-10K, each designed to provide high-quality, pipeline-refined supervision signals for code generation tasks.

We compare our synthesized datasets against Code-Alpaca-1k and Self-OSS-Instruct-SC2-Exec-Filter-1k.

| Training Data | BigCodeBench | LiveCodeBench (v6) | CruxEval (Input) | CruxEval (Output) | HumanEval+ | Avg |

|---|---|---|---|---|---|---|

| Qwen2.5-7B-Instruct | 35.3 | 23.4 | 44.8 | 43.9 | 72.6 | 44.0 |

| + Code Alpaca-1K | 33.3 | 18.7 | 45.6 | 46.4 | 66.5 | 42.1 |

| + Self-OSS | 31.9 | 21.4 | 46.9 | 45.9 | 70.1 | 43.2 |

| + DataFlow-Code-1K | 35.5 | 25.7 | 48.0 | 45.1 | 72.6 | 45.4 |

| + DataFlow-Code-5K | 36.2 | 26.4 | 48.6 | 45.0 | 73.2 | 45.9 |

| + DataFlow-Code-10K | 36.8 | 26.0 | 48.8 | 45.4 | 73.8 | 46.2 |

| Training Data | BigCodeBench | LiveCodeBench (v6) | CruxEval (Input) | CruxEval (Output) | HumanEval+ | Avg |

|---|---|---|---|---|---|---|

| Qwen2.5-14B-Instruct | 37.5 | 33.4 | 48.0 | 48.5 | 74.4 | 48.4 |

| + Code Alpaca-1K | 37.0 | 28.2 | 50.2 | 49.6 | 71.3 | 47.3 |

| + Self-OSS | 36.9 | 22.3 | 52.6 | 50.1 | 68.3 | 46.0 |

| + DataFlow-Code-1K | 41.4 | 33.7 | 51.0 | 50.9 | 77.3 | 50.9 |

| + DataFlow-Code-5K | 41.1 | 33.2 | 52.5 | 50.6 | 76.2 | 50.7 |

| + DataFlow-Code-10K | 41.9 | 33.2 | 52.9 | 51.0 | 76.2 | 51.0 |

Our team has published the following papers that form core components of the DataFlow system:

| Paper Title | DataFlow Component | Venue | Year |

|---|---|---|---|

| Text2SQL-Flow: A Robust SQL-Aware Data Augmentation Framework for Text-to-SQL | Text2SQL Data Augmentation | ICDE | 2026 |

| Let's Verify Math Questions Step by Step | Math question quality evaluation | KDD | 2026 |

| MM-Verify: Enhancing Multimodal Reasoning with Chain-of-Thought Verification | Multimodal reasoning verification framework for data processing and evaluation | ACL | 2025 |

| Efficient Pretraining Data Selection for Language Models via Multi-Actor Collaboration | Multi-actor collaborative data selection mechanism for enhanced data filtering and processing | ACL | 2025 |

We are honored to have received first-place awards in two major international AI competitions, recognizing the excellence and robustness of DataFlow and its reasoning capabilities:

| Competition | Track | Award | Organizer | Date |

|---|---|---|---|---|

| ICML 2025 Challenges on Automated Math Reasoning and Extensions | Track 2: Physics Reasoning with Diagrams and Expressions | 🥇 First Place Winner | ICML AI for Math Workshop & AWS Codabench | July 18, 2025 |

| 2025 Language and Intelligence Challenge (LIC) | Track 2: Beijing Academy of Artificial Intelligence | 🥇 First Prize | Beijing Academy of Artificial Intelligence (BAAI) & Baidu | August 10, 2025 |


We sincerely thank MinerU for their outstanding work, whose powerful PDF/document text extraction capabilities provided essential support for our data loading process. We also thank LLaMA-Factory for offering an efficient and user-friendly framework for large model fine-tuning, which greatly facilitated rapid iteration in our training and experimentation workflows. Our gratitude extends to all contributors in the open-source community—their efforts collectively drive the development of DataFlow. We thank Zhongguancun Academy for their API and GPU support.

This section highlights projects, research works, and applications built on top of DataFlow or deeply integrated with the DataFlow ecosystem.

📌 Curated list of featured projects: [Awesome Work Using DataFlow]

We warmly welcome the community to contribute new entries via Pull Requests. 🙌 The Detailed Guidance explains how to create a DataFlow extension repository using DataFlow-CLI.

Join the DataFlow open-source community to ask questions, share ideas, and collaborate with other developers!

• 📮 GitHub Issues: Report bugs or suggest features

• 🔧 GitHub Pull Requests: Contribute code improvements

• 💬 Join our community groups to connect with us and other contributors!

If you use DataFlow in your research, please cite our work.

@article{liang2025dataflow,

title={DataFlow: An LLM-Driven Framework for Unified Data Preparation and Workflow Automation in the Era of Data-Centric AI},

author={Liang, Hao and Ma, Xiaochen and Liu, Zhou and Wong, Zhen Hao and Zhao, Zhengyang and Meng, Zimo and He, Runming and Shen, Chengyu and Cai, Qifeng and Han, Zhaoyang and others},

journal={arXiv preprint arXiv:2512.16676},

year={2025}

}

Alternative AI tools for DataFlow

Similar Open Source Tools

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

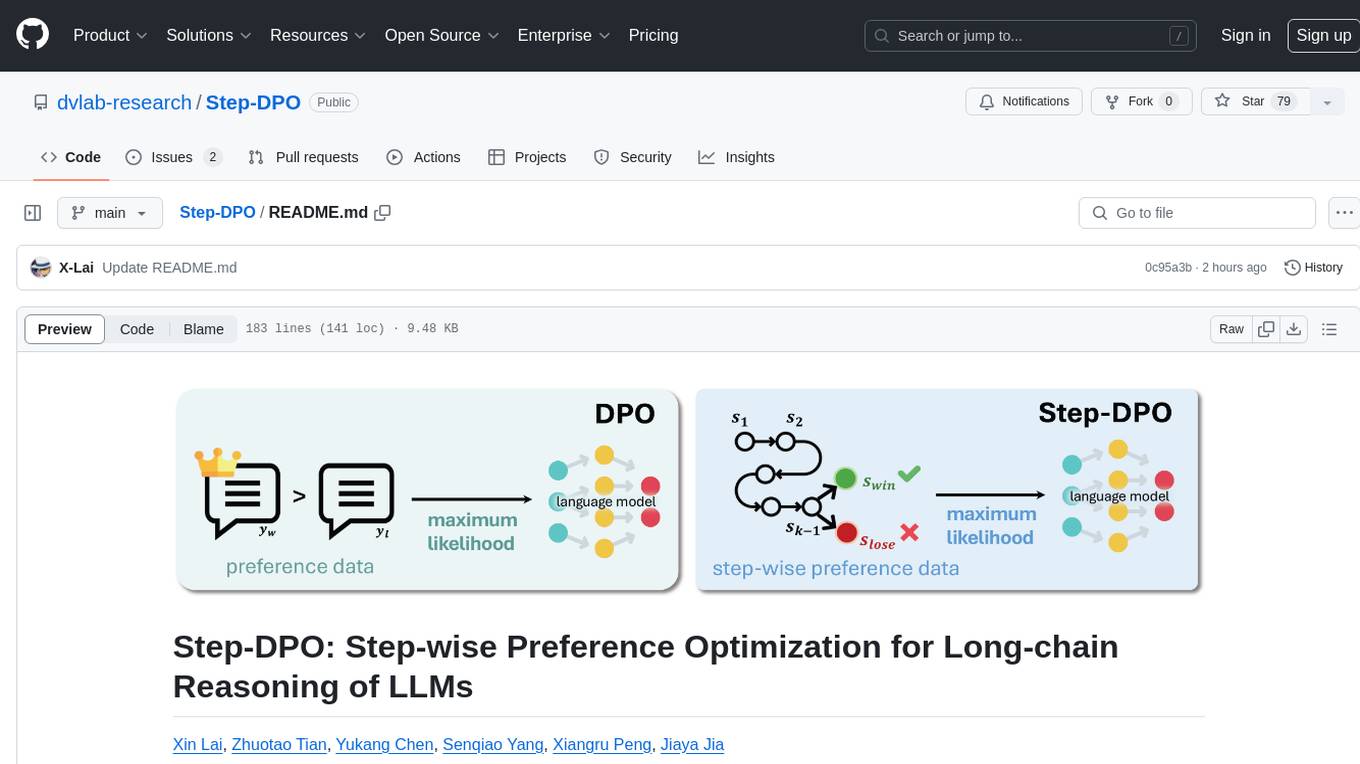

Step-DPO

Step-DPO is a method for enhancing long-chain reasoning ability of LLMs with a data construction pipeline creating a high-quality dataset. It significantly improves performance on math and GSM8K tasks with minimal data and training steps. The tool fine-tunes pre-trained models like Qwen2-7B-Instruct with Step-DPO, achieving superior results compared to other models. It provides scripts for training, evaluation, and deployment, along with examples and acknowledgements.

LlamaV-o1

LlamaV-o1 is a Large Multimodal Model designed for spontaneous reasoning tasks. It outperforms various existing models on multimodal reasoning benchmarks. The project includes a Step-by-Step Visual Reasoning Benchmark, a novel evaluation metric, and a combined Multi-Step Curriculum Learning and Beam Search Approach. The model achieves superior performance in complex multi-step visual reasoning tasks in terms of accuracy and efficiency.

Xwin-LM

Xwin-LM is a powerful and stable open-source tool for aligning large language models, offering various alignment technologies like supervised fine-tuning, reward models, reject sampling, and reinforcement learning from human feedback. It has achieved top rankings in benchmarks like AlpacaEval and surpassed GPT-4. The tool is continuously updated with new models and features.

ZhiLight

ZhiLight is a highly optimized large language model (LLM) inference engine developed by Zhihu and ModelBest Inc. It accelerates the inference of models like Llama and its variants, especially on PCIe-based GPUs. ZhiLight offers significant performance advantages compared to mainstream open-source inference engines. It supports various features such as custom defined tensor and unified global memory management, optimized fused kernels, support for dynamic batch, flash attention prefill, prefix cache, and different quantization techniques like INT8, SmoothQuant, FP8, AWQ, and GPTQ. ZhiLight is compatible with OpenAI interface and provides high performance on mainstream NVIDIA GPUs with different model sizes and precisions.

END-TO-END-GENERATIVE-AI-PROJECTS

The 'END TO END GENERATIVE AI PROJECTS' repository is a collection of awesome industry projects utilizing Large Language Models (LLM) for various tasks such as chat applications with PDFs, image to speech generation, video transcribing and summarizing, resume tracking, text to SQL conversion, invoice extraction, medical chatbot, financial stock analysis, and more. The projects showcase the deployment of LLM models like Google Gemini Pro, HuggingFace Models, OpenAI GPT, and technologies such as Langchain, Streamlit, LLaMA2, LLaMAindex, and more. The repository aims to provide end-to-end solutions for different AI applications.

InternVL

InternVL scales up the ViT to _**6B parameters**_ and aligns it with LLM. It is a vision-language foundation model that can perform various tasks, including: **Visual Perception** - Linear-Probe Image Classification - Semantic Segmentation - Zero-Shot Image Classification - Multilingual Zero-Shot Image Classification - Zero-Shot Video Classification **Cross-Modal Retrieval** - English Zero-Shot Image-Text Retrieval - Chinese Zero-Shot Image-Text Retrieval - Multilingual Zero-Shot Image-Text Retrieval on XTD **Multimodal Dialogue** - Zero-Shot Image Captioning - Multimodal Benchmarks with Frozen LLM - Multimodal Benchmarks with Trainable LLM - Tiny LVLM InternVL has been shown to achieve state-of-the-art results on a variety of benchmarks. For example, on the MMMU image classification benchmark, InternVL achieves a top-1 accuracy of 51.6%, which is higher than GPT-4V and Gemini Pro. On the DocVQA question answering benchmark, InternVL achieves a score of 82.2%, which is also higher than GPT-4V and Gemini Pro. InternVL is open-sourced and available on Hugging Face. It can be used for a variety of applications, including image classification, object detection, semantic segmentation, image captioning, and question answering.

microgpt-c

MicroGPT-C is a project that focuses on tiny specialist models working together to outperform monoliths on specific tasks. It is a C port of a Python GPT model, rewritten in pure C99 with zero dependencies. The project explores the concept of coordinated intelligence through 'organelles' that differentiate based on training data, resulting in improved performance across logic games and real-world data experiments.

speechless

Speechless.AI is committed to integrating the superior language processing and deep reasoning capabilities of large language models into practical business applications. By enhancing the model's language understanding, knowledge accumulation, and text creation abilities, and introducing long-term memory, external tool integration, and local deployment, our aim is to establish an intelligent collaborative partner that can independently interact, continuously evolve, and closely align with various business scenarios.

MMOS

MMOS (Mix of Minimal Optimal Sets) is a dataset designed for math reasoning tasks, offering higher performance and lower construction costs. It includes various models and data subsets for tasks like arithmetic reasoning and math word problem solving. The dataset is used to identify minimal optimal sets through reasoning paths and statistical analysis, with a focus on QA-pairs generated from open-source datasets. MMOS also provides an auto problem generator for testing model robustness and scripts for training and inference.

albumentations

Albumentations is a Python library for image augmentation. Image augmentation is used in deep learning and computer vision tasks to increase the quality of trained models. The purpose of image augmentation is to create new training samples from the existing data.

YuLan-Mini

YuLan-Mini is a lightweight language model with 2.4 billion parameters that achieves performance comparable to industry-leading models despite being pre-trained on only 1.08T tokens. It excels in mathematics and code domains. The repository provides pre-training resources, including data pipeline, optimization methods, and annealing approaches. Users can pre-train their own language models, perform learning rate annealing, fine-tune the model, research training dynamics, and synthesize data. The team behind YuLan-Mini is AI Box at Renmin University of China. The code is released under the MIT License with future updates on model weights usage policies. Users are advised on potential safety concerns and ethical use of the model.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

Awesome-Knowledge-Distillation-of-LLMs

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

langfuse

Langfuse is a powerful tool that helps you develop, monitor, and test your LLM applications. With Langfuse, you can: * **Develop:** Instrument your app and start ingesting traces to Langfuse, inspect and debug complex logs, and manage, version, and deploy prompts from within Langfuse. * **Monitor:** Track metrics (cost, latency, quality) and gain insights from dashboards & data exports, collect and calculate scores for your LLM completions, run model-based evaluations, collect user feedback, and manually score observations in Langfuse. * **Test:** Track and test app behaviour before deploying a new version, test expected in and output pairs and benchmark performance before deploying, and track versions and releases in your application. Langfuse is easy to get started with and offers a generous free tier. You can sign up for Langfuse Cloud or deploy Langfuse locally or on your own infrastructure. Langfuse also offers a variety of integrations to make it easy to connect to your LLM applications.

For similar tasks

deep-searcher

DeepSearcher is a tool that combines reasoning LLMs and Vector Databases to perform search, evaluation, and reasoning based on private data. It is suitable for enterprise knowledge management, intelligent Q&A systems, and information retrieval scenarios. The tool maximizes the utilization of enterprise internal data while ensuring data security, supports multiple embedding models, and provides support for multiple LLMs for intelligent Q&A and content generation. It also includes features like private data search, vector database management, and document loading with web crawling capabilities under development.

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

marvin

Marvin is a lightweight AI toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. Each of Marvin's tools is simple and self-documenting, using AI to solve common but complex challenges like entity extraction, classification, and generating synthetic data. Each tool is independent and incrementally adoptable, so you can use them on their own or in combination with any other library. Marvin is also multi-modal, supporting both image and audio generation as well using images as inputs for extraction and classification. Marvin is for developers who care more about _using_ AI than _building_ AI, and we are focused on creating an exceptional developer experience. Marvin users should feel empowered to bring tightly-scoped "AI magic" into any traditional software project with just a few extra lines of code. Marvin aims to merge the best practices for building dependable, observable software with the best practices for building with generative AI into a single, easy-to-use library. It's a serious tool, but we hope you have fun with it. Marvin is open-source, free to use, and made with 💙 by the team at Prefect.

data-juicer

Data-Juicer is a one-stop data processing system to make data higher-quality, juicier, and more digestible for LLMs. It is a systematic & reusable library of 80+ core OPs, 20+ reusable config recipes, and 20+ feature-rich dedicated toolkits, designed to function independently of specific LLM datasets and processing pipelines. Data-Juicer allows detailed data analyses with an automated report generation feature for a deeper understanding of your dataset. Coupled with multi-dimension automatic evaluation capabilities, it supports a timely feedback loop at multiple stages in the LLM development process. Data-Juicer offers tens of pre-built data processing recipes for pre-training, fine-tuning, en, zh, and more scenarios. It provides a speedy data processing pipeline requiring less memory and CPU usage, optimized for maximum productivity. Data-Juicer is flexible & extensible, accommodating most types of data formats and allowing flexible combinations of OPs. It is designed for simplicity, with comprehensive documentation, easy start guides and demo configs, and intuitive configuration with simple adding/removing OPs from existing configs.

ChainForge

ChainForge is a visual programming environment for battle-testing prompts to LLMs. It is geared towards early-stage, quick-and-dirty exploration of prompts, chat responses, and response quality that goes beyond ad-hoc chatting with individual LLMs. With ChainForge, you can: * Query multiple LLMs at once to test prompt ideas and variations quickly and effectively. * Compare response quality across prompt permutations, across models, and across model settings to choose the best prompt and model for your use case. * Setup evaluation metrics (scoring function) and immediately visualize results across prompts, prompt parameters, models, and model settings. * Hold multiple conversations at once across template parameters and chat models. Template not just prompts, but follow-up chat messages, and inspect and evaluate outputs at each turn of a chat conversation. ChainForge comes with a number of example evaluation flows to give you a sense of what's possible, including 188 example flows generated from benchmarks in OpenAI evals. This is an open beta of Chainforge. We support model providers OpenAI, HuggingFace, Anthropic, Google PaLM2, Azure OpenAI endpoints, and Dalai-hosted models Alpaca and Llama. You can change the exact model and individual model settings. Visualization nodes support numeric and boolean evaluation metrics. ChainForge is built on ReactFlow and Flask.

Vodalus-Expert-LLM-Forge

Vodalus Expert LLM Forge is a tool designed for crafting datasets and efficiently fine-tuning models using free open-source tools. It includes components for data generation, LLM interaction, RAG engine integration, model training, fine-tuning, and quantization. The tool is suitable for users at all levels and is accompanied by comprehensive documentation. Users can generate synthetic data, interact with LLMs, train models, and optimize performance for local execution. The tool provides detailed guides and instructions for setup, usage, and customization.

hCaptcha-Solver

hCaptcha-Solver is an AI-based hCaptcha text challenge solver that uses the playwright module to generate the hsw N data. It can solve any text challenge, but may be flagged on some websites such as Discord. The tool requires proxies because hCaptcha also rate limits requests. Users run 'hsw_api.py' first and then integrate the usage shown in 'main.py' into their own projects that require hCaptcha solving. Note that this tool only works on sites that support the hCaptcha text challenge.

seahorse

A handy package for kickstarting AI contests. This Python framework simplifies the creation of an environment for adversarial agents, offering various functionalities for game setup, playing against remote agents, data generation, and contest organization. The package is user-friendly and provides easy-to-use features out of the box. Developed by an enthusiastic team of M.Sc candidates at Polytechnique Montréal, 'seahorse' is distributed under the 3-Clause BSD License.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open-access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI red teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.