NExT-GPT

Code and models for NExT-GPT: Any-to-Any Multimodal Large Language Model

Stars: 3245

NExT-GPT is an end-to-end multimodal large language model that can process input and generate output in various combinations of text, image, video, and audio. It leverages existing pre-trained models and diffusion models with end-to-end instruction tuning. The repository contains code, data, and model weights for NExT-GPT, allowing users to work with different modalities and perform tasks like encoding, understanding, reasoning, and generating multimodal content.

README:

Shengqiong Wu, Hao Fei*, Leigang Qu, Wei Ji, and Tat-Seng Chua. (*Correspondence)

NExT++, School of Computing, National University of Singapore

This repository hosts the code, data, and model weights of NExT-GPT, the first end-to-end MM-LLM that perceives input and generates output in arbitrary combinations (any-to-any) of text, image, video, audio, and beyond.

Note: the previous codebase has been moved into NExT-GPT-Lagacy. Please refer to this new codebase for all training and tuning procedures.

- [x] [2023.09.15] 🚀🚀 Release the code of NExT-GPT in version 7b_tiva_v0.

- [x] [2023.09.27] 🔨🧩 Added modality-blended batch sampler.

- [x] [2023.10.01] 📢📢 Release the T2M instruction dataset.

- [x] [2023.10.04] 👏👏 Release the checkpoint of NExT-GPT in version 7b_tiva_v0.

- [x] [2023.10.15] 🔨🚀 Update of NExT-GPT in version 7b_tiva_v0.

- [x] [2024.10.07] 👏👏 Release the data and the corresponding construction methods; please refer to DATA_README.md for more details.

- [ ] Updating NExT-GPT in more types&sizes of LLMs.

- [ ] Empowering NExT-GPT with more modalities of inputs&outputs.

- [ ] ...

Here we showcase examples generated from NExT-GPT. For more examples, kindly visit the webpage, or the online live demo.

https://github.com/NExT-GPT/NExT-GPT/assets/18722770/0c2b3d88-a533-4899-ab44-65580fe54538

https://github.com/NExT-GPT/NExT-GPT/assets/18722770/eb1319a6-38aa-4546-a96e-163207e7de93

https://github.com/NExT-GPT/NExT-GPT/assets/18722770/36bec0ad-9bad-4bcf-bc37-92b028f1bc6a

NExT-GPT is built on top of an existing pre-trained LLM, a multimodal encoder, and SoTA diffusion models, with sufficient end-to-end instruction tuning.

- Multimodal Encoding Stage. Leveraging established encoders to encode inputs in various modalities, where these representations are projected into language-like representations comprehensible to the LLM through a projection layer.

- LLM Understanding and Reasoning Stage. Harnessing an existing open-sourced LLM as the core to process input information for semantic understanding and reasoning. The LLM not only directly generates text tokens but also produces unique “modality signal” tokens that serve as instructions to dictate the decoding layers whether & what modal content to output correspondingly.

- Multimodal Generation Stage. Receiving the multimodal signals with specific instructions from LLM (if any), the Transformer-based output projection layers map the signal token representations into the ones that are understandable to following multimodal decoders.
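To make the role of the "modality signal" tokens concrete, here is a minimal sketch of how a decoding layer could decide whether, and for which modality, to generate content from the LLM output. The token strings `[IMG]`, `[AUD]`, and `[VID]` and the function name `route_signals` are illustrative assumptions, not the identifiers used in this repository.

```python
import re
from typing import Dict, List

# Hypothetical signal-token markers; the real tokens are defined by the model's tokenizer.
SIGNAL_TOKENS: Dict[str, str] = {"[IMG]": "image", "[AUD]": "audio", "[VID]": "video"}


def route_signals(llm_output: str) -> Dict[str, object]:
    """Split LLM output into plain text and the modalities it asks to generate."""
    pattern = "|".join(re.escape(tok) for tok in SIGNAL_TOKENS)
    requested: List[str] = []
    for tok in re.findall(pattern, llm_output):
        modality = SIGNAL_TOKENS[tok]
        if modality not in requested:  # keep first-seen order, no duplicates
            requested.append(modality)
    # Remove the signal tokens and collapse leftover whitespace.
    text = " ".join(re.sub(pattern, "", llm_output).split())
    return {"text": text, "modalities": requested}
```

If no signal token is present, `modalities` is empty and only text is returned, which mirrors the "(if any)" condition in the generation stage.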

For more technical details, kindly refer to the paper.

- 1. Code Structure

- 2. Environment Preparation

- 3. Training/Adapting NExT-GPT on Your Own

- 4. Running NExT-GPT System

- 5. Fine-tuning Your Own System

1. Code Structure

.

|-- NExT-GPT-Lagacy # the previous version of the model

|-- assets

|-- checkpoints # save the pretraining and tuning checkpoints

|-- data

| |-- IT_data

| | |-- MosIT_data

| | |-- T+X-T_data # text+[image/audio/video] to text instruction data

| | `-- T-T+X_data # synthesized text to text+[image/audio/video] instruction data

| |-- T_X_pair_data # text-[image/audio/video] pair data

| | |-- audiocap

| | |-- cc3m

| | `-- webvid

| |-- embed

| `-- prepare_data.py

|-- figures

|-- merge_lora_weights.py

|-- nextgpt

| |-- __init__.py

| |-- constants.py

| |-- conversation.py

| |-- dataset

| | |-- __init__.py

| | |-- audio_processor.py

| | |-- base_dataset.py

| | |-- catalog.py

| | |-- concat_dataset.py

| | |-- dataset_utils.py

| | `-- sampler.py

| |-- mm_utils.py

| |-- model

| | |-- __init__.py

| | |-- apply_delta.py

| | |-- builder.py

| | |-- consolidate.py

| | |-- language_model

| | |-- make_delta.py

| | |-- multimodal_decoder

| | |-- multimodal_encoder

| | |-- multimodal_projector

| | |-- nextgpt_arch.py

| | `-- utils.py

| `-- utils.py

|-- scripts

| |-- finetune.sh

| |-- pretrain_dec.sh

| |-- pretrain_enc.sh

| |-- zero2.json

| |-- zero3.json

| `-- zero3_offload.json

|-- LICENSE.md

|-- README.md

|-- nextgpt_trainer.py

|-- predict.py

|-- preprocess_embeddings.py

|-- requirements.txt

|-- train.py

|-- train_mem.py

`-- training_utils.py

2. Environment Preparation [Back to Top]

Please first clone the repo and install the required environment, which can be done by running the following commands:

conda create -n nextgpt python=3.8

conda activate nextgpt

# CUDA 12.1

conda install pytorch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 pytorch-cuda=12.1 -c pytorch -c nvidia

git clone https://github.com/NExT-GPT/NExT-GPT.git

cd NExT-GPT

pip install -r requirements.txt
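After installation, a quick check of the installed package versions can catch a mismatched PyTorch stack early. The sketch below uses only the standard library; the helper name `installed_versions` is an assumption introduced for illustration and is not part of this repository.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Return the installed version of each package, or None if it is missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(["torch", "torchvision", "torchaudio"]).items():
        print(f"{name}: {version or 'not installed'}")
```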

3.1. Preparing Pre-trained Checkpoint [Back to Top]

NExT-GPT is trained on top of the following excellent existing models. Please follow the instructions to prepare the checkpoints.

- ImageBind is the unified image/video/audio encoder. The pre-trained checkpoint can be downloaded from here with version huge. Afterward, put the imagebind_huge.pth file at [./pretrain_ckpt/imagebind].

- Vicuna: prepare the pre-trained Vicuna from [here]. Then put the pre-trained model at [./pretrain_ckpt/vicuna-7b-v1.5/].

- Image Diffusion is used to generate images. NExT-GPT uses Stable Diffusion with version v2. (will be automatically downloaded)

- Audio Diffusion is used to produce audio content. NExT-GPT employs AudioLDM with version l-full. (will be automatically downloaded)

- Video Diffusion is used for video generation. We employ ZeroScope with version v2_576w. (will be automatically downloaded)
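Before training, it can help to confirm that the two manually prepared checkpoints are in place. The following is a minimal sketch, assuming the ImageBind path is read as ./pretrain_ckpt/imagebind (by analogy with the Vicuna path) and that it is run from the repository root; `missing_checkpoints` is a hypothetical helper, not a script shipped with this repository.

```python
from pathlib import Path
from typing import List

# Locations taken from the instructions above (ImageBind path assumed to start with ./pretrain_ckpt).
EXPECTED = [
    Path("pretrain_ckpt/imagebind/imagebind_huge.pth"),
    Path("pretrain_ckpt/vicuna-7b-v1.5"),
]


def missing_checkpoints(repo_root: str = ".") -> List[str]:
    """Return the expected checkpoint paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p.as_posix() for p in EXPECTED if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    print("All checkpoints found." if not missing else f"Missing: {missing}")
```

The diffusion models are not checked here because, as noted above, they are downloaded automatically.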

3.2. Preparing Dataset [Back to Top]

Please download the following datasets used for model training:

A) T-X pairs data

- CC3M of text-image pairs, please follow this instruction [here]. Then put the data at [./data/T-X_pair_data/cc3m].

- WebVid of text-video pairs, see the [instruction]. The file should be saved at [./data/T-X_pair_data/webvid].

- AudioCap of text-audio pairs, see the [instruction]. Save the data in [./data/T-X_pair_data/audiocap].

B) Instruction data

- T+X-T

  - LLaVA of the visual instruction data, download it from here, and then put it at [./data/IT_data/T+X-T_data/llava].

  - Alpaca of the textual instruction data, download it from here, and then put it at [./data/IT_data/T+X-T_data/alpaca/].

  - VideoChat, download the video instruction data here, and then put it at [./data/IT_data/T+X-T_data/videochat/].

  Side note: After downloading the datasets, please run prepare_data.py to preprocess them.

- T-X+T (T2M)

  - The T-X+T instruction datasets (T2M) are saved at [./data/IT_data/T-T+X_data].

- MosIT

  - Download the files from here and put them in [./data/IT_data/MosIT_data/]. (We are in the process of finalizing the data and handling the copyright issue.)

3.3. Precomputing Embeddings [Back to Top]

In decoding-side alignment training, we minimize the distance between the representation of signal tokens and captions. To save costs of time and memory, we precompute the text embeddings for image, audio and video captions using the text encoder within the respective diffusion models.
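The idea of caching caption embeddings once and then minimizing a distance against them can be sketched as follows. This is an illustration under stated assumptions: a plain mean squared error stands in for the distance, Python lists stand in for tensors, and `encode` is a stand-in for the diffusion model's text encoder. The actual loss, shapes, and cache format in the repository may differ.

```python
from typing import Callable, Dict, Iterable, List, Sequence


def precompute_embeddings(
    captions: Iterable[str],
    encode: Callable[[str], Sequence[float]],
) -> Dict[str, List[float]]:
    """Run the (expensive) text encoder once per caption and cache the result."""
    return {caption: list(encode(caption)) for caption in captions}


def mse_distance(signal_repr: Sequence[float], caption_embed: Sequence[float]) -> float:
    """Mean squared distance between a projected signal-token vector and a cached embedding."""
    if len(signal_repr) != len(caption_embed) or len(signal_repr) == 0:
        raise ValueError("vectors must be non-empty and of equal length")
    return sum((x - y) ** 2 for x, y in zip(signal_repr, caption_embed)) / len(signal_repr)
```

During training, only the lookup in the cache and the distance computation remain in the loop, so the text encoder does not need to stay in memory.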

Please run this command before the following training of NExT-GPT, where the produced embedding file will be saved at [./data/embed].

cd ./code/

python preprocess_embeddings.py ../data/T-X_pair_data/cc3m/cc3m_generation.json image ../data/embed/ stabilityai/stable-diffusion-2

Note of arguments:

- args[1]: path of caption file;

- args[2]: modality, which can be image, video, or audio;

- args[3]: saving path of embedding file;

- args[4]: corresponding pre-trained diffusion model name.
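The four positional arguments listed above can be expressed as an argparse interface. This is a sketch only: the argument names are assumptions chosen for readability, and the real preprocess_embeddings.py may read its arguments differently.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the four positional arguments described in the note above."""
    parser = argparse.ArgumentParser(
        description="Precompute caption embeddings (illustrative CLI sketch)."
    )
    parser.add_argument("caption_path", help="path of the caption file")
    parser.add_argument("modality", choices=["image", "video", "audio"],
                        help="modality of the captions")
    parser.add_argument("save_dir", help="saving path of the embedding file")
    parser.add_argument("model_name", help="pre-trained diffusion model name")
    return parser.parse_args(argv)
```

Restricting `modality` with `choices` makes a typo such as `images` fail immediately instead of after the encoder has been loaded.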

3.4. Training NExT-GPT [Back to Top]

First of all, please refer to the base configuration file [training_utils.py] for the basic system settings of all modules, and to nextgpt/dataset/catalog.py for the dataset configuration. The whole NExT-GPT training involves 3 steps:

- Step-1: Encoding-side LLM-centric Multimodal Alignment. This stage trains the input projection layer while freezing ImageBind, the LLM, and the output projection layer.

# Encoding-side LLM-centric Multimodal Alignment

bash scripts/pretrain_enc.sh

- Step-2: Decoding-side Instruction-following Alignment. This stage trains the output projection layers while freezing ImageBind, the LLM, and the input projection layers.

# Decoding-side Instruction-following Alignment

bash scripts/pretrain_dec.sh

- Step-3: Instruction Tuning. This stage instruction-tunes 1) the LLM via LoRA, 2) the input projection layer, and 3) the output projection layer on the instruction dataset.

# Instruction Tuning

bash scripts/finetune.sh
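The three training steps differ mainly in which components receive gradient updates. The table below restates that as code; the component names and the function `freeze_plan` are hypothetical labels for illustration, and treating the base LLM weights as frozen in Step-3 reflects standard LoRA behavior rather than anything read from the training scripts.

```python
from typing import Dict

COMPONENTS = ["imagebind", "llm", "llm_lora", "input_projection", "output_projection"]

# Components that are updated in each step, as described in the text above.
TRAINABLE = {
    1: {"input_projection"},
    2: {"output_projection"},
    3: {"llm_lora", "input_projection", "output_projection"},
}


def freeze_plan(stage: int) -> Dict[str, bool]:
    """Map each component to True if it is trained in the given step, else False."""
    if stage not in TRAINABLE:
        raise ValueError(f"unknown training step: {stage}")
    return {name: name in TRAINABLE[stage] for name in COMPONENTS}
```

For example, `freeze_plan(3)` marks the LoRA adapters and both projection layers as trainable while ImageBind and the base LLM weights stay frozen.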

4. Running NExT-GPT System [Back to Top]

First, load the pre-trained NExT-GPT system.

- Step-1: load Frozen parameters. Please refer to 3.1 Preparing Pre-trained Checkpoint.

- Step-2: load Tunable parameters. Please put the NExT-GPT system at ./checkpoints/nextgpt-v1.5-7b. You may either 1) use the parameters you trained yourself, or 2) download our checkpoints from Huggingface.

Upon completion of the checkpoint loading, you can run the prediction via:

python predict.py

5. Fine-tuning Your Own System [Back to Top]

You can define your own dataset by following base_dataset.py, and then register it in the dataset catalog in catalog.py, including the target and parameters.

- Multimodal Encoder: You can add your own multimodal encoder in the multimodal_encoder directory, and add the corresponding code in builder.py.

- Multimodal Decoder: You can add your own multimodal decoder in the multimodal_decoder directory, and modify the corresponding code in builder.py.

- Projector: You can design your own input and output projectors in the multimodal_projector directory.

You can pre-define the model, data, and training parameters in training_utils.py. Please refer to finetune.sh for fine-tuning your own model.
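A catalog entry with a "target" and "parameters" can be pictured as a dotted class path plus constructor arguments. The sketch below shows one way such a registry could work; `CATALOG`, `register`, and `build` are hypothetical names, and the actual schema in catalog.py may differ.

```python
import importlib
from typing import Any, Dict

# Hypothetical catalog: each entry names a class by dotted path ("target")
# and the keyword arguments used to construct it ("params").
CATALOG: Dict[str, Dict[str, Any]] = {}


def register(name: str, target: str, **params: Any) -> None:
    """Add a dataset entry to the catalog."""
    CATALOG[name] = {"target": target, "params": params}


def build(name: str) -> Any:
    """Import the target class of a catalog entry and instantiate it."""
    entry = CATALOG[name]
    module_name, _, class_name = entry["target"].rpartition(".")
    if not module_name:
        raise ValueError(f"target must be a dotted path, got {entry['target']!r}")
    cls = getattr(importlib.import_module(module_name), class_name)
    return cls(**entry["params"])
```

With this layout, adding a dataset only requires a new `register(...)` call that points at your dataset class; the training code looks it up by name.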

For any questions or feedback, feel free to contact Shengqiong Wu and Hao Fei.

If you find NExT-GPT useful in your research or applications, please kindly cite:

@article{wu2023nextgpt,

title={NExT-GPT: Any-to-Any Multimodal LLM},

author={Shengqiong Wu and Hao Fei and Leigang Qu and Wei Ji and Tat-Seng Chua},

journal = {CoRR},

volume = {abs/2309.05519},

year={2023}

}

You may refer to the related work that serves as the foundation for our framework and code repository: Vicuna, ImageBind, Stable Diffusion, AudioLDM, and Zeroscope. We also partially draw inspiration from PandaGPT, GILL, CoDi, Video-LLaMA, LLaVA, and MiniGPT-4. Thanks for their wonderful work.

This repository is under BSD 3-Clause License. NExT-GPT is a research project intended for non-commercial use only. One must NOT use the code of NExT-GPT for any illegal, harmful, violent, racist, or sexual purposes. One is strictly prohibited from engaging in any activity that will potentially violate these guidelines. Any potential commercial use of this code should be approved by the authors.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for NExT-GPT

Similar Open Source Tools

NExT-GPT

NExT-GPT is an end-to-end multimodal large language model that can process input and generate output in various combinations of text, image, video, and audio. It leverages existing pre-trained models and diffusion models with end-to-end instruction tuning. The repository contains code, data, and model weights for NExT-GPT, allowing users to work with different modalities and perform tasks like encoding, understanding, reasoning, and generating multimodal content.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

HuixiangDou

HuixiangDou is a **group chat** assistant based on LLM (Large Language Model). Advantages: 1. Design a two-stage pipeline of rejection and response to cope with group chat scenario, answer user questions without message flooding, see arxiv2401.08772 2. Low cost, requiring only 1.5GB memory and no need for training 3. Offers a complete suite of Web, Android, and pipeline source code, which is industrial-grade and commercially viable Check out the scenes in which HuixiangDou are running and join WeChat Group to try AI assistant inside. If this helps you, please give it a star ⭐

EasyInstruct

EasyInstruct is a Python package proposed as an easy-to-use instruction processing framework for Large Language Models (LLMs) like GPT-4, LLaMA, ChatGLM in your research experiments. EasyInstruct modularizes instruction generation, selection, and prompting, while also considering their combination and interaction.

LLM4Decompile

LLM4Decompile is an open-source large language model dedicated to decompilation of Linux x86_64 binaries, supporting GCC's O0 to O3 optimization levels. It focuses on assessing re-executability of decompiled code through HumanEval-Decompile benchmark. The tool includes models with sizes ranging from 1.3 billion to 33 billion parameters, available on Hugging Face. Users can preprocess C code into binary and assembly instructions, then decompile assembly instructions into C using LLM4Decompile. Ongoing efforts aim to expand capabilities to support more architectures and configurations, integrate with decompilation tools like Ghidra and Rizin, and enhance performance with larger training datasets.

ichigo

Ichigo is a local real-time voice AI tool that uses an early fusion technique to extend a text-based LLM to have native 'listening' ability. It is an open research experiment with improved multiturn capabilities and the ability to refuse processing inaudible queries. The tool is designed for open data, open weight, on-device Siri-like functionality, inspired by Meta's Chameleon paper. Ichigo offers a web UI demo and Gradio web UI for users to interact with the tool. It has achieved enhanced MMLU scores, stronger context handling, advanced noise management, and improved multi-turn capabilities for a robust user experience.

DB-GPT

DB-GPT is a personal database administrator that can solve database problems by reading documents, using various tools, and writing analysis reports. It is currently undergoing an upgrade. **Features:** * **Online Demo:** * Import documents into the knowledge base * Utilize the knowledge base for well-founded Q&A and diagnosis analysis of abnormal alarms * Send feedbacks to refine the intermediate diagnosis results * Edit the diagnosis result * Browse all historical diagnosis results, used metrics, and detailed diagnosis processes * **Language Support:** * English (default) * Chinese (add "language: zh" in config.yaml) * **New Frontend:** * Knowledgebase + Chat Q&A + Diagnosis + Report Replay * **Extreme Speed Version for localized llms:** * 4-bit quantized LLM (reducing inference time by 1/3) * vllm for fast inference (qwen) * Tiny LLM * **Multi-path extraction of document knowledge:** * Vector database (ChromaDB) * RESTful Search Engine (Elasticsearch) * **Expert prompt generation using document knowledge** * **Upgrade the LLM-based diagnosis mechanism:** * Task Dispatching -> Concurrent Diagnosis -> Cross Review -> Report Generation * Synchronous Concurrency Mechanism during LLM inference * **Support monitoring and optimization tools in multiple levels:** * Monitoring metrics (Prometheus) * Flame graph in code level * Diagnosis knowledge retrieval (dbmind) * Logical query transformations (Calcite) * Index optimization algorithms (for PostgreSQL) * Physical operator hints (for PostgreSQL) * Backup and Point-in-time Recovery (Pigsty) * **Continuously updated papers and experimental reports** This project is constantly evolving with new features. Don't forget to star ⭐ and watch 👀 to stay up to date.

RLAIF-V

RLAIF-V is a novel framework that aligns MLLMs in a fully open-source paradigm for super GPT-4V trustworthiness. It maximally exploits open-source feedback from high-quality feedback data and online feedback learning algorithm. Notable features include achieving super GPT-4V trustworthiness in both generative and discriminative tasks, using high-quality generalizable feedback data to reduce hallucination of different MLLMs, and exhibiting better learning efficiency and higher performance through iterative alignment.

bigcodebench

BigCodeBench is an easy-to-use benchmark for code generation with practical and challenging programming tasks. It aims to evaluate the true programming capabilities of large language models (LLMs) in a more realistic setting. The benchmark is designed for HumanEval-like function-level code generation tasks, but with much more complex instructions and diverse function calls. BigCodeBench focuses on the evaluation of LLM4Code with diverse function calls and complex instructions, providing precise evaluation & ranking and pre-generated samples to accelerate code intelligence research. It inherits the design of the EvalPlus framework but differs in terms of execution environment and test evaluation.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

EmbodiedScan

EmbodiedScan is a holistic multi-modal 3D perception suite designed for embodied AI. It introduces a multi-modal, ego-centric 3D perception dataset and benchmark for holistic 3D scene understanding. The dataset includes over 5k scans with 1M ego-centric RGB-D views, 1M language prompts, 160k 3D-oriented boxes spanning 760 categories, and dense semantic occupancy with 80 common categories. The suite includes a baseline framework named Embodied Perceptron, capable of processing multi-modal inputs for 3D perception tasks and language-grounded tasks.

TokenFormer

TokenFormer is a fully attention-based neural network architecture that leverages tokenized model parameters to enhance architectural flexibility. It aims to maximize the flexibility of neural networks by unifying token-token and token-parameter interactions through the attention mechanism. The architecture allows for incremental model scaling and has shown promising results in language modeling and visual modeling tasks. The codebase is clean, concise, easily readable, state-of-the-art, and relies on minimal dependencies.

ALMA

ALMA (Advanced Language Model-based Translator) is a many-to-many LLM-based translation model that utilizes a two-step fine-tuning process on monolingual and parallel data to achieve strong translation performance. ALMA-R builds upon ALMA models with LoRA fine-tuning and Contrastive Preference Optimization (CPO) for even better performance, surpassing GPT-4 and WMT winners. The repository provides ALMA and ALMA-R models, datasets, environment setup, evaluation scripts, training guides, and data information for users to leverage these models for translation tasks.

qa-mdt

This repository provides an implementation of QA-MDT, integrating state-of-the-art models for music generation. It offers a Quality-Aware Masked Diffusion Transformer for enhanced music generation. The code is based on various repositories like AudioLDM, PixArt-alpha, MDT, AudioMAE, and Open-Sora. The implementation allows for training and fine-tuning the model with different strategies and datasets. The repository also includes instructions for preparing datasets in LMDB format and provides a script for creating a toy LMDB dataset. The model can be used for music generation tasks, with a focus on quality injection to enhance the musicality of generated music.

OpenMusic

OpenMusic is a repository providing an implementation of QA-MDT, a Quality-Aware Masked Diffusion Transformer for music generation. The code integrates state-of-the-art models and offers training strategies for music generation. The repository includes implementations of AudioLDM, PixArt-alpha, MDT, AudioMAE, and Open-Sora. Users can train or fine-tune the model using different strategies and datasets. The model is well-pretrained and can be used for music generation tasks. The repository also includes instructions for preparing datasets, training the model, and performing inference. Contact information is provided for any questions or suggestions regarding the project.

MathVerse

MathVerse is an all-around visual math benchmark designed to evaluate the capabilities of Multi-modal Large Language Models (MLLMs) in visual math problem-solving. It collects high-quality math problems with diagrams to assess how well MLLMs can understand visual diagrams for mathematical reasoning. The benchmark includes 2,612 problems transformed into six versions each, contributing to 15K test samples. It also introduces a Chain-of-Thought (CoT) Evaluation strategy for fine-grained assessment of output answers.

For similar tasks

NExT-GPT

NExT-GPT is an end-to-end multimodal large language model that can process input and generate output in various combinations of text, image, video, and audio. It leverages existing pre-trained models and diffusion models with end-to-end instruction tuning. The repository contains code, data, and model weights for NExT-GPT, allowing users to work with different modalities and perform tasks like encoding, understanding, reasoning, and generating multimodal content.

ml-engineering

This repository provides a comprehensive collection of methodologies, tools, and step-by-step instructions for successful training of large language models (LLMs) and multi-modal models. It is a technical resource suitable for LLM/VLM training engineers and operators, containing numerous scripts and copy-n-paste commands to facilitate quick problem-solving. The repository is an ongoing compilation of the author's experiences training BLOOM-176B and IDEFICS-80B models, and currently focuses on the development and training of Retrieval Augmented Generation (RAG) models at Contextual.AI. The content is organized into six parts: Insights, Hardware, Orchestration, Training, Development, and Miscellaneous. It includes key comparison tables for high-end accelerators and networks, as well as shortcuts to frequently needed tools and guides. The repository is open to contributions and discussions, and is licensed under Attribution-ShareAlike 4.0 International.

distributed-llama

Distributed Llama is a tool that allows you to run large language models (LLMs) on weak devices or make powerful devices even more powerful by distributing the workload and dividing the RAM usage. It uses TCP sockets to synchronize the state of the neural network, and you can easily configure your AI cluster by using a home router. Distributed Llama supports models such as Llama 2 (7B, 13B, 70B) chat and non-chat versions, Llama 3, and Grok-1 (314B).

Awesome-LLMs-for-Video-Understanding

Awesome-LLMs-for-Video-Understanding is a repository dedicated to exploring Video Understanding with Large Language Models. It provides a comprehensive survey of the field, covering models, pretraining, instruction tuning, and hybrid methods. The repository also includes information on tasks, datasets, and benchmarks related to video understanding. Contributors are encouraged to add new papers, projects, and materials to enhance the repository.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

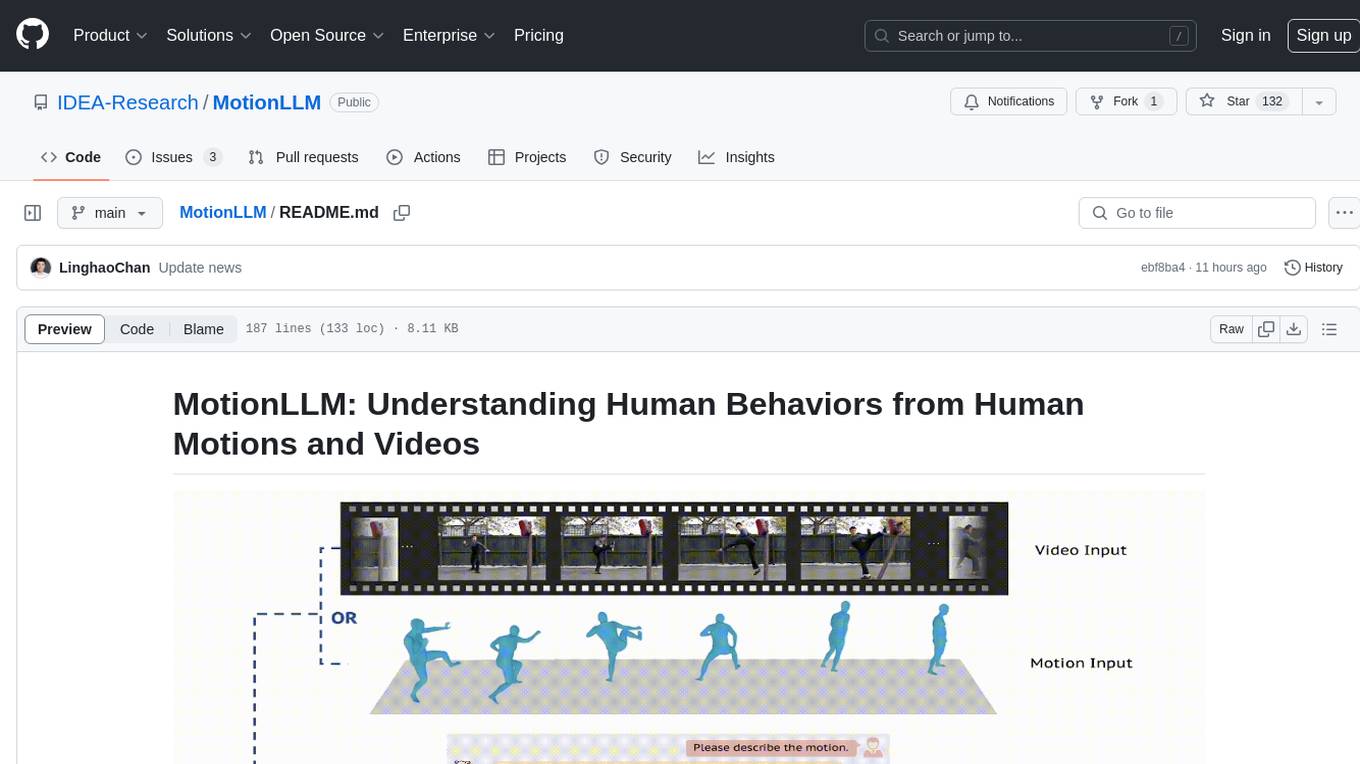

MotionLLM

MotionLLM is a framework for human behavior understanding that leverages Large Language Models (LLMs) to jointly model videos and motion sequences. It provides a unified training strategy, dataset MoVid, and MoVid-Bench for evaluating human behavior comprehension. The framework excels in captioning, spatial-temporal comprehension, and reasoning abilities.

LLMGA

LLMGA (Multimodal Large Language Model-based Generation Assistant) is a tool that leverages Large Language Models (LLMs) to assist users in image generation and editing. It provides detailed language generation prompts for precise control over Stable Diffusion (SD), resulting in more intricate and precise content in generated images. The tool curates a dataset for prompt refinement, similar image generation, inpainting & outpainting, and visual question answering. It offers a two-stage training scheme to optimize SD alignment and a reference-based restoration network to alleviate texture, brightness, and contrast disparities in image editing. LLMGA shows promising generative capabilities and enables wider applications in an interactive manner.

LLMs

LLMs is a Chinese large language model technology stack for practical use. It includes high-availability pre-training, SFT, and DPO preference alignment code framework. The repository covers pre-training data cleaning, high-concurrency framework, SFT dataset cleaning, data quality improvement, and security alignment work for Chinese large language models. It also provides open-source SFT dataset construction, pre-training from scratch, and various tools and frameworks for data cleaning, quality optimization, and task alignment.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.