gemini-next-chat

Deploy your private Gemini application for free with one click, supporting Gemini 1.5, Gemini 2.0 models.

Stars: 1075

Gemini Next Chat is an open-source, extensible high-performance Gemini chatbot framework that supports one-click free deployment of private Gemini web applications. It provides a simple interface with image recognition and voice conversation, supports multi-modal models, talk mode, visual recognition, assistant market, support plugins, conversation list, full Markdown support, privacy and security, PWA support, well-designed UI, fast loading speed, static deployment, and multi-language support.

README:

Deploy your private Gemini application for free with one click, supporting Gemini 1.5 Pro, Gemini 1.5 Flash, Gemini Pro and Gemini Pro Vision models.

English · 简体中文

Web App / Desktop App / Issues

Share GeminiNextChat Repository

Simple interface, supports image recognition and voice conversation

Supports Gemini 1.5 and Gemini 2.0 multimodal models

Support plugins, with built-in Web search, Web reader, Arxiv search, Weather and other practical plugins

A cross-platform application client that supports a permanent menu bar, doubling your work efficiency

Note: If you encounter problems during the use of the project, you can check the known problems and solutions of FAQ.

- Deploy for free with one-click on Vercel in under 1 minute

- Provides a very small (~4MB) cross-platform client (Windows/MacOS/Linux), can stay in the menu bar to improve office efficiency

- Supports multi-modal models and can understand images, videos, audios and some text documents

- Talk mode: Let you talk directly to Gemini

- Visual recognition allows Gemini to understand the content of the picture

- Assistant market with hundreds of selected system instruction

- Support plugins, with built-in Web search, Web reader, Arxiv search, Weather and other practical plugins

- Conversation list, so you can keep track of important conversations or discuss different topics with Gemini

- Artifact support, allowing you to modify the conversation content more elegantly

- Full Markdown support: KaTex formulas, code highlighting, Mermaid charts, etc.

- Automatically compress contextual chat records to save Tokens while supporting very long conversations

- Privacy and security, all data is saved locally in the user's browser

- Support PWA, can run as an application

- Well-designed UI, responsive design, supports dark mode

- Extremely fast first screen loading speed, supporting streaming response

- Static deployment supports deployment on any website service that supports static pages, such as Github Page, Cloudflare, Vercel, etc.

- Multi-language support: English、简体中文、繁体中文、日本語、한국어、Español、Deutsch、Français、Português、Русский and العربية

- [x] Reconstruct the topic square and introduce Prompt list

- [x] Use tauri to package desktop applications

- [x] Implementation based on functionCall plug-in

- [x] Support conversation list

- [x] Support conversation export features

- [ ] Enable Multimodal Live API

-

Get Gemini API Key

-

One-click deployment of the project, you can choose to deploy to Vercel or Cloudflare

-

Start using

Currently the project supports deployment to Cloudflare, but you need to follow How to deploy to Cloudflare Page to do it.

If you want to update instantly, you can check out the GitHub documentation to learn how to synchronize a forked project with upstream code.

You can star or watch this project or follow author to get release notifications in time.

Your Gemini api key. If you need to enable the server api, this is required.

Supports multiple keys, each key is separated by ,, i.e. key1,key2,key3

Default:

https://generativelanguage.googleapis.com

Examples:

http://your-gemini-proxy.com

Override Gemini api request base url. **To avoid server-side proxy url leaks, links in front-end pages will not be overwritten. **

Custom model list, default: all.

File upload size limit. There is no file size limit by default.

Access password.

Injected script code can be used for statistics or error tracking.

Only used to set the page base path in static deployment mode.

This project provides limited access control. Please add an environment variable named ACCESS_PASSWORD on the vercel environment variables page.

After adding or modifying this environment variable, please redeploy the project for the changes to take effect.

This project supports custom model lists. Please add an environment variable named NEXT_PUBLIC_GEMINI_MODEL_LIST in the .env file or environment variables page.

The default model list is represented by all, and multiple models are separated by ,.

If you need to add a new model, please directly write the model name all,new-model-name, or use the + symbol plus the model name to add, that is, all,+new-model-name.

If you want to remove a model from the model list, use the - symbol followed by the model name to indicate removal, i.e. all,-existing-model-name. If you want to remove the default model list, you can use -all.

If you want to set a default model, you can use the @ symbol plus the model name to indicate the default model, that is, all,@default-model-name.

If you have not installed pnpm

npm install -g pnpm# 1. install nodejs and yarn first

# 2. config local variables, please change `.env.example` to `.env` or `.env.local`

# 3. run

pnpm install

pnpm devNodeJS >= 18, Docker >= 20

The Docker version needs to be 20 or above, otherwise it will prompt that the image cannot be found.

⚠️ Note: Most of the time, the docker version will lag behind the latest version by 1 to 2 days, so the "update exists" prompt will continue to appear after deployment, which is normal.

docker pull xiangfa/talk-with-gemini:latest

docker run -d --name talk-with-gemini -p 5481:3000 xiangfa/talk-with-geminiYou can also specify additional environment variables:

docker run -d --name talk-with-gemini \

-p 5481:3000 \

-e GEMINI_API_KEY=AIzaSy... \

-e ACCESS_PASSWORD=your-password \

xiangfa/talk-with-geminiIf you need to specify other environment variables, please add -e key=value to the above command to specify it.

Deploy using docker-compose.yml:

version: '3.9'

services:

talk-with-gemini:

image: xiangfa/talk-with-gemini

container_name: talk-with-gemini

environment:

- GEMINI_API_KEY=AIzaSy...

- ACCESS_PASSWORD=your-password

ports:

- 5481:3000You can also build a static page version directly, and then upload all files in the out directory to any website service that supports static pages, such as Github Page, Cloudflare, Vercel, etc..

pnpm build:exportIf you deploy the project in a subdirectory and encounter resource loading failures when accessing, please add EXPORT_BASE_PATH=/path/project in the .env file or variable setting page.

-

Use Cloudflare AI Gateway to forward APIs. Currently, Cloudflare AI Gateway already supports Google Vertex AI related APIs. For how to use it, please refer to How to Use Cloudflare AI Gateway. This solution is fast and stable, and is recommended.

-

Use Cloudflare Worker for API proxy forwarding. For detailed settings, please refer to How to Use Cloudflare Worker Proxy API. Note that this solution may not work properly in some cases.

Currently, the two kind models Gemini 1.5 and Gemini 2.0 support most images, audios, videos and some text files. For details. For other document types, we will try to use LangChain.js later.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gemini-next-chat

Similar Open Source Tools

gemini-next-chat

Gemini Next Chat is an open-source, extensible high-performance Gemini chatbot framework that supports one-click free deployment of private Gemini web applications. It provides a simple interface with image recognition and voice conversation, supports multi-modal models, talk mode, visual recognition, assistant market, support plugins, conversation list, full Markdown support, privacy and security, PWA support, well-designed UI, fast loading speed, static deployment, and multi-language support.

RWKV-Runner

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

esp-ai

ESP-AI provides a complete AI conversation solution for your development board, including IAT+LLM+TTS integration solutions for ESP32 series development boards. It can be injected into projects without affecting existing ones. By providing keys from platforms like iFlytek, Jiling, and local services, you can run the services without worrying about interactions between services or between development boards and services. The project's server-side code is based on Node.js, and the hardware code is based on Arduino IDE.

PySpur

PySpur is a graph-based editor designed for LLM workflows, offering modular building blocks for easy workflow creation and debugging at node level. It allows users to evaluate final performance and promises self-improvement features in the future. PySpur is easy-to-hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies, making it a versatile tool for workflow management in the field of AI and machine learning.

gpt-translate

Markdown Translation BOT is a GitHub action that translates markdown files into multiple languages using various AI models. It supports markdown, markdown-jsx, and json files only. The action can be executed by individuals with write permissions to the repository, preventing API abuse by non-trusted parties. Users can set up the action by providing their API key and configuring the workflow settings. The tool allows users to create comments with specific commands to trigger translations and automatically generate pull requests or add translated files to existing pull requests. It supports multiple file translations and can interpret any language supported by GPT-4 or GPT-3.5.

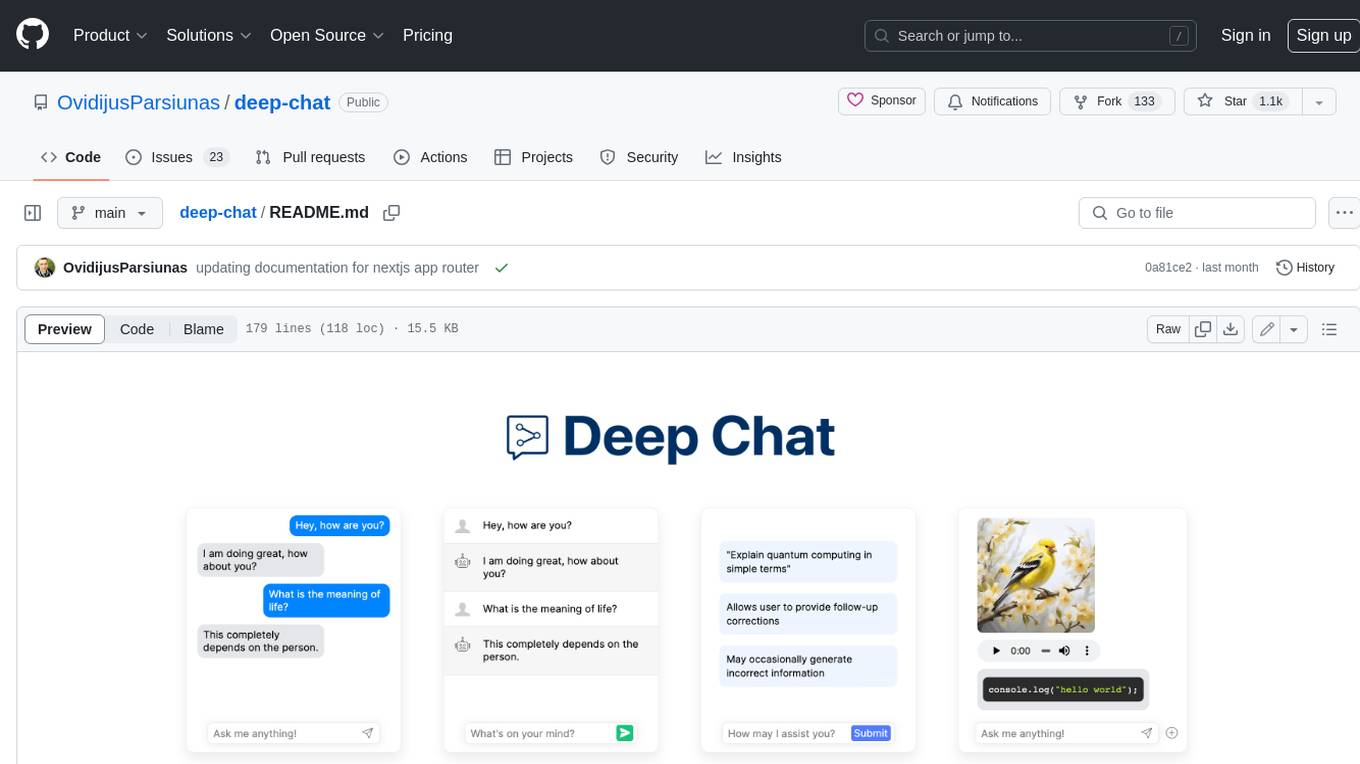

deep-chat

Deep Chat is a fully customizable AI chat component that can be injected into your website with minimal to no effort. Whether you want to create a chatbot that leverages popular APIs such as ChatGPT or connect to your own custom service, this component can do it all! Explore deepchat.dev to view all of the available features, how to use them, examples and more!

airi

Airi is a VTuber project heavily inspired by Neuro-sama. It is capable of various functions such as playing Minecraft, chatting in Telegram and Discord, audio input from browser and Discord, client side speech recognition, VRM and Live2D model support with animations, and more. The project also includes sub-projects like unspeech, hfup, Drizzle ORM driver for DuckDB WASM, and various other tools. Airi uses models like whisper-large-v3-turbo from Hugging Face and is similar to projects like z-waif, amica, eliza, AI-Waifu-Vtuber, and AIVTuber. The project acknowledges contributions from various sources and implements packages to interact with LLMs and models.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

aimeos

Aimeos is a full-featured e-commerce platform that is ultra-fast, cloud-native, and API-first. It offers a wide range of features including JSON REST API, GraphQL API, multi-vendor support, various product types, subscriptions, multiple payment gateways, admin backend, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. It is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and various cloud environments. Aimeos is designed for scalability, security, and performance, catering to a diverse range of e-commerce needs.

EmotiVoice

EmotiVoice is a powerful and modern open-source text-to-speech engine that supports emotional synthesis, enabling users to create speech with a wide range of emotions such as happy, excited, sad, and angry. It offers over 2000 different voices in both English and Chinese. Users can access EmotiVoice through an easy-to-use web interface or a scripting interface for batch generation of results. The tool is continuously evolving with new features and updates, prioritizing community input and user feedback.

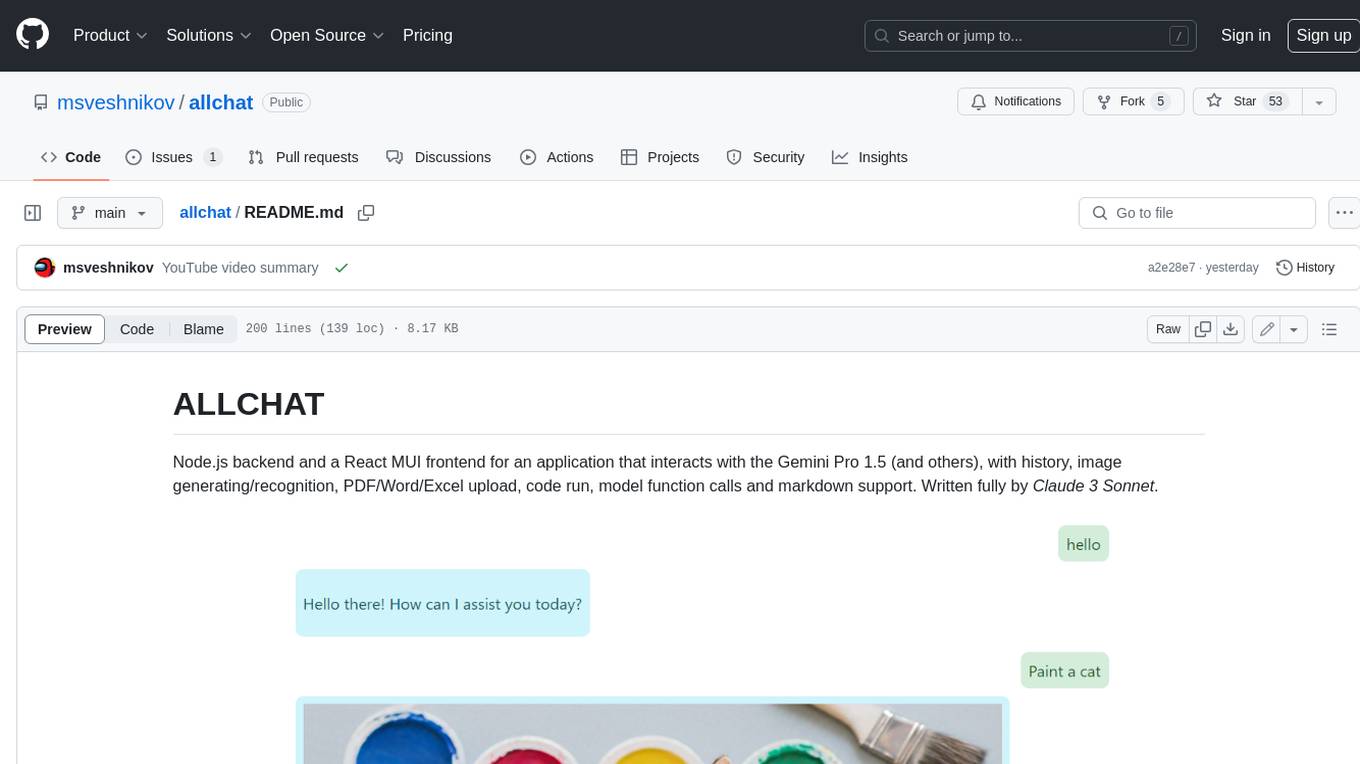

allchat

ALLCHAT is a Node.js backend and React MUI frontend for an application that interacts with the Gemini Pro 1.5 (and others), with history, image generating/recognition, PDF/Word/Excel upload, code run, model function calls and markdown support. It is a comprehensive tool that allows users to connect models to the world with Web Tools, run locally, deploy using Docker, configure Nginx, and monitor the application using a dockerized monitoring solution (Loki+Grafana).

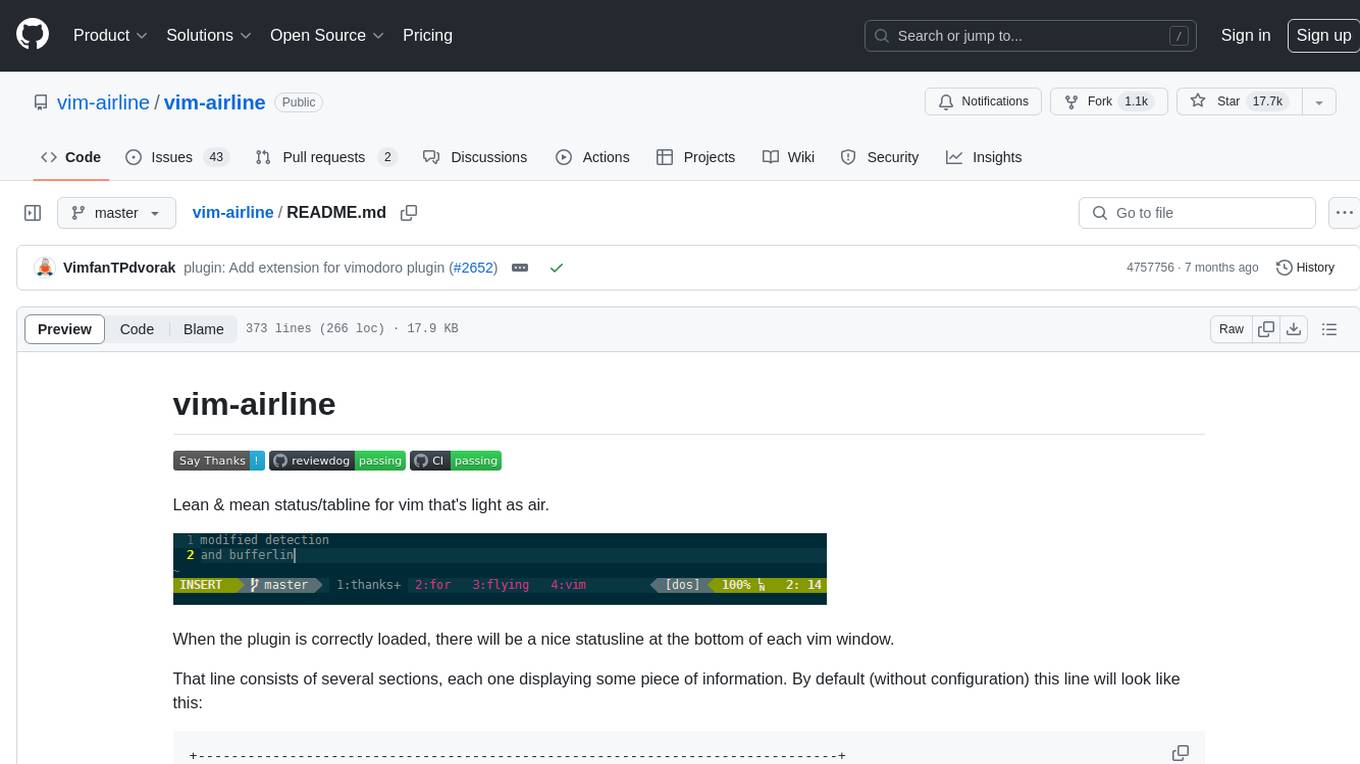

vim-airline

Vim-airline is a lean and mean status/tabline plugin for Vim that provides a nice statusline at the bottom of each Vim window. It consists of several sections displaying information such as mode, environment status, filename, filetype, file encoding, and current position in the file. The plugin is highly customizable and integrates with various plugins, providing a tiny core with extensibility in mind. It is optimized for speed, supports multiple themes, and integrates seamlessly with other plugins. Vim-airline is written in 100% Vimscript, eliminating the need for Python. The plugin aims to be stable and includes a unit testing suite for reliability.

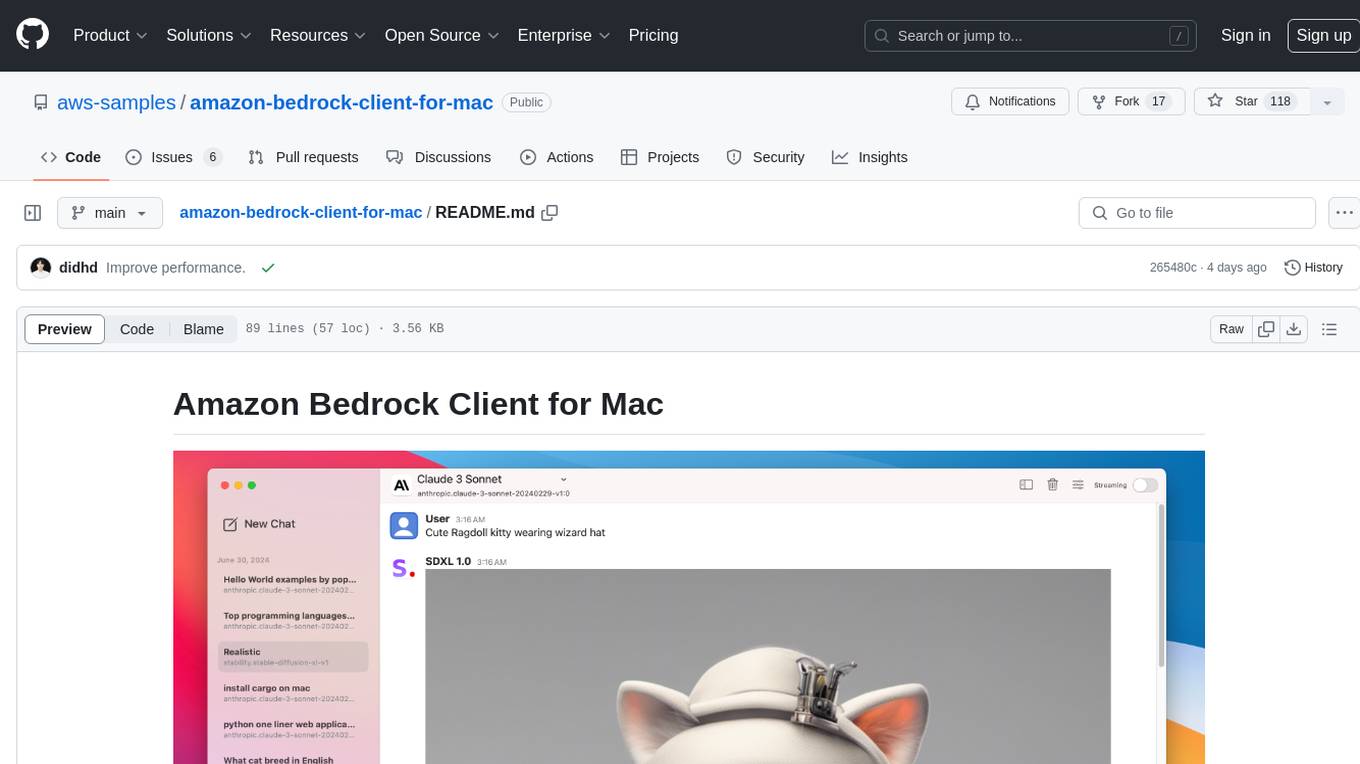

amazon-bedrock-client-for-mac

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop. It provides seamless interaction with multiple Amazon Bedrock models, real-time chat interface, easy model switching, support for various AI tasks, and native Dark Mode support. Built with SwiftUI for optimal performance and modern UI.

svelte-commerce

Svelte Commerce is an open-source frontend for eCommerce, utilizing a PWA and headless approach with a modern JS stack. It supports integration with various eCommerce backends like MedusaJS, Woocommerce, Bigcommerce, and Shopify. The API flexibility allows seamless connection with third-party tools such as payment gateways, POS systems, and AI services. Svelte Commerce offers essential eCommerce features, is both SSR and SPA, superfast, and free to download and modify. Users can easily deploy it on Netlify or Vercel with zero configuration. The tool provides features like headless commerce, authentication, cart & checkout, TailwindCSS styling, server-side rendering, proxy + API integration, animations, lazy loading, search functionality, faceted filters, and more.

ChatTTS

ChatTTS is a generative speech model optimized for dialogue scenarios, providing natural and expressive speech synthesis with fine-grained control over prosodic features. It supports multiple speakers and surpasses most open-source TTS models in terms of prosody. The model is trained with 100,000+ hours of Chinese and English audio data, and the open-source version on HuggingFace is a 40,000-hour pre-trained model without SFT. The roadmap includes open-sourcing additional features like VQ encoder, multi-emotion control, and streaming audio generation. The tool is intended for academic and research use only, with precautions taken to limit potential misuse.

quickvid

QuickVid is an open-source video summarization tool that uses AI to generate summaries of YouTube videos. It is built with Whisper, GPT, LangChain, and Supabase. QuickVid can be used to save time and get the essence of any YouTube video with intelligent summarization.

For similar tasks

dify-helm

Deploy langgenius/dify, an LLM based chat bot app on kubernetes with helm chart.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

ChatGPT-Telegram-Bot

The ChatGPT Telegram Bot is a powerful Telegram bot that utilizes various GPT models, including GPT3.5, GPT4, GPT4 Turbo, GPT4 Vision, DALL·E 3, Groq Mixtral-8x7b/LLaMA2-70b, and Claude2.1/Claude3 opus/sonnet API. It enables users to engage in efficient conversations and information searches on Telegram. The bot supports multiple AI models, online search with DuckDuckGo and Google, user-friendly interface, efficient message processing, document interaction, Markdown rendering, and convenient deployment options like Zeabur, Replit, and Docker. Users can set environment variables for configuration and deployment. The bot also provides Q&A functionality, supports model switching, and can be deployed in group chats with whitelisting. The project is open source under GPLv3 license.

LLM_AppDev-HandsOn

This repository showcases how to build a simple LLM-based chatbot for answering questions based on documents using retrieval augmented generation (RAG) technique. It also provides guidance on deploying the chatbot using Podman or on the OpenShift Container Platform. The workshop associated with this repository introduces participants to LLMs & RAG concepts and demonstrates how to customize the chatbot for specific purposes. The software stack relies on open-source tools like streamlit, LlamaIndex, and local open LLMs via Ollama, making it accessible for GPU-constrained environments.

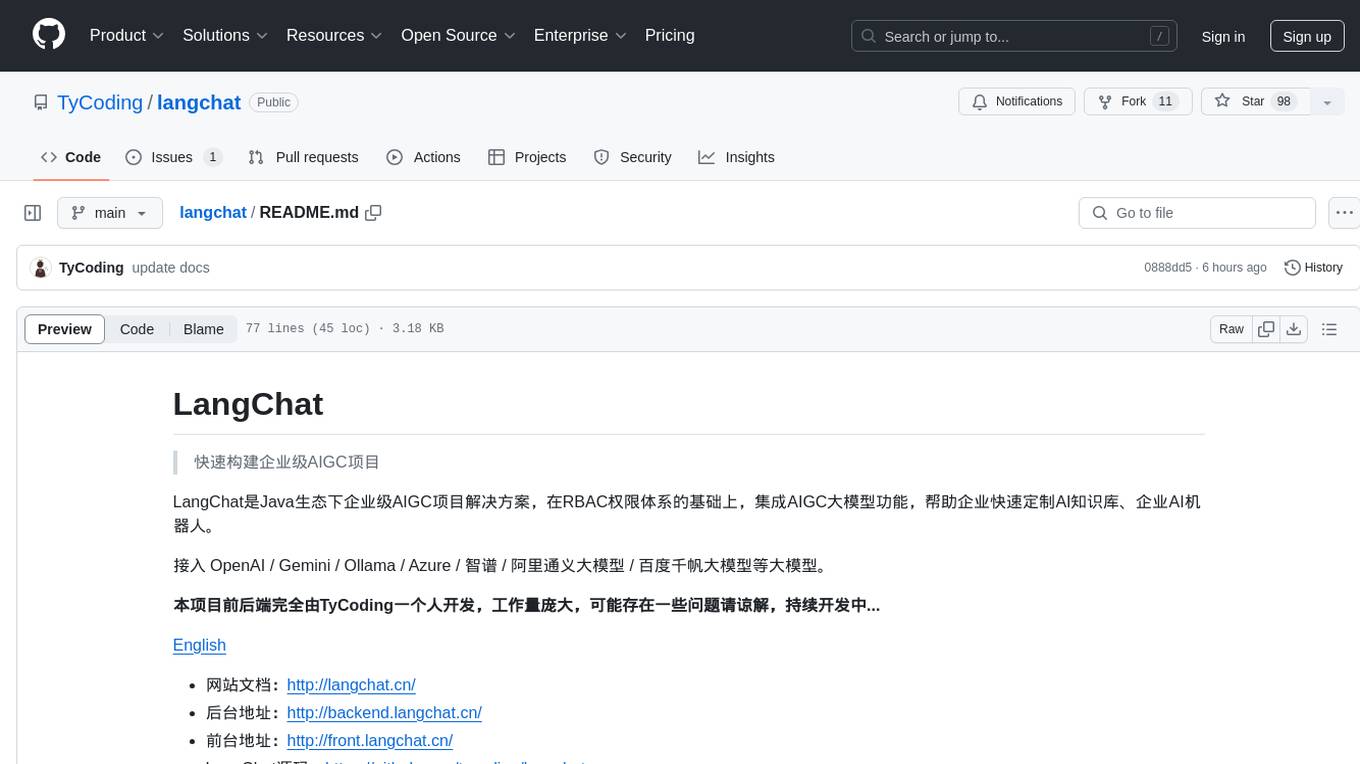

langchat

LangChat is an enterprise AIGC project solution in the Java ecosystem. It integrates AIGC large model functionality on top of the RBAC permission system to help enterprises quickly customize AI knowledge bases and enterprise AI robots. It supports integration with various large models such as OpenAI, Gemini, Ollama, Azure, Zhifu, Alibaba Tongyi, Baidu Qianfan, etc. The project is developed solely by TyCoding and is continuously evolving. It features multi-modality, dynamic configuration, knowledge base support, advanced RAG capabilities, function call customization, multi-channel deployment, workflows visualization, AIGC client application, and more.

ai-sdk-chrome-ai

The ai-sdk-chrome-ai repository is an open-source chatbot built with Next.js, the Vercel AI SDK, and the Chrome AI provider. It features Next.js App Router, Vercel AI SDK for interacting with the Gemini Nano model, shadcn/ui, Tailwind CSS styling, and Radix UI for headless component primitives. Users can deploy their own version of the chatbot to Vercel with one click and run it locally by installing dependencies and running the dev server. The repository provides a template for creating and customizing a chatbot powered by AI technology.

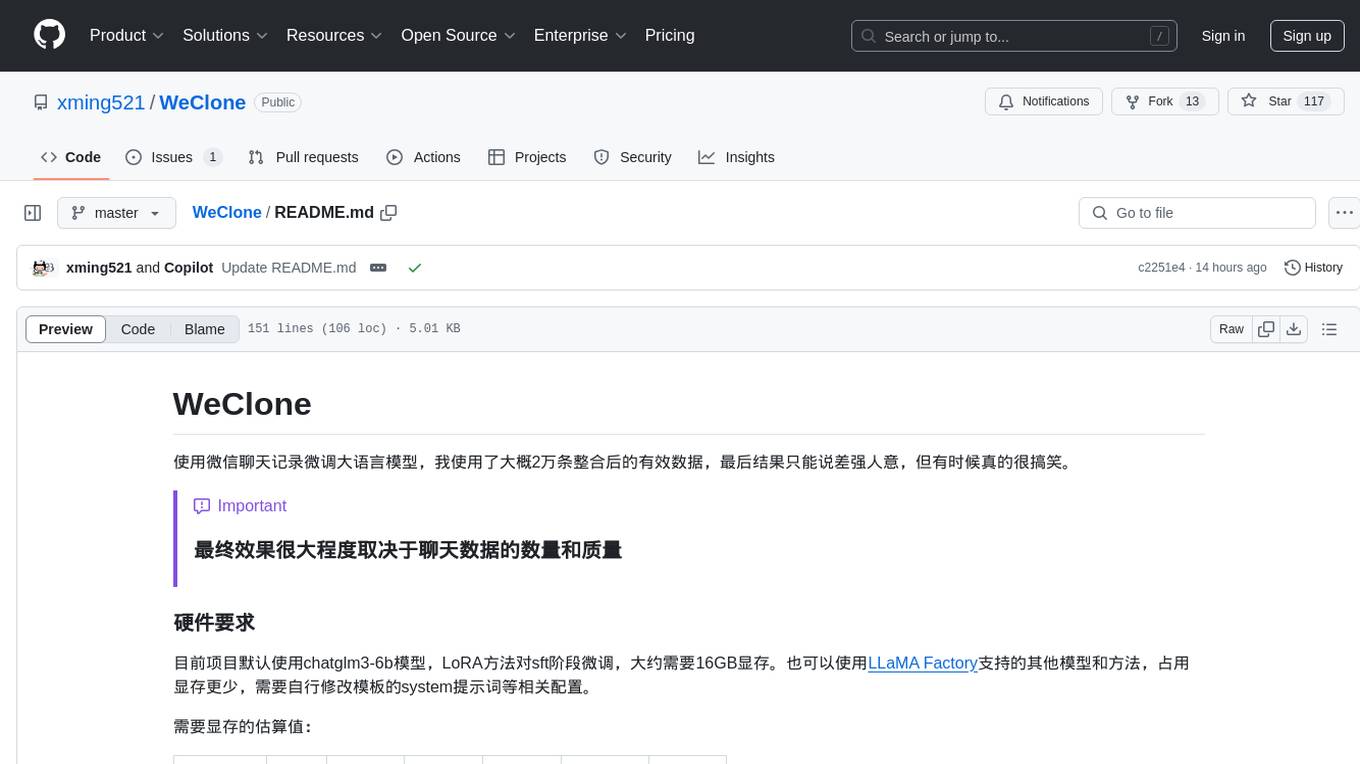

WeClone

WeClone is a tool that fine-tunes large language models using WeChat chat records. It utilizes approximately 20,000 integrated and effective data points, resulting in somewhat satisfactory outcomes that are occasionally humorous. The tool's effectiveness largely depends on the quantity and quality of the chat data provided. It requires a minimum of 16GB of GPU memory for training using the default chatglm3-6b model with LoRA method. Users can also opt for other models and methods supported by LLAMA Factory, which consume less memory. The tool has specific hardware and software requirements, including Python, Torch, Transformers, Datasets, Accelerate, and other optional packages like CUDA and Deepspeed. The tool facilitates environment setup, data preparation, data preprocessing, model downloading, parameter configuration, model fine-tuning, and inference through a browser demo or API service. Additionally, it offers the ability to deploy a WeChat chatbot, although users should be cautious due to the risk of account suspension by WeChat.

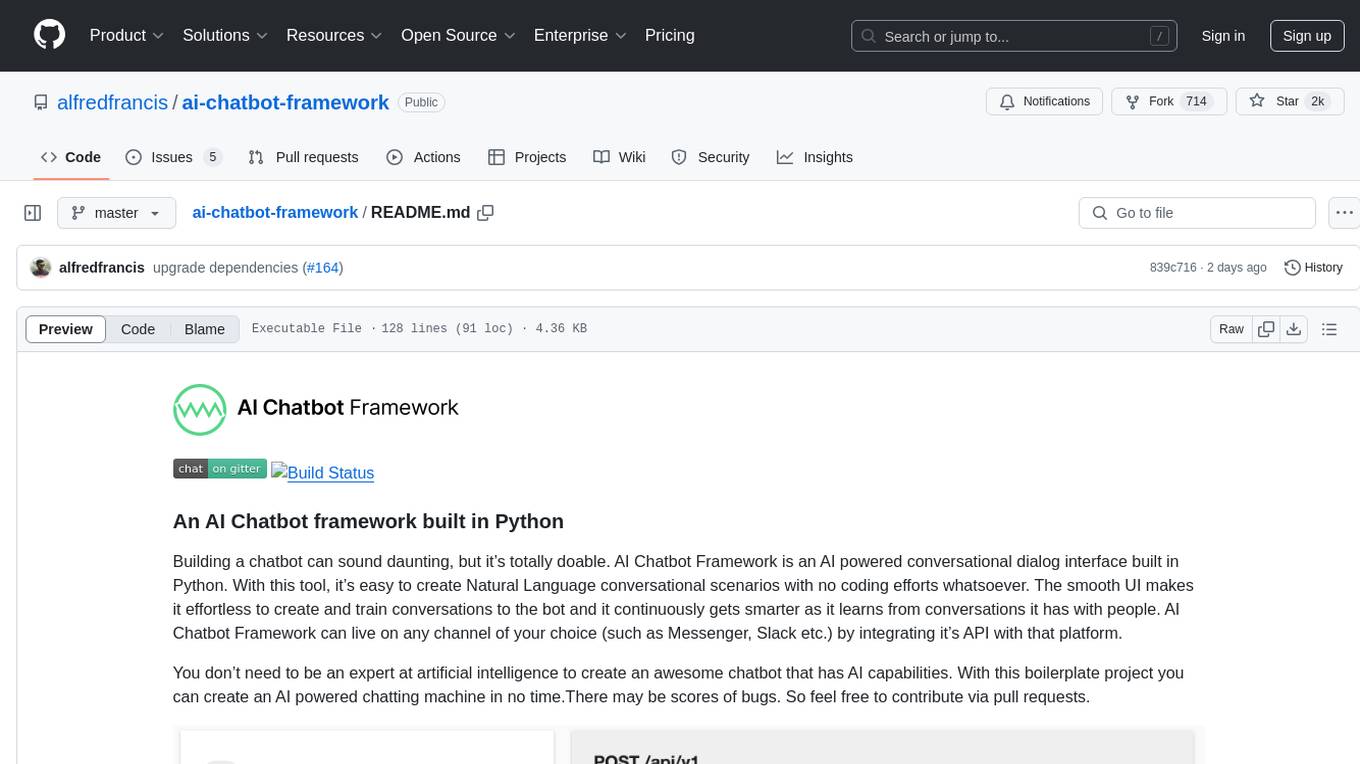

ai-chatbot-framework

An AI Chatbot framework built in Python. It allows users to easily create Natural Language conversational scenarios with no coding efforts. The tool continuously learns from conversations to improve its capabilities. It can be integrated with various channels like Messenger and Slack. Users can create AI-powered chatbots without expertise in artificial intelligence.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.