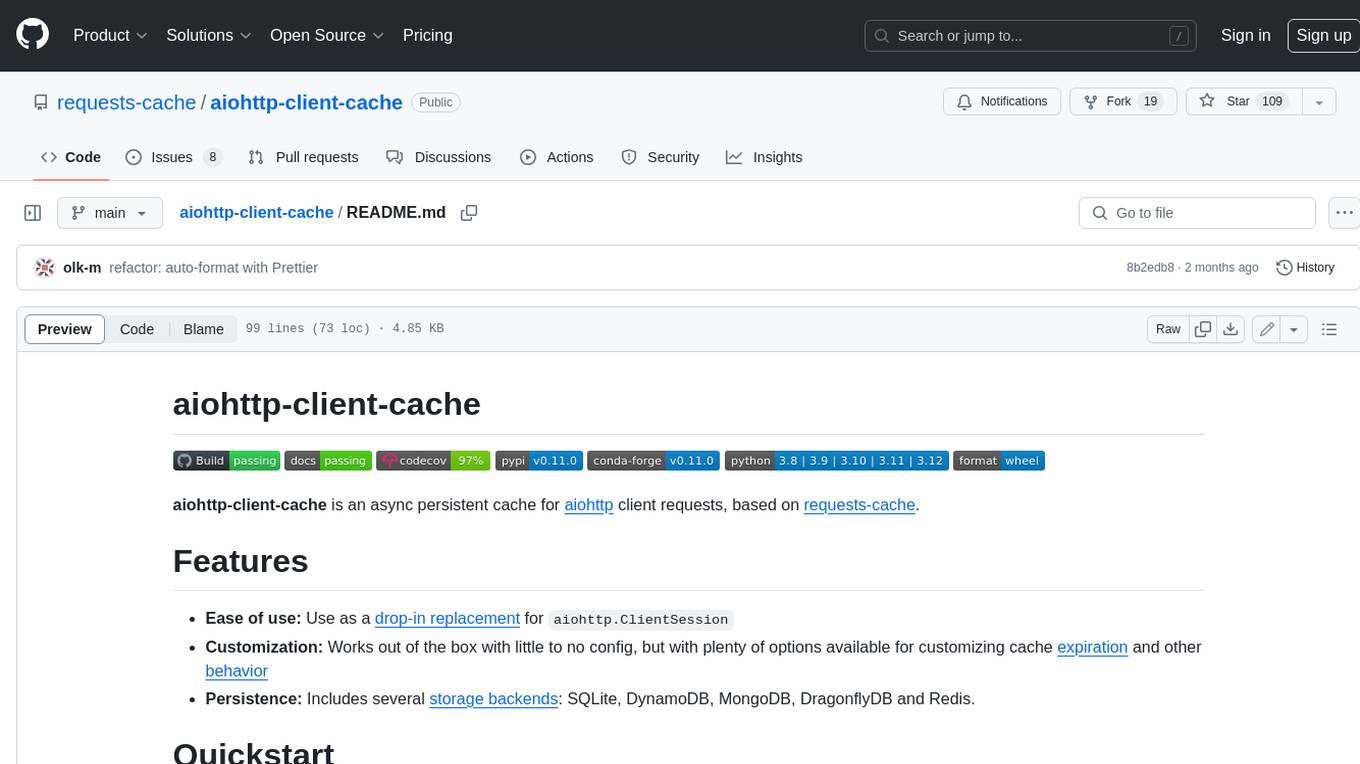

aiohttp-client-cache

An async persistent cache for aiohttp requests

Stars: 118

aiohttp-client-cache is an asynchronous persistent cache for aiohttp client requests, based on requests-cache. It is easy to use, customizable, and persistent, with several storage backends available, including SQLite, DynamoDB, MongoDB, DragonflyDB, and Redis.

README:

aiohttp-client-cache is an async persistent cache for aiohttp client requests, based on requests-cache.

-

Ease of use: Use as a drop-in replacement

for

aiohttp.ClientSession - Customization: Works out of the box with little to no config, but with plenty of options available for customizing cache expiration and other behavior

- Persistence: Includes several storage backends: SQLite, DynamoDB, MongoDB, DragonflyDB and Redis.

First, install with pip (python 3.8+ required):

pip install aiohttp-client-cache[all]Note:

Adding [all] will install optional dependencies for all supported backends. When adding this

library to your application, you can include only the dependencies you actually need; see individual

backend docs and pyproject.toml

for details.

Next, use aiohttp_client_cache.CachedSession in place of aiohttp.ClientSession. To briefly demonstrate how to use it:

Replace this:

from aiohttp import ClientSession

async with ClientSession() as session:

await session.get('http://httpbin.org/delay/1')With this:

from aiohttp_client_cache import CachedSession, SQLiteBackend

async with CachedSession(cache=SQLiteBackend('demo_cache')) as session:

await session.get('http://httpbin.org/delay/1')The URL in this example adds a delay of 1 second, simulating a slow or rate-limited website.

With caching, the response will be fetched once, saved to demo_cache.sqlite, and subsequent

requests will return the cached response near-instantly.

Several options are available to customize caching behavior. This example demonstrates a few of them:

# fmt: off

from aiohttp_client_cache import SQLiteBackend

cache = SQLiteBackend(

cache_name='~/.cache/aiohttp-requests.db', # For SQLite, this will be used as the filename

expire_after=60*60, # By default, cached responses expire in an hour

urls_expire_after={'*.fillmurray.com': -1}, # Requests for any subdomain on this site will never expire

allowed_codes=(200, 418), # Cache responses with these status codes

allowed_methods=['GET', 'POST'], # Cache requests with these HTTP methods

include_headers=True, # Cache requests with different headers separately

ignored_params=['auth_token'], # Keep using the cached response even if this param changes

timeout=2.5, # Connection timeout for SQLite backend

)To learn more, see:

If there is a feature you want, if you've discovered a bug, or if you have other general feedback, please create an issue for it!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiohttp-client-cache

Similar Open Source Tools

aiohttp-client-cache

aiohttp-client-cache is an asynchronous persistent cache for aiohttp client requests, based on requests-cache. It is easy to use, customizable, and persistent, with several storage backends available, including SQLite, DynamoDB, MongoDB, DragonflyDB, and Redis.

scalene

Scalene is a high-performance CPU, GPU, and memory profiler for Python that provides detailed information and runs faster than many other profilers. It incorporates AI-powered proposed optimizations, allowing users to generate optimization suggestions by clicking on specific lines or regions of code. Scalene separates time spent in Python from native code, highlights hotspots, and identifies memory usage per line. It supports GPU profiling on NVIDIA-based systems and detects memory leaks. Users can generate reduced profiles, profile specific functions using decorators, and suspend/resume profiling for background processes. Scalene is available as a pip or conda package and works on various platforms. It offers features like profiling at the line level, memory trends, copy volume reporting, and leak detection.

mmore

MMORE is an open-source, end-to-end pipeline for ingesting, processing, indexing, and retrieving knowledge from various file types such as PDFs, Office docs, images, audio, video, and web pages. It standardizes content into a unified multimodal format, supports distributed CPU/GPU processing, and offers hybrid dense+sparse retrieval with an integrated RAG service through CLI and APIs.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

SciPIP

SciPIP is a scientific paper idea generation tool powered by a large language model (LLM) designed to assist researchers in quickly generating novel research ideas. It conducts a literature review based on user-provided background information and generates fresh ideas for potential studies. The tool is designed to help researchers in various fields by providing a GUI environment for idea generation, supporting NLP, multimodal, and CV fields, and allowing users to interact with the tool through a web app or terminal. SciPIP uses Neo4j as its database and provides functionalities for generating new ideas, fetching papers, and constructing the database.

node-llama-cpp

node-llama-cpp is a tool that allows users to run AI models locally on their machines. It provides pre-built bindings with the option to build from source using cmake. Users can interact with text generation models, chat with models using a chat wrapper, and force models to generate output in a parseable format like JSON. The tool supports Metal and CUDA, offers CLI functionality for chatting with models without coding, and ensures up-to-date compatibility with the latest version of llama.cpp. Installation includes pre-built binaries for macOS, Linux, and Windows, with the option to build from source if binaries are not available for the platform.

Easy-Translate

Easy-Translate is a script designed for translating large text files with a single command. It supports various models like M2M100, NLLB200, SeamlessM4T, LLaMA, and Bloom. The tool is beginner-friendly and offers seamless and customizable features for advanced users. It allows acceleration on CPU, multi-CPU, GPU, multi-GPU, and TPU, with support for different precisions and decoding strategies. Easy-Translate also provides an evaluation script for translations. Built on HuggingFace's Transformers and Accelerate library, it supports prompt usage and loading huge models efficiently.

labo

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

lunary

Lunary is an open-source observability and prompt platform for Large Language Models (LLMs). It provides a suite of features to help AI developers take their applications into production, including analytics, monitoring, prompt templates, fine-tuning dataset creation, chat and feedback tracking, and evaluations. Lunary is designed to be usable with any model, not just OpenAI, and is easy to integrate and self-host.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

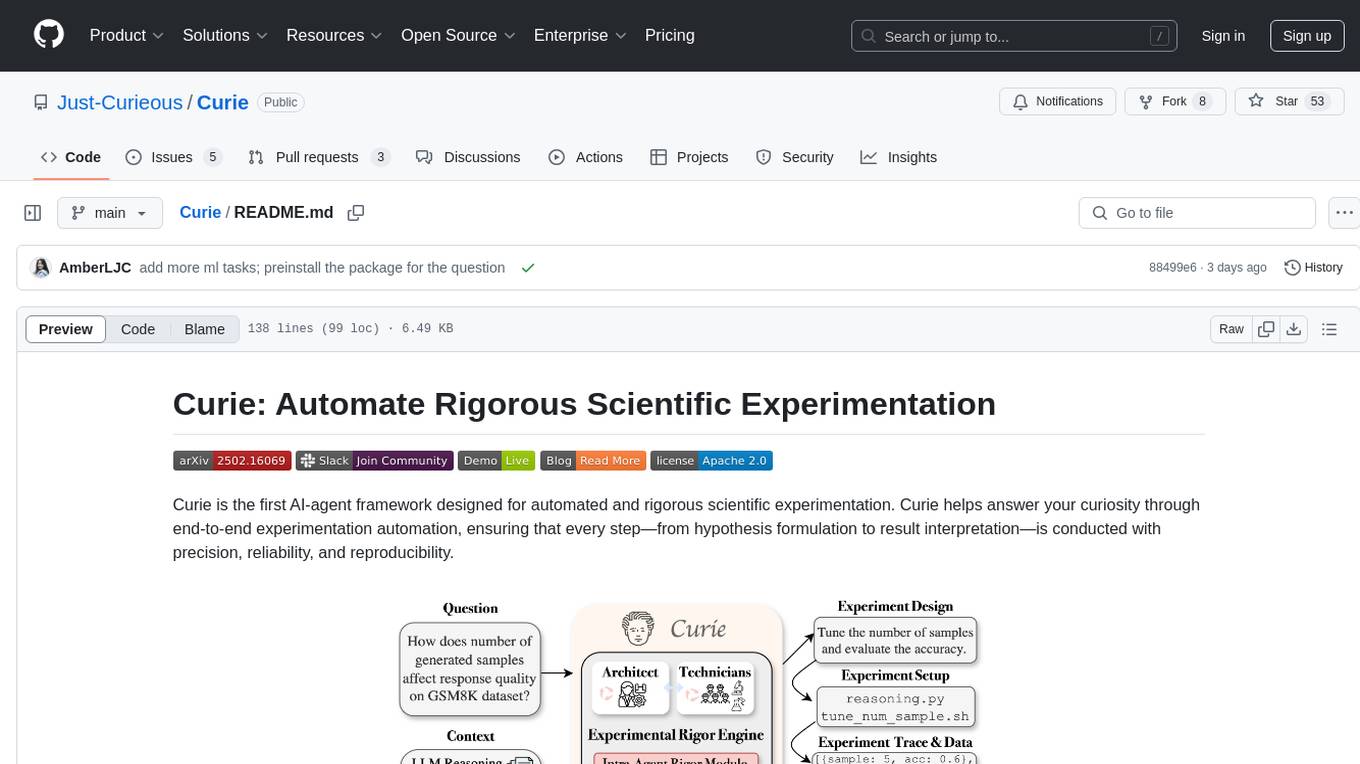

Curie

Curie is an AI-agent framework designed for automated and rigorous scientific experimentation. It automates end-to-end workflow management, ensures methodical procedure, reliability, and interpretability, and supports ML research, system analysis, and scientific discovery. It provides a benchmark with questions from 4 Computer Science domains. Users can customize experiment agents and adapt to their own tasks by configuring base_config.json. Curie is suitable for hyperparameter tuning, algorithm behavior analysis, system performance benchmarking, and automating computational simulations.

dLLM-RL

dLLM-RL is a revolutionary reinforcement learning framework designed for Diffusion Large Language Models. It supports various models with diverse structures, offers inference acceleration, RL training capabilities, and SFT functionalities. The tool introduces TraceRL for trajectory-aware RL and diffusion-based value models for optimization stability. Users can download and try models like TraDo-4B-Instruct and TraDo-8B-Instruct. The tool also provides support for multi-node setups and easy building of reinforcement learning methods. Additionally, it offers supervised fine-tuning strategies for different models and tasks.

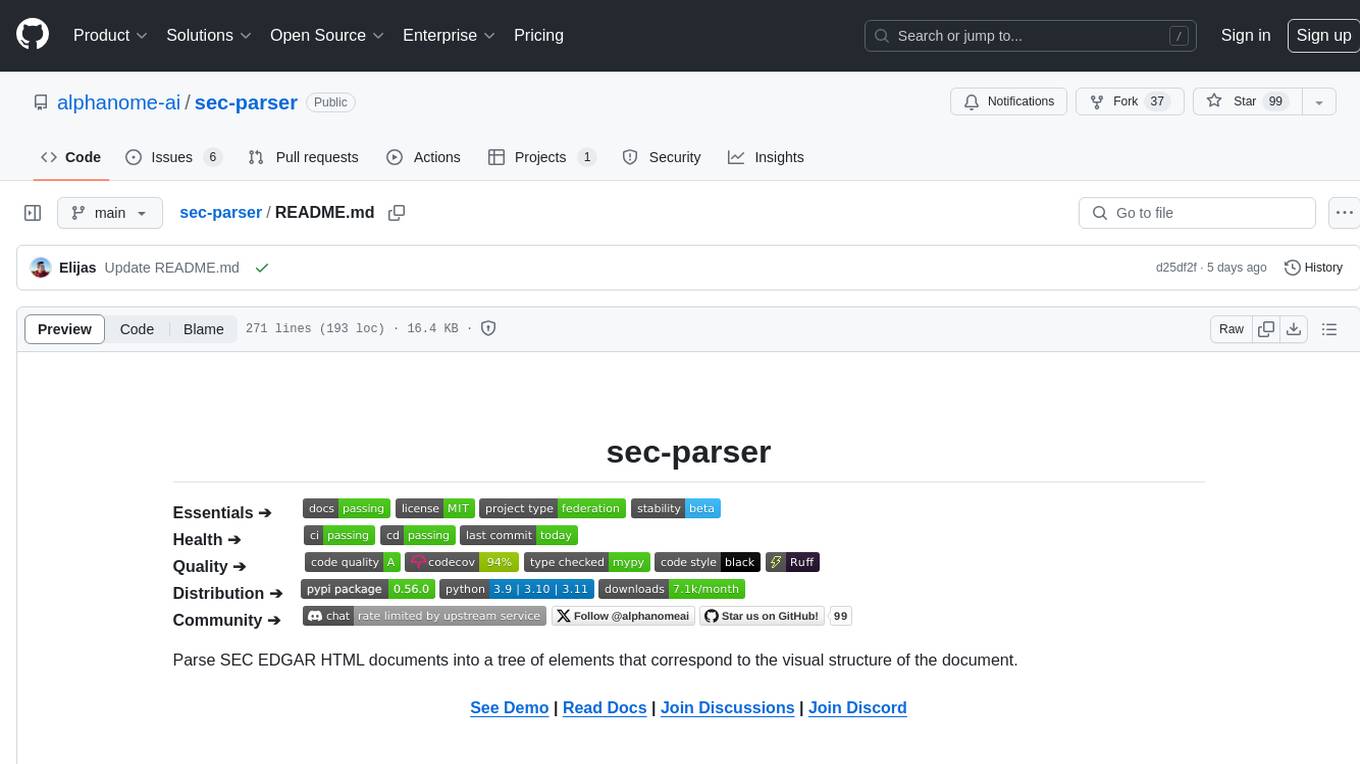

sec-parser

The `sec-parser` project simplifies extracting meaningful information from SEC EDGAR HTML documents by organizing them into semantic elements and a tree structure. It helps in parsing SEC filings for financial and regulatory analysis, analytics and data science, AI and machine learning, causal AI, and large language models. The tool is especially beneficial for AI, ML, and LLM applications by streamlining data pre-processing and feature extraction.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

For similar tasks

aiohttp-client-cache

aiohttp-client-cache is an asynchronous persistent cache for aiohttp client requests, based on requests-cache. It is easy to use, customizable, and persistent, with several storage backends available, including SQLite, DynamoDB, MongoDB, DragonflyDB, and Redis.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.