RainbowGPT

🌈RainbowGPT AI Agent & Dalle3 free & Stock Analysis & GPT-4 Free API & Private LLM Application & SQL Agent for Everyone

Stars: 86

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

README:

😜 [2024-12-27] RainbowStock_Analysis supports gpt-4o-mini, gpt-4o, qwen2.5, and vllm

🌈 [2023-12-15] Dalle3 Artistic Image Generation Unveiled 🎨

🎨 [2023-12-10] Simplified MySQL Management: Effortlessly navigate MySQL databases with our cornerstone Mysql Agent UI module. It offers a user-friendly interface suitable for all skill levels.

📉 [2023-12-05] Comprehensive Stock Insights: Empower financial decisions with our Stock Analysis module. Advanced technology provides a holistic view of market trends, risk assessments, and personalized recommendations.

⚙️ Technological Synergy: Benefit from the seamless integration of AI technologies like GPT-4, GPT3.5, ChatGlm3, Qwen, and more. This synergy enhances adaptability and ensures smooth information flow.

🚀 Innovation Roadmap: Stay at the forefront of AI advancements with RainbowGPT's commitment to continuous expansion and integration of emerging technologies.

Experience simplicity, insight, and creativity with RainbowGPT's powerful features!

✨ Navigate at cookbook.openai.com

🦜️🔗 LangChain: ⚡ Building applications with LLMs through composability ⚡


- Getting Started

- Free Use of GPT API

- Knowledge Base QA Search Algorithm

- BM25 Retrievers

- EnsembleRetriever

- Common Usage Pattern

- RainbowGPT Overview

Install Required Packages:

Make sure your environment is set up, and install the necessary packages using the following command:

pip install -r requirements.txt

Note: If you encounter any issues, ensure that you have the correct dependencies installed.

[!TIP] To launch the entire project, you only need to execute RainbowGPT_Launchpad_UI.py

- Local Search tool: earlier versions required relocating the modified 3rd_modify/langchain/vectorstores/chroma.py file to the Langchain module's library folder and renaming it to match the library file. This step is no longer required in the newest version!

- Google Search tool: make sure to select the right Rainbow_utils/chromedriver.exe to match your Chrome version. This step is crucial for proper execution. 🌈

Before using the application, follow these steps to configure API-related information in the .env file:

OpenAI API Key:

- Create an account on OpenAI and obtain your API key.

- Open the .env file and set your API key:

OPENAI_API_KEY=YOUR_OPENAI_API_KEY

Replace YOUR_OPENAI_API_KEY with the actual API key you obtained from OpenAI. Ensure accuracy to prevent authentication issues.

Local API URL (Qwen examples):

- To start a Qwen server with OpenAI-like capabilities, use the following commands:

pip install fastapi uvicorn openai pydantic sse_starlette
python Rainbow_utils/get_local_openai_api.py

- After starting the server, configure the api_base and api_key in your client. Ensure that the configuration follows the specified format. ✨ I have already integrated it. Please fill in the corresponding api_base and api_key in the UI.

# Import path for the legacy langchain package; newer releases expose ChatOpenAI from langchain_openai.
from langchain.chat_models import ChatOpenAI

# Point the OpenAI-compatible client at the local Qwen server.
llm = ChatOpenAI(
    model_name="Qwen",
    openai_api_base="http://localhost:8000/v1",
    openai_api_key="EMPTY",
    streaming=False,
)

Now your environment is set up, and the API is configured. You are ready to run the application!
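As a rough illustration of the .env step above, the sketch below parses KEY=VALUE lines using only the standard library. The helper names `parse_env_text` and `load_env_file` are hypothetical and are not part of RainbowGPT, which may well rely on a dedicated package for this. The sample `OPENAI_API_BASE` entry is likewise only an assumed example value.

```python
import os
from typing import Dict


def parse_env_text(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_env_file(path: str = ".env") -> Dict[str, str]:
    """Read a .env file and copy its entries into os.environ.

    Variables that are already set in the environment are left untouched.
    """
    with open(path, encoding="utf-8") as handle:
        values = parse_env_text(handle.read())
    for key, value in values.items():
        os.environ.setdefault(key, value)
    return values


# Hypothetical sample content, mirroring the placeholder used above.
sample = (
    "OPENAI_API_KEY=YOUR_OPENAI_API_KEY\n"
    "# comment lines are ignored\n"
    'OPENAI_API_BASE="http://localhost:8000/v1"\n'
)
config = parse_env_text(sample)
print(sorted(config))
```

Calling `load_env_file()` before launching the UI would make the key visible to any library that reads `OPENAI_API_KEY` from the environment.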

🌐 We are committed to expanding capacity based on usage and providing the API for free as long as we are not officially sanctioned. If you find this project helpful, please consider giving us a ⭐.

This API Key is used for forwarding API requests. Change the Host to api.chatanywhere.com.cn (preferred for domestic usage) or api.chatanywhere.cn (for international usage, domestic users need a global proxy).

- 🚀 Apply for a Free API Key in Beta

- Forwarding Host1: https://api.chatanywhere.com.cn (Domestic relay, lower latency, recommended)

- Forwarding Host2: https://api.chatanywhere.cn (For international usage, domestic users need a global proxy)

- Check your balance and usage records (announcements are also posted here): Balance Inquiry and Announcements

- The forwarding API cannot directly make requests to the official api.openai.com endpoint. Change the request address to api.chatanywhere.com.cn to use it. Most plugins and software can be modified accordingly.

Method 1

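import openai

# Route requests through the forwarding host (module-level setting used by openai<1.0).
openai.api_base = "https://api.chatanywhere.com.cn/v1"
# Alternative host:
# openai.api_base = "https://api.chatanywhere.cn/v1"

Method 2 (Use if Method 1 doesn't work)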

Modify the environment variable OPENAI_API_BASE. Search for how to change environment variables on your specific system. If changes to the environment variable don't take effect, restart your system.

OPENAI_API_BASE=https://api.chatanywhere.com.cn/v1

or OPENAI_API_BASE=https://api.chatanywhere.cn/v1

Open Source gpt_academic

Locate the config.py file and modify the API_URL_REDIRECT configuration to the following:

API_URL_REDIRECT = {"https://api.openai.com/v1/chat/completions": "https://api.chatanywhere.com.cn/v1/chat/completions"}

# API_URL_REDIRECT = {"https://api.openai.com/v1/chat/completions": "https://api.chatanywhere.cn/v1/chat/completions"}

The free API Key has a limit of 60 requests per hour per IP address and Key. If you use multiple keys under the same IP, the total hourly request limit for all keys cannot exceed 60. Similarly, if you use a single key across multiple IPs, the hourly request limit for that key cannot exceed 60.
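To stay under the 60 requests per hour limit, a client can keep its own count before sending anything. The sketch below is one possible approach, a sliding-window counter. The class name `HourlyRequestBudget` is hypothetical, and how the forwarding service actually measures the hour (fixed or sliding window) is not stated, so treat the window semantics as an assumption.

```python
import time
from collections import deque
from typing import Callable, Deque


class HourlyRequestBudget:
    """Client-side sliding window: at most `limit` calls per `window` seconds."""

    def __init__(
        self,
        limit: int = 60,
        window: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the behaviour can be checked without waiting
        self._stamps: Deque[float] = deque()

    def try_acquire(self) -> bool:
        """Return True and record the call if the budget allows it, else False."""
        now = self.clock()
        # Forget calls that have aged out of the window.
        while self._stamps and now - self._stamps[0] >= self.window:
            self._stamps.popleft()
        if len(self._stamps) >= self.limit:
            return False
        self._stamps.append(now)
        return True


# Demonstration with a fake clock instead of real time.
fake_now = [0.0]
budget = HourlyRequestBudget(limit=60, window=3600.0, clock=lambda: fake_now[0])
granted = sum(budget.try_acquire() for _ in range(61))  # the 61st call is refused
fake_now[0] = 3600.0  # one hour later the old calls have expired
granted_after_hour = budget.try_acquire()
print(granted, granted_after_hour)
```

Because the limit applies per IP address and per key, a single shared budget object per process is the conservative choice when several keys are used from the same machine.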

🧠 The knowledge base QA search algorithm optimizes document retrieval through context compression. Leveraging the query context, it strategically reduces document content using a document compressor, enhancing retrieval efficiency by returning only information relevant to the query. The ensemble of retrievers combines diverse results, creating a synergy that elevates overall performance.

- BM25-based Retriever: Specialized in efficiently locating relevant documents based on keywords, making it particularly effective for sparse retrieval.

- Embedding Similarity Retriever: Utilizes embedding vectors for document and query embedding, excelling in identifying relevant documents through semantic similarity. This retriever is well-suited for dense retrieval scenarios.
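To make the sparse side concrete, here is a minimal sketch of BM25 scoring in plain Python. It uses one common variant of the formula (with a non-negative IDF term) and assumed default parameters `k1=1.5` and `b=0.75`. It is not the implementation RainbowGPT or LangChain uses, and the function name `bm25_scores` and the toy documents are illustrative only.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(
    query: List[str],
    docs: List[List[str]],
    k1: float = 1.5,
    b: float = 0.75,
) -> List[float]:
    """Score each tokenized document against the tokenized query."""
    if not docs:
        return []
    n_docs = len(docs)
    avg_len = sum(len(doc) for doc in docs) / n_docs
    # Number of documents that contain each term at least once.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    scores: List[float] = []
    for doc in docs:
        term_freq = Counter(doc)
        score = 0.0
        for term in query:
            if term not in term_freq:
                continue
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            # Length normalization: longer-than-average documents are penalized.
            norm = term_freq[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Toy corpus, whitespace-tokenized for simplicity.
docs = [
    "stock price rises after earnings".split(),
    "mysql database index tuning".split(),
    "stock analysis with market risk".split(),
]
scores = bm25_scores("stock risk".split(), docs)
ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
print(ranking)
```

The third document matches both query terms and ranks first, while the MySQL document shares no keywords with the query and scores zero. This is the keyword-driven behaviour that makes BM25 a sparse retriever.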

🚀 EnsembleRetriever is a powerful retrieval mechanism that combines the strengths of various retrievers. It takes a list of retrievers as input, collects the results of each retriever's get_relevant_documents() method, and reranks the combined results using the Reciprocal Rank Fusion algorithm.

By leveraging the diverse strengths of different algorithms, EnsembleRetriever achieves superior performance compared to individual retrievers.
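The reranking step can be sketched as follows. Reciprocal Rank Fusion gives each document a score of `weight / (rank + c)` from every ranked list it appears in and sums those contributions. The function name `reciprocal_rank_fusion`, the optional per-retriever weights, and the constant `c=60` are assumptions chosen for illustration rather than a copy of the library code.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence


def reciprocal_rank_fusion(
    rankings: Sequence[Sequence[str]],
    weights: Optional[Sequence[float]] = None,
    c: int = 60,
) -> List[str]:
    """Fuse several ranked lists of document ids into one list."""
    if weights is None:
        weights = [1.0] * len(rankings)
    if len(weights) != len(rankings):
        raise ValueError("weights must match the number of rankings")
    fused: Dict[str, float] = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            # Documents near the top of any list earn a larger contribution.
            fused[doc_id] += weight / (rank + c)
    return sorted(fused, key=lambda doc_id: fused[doc_id], reverse=True)


# Hypothetical outputs of two retrievers for the same query.
bm25_ranking = ["doc_a", "doc_b", "doc_c"]
embedding_ranking = ["doc_b", "doc_d", "doc_a"]
fused_order = reciprocal_rank_fusion([bm25_ranking, embedding_ranking])
print(fused_order)
```

Only ranks are used, never raw scores, so the two retrievers do not need comparable scoring scales. Here `doc_b` comes out on top because both lists place it near the head.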

🔄 The most effective use of the Knowledge Base QA Search combines a sparse retriever (e.g., BM25) with a dense retriever (e.g., embedding similarity). This "hybrid search" works because the two are complementary: the sparse retriever finds documents by keyword match, while the dense retriever finds them by semantic similarity.
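The dense half of such a hybrid search can be sketched as a cosine-similarity ranking over embedding vectors. The three-dimensional vectors below are made-up toy values standing in for real model embeddings, and `cosine_similarity` and `dense_rank` are hypothetical helper names.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def dense_rank(query_vec: Sequence[float], doc_vecs: Dict[str, Sequence[float]]) -> List[str]:
    """Order document ids by similarity to the query embedding, best first."""
    return sorted(
        doc_vecs,
        key=lambda doc_id: cosine_similarity(query_vec, doc_vecs[doc_id]),
        reverse=True,
    )


# Toy embeddings; a real system would obtain these from an embedding model.
toy_embeddings = {
    "doc_a": [0.9, 0.1, 0.0],
    "doc_b": [0.1, 0.9, 0.0],
    "doc_c": [0.7, 0.7, 0.1],
}
query_embedding = [1.0, 0.2, 0.0]
dense_order = dense_rank(query_embedding, toy_embeddings)
print(dense_order)
```

A ranked list like `dense_order` is what the dense retriever would contribute, to be merged with the keyword-based ranking from the sparse retriever.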

📊 Explore the Stock Analysis module and unlock valuable insights for your investment decisions! 🚀 #StockAnalysis #RainbowGPT #AIInvesting

- 👋 Retrieval Search

- 📚 SQL Agent

- ⚡🌐 Web Scraping Summarization

- 🤖 Chatbots

🚀 Explore the diverse capabilities of RainbowGPT and leverage its powerful modules for your projects! 🌈✨


Alternative AI tools for RainbowGPT

Similar Open Source Tools


deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

sec-parser

The `sec-parser` project simplifies extracting meaningful information from SEC EDGAR HTML documents by organizing them into semantic elements and a tree structure. It helps in parsing SEC filings for financial and regulatory analysis, analytics and data science, AI and machine learning, causal AI, and large language models. The tool is especially beneficial for AI, ML, and LLM applications by streamlining data pre-processing and feature extraction.

atropos

Atropos is a robust and scalable framework for Reinforcement Learning Environments with Large Language Models (LLMs). It provides a flexible platform to accelerate LLM-based RL research across diverse interactive settings. Atropos supports multi-turn and asynchronous RL interactions, integrates with various inference APIs, offers a standardized training interface for experimenting with different RL algorithms, and allows for easy scalability by launching more environment instances. The framework manages diverse environment types concurrently for heterogeneous, multi-modal training.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

ChatDev

ChatDev is a virtual software company powered by intelligent agents like CEO, CPO, CTO, programmer, reviewer, tester, and art designer. These agents collaborate to revolutionize the digital world through programming. The platform offers an easy-to-use, highly customizable, and extendable framework based on large language models, ideal for studying collective intelligence. ChatDev introduces innovative methods like Iterative Experience Refinement and Experiential Co-Learning to enhance software development efficiency. It supports features like incremental development, Docker integration, Git mode, and Human-Agent-Interaction mode. Users can customize ChatChain, Phase, and Role settings, and share their software creations easily. The project is open-source under the Apache 2.0 License and utilizes data licensed under CC BY-NC 4.0.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extendible for customization and private data usage.

sec-code-bench

SecCodeBench is a benchmark suite for evaluating the security of AI-generated code, specifically designed for modern Agentic Coding Tools. It addresses challenges in existing security benchmarks by ensuring test case quality, employing precise evaluation methods, and covering Agentic Coding Tools. The suite includes 98 test cases across 5 programming languages, focusing on functionality-first evaluation and dynamic execution-based validation. It offers a highly extensible testing framework for end-to-end automated evaluation of agentic coding tools, generating comprehensive reports and logs for analysis and improvement.

helix-db

HelixDB is a database designed specifically for AI applications, providing a single platform to manage all components needed for AI applications. It supports graph + vector data model and also KV, documents, and relational data. Key features include built-in tools for MCP, embeddings, knowledge graphs, RAG, security, logical isolation, and ultra-low latency. Users can interact with HelixDB using the Helix CLI tool and SDKs in TypeScript and Python. The roadmap includes features like organizational auth, server code improvements, 3rd party integrations, educational content, and binary quantisation for better performance. Long term projects involve developing in-house tools for knowledge graph ingestion, graph-vector storage engine, and network protocol & serdes libraries.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.

LightAgent

LightAgent is a lightweight, open-source active Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex goals, and multi-model support. It enables simpler self-learning agents, seamless integration with major chat frameworks, and quick tool generation. LightAgent also supports memory modules, tool integration, tree of thought planning, multi-agent collaboration, streaming API, agent self-learning, Langfuse log tracking, and agent assessment. It is compatible with various large models and offers features like intelligent customer service, data analysis, automated tools, and educational assistance.

giskard-oss

Giskard-oss is an Evaluation & Testing framework for AI systems that aims to control risks of performance, bias, and security issues. It focuses on LLM systems, with plans for a new scan and a rewrite of RAGET for version 3. The repository is structured as a Python workspace with three packages: giskard-core, giskard-checks, and giskard-agents. Developers can use the Makefile for common tasks, and contributions from the AI community are welcome. The project encourages stars for visibility and offers sponsorship options for support.

LightAgent

LightAgent is a lightweight, open-source Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex task handling, and multi-model support. It also features a streaming API, tool generator, agent self-learning, adaptive tool mechanism, and more. LightAgent is designed for intelligent customer service, data analysis, automated tools, and educational assistance.

codellm-devkit

Codellm-devkit (CLDK) is a Python library that serves as a multilingual program analysis framework bridging traditional static analysis tools and Large Language Models (LLMs) specialized for code (CodeLLMs). It simplifies the process of analyzing codebases across multiple programming languages, enabling the extraction of meaningful insights and facilitating LLM-based code analysis. The library provides a unified interface for integrating outputs from various analysis tools and preparing them for effective use by CodeLLMs. Codellm-devkit aims to enable the development and experimentation of robust analysis pipelines that combine traditional program analysis tools and CodeLLMs, reducing friction in multi-language code analysis and ensuring compatibility across different tools and LLM platforms. It is designed to seamlessly integrate with popular analysis tools like WALA, Tree-sitter, LLVM, and CodeQL, acting as a crucial intermediary layer for efficient communication between these tools and CodeLLMs. The project is continuously evolving to include new tools and frameworks, maintaining its versatility for code analysis and LLM integration.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

portia-sdk-python

Portia AI is an open source developer framework for predictable, stateful, authenticated agentic workflows. It allows developers to have oversight over their multi-agent deployments and focuses on production readiness. The framework supports iterating on agents' reasoning, extensive tool support including MCP support, authentication for API and web agents, and is production-ready with features like attribute multi-agent runs, large inputs and outputs storage, and connecting any LLM. Portia AI aims to provide a flexible and reliable platform for developing AI agents with tools, authentication, and smart control.

For similar tasks

FinMem-LLM-StockTrading

This repository contains the Python source code for FINMEM, a Performance-Enhanced Large Language Model Trading Agent with Layered Memory and Character Design. It introduces FinMem, a novel LLM-based agent framework devised for financial decision-making, encompassing three core modules: Profiling, Memory with layered processing, and Decision-making. FinMem's memory module aligns closely with the cognitive structure of human traders, offering robust interpretability and real-time tuning. The framework enables the agent to self-evolve its professional knowledge, react agilely to new investment cues, and continuously refine trading decisions in the volatile financial environment. It presents a cutting-edge LLM agent framework for automated trading, boosting cumulative investment returns.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

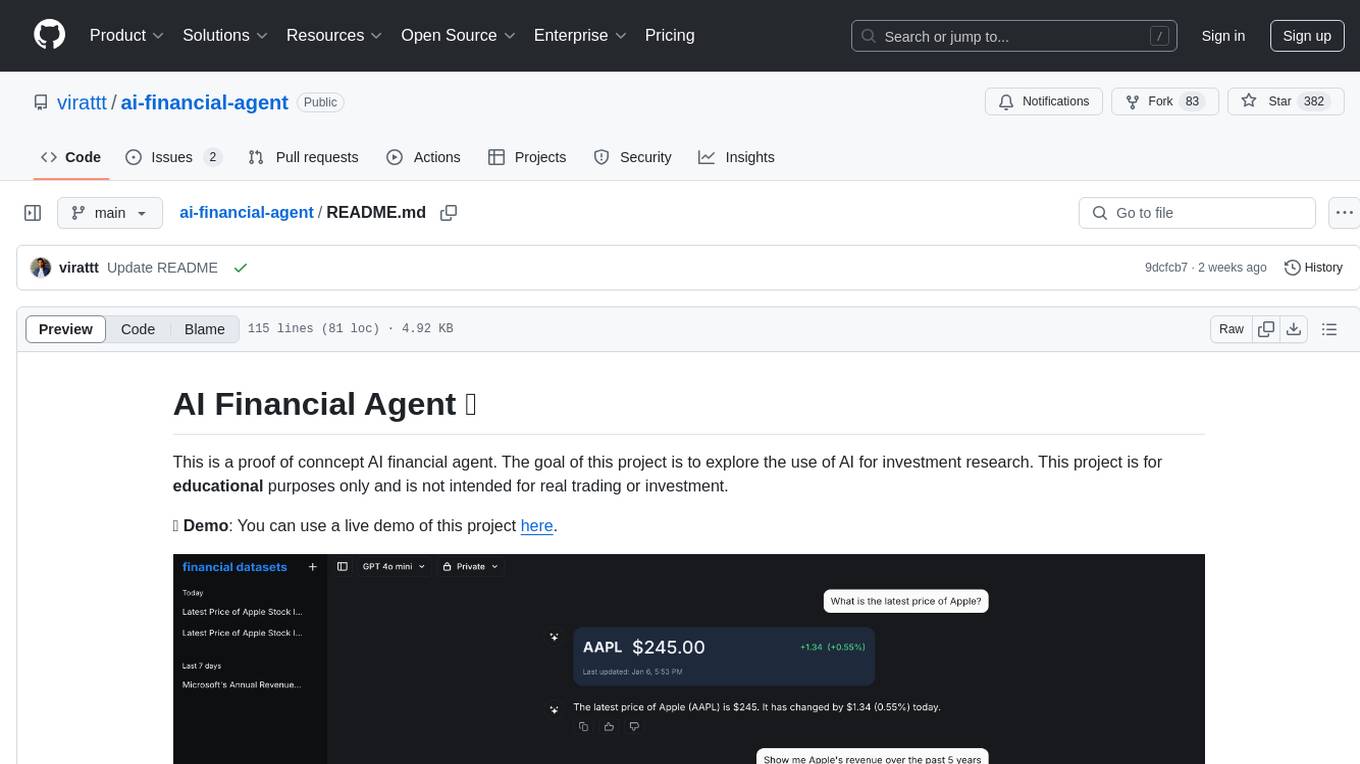

ai-financial-agent

AI Financial Agent is a proof of concept project exploring the use of AI for investment research. It provides an AI SDK with a unified API for generating text and structured objects, along with access to real-time and historical stock market data optimized for AI financial agents. The project includes features like dynamic chat interfaces, support for multiple model providers, and styling with Tailwind CSS. Users can deploy their own version of the AI Financial Agent using Vercel and GitHub integration.

stock-trading

StockTrading AI is a small model stock automatic trading system that integrates with securities platforms, implements automated stock trading, utilizes QuartZ for scheduled tasks to update data daily, employs DL4J framework for LSTM model guidance on stock buying with T+1 short-term trading strategy, utilizes K8S+GithubAction for DevOps, and supports distributed offline training. Future optimizations include obtaining more historical stock data for incremental model training and tuning model hyperparameters to improve price trend prediction accuracy. The system provides various page displays for profit data statistics, trade order queries, stock price viewing, model prediction performance, scheduled task scheduling, and real-time log tracking.

FinanceMCP

FinanceMCP is a professional financial data server based on the MCP protocol, integrating the Tushare API to provide real-time financial data and technical indicator analysis for AI assistants like Claude. It offers various free public cloud services, including a web-based experience version and desktop configuration for production environments. The core features include an intelligent technical indicator system with 5 core indicators, comprehensive market coverage across 10 markets, tools for stock, index, company, macroeconomic, and fund data analysis, as well as specific modules for analyzing US and Hong Kong stock companies. The tool supports tasks like stock technical analysis, comprehensive analysis, news and macroeconomic analysis, fund and bond data queries, among others. It can be locally deployed using Streamable HTTP or SSE modes, with detailed installation and configuration instructions provided.

PanWatch

PanWatch is a private AI stock assistant for real-time market monitoring, intelligent technical analysis, and multi-account portfolio management. It offers data privacy through self-hosted deployment, AI-native features that understand user's holdings, style, and goals, and easy setup with Docker. The core functions include intelligent agent system for pre-market analysis, real-time intraday monitoring, end-of-day reports, and news updates. It also provides professional technical analysis with trend indicators, momentum indicators, volume-price analysis, pattern recognition, and support/resistance calculations. PanWatch supports multiple markets and accounts, covering A shares, Hong Kong stocks, and US stocks, with customizable trading styles for accurate AI suggestions. Notifications are available through various channels like Telegram, WeChat Work, DingTalk, Feishu, Bark, and custom webhooks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.