ai-financial-agent

A financial agent for investment research

Stars: 382

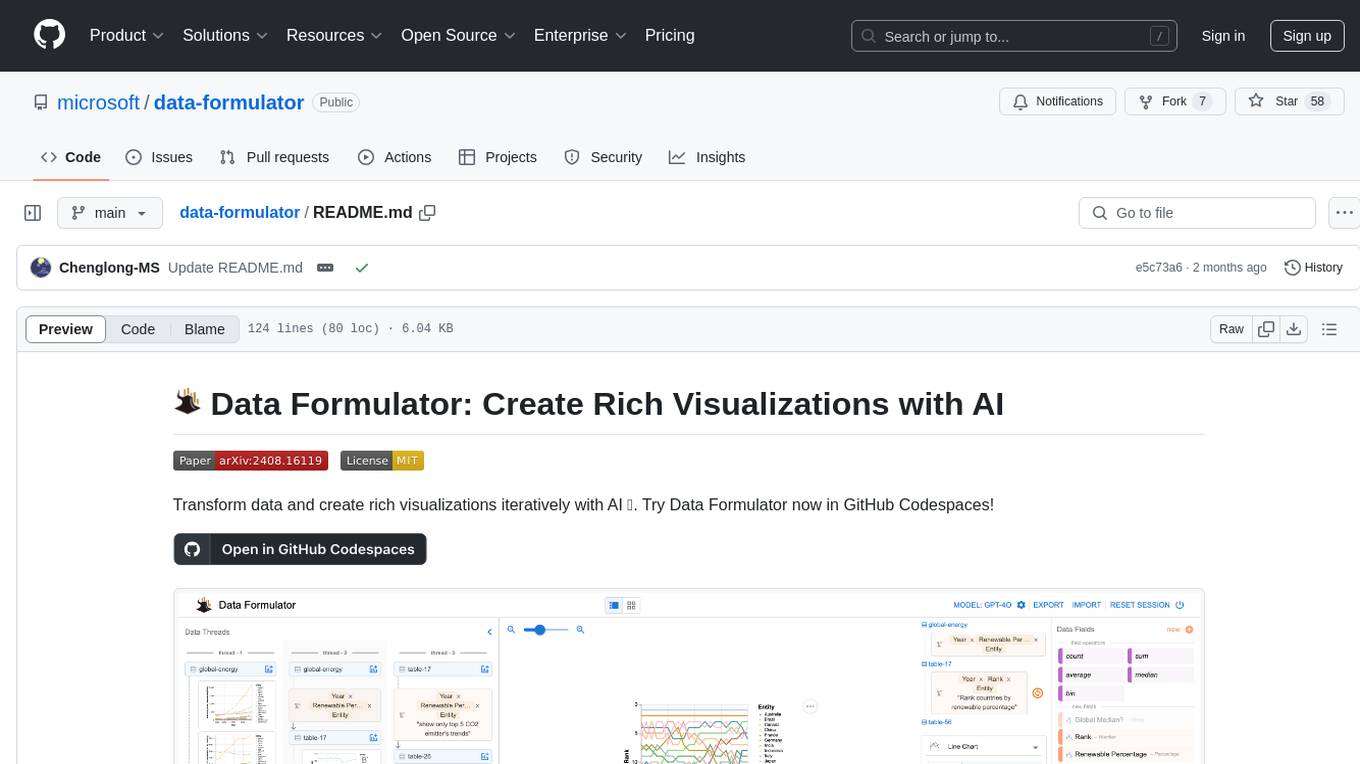

AI Financial Agent is a proof of concept project exploring the use of AI for investment research. It provides an AI SDK with a unified API for generating text and structured objects, along with access to real-time and historical stock market data optimized for AI financial agents. The project includes features like dynamic chat interfaces, support for multiple model providers, and styling with Tailwind CSS. Users can deploy their own version of the AI Financial Agent using Vercel and GitHub integration.

README:

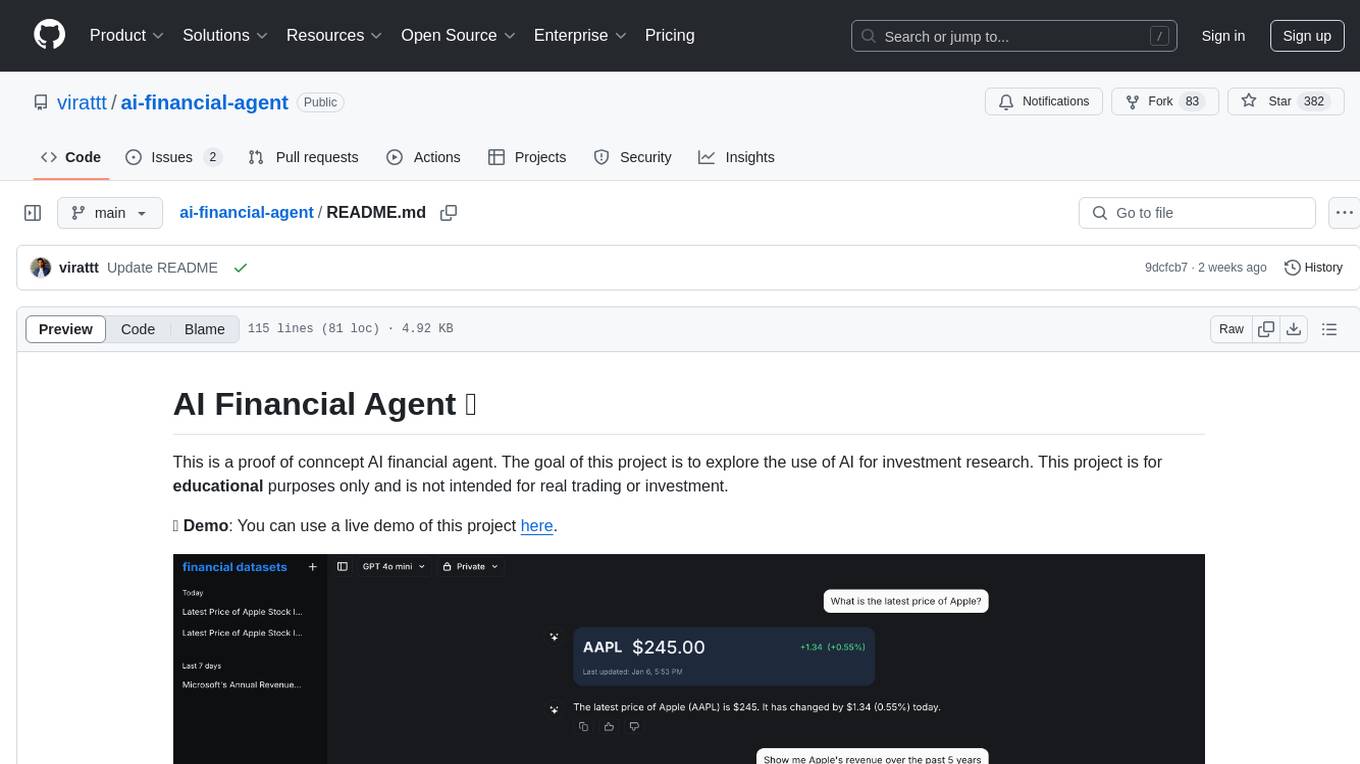

This is a proof-of-concept AI financial agent. The goal of this project is to explore the use of AI for investment research. This project is for educational purposes only and is not intended for real trading or investment.

👋 Demo: You can use a live demo of this project here.

This project is for educational and research purposes only.

- Not intended for real trading or investment

- No warranties or guarantees provided

- Past performance does not indicate future results

- Creator assumes no liability for financial losses

- Consult a financial advisor for investment decisions

By using this software, you agree to use it solely for learning purposes.

- AI SDK
  - Unified API for generating text, structured objects, and tool calls with LLMs
  - Hooks for building dynamic chat and generative user interfaces
  - Supports OpenAI (default), Anthropic, Cohere, and other model providers
- Financial Datasets API
  - Access to real-time and historical stock market data
  - Data is optimized for AI financial agents
  - 30+ years of financial data with 100% market coverage
  - Documentation available here
- shadcn/ui
  - Styling with Tailwind CSS
  - Component primitives from Radix UI for accessibility and flexibility

- Clone the repository:

git clone https://github.com/virattt/ai-financial-agent.git

cd ai-financial-agent

If you do not have npm installed, please install it from here.

- Install pnpm (if not already installed):

npm install -g pnpm

- Install dependencies:

pnpm install

- Set up your environment variables:

# Create .env file for your API keys

cp .env.example .env

Set the API keys in the .env file:

# Get your OpenAI API key from https://platform.openai.com/

OPENAI_API_KEY=your-openai-api-key

# Get your Financial Datasets API key from https://financialdatasets.ai/

FINANCIAL_DATASETS_API_KEY=your-financial-datasets-api-key

# Get your LangSmith API key from https://smith.langchain.com/

LANGCHAIN_API_KEY=your-langsmith-api-key

LANGCHAIN_TRACING_V2=true

LANGCHAIN_PROJECT=ai-financial-agent

Note: You should not commit your .env file, or it will expose secrets that allow others to control access to your various OpenAI and authentication provider accounts.
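A quick check at startup can catch a missing key, or one still holding the placeholder from the example, before the app makes any API call. The following is a minimal sketch, not code from the repository: `missingEnvKeys` is a hypothetical helper, and treating only the OpenAI and Financial Datasets keys as required (with the LangSmith variables optional) is an assumption.

```typescript
// Variable names taken from the .env example above.
// Assumption: only these two are required; the LangSmith tracing variables are optional.
const REQUIRED_KEYS = ["OPENAI_API_KEY", "FINANCIAL_DATASETS_API_KEY"] as const;

type Env = Record<string, string | undefined>;

// Hypothetical helper: returns the required variable names that are unset,
// blank, or still set to a "your-..." placeholder value.
function missingEnvKeys(env: Env): string[] {
  return REQUIRED_KEYS.filter((key) => {
    const value = env[key];
    return (
      value === undefined ||
      value.trim() === "" ||
      value.startsWith("your-")
    );
  });
}

// Usage (in a Node.js process): missingEnvKeys(process.env)
```

An empty result means both keys look populated; it does not verify that the keys are valid.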

If you want to deploy your own version of the AI Financial Agent in production, you need to link your local instance with your Vercel and GitHub accounts.

- Install Vercel CLI:

npm i -g vercel

- Link local instance with Vercel and GitHub accounts (creates .vercel directory):

vercel link

- Download your environment variables:

vercel env pull

After completing the steps above, simply run the following command to start the development server:

pnpm dev

Your app template should now be running on localhost:3000.

This template uses the Financial Datasets API as the financial data provider. The Financial Datasets API is specifically designed for AI financial agents and LLMs.

The Financial Datasets API provides real-time and historical stock market data and covers 100% of the US market over the past 30 years.

Data includes financial statements, stock prices, options data, insider trades, institutional ownership, and much more. You can learn more about the API via the documentation here.

Note: Data is free for AAPL, GOOGL, MSFT, NVDA, and TSLA.
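While experimenting without a paid plan, it can help to check a ticker against the free list before requesting data. This is a small sketch: `FREE_TICKERS` and `isFreeTicker` are hypothetical names, and the list simply mirrors the note above, so it may go out of date.

```typescript
// Tickers the note above lists as free. Hard-coded here as an assumption;
// the provider's documentation is the authoritative source.
const FREE_TICKERS: ReadonlySet<string> = new Set([
  "AAPL",
  "GOOGL",
  "MSFT",
  "NVDA",
  "TSLA",
]);

// Hypothetical helper: case-insensitive membership check.
function isFreeTicker(ticker: string): boolean {
  return FREE_TICKERS.has(ticker.trim().toUpperCase());
}
```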

If you do not want to use the Financial Datasets API, you can easily switch to another data provider by modifying a few lines of code.
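One way to keep such a switch down to a few lines is to have the rest of the app depend on a small interface instead of a specific API client. The sketch below illustrates that idea under stated assumptions: `FinancialDataProvider`, `PriceBar`, `InMemoryProvider`, and `latestClose` are hypothetical names, not taken from the repository, and the in-memory class stands in for a real HTTP-backed provider.

```typescript
// Hypothetical shape of one daily price record.
interface PriceBar {
  date: string; // ISO date, e.g. "2024-01-02"
  close: number;
}

// Hypothetical provider contract; a real implementation would call an HTTP API.
interface FinancialDataProvider {
  getPrices(ticker: string, startDate: string, endDate: string): Promise<PriceBar[]>;
}

// Stand-in provider backed by a plain object, useful for tests and demos.
// Keys are expected to be upper-case tickers; bars are expected in date order.
class InMemoryProvider implements FinancialDataProvider {
  private readonly bars: Record<string, PriceBar[]>;

  constructor(bars: Record<string, PriceBar[]>) {
    this.bars = bars;
  }

  async getPrices(ticker: string, startDate: string, endDate: string): Promise<PriceBar[]> {
    const all = this.bars[ticker.toUpperCase()] ?? [];
    // ISO dates compare correctly as strings.
    return all.filter((bar) => bar.date >= startDate && bar.date <= endDate);
  }
}

// Application code depends only on the interface, so swapping providers
// means changing the object passed in here.
async function latestClose(
  provider: FinancialDataProvider,
  ticker: string,
  startDate: string,
  endDate: string,
): Promise<number | undefined> {
  const bars = await provider.getPrices(ticker, startDate, endDate);
  const last = bars[bars.length - 1];
  return last?.close;
}
```

With this layout, a different data source only needs its own class implementing `getPrices`; callers such as `latestClose` stay unchanged.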

You can deploy your own version of the AI Financial Agent in production via Vercel with one click:

Alternative AI tools for ai-financial-agent

Similar Open Source Tools

dataherald

Dataherald is a natural language-to-SQL engine built for enterprise-level question answering over structured data. It allows you to set up an API from your database that can answer questions in plain English. You can use Dataherald to:

- Allow business users to get insights from the data warehouse without going through a data analyst
- Enable Q+A from your production DBs inside your SaaS application
- Create a ChatGPT plug-in from your proprietary data

OpenBB

The OpenBB Platform is the first financial platform that is free and fully open source, offering access to equity, options, crypto, forex, macro economy, fixed income, and more. It provides a broad range of extensions to enhance the user experience according to their needs. Users can sign up to the OpenBB Hub to maximize the benefits of the OpenBB ecosystem. Additionally, the platform includes an AI-powered Research and Analytics Workspace for free. There is also an open source AI financial analyst agent available that can access all the data within OpenBB.

git-mcp

GitMCP is a free, open-source service that transforms any GitHub project into a remote Model Context Protocol (MCP) endpoint, allowing AI assistants to access project documentation effortlessly. It empowers AI with semantic search capabilities, requires zero setup, is completely free and private, and serves as a bridge between GitHub repositories and AI assistants.

airbroke

Airbroke is an open-source error catcher tool designed for modern web applications. It provides a PostgreSQL-based backend with an Airbrake-compatible HTTP collector endpoint and a React-based frontend for error management. The tool focuses on simplicity, maintaining a small database footprint even under heavy data ingestion. Users can ask AI about issues, replay HTTP exceptions, and save/manage bookmarks for important occurrences. Airbroke supports multiple OAuth providers for secure user authentication and offers occurrence charts for better insights into error occurrences. The tool can be deployed in various ways, including building from source, using Docker images, deploying on Vercel, Render.com, Kubernetes with Helm, or Docker Compose. It requires Node.js, PostgreSQL, and specific system resources for deployment.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

moon-dev-ai-agents-for-trading

Moon Dev AI Agents for Trading is an experimental project exploring the potential of artificial financial intelligence for trading and investing research. The project aims to develop AI agents to complement and potentially replace human trading operations by addressing common trading challenges such as emotional reactions, ego-driven decisions, inconsistent execution, fatigue effects, impatience, and fear & greed cycles. The project focuses on research areas like risk control, exit timing, entry strategies, sentiment collection, and strategy execution. It is important to note that this project is not a profitable trading solution and involves substantial risk of loss.

intelligence-toolkit

The Intelligence Toolkit is a suite of interactive workflows designed to help domain experts make sense of real-world data by identifying patterns, themes, relationships, and risks within complex datasets. It utilizes generative AI (GPT models) to create reports on findings of interest. The toolkit supports analysis of case, entity, and text data, providing various interactive workflows for different intelligence tasks. Users are expected to evaluate the quality of data insights and AI interpretations before taking action. The system is designed for moderate-sized datasets and responsible use of personal case data. It uses the GPT-4 model from OpenAI or Azure OpenAI APIs for generating reports and insights.

hi-ml

The Microsoft Health Intelligence Machine Learning Toolbox is a repository that provides low-level and high-level building blocks for Machine Learning / AI researchers and practitioners. It simplifies and streamlines work on deep learning models for healthcare and life sciences by offering tested components such as data loaders, pre-processing tools, deep learning models, and cloud integration utilities. The repository includes three Python packages: 'hi-ml-azure' for helper functions in AzureML, 'hi-ml' for ML components, and 'hi-ml-cpath' for models and workflows related to histopathology images.

aisuite

Aisuite is a simple, unified interface to multiple Generative AI providers. It allows developers to easily interact with various Language Model (LLM) providers like OpenAI, Anthropic, Azure, Google, AWS, and more through a standardized interface. The library focuses on chat completions and provides a thin wrapper around python client libraries, enabling creators to test responses from different LLM providers without changing their code. Aisuite maximizes stability by using HTTP endpoints or SDKs for making calls to the providers. Users can install the base package or specific provider packages, set up API keys, and utilize the library to generate chat completion responses from different models.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAI API models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely-related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

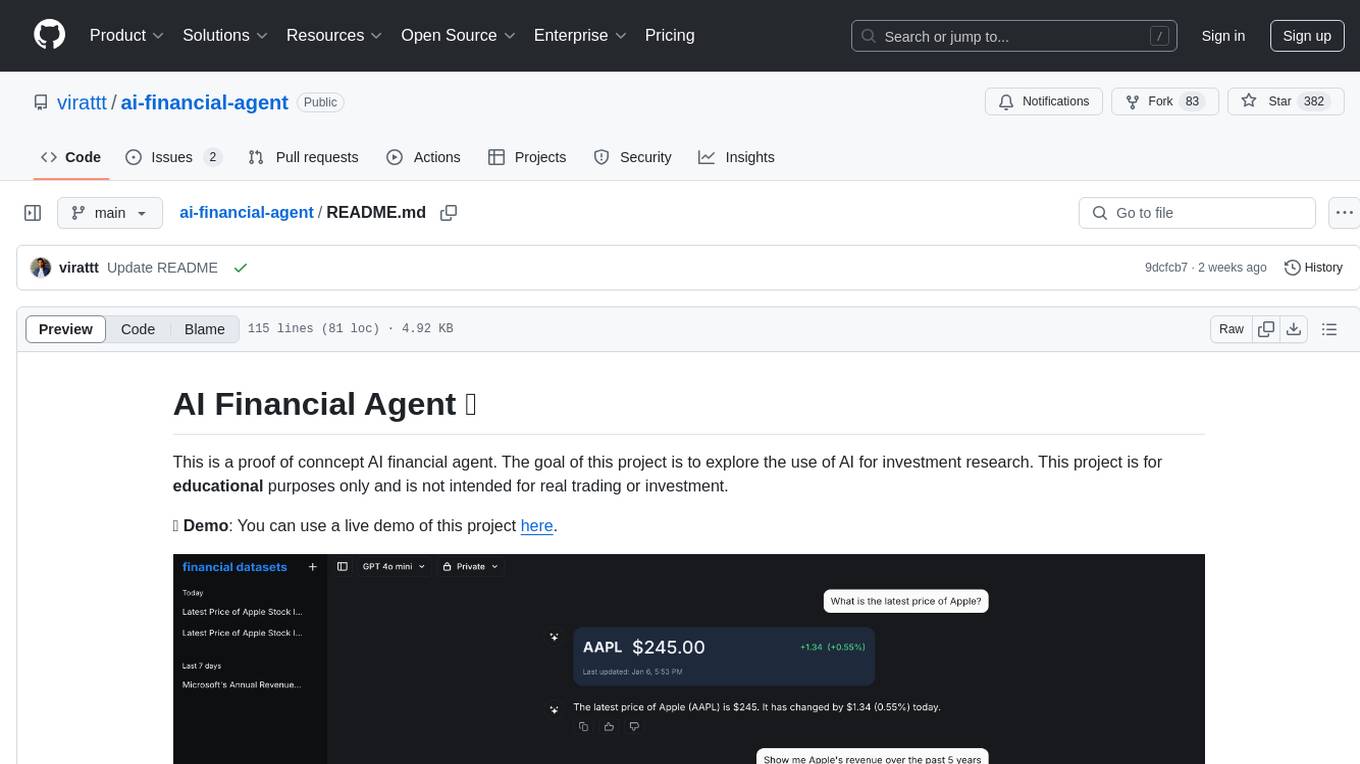

data-formulator

Data Formulator is an AI-powered tool developed by Microsoft Research to help data analysts create rich visualizations iteratively. It combines user interface interactions with natural language inputs to simplify the process of describing chart designs while delegating data transformation to AI. Users can utilize features like blended UI and NL inputs, data threads for history navigation, and code inspection to create impressive visualizations. The tool supports local installation for customization and Codespaces for quick setup. Developers can build new data analysis tools on top of Data Formulator, and research papers are available for further reading.

For similar tasks

FinMem-LLM-StockTrading

This repository contains the Python source code for FINMEM, a Performance-Enhanced Large Language Model Trading Agent with Layered Memory and Character Design. It introduces FinMem, a novel LLM-based agent framework devised for financial decision-making, encompassing three core modules: Profiling, Memory with layered processing, and Decision-making. FinMem's memory module aligns closely with the cognitive structure of human traders, offering robust interpretability and real-time tuning. The framework enables the agent to self-evolve its professional knowledge, react agilely to new investment cues, and continuously refine trading decisions in the volatile financial environment. It presents a cutting-edge LLM agent framework for automated trading, boosting cumulative investment returns.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

stock-trading

StockTrading AI is a small model stock automatic trading system that integrates with securities platforms, implements automated stock trading, utilizes QuartZ for scheduled tasks to update data daily, employs DL4J framework for LSTM model guidance on stock buying with T+1 short-term trading strategy, utilizes K8S+GithubAction for DevOps, and supports distributed offline training. Future optimizations include obtaining more historical stock data for incremental model training and tuning model hyperparameters to improve price trend prediction accuracy. The system provides various page displays for profit data statistics, trade order queries, stock price viewing, model prediction performance, scheduled task scheduling, and real-time log tracking.

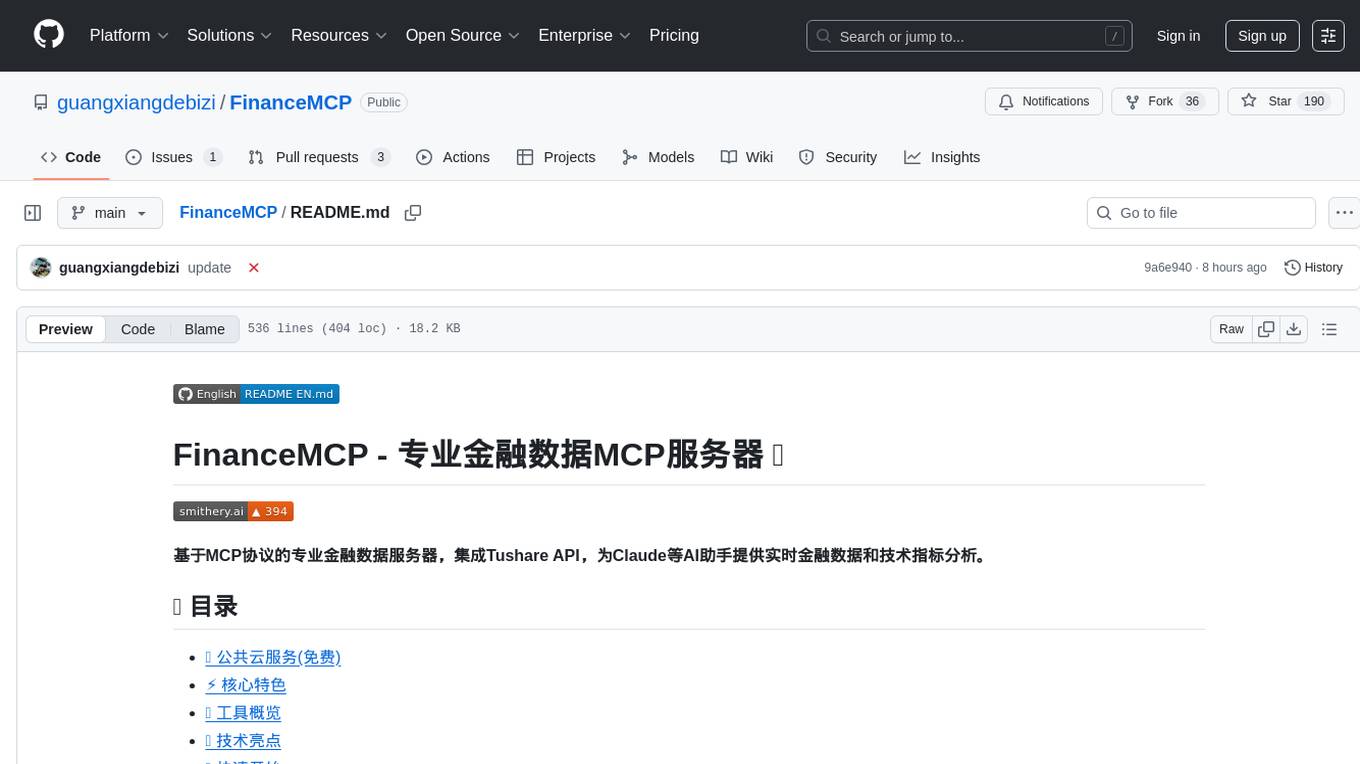

FinanceMCP

FinanceMCP is a professional financial data server based on the MCP protocol, integrating the Tushare API to provide real-time financial data and technical indicator analysis for AI assistants like Claude. It offers various free public cloud services, including a web-based experience version and desktop configuration for production environments. The core features include an intelligent technical indicator system with 5 core indicators, comprehensive market coverage across 10 markets, tools for stock, index, company, macroeconomic, and fund data analysis, as well as specific modules for analyzing US and Hong Kong stock companies. The tool supports tasks like stock technical analysis, comprehensive analysis, news and macroeconomic analysis, fund and bond data queries, among others. It can be locally deployed using Streamable HTTP or SSE modes, with detailed installation and configuration instructions provided.

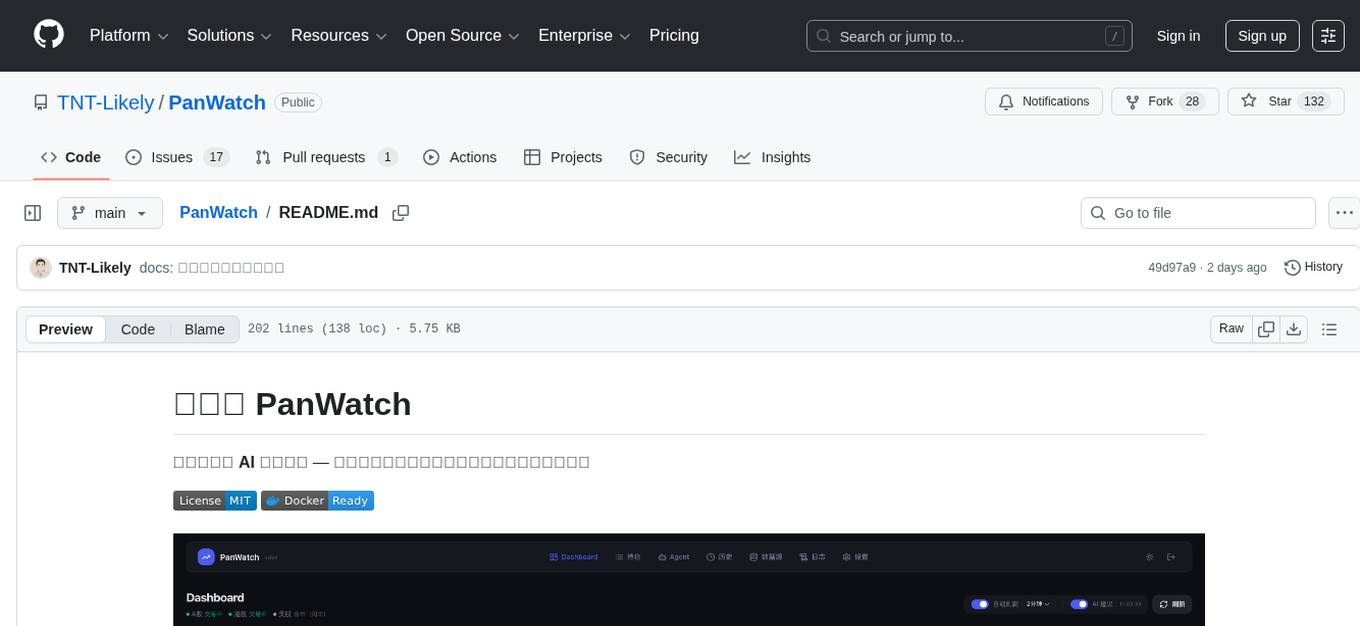

PanWatch

PanWatch is a private AI stock assistant for real-time market monitoring, intelligent technical analysis, and multi-account portfolio management. It offers data privacy through self-hosted deployment, AI-native features that understand user's holdings, style, and goals, and easy setup with Docker. The core functions include intelligent agent system for pre-market analysis, real-time intraday monitoring, end-of-day reports, and news updates. It also provides professional technical analysis with trend indicators, momentum indicators, volume-price analysis, pattern recognition, and support/resistance calculations. PanWatch supports multiple markets and accounts, covering A shares, Hong Kong stocks, and US stocks, with customizable trading styles for accurate AI suggestions. Notifications are available through various channels like Telegram, WeChat Work, DingTalk, Feishu, Bark, and custom webhooks.

Pathway-AI-Bootcamp

Welcome to the μLearn x Pathway Initiative, an exciting adventure into the world of Artificial Intelligence (AI)! This comprehensive course, developed in collaboration with Pathway, will empower you with the knowledge and skills needed to navigate the fascinating world of AI, with a special focus on Large Language Models (LLMs).

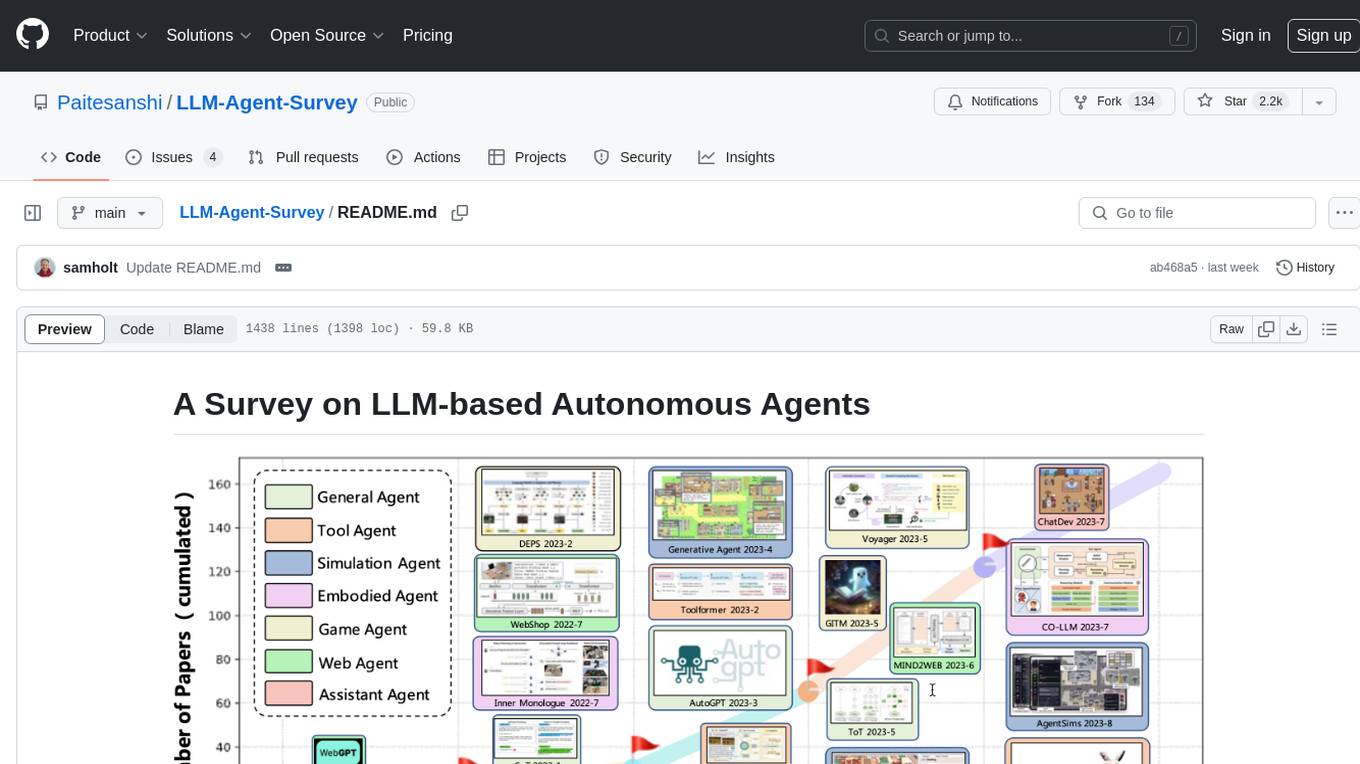

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.