SalesGPT

Context-aware AI Sales Agent to automate sales outreach.

Stars: 1872

SalesGPT is an open-source AI agent designed for sales. It uses context-awareness and LLMs to work across communication channels such as voice, email, and texting. It aims to improve sales conversations by understanding the current stage of the conversation and by using tools, such as a product knowledge base, to reduce errors. The agent can autonomously generate payment links, handle objections, and close sales. It also offers automated email communication, meeting scheduling, and integration with various LLMs for customization. SalesGPT is optimized for low latency in voice channels and supports human supervision where necessary. Enterprise-grade security through a PromptArmor integration is on the roadmap, and the tool supports LangSmith tracing for monitoring and evaluating agents built on LLM frameworks.

README:

This repo is an implementation of a context-aware AI Agent for Sales using LLMs and can work across voice, email and texting (SMS, WhatsApp, WeChat, Weibo, Telegram, etc.).

SalesGPT is context-aware, which means it can understand what stage of a sales conversation it is in and act accordingly. Moreover, SalesGPT has access to tools, such as your own predefined product knowledge base, which significantly reduces hallucinations.

We are building SalesGPT to power your best AI Sales Agents. Hence, we would love to learn more about use cases you are building towards which will fuel SalesGPT development roadmap, so please don't hesitate to reach out.

- The AI Agent can access data from any data system (new, old or legacy) via an integration with Mindware's AI Solutions Engineer.

- AI Sales Agents can now ACTUALLY sell! They autonomously generate Stripe payment links to sell products and services to customers.

- You can now test your AI Sales Agents via our frontend.

- Sales Agent can now take advantage of tools, such as looking up products in a product catalog!

Unload AI Sales Agent Demos - Powered by SalesGPT: Our new virtual workforce? 🤔

Demo #1: Sarah - Patient Coordinator at South Orange Pediatrics

- 100X Your Healthcare Admin with our Virtual Workforce

Demo #2: Ted - Autonomously create payment links and collect customer payments

Try it yourself on our SalesGPT hosted frontend demo.


If you want us to build better towards your needs, or need help with your AI Sales Agents, please reach out to chat with us: SalesGPT Use Case Intake Survey

The AI Sales Agent understands the conversation stage (you can define your own stages fitting your needs):

- Introduction: Start the conversation by introducing yourself and your company.

- Qualification: Qualify the prospect by confirming if they are the right person to talk to regarding your product/service.

- Value Proposition: Briefly explain how your product/service can benefit the prospect.

- Needs Analysis: Ask open-ended questions to uncover the prospect's needs and pain points.

- Solution Presentation: Based on the prospect's needs, present your product/service as the solution that can address their pain points.

- Objection Handling: Address any objections that the prospect may have regarding your product/service.

- Close: Ask for the sale by proposing a next step.

- End Conversation: The user does not want to continue the conversation, so end the call.
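The stage list above can be treated as a lookup table. Once the agent has decided which stage the conversation is in, it looks up that stage's instructions. The sketch below is a minimal version of that idea. It assumes the stages are keyed by string IDs "1" to "8". The dict name CONVERSATION_STAGES, the helper stage_for, and the extra "Schedule Demo" stage are hypothetical illustrations, not SalesGPT's confirmed API.

```python
from typing import Dict

# Hypothetical stage table mirroring the list above; the name and the
# string-ID keys are illustrative assumptions, not SalesGPT's own module.
CONVERSATION_STAGES: Dict[str, str] = {
    "1": "Introduction: Start the conversation by introducing yourself and your company.",
    "2": "Qualification: Qualify the prospect by confirming if they are the right person to talk to regarding your product/service.",
    "3": "Value Proposition: Briefly explain how your product/service can benefit the prospect.",
    "4": "Needs Analysis: Ask open-ended questions to uncover the prospect's needs and pain points.",
    "5": "Solution Presentation: Based on the prospect's needs, present your product/service as the solution that can address their pain points.",
    "6": "Objection Handling: Address any objections that the prospect may have regarding your product/service.",
    "7": "Close: Ask for the sale by proposing a next step.",
    "8": "End Conversation: The user does not want to continue the conversation, so end the call.",
}


def stage_for(stage_id: str, stages: Dict[str, str] = CONVERSATION_STAGES) -> str:
    """Return the description for a stage ID.

    Unknown IDs (e.g. unparseable LLM output) fall back to the first stage
    in the table, so the agent always has instructions to follow.
    """
    first_stage = next(iter(stages.values()))
    return stages.get(stage_id.strip(), first_stage)


# Hypothetical custom stage: copy the table and add or override entries.
custom_stages = {**CONVERSATION_STAGES, "9": "Schedule Demo: Offer a time slot for a product demo."}
```

Keeping the stages as data rather than hard-coded prompt text makes it cheap to define your own stages. You only edit the table.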

- Reference only your business information & products and significantly reduce hallucinations!

- The AI Agent can actually close sales by generating Stripe payment links and closing orders from customers.

- The AI Agent can leverage data from any data system (new, old or legacy) via an integration with Mindware.

- Enhance your sales process with automated email communication. SalesGPT can now send personalized emails to prospects, including follow-ups or product information.

- The AI Agent can now facilitate scheduling meetings by generating Calendly links.

- Our integration with LiteLLM lets you use any closed- or open-source LLM with SalesGPT. Thanks to the LiteLLM maintainers for this contribution!

- Synchronous & Asynchronous Completion with LLMs

- Synchronous & Asynchronous Streaming from LLMs

- The Voice AI Sales Agent achieves a round-trip response time of under 1 second with human speakers. This covers the entire pipeline (speech to text, LLM inference, and text to speech) while maintaining stability and scalability.

- Human in the loop: for use cases where the AI sales agent needs human supervision.

- LangSmith tracing: debug, test, evaluate, and monitor chains and intelligent agents built on any LLM framework.

- Upcoming integration with PromptArmor to protect your AI Sales Agents against security vulnerabilities (see our roadmap).
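The "Synchronous & Asynchronous Streaming" items above differ in how chunks are delivered, not in what is delivered:

- A synchronous generator blocks the caller between chunks.
- An asynchronous generator yields control to the event loop between chunks, so other work (for example text-to-speech on earlier chunks) can proceed.

The sketch below illustrates only that difference. It uses a fixed string split into words as a hypothetical stand-in for model output and does not call LiteLLM or any SalesGPT API.

```python
import asyncio
from typing import AsyncIterator, Iterator, List

# Hypothetical stand-in for an LLM reply; a real setup would stream
# chunks from the model provider instead of splitting a fixed string.
REPLY = "Hello, my name is Ted Lasso and I'm calling on behalf of Sleep Haven."


def stream_sync(text: str) -> Iterator[str]:
    """Synchronous streaming: the caller blocks until each chunk is ready."""
    for word in text.split(" "):
        yield word + " "


async def stream_async(text: str) -> AsyncIterator[str]:
    """Asynchronous streaming: awaits between chunks, letting other tasks run."""
    for word in text.split(" "):
        await asyncio.sleep(0)  # simulate waiting on the network
        yield word + " "


async def collect_async(text: str) -> List[str]:
    """Drain the async stream into a list."""
    return [chunk async for chunk in stream_async(text)]


if __name__ == "__main__":
    sync_chunks = list(stream_sync(REPLY))
    async_chunks = asyncio.run(collect_async(REPLY))
    print(sync_chunks == async_chunks)  # same chunks, different delivery model
```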

import os

from salesgpt.agents import SalesGPT
from langchain_community.chat_models import ChatLiteLLM
from dotenv import load_dotenv

load_dotenv()  # make sure you have a .env file with your API keys, e.g., OPENAI_API_KEY=sk-xxx, MINDWARE_API_KEY, etc.

# select your model - we support 50+ LLMs via LiteLLM https://docs.litellm.ai/docs/providers
llm = ChatLiteLLM(temperature=0.4, model_name="gpt-4-0125-preview")

sales_agent = SalesGPT.from_llm(
    llm,
    use_tools=True,
    verbose=False,
    product_catalog="examples/sample_product_catalog.txt",
    salesperson_name="Ted Lasso",
    salesperson_role="Sales Representative",
    company_name="Sleep Haven",
    company_business='''Sleep Haven
is a premium mattress company that provides
customers with the most comfortable and
supportive sleeping experience possible.
We offer a range of high-quality mattresses,
pillows, and bedding accessories
that are designed to meet the unique
needs of our customers.''',
)

sales_agent.seed_agent()
sales_agent.determine_conversation_stage()  # optional for demonstration, built into the prompt

# agent
sales_agent.step()

# user
user_input = input('Your response: ')  # Yea, sure
sales_agent.human_step(user_input)

# agent
sales_agent.determine_conversation_stage()  # optional for demonstration, built into the prompt
sales_agent.step()

# user
user_input = input('Your response: ')  # What pricing do you have for your mattresses?
sales_agent.human_step(user_input)

# agent
sales_agent.determine_conversation_stage()  # optional for demonstration, built into the prompt
sales_agent.step()

Example output:

Conversation Stage: Introduction: Start the conversation by introducing yourself and your company.

Thought: Do I need to use a tool? No

Ted Lasso: Hello, my name is Ted Lasso and I'm calling on behalf of Sleep Haven. We are a premium mattress company that provides customers with the most comfortable and supportive sleeping experience possible. I was wondering if you would be interested in learning more about our products and how they can improve your sleep?

User: Yea, sure

Conversation Stage: Value proposition: Briefly explain how your product/service can benefit the prospect.

Thought: Do I need to use a tool? No

Ted Lasso: Great to hear that! Our mattresses are specially designed to contour to your body shape, providing the perfect level of support and comfort for a better night's sleep. Plus, they're made with high-quality materials that are built to last. Would you like to hear more about our different mattress options?

User: What pricing do you have for your mattresses?

Thought: Do I need to use a tool? Yes --> AI Agent uses tools here.

Action: ProductSearch

Action Input: pricing for mattresses

Observation: Sleep Haven offers three mattresses: the Classic Harmony Spring Mattress for $1,299, the Plush Serenity Bamboo Mattress for $2,599, and the Luxury Cloud-Comfort Memory Foam Mattress for $999. The sizes available vary by mattress.

Thought: Do I need to use a tool? No

Ted Lasso: Sleep Haven offers three mattresses at different price points. The Classic Harmony Spring Mattress is priced at $1,299, the Plush Serenity Bamboo Mattress is priced at $2,599, and the Luxury Cloud-Comfort Memory Foam Mattress is priced at $999. The prices may vary depending on the size you choose. Would you like more information about the specific sizes and features of each mattress?

User: No, I will take two Classic Harmony Spring Mattress please.

Thought: Do I need to use a tool? Yes --> AI Agent uses tools here.

Action: GeneratePaymentLink

Action Input: Classic Harmony Spring Mattress, 2, John Doe

Observation: {"response":"https://buy.stripe.com/test_9AQ6rygc397Bbv228p"}

Thought: Do I need to use a tool? No

Ted Lasso: Fantastic choice! I've generated a payment link for you to order two Classic Harmony Spring Mattresses. You can complete your purchase by clicking on this link: https://buy.stripe.com/test_9AQ6rygc397Bbv228p. Is there anything else I can assist you with today?
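The transcript above follows a Thought / Action / Action Input / Observation text pattern. The sketch below is a hypothetical parser for that format. It assumes "Action:" and "Action Input:" each sit on their own line, and that a direct reply is prefixed with the salesperson's name. It is not SalesGPT's own output parser, whose behavior may differ. The names AgentStep and parse_agent_output are illustrative.

```python
import re
from typing import NamedTuple, Optional

# Matches "Action: <tool>" followed on the next line by "Action Input: <input>".
ACTION_RE = re.compile(r"Action:\s*(?P<tool>[^\n]+?)\s*\n\s*Action Input:\s*(?P<input>[^\n]*)")


class AgentStep(NamedTuple):
    tool: Optional[str]        # tool name, or None when the agent replies directly
    tool_input: Optional[str]  # raw tool input string, e.g. "pricing for mattresses"
    reply: Optional[str]       # text addressed to the prospect


def parse_agent_output(text: str, salesperson_name: str) -> AgentStep:
    """Split raw agent text into either a tool call or a direct reply."""
    match = ACTION_RE.search(text)
    if match:
        return AgentStep(match.group("tool"), match.group("input").strip(), None)
    # No tool call: keep whatever follows "<salesperson_name>:" as the reply.
    _, sep, reply = text.partition(f"{salesperson_name}:")
    return AgentStep(None, None, reply.strip() if sep else text.strip())
```

Returning a small typed record rather than raw strings makes the "tool call or reply" decision explicit for whatever code runs the tool and feeds back the Observation.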

Make sure you have Python >=3.8,<3.12 installed.

Create a virtual environment somewhere on your computer. Our setup uses the generic name "env" for the virtual environment. You can rename it, but then:

- use the new name whenever you work with the environment, and
- rename the VENV variable in the Makefile accordingly, so that make commands work after you clone our repository.

Follow the steps for your operating system:
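To confirm that the interpreter you are about to use falls in this range, you can run a quick check with the standard library. The helper name is_supported is ours, not part of SalesGPT.

```python
import sys
from typing import Tuple

# Supported range stated above: Python >=3.8,<3.12.
MIN_VERSION: Tuple[int, int] = (3, 8)
MAX_EXCLUSIVE: Tuple[int, int] = (3, 12)


def is_supported(version: Tuple[int, int]) -> bool:
    """Return True if (major, minor) falls within [3.8, 3.12)."""
    return MIN_VERSION <= version < MAX_EXCLUSIVE


if __name__ == "__main__":
    current = tuple(sys.version_info[:2])
    status = "supported" if is_supported(current) else "NOT supported"
    print(f"Python {current[0]}.{current[1]} is {status} by SalesGPT")
```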

On Windows:

- Open Command Prompt or PowerShell.

- Navigate to your project directory: cd path\to\your\project

- Create a virtual environment: python -m venv env

- Activate the virtual environment: .\env\Scripts\activate

On macOS/Linux:

- Open Terminal.

- Navigate to your project directory: cd path/to/your/project

- Create a virtual environment: python3 -m venv env

- Activate the virtual environment: source env/bin/activate

To deactivate the virtual environment when you have finished using it, simply run: deactivate

Clone the SalesGPT GitHub repository:
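To check from inside Python whether a virtual environment is active, compare sys.prefix with sys.base_prefix. The two differ inside an environment created with python -m venv. A small sketch follows; the function name in_virtualenv is hypothetical.

```python
import sys
from typing import Optional


def in_virtualenv(prefix: Optional[str] = None, base_prefix: Optional[str] = None) -> bool:
    """Return True when running inside a venv.

    Inside a venv, sys.prefix points at the environment directory while
    sys.base_prefix still points at the base interpreter installation.
    The optional arguments allow checking arbitrary paths.
    """
    prefix = sys.prefix if prefix is None else prefix
    base_prefix = sys.base_prefix if base_prefix is None else base_prefix
    return prefix != base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("environment location:", sys.prefix)
```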

git clone https://github.com/filip-michalsky/SalesGPT.git

Navigate to the repository and, if you used a different venv name, rename the VENV variable in the Makefile:

cd SalesGPT

If you simply want to work with SalesGPT as an end user without local changes you can install from PyPI using:

pip install salesgpt

If you want to work on your own version of SalesGPT or contribute to our open-source version, activate your virtual environment as described above and then run:

make setup

For more detailed installation steps, along with the reasons for each, please see CONTRIBUTING.md.

Finally, to use SalesGPT, create a .env file modeled on our .env.example and add your API keys there, one per line, just as we have done.
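The .env file is plain KEY=VALUE text. The sketch below is a simplified, stdlib-only reader that shows the format and flags required keys that are missing or empty. It is not a replacement for python-dotenv's load_dotenv(), which the quickstart uses and which handles more syntax. Which keys are actually required depends on the features you enable. OPENAI_API_KEY and MINDWARE_API_KEY are taken from the quickstart comment, and the helper names parse_env and missing_keys are hypothetical.

```python
from typing import Dict, Iterable, List

# Simplified .env reader for illustration only; python-dotenv's load_dotenv()
# supports more syntax (quoting rules, escapes, variable expansion).


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(env: Dict[str, str], required: Iterable[str]) -> List[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not env.get(key)]


example = """
# one key per line, as in .env.example
OPENAI_API_KEY=sk-xxx
MINDWARE_API_KEY=
"""
print(missing_keys(parse_env(example), ["OPENAI_API_KEY", "MINDWARE_API_KEY"]))  # ['MINDWARE_API_KEY']
```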

Navigate into the SalesGPT directory:

cd SalesGPT

SalesGPT can be run in various ways, tailored to your preferences and setup. Below are the methods available:

For those who prefer containerization, Docker offers an isolated and consistent environment. Ensure Docker is installed on your system by following the official Docker installation guide.

To run SalesGPT with Docker, execute the following steps:

- Start the Application with Docker Compose:

Use the command below to start SalesGPT in detached mode:

docker-compose up -d

If you've made changes and want them to take effect, append --build to the command above.

- Stopping the Application:

To stop and remove all running containers related to SalesGPT, execute:

docker-compose down

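Troubleshooting:

- Clean Up Docker Resources: If you encounter errors, you can clean up Docker by removing stopped containers, unused networks, dangling images, and unused volumes. Use this with caution: it applies to every Docker project on your machine, not only SalesGPT.

docker system prune --volumes

- Rebuild Without Cache: docker-compose up does not accept --no-cache. To rebuild the images without the build cache and then start the services afresh, run:

docker-compose build --no-cache

docker-compose up -d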

After successful setup, open SalesGPT at http://localhost:3000/chat in your browser.

If Docker is not part of your workflow, you can directly launch the SalesGPT user interface. Please refer to the README.md file in the frontend directory for instructions on setting up the UI locally.

For terminal enthusiasts or automation scripts, run SalesGPT with the following command:

python run.py --verbose True --config examples/example_agent_setup.json
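The command above passes --verbose True and --config as command-line flags. How run.py actually parses them is not shown here. As an assumption-labeled sketch, an argparse setup accepting the same flags could look like the code below. Note the str_to_bool converter: argparse's type=bool would turn the string "False" into True, because any non-empty string is truthy. The function names are hypothetical.

```python
import argparse
import json
from typing import Any, Dict, List, Optional


def str_to_bool(value: str) -> bool:
    """Convert 'True'/'False'-style strings to real booleans."""
    lowered = value.lower()
    if lowered in {"true", "1", "yes"}:
        return True
    if lowered in {"false", "0", "no"}:
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def parse_cli(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse --verbose and --config (illustrative, not run.py's actual code)."""
    parser = argparse.ArgumentParser(description="Run a SalesGPT agent (sketch)")
    parser.add_argument("--verbose", type=str_to_bool, default=False)
    parser.add_argument("--config", type=str, default="", help="path to an agent setup JSON file")
    return parser.parse_args(argv)


def load_agent_config(path: str) -> Dict[str, Any]:
    """Read agent settings (e.g. salesperson_name, company_name) from a JSON file."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```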

For those who wish to integrate SalesGPT's backend with their own user interface or application, running only the backend is a straightforward process. This allows you to leverage the powerful features of SalesGPT while maintaining full control over the user experience.

To run only the backend of SalesGPT, follow these steps:

- Start the Backend Service:

Use the following command to start the backend service. This starts the server on port 8000 by default, making the API accessible:

docker-compose up -d backend

If you need to rebuild the backend image, for example after making changes, add --build to the command above.

- Accessing the Backend:

With the backend running, you can access the API endpoints at http://localhost:8000. Refer to the API documentation for details on available endpoints and their usage.

- Stopping the Backend:

To stop the backend service, execute:

docker-compose stop backend

If you wish to remove the backend container entirely, use the command below. Note that docker-compose down stops and removes the containers of every service in the compose file, not only the backend:

docker-compose down

This setup is ideal for developers looking to integrate SalesGPT's backend into custom applications or those who prefer to use a different frontend technology.
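Before wiring up your own UI, you can confirm that something is listening on port 8000 with a plain TCP check. This sketch deliberately avoids calling any specific endpoint, since the route names are defined in the API documentation. The function name backend_is_listening is hypothetical.

```python
import socket


def backend_is_listening(host: str = "localhost", port: int = 8000, timeout: float = 1.0) -> bool:
    """Return True if a server accepts TCP connections on host:port.

    This only confirms that something is listening; it does not verify
    that the process is the SalesGPT backend or that any route works.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timeout, or unresolvable host
        return False


if __name__ == "__main__":
    print("backend reachable:", backend_is_listening())
```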

To test your setup:

- Activate your environment as described above (run source env/bin/activate on Unix-like systems and .\env\Scripts\activate on Windows; replace env with the name of your virtual environment).

- cd SalesGPT, if you haven't already navigated to the SalesGPT home directory.

- make test

All tests should pass. Warnings can be ignored.

Regular updates are added to the SalesGPT repository. If your code is not working, please check the current README for possible changes to the launch steps. Updates often add extra environment variables that are required for certain functionality, so please also check the current .env.example file.

For any problems you cannot solve, please don't hesitate to reach out to our Discord #support channel.

To delete the virtual environment you used for SalesGPT and your SalesGPT repository from your system, navigate to the directory where you created your virtual environment and cloned SalesGPT, and run:

make clean

We leverage the LangChain library in this implementation, specifically Custom Agent Configuration, and are inspired by the BabyAGI architecture.

LangSmith is a platform for building production-grade LLM applications.

It lets you debug, test, evaluate, and monitor chains and intelligent agents built on any LLM framework and seamlessly integrates with LangChain, the go-to open source framework for building with LLMs.

LangSmith is developed by LangChain, the company behind the open source LangChain framework.

To switch on the LangSmith tracing you have to do the following steps:

- Create a LangSmith account

- Create an API key in settings

- Add your API key and project name from your LangSmith account to the .env file or the run.py module

- Switch on the "LANGCHAIN_TRACING_V2" setting in run.py to "true"

- That's it. You'll get a better understanding of your agents' and chains' performance in the LangSmith dashboard.

For further reading, take a look at the docs.
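If you prefer to set the tracing variables from Python rather than editing .env, you can use a sketch like the one below. It assumes the LANGCHAIN_TRACING_V2, LANGCHAIN_API_KEY and LANGCHAIN_PROJECT variable names that LangChain reads for LangSmith tracing. Set them before the agent runs. The helper name enable_langsmith_tracing is hypothetical, and the optional env argument exists only so the function can be exercised without touching the real environment.

```python
import os
from typing import MutableMapping, Optional


def enable_langsmith_tracing(
    api_key: str,
    project: str,
    env: Optional[MutableMapping[str, str]] = None,
) -> MutableMapping[str, str]:
    """Set the environment variables LangChain reads for LangSmith tracing."""
    env = os.environ if env is None else env
    env["LANGCHAIN_TRACING_V2"] = "true"  # the switch described in the steps above
    env["LANGCHAIN_API_KEY"] = api_key    # API key created in LangSmith settings
    env["LANGCHAIN_PROJECT"] = project    # project name traces are grouped under
    return env
```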

- Improve SalesGPT observability

- Enhance prompt versioning

- Add prompt evaluation

- Write more documentation

- Better code documentation

- Refactor

- Improve reliability of the parser (issue here and here)

- Improve Deployment Instructions

- Calling Functionality - sample code

- Enterprise-Grade Security - integration with PromptArmor to protect your AI Sales Agents against security vulnerabilities

- LLM evaluations

- Resolve tickets and PRs (ongoing)

- Add example implementation of OpenAI functions agent (issue here)

- Add support for multiple tools (issue here)

- Add an agent controller for when stages need to be traversed linearly without skips (issue here)

- Add tool_getter to choose a tool based on vector distance to the tasks needed to be done

- What tools should the agent have? (e.g., the ability to search the internet)

- Add the ability of Sales Agent to interact with AI plugins on your website (.well-known/ai-plugin.json)

- More SalesGPT examples
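The tool_getter roadmap item describes ranking tools by vector distance to the task at hand. The sketch below is a minimal version of that idea under stated assumptions:

- It uses cosine similarity as the closeness measure.
- It uses a toy bag-of-words embedding so it runs without any model.
- The tool descriptions, embed(), and tool_getter() are hypothetical, not the planned implementation.

In a real setup you would replace embed() with an embedding model and cache the tool vectors.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical tool descriptions; real ones would come from the agent's tools.
TOOLS: Dict[str, str] = {
    "ProductSearch": "look up products prices and sizes in the product catalog",
    "GeneratePaymentLink": "create a stripe payment link to buy a product",
    "SendEmail": "send a follow up email to the prospect",
}


def embed(text: str) -> Counter:
    """Toy bag-of-words vector so the sketch runs without external models."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse count vectors (1.0 = same direction)."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def tool_getter(task: str, tools: Dict[str, str] = TOOLS, k: int = 1) -> List[str]:
    """Return the k tool names whose descriptions are most similar to the task."""
    query = embed(task)
    ranked = sorted(tools, key=lambda name: cosine(query, embed(tools[name])), reverse=True)
    return ranked[:k]
```

Ranking by similarity, rather than exposing every tool to the LLM, keeps the prompt short when the number of tools grows.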

Lead Maintainer: Filip Michalsky

- Contact Email

- Follow us on X at @FilipMichalsky

Our Support Team:

- AI Engineering: Honza Michna (LinkedIn)

- Software Engineer: Illia Makovoz

Contributions are highly encouraged! Please fork and submit a PR.


Alternative AI tools for SalesGPT

Similar Open Source Tools


AutoGPT

AutoGPT is a revolutionary tool that empowers everyone to harness the power of AI. With AutoGPT, you can effortlessly build, test, and delegate tasks to AI agents, unlocking a world of possibilities. Our mission is to provide the tools you need to focus on what truly matters: innovation and creativity.

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

OpenCopilot

OpenCopilot allows you to have your own product's AI copilot. It integrates with your underlying APIs and can execute API calls whenever needed. It uses LLMs to determine if the user's request requires calling an API endpoint. Then, it decides which endpoint to call and passes the appropriate payload based on the given API definition.

merlinn

Merlinn is an open-source AI-powered on-call engineer that automatically jumps into incidents & alerts, providing useful insights and RCA in real time. It integrates with popular observability tools, lives inside Slack, offers an intuitive UX, and prioritizes security. Users can self-host Merlinn, use it for free, and benefit from automatic RCA, Slack integration, integrations with various tools, intuitive UX, and security features.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

CyberScraper-2077

CyberScraper 2077 is an advanced web scraping tool powered by AI, designed to extract data from websites with precision and style. It offers a user-friendly interface, supports multiple data export formats, operates in stealth mode to avoid detection, and promises lightning-fast scraping. The tool respects ethical scraping practices, including robots.txt and site policies. With upcoming features like proxy support and page navigation, CyberScraper 2077 is a futuristic solution for data extraction in the digital realm.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

devika

Devika is an advanced AI software engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika utilizes large language models, planning and reasoning algorithms, and web browsing abilities to intelligently develop software. Devika aims to revolutionize the way we build software by providing an AI pair programmer who can take on complex coding tasks with minimal human guidance. Whether you need to create a new feature, fix a bug, or develop an entire project from scratch, Devika is here to assist you.

nobodywho

NobodyWho is a plugin for the Godot game engine that enables interaction with local LLMs for interactive storytelling. Users can install it from Godot editor or GitHub releases page, providing their own LLM in GGUF format. The plugin consists of `NobodyWhoModel` node for model file, `NobodyWhoChat` node for chat interaction, and `NobodyWhoEmbedding` node for generating embeddings. It offers a programming interface for sending text to LLM, receiving responses, and starting the LLM worker.

open-source-slack-ai

This repository provides a ready-to-run basic Slack AI solution that allows users to summarize threads and channels using OpenAI. Users can generate thread summaries, channel overviews, channel summaries since a specific time, and full channel summaries. The tool is powered by GPT-3.5-Turbo and an ensemble of NLP models. It requires Python 3.8 or higher, an OpenAI API key, Slack App with associated API tokens, Poetry package manager, and ngrok for local development. Users can customize channel and thread summaries, run tests with coverage using pytest, and contribute to the project for future enhancements.

SolidGPT

SolidGPT is an AI searching assistant for developers that helps with code and workspace semantic search. It provides features such as talking to your codebase, asking questions about your codebase, semantic search and summary in Notion, and getting questions answered from your codebase and Notion without context switching. The tool ensures data safety by not collecting users' data and uses the OpenAI series model API.

obsidian-chat-cbt-plugin

ChatCBT is an AI-powered journaling assistant for Obsidian, inspired by cognitive behavioral therapy (CBT). It helps users reframe negative thoughts and rewire reactions to distressful situations. The tool provides kind and objective responses to uncover negative thinking patterns, store conversations privately, and summarize reframed thoughts. Users can choose between a cloud-based AI service (OpenAI) or a local and private service (Ollama) for handling data. ChatCBT is not a replacement for therapy but serves as a journaling assistant to help users gain perspective on their problems.

For similar tasks

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.


J.A.R.V.I.S.

J.A.R.V.I.S.1.0 is an advanced virtual assistant tool designed to assist users in various tasks. It provides a wide range of functionalities including voice commands, task automation, information retrieval, and communication management. With its intuitive interface and powerful capabilities, J.A.R.V.I.S.1.0 aims to enhance productivity and streamline daily activities for users.

pocketpal-ai

PocketPal AI is a versatile virtual assistant tool designed to streamline daily tasks and enhance productivity. It leverages artificial intelligence technology to provide personalized assistance in managing schedules, organizing information, setting reminders, and more. With its intuitive interface and smart features, PocketPal AI aims to simplify users' lives by automating routine activities and offering proactive suggestions for optimal time management and task prioritization.

Call

Call is an open-source AI-native alternative to Google Meet and Zoom, offering video calling, team collaboration, contact management, meeting scheduling, AI-powered features, security, and privacy. It is cross-platform, web-based, mobile responsive, and supports offline capabilities. The tech stack includes Next.js, TypeScript, Tailwind CSS, Mediasoup-SFU, React Query, Zustand, Hono, PostgreSQL, Drizzle ORM, Better Auth, Turborepo, Docker, Vercel, and Rate Limiting.

groundup-toolkit

GroundUp Toolkit is an open-source automation toolkit designed for venture capital teams to streamline deal flow, meeting management, CRM updates, and team communication through an AI assistant connected via WhatsApp. It offers various skills such as meeting reminders, meeting bot, deal automation, deck analyzer, VC automation, ping teammate, Google Workspace operations, LinkedIn profile research, keep on radar feature, and deal logger. Additionally, it includes operational scripts for health check, WhatsApp watchdog, and Shabbat-aware scheduler to ensure smooth automation processes. The toolkit's architecture involves WhatsApp, OpenClaw, and various skills and scripts for seamless automation. It requires Ubuntu 22.04+ server, Node.js 18+, and Python 3.10+ for installation and operation.

For similar jobs


qrev

QRev is an open-source alternative to Salesforce, offering AI agents to scale sales organizations infinitely. It aims to provide digital workers for various sales roles or a superagent named Qai. The tech stack includes TypeScript for frontend, NodeJS for backend, MongoDB for app server database, ChromaDB for vector database, SQLite for AI server SQL relational database, and Langchain for LLM tooling. The tool allows users to run client app, app server, and AI server components. It requires Node.js and MongoDB to be installed, and provides detailed setup instructions in the README file.

wppconnect

WPPConnect is an open source project developed by the JavaScript community with the aim of exporting functions from WhatsApp Web to Node. These functions can be used to support the creation of any interaction, such as customer service, media sending, phrase recognition based on artificial intelligence, and many other things.

linkedin-api

The Linkedin API for Python allows users to programmatically search profiles, send messages, and find jobs using a regular Linkedin user account. It does not require 'official' API access, just a valid Linkedin account. However, it is important to note that this library is not officially supported by LinkedIn and using it may violate LinkedIn's Terms of Service. Users can authenticate using any Linkedin account credentials and access features like getting profiles, profile contact info, and connections. The library also provides commercial alternatives for extracting data, scraping public profiles, and accessing a full LinkedIn API. It is not endorsed or supported by LinkedIn and is intended for educational purposes and personal use only.

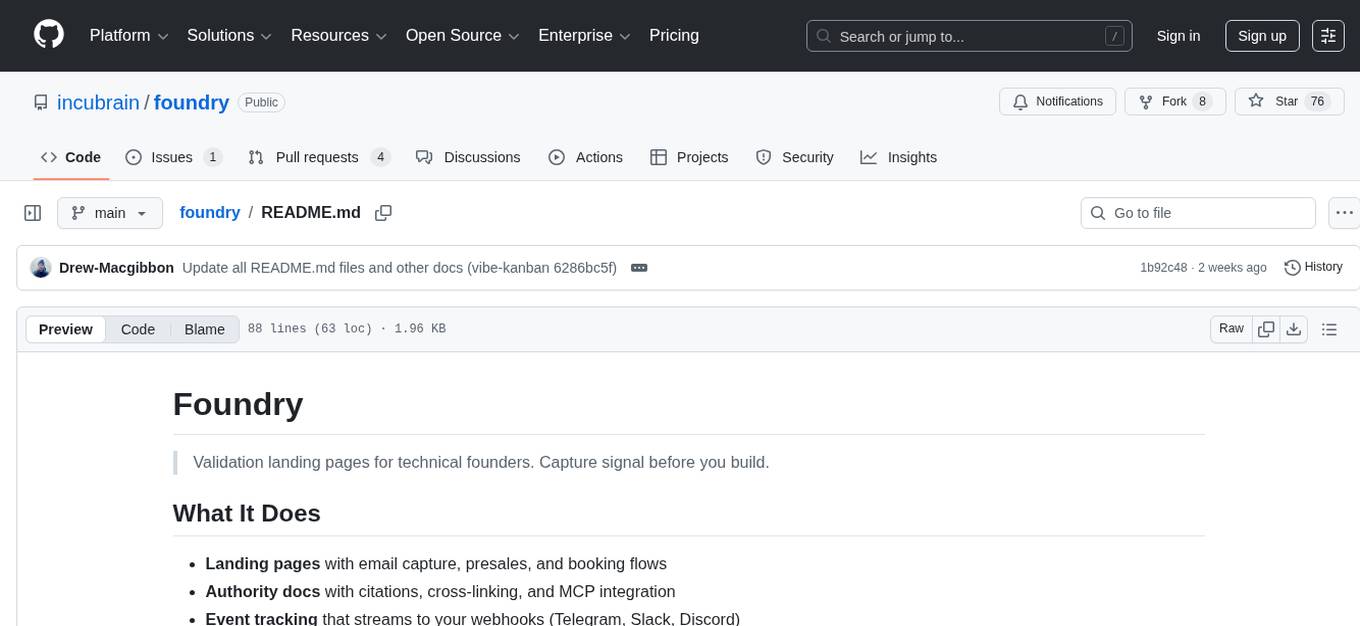

foundry

Foundry is a tool designed for technical founders to create validation landing pages. It allows users to capture email signals before building, with features such as landing pages, authority docs, event tracking, and zero lock-in. The tool is built using Nuxt 4, Tailwind v4, and TypeScript, and provides quick start options for easy setup. Foundry helps founders test interest, collect payments, and sell services before fully developing their products.

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.