Call

open-source AI-native alternative to Google Meet and Zoom.

Stars: 395

Call is an open-source AI-native alternative to Google Meet and Zoom, offering video calling, team collaboration, contact management, meeting scheduling, AI-powered features, security, and privacy. It is cross-platform, web-based, mobile responsive, and supports offline capabilities. The tech stack includes Next.js, TypeScript, Tailwind CSS, Mediasoup-SFU, React Query, Zustand, Hono, PostgreSQL, Drizzle ORM, Better Auth, Turborepo, Docker, Vercel, and Rate Limiting.

README:

An Open-source AI-native alternative to Google Meet and Zoom

Ready to escape complex, data-hungry meeting apps? Join the future of video calling.

- Web-based - works on any modern browser

- Mobile responsive design

- Progressive Web App (PWA) support

- Offline capabilities for basic features

- Next.js 15 - React framework with App Router

- TypeScript - Type-safe development

- Tailwind CSS - Utility-first styling

- shadcn/ui - Beautiful component library

- Mediasoup-SFU - Scalable Selective Forwarding Unit for real-time audio/video conferencing

- React Query - Server state management

- Zustand - For Distributed state management

- Hono - Fast web framework

- PostgreSQL - Reliable database

- Drizzle ORM - Type-safe database queries

- Better Auth - Authentication system

- Turborepo - Monorepo build system

- Docker - Containerization

- Vercel - Deployment platform

- Rate Limiting - API protection

- Node.js 20 or higher

- pnpm package manager

- Docker and Docker Compose

- Git

- Microsoft Visual C++ Redistributable for Visual Studio 2022 (For Windows)

-

Clone the repository

git clone https://github.com/joincalldotco/call.git cd call

2.1 Start the development environment

./setup-dev.sh2.2 Start the development environment (Windows)

./setup_dev_windows.shThis script will automatically:

- Create a

.envfile if it doesn't exist - Install dependencies if needed

- Start Docker services (PostgreSQL)

- Wait for the database to be ready

- Start the development environment

Note: If you encounter any issues during setup, check the ERRORS.md file for troubleshooting guidance.

-

Open your browser

- Web app: http://localhost:3000

- Server: http://localhost:1284

Create a .env file in the root directory with the following variables:

# Database Configuration

DATABASE_URL=postgresql://postgres:postgres@localhost:5434/call

# Google OAuth (for authentication)

GOOGLE_CLIENT_ID=your_google_client_id

GOOGLE_CLIENT_SECRET=your_google_client_secret

# Email Configuration (for notifications)

EMAIL_FROM=[email protected]

RESEND_API_KEY=your_resend_api_key

# App URLs

FRONTEND_URL=http://localhost:3000

BACKEND_URL=http://localhost:1284

# App Configuration

NODE_ENV=development

go to https://www.better-auth.com/docs/installation and click on 'Generate Secret'.

BETTER_AUTH_SECRET=your_generated_secretThe project uses Docker Compose to run PostgreSQL:

# Start services

pnpm docker:up

# Stop services

pnpm docker:down

# Clean up (removes volumes)

pnpm docker:cleancall/

├── apps/ # Applications

│ ├── web/ # Next.js web application

│ │ ├── app/ # App Router pages

│ │ │ ├── (app)/ # Main app routes

│ │ │ ├── (auth)/ # Authentication routes

│ │ │ └── (waitlist)/ # Landing page

│ │ ├── components/ # React components

│ │ ├── lib/ # Utilities and configs

│ │ └── public/ # Static assets

│ └── server/ # Hono backend API

│ ├── routes/ # API routes

│ ├── config/ # Server configuration

│ ├── utils/ # Server utilities

│ └── validators/ # Request validation

├── packages/ # Shared packages

│ ├── auth/ # Authentication utilities

│ ├── db/ # Database schemas and migrations

│ ├── ui/ # Shared UI components (shadcn/ui)

│ ├── eslint-config/ # ESLint configuration

│ └── typescript-config/ # TypeScript configuration

├── docker-compose.yml # Docker services

├── setup-dev.sh # Development setup script

└── turbo.json # Turborepo configuration

# Development

pnpm dev # Start all applications

pnpm dev --filter web # Start only web app

pnpm dev --filter server # Start only server

# Building

pnpm build # Build all packages

pnpm build --filter web # Build only web app

# Linting & Formatting

pnpm lint # Lint all packages

pnpm lint:fix # Fix linting issues

pnpm format # Check formatting

pnpm format:fix # Fix formatting

# Database

pnpm db:generate # Generate database types

pnpm db:migrate # Run database migrations

pnpm db:push # Push schema changes

pnpm db:studio # Open database studio

# Docker

pnpm docker:up # Start Docker services

pnpm docker:down # Stop Docker services

pnpm docker:clean # Clean up Docker volumesWe use pnpm workspaces to manage this monorepo:

# Install a dependency in a specific workspace

pnpm add <package> --filter <workspace-name>

# Install a dependency in all workspaces

pnpm add -w <package>

# Link a local package in another workspace

pnpm add @call/<package-name> --filter <workspace-name> --workspace-

Create a feature branch

git checkout -b feature/your-feature-name

-

Make your changes following our coding standards

-

Run checks before committing

pnpm lint # Lint all packages pnpm build # Build all packages

-

Commit your changes using conventional commits:

feat: add new feature fix: resolve bug docs: update documentation chore: update dependencies refactor: improve code structure test: add tests ui: for ui changes -

Push and create a pull request

- Place shared code in

packages/ - Keep applications in

apps/ - Use consistent naming conventions:

- Applications:

@call/app-name - Packages:

@call/package-name

- Applications:

This project adheres to a Code of Conduct. By participating, you are expected to uphold this code.

- All contributors who help make Call better every day

- Discord: Join our community

- Email: [email protected]

- Twitter: @joincalldotco

Made with ❤️ by the Call team

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Call

Similar Open Source Tools

Call

Call is an open-source AI-native alternative to Google Meet and Zoom, offering video calling, team collaboration, contact management, meeting scheduling, AI-powered features, security, and privacy. It is cross-platform, web-based, mobile responsive, and supports offline capabilities. The tech stack includes Next.js, TypeScript, Tailwind CSS, Mediasoup-SFU, React Query, Zustand, Hono, PostgreSQL, Drizzle ORM, Better Auth, Turborepo, Docker, Vercel, and Rate Limiting.

GitVizz

GitVizz is an AI-powered repository analysis tool that helps developers understand and navigate codebases quickly. It transforms complex code structures into interactive documentation, dependency graphs, and intelligent conversations. With features like interactive dependency graphs, AI-powered code conversations, advanced code visualization, and automatic documentation generation, GitVizz offers instant understanding and insights for any repository. The tool is built with modern technologies like Next.js, FastAPI, and OpenAI, making it scalable and efficient for analyzing large codebases. GitVizz also provides a standalone Python library for core code analysis and dependency graph generation, offering multi-language parsing, AST analysis, dependency graphs, visualizations, and extensibility for custom applications.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

AgriTech

AgriTech is an AI-powered smart agriculture platform designed to assist farmers with crop recommendations, yield prediction, plant disease detection, and community-driven collaboration—enabling sustainable and data-driven farming practices. It offers AI-driven decision support for modern agriculture, early-stage plant disease detection, crop yield forecasting using machine learning models, and a collaborative ecosystem for farmers and stakeholders. The platform includes features like crop recommendation, yield prediction, disease detection, an AI chatbot for platform guidance and agriculture support, a farmer community, and shopkeeper listings. AgriTech's AI chatbot provides comprehensive support for farmers with features like platform guidance, agriculture support, decision making, image analysis, and 24/7 support. The tech stack includes frontend technologies like HTML5, CSS3, JavaScript, backend technologies like Python (Flask) and optional Node.js, machine learning libraries like TensorFlow, Scikit-learn, OpenCV, and database & DevOps tools like MySQL, MongoDB, Firebase, Docker, and GitHub Actions.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

pluely

Pluely is a versatile and user-friendly tool for managing tasks and projects. It provides a simple interface for creating, organizing, and tracking tasks, making it easy to stay on top of your work. With features like task prioritization, due date reminders, and collaboration options, Pluely helps individuals and teams streamline their workflow and boost productivity. Whether you're a student juggling assignments, a professional managing multiple projects, or a team coordinating tasks, Pluely is the perfect solution to keep you organized and efficient.

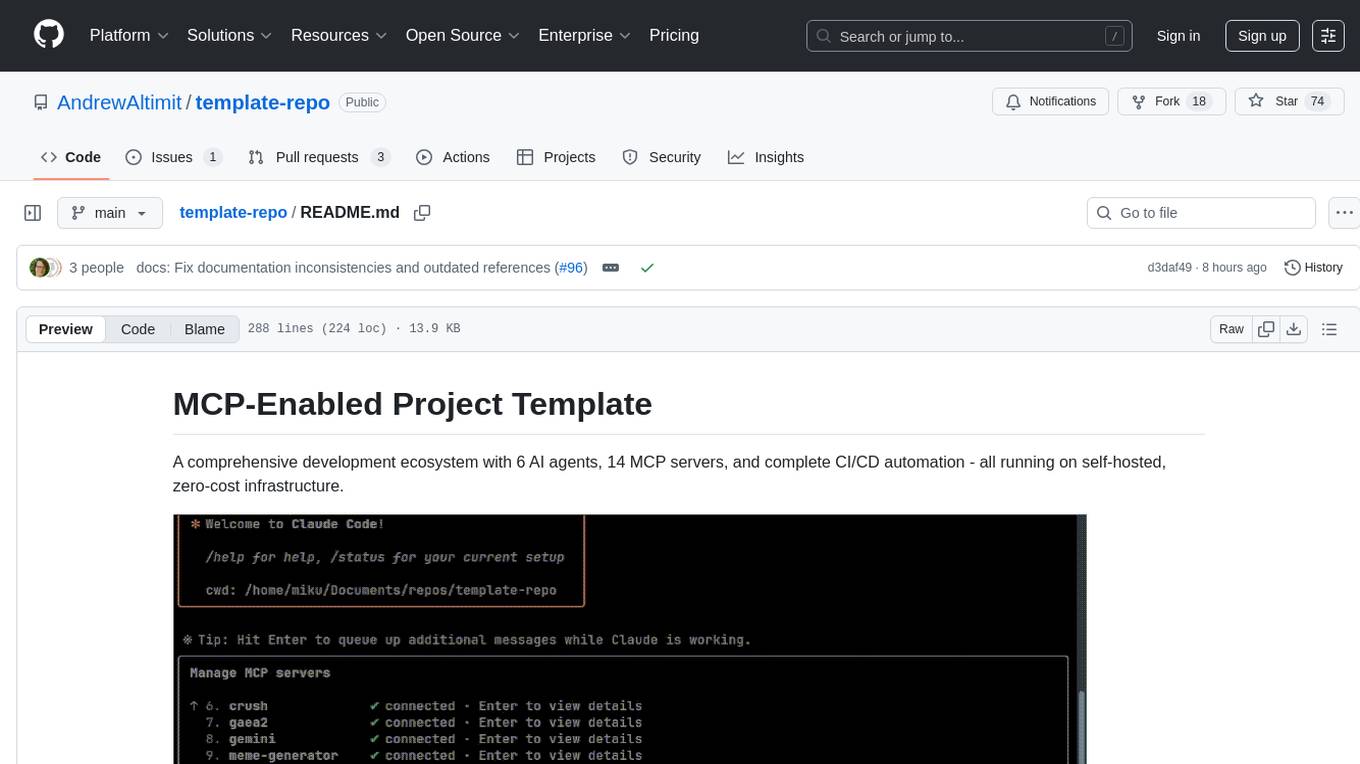

template-repo

The template-repo is a comprehensive development ecosystem with 6 AI agents, 14 MCP servers, and complete CI/CD automation running on self-hosted, zero-cost infrastructure. It follows a container-first approach, with all tools and operations running in Docker containers, zero external dependencies, self-hosted infrastructure, single maintainer design, and modular MCP architecture. The repo provides AI agents for development and automation, features 14 MCP servers for various tasks, and includes security measures, safety training, and sleeper detection system. It offers features like video editing, terrain generation, 3D content creation, AI consultation, image generation, and more, with a focus on maximum portability and consistency.

ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

Archon

Archon is an AI meta-agent designed to autonomously build, refine, and optimize other AI agents. It serves as a practical tool for developers and an educational framework showcasing the evolution of agentic systems. Through iterative development, Archon demonstrates the power of planning, feedback loops, and domain-specific knowledge in creating robust AI agents.

chat-ollama

ChatOllama is an open-source chatbot based on LLMs (Large Language Models). It supports a wide range of language models, including Ollama served models, OpenAI, Azure OpenAI, and Anthropic. ChatOllama supports multiple types of chat, including free chat with LLMs and chat with LLMs based on a knowledge base. Key features of ChatOllama include Ollama models management, knowledge bases management, chat, and commercial LLMs API keys management.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

claude-code-mastery

Claude Code Mastery is a comprehensive tool for maximizing Claude Code, offering a production-ready project template with 16 slash commands, deterministic hook enforcement, MongoDB wrapper, live AI monitoring, and three-layer security. It provides a security gatekeeper, project scaffolding blueprint, MCP server integration, workflow automation through custom commands, and emphasizes the importance of single-purpose chats to avoid performance degradation.

bifrost

Bifrost is a high-performance AI gateway that unifies access to multiple providers through a single OpenAI-compatible API. It offers features like automatic failover, load balancing, semantic caching, and enterprise-grade functionalities. Users can deploy Bifrost in seconds with zero configuration, benefiting from its core infrastructure, advanced features, enterprise and security capabilities, and developer experience. The repository structure is modular, allowing for maximum flexibility. Bifrost is designed for quick setup, easy configuration, and seamless integration with various AI models and tools.

openwhispr

OpenWhispr is an open source desktop dictation application that converts speech to text using OpenAI Whisper. It features both local and cloud processing options for maximum flexibility and privacy. The application supports multiple AI providers, customizable hotkeys, agent naming, and various AI processing models. It offers a modern UI built with React 19, TypeScript, and Tailwind CSS v4, and is optimized for speed using Vite and modern tooling. Users can manage settings, view history, configure API keys, and download/manage local Whisper models. The application is cross-platform, supporting macOS, Windows, and Linux, and offers features like automatic pasting, draggable interface, global hotkeys, and compound hotkeys.

For similar tasks

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing a user-friendly interface for AI copilot assistance on Windows, Mac, and Linux. It offers features like local data storage, multiple LLM provider support, image generation with Dall-E-3, enhanced prompting, keyboard shortcuts, and more. Users can collaborate, access the tool on various platforms, and enjoy multilingual support. Chatbox is constantly evolving with new features to enhance the user experience.

singulatron

Singulatron is an AI Superplatform that runs on your computer(s) and server(s) without using third party APIs, providing complete control over data and privacy. It offers AI functionality, user management, supports different database backends, collaboration, and mini-apps. It aims to be a desktop app for local usage and a distributed daemon for servers, with a web app frontend client. The tool is stack-based on Electron, Angular, and Go, and currently dual-licensed under AGPL-3.0-or-later and a commercial license.

helicone

Helicone is an open-source observability platform designed for Language Learning Models (LLMs). It logs requests to OpenAI in a user-friendly UI, offers caching, rate limits, and retries, tracks costs and latencies, provides a playground for iterating on prompts and chat conversations, supports collaboration, and will soon have APIs for feedback and evaluation. The platform is deployed on Cloudflare and consists of services like Web (NextJs), Worker (Cloudflare Workers), Jawn (Express), Supabase, and ClickHouse. Users can interact with Helicone locally by setting up the required services and environment variables. The platform encourages contributions and provides resources for learning, documentation, and integrations.

vidur

Vidur is an open-source next-gen Recruiting OS that offers an intuitive and modern interface for forward-thinking companies to efficiently manage their recruitment processes. It combines advanced candidate profiles, team workspace, plugins, and one-click apply features. The project is under active development, and contributors are welcome to join by addressing open issues. To ensure privacy, security issues should be reported via email to [email protected].

postiz-app

Postiz is an ultimate AI social media scheduling tool that offers everything you need to manage your social media posts, build an audience, capture leads, and grow your business. It allows you to schedule posts with AI features, measure work with analytics, collaborate with team members, and invite others to comment and schedule posts. The tech stack includes NX (Monorepo), NextJS (React), NestJS, Prisma, Redis, and Resend for email notifications.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing features like local data storage, multiple LLM provider support, image generation, enhanced prompting, keyboard shortcuts, and more. It offers a user-friendly interface with dark theme, team collaboration, cross-platform availability, web version access, iOS & Android apps, multilingual support, and ongoing feature enhancements. Developed for prompt and API debugging, it has gained popularity for daily chatting and professional role-playing with AI assistance.

lightfriend

Lightfriend is a lightweight and user-friendly tool designed to assist developers in managing their GitHub repositories efficiently. It provides a simple and intuitive interface for users to perform various repository-related tasks, such as creating new repositories, managing branches, and reviewing pull requests. With Lightfriend, developers can streamline their workflow and collaborate more effectively with team members. The tool is designed to be easy to use and requires minimal setup, making it ideal for developers of all skill levels. Whether you are a beginner looking to get started with GitHub or an experienced developer seeking a more efficient way to manage your repositories, Lightfriend is the perfect companion for your GitHub workflow.

LlamaBot

LlamaBot is an open-source AI coding agent that rapidly builds MVPs, prototypes, and internal tools. It works for non-technical founders, product teams, and engineers by generating working prototypes, embedding AI directly into the app, and running real workflows. Unlike typical codegen tools, LlamaBot can embed directly in your app and run real workflows, making it ideal for collaborative software building where founders guide the vision, engineers stay in control, and AI fills the gap. LlamaBot is built for moving ideas fast, allowing users to prototype an AI MVP in a weekend, experiment with workflows, and collaborate with teammates to bridge the gap between non-technical founders and engineering teams.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.