pluely

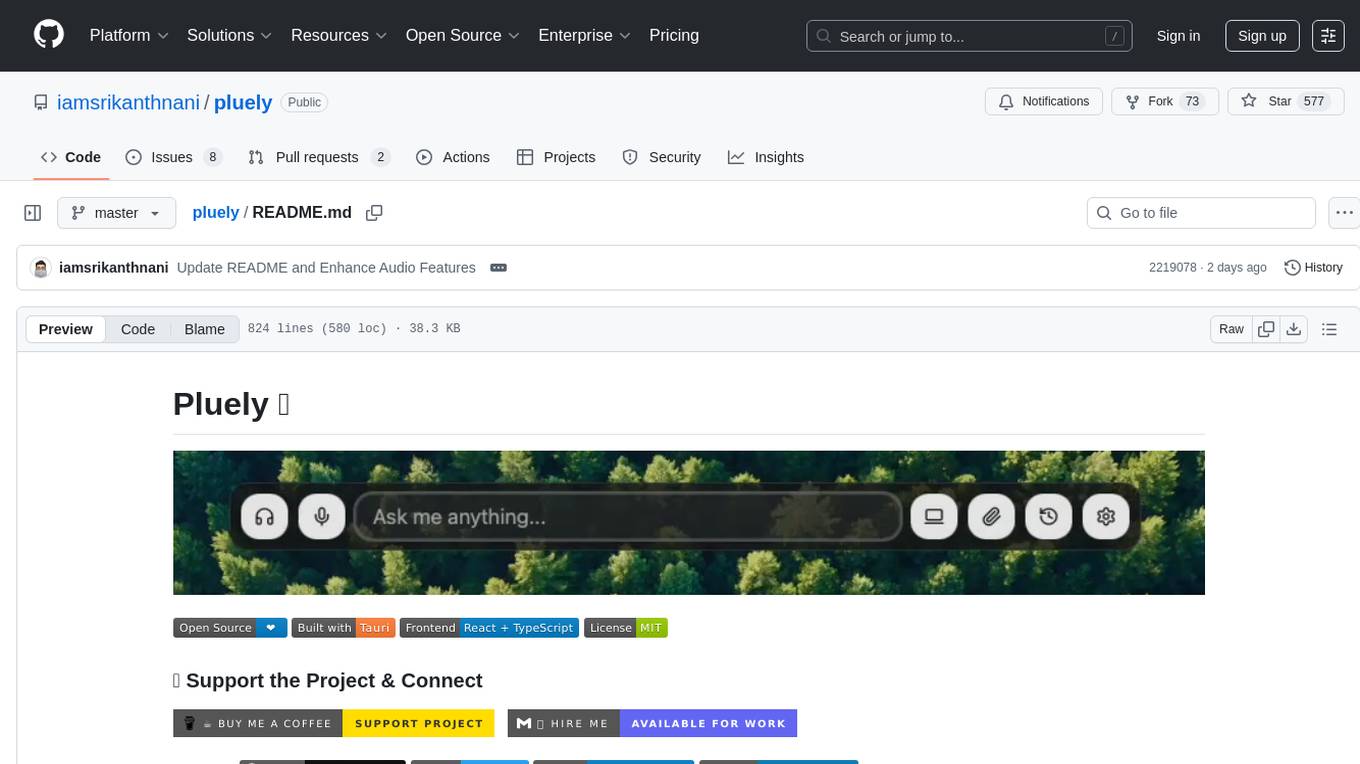

The Open Source Alternative to Cluely - A lightning-fast, privacy-first AI assistant that works seamlessly during meetings, interviews, and conversations without anyone knowing. Built with Tauri for native performance, just 10MB. Completely undetectable in video calls, screen shares, and recordings.

Stars: 687

Pluely is an open source desktop AI assistant built with Tauri. It runs as a translucent, always-on-top overlay and accepts text, voice, screenshot, and system audio input, which it sends to the LLM and speech-to-text providers you configure. Chat history and API keys are stored locally, and the app ships as a roughly 10MB binary for macOS, Windows, and Linux.

README:

The Open Source Alternative to Cluely - A lightning-fast, privacy-first AI assistant that works seamlessly during meetings, interviews, and conversations without anyone knowing.

This is the open source version of the $15M company Cluely 🎯. Experience the same powerful real-time AI assistance, but with complete transparency, privacy, and customization control.

Available formats: .dmg (macOS) • .msi (Windows) • .exe (Windows) • .deb (Linux) • .rpm (Linux) • .AppImage (Linux)

The world's most efficient AI assistant that lives on your desktop

| 🪶 Ultra Lightweight | 📺 Always Visible | ⚡ Instant Access |
|---|---|---|
| Only ~10MB total app size | Translucent overlay on any window | One click to activate AI assistance |
| 27x smaller than Cluely (~270MB) | Always on top, never intrusive | Overlaps seamlessly with your workflow |
| 50% less compute power usage | Perfect transparency level | Ready when you need it most |

| Feature | 🟢 Pluely (Open Source) | 🔴 Original Cluely |
|---|---|---|
| App Size | ~10MB ⚡ | ~270MB 🐌 |
| Size Difference | 27x Smaller 🪶 | Bloated with unnecessary overhead |
| Compute Usage | 50% Less CPU/RAM 💚 | Heavy resource consumption |
| Startup Time | <100ms ⚡ | Several seconds |
| Privacy | 100% Local with your LLM 🔒 | Data sent to servers |
| Cost | Free & Open Source 💝 | $15M company pricing 💸 |

Pluely comes with powerful global keyboard shortcuts that work from anywhere on your system:

| Shortcut | macOS | Windows/Linux | Function |
|---|---|---|---|
| Toggle Window | `Cmd + \` | `Ctrl + \` | Show/Hide the main window + app icon (based on settings) |
| Voice Input | `Cmd + Shift + A` | `Ctrl + Shift + A` | Start voice recording |
| Screenshot | `Cmd + Shift + S` | `Ctrl + Shift + S` | Capture screenshot |
| System Audio | `Cmd + Shift + M` | `Ctrl + Shift + M` | Toggle system audio capture |
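
The shortcut table can be modeled as a small per-platform lookup. This is only a sketch, not Pluely's actual code: the `ShortcutAction` names, the `SHORTCUTS` map, and `shortcutFor` are hypothetical and simply mirror the table.

```typescript
// Hypothetical model of the shortcut table; names are illustrative only.
type Platform = "macos" | "windows" | "linux";
type ShortcutAction = "toggleWindow" | "voiceInput" | "screenshot" | "systemAudio";

// Key combination without the platform modifier (Cmd on macOS, Ctrl elsewhere).
const SHORTCUTS: Record<ShortcutAction, string> = {
  toggleWindow: "\\",
  voiceInput: "Shift + A",
  screenshot: "Shift + S",
  systemAudio: "Shift + M",
};

// Build the display string for an action on a given platform.
function shortcutFor(action: ShortcutAction, platform: Platform): string {
  const modifier = platform === "macos" ? "Cmd" : "Ctrl";
  return `${modifier} + ${SHORTCUTS[action]}`;
}
```

For example, `shortcutFor("voiceInput", "linux")` yields `Ctrl + Shift + A`.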

Note: Pluely system audio capture is actively in development and improving.

Everything Your Computer Plays: Transform any audio on your system into an intelligent AI assistant with real-time transcription and contextual help:

- Real-Time AI Assistant: 8 specialized modes for meetings, interviews, presentations, learning, and more

- Background Processing: Captures system audio continuously with smart context detection

- Multi-Scenario Support: Meeting insights, interview help, translation, presentation coaching, and learning assistance

- Cross-Platform: Works on macOS, Windows, and Linux with platform-specific audio routing

- Settings Control: Toggle system audio capture in Settings → Audio section

- AI Integration: Seamlessly connects transcription & completion to AI models for instant responses

🔒 Security by Design: Operating systems block direct system audio access for privacy protection - this is universal across all platforms and applications.

⚙️ Quick Setup Process:

- macOS: Install BlackHole (free) + Audio MIDI Setup (5 min)

- Windows: Enable built-in Stereo Mix OR install VB-Cable (free) (3 min)

- Linux: Use built-in PulseAudio monitors (usually works out-of-box)

📖 Complete Audio Setup Guide • Step-by-step for all platforms

Voice Input: Transform your speech into an intelligent AI assistant with real-time transcription and contextual help:

- Voice Activity Detection: Uses advanced VAD technology for automatic speech detection

- Real-Time Processing: Instant speech-to-text conversion with immediate AI responses

- Keyboard Shortcuts: Quick voice input with `Cmd+Shift+A` (macOS) or `Ctrl+Shift+A` (Windows/Linux)

- No Setup Required: Works immediately on any platform without additional configuration

- Multi-STT Provider Support: Choose from OpenAI Whisper, Groq, ElevenLabs, Google, Deepgram, and more

- AI Integration: Seamlessly connects transcription to AI models for instant contextual responses
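
To give a feel for what voice activity detection does, here is a deliberately naive sketch. It is not the detector Pluely uses (the README credits @ricky0123/vad-react); it swaps in a simple RMS energy threshold, and `detectSpeech`, `threshold`, and `hangoverFrames` are hypothetical names and values chosen for illustration.

```typescript
// Naive energy-based voice activity detection (illustrative only).
// Assumption: audio arrives as frames of PCM samples in the range [-1, 1].

// Root-mean-square energy of one frame.
function rms(frame: number[]): number {
  if (frame.length === 0) return 0;
  let sumSquares = 0;
  for (const s of frame) sumSquares += s * s;
  return Math.sqrt(sumSquares / frame.length);
}

interface SpeechSegment {
  startFrame: number; // inclusive
  endFrame: number; // exclusive
}

// A frame counts as speech when its RMS exceeds `threshold`. A segment closes
// after `hangoverFrames` consecutive quiet frames, so short pauses do not split it.
function detectSpeech(frames: number[][], threshold = 0.02, hangoverFrames = 2): SpeechSegment[] {
  const segments: SpeechSegment[] = [];
  let start = -1;
  let quiet = 0;
  frames.forEach((frame, i) => {
    if (rms(frame) > threshold) {
      if (start < 0) start = i;
      quiet = 0;
    } else if (start >= 0) {
      quiet += 1;
      if (quiet >= hangoverFrames) {
        segments.push({ startFrame: start, endFrame: i - quiet + 1 });
        start = -1;
        quiet = 0;
      }
    }
  });
  // Close a segment that is still open when the audio ends.
  if (start >= 0) segments.push({ startFrame: start, endFrame: frames.length - quiet });
  return segments;
}
```

Each detected segment would then be handed to the configured speech-to-text provider. Model-based detectors are far more robust to background noise than this threshold.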

Smooth, instant input that appears first for quick AI help - paste any images directly:

Experience the seamless input flow that prioritizes speed and simplicity:

| Input | Paste image |
|---|---|
| Ultra-smooth text input that appears instantly for immediate AI assistance | Paste any images directly for instant AI analysis and contextual help |

- Instant Focus: Input automatically appears first whenever you show the window

- Smooth Experience: Ultra-responsive text input without any lag or delays

- Image Pasting: Paste any images directly from clipboard for instant AI analysis

- Quick Access: Always ready for immediate help with seamless hide/show transitions

- Simple Interface: Clean, distraction-free input that prioritizes speed

- Smart Positioning: Input field stays focused for continuous conversation flow

Capture and analyze screenshots with intelligent auto/manual modes for instant AI assistance:

Experience two powerful screenshot modes that adapt to your workflow:

| Manual Mode Screenshot |
|---|
| Perfect for coding challenges - capture LeetCode problems and get step-by-step solutions with your custom prompts |

| Auto Mode Screenshot |
|---|
| Ideal for a single image - automatically analyze image content with your predefined AI prompt for instant insights |

- Manual Mode: Capture multiple screenshots, then submit them for AI analysis with a prompt

- Auto Mode: Screenshots automatically submit to AI using your saved custom prompt with instant analysis

- Shortcuts: Capture screenshots with `Cmd+Shift+S` (macOS) or `Ctrl+Shift+S` (Windows/Linux)

- Smart Configuration: Toggle between modes in Settings → Screenshot Configuration

- Custom Prompts: Define your auto-analysis prompt for consistent, personalized AI responses

- Seamless Integration: Screenshots work with all AI providers (OpenAI, Gemini, Claude, Grok, and your custom providers)

- Privacy First: Screenshots are processed locally and only sent to your chosen AI provider
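
The difference between the two modes can be sketched as follows. This is an assumption about the behavior described in the list, not Pluely's implementation: `ScreenshotQueue`, `capture`, and `submit` are hypothetical names, and images are represented as base64 strings.

```typescript
// Hypothetical model of the two screenshot modes; not Pluely's actual code.
type ScreenshotMode = "manual" | "auto";

interface Submission {
  images: string[]; // base64-encoded screenshots
  prompt: string;
}

class ScreenshotQueue {
  private mode: ScreenshotMode;
  private autoPrompt: string;
  private pending: string[] = [];

  constructor(mode: ScreenshotMode, autoPrompt: string) {
    this.mode = mode;
    this.autoPrompt = autoPrompt;
  }

  // Called once per capture. In auto mode the image is submitted right away
  // with the saved prompt; in manual mode it is queued and null is returned.
  capture(imageBase64: string): Submission | null {
    if (this.mode === "auto") {
      return { images: [imageBase64], prompt: this.autoPrompt };
    }
    this.pending.push(imageBase64);
    return null;
  }

  // Manual mode: send every queued image together with the user's prompt.
  submit(prompt: string): Submission {
    const submission: Submission = { images: this.pending, prompt };
    this.pending = [];
    return submission;
  }
}
```

In manual mode you might call `capture` several times and then `submit("Solve this step by step")`; in auto mode every `capture` returns a ready-to-send submission.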

Control whether the Pluely window stays above all other applications for instant access:

Watch how the Pluely window stays perfectly visible above all other applications

- Enabled: Window always appears on top of other applications

- Disabled: Window behaves like normal applications

- Settings Control: Toggle in Settings → Always On Top Mode

- Independent Control: Works separately from stealth features

- Perfect for: Quick access during meetings, presentations, or when you need instant AI assistance

Complete stealth mode control integrated with main toggle:

See how to toggle the app icon in your dock/taskbar while the app stays fully functional

- Show Mode (Default): App icon remains visible in dock/taskbar when window is hidden

- Hide Mode: App icon completely disappears from dock/taskbar when window is hidden (app keeps running in background)

- Settings Control: Configure dock/taskbar icon visibility in Settings → App Icon Visibility

- Auto-Integration: Works automatically with main toggle shortcut (`Cmd+\` / `Ctrl+\`) based on your settings

- Cross-Platform: Works seamlessly on macOS (ActivationPolicy::Accessory), Windows (skip_taskbar), and Linux (skip_taskbar)

Complete control over title tooltips across the entire application:

See how to toggle all element title tooltips on/off globally with instant effect

- Show Mode (Default): All button and interactive element tooltips are visible on hover

- Hide Mode: All title tooltips are completely hidden while elements remain fully functional

- Settings Control: Toggle in Settings → Element Titles to enable/disable globally

- Instant Effect: Changes apply immediately across all 50+ interactive elements

- Accessibility Aware: Perfect for users who prefer clean interfaces without tooltip clutter

- Preserves Functionality: Elements remain clickable and accessible, only tooltips are controlled

Complete conversation management stored locally on your device:

- Local Storage - All conversations saved to your device with zero data transmission

- Instant Access - History loads immediately with real-time updates

- Message History - See complete message threads with user/AI distinction

- Reuse Chats - Continue any conversation from where you left off with one click

- Download Markdown - Export conversations as formatted markdown files with timestamps

- Delete Chats - Remove individual conversations or delete all from Settings

- Quick Navigation - Browse all your conversations with auto-generated titles
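
As an illustration of the Markdown export, a conversation could be serialized roughly like this. The `ChatMessage` shape, the heading layout, and `conversationToMarkdown` are assumptions made for this sketch; the file Pluely actually produces may look different.

```typescript
// Hypothetical message shape; Pluely's real types may differ.
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
  timestamp: number; // milliseconds since the Unix epoch
}

// Render a conversation as Markdown: one heading per message, labeled with
// the speaker and an ISO 8601 timestamp.
function conversationToMarkdown(title: string, messages: ChatMessage[]): string {
  const lines: string[] = [`# ${title}`, ""];
  for (const m of messages) {
    const speaker = m.role === "user" ? "User" : "AI";
    const time = new Date(m.timestamp).toISOString();
    lines.push(`## ${speaker} (${time})`, "", m.content, "");
  }
  return lines.join("\n");
}
```

The resulting string can be saved as a `.md` file and keeps the user/AI distinction shown in the history view.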

Get Pluely API if you don't want to maintain your own providers

Unlock premium features, faster responses, advanced speech to text, and priority support with Pluely License:

Pluely License activation unlocks faster AI responses, premium features, and dedicated support

- Get Unlimited AI: Unlimited AI interactions and responses

- Faster AI Responses: Optimized processing with dedicated infrastructure

- Premium Features: Access to advanced AI capabilities and upcoming features

- Priority Support: Direct support for any issues or questions

- Exclusive Benefits: Early access to new features and improvements

- Purchase License: Click "Get License Key" to visit our secure checkout page

- Receive Key: After payment, you'll receive your license key via email

- Activate: Paste your license key in Settings → Pluely Access section

- Enjoy: Start experiencing faster responses and premium features immediately

Switch between Pluely API and your custom providers anytime in Settings → Pluely Access. Whether you want the convenience of our optimized service or prefer to maintain your own AI provider setup, Pluely gives you complete flexibility.

Pluely now supports curl requests when adding custom providers, giving you ultimate flexibility to integrate any AI provider. This means you can connect to any LLM service using simple curl commands, opening up endless possibilities for your AI providers.

Unlock Any LLM Provider - Pluely supports any LLM provider with full streaming and non-streaming capabilities. Configure custom endpoints, authentication, and response parsing for complete flexibility.
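
The practical difference between streaming and non-streaming responses shows up in how the reply text is read. The sketch below assumes an OpenAI-style API, where streamed replies arrive as server-sent events (`data: {json}` lines ending with `data: [DONE]`) and non-streamed replies as a single JSON body. Other providers use other formats, and `extractStreamText` and `extractFullText` are hypothetical helper names.

```typescript
// Assumption: OpenAI-style server-sent events, i.e. lines of the form
// `data: {json}` terminated by `data: [DONE]`. Other providers differ.
function extractStreamText(rawChunk: string): string {
  let text = "";
  for (const line of rawChunk.split("\n")) {
    const trimmed = line.trim();
    if (trimmed.indexOf("data:") !== 0) continue; // skip blank and comment lines
    const payload = trimmed.slice("data:".length).trim();
    if (payload === "[DONE]") break;
    try {
      const event = JSON.parse(payload);
      const delta = event?.choices?.[0]?.delta?.content;
      if (typeof delta === "string") text += delta;
    } catch {
      // Ignore partial JSON; a real client would buffer it until the next chunk.
    }
  }
  return text;
}

// Non-streaming responses carry the whole message in one JSON body instead.
function extractFullText(body: string): string {
  const parsed = JSON.parse(body);
  const content = parsed?.choices?.[0]?.message?.content;
  return typeof content === "string" ? content : "";
}
```

With streaming on, each chunk's text can be appended to the UI as it arrives; with streaming off, the full text is available only once the request completes.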

See how easy it is to configure any AI provider with Pluely's custom provider setup

Pluely supports these dynamic variables that are automatically replaced:

| Variable | Purpose |
|---|---|
| `{{TEXT}}` | User's text input |
| `{{IMAGE}}` | Base64 image data |
| `{{SYSTEM_PROMPT}}` | System instructions |
| `{{MODEL}}` | AI model name |
| `{{API_KEY}}` | API authentication key |

- Streaming Support: Toggle streaming on/off for real-time responses

- Response Path: Configure where to extract content from API responses (e.g., `choices[0].message.content`)

- Authentication: Support for Bearer tokens, API keys, and custom headers

- Any Endpoint: Works with any REST API that accepts JSON requests
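
A response path such as `choices[0].message.content` is just a route through the parsed JSON. One way to resolve it is sketched below; `getByPath` is a hypothetical helper, and it assumes paths contain only dot-separated keys and numeric `[index]` segments.

```typescript
// Resolve a path such as "choices[0].message.content" against parsed JSON.
// Assumption: paths use only dot-separated keys and numeric [index] segments.
function getByPath(data: unknown, path: string): unknown {
  // "choices[0].message.content" -> ["choices", "0", "message", "content"]
  const keys = path
    .replace(/\[(\d+)\]/g, ".$1")
    .split(".")
    .filter((k) => k.length > 0);
  let current: unknown = data;
  for (const key of keys) {
    // Stop early if the path runs past the data that is actually there.
    if (current === null || typeof current !== "object") return undefined;
    current = (current as Record<string, unknown>)[key];
  }
  return current;
}
```

A path that does not exist in the response resolves to `undefined` instead of throwing, which makes a misconfigured provider easier to diagnose.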

Add your own variables using the {{VARIABLE_NAME}} format directly in your curl command. They'll appear as configurable fields when you select the provider.
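
Placeholder replacement of this kind can be pictured as a plain string substitution over the curl command. The sketch below is not Pluely's parser: `fillTemplate` and `listVariables` are hypothetical helpers, and it assumes variable names consist of uppercase letters, digits, and underscores.

```typescript
// Replace {{NAME}} placeholders in a curl template. Unknown names are left
// untouched so they can be surfaced to the user as missing fields.
// Note: values placed inside a JSON body would also need JSON escaping.
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{([A-Z0-9_]+)\}\}/g, (match: string, name: string) => {
    const value = values[name];
    return value !== undefined ? value : match;
  });
}

// List the distinct variable names a template uses, in order of appearance.
// These are the names that would show up as configurable fields.
function listVariables(template: string): string[] {
  const names: string[] = [];
  const pattern = /\{\{([A-Z0-9_]+)\}\}/g;
  let m: RegExpExecArray | null;
  while ((m = pattern.exec(template)) !== null) {
    const name = m[1];
    if (name !== undefined && names.indexOf(name) < 0) names.push(name);
  }
  return names;
}
```

For a header such as `Authorization: Bearer {{API_KEY}}`, `listVariables` reports `API_KEY` and `fillTemplate` swaps in the stored key just before the request is sent.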

Get started with your preferred AI provider in seconds. Pluely supports all major LLM providers, custom providers, and seamless integration:

| OpenAI Setup | Grok Setup |
|---|---|
| Add your OpenAI API key and enter model name | Add your xAI Grok API key and enter your model |

| Google Gemini Setup | Anthropic Claude Setup |
|---|---|
| Add your Google Gemini API key and enter your model | Add your Anthropic Claude API key and enter your model |

Additional Providers:

- Mistral AI: Add your Mistral API key and select from available models

- Cohere: Add your Cohere API key and enter your model name

- Perplexity: Add your Perplexity API key and select from available models

- Groq: Add your Groq API key and select from available models

- Ollama: Configure your local Ollama instance and select models

Pluely now supports advanced curl-based integration for speech-to-text providers, giving you ultimate flexibility to integrate any STT service. This means you can connect to any speech API using simple curl commands, opening up endless possibilities for your voice workflows.

Get started with your preferred speech-to-text provider in seconds. Pluely supports all major STT providers, custom providers, and seamless voice integration:

| OpenAI Whisper Setup | ElevenLabs STT Setup |
|---|---|
| Add your OpenAI API key and select Whisper model | Add your ElevenLabs API key and select model |

Additional STT Providers:

- Groq Whisper: Add your Groq API key and select Whisper model

- Google Speech-to-Text: Add your Google API key for speech recognition

- Deepgram STT: Add your Deepgram API key and select model

- Azure Speech-to-Text: Add your Azure subscription key and configure region

- Speechmatics: Add your Speechmatics API key for transcription

- Rev.ai STT: Add your Rev.ai API key for speech-to-text

- IBM Watson STT: Add your IBM Watson API key and configure service

Each STT provider comes pre-configured with optimal settings:

- 🎯 Real-time Processing: Instant speech recognition with voice activity detection

- 🌍 Multi-Language Support: Choose providers optimized for specific languages

- ⚡ Fast & Accurate: Industry-leading transcription accuracy and speed

- 🔒 Secure Authentication: API keys stored locally and securely

- 🎨 Seamless Integration: Works with Pluely's voice input features

- 🔧 Custom Variables: Support for dynamic variables in custom STT providers

- 🌐 Any STT Provider: Full compatibility with any speech recognition API endpoint

For STT providers not in our list, use the custom STT provider option with full control:

- 🌐 Any Speech Recognition API: Add any STT provider with REST API support

- 🔐 Flexible Authentication: Bearer tokens, API keys, custom headers, or direct key embedding

- 📡 Response Path Mapping: Configure where to extract transcription text from any API response structure

- 🔧 Dynamic Variables: Create custom variables using `{{VARIABLE_NAME}}` format in curl commands

- 📝 Request Customization: Full control over headers, body structure, and parameters

- ⚙️ Audio Format Support: Support for various audio formats and sample rates

- 🔄 Real-Time Testing: Test your custom STT provider setup instantly

🎯 The Ultimate Stealth AI Assistant - Invisible to Everyone

Pluely is engineered to be completely invisible during your most sensitive moments:

- 🔍 Undetectable in video calls - Works seamlessly in Zoom, Google Meet, Microsoft Teams, Slack Huddles, and all other meeting platforms

- 📺 Invisible in screen shares - Your audience will never know you're using AI assistance

- 📸 Screenshot-proof design - Extremely difficult to capture in screenshots due to translucent overlay

- 🏢 Meeting room safe - Won't appear on projectors or shared screens

- 🎥 Recording invisible - Doesn't show up in meeting recordings or live streams

- ⚡ Instant hide/show - Overlaps with any window on your PC/Desktop for quick access

Perfect for confidential scenarios where discretion is absolutely critical.

Built with Tauri, Pluely delivers native desktop performance with minimal resource usage:

- Instant startup - launches in milliseconds

- 10x smaller than Electron apps (~10MB vs ~100MB)

- Native performance - no browser overhead

- Memory efficient - uses 50% less RAM than web-based alternatives

- Cross-platform - runs natively on macOS, Windows, and Linux

Unlike cloud-based solutions, Pluely keeps everything local:

- Your data never touches any servers - all processing happens locally and with your LLM provider

- API keys stored securely in your browser's localStorage

- No telemetry or tracking - your conversations stay private, not stored anywhere

- Offline-first design - works without internet (except for AI API calls)

Pluely sits quietly on your desktop, ready to assist instantly with zero setup time:

Completely undetectable in all these critical situations:

- Job interviews - Get real-time answers without anyone knowing you're using AI

- Sales calls - Access product information instantly while maintaining professionalism

- Technical meetings - Quick reference to documentation without breaking flow

- Educational presentations - Learning assistance that's invisible to your audience

- Client consultations - Professional knowledge at your fingertips, completely discreet

- Live coding sessions - Get syntax help, debug errors, explain algorithms without detection

- Design reviews - Analyze screenshots, get UI/UX suggestions invisibly

- Writing assistance - Grammar check, tone adjustment, content optimization in stealth mode

- Board meetings - Access information without anyone noticing

- Negotiations - Real-time strategy assistance that remains completely hidden

Important: Before installing the app, ensure all required system dependencies are installed for your platform:

👉 Tauri Prerequisites & Dependencies

This includes essential packages like WebKitGTK (Linux), system libraries, and other dependencies required for Tauri applications to run properly on your operating system.

- Node.js (v18 or higher)

- Rust (latest stable)

- npm or yarn

# Clone the repository

git clone https://github.com/iamsrikanthnani/pluely.git

cd pluely

# Install dependencies

npm install

# Start development server

npm run tauri dev

# Build the application

npm run tauri build

This creates platform-specific installers in src-tauri/target/release/bundle/:

- macOS: .dmg

- Windows: .msi, .exe

- Linux: .deb, .rpm, .AppImage

- API keys are stored in browser localStorage (not sent to any server)

- No telemetry - your keys never leave your device

- Session-based - keys can be cleared anytime

- Direct HTTPS connections to AI providers

- No proxy servers - your requests go straight to the AI service

- Request signing handled locally

- TLS encryption for all API communications

- No remote conversation logging - chat history is kept only on your device

- Temporary session data - cleared on app restart

- Local file processing - images processed in-browser

- No analytics - completely private usage
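
The key handling described in this list maps onto the standard Web Storage API. The sketch below is an assumption about how it could look, not Pluely's code: the `api_key:` prefix and the helper names are hypothetical, and an in-memory store stands in for `window.localStorage` so the example also runs outside a webview.

```typescript
// Minimal subset of the Web Storage API, so the sketch also runs outside a browser.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// In-memory stand-in; inside the app's webview you would pass window.localStorage.
class MemoryStore implements KeyValueStore {
  private data = new Map<string, string>();

  getItem(key: string): string | null {
    const value = this.data.get(key);
    return value === undefined ? null : value;
  }

  setItem(key: string, value: string): void {
    this.data.set(key, value);
  }

  removeItem(key: string): void {
    this.data.delete(key);
  }
}

const KEY_PREFIX = "api_key:"; // hypothetical key naming scheme

function saveApiKey(store: KeyValueStore, provider: string, apiKey: string): void {
  store.setItem(KEY_PREFIX + provider, apiKey);
}

function loadApiKey(store: KeyValueStore, provider: string): string | null {
  return store.getItem(KEY_PREFIX + provider);
}

// Clearing a key removes it from the device; nothing was stored elsewhere.
function clearApiKey(store: KeyValueStore, provider: string): void {
  store.removeItem(KEY_PREFIX + provider);
}
```

Because the key lives only in local storage, it is read at request time and sent directly to the chosen provider over HTTPS.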

Pluely makes API calls directly from your frontend because:

- 🔒 Maximum Privacy: Your conversations never touch our servers

- 🚀 Better Performance: Direct connection = faster responses

- 📱 Always Local: Your data stays on your device, always

- 🔍 Transparent: You can inspect every network request in dev tools

- ⚡ No Bottlenecks: No server capacity limits or downtime

- Native binary - uses the system WebView instead of bundling a browser runtime

- Rust backend - memory-safe, ultra-fast

- Small bundle size - ~10MB total app size

- Instant startup - launches in <100ms

- Low memory usage - typically <50MB RAM

- React 18 with concurrent features

- TypeScript for compile-time type checking

- Vite for lightning-fast development

- Tree shaking - only bundle used code

- Lazy loading - components load on demand

src/

├── components/ # Reusable UI components

│ ├── completion/ # AI completion interface

│ │ ├── Audio.tsx # Audio recording component

│ │ ├── AutoSpeechVad.tsx # Voice activity detection

│ │ ├── Files.tsx # File handling component

│ │ ├── index.tsx # Main completion component

│ │ └── Input.tsx # Text input component

│ ├── Header/ # Application header

│ ├── history/ # Chat history components

│ │ ├── ChatHistory.tsx # Chat history management

│ │ ├── index.tsx # History exports

│ │ └── MessageHistory.tsx # Message history display

│ ├── Markdown/ # Markdown rendering

│ ├── Selection/ # Text selection handling

│ ├── settings/ # Configuration components

│ │ ├── ai-configs/ # AI provider configurations

│ │ │ ├── CreateEditProvider.tsx # Custom AI provider setup

│ │ │ ├── CustomProvider.tsx # Custom provider component

│ │ │ ├── index.tsx # AI configs exports

│ │ │ └── Providers.tsx # AI providers list

│ │ ├── DeleteChats.tsx # Chat deletion functionality

│ │ ├── Disclaimer.tsx # Legal disclaimers

│ │ ├── index.tsx # Settings panel

│ │ ├── ScreenshotConfigs.tsx # Screenshot configuration

│ │ ├── stt-configs/ # Speech-to-text configurations

│ │ │ ├── CreateEditProvider.tsx # Custom STT provider setup

│ │ │ ├── CustomProvider.tsx # Custom STT provider

│ │ │ ├── index.tsx # STT configs exports

│ │ │ └── Providers.tsx # STT providers list

│ │ └── SystemPrompt.tsx # Custom system prompt editor

│ ├── TextInput/ # Text input components

│ ├── ui/ # shadcn/ui component library

│ └── updater/ # Application update handling

├── config/ # Application configuration

│ ├── ai-providers.constants.ts # AI provider configurations

│ ├── constants.ts # General constants

│ ├── index.ts # Config exports

│ └── stt.constants.ts # Speech-to-text provider configs

├── contexts/ # React contexts

│ ├── app.context.tsx # Main application context

│ ├── index.ts # Context exports

│ └── theme.context.tsx # Theme management context

├── hooks/ # Custom React hooks

│ ├── index.ts # Hook exports

│ ├── useCompletion.ts # AI completion hook

│ ├── useCustomProvider.ts # Custom provider hook

│ ├── useCustomSttProviders.ts # Custom STT providers hook

│ ├── useSettings.ts # Settings management hook

│ ├── useVersion.ts # Version management hook

│ └── useWindow.ts # Window management hook

├── lib/ # Core utilities

│ ├── chat-history.ts # Chat history management

│ ├── functions/ # Core functionality modules

│ │ ├── ai-models.function.ts # AI model handling

│ │ ├── ai-response.function.ts # AI response processing

│ │ ├── common.function.ts # Common utilities

│ │ ├── index.ts # Function exports

│ │ └── stt.function.ts # Speech-to-text functions

│ ├── storage/ # Local storage management

│ │ ├── ai-providers.ts # AI provider storage

│ │ ├── helper.ts # Storage helpers

│ │ ├── index.ts # Storage exports

│ │ └── stt-providers.ts # STT provider storage

│ ├── index.ts # Lib exports

│ ├── utils.ts # Utility functions

│ └── version.ts # Version utilities

├── types/ # TypeScript type definitions

│ ├── ai-provider.type.ts # AI provider types

│ ├── completion.hook.ts # Completion hook types

│ ├── completion.ts # Completion types

│ ├── context.type.ts # Context types

│ ├── index.ts # Type exports

│ ├── settings.hook.ts # Settings hook types

│ ├── settings.ts # Settings types

│ └── stt.types.ts # Speech-to-text types

├── App.tsx # Main application component

├── main.tsx # Application entry point

├── global.css # Global styles

└── vite-env.d.ts # Vite environment types

src-tauri/

├── src/

│ ├── main.rs # Application entry point

│ ├── lib.rs # Core Tauri setup and IPC handlers

│ └── window.rs # Window management & positioning

├── build.rs # Build script for additional resources

├── Cargo.toml # Rust dependencies and build configuration

├── tauri.conf.json # Tauri configuration (windows, bundles, etc.)

├── Cargo.lock # Dependency lock file

├── capabilities/ # Permission configurations

│ └── default.json # Default capabilities

├── gen/ # Generated files

├── icons/ # Application icons (PNG, ICNS, ICO)

├── info.plist # macOS application info

├── pluely.desktop # Linux desktop file

└── target/ # Build output directory

# Start Tauri development

npm run tauri dev

# Build for production

npm run build

npm run tauri build

# Type checking

npm run type-check

# Linting

npm run lint

We welcome contributions! Here's how to get started:

🚀 Only fixes and feature PRs are welcome.

❌ Please do NOT submit pull requests that:

- Add new AI providers

- Add new speech-to-text providers

✅ What we DO welcome:

- Bug fixes and performance improvements

- Feature improvements to existing functionality

💡 Like this project? Consider buying me a coffee ☕ or hiring me for your next project!

# Fork and clone the repository

git clone https://github.com/iamsrikanthnani/pluely.git

cd pluely

# Install dependencies

npm install

# Start development

npm run tauri dev

- Fork the repository

- Create a feature branch (`git checkout -b feature/amazing-feature`)

- Commit your changes (`git commit -m 'Add amazing feature'`)

- Push to the branch (`git push origin feature/amazing-feature`)

- Open a Pull Request

- TypeScript for type safety

- ESLint + Prettier for formatting

- Conventional Commits for commit messages

- Component documentation with JSDoc

This project is licensed under the MIT License - see the LICENSE file for details.

- Cluely - Inspiration for this open source alternative

- Tauri - Amazing desktop framework

- shadcn/ui - Beautiful UI components

- @ricky0123/vad-react - Voice Activity Detection

- OpenAI - GPT models and Whisper API

- Anthropic - Claude AI models

- xAI - Grok AI models

- Google - Gemini AI models

- Website: pluely.com (Pluely website)

- 🎵 Audio Setup Guide: SYSTEM_AUDIO_SETUP.md - Complete cross-platform audio configuration

- Website: cluely.com (Original Cluely)

- Documentation: GitHub Wiki

- Issues: GitHub Issues

- Discussions: GitHub Discussions

Made with ❤️ by Srikanth Nani

Experience the power of Cluely, but with complete transparency and control over your data.


Alternative AI tools for pluely

Similar Open Source Tools


ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

tandem

Tandem is a local-first, privacy-focused AI workspace that runs entirely on your machine. It is inspired by early AI coworking research previews, open source, and provider-agnostic. Tandem offers privacy-first operation, provider agnosticism, zero trust model, true cross-platform support, open-source licensing, modern stack, and developer superpowers for everyone. It provides folder-wide intelligence, multi-step automation, visual change review, complete undo, zero telemetry, provider freedom, secure design, cross-platform support, visual permissions, full undo, long-term memory, skills system, document text extraction, workspace Python venv, rich themes, execution planning, auto-updates, multiple specialized agent modes, multi-agent orchestration, project management, and various artifacts and outputs.

GitVizz

GitVizz is an AI-powered repository analysis tool that helps developers understand and navigate codebases quickly. It transforms complex code structures into interactive documentation, dependency graphs, and intelligent conversations. With features like interactive dependency graphs, AI-powered code conversations, advanced code visualization, and automatic documentation generation, GitVizz offers instant understanding and insights for any repository. The tool is built with modern technologies like Next.js, FastAPI, and OpenAI, making it scalable and efficient for analyzing large codebases. GitVizz also provides a standalone Python library for core code analysis and dependency graph generation, offering multi-language parsing, AST analysis, dependency graphs, visualizations, and extensibility for custom applications.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

Lynkr

Lynkr is a self-hosted proxy server that unlocks various AI coding tools like Claude Code CLI, Cursor IDE, and Codex Cli. It supports multiple LLM providers such as Databricks, AWS Bedrock, OpenRouter, Ollama, llama.cpp, Azure OpenAI, Azure Anthropic, OpenAI, and LM Studio. Lynkr offers cost reduction, local/private execution, remote or local connectivity, zero code changes, and enterprise-ready features. It is perfect for developers needing provider flexibility, cost control, self-hosted AI with observability, local model execution, and cost reduction strategies.

J.A.R.V.I.S.2.0

J.A.R.V.I.S. 2.0 is an AI-powered assistant designed for voice commands, capable of tasks like providing weather reports, summarizing news, sending emails, and more. It features voice activation, speech recognition, AI responses, and handles multiple tasks including email sending, weather reports, news reading, image generation, database functions, phone call automation, AI-based task execution, website & application automation, and knowledge-based interactions. The assistant also includes timeout handling, automatic input processing, and the ability to call multiple functions simultaneously. It requires Python 3.9 or later and specific API keys for weather, news, email, and AI access. The tool integrates Gemini AI for function execution and Ollama as a fallback mechanism. It utilizes a RAG-based knowledge system and ADB integration for phone automation. Future enhancements include deeper mobile integration, advanced AI-driven automation, improved NLP-based command execution, and multi-modal interactions.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

retrace

Retrace is a local-first screen recording and search application for macOS, inspired by Rewind AI. It captures screen activity, extracts text via OCR, and makes everything searchable locally on-device. The project is in very early development and offers continuous screen capture, OCR text extraction, full-text search, a timeline viewer, dashboard analytics, Rewind AI import, a settings panel, global hotkeys, HEVC video encoding, search highlighting, and privacy controls. Built with a modular architecture, Retrace uses Swift 5.9+, SwiftUI, the Vision framework, SQLite with FTS5, and CryptoKit for encryption. Future releases will add features like audio transcription and semantic search. Retrace requires macOS 13.0+ on Apple Silicon, plus Xcode 15.0+ for building from source, and needs screen recording and accessibility permissions. Contributions are welcome, and the project is licensed under the MIT License.
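To make the "SQLite with FTS5" part concrete, here is a minimal sketch of indexing OCR text and searching it with highlighted matches. It is written in Python for illustration only (Retrace itself is a Swift project), the table and column names are hypothetical, and it assumes the local SQLite build includes the FTS5 extension.

```python
import sqlite3


def build_index(rows):
    """Create an in-memory FTS5 table and load (captured_at, app, ocr_text) rows."""
    conn = sqlite3.connect(":memory:")
    # captured_at is stored but not tokenized; app and ocr_text are searchable.
    conn.execute(
        "CREATE VIRTUAL TABLE frames USING fts5(captured_at UNINDEXED, app, ocr_text)"
    )
    conn.executemany(
        "INSERT INTO frames (captured_at, app, ocr_text) VALUES (?, ?, ?)", rows
    )
    return conn


def search(conn, query, limit=10):
    """Return matches ordered by relevance, with hits in ocr_text wrapped in [ ]."""
    sql = (
        "SELECT captured_at, app, highlight(frames, 2, '[', ']') "
        "FROM frames WHERE frames MATCH ? ORDER BY rank LIMIT ?"
    )
    return conn.execute(sql, (query, limit)).fetchall()


# Hypothetical sample data standing in for OCR output from captured frames.
SAMPLE_ROWS = [
    ("2024-01-01T10:00:00", "Safari", "Invoice 1042 due Friday"),
    ("2024-01-01T10:05:00", "Terminal", "cargo build finished"),
]
```

Calling `search(build_index(SAMPLE_ROWS), "invoice")` returns only the Safari row, because the default FTS5 tokenizer matches case-insensitively. A real application would also need to escape or validate user input before passing it to `MATCH`, since FTS5 has its own query syntax.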

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.
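The idea of one structured prompt exported to several formats can be sketched as follows. This is an assumption-laden illustration, not the tool's actual schema: the `StructuredPrompt` class and its field names are hypothetical, chosen to mirror a subset of the fields listed above, and only the Markdown and JSON exports are shown.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class StructuredPrompt:
    """Hypothetical container for a subset of the structured fields."""
    role: str
    task: str
    audience: str = ""
    tone: str = ""
    constraints: List[str] = field(default_factory=list)
    steps: List[str] = field(default_factory=list)

    def to_json(self):
        """Serialize every field, including empty ones, as JSON."""
        return json.dumps(asdict(self), indent=2)

    def to_markdown(self):
        """Render non-empty fields as Markdown sections, lists as bullets."""
        lines = []
        for name, value in asdict(self).items():
            if not value:
                continue  # skip fields the user left blank
            lines.append(f"## {name.capitalize()}")
            if isinstance(value, list):
                lines.extend(f"- {item}" for item in value)
            else:
                lines.append(value)
            lines.append("")
        return "\n".join(lines).rstrip() + "\n"
```

For example, `StructuredPrompt(role="Reviewer", task="Summarize the diff", constraints=["Under 100 words"]).to_markdown()` yields Role, Task, and Constraints sections and omits the empty fields. Keeping a single data structure as the source of truth means each export format stays consistent with the others.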

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

AgriTech

AgriTech is an AI-powered smart agriculture platform that assists farmers with crop recommendations, yield prediction, plant disease detection, and community-driven collaboration, enabling sustainable, data-driven farming practices. It offers AI-driven decision support, early-stage plant disease detection, crop yield forecasting using machine learning models, and a collaborative ecosystem for farmers and stakeholders, including a farmer community and shopkeeper listings. An AI chatbot provides platform guidance, agriculture support, help with decision making, image analysis, and 24/7 availability. The tech stack includes HTML5, CSS3, and JavaScript on the frontend; Python (Flask) with optional Node.js on the backend; TensorFlow, Scikit-learn, and OpenCV for machine learning; and MySQL, MongoDB, Firebase, Docker, and GitHub Actions for databases and DevOps.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

timeline-studio

Timeline Studio is a next-generation professional video editor with AI integration that automates content creation for social media. It combines the power of desktop applications with the convenience of web interfaces. With 257 AI tools, GPU acceleration, plugin system, multi-language interface, and local processing, Timeline Studio offers complete video production automation. Users can create videos for various social media platforms like TikTok, YouTube, Vimeo, Telegram, and Instagram with optimized versions. The tool saves time, understands trends, provides professional quality, and allows for easy feature extension through plugins. Timeline Studio is open source, transparent, and offers significant time savings and quality improvements for video editing tasks.

For similar tasks

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

OpenAGI

OpenAGI is an AI agent creation package designed for researchers and developers to create intelligent agents using advanced machine learning techniques. The package provides tools and resources for building and training AI models, enabling users to develop sophisticated AI applications. With a focus on collaboration and community engagement, OpenAGI aims to facilitate the integration of AI technologies into various domains, fostering innovation and knowledge sharing among experts and enthusiasts.

sirji

Sirji is an agentic AI framework for software development where various AI agents collaborate via a messaging protocol to solve software problems. It uses standard or user-generated recipes to list tasks and tips for problem-solving. Agents in Sirji are modular AI components that perform specific tasks based on custom pseudo code. The framework is currently implemented as a Visual Studio Code extension, providing an interactive chat interface for problem submission and feedback. Sirji sets up local or remote development environments by installing dependencies and executing generated code.

dewhale

Dewhale is a GitHub-powered AI tool designed for effortless development. It applies prompt engineering techniques with the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

Awesome-CVPR2024-ECCV2024-AIGC

A collection of papers and code on AIGC (AI-generated content) from CVPR 2024 and ECCV 2024. This repository compiles and organizes research papers and their accompanying code, serving as a resource for anyone interested in the latest advances in computer vision and generative AI. Users can find a curated list of papers and code repositories for further exploration and research. The repository encourages collaboration and contributions from the community through stars, forks, and pull requests.

ZetaForge

ZetaForge is an open-source AI platform designed for rapid development of advanced AI and AGI pipelines. It allows users to assemble reusable, customizable, and containerized Blocks into highly visual AI Pipelines, enabling rapid experimentation and collaboration. With ZetaForge, users can work with AI technologies in any programming language, easily modify and update AI pipelines, dive into the code whenever needed, utilize community-driven blocks and pipelines, and share their own creations. The platform aims to accelerate the development and deployment of advanced AI solutions through its user-friendly interface and community support.

NeoHaskell

NeoHaskell is a newcomer-friendly and productive dialect of Haskell. It aims to be easy to learn and use, while also powerful enough for app development with minimal effort and maximum confidence. The project prioritizes design and documentation before implementation, with ongoing work on design documents for community sharing.

For similar jobs

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automation required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions that would break whenever the website layout changed. Instead of relying only on code-defined XPath interactions, Skyvern adds computer vision and LLMs to parse items in the viewport in real time, create a plan for interaction, and interact with them. This approach has a few advantages: (1) Skyvern can operate on websites it has never seen before, because it maps visual elements to the actions needed to complete a workflow without any customized code; (2) Skyvern is resistant to website layout changes, because the system does not look for predetermined XPaths or other selectors while navigating; (3) Skyvern uses LLMs to reason through interactions so that it can cover complex situations. Two examples of such reasoning: when getting an auto insurance quote from Geico, the answer to the common question “Were you eligible to drive at 18?” can be inferred from the driver receiving their license at age 16; and in competitor analysis, Skyvern can understand that an Arnold Palmer 22 oz can at 7/11 is almost certainly the same product as a 23 oz can at Gopuff, even though the sizes differ slightly (which could be a rounding error).

pandas-ai

PandasAI is a Python library that makes it easy to ask questions of your data in natural language. It helps you explore, clean, and analyze your data using generative AI.

vanna

Vanna is an open-source Python framework for SQL generation and related functionality. It uses Retrieval-Augmented Generation (RAG) to train a model on your data, which can then be used to ask questions and get back SQL queries. Vanna is designed to be portable across different LLMs and vector databases, and it supports any SQL database. It is also secure and private, as your database contents are never sent to the LLM or the vector database.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large language models. With Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

marvin

Marvin is a lightweight AI toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. Each of Marvin's tools is simple and self-documenting, using AI to solve common but complex challenges like entity extraction, classification, and generating synthetic data. Each tool is independent and incrementally adoptable, so you can use them on their own or in combination with any other library. Marvin is also multi-modal, supporting both image and audio generation as well as using images as inputs for extraction and classification. Marvin is for developers who care more about _using_ AI than _building_ AI, and we are focused on creating an exceptional developer experience. Marvin users should feel empowered to bring tightly-scoped "AI magic" into any traditional software project with just a few extra lines of code. Marvin aims to merge the best practices for building dependable, observable software with the best practices for building with generative AI into a single, easy-to-use library. It's a serious tool, but we hope you have fun with it. Marvin is open-source, free to use, and made with 💙 by the team at Prefect.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in TypeScript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and pieces are versioned and published directly to npmjs.com upon contribution. If you cannot find a specific piece on the pieces roadmap, you can submit a request through the project's Request Piece link. Alternatively, if you are a developer, you can quickly build your own piece using the TypeScript framework; for guidance, refer to the Contributor's Guide.