structured-prompt-builder

A lightweight, browser‑first tool for designing well‑structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini‑powered prompt optimization.

Stars: 105

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

README:

A lightweight, browser‑first tool for designing well‑structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini‑powered prompt optimization.

Support development

If this tool saves you time, consider sponsoring to help keep it free and add features like template sharing and cloud sync.

👉 Become a sponsor

-

Structured fields: Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs (

name: value), Few‑shot examples - Live preview in Markdown, JSON, and YAML

- Copy / Download each format in one click; Import from JSON

- Model parameters: Temperature, Top‑p, Max tokens, Presence & Frequency penalties

-

Local Prompt Library (save, load, duplicate, delete) using

localStorage - Gemini Optimizer (optional): cleans grammar/clarity without changing your schema

- Built‑in dark/light friendly styles; focused reading mode for long prompts

- React 18 (UMD) for UI

- Tailwind‑style utility classes (or your own CSS)

- Babel Standalone (optional) for in‑browser JSX

- Vercel for zero‑config deploys

-

Clone the repo

git clone https://github.com/Siddhesh2377/structured-prompt-builder.git cd structured-prompt-builder -

Open

index.htmldirectly in the browser or serve the folder:npx serve . -

Start editing

src/app.jsx(or the inlined script). Refresh to see changes.

- Fill out the Prompt Composition fields (Role, Task, etc.).

- Use Sections to add Constraints, Steps, Inputs, and Examples.

- Switch Preview tabs (MD/JSON/YAML). Click Copy or Download.

- Save to the local Prompt Library; later Load, Duplicate, or Delete.

- To Import, choose a JSON file that matches the app’s schema.

- Paste your Gemini API key (stored locally under

gemini_api_key). - Choose a model (e.g.,

gemini-1.5-flash) and an Output format (MD/JSON/YAML). - Click Generate with Gemini.

What it does:

- Fixes spelling/grammar and clarifies wording.

- Preserves the original structure/keys and array order/length.

- Returns only the selected format—no commentary or code fences.

Tip: For the strictest structure preservation, choose JSON as the output format.

structured-prompt-builder/

├── public/

│ ├── favicon.ico

│ ├── image.png

│ ├── robots.txt

│ ├── sitemap.xml

│ └── site.webmanifest

├── src/

│ ├── app.jsx # Main application logic (builder + library + Gemini)

│ ├── components/

│ │ └── Section.jsx # Reusable list editor

│ └── utils/

│ └── jsonToYaml.js # JSON → YAML helper (small, dependency‑free)

├── index.html # Entry point (loads React UMD, app script, styles)

└── vercel.json # Static deploy config

vercelVercel will detect a static site and deploy automatically.

-

No server: All data (library items, API key) lives in your browser (

localStorage). - Keys are never sent anywhere except directly to Google’s Gemini endpoint when you click Generate.

PRs and ideas are welcome. Potential improvements:

- Prompt metadata (name, version, author, tags)

- Template gallery & sharing

- Cloud export/import providers (Drive, Dropbox, GitHub Gist)

- Streaming + token usage stats

Fork → branch → PR. Please include a brief description and before/after screenshots for UI changes.

MIT. See LICENSE.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for structured-prompt-builder

Similar Open Source Tools

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

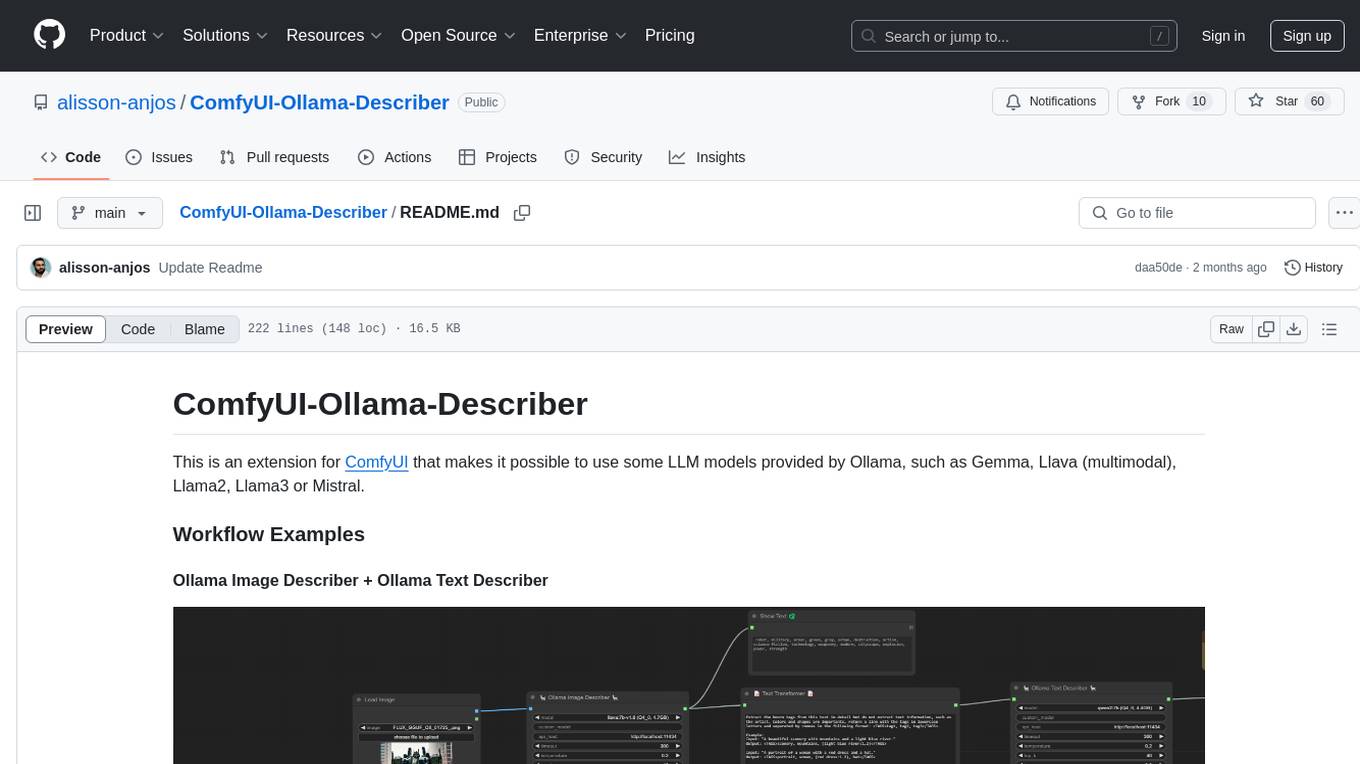

ComfyUI-Ollama-Describer

ComfyUI-Ollama-Describer is an extension for ComfyUI that enables the use of LLM models provided by Ollama, such as Gemma, Llava (multimodal), Llama2, Llama3, or Mistral. It requires the Ollama library for interacting with large-scale language models, supporting GPUs using CUDA and AMD GPUs on Windows, Linux, and Mac. The extension allows users to run Ollama through Docker and utilize NVIDIA GPUs for faster processing. It provides nodes for image description, text description, image captioning, and text transformation, with various customizable parameters for model selection, API communication, response generation, and model memory management.

retrace

Retrace is a local-first screen recording and search application for macOS, inspired by Rewind AI. It captures screen activity, extracts text via OCR, and makes everything searchable locally on-device. The project is in very early development, offering features like continuous screen capture, OCR text extraction, full-text search, timeline viewer, dashboard analytics, Rewind AI import, settings panel, global hotkeys, HEVC video encoding, search highlighting, privacy controls, and more. Built with a modular architecture, Retrace uses Swift 5.9+, SwiftUI, Vision framework, SQLite with FTS5, HEVC video encoding, CryptoKit for encryption, and more. Future releases will include features like audio transcription and semantic search. Retrace requires macOS 13.0+ (Apple Silicon required) and Xcode 15.0+ for building from source, with permissions for screen recording and accessibility. Contributions are welcome, and the project is licensed under the MIT License.

ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

pluely

Pluely is a versatile and user-friendly tool for managing tasks and projects. It provides a simple interface for creating, organizing, and tracking tasks, making it easy to stay on top of your work. With features like task prioritization, due date reminders, and collaboration options, Pluely helps individuals and teams streamline their workflow and boost productivity. Whether you're a student juggling assignments, a professional managing multiple projects, or a team coordinating tasks, Pluely is the perfect solution to keep you organized and efficient.

J.A.R.V.I.S.2.0

J.A.R.V.I.S. 2.0 is an AI-powered assistant designed for voice commands, capable of tasks like providing weather reports, summarizing news, sending emails, and more. It features voice activation, speech recognition, AI responses, and handles multiple tasks including email sending, weather reports, news reading, image generation, database functions, phone call automation, AI-based task execution, website & application automation, and knowledge-based interactions. The assistant also includes timeout handling, automatic input processing, and the ability to call multiple functions simultaneously. It requires Python 3.9 or later and specific API keys for weather, news, email, and AI access. The tool integrates Gemini AI for function execution and Ollama as a fallback mechanism. It utilizes a RAG-based knowledge system and ADB integration for phone automation. Future enhancements include deeper mobile integration, advanced AI-driven automation, improved NLP-based command execution, and multi-modal interactions.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

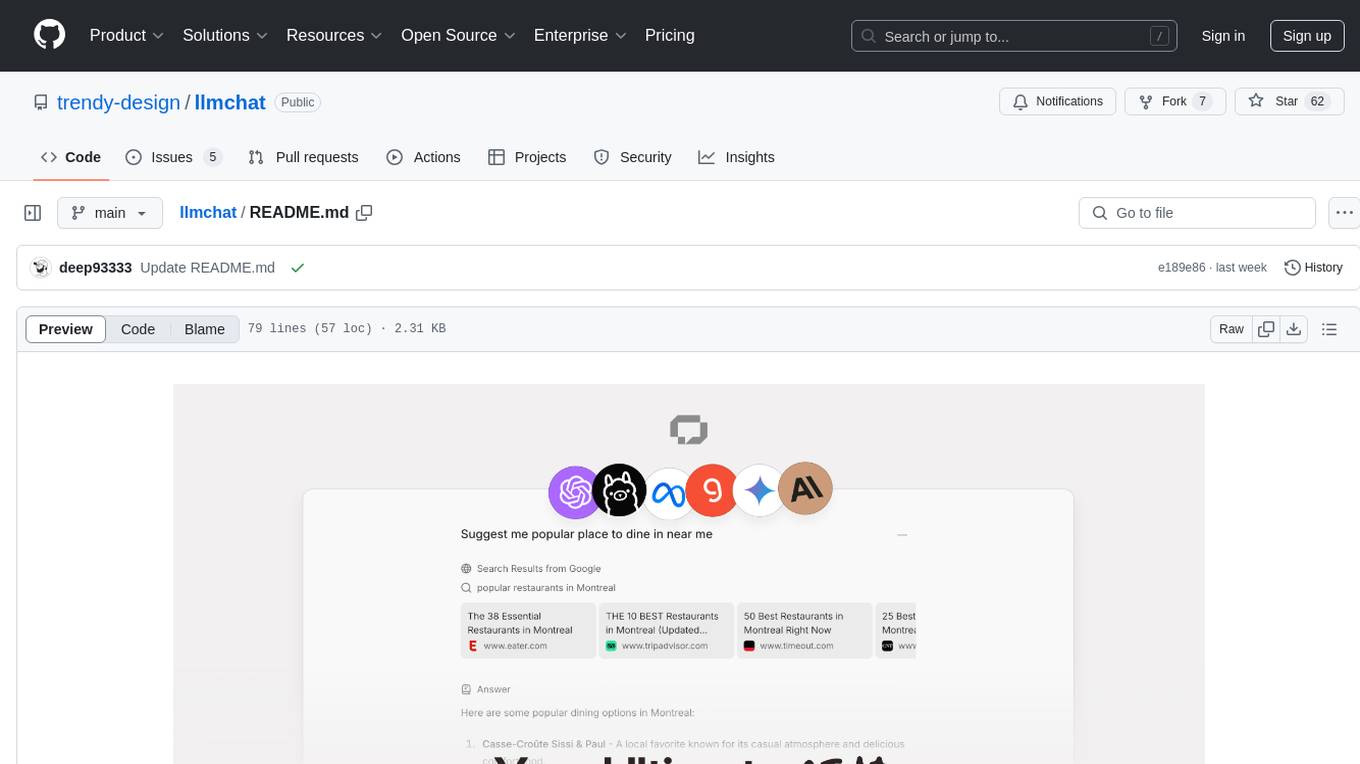

llmchat

LLMChat is an all-in-one AI chat interface that supports multiple language models, offers a plugin library for enhanced functionality, enables web search capabilities, allows customization of AI assistants, provides text-to-speech conversion, ensures secure local data storage, and facilitates data import/export. It also includes features like knowledge spaces, prompt library, personalization, and can be installed as a Progressive Web App (PWA). The tech stack includes Next.js, TypeScript, Pglite, LangChain, Zustand, React Query, Supabase, Tailwind CSS, Framer Motion, Shadcn, and Tiptap. The roadmap includes upcoming features like speech-to-text and knowledge spaces.

AgriTech

AgriTech is an AI-powered smart agriculture platform designed to assist farmers with crop recommendations, yield prediction, plant disease detection, and community-driven collaboration—enabling sustainable and data-driven farming practices. It offers AI-driven decision support for modern agriculture, early-stage plant disease detection, crop yield forecasting using machine learning models, and a collaborative ecosystem for farmers and stakeholders. The platform includes features like crop recommendation, yield prediction, disease detection, an AI chatbot for platform guidance and agriculture support, a farmer community, and shopkeeper listings. AgriTech's AI chatbot provides comprehensive support for farmers with features like platform guidance, agriculture support, decision making, image analysis, and 24/7 support. The tech stack includes frontend technologies like HTML5, CSS3, JavaScript, backend technologies like Python (Flask) and optional Node.js, machine learning libraries like TensorFlow, Scikit-learn, OpenCV, and database & DevOps tools like MySQL, MongoDB, Firebase, Docker, and GitHub Actions.

openwhispr

OpenWhispr is an open source desktop dictation application that converts speech to text using OpenAI Whisper. It features both local and cloud processing options for maximum flexibility and privacy. The application supports multiple AI providers, customizable hotkeys, agent naming, and various AI processing models. It offers a modern UI built with React 19, TypeScript, and Tailwind CSS v4, and is optimized for speed using Vite and modern tooling. Users can manage settings, view history, configure API keys, and download/manage local Whisper models. The application is cross-platform, supporting macOS, Windows, and Linux, and offers features like automatic pasting, draggable interface, global hotkeys, and compound hotkeys.

FastFlowLM

FastFlowLM is a Python library for efficient and scalable language model inference. It provides a high-performance implementation of language model scoring using n-gram language models. The library is designed to handle large-scale text data and can be easily integrated into natural language processing pipelines for tasks such as text generation, speech recognition, and machine translation. FastFlowLM is optimized for speed and memory efficiency, making it suitable for both research and production environments.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

ComfyUI_Yvann-Nodes

ComfyUI_Yvann-Nodes is a pack of custom nodes that enable audio reactivity within ComfyUI, allowing users to create AI-driven animations that sync with music. Users can generate audio reactive AI videos, control AI generation styles, content, and composition with any audio input. The tool is simple to use by dropping workflows in ComfyUI and specifying audio and visual inputs. It is flexible and works with existing ComfyUI AI tech and nodes like IPAdapter, AnimateDiff, and ControlNet. Users can pick workflows for Images → Video or Video → Video, download the corresponding .json file, drop it into ComfyUI, install missing custom nodes, set inputs, and generate audio-reactive animations.

presenton

Presenton is an open-source AI presentation generator and API that allows users to create professional presentations locally on their devices. It offers complete control over the presentation workflow, including custom templates, AI template generation, flexible generation options, and export capabilities. Users can use their own API keys for various models, integrate with Ollama for local model running, and connect to OpenAI-compatible endpoints. The tool supports multiple providers for text and image generation, runs locally without cloud dependencies, and can be deployed as a Docker container with GPU support.

For similar tasks

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

chatWeb

ChatWeb is a tool that can crawl web pages, extract text from PDF, DOCX, TXT files, and generate an embedded summary. It can answer questions based on text content using chatAPI and embeddingAPI based on GPT3.5. The tool calculates similarity scores between text vectors to generate summaries, performs nearest neighbor searches, and designs prompts to answer user questions. It aims to extract relevant content from text and provide accurate search results based on keywords. ChatWeb supports various modes, languages, and settings, including temperature control and PostgreSQL integration.

ellmer

ellmer is a tool that facilitates the use of large language models (LLM) from R. It supports various LLM providers and offers features such as streaming outputs, tool/function calling, and structured data extraction. Users can interact with ellmer in different ways, including interactive chat console, interactive method call, and programmatic chat. The tool provides support for multiple model providers and offers recommendations for different use cases, such as exploration or organizational use.

log10

Log10 is a one-line Python integration to manage your LLM data. It helps you log both closed and open-source LLM calls, compare and identify the best models and prompts, store feedback for fine-tuning, collect performance metrics such as latency and usage, and perform analytics and monitor compliance for LLM powered applications. Log10 offers various integration methods, including a python LLM library wrapper, the Log10 LLM abstraction, and callbacks, to facilitate its use in both existing production environments and new projects. Pick the one that works best for you. Log10 also provides a copilot that can help you with suggestions on how to optimize your prompt, and a feedback feature that allows you to add feedback to your completions. Additionally, Log10 provides prompt provenance, session tracking and call stack functionality to help debug prompt chains. With Log10, you can use your data and feedback from users to fine-tune custom models with RLHF, and build and deploy more reliable, accurate and efficient self-hosted models. Log10 also supports collaboration, allowing you to create flexible groups to share and collaborate over all of the above features.

LMOps

LMOps is a research initiative focusing on fundamental research and technology for building AI products with foundation models, particularly enabling AI capabilities with Large Language Models (LLMs) and Generative AI models. The project explores various aspects such as prompt optimization, longer context handling, LLM alignment, acceleration of LLMs, LLM customization, and understanding in-context learning. It also includes tools like Promptist for automatic prompt optimization, Structured Prompting for efficient long-sequence prompts consumption, and X-Prompt for extensible prompts beyond natural language. Additionally, LLMA accelerators are developed to speed up LLM inference by referencing and copying text spans from documents. The project aims to advance technologies that facilitate prompting language models and enhance the performance of LLMs in various scenarios.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

PromptAgent

PromptAgent is a repository for a novel automatic prompt optimization method that crafts expert-level prompts using language models. It provides a principled framework for prompt optimization by unifying prompt sampling and rewarding using MCTS algorithm. The tool supports different models like openai, palm, and huggingface models. Users can run PromptAgent to optimize prompts for specific tasks by strategically sampling model errors, generating error feedbacks, simulating future rewards, and searching for high-reward paths leading to expert prompts.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.