ComfyUI_Yvann-Nodes

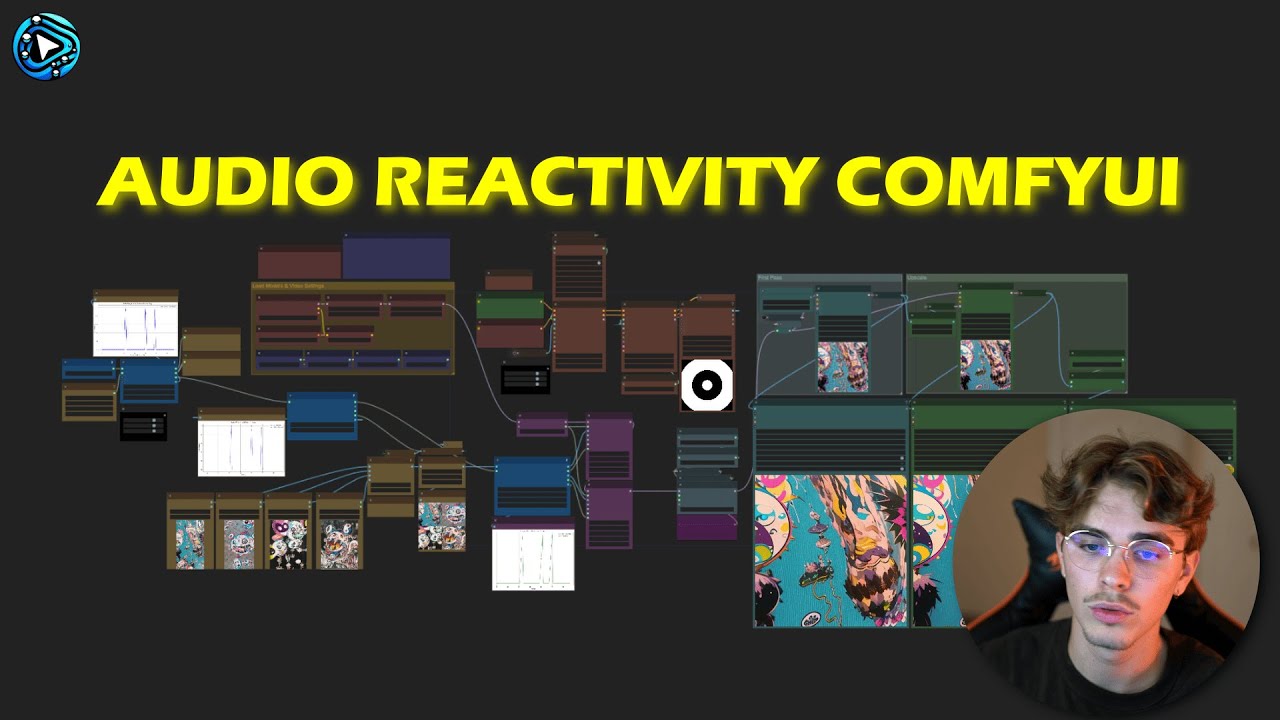

Audio Reactivity Nodes for ComfyUI 🔊 Create AI generated audio-driven animations. Compatible with IPAdapter, ControlNets, AnimateDiff...

Stars: 340

ComfyUI_Yvann-Nodes is a pack of custom nodes that enable audio reactivity within ComfyUI, allowing users to create AI-driven animations that sync with music. Users can generate audio reactive AI videos, control AI generation styles, content, and composition with any audio input. The tool is simple to use by dropping workflows in ComfyUI and specifying audio and visual inputs. It is flexible and works with existing ComfyUI AI tech and nodes like IPAdapter, AnimateDiff, and ControlNet. Users can pick workflows for Images → Video or Video → Video, download the corresponding .json file, drop it into ComfyUI, install missing custom nodes, set inputs, and generate audio-reactive animations.

README:

Made with the help of Lilien

A pack of custom nodes that enable audio reactivity within ComfyUI, allowing you to generate AI-driven animations that sync with music

- Create audio-reactive AI videos and control the style, content, and composition of AI generations with any audio

- Simple: just drop one of our workflows into ComfyUI and specify your audio and visual inputs

- Flexible: works with existing ComfyUI AI tech and nodes (e.g., IPAdapter, AnimateDiff, ControlNet)

- Install ComfyUI and ComfyUI-Manager

- Pick a workflow:

  - Images → Video

    - Takes a set of images plus an audio track.

    - Watch Tutorial:

    - Example Render (Sound On): https://github.com/user-attachments/assets/1e6590fc-e0d7-42d7-a205-433adf6c405c

  - Video → Video

    - Takes a source video plus an audio track.

    - Watch Tutorial:

    - Example Render (Sound On): https://github.com/user-attachments/assets/6b0aa544-aa20-4257-b6be-28673082c7ef

- Download the .json file for the workflow you picked.

- Drop the .json file into the ComfyUI window.

- Open the “🧩 Manager” → “Install Missing Custom Nodes”

  - Install each pack of nodes that appears.

  - Restart ComfyUI if prompted.

- Set Your Inputs & Generate

  - Provide the inputs needed (everything is explained here).

  - Click the Queue button to produce your audio-reactive animation!

That’s it! Have fun playing with the different settings! If you have any questions or problems, check my YouTube tutorials.
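Before running "Install Missing Custom Nodes", it can help to see which node types a downloaded workflow expects. The sketch below assumes the .json file is a UI-format ComfyUI workflow with a top-level "nodes" list whose entries carry a "type" field. The `node_types` helper and the node names in `example_workflow` are illustrative placeholders, not part of this node pack.

```python
import json
from collections import Counter


def node_types(workflow: dict) -> Counter:
    """Count node types in a workflow dict.

    Assumption: the workflow has a top-level "nodes" list and each
    node is a dict with a "type" key. Entries without one are skipped.
    """
    return Counter(
        node["type"]
        for node in workflow.get("nodes", [])
        if isinstance(node, dict) and "type" in node
    )


def load_workflow(path: str) -> dict:
    """Read a workflow .json file from disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


# Tiny in-memory stand-in for a downloaded workflow (placeholder names).
example_workflow = {
    "nodes": [
        {"id": 1, "type": "LoadImage"},
        {"id": 2, "type": "LoadImage"},
        {"id": 3, "type": "ExampleAudioNode"},
        {"id": 4, "type": "KSampler"},
    ]
}

for name, count in sorted(node_types(example_workflow).items()):
    print(f"{name}: {count}")
```

For a real file, `node_types(load_workflow("my_workflow.json"))` gives the same kind of summary, where the file name is whatever you saved the workflow as.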

Node-by-Node Reference

Analyzes audio to generate reactive weights for each frame.

Node Parameters

- audio_sep_model: Model from "Load Audio Separation Model"

- audio: Input audio file

- batch_size: Frames to associate with audio weights

- fps: Frame rate for the analysis

Parameters:

- analysis_mode: e.g., Drums Only, Vocals, Full Audio

- threshold: Minimum weight pass-through

- multiply: Amplification factor

Outputs:

- graph_audio (image preview)

- processed_audio, original_audio

- audio_weights (list of values)
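To make the relationship between fps, batch_size, threshold, and multiply more concrete, here is a minimal sketch of one way per-frame weights could be derived from a mono waveform. It is not the node's actual implementation: the RMS-per-frame recipe, the normalization to the loudest frame, and the clipping at 1.0 are all assumptions, and `audio_weights` is a hypothetical helper name.

```python
import math


def audio_weights(samples, sample_rate, fps, batch_size,
                  threshold=0.0, multiply=1.0):
    """Return one weight per video frame.

    Assumed recipe (not taken from the node's source):
    1. split the waveform into windows of sample_rate / fps samples,
    2. take the RMS energy of each window,
    3. normalize by the loudest window,
    4. zero out weights below `threshold`,
    5. scale by `multiply` and clip at 1.0.
    """
    samples_per_frame = sample_rate / fps
    rms = []
    for frame in range(batch_size):
        start = int(frame * samples_per_frame)
        end = int((frame + 1) * samples_per_frame)
        window = samples[start:end]
        if window:
            rms.append(math.sqrt(sum(s * s for s in window) / len(window)))
        else:
            rms.append(0.0)  # audio is shorter than the requested frames

    loudest = max(rms, default=0.0)
    if loudest == 0.0:
        return [0.0] * batch_size

    weights = []
    for value in rms:
        weight = value / loudest
        if weight < threshold:
            weight = 0.0
        weights.append(min(weight * multiply, 1.0))
    return weights


# Toy signal: 8 samples per second at 2 fps gives 4 samples per frame.
signal = [0.0] * 4 + [1.0, -1.0, 1.0, -1.0] + [0.5, -0.5, 0.5, -0.5]
print(audio_weights(signal, sample_rate=8, fps=2, batch_size=4, threshold=0.6))
```

In this toy run the quiet third frame falls below the threshold and is zeroed, while the audio runs out before the fourth frame, so that frame gets a weight of 0.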

Loads or downloads an audio separation model (e.g., HybridDemucs, OpenUnmix).

Node Parameters

- model: Choose between HybridDemucs / OpenUnmix.

- Outputs: audio_sep_model (connect to Audio Analysis or Remixer).

Identifies peaks in the audio weights to trigger transitions or events.

Node Parameters

- peaks_threshold: Sensitivity.

- min_peaks_distance: Minimum gap in frames between peaks.

- Outputs: Binary peak list, alternate list, peak indices/count, graph.
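The two parameters above can be illustrated with a small peak picker. This is a sketch under assumptions, not the node's algorithm: a peak is taken to be a local maximum at or above peaks_threshold, peaks closer than min_peaks_distance to the previously kept peak are dropped, and the "alternate list" is assumed to be a list that flips between 0 and 1 at every peak. Both function names are hypothetical.

```python
def detect_peaks(weights, peaks_threshold, min_peaks_distance):
    """Return indices of local maxima that pass the threshold.

    A candidate is skipped when it lies closer than
    `min_peaks_distance` frames to the previously kept peak.
    """
    peaks = []
    for i, weight in enumerate(weights):
        if weight < peaks_threshold:
            continue
        left = weights[i - 1] if i > 0 else float("-inf")
        right = weights[i + 1] if i + 1 < len(weights) else float("-inf")
        if weight > left and weight >= right:
            if peaks and i - peaks[-1] < min_peaks_distance:
                continue  # too close to the last kept peak
            peaks.append(i)
    return peaks


def peak_lists(num_frames, peaks):
    """Build a binary peak list and an assumed 'alternate' list."""
    peak_set = set(peaks)
    binary = [1 if i in peak_set else 0 for i in range(num_frames)]
    alternate = []
    state = 0
    for flag in binary:
        if flag:
            state = 1 - state  # flip on every peak
        alternate.append(state)
    return binary, alternate


weights = [0.1, 0.9, 0.2, 0.8, 0.1, 0.0, 0.7, 0.3]
peaks = detect_peaks(weights, peaks_threshold=0.5, min_peaks_distance=3)
binary, alternate = peak_lists(len(weights), peaks)
print(peaks, binary, alternate)
```

Raising peaks_threshold removes weaker peaks, while raising min_peaks_distance thins out peaks that fire in quick succession, such as the one at frame 3 in this example.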

Manages transitions between images based on peaks. Great for stable or style transitions.

Node Parameters

- images: Batch of images.

- peaks_weights: From “Audio Peaks Detection”.

- blend_mode, transitions_length, min_IPA_weight, etc.
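As a rough illustration of peak-driven transitions, the sketch below turns a binary peak list into a per-frame schedule of (current image, next image, blend). It assumes a linear blend over transitions_length frames and cycles through the image batch. It does not model blend_mode or min_IPA_weight, and `transition_schedule` is a hypothetical name rather than the node's API.

```python
def transition_schedule(binary_peaks, num_images, transitions_length):
    """Return a list of (current, next, blend) tuples, one per frame.

    blend is 0.0 while the current image is held and ramps linearly
    to 1.0 over `transitions_length` frames after a peak. Peaks that
    arrive while a transition is still running are ignored.
    """
    if num_images < 1 or transitions_length < 1:
        raise ValueError("num_images and transitions_length must be >= 1")

    schedule = []
    current = 0
    remaining = 0  # frames left in the active transition
    for is_peak in binary_peaks:
        if is_peak and remaining == 0:
            remaining = transitions_length
        upcoming = (current + 1) % num_images
        if remaining > 0:
            step = transitions_length - remaining + 1
            schedule.append((current, upcoming, step / transitions_length))
            remaining -= 1
            if remaining == 0:
                current = upcoming  # transition finished
        else:
            schedule.append((current, upcoming, 0.0))
    return schedule


for frame, entry in enumerate(
    transition_schedule([0, 1, 0, 0, 0, 1, 0], num_images=3, transitions_length=2)
):
    print(frame, entry)
```

A schedule like this could then be converted into two complementary weight lists, one per image, which is the kind of input a weighted image-conditioning setup typically expects.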

Links text prompts to peak indices.

Node Parameters

- peaks_index: Indices from peaks detection.

- prompts: multiline string.

- Outputs: mapped schedule string.
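The mapping from peaks to prompts can be sketched as follows. The output format, one `"frame": "prompt"` pair per line, is an assumption modeled on common prompt-scheduling nodes, not something this README specifies. The sketch also assumes peaks_index is a list of integers, and `build_prompt_schedule` is a hypothetical helper.

```python
import json


def build_prompt_schedule(peaks_index, prompts):
    """Map each prompt line to the frame where it should start.

    The first prompt starts at frame 0 and every peak index starts
    the next prompt. Prompts are reused in order when there are more
    peaks than prompt lines.
    """
    lines = [line.strip() for line in prompts.splitlines() if line.strip()]
    if not lines:
        raise ValueError("at least one prompt line is required")

    starts = [0] + [i for i in sorted(set(peaks_index)) if i > 0]
    entries = []
    for position, frame in enumerate(starts):
        prompt = lines[position % len(lines)]
        # json.dumps quotes and escapes the prompt text safely.
        entries.append(f'"{frame}": {json.dumps(prompt)}')
    return ",\n".join(entries)


print(build_prompt_schedule([12, 30], "a forest\na city\n"))
```

With two prompts and two peaks, the first prompt is reused at the second peak, so the schedule alternates between the two lines.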

Adjusts volume levels (drums, vocals, bass, others) in a track.

Node Parameters

- drums_volume, vocals_volume, bass_volume, others_volume

- Outputs: single merged audio track.
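Remixing separated stems amounts to a weighted sum. The sketch below assumes each stem is a list of float samples of equal length and that each volume is a linear gain (the node may use a different scale, such as decibels). The `remix` function is a hypothetical illustration, not the node's code.

```python
def remix(stems, volumes):
    """Mix stems into one track.

    stems:   dict mapping a stem name to a list of float samples
    volumes: dict mapping a stem name to a linear gain (default 1.0)
    The result is clipped to the [-1.0, 1.0] range.
    """
    if not stems:
        return []
    length = len(next(iter(stems.values())))
    mixed = [0.0] * length
    for name, samples in stems.items():
        if len(samples) != length:
            raise ValueError(f"stem {name!r} has a different length")
        gain = volumes.get(name, 1.0)
        for i, sample in enumerate(samples):
            mixed[i] += gain * sample
    return [max(-1.0, min(1.0, sample)) for sample in mixed]


stems = {
    "drums": [0.5, -0.5],
    "vocals": [0.25, 0.25],
    "bass": [0.0, 0.5],
    "others": [0.0, 0.0],
}
# Boost the drums, mute the vocals, leave bass and others unchanged.
print(remix(stems, {"drums": 2.0, "vocals": 0.0}))
```

Setting a stem's gain to 0.0 mutes it, which is one way to isolate drums before feeding the merged track into an audio analysis step.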

Repeats a set of images N times.

Node Parameters

- mask: Mask input.

- Outputs: Repeated images.

Flips sign of float values.

Node Parameters

- floats: list of floats.

- Outputs: inverted list.

Plots float values as a graph.

Node Parameters

- floats (and optional second/third).

- Outputs: visual graph image.

Converts a mask into a single float value.

Node Parameters

- mask: input.

- Outputs: float.

Transforms float lists into an IPAdapter “weight strategy.”

Node Parameters

- floats: list of floats.

- Outputs: dictionary with strategy info.

Please give us a ⭐ on GitHub: it helps us improve the tool, and it's free for you! (:

Alternative AI tools for ComfyUI_Yvann-Nodes

Similar Open Source Tools

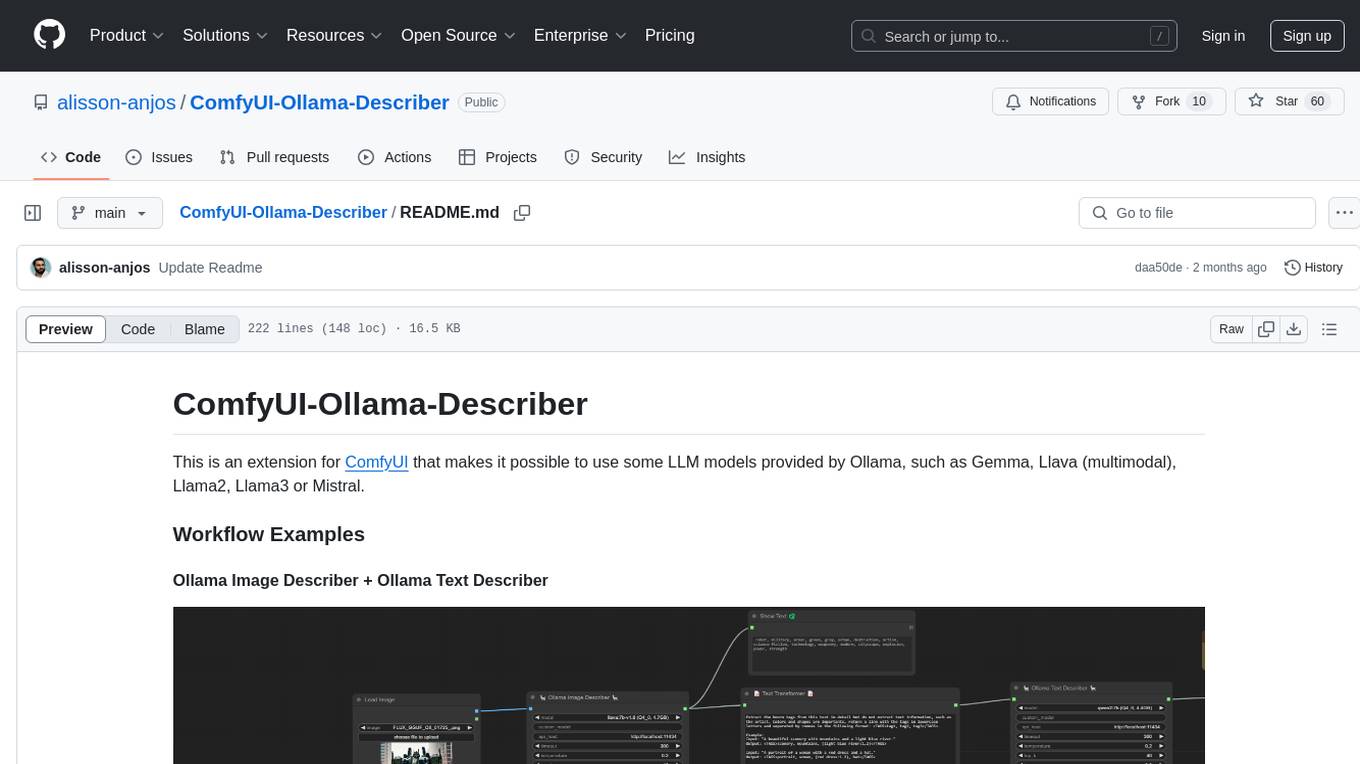

ComfyUI-Ollama-Describer

ComfyUI-Ollama-Describer is an extension for ComfyUI that enables the use of LLM models provided by Ollama, such as Gemma, Llava (multimodal), Llama2, Llama3, or Mistral. It requires the Ollama library for interacting with large-scale language models, supporting GPUs using CUDA and AMD GPUs on Windows, Linux, and Mac. The extension allows users to run Ollama through Docker and utilize NVIDIA GPUs for faster processing. It provides nodes for image description, text description, image captioning, and text transformation, with various customizable parameters for model selection, API communication, response generation, and model memory management.

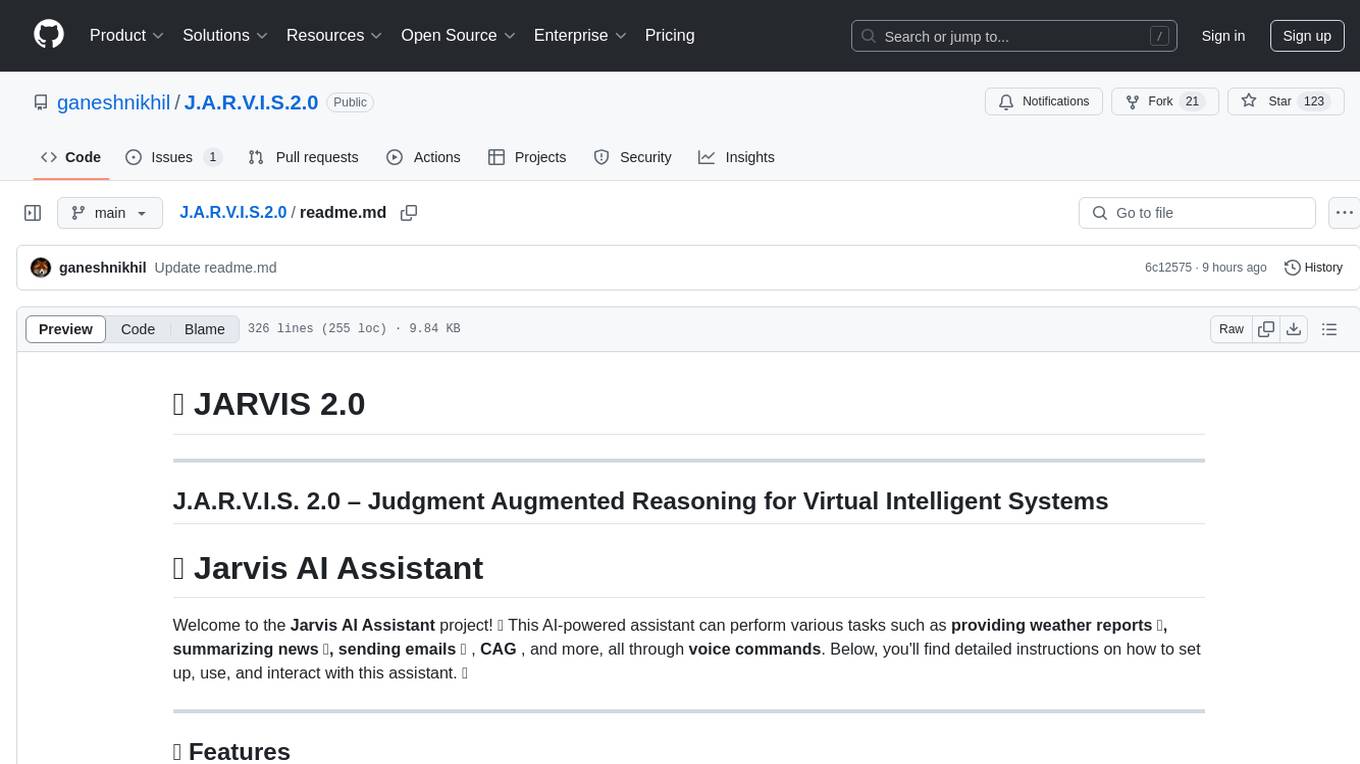

J.A.R.V.I.S.2.0

J.A.R.V.I.S. 2.0 is an AI-powered assistant designed for voice commands, capable of tasks like providing weather reports, summarizing news, sending emails, and more. It features voice activation, speech recognition, AI responses, and handles multiple tasks including email sending, weather reports, news reading, image generation, database functions, phone call automation, AI-based task execution, website & application automation, and knowledge-based interactions. The assistant also includes timeout handling, automatic input processing, and the ability to call multiple functions simultaneously. It requires Python 3.9 or later and specific API keys for weather, news, email, and AI access. The tool integrates Gemini AI for function execution and Ollama as a fallback mechanism. It utilizes a RAG-based knowledge system and ADB integration for phone automation. Future enhancements include deeper mobile integration, advanced AI-driven automation, improved NLP-based command execution, and multi-modal interactions.

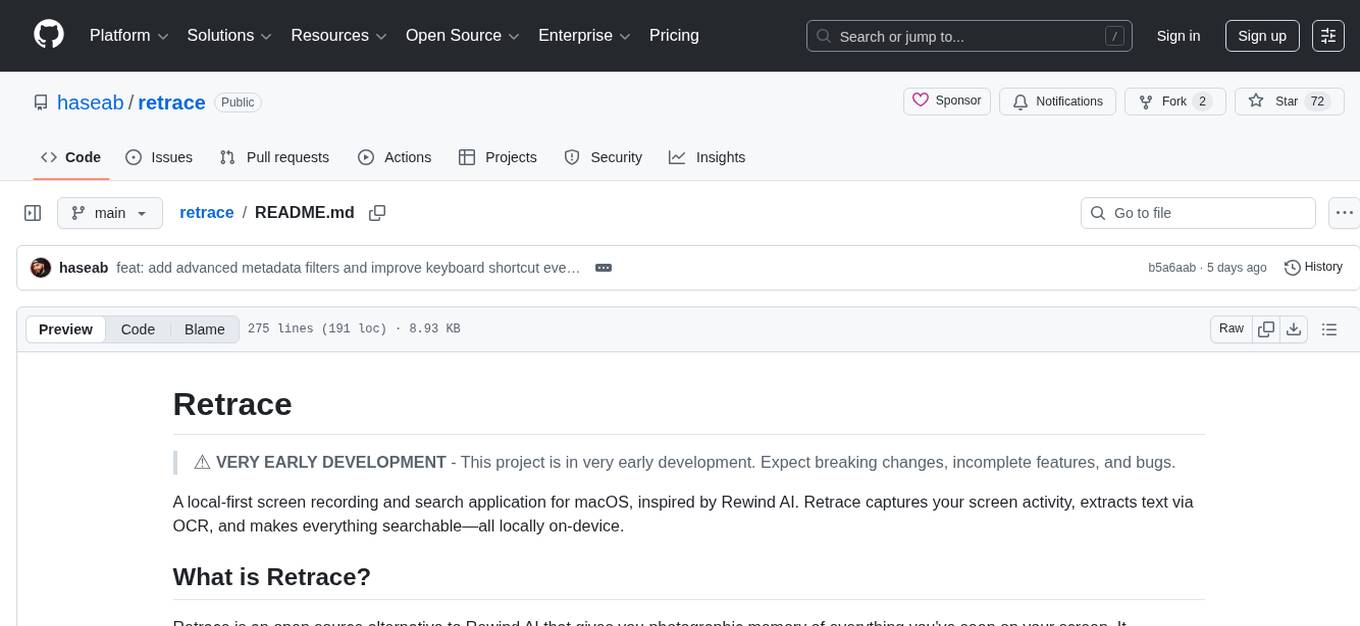

retrace

Retrace is a local-first screen recording and search application for macOS, inspired by Rewind AI. It captures screen activity, extracts text via OCR, and makes everything searchable locally on-device. The project is in very early development, offering features like continuous screen capture, OCR text extraction, full-text search, timeline viewer, dashboard analytics, Rewind AI import, settings panel, global hotkeys, HEVC video encoding, search highlighting, privacy controls, and more. Built with a modular architecture, Retrace uses Swift 5.9+, SwiftUI, Vision framework, SQLite with FTS5, HEVC video encoding, CryptoKit for encryption, and more. Future releases will include features like audio transcription and semantic search. Retrace requires macOS 13.0+ (Apple Silicon required) and Xcode 15.0+ for building from source, with permissions for screen recording and accessibility. Contributions are welcome, and the project is licensed under the MIT License.

ito

Ito is an intelligent voice assistant that provides seamless voice dictation to any application on your computer. It works in any app, offers global keyboard shortcuts, real-time transcription, and instant text insertion. It is smart and adaptive with features like custom dictionary, context awareness, multi-language support, and intelligent punctuation. Users can customize trigger keys, audio preferences, and privacy controls. It also offers data management features like a notes system, interaction history, cloud sync, and export capabilities. Ito is built as a modern Electron application with a multi-process architecture and utilizes technologies like React, TypeScript, Rust, gRPC, and AWS CDK.

oneclick-subtitles-generator

A comprehensive web application for auto-subtitling videos and audio, translating SRT files, generating AI narration with voice cloning, creating background images, and rendering professional subtitled videos. Designed for content creators, educators, and general users who need high-quality subtitle generation and video production capabilities.

RSTGameTranslation

RSTGameTranslation is a tool designed for translating game text into multiple languages efficiently. It provides a user-friendly interface for game developers to easily manage and localize their game content. With RSTGameTranslation, developers can streamline the translation process, ensuring consistency and accuracy across different language versions of their games. The tool supports various file formats commonly used in game development, making it versatile and adaptable to different project requirements. Whether you are working on a small indie game or a large-scale production, RSTGameTranslation can help you reach a global audience by making localization a seamless and hassle-free experience.

structured-prompt-builder

A lightweight, browser-first tool for designing well-structured AI prompts with a clean UI, live previews, a local Prompt Library, and optional Gemini-powered prompt optimization. It supports structured fields like Role, Task, Audience, Style, Tone, Constraints, Steps, Inputs, and Few-shot examples. Users can copy/download prompts in Markdown, JSON, and YAML formats, and utilize model parameters like Temperature, Top-p, Max tokens, Presence & Frequency penalties. The tool also features a Local Prompt Library for saving, loading, duplicating, and deleting prompts, as well as a Gemini Optimizer for cleaning grammar/clarity without altering the schema. It offers dark/light friendly styles and a focused reading mode for long prompts.

llxprt-code

LLxprt Code is an AI-powered coding assistant that works with any LLM provider, offering a command-line interface for querying and editing codebases, generating applications, and automating development workflows. It supports various subscriptions, provider flexibility, top open models, local model support, and a privacy-first approach. Users can interact with LLxprt Code in both interactive and non-interactive modes, leveraging features like subscription OAuth, multi-account failover, load balancer profiles, and extensive provider support. The tool also allows for the creation of advanced subagents for specialized tasks and integrates with the Zed editor for in-editor chat and code selection.

system-prompts-and-models-of-ai-tools

This repository contains a significant portion of the full official v0, Manus, and Cursor system prompts and AI models. It includes more than 5,000 lines of insights into their structure and functionality. The available files include FULL v0, v0 model.txt, v0 tools.txt, Cursor (with cursor agent.txt, cursor ask.txt, cursor edit.txt), and a Manus folder with multiple files inside.

llm-apps-java-spring-ai

The 'LLM Applications with Java and Spring AI' repository provides samples demonstrating how to build Java applications powered by Generative AI and Large Language Models (LLMs) using Spring AI. It includes projects for question answering, chat completion models, prompts, templates, multimodality, output converters, embedding models, document ETL pipeline, function calling, image models, and audio models. The repository also lists prerequisites such as Java 21, Docker/Podman, Mistral AI API Key, OpenAI API Key, and Ollama. Users can explore various use cases and projects to leverage LLMs for text generation, vector transformation, document processing, and more.

llmchat

LLMChat is an all-in-one AI chat interface that supports multiple language models, offers a plugin library for enhanced functionality, enables web search capabilities, allows customization of AI assistants, provides text-to-speech conversion, ensures secure local data storage, and facilitates data import/export. It also includes features like knowledge spaces, prompt library, personalization, and can be installed as a Progressive Web App (PWA). The tech stack includes Next.js, TypeScript, Pglite, LangChain, Zustand, React Query, Supabase, Tailwind CSS, Framer Motion, Shadcn, and Tiptap. The roadmap includes upcoming features like speech-to-text and knowledge spaces.

ChordMiniApp

ChordMini is an advanced music analysis platform with AI-powered chord recognition, beat detection, and synchronized lyrics. It features a clean and intuitive interface for YouTube search, chord progression visualization, interactive guitar diagrams with accurate fingering patterns, lead sheet with AI assistant for synchronized lyrics transcription, and various add-on features like Roman Numeral Analysis, Key Modulation Signals, Simplified Chord Notation, and Enhanced Chord Correction. The tool requires Node.js, Python 3.9+, and a Firebase account for setup. It offers a hybrid backend architecture for local development and production deployments, with features like beat detection, chord recognition, lyrics processing, rate limiting, and audio processing supporting MP3, WAV, and FLAC formats. ChordMini provides a comprehensive music analysis workflow from user input to visualization, including dual input support, environment-aware processing, intelligent caching, advanced ML pipeline, and rich visualization options.

gen-ai-experiments

Gen-AI-Experiments is a structured collection of Jupyter notebooks and AI experiments designed to guide users through various AI tools, frameworks, and models. It offers valuable resources for both beginners and experienced practitioners, covering topics such as AI agents, model testing, RAG systems, real-world applications, and open-source tools. The repository includes folders with curated libraries, AI agents, experiments, LLM testing, open-source libraries, RAG experiments, and educhain experiments, each focusing on different aspects of AI development and application.

stable-diffusion.cpp

The stable-diffusion.cpp repository provides a pure C/C++ implementation of Stable Diffusion inference. It offers features such as support for different versions of Stable Diffusion, a lightweight and dependency-free implementation, various quantization options, memory-efficient CPU inference, GPU acceleration, and more. Users can download the built executable program or build it manually. The repository also includes instructions for downloading weights, building from scratch, using different acceleration methods, running the tool, converting weights, and using features like Flash Attention, ESRGAN upscaling, and PhotoMaker support. Additionally, it lists future TODOs and provides information on memory requirements, bindings, UIs, contributors, and references.

presenton

Presenton is an open-source AI presentation generator and API that allows users to create professional presentations locally on their devices. It offers complete control over the presentation workflow, including custom templates, AI template generation, flexible generation options, and export capabilities. Users can use their own API keys for various models, integrate with Ollama for local model running, and connect to OpenAI-compatible endpoints. The tool supports multiple providers for text and image generation, runs locally without cloud dependencies, and can be deployed as a Docker container with GPU support.

For similar jobs

latentbox

Latent Box is a curated collection of resources for AI, creativity, and art. It aims to bridge the information gap with high-quality content, promote diversity and interdisciplinary collaboration, and maintain updates through community co-creation. The website features a wide range of resources, including articles, tutorials, tools, and datasets, covering various topics such as machine learning, computer vision, natural language processing, generative art, and creative coding.

LeaferJS

LeaferJS is a colorful HTML5 Canvas 2D graphics rendering engine that can be combined with AI drawing to generate interfaces. It gives you the superpower to instantly create 1 million graphics, free and open source, easy to learn and use, with rich scenes.

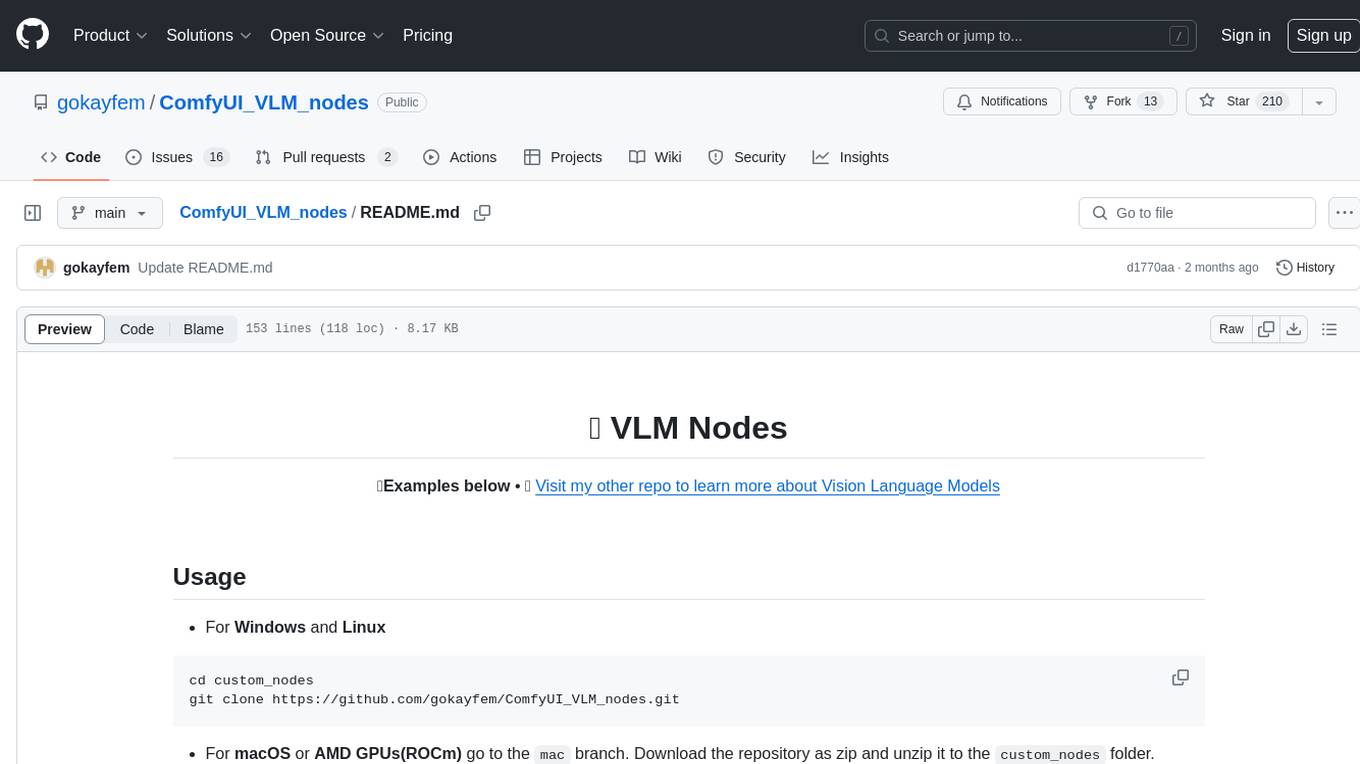

ComfyUI_VLM_nodes

ComfyUI_VLM_nodes is a repository containing various nodes for utilizing Vision Language Models (VLMs) and Language Models (LLMs). The repository provides nodes for tasks such as structured output generation, image to music conversion, LLM prompt generation, automatic prompt generation, and more. Users can integrate different models like InternLM-XComposer2-VL, UForm-Gen2, Kosmos-2, moondream1, moondream2, JoyTag, and Chat Musician. The nodes support features like extracting keywords, generating prompts, suggesting prompts, and obtaining structured outputs. The repository includes examples and instructions for using the nodes effectively.

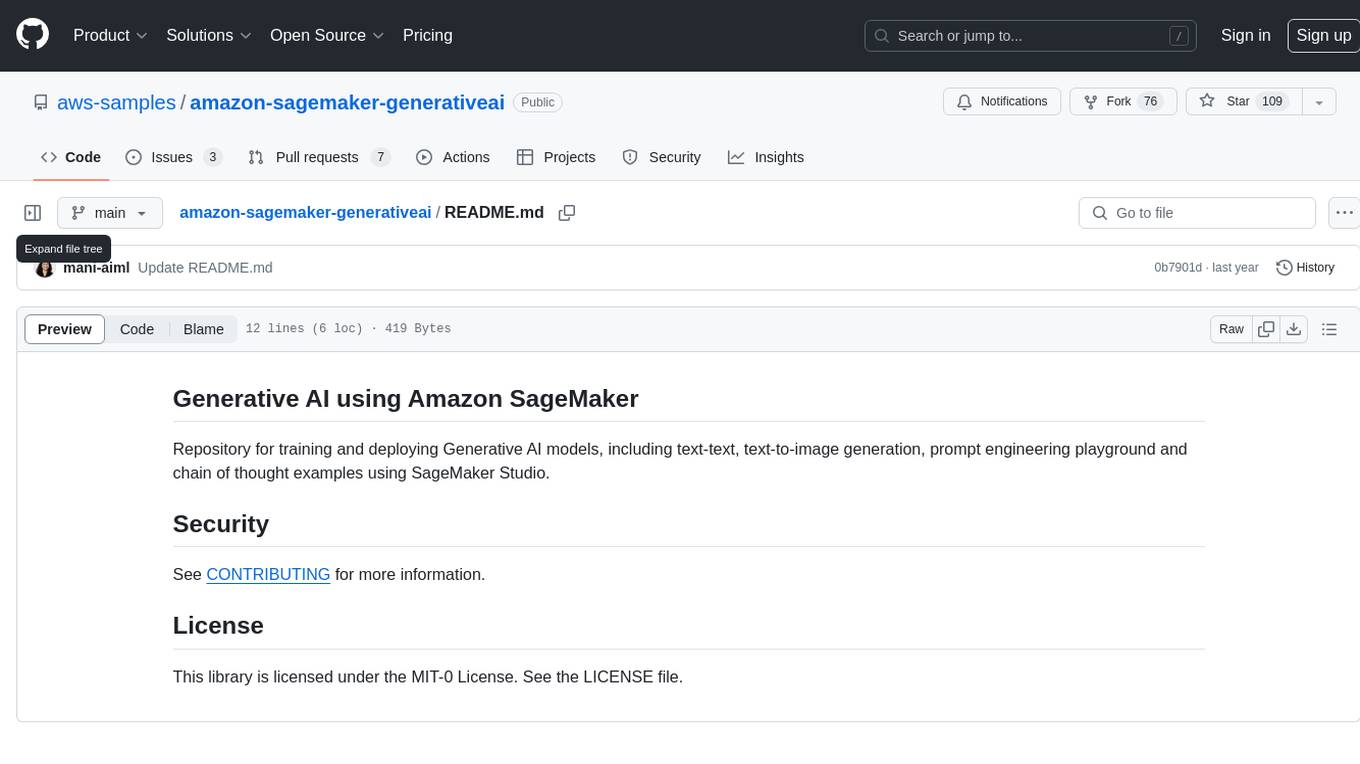

amazon-sagemaker-generativeai

Repository for training and deploying Generative AI models, including text-text, text-to-image generation, prompt engineering playground and chain of thought examples using SageMaker Studio. The tool provides a platform for users to experiment with generative AI techniques, enabling them to create text and image outputs based on input data. It offers a range of functionalities for training and deploying models, as well as exploring different generative AI applications.

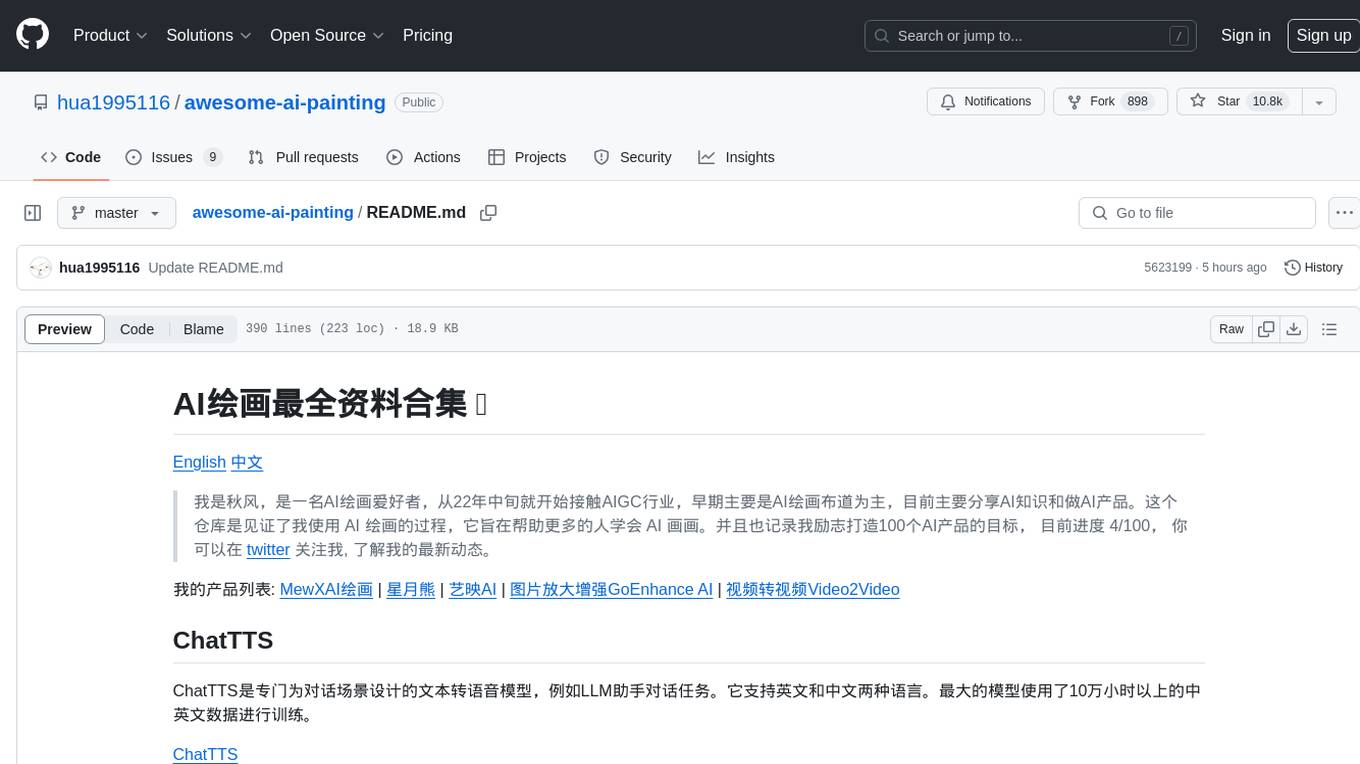

awesome-ai-painting

This repository, named 'awesome-ai-painting', is a comprehensive collection of resources related to AI painting. It is curated by a user named 秋风, who is an AI painting enthusiast with a background in the AIGC industry. The repository aims to help more people learn AI painting and also documents the user's goal of creating 100 AI products, with current progress at 4/100. The repository includes information on various AI painting products, tutorials, tools, and models, providing a valuable resource for individuals interested in AI painting and related technologies.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

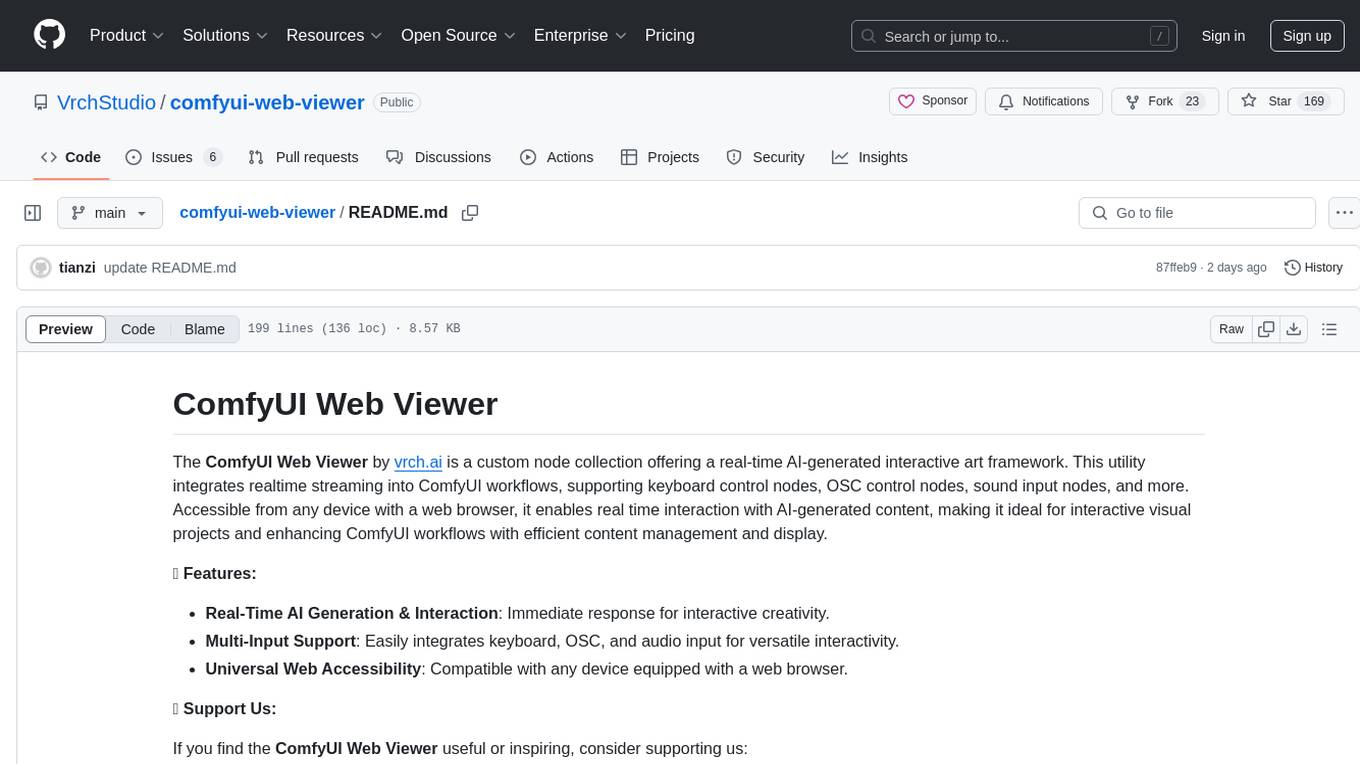

comfyui-web-viewer

The ComfyUI Web Viewer by vrch.ai is a real-time AI-generated interactive art framework that integrates realtime streaming into ComfyUI workflows. It supports keyboard control nodes, OSC control nodes, sound input nodes, and more, accessible from any device with a web browser. It enables real-time interaction with AI-generated content, ideal for interactive visual projects and enhancing ComfyUI workflows with efficient content management and display.