aegra

Open source LangGraph Platform alternative - Self-hosted AI agent backend with FastAPI and PostgreSQL. Zero vendor lock-in, full control over your agent infrastructure.

Stars: 637

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

README:

Self-hosted LangSmith Deployments alternative. Your infrastructure, your rules.

Aegra is a drop-in replacement for LangSmith Deployments. Use the same LangGraph SDK, same APIs, but run it on your own infrastructure with PostgreSQL persistence.

Works with: Agent Chat UI | LangGraph Studio | AG-UI / CopilotKit

Prerequisites: Python 3.11+, Docker (for PostgreSQL)

pip install aegra-cli

# Initialize a new project — prompts for location, template, and name

aegra init

# Follow the printed next steps:

cd <your-project>

cp .env.example .env # Add your OPENAI_API_KEY to .env

uv sync # Install dependencies

uv run aegra dev # Start PostgreSQL + dev serverNote: Always install

aegra-clidirectly — not theaegrameta-package. Theaegrapackage on PyPI is a convenience wrapper that does not support version pinning.

git clone https://github.com/ibbybuilds/aegra.git

cd aegra

cp .env.example .env

# Add your OPENAI_API_KEY to .env

docker compose upOpen http://localhost:8000/docs to explore the API.

Your existing LangGraph code works without changes:

from langgraph_sdk import get_client

client = get_client(url="http://localhost:8000")

assistant = await client.assistants.create(graph_id="agent")

thread = await client.threads.create()

async for chunk in client.runs.stream(

thread_id=thread["thread_id"],

assistant_id=assistant["assistant_id"],

input={"messages": [{"type": "human", "content": "Hello!"}]},

):

print(chunk)Based on LangChain pricing as of February 2026. An enterprise tier with self-hosting is also available at custom pricing.

| LangSmith Deployments | Aegra | |

|---|---|---|

| Deploy agents | Local dev only (Free), paid cloud (Plus) | Free, unlimited |

| Custom auth | Not available (Free), available (Plus) | Python handlers (JWT/OAuth/Firebase) |

| Self-hosted | Enterprise only (license key required) | Always (Apache 2.0) |

| Own database | Managed only (Free/Plus), bring your own (Enterprise) | Bring your own Postgres |

| Tracing | LangSmith only | Any OTLP backend (Langfuse, Phoenix, etc.) |

| Data residency | LangChain cloud (Free/Plus), your infrastructure (Enterprise) | Your infrastructure |

| SDK | LangGraph SDK | Same LangGraph SDK |

- Agent Protocol compliant - Works with Agent Chat UI, LangGraph Studio, CopilotKit

- Human-in-the-loop - Approval gates and user intervention points

- Streaming - Real-time responses with network resilience

- Persistent state - PostgreSQL checkpoints via LangGraph

- Configurable auth - JWT, OAuth, Firebase, or none

- Unified Observability - Fan-out tracing support via OpenTelemetry

- Semantic store - Vector embeddings with pgvector

- Custom routes - Add your own FastAPI endpoints

aegra init # Interactive — asks for location, template, and name

aegra init ./my-agent # Create at path (still prompts for template)

aegra dev # Start development server (hot reload + auto PostgreSQL)

aegra serve # Start production server (no reload)

aegra up # Build and start all Docker services

aegra down # Stop Docker services

aegra version # Show version infodocs.aegra.dev — Full documentation with guides, API reference, and configuration.

| Topic | Description |

|---|---|

| Quickstart | Get a running server in under 5 minutes |

| Configuration | aegra.json format and all options |

| Authentication | JWT, OAuth, Firebase, or custom auth handlers |

| Streaming | 8 SSE stream modes with reconnection |

| Store | Key-value and semantic search storage |

| Observability | Fan-out tracing to Langfuse, Phoenix, or any OTLP backend |

| Deployment | Docker, PaaS, and Kubernetes deployment |

| Migration | Migrate from LangSmith Deployments |

- Discord - Chat with the community

- GitHub Discussions - Ask questions, share ideas

- GitHub Issues - Report bugs

- FastAPI - HTTP layer

- LangGraph - State management & graph execution

- PostgreSQL - Persistence & checkpoints

- OpenTelemetry - Observability standard

- pgvector - Vector embeddings

We welcome contributions! See Contributing guide and check out good first issues.

The best contribution is code, PRs, and bug reports - that's what makes open source thrive.

For those who want to support Aegra financially, whether you're using it in production or just believe in what we're building, you can become a sponsor. Sponsorships help keep development active and the project healthy.

Apache 2.0 - see LICENSE.

⭐ Star us if Aegra helps you escape vendor lock-in ⭐

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aegra

Similar Open Source Tools

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

zeptoclaw

ZeptoClaw is an ultra-lightweight personal AI assistant that offers a compact Rust binary with 29 tools, 8 channels, 9 providers, and container isolation. It focuses on integrations, security, and size discipline without compromising on performance. With features like container isolation, prompt injection detection, secret leak scanner, policy engine, input validator, and more, ZeptoClaw ensures secure AI agent execution. It supports migration from OpenClaw, deployment on various platforms, and configuration of LLM providers. ZeptoClaw is designed for efficient AI assistance with minimal resource consumption and maximum security.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

quotio

Quotio is a native macOS application designed as the ultimate command center for managing CLIProxyAPI, a local proxy server that powers AI coding agents. It allows users to connect multiple AI accounts, track quotas, configure CLI tools, and monitor request traffic in real-time. With features like multi-provider support, standalone quota mode, one-click agent configuration, real-time dashboard, smart quota management, API key management, menu bar integration, notifications, auto-update, and multilingual support, Quotio offers a comprehensive solution for AI coding assistants on macOS.

sdk

The Kubeflow SDK is a set of unified Pythonic APIs that simplify running AI workloads at any scale without needing to learn Kubernetes. It offers consistent APIs across the Kubeflow ecosystem, enabling users to focus on building AI applications rather than managing complex infrastructure. The SDK provides a unified experience, simplifies AI workloads, is built for scale, allows rapid iteration, and supports local development without a Kubernetes cluster.

pocketpaw

PocketPaw is a lightweight and user-friendly tool designed for managing and organizing your digital assets. It provides a simple interface for users to easily categorize, tag, and search for files across different platforms. With PocketPaw, you can efficiently organize your photos, documents, and other files in a centralized location, making it easier to access and share them. Whether you are a student looking to organize your study materials, a professional managing project files, or a casual user wanting to declutter your digital space, PocketPaw is the perfect solution for all your file management needs.

openakita

OpenAkita is a self-evolving AI Agent framework that autonomously learns new skills, performs daily self-checks and repairs, accumulates experience from task execution, and persists until the task is done. It auto-generates skills, installs dependencies, learns from mistakes, and remembers preferences. The framework is standards-based, multi-platform, and provides a Setup Center GUI for intuitive installation and configuration. It features self-learning and evolution mechanisms, a Ralph Wiggum Mode for persistent execution, multi-LLM endpoints, multi-platform IM support, desktop automation, multi-agent architecture, scheduled tasks, identity and memory management, a tool system, and a guided wizard for setup.

agnix

Agnix is a tool designed to validate agent configurations before they disrupt your workflow. It offers 230 rules to check various agent files like CLAUDE.md, SKILL.md, hooks, and MCP configs. The tool aims to prevent AI tools from ignoring misconfigured agents by catching errors early. Agnix provides auto-fix functionality and supports multiple tools like Claude Code, Codex CLI, OpenCode, Cursor, and Copilot. It constantly updates its rules and tool support to ensure accurate validation of agent configurations.

axonhub

AxonHub is an all-in-one AI development platform that serves as an AI gateway allowing users to switch between model providers without changing any code. It provides features like vendor lock-in prevention, integration simplification, observability enhancement, and cost control. Users can access any model using any SDK with zero code changes. The platform offers full request tracing, enterprise RBAC, smart load balancing, and real-time cost tracking. AxonHub supports multiple databases, provides a unified API gateway, and offers flexible model management and API key creation for authentication. It also integrates with various AI coding tools and SDKs for seamless usage.

orchestkit

OrchestKit is a powerful and flexible orchestration tool designed to streamline and automate complex workflows. It provides a user-friendly interface for defining and managing orchestration tasks, allowing users to easily create, schedule, and monitor workflows. With support for various integrations and plugins, OrchestKit enables seamless automation of tasks across different systems and applications. Whether you are a developer looking to automate deployment processes or a system administrator managing complex IT operations, OrchestKit offers a comprehensive solution to simplify and optimize your workflow management.

agentscope

AgentScope is an agent-oriented programming tool for building LLM (Large Language Model) applications. It provides transparent development, realtime steering, agentic tools management, model agnostic programming, LEGO-style agent building, multi-agent support, and high customizability. The tool supports async invocation, reasoning models, streaming returns, async/sync tool functions, user interruption, group-wise tools management, streamable transport, stateful/stateless mode MCP client, distributed and parallel evaluation, multi-agent conversation management, and fine-grained MCP control. AgentScope Studio enables tracing and visualization of agent applications. The tool is highly customizable and encourages customization at various levels.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

cua

Cua is a tool for creating and running high-performance macOS and Linux virtual machines on Apple Silicon, with built-in support for AI agents. It provides libraries like Lume for running VMs with near-native performance, Computer for interacting with sandboxes, and Agent for running agentic workflows. Users can refer to the documentation for onboarding, explore demos showcasing AI-Gradio and GitHub issue fixing, and utilize accessory libraries like Core, PyLume, Computer Server, and SOM. Contributions are welcome, and the tool is open-sourced under the MIT License.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks, containing many common approaches in NLP. It can help researchers and developers to construct and train models more conveniently and rapidly. Key features of MindNLP include: * Comprehensive data processing: Several classical NLP datasets are packaged into a friendly module for easy use, such as Multi30k, SQuAD, CoNLL, etc. * Friendly NLP model toolset: MindNLP provides various configurable components. It is friendly to customize models using MindNLP. * Easy-to-use engine: MindNLP simplified complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily. MindNLP supports a wide range of NLP tasks, including: * Language modeling * Machine translation * Question answering * Sentiment analysis * Sequence labeling * Summarization MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. For support related to large language models, including pre-training, fine-tuning, and inference demo examples, you can find them in the "llm" directory. To install MindNLP, you can either install it from Pypi, download the daily build wheel, or install it from source. The installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper: @misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

For similar tasks

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

azure-ai-foundry-baseline

This repository serves as a reference implementation for running a chat application and an AI orchestration layer using Azure AI Foundry Agent service and OpenAI foundation models. It covers common generative AI chat application characteristics such as creating agents, querying data stores, chat memory database, orchestration logic, and calling language models. The implementation also includes production requirements like network isolation, Azure AI Foundry Agent Service dependencies, availability zone reliability, and limiting egress network traffic with Azure Firewall.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

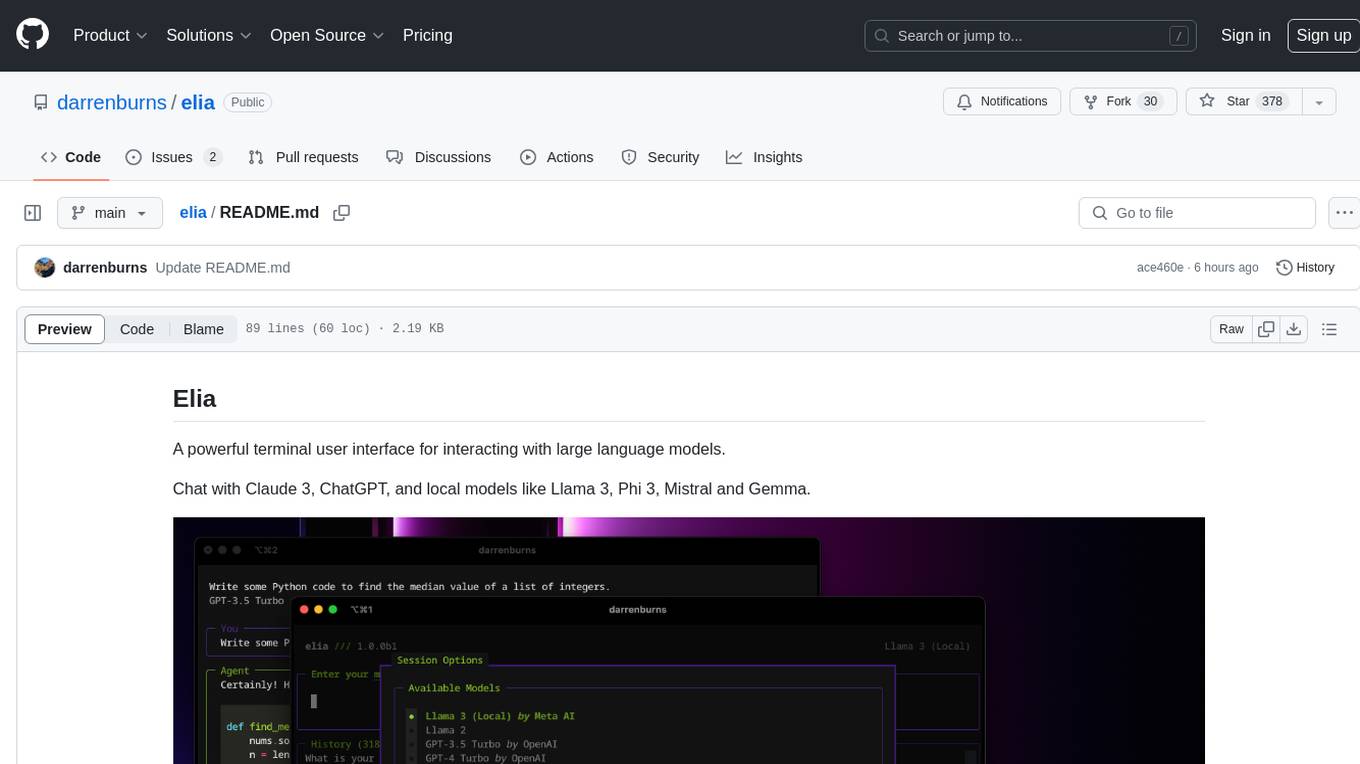

elia

Elia is a powerful terminal user interface designed for interacting with large language models. It allows users to chat with models like Claude 3, ChatGPT, Llama 3, Phi 3, Mistral, and Gemma. Conversations are stored locally in a SQLite database, ensuring privacy. Users can run local models through 'ollama' without data leaving their machine. Elia offers easy installation with pipx and supports various environment variables for different models. It provides a quick start to launch chats and manage local models. Configuration options are available to customize default models, system prompts, and add new models. Users can import conversations from ChatGPT and wipe the database when needed. Elia aims to enhance user experience in interacting with language models through a user-friendly interface.

EDDI

E.D.D.I (Enhanced Dialog Driven Interface) is an enterprise-certified chatbot middleware that offers advanced prompt and conversation management for Conversational AI APIs. Developed in Java using Quarkus, it is lean, RESTful, scalable, and cloud-native. E.D.D.I is highly scalable and designed to efficiently manage conversations in AI-driven applications, with seamless API integration capabilities. Notable features include configurable NLP and Behavior rules, support for multiple chatbots running concurrently, and integration with MongoDB, OAuth 2.0, and HTML/CSS/JavaScript for UI. The project requires Java 21, Maven 3.8.4, and MongoDB >= 5.0 to run. It can be built as a Docker image and deployed using Docker or Kubernetes, with additional support for integration testing and monitoring through Prometheus and Kubernetes endpoints.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

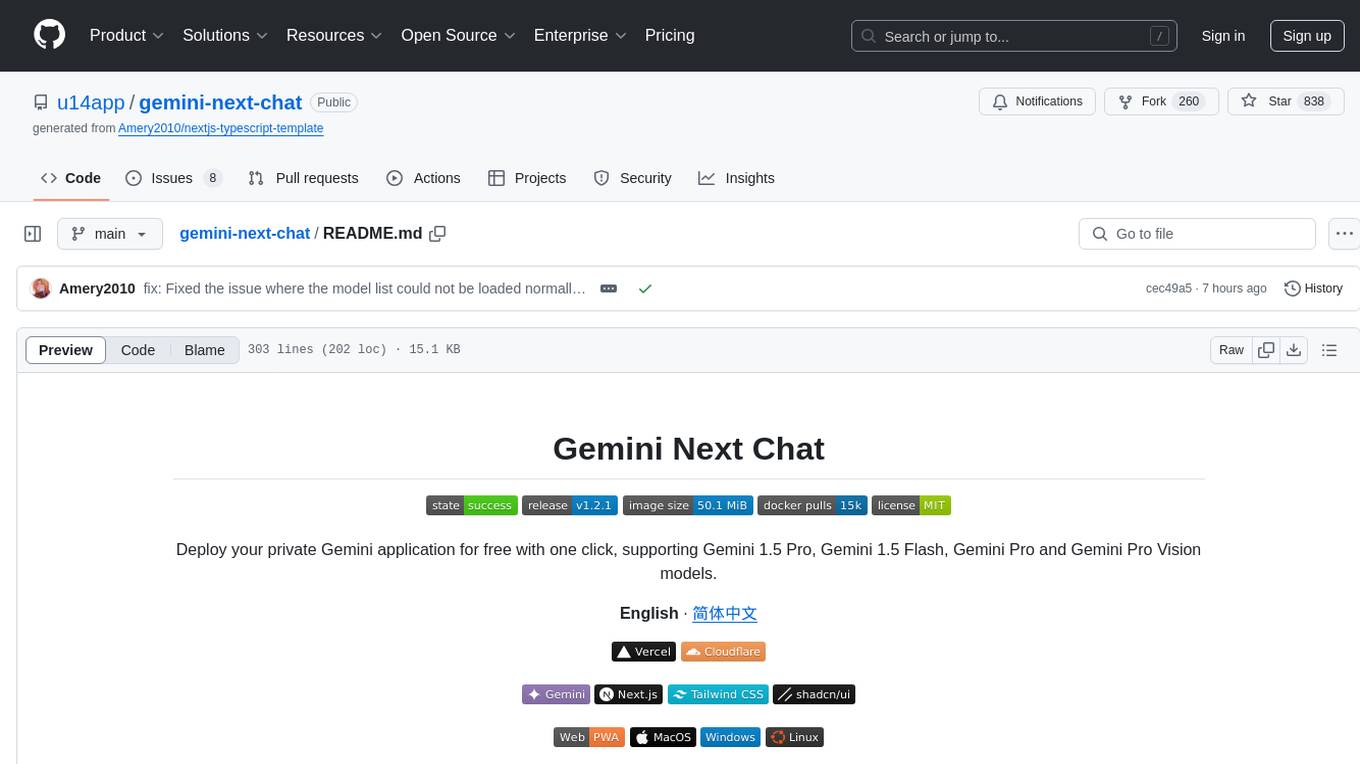

gemini-next-chat

Gemini Next Chat is an open-source, extensible high-performance Gemini chatbot framework that supports one-click free deployment of private Gemini web applications. It provides a simple interface with image recognition and voice conversation, supports multi-modal models, talk mode, visual recognition, assistant market, support plugins, conversation list, full Markdown support, privacy and security, PWA support, well-designed UI, fast loading speed, static deployment, and multi-language support.

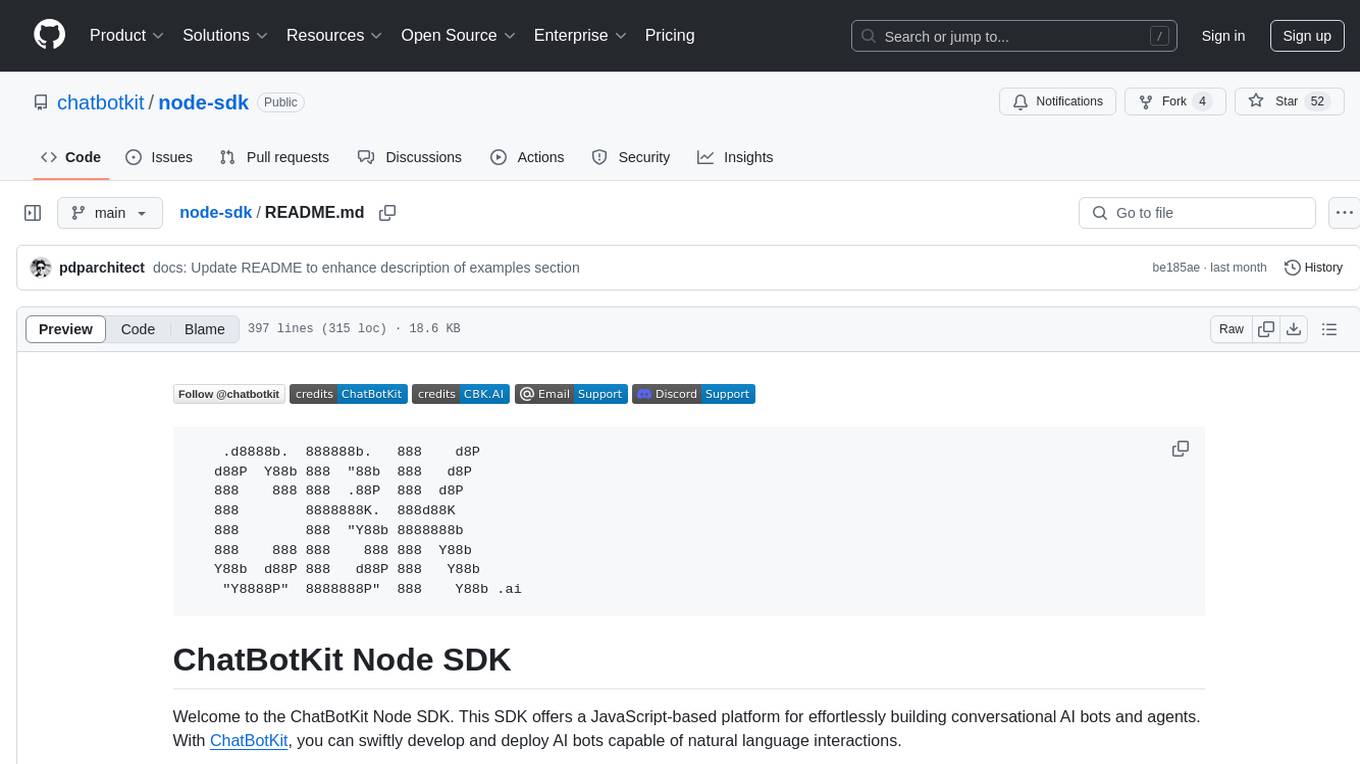

node-sdk

The ChatBotKit Node SDK is a JavaScript-based platform for building conversational AI bots and agents. It offers easy setup, serverless compatibility, modern framework support, customizability, and multi-platform deployment. With capabilities like multi-modal and multi-language support, conversation management, chat history review, custom datasets, and various integrations, this SDK enables users to create advanced chatbots for websites, mobile apps, and messaging platforms.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.