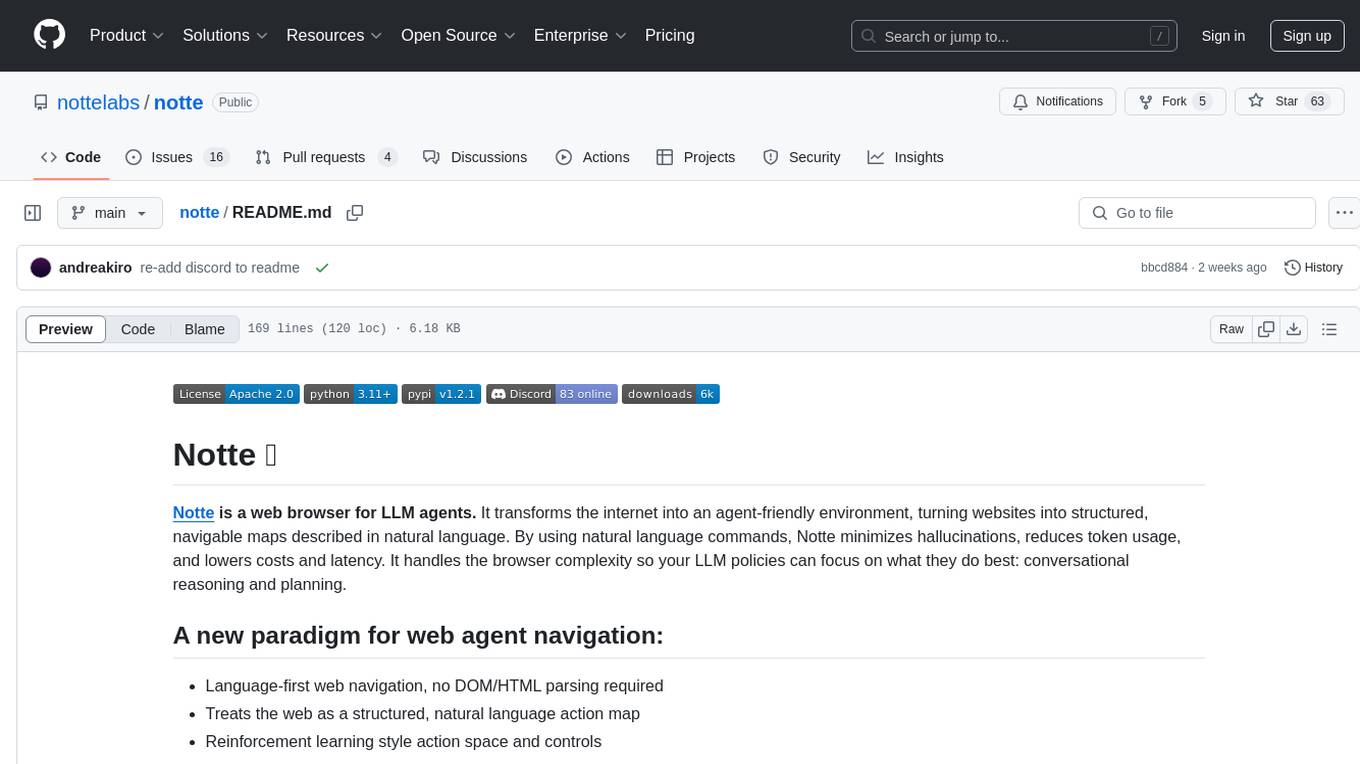

notte

🌸 Best framework to build web agents, and deploy serverless web automation functions on reliable browser infra.

Stars: 1868

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement-learning-style action space for full navigation control.

README:

The web agent framework built for speed, cost-efficiency, scale, and reliability

→ Read more at: open-operator-evals • X • LinkedIn • Landing • Console

Notte provides all the essential tools for building and deploying AI agents that interact seamlessly with the web. Our full-stack framework combines AI agents with traditional scripting for maximum efficiency: script the deterministic parts and use AI only when needed, cutting costs by 50%+ while improving reliability. You can develop, deploy, and scale your own agents and web automations, all with a single API. Read more in our documentation here 🔥

Open-source Core:

- Run web agents → Give AI agents natural language tasks to complete on websites

- Structured Output → Get data in your exact format with Pydantic models

- Site Interactions → Observe website states, scrape data, and execute actions using Playwright-compatible primitives and natural language commands

API service (recommended):

- Stealth Browser Sessions → Browser instances with built-in CAPTCHA solving, proxies, and anti-detection

- Hybrid Workflows → Combine scripting and AI agents to reduce costs and improve reliability

- Secrets Vaults → Enterprise-grade credential management to store emails, passwords, MFA tokens, SSO, etc.

- Digital Personas → Create digital identities with unique emails, phones, and automated 2FA for account creation workflows
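The vault stores MFA tokens and personas handle 2FA automatically, but the README does not say how those codes are produced. For context only, the sketch below shows how a standard time-based one-time password (TOTP, RFC 6238) is derived from a shared secret using Python's standard library. It is a generic illustration assuming the common HMAC-SHA1 variant with a 30-second step, not Notte's implementation; the `totp` function name is our own. Authenticator apps usually share the secret as base32, so decode it with `base64.b32decode` first.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Return an RFC 6238 time-based one-time password (HMAC-SHA1 variant)."""
    now = time.time() if at is None else at
    counter = int(now // step)  # number of whole time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset (RFC 4226)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# RFC 6238 test vector: ASCII secret "12345678901234567890" at T = 59 s, 8 digits
print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```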

```bash
pip install notte
patchright install --with-deps chromium
```

Use the following script to spin up an agent using open-source features (you'll need your own LLM API keys):

```python
import notte
from dotenv import load_dotenv

load_dotenv()  # load your LLM provider API keys from a .env file

with notte.Session(headless=False) as session:
    agent = notte.Agent(session=session, reasoning_model='gemini/gemini-2.5-flash', max_steps=30)
    response = agent.run(task="doom scroll cat memes on google images")
```

We also provide an effortless API that hosts the browser sessions for you and provides plenty of premium features. To run the agent, you'll first need to sign up on the Notte Console and create a free Notte API key 🔑

```python
import os

from notte_sdk import NotteClient

client = NotteClient(api_key=os.getenv("NOTTE_API_KEY"))

with client.Session(open_viewer=True) as session:
    agent = client.Agent(session=session, reasoning_model='gemini/gemini-2.5-flash', max_steps=30)
    response = agent.run(task="doom scroll cat memes on google images")
```

Our setup lets you experiment locally, then switch to the SDK by replacing the import and prefixing Notte objects with `client.` instead of `notte.`, which gives you hosted browser sessions plus access to premium features!

| Rank | Provider | Agent Self-Report | LLM Evaluation | Time per Task | Task Reliability |
|---|---|---|---|---|---|
| 🏆 | Notte | 86.2% | 79.0% | 47s | 96.6% |
| 2️⃣ | Browser-Use | 77.3% | 60.2% | 113s | 83.3% |
| 3️⃣ | Convergence | 38.4% | 31.4% | 83s | 50% |

Read the full story here: https://github.com/nottelabs/open-operator-evals

Structured output is a feature of the agent's run function that allows you to specify a Pydantic model as the response_format parameter. The agent will return data in the specified structure.
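With Pydantic, the `response_format` model both describes the expected shape and validates the returned data. To show what that validation amounts to without any third-party dependency, here is a stdlib-only sketch that turns a JSON answer into typed records and fails loudly on missing or malformed fields. It assumes the answer arrives as a JSON string shaped like the `TopPosts` model in the example that follows; `parse_top_posts` is a hypothetical helper, and the sample input is made up.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class HackerNewsPost:
    title: str
    url: str
    points: int
    author: str
    comments_count: int


def parse_top_posts(raw: str) -> list[HackerNewsPost]:
    """Hypothetical helper: turn a JSON answer shaped like {"posts": [...]} into typed records."""
    data = json.loads(raw)
    posts = []
    for item in data["posts"]:
        values = {}
        for spec in fields(HackerNewsPost):
            if spec.name not in item:
                raise ValueError(f"missing field: {spec.name}")
            # Coerce to the declared type, e.g. "120" -> 120; bad values raise ValueError
            values[spec.name] = spec.type(item[spec.name])
        posts.append(HackerNewsPost(**values))
    return posts


sample = '{"posts": [{"title": "Example post", "url": "https://example.com", "points": "120", "author": "example_user", "comments_count": 3}]}'
print(parse_top_posts(sample)[0].points)  # 120
```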

```python
from notte_sdk import NotteClient
from pydantic import BaseModel


class HackerNewsPost(BaseModel):
    title: str
    url: str
    points: int
    author: str
    comments_count: int


class TopPosts(BaseModel):
    posts: list[HackerNewsPost]


client = NotteClient()

with client.Session(open_viewer=True, browser_type="firefox") as session:
    agent = client.Agent(session=session, reasoning_model='gemini/gemini-2.5-flash', max_steps=15)
    response = agent.run(
        task="Go to Hacker News (news.ycombinator.com) and extract the top 5 posts with their titles, URLs, points, authors, and comment counts.",
        response_format=TopPosts,
    )

print(response.answer)
```

Vaults are tools you can attach to your Agent instance to securely store and manage credentials. The agent automatically uses these credentials when needed.

```python
from notte_sdk import NotteClient

client = NotteClient()

with client.Vault() as vault, client.Session(open_viewer=True) as session:
    vault.add_credentials(
        url="https://x.com",
        username="your-email",
        password="your-password",
    )
    agent = client.Agent(session=session, vault=vault, max_steps=10)
    response = agent.run(
        task="go to twitter; login and go to my messages",
    )

print(response.answer)
```

Personas are tools you can attach to your Agent instance to provide digital identities with unique email addresses, phone numbers, and automated 2FA handling.

```python
from notte_sdk import NotteClient

client = NotteClient()

with client.Persona(create_phone_number=False) as persona:
    with client.Session(browser_type="firefox", open_viewer=True) as session:
        agent = client.Agent(session=session, persona=persona, max_steps=15)
        response = agent.run(
            task="Open the Google form and RSVP yes with your name",
            url="https://forms.google.com/your-form-url",
        )

print(response.answer)
```

Stealth features include automatic CAPTCHA solving and proxy configuration to enhance automation reliability and anonymity.

```python
from notte_sdk import NotteClient
from notte_sdk.types import NotteProxy, ExternalProxy

client = NotteClient()

# Built-in proxies with CAPTCHA solving
with client.Session(
    solve_captchas=True,
    proxies=True,  # US-based proxy
    browser_type="firefox",
    open_viewer=True,
) as session:
    agent = client.Agent(session=session, max_steps=5)
    response = agent.run(
        task="Try to solve the CAPTCHA using internal tools",
        url="https://www.google.com/recaptcha/api2/demo",
    )

# Custom proxy configuration
proxy_settings = ExternalProxy(
    server="http://your-proxy-server:port",
    username="your-username",
    password="your-password",
)

with client.Session(proxies=[proxy_settings]) as session:
    agent = client.Agent(session=session, max_steps=5)
    response = agent.run(task="Navigate to a website")
```

File Storage lets you upload files to a session and download files that agents retrieve during their work. Files are scoped to a session and remain available after the session ends.

```python
from notte_sdk import NotteClient

client = NotteClient()
storage = client.FileStorage()

# Upload files before agent execution
storage.upload("/path/to/document.pdf")

# Create session with storage attached
with client.Session(storage=storage) as session:
    agent = client.Agent(session=session, max_steps=5)
    response = agent.run(
        task="Upload the PDF document to the website and download the cat picture",
        url="https://example.com/upload",
    )

# Download files that the agent downloaded
downloaded_files = storage.list(type="downloads")
for file_name in downloaded_files:
    storage.download(file_name=file_name, local_dir="./results")
```

Cookies provide a flexible way to authenticate your sessions. While we recommend using the secure vault for credential management, cookies offer an alternative approach for certain use cases.

```python
import json

from notte_sdk import NotteClient

client = NotteClient()

# Upload cookies for authentication
cookies = [
    {
        "name": "sb-db-auth-token",
        "value": "base64-cookie-value",
        "domain": "github.com",
        "path": "/",
        "expires": 9778363203.913704,
        "httpOnly": False,
        "secure": False,
        "sameSite": "Lax",
    }
]

with client.Session() as session:
    session.set_cookies(cookies=cookies)  # or cookie_file="path/to/cookies.json"
    agent = client.Agent(session=session, max_steps=5)
    response = agent.run(
        task="go to nottelabs/notte get repo info",
    )

    # Get cookies from the session
    cookies_resp = session.get_cookies()

with open("cookies.json", "w") as f:
    json.dump(cookies_resp, f)
```

You can plug in any browser session provider you want and use our agent on top. Use external headless browser providers via CDP to benefit from Notte's agentic capabilities with any CDP-compatible browser.

```python
from notte_sdk import NotteClient

client = NotteClient()
cdp_url = "wss://your-external-cdp-url"

with client.Session(cdp_url=cdp_url) as session:
    agent = client.Agent(session=session)
    response = agent.run(task="extract pricing plans from https://www.notte.cc/")
```

Notte's close compatibility with Playwright lets you mix web automation primitives with agents for the specific parts that require reasoning and adaptability. This hybrid approach cuts LLM costs and runs much faster by using scripting for deterministic parts and agents only when needed.
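To make "scripting first, agent only when needed" concrete, here is a plain-Python sketch of the control flow: a context manager runs the scripted steps and, if one raises, hands a task description to a fallback callable standing in for an agent. Notte exposes a related idea as the AgentFallback class further down; this sketch is not its implementation, and `ScriptWithFallback` and `fake_agent` are hypothetical names.

```python
from typing import Callable, Optional


class ScriptWithFallback:
    """Hypothetical sketch: run scripted steps; if one raises, hand the task to a fallback."""

    def __init__(self, task: str, fallback: Callable[[str], str]):
        self.task = task
        self.fallback = fallback
        self.result: Optional[str] = None
        self.used_fallback = False

    def __enter__(self) -> "ScriptWithFallback":
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        if exc_type is None or not issubclass(exc_type, Exception):
            return False  # success, or KeyboardInterrupt/SystemExit: don't intervene
        self.used_fallback = True
        self.result = self.fallback(self.task)  # stand-in for an agent run on the task
        return True  # swallow the scripted step's exception


def fake_agent(task: str) -> str:
    return f"agent completed: {task}"


with ScriptWithFallback("Go to cart", fake_agent) as step:
    raise LookupError("INVALID_ACTION_ID")  # simulate a failed scripted click

print(step.used_fallback, step.result)  # True agent completed: Go to cart
```

The cheap scripted path runs every time, and the (more expensive) agent is only invoked when that path actually fails.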

```python
from notte_sdk import NotteClient

client = NotteClient()

with client.Session(open_viewer=True) as session:
    # Start with a deterministic navigation
    session.execute(type="goto", url="https://duckduckgo.com/")
    session.execute(type="fill", selector="internal:role=combobox[name=\"Search with DuckDuckGo\"i]", value="nottelabs")

    # Use an agent to reason about the next step
    agent = client.Agent(session=session, max_steps=3)
    agent.run(task="Open nottelabs github repository")

    # Use a scraping endpoint to extract data
    data = session.scrape(instructions="Extract number of stars")
```

Workflows are a powerful way to combine scripting and agents to reduce costs and improve reliability. However, deterministic parts of the workflow can still fail. To handle these failures gracefully with agents, you can use the AgentFallback class:

```python
import notte

with notte.Session() as session:
    _ = session.execute(type="goto", url="https://shop.notte.cc/")
    _ = session.observe()

    with notte.AgentFallback(session, "Go to cart"):
        # Force execution failure -> trigger an agent fallback to gracefully fix the issue
        res = session.execute(type="click", id="INVALID_ACTION_ID")
```

For fast data extraction, we provide a dedicated scraping endpoint that automatically creates and manages sessions. You can pass custom instructions for structured outputs and enable stealth mode.

```python
from notte_sdk import NotteClient
from pydantic import BaseModel

client = NotteClient()

# Simple scraping
response = client.scrape(
    url="https://notte.cc",
    scrape_links=True,
    only_main_content=True,
)


# Structured scraping with custom instructions
class Article(BaseModel):
    title: str
    content: str
    date: str


response = client.scrape(
    url="https://example.com/blog",
    response_format=Article,
    instructions="Extract only the title, date and content of the articles",
)
```

Or directly with cURL:

```bash
curl -X POST 'https://api.notte.cc/scrape' \
  -H 'Authorization: Bearer <NOTTE-API-KEY>' \
  -H 'Content-Type: application/json' \
  -d '{
    "url": "https://notte.cc",
    "only_main_content": false
  }'
```

Search: We've built a cool demo in which an LLM chatbot uses the scraping endpoint through an MCP server to perform real-time search, and it works like a charm! Available here: https://search.notte.cc/
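For reference, the cURL request above can be reproduced with Python's standard library. The endpoint, headers, and body fields are taken from that cURL example; `build_scrape_request` is a hypothetical helper, and the sketch only builds the request, since sending it needs a real API key and network access. The commented-out lines assume the endpoint responds with JSON.

```python
import json
import urllib.request


def build_scrape_request(api_key: str, url: str, only_main_content: bool = False) -> urllib.request.Request:
    """Hypothetical helper mirroring the cURL call: POST /scrape with a JSON body."""
    body = json.dumps({"url": url, "only_main_content": only_main_content}).encode("utf-8")
    return urllib.request.Request(
        "https://api.notte.cc/scrape",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_scrape_request("<NOTTE-API-KEY>", "https://notte.cc")
# Sending requires a real key and network access (response assumed to be JSON):
# with urllib.request.urlopen(request) as resp:
#     print(json.loads(resp.read()))
```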

This project is licensed under the Server Side Public License v1. See the LICENSE file for details.

If you use notte in your research or project, please cite:

```bibtex
@software{notte2025,
  author = {Pinto, Andrea and Giordano, Lucas and {nottelabs-team}},
  title = {Notte: Software suite for internet-native agentic systems},
  url = {https://github.com/nottelabs/notte},
  year = {2025},
  publisher = {GitHub},
  license = {SSPL-1.0},
  version = {1.4.4},
}
```

Copyright © 2025 Notte Labs, Inc.


Alternative AI tools for notte

Similar Open Source Tools

stagehand

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

any-llm

The `any-llm` repository provides a unified API to access different LLM (Large Language Model) providers. It offers a simple and developer-friendly interface, leveraging official provider SDKs for compatibility and maintenance. The tool is framework-agnostic, actively maintained, and does not require a proxy or gateway server. It addresses challenges in API standardization and aims to provide a consistent interface for various LLM providers, overcoming limitations of existing solutions like LiteLLM, AISuite, and framework-specific integrations.

navigator

Navigator is a versatile tool for navigating through complex codebases efficiently. It provides a user-friendly interface to explore code files, search for specific functions or variables, and visualize code dependencies. With Navigator, developers can easily understand the structure of a project and quickly locate relevant code snippets. The tool supports various programming languages and offers customizable settings to enhance the coding experience. Whether you are working on a small project or a large codebase, Navigator can help you streamline your development process and improve code comprehension.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with a built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for tasks such as content generation, knowledge base management, and creating AI assistants.

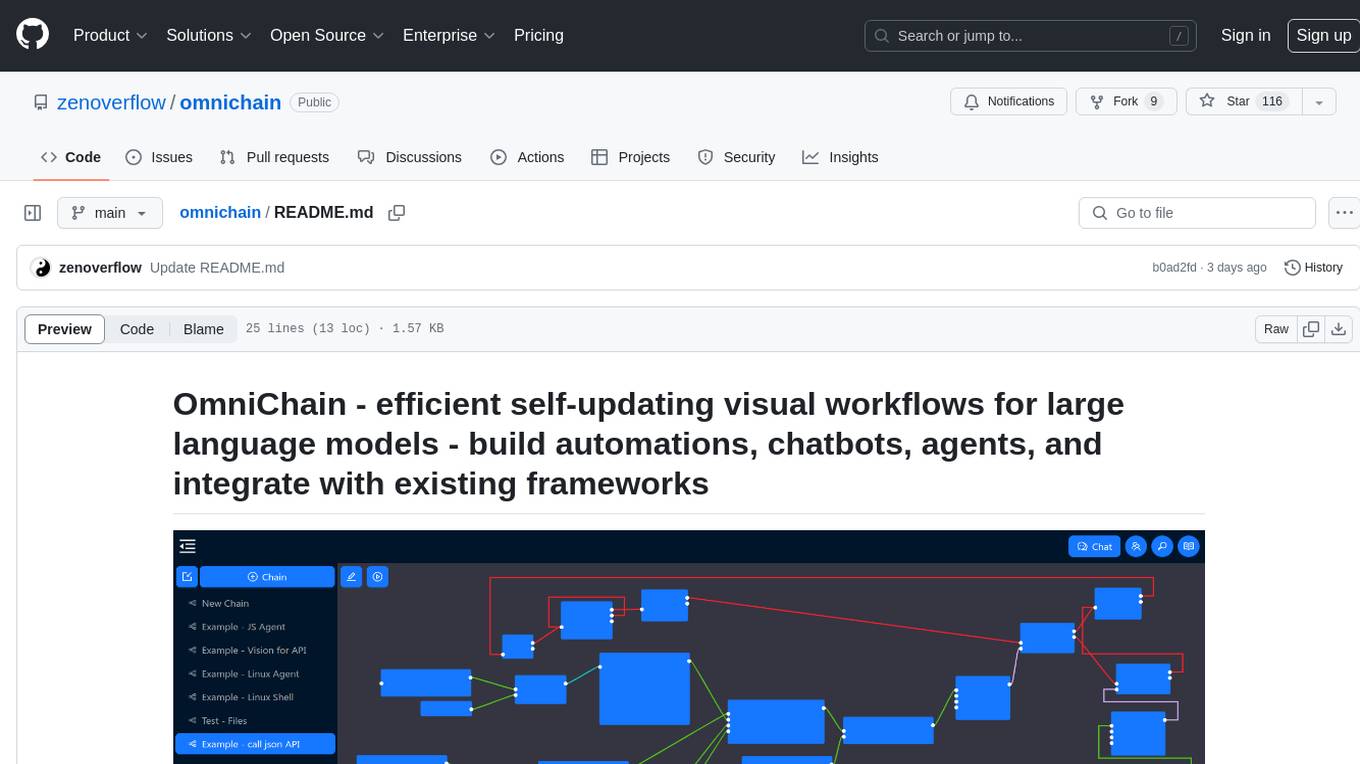

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

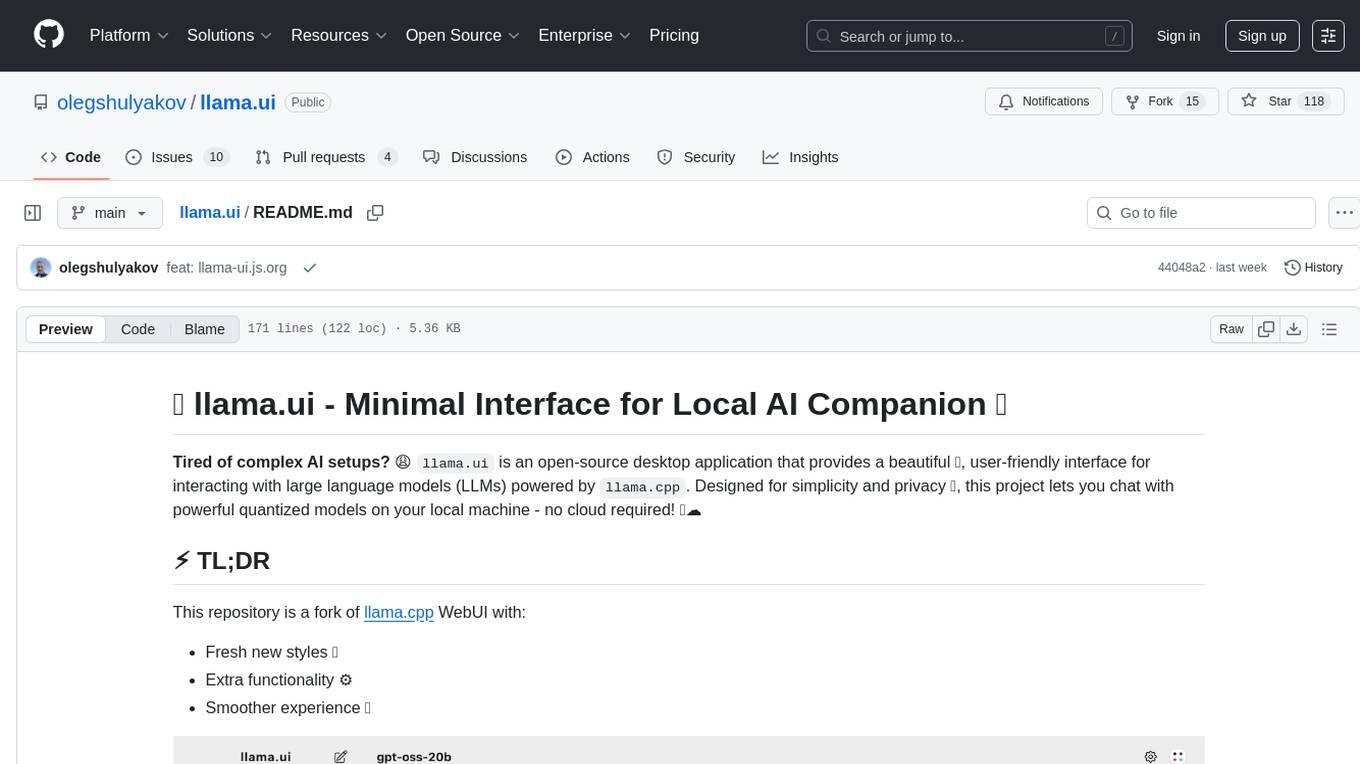

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

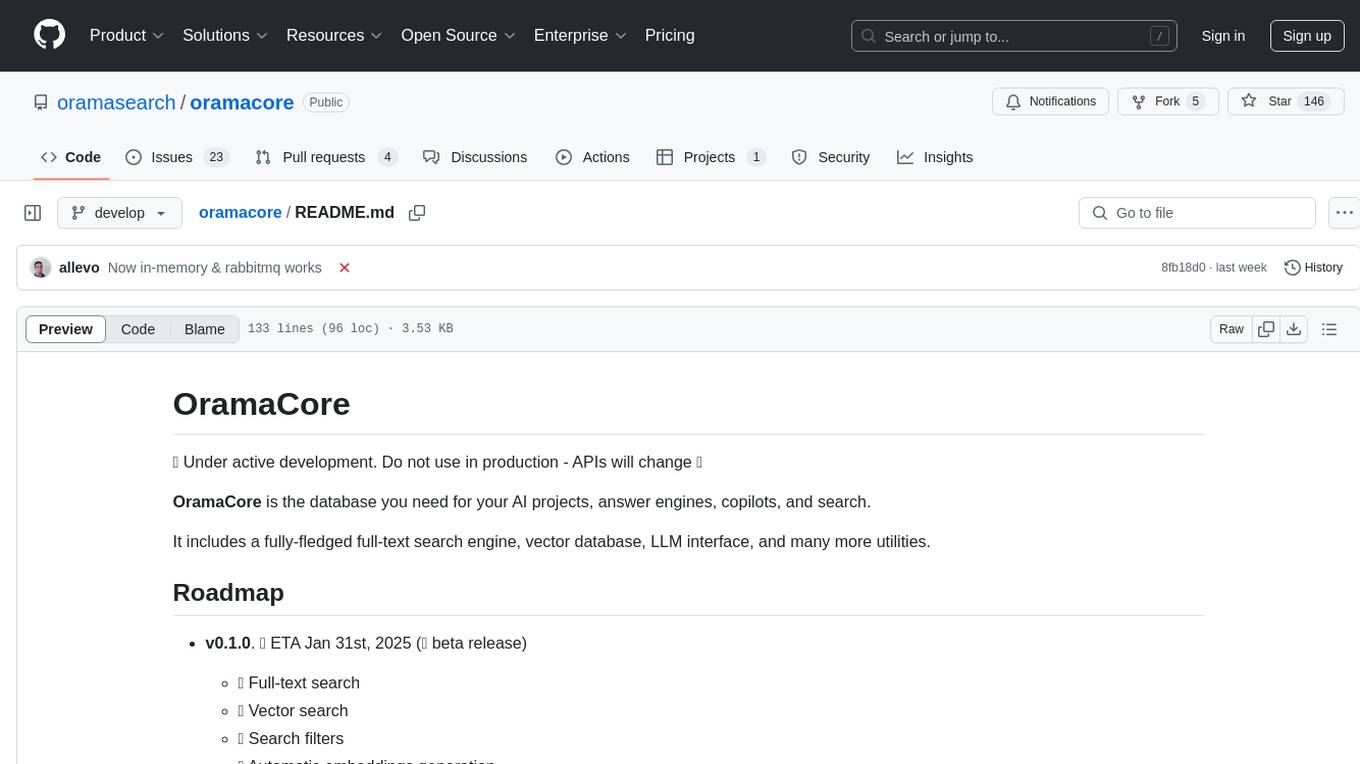

oramacore

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced search capabilities in AI applications.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

chatluna

Chatluna is a machine learning model plugin that provides chat services with large language models. It is highly extensible, supports multiple output formats, and offers features like custom conversation presets, rate limiting, and context awareness. Users can deploy Chatluna under Koishi without additional configuration. The plugin supports various models/platforms like OpenAI, Azure OpenAI, Google Gemini, and more. It also provides preset customization using YAML files and allows for easy forking and development within Koishi projects. However, the project lacks web UI, HTTP server, and project documentation, inviting contributions from the community.

baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment. It offers deployment with minimal dependencies, supports multiple tools like Terminal, Browser, File, Web Search, and messaging tools, allocates separate sandboxes for tasks, manages session history, supports stopping and interrupting conversations, supports file upload and download, and is multilingual. The system also provides user login and authentication. The project relies primarily on Docker for development and deployment; it requires models with certain capabilities and recommends Deepseek and GPT models.

LocalLLMClient

LocalLLMClient is a Swift package designed to interact with local Large Language Models (LLMs) on Apple platforms. It supports GGUF, MLX models, and the FoundationModels framework, providing streaming API, multimodal capabilities, and tool calling functionalities. Users can easily integrate this tool to work with various models for text generation and processing. The package also includes advanced features for low-level API control and multimodal image processing. LocalLLMClient is experimental and subject to API changes, offering support for iOS, macOS, and Linux platforms.

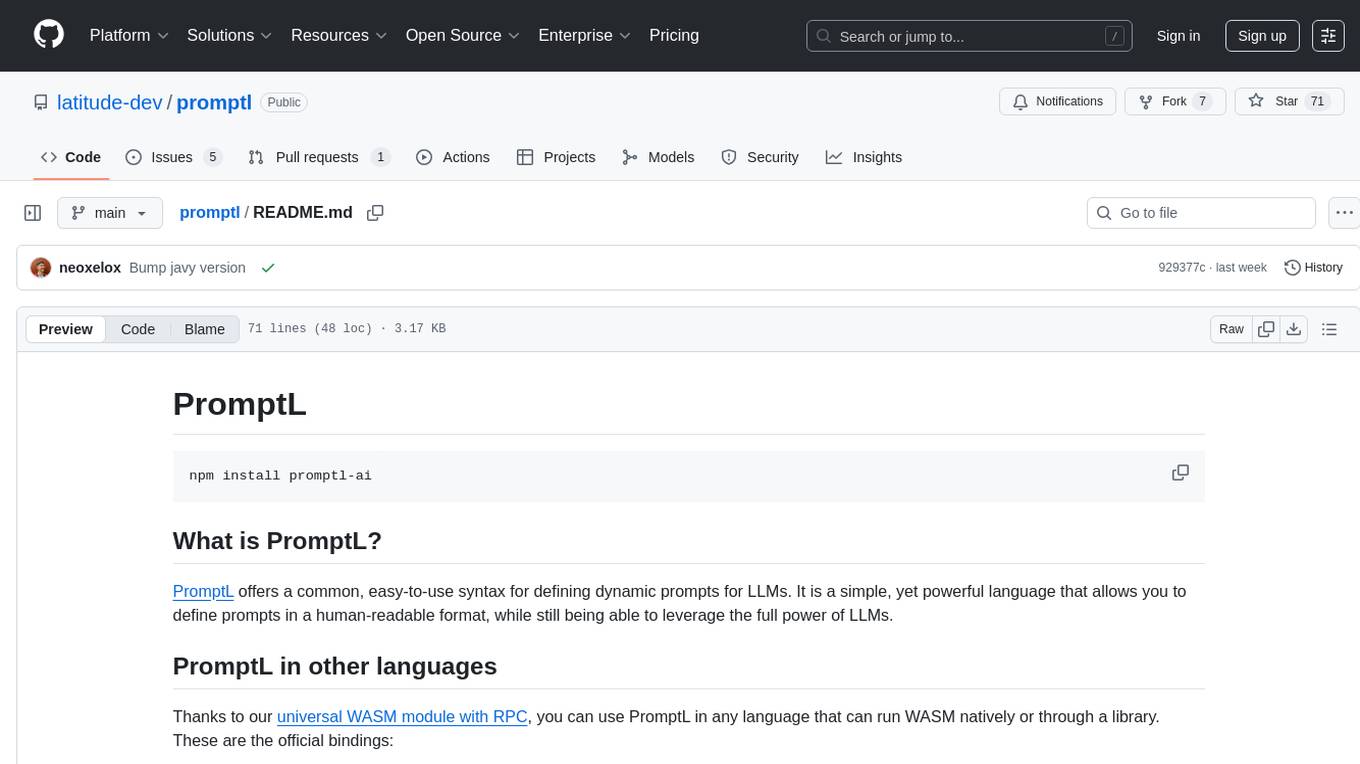

promptl

Promptl is a versatile command-line tool designed to streamline the process of creating and managing prompts for user input in various programming projects. It offers a simple and efficient way to prompt users for information, validate their input, and handle different scenarios based on their responses. With Promptl, developers can easily integrate interactive prompts into their scripts, applications, and automation workflows, enhancing user experience and improving overall usability. The tool provides a range of customization options and features, making it suitable for a wide range of use cases across different programming languages and environments.

For similar tasks

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automation required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions that would break whenever website layouts changed. Instead of relying only on code-defined XPath interactions, Skyvern adds computer vision and LLMs to parse items in the viewport in real time, create a plan for interaction, and interact with them. This approach gives a few advantages: (1) Skyvern can operate on websites it has never seen before, as it can map visual elements to the actions needed to complete a workflow without any customized code; (2) Skyvern is resistant to website layout changes, as there are no predetermined XPaths or other selectors the system looks for while navigating; (3) Skyvern leverages LLMs to reason through interactions so it can cover complex situations. For example, when getting an auto insurance quote from Geico, the answer to the common question "Were you eligible to drive at 18?" can be inferred from the driver having received their license at age 16; and in competitor analysis, it can understand that an Arnold Palmer 22 oz can at 7/11 is almost certainly the same product as a 23 oz can at Gopuff (even though the sizes differ slightly, which could be a rounding error).

fuji-web

Fuji-Web is an intelligent AI partner designed for full browser automation. It autonomously navigates websites and performs tasks on behalf of the user while providing explanations for each action step. Users can easily install the extension in their browser, access the Fuji icon to input tasks, and interact with the tool to streamline web browsing tasks. The tool aims to enhance user productivity by automating repetitive web actions and providing a seamless browsing experience.

chatflow

Chatflow is a tool that provides a chat interface for users to interact with systems using natural language. The engine understands user intent and executes commands for tasks, allowing easy navigation of complex websites/products. This approach enhances user experience, reduces training costs, and boosts productivity.

J.A.R.V.I.S

J.A.R.V.I.S (Just A Rather Very Intelligent System) is an advanced AI assistant inspired by Iron Man's Jarvis, designed to assist with various tasks, from navigating websites to controlling your PC with natural language commands.

agent-q

Agentq is a tool that utilizes various agentic architectures to complete tasks on the web reliably. It includes a planner-navigator multi-agent architecture, a solo planner-actor agent, an actor-critic multi-agent architecture, and an actor-critic architecture with reinforcement learning and DPO finetuning. The repository also contains an open-source implementation of the research paper 'Agent Q'. Users can set up the tool by installing dependencies, starting Chrome in dev mode, and setting up necessary environment variables. The tool can be run to perform various tasks related to autonomous AI agents.

weblinx

WebLINX is a Python library and dataset for real-world website navigation with multi-turn dialogue. The repository provides code for training models reported in the WebLINX paper, along with a comprehensive API to work with the dataset. It includes modules for data processing, model evaluation, and utility functions. The modeling directory contains code for processing, training, and evaluating models such as DMR, LLaMA, MindAct, Pix2Act, and Flan-T5. Users can install specific dependencies for HTML processing, video processing, model evaluation, and library development. The evaluation module provides metrics and functions for evaluating models, with ongoing work to improve documentation and functionality.

cerebellum

Cerebellum is a lightweight browser agent that helps users accomplish user-defined goals on webpages through keyboard and mouse actions. It simplifies web browsing by treating it as navigating a directed graph, with each webpage as a node and user actions as edges. The tool uses a LLM to analyze page content and interactive elements to determine the next action. It is compatible with any Selenium-supported browser and can fill forms using user-provided JSON data. Cerebellum accepts runtime instructions to adjust browsing strategies and actions dynamically.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.