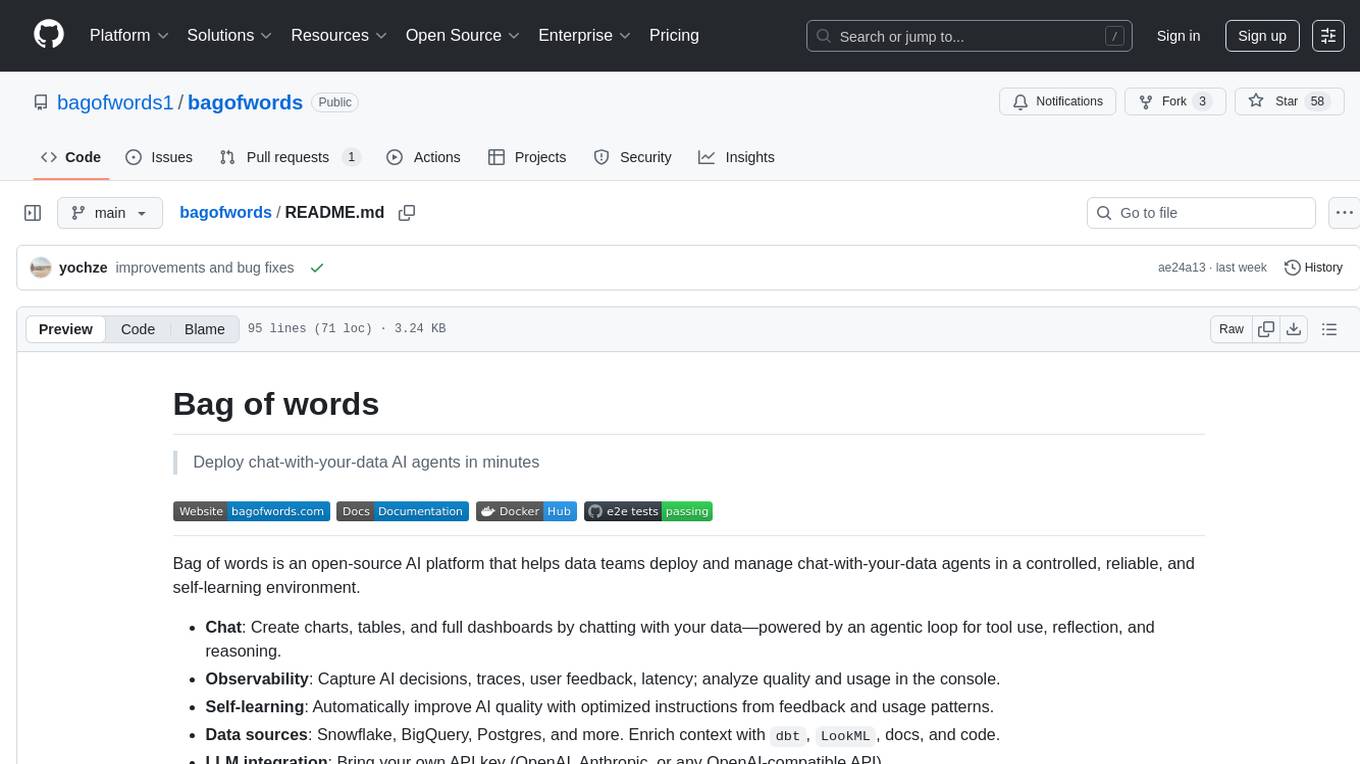

bagofwords

Deploy chat-with-your-data AI agents in minutes

Stars: 58

Bag of words is an open-source AI platform that helps data teams deploy and manage chat-with-your-data agents in a controlled, reliable, and self-learning environment. It enables users to create charts, tables, and dashboards by chatting with their data, capture AI decisions and user feedback, automatically improve AI quality, integrate with various data sources and APIs, and ensure governance and integrations. The platform supports self-hosting in VPC via VMs, Docker/Compose, or Kubernetes, and offers additional integrations for AI Analyst in Slack, Excel, Google Sheets, and more. Users can start in minutes and scale to org-wide analytics.

README:

Deploy chat-with-your-data AI agents in minutes

Bag of words is an open-source AI platform that helps data teams deploy and manage chat-with-your-data agents in a controlled, reliable, and self-learning environment.

- Chat: Create charts, tables, and full dashboards by chatting with your data—powered by an agentic loop for tool use, reflection, and reasoning.

- Observability: Capture AI decisions, traces, user feedback, latency; analyze quality and usage in the console.

- Self-learning: Automatically improve AI quality with optimized instructions from feedback and usage patterns.

-

Data sources: Snowflake, BigQuery, Postgres, and more. Enrich context with

dbt,LookML, docs, and code. - LLM integration: Bring your own API key (OpenAI, Anthropic, or any OpenAI-compatible API).

- Governance & integrations: Users and orgs, RBAC, audit logs, SSO (Google), SMTP. *Deployment: Self-host in your VPC via VMs, Docker/Compose, or Kubernetes.

Additional integrations to offer an AI Analyst in Slack, Excel, Google Sheets, and more. Get started in minutes, scale to org-wide analytics

# Run with SQLite (default)

docker run -p 3000:3000 bagofwords/bagofwordsdocker run -p 3000:3000 \

-e BOW_DATABASE_URL=postgresql://user:password@localhost:5432/dbname \

bagofwords/bagofwordsFor more advanced deployments, see the docs.

- Python 3.12+

- Node.js 18+

- Yarn

# Setup Python environment

cd backend

python3 -m venv venv

source venv/bin/activate # or `venv\Scripts\activate` on Windows

pip install -r requirements_versioned.txt

# Run migrations

alembic upgrade head

# Start server

python main.py # Available at http://localhost:8000cd frontend

yarn install

yarn dev # Regular mode- OpenAPI docs: http://localhost:8000/docs

- Website: https://bagofwords.com

- Docs: https://docs.bagofwords.com

AGPL-3.0

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for bagofwords

Similar Open Source Tools

bagofwords

Bag of words is an open-source AI platform that helps data teams deploy and manage chat-with-your-data agents in a controlled, reliable, and self-learning environment. It enables users to create charts, tables, and dashboards by chatting with their data, capture AI decisions and user feedback, automatically improve AI quality, integrate with various data sources and APIs, and ensure governance and integrations. The platform supports self-hosting in VPC via VMs, Docker/Compose, or Kubernetes, and offers additional integrations for AI Analyst in Slack, Excel, Google Sheets, and more. Users can start in minutes and scale to org-wide analytics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

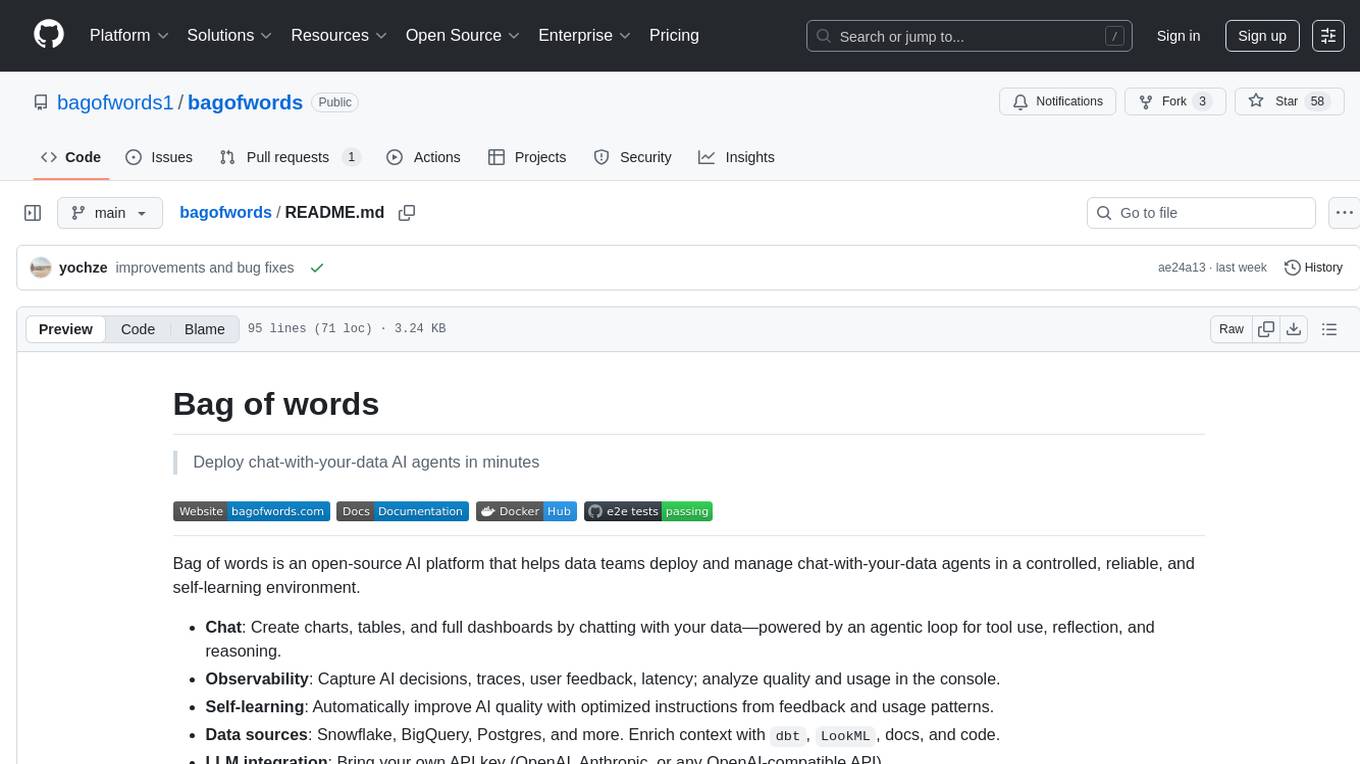

qwery-core

Qwery is a platform for querying and visualizing data using natural language without technical knowledge. It seamlessly integrates with various datasources, generates optimized queries, and delivers outcomes like result sets, dashboards, and APIs. Features include natural language querying, multi-database support, AI-powered agents, visual data apps, desktop & cloud options, template library, and extensibility through plugins. The project is under active development and not yet suitable for production use.

pipeshub-ai

Pipeshub-ai is a versatile tool for automating data pipelines in AI projects. It provides a user-friendly interface to design, deploy, and monitor complex data workflows, enabling seamless integration of various AI models and data sources. With Pipeshub-ai, users can easily create end-to-end pipelines for tasks such as data preprocessing, model training, and inference, streamlining the AI development process and improving productivity. The tool supports integration with popular AI frameworks and cloud services, making it suitable for both beginners and experienced AI practitioners.

pyspur

PySpur is a graph-based editor designed for LLM (Large Language Models) workflows. It offers modular building blocks, node-level debugging, and performance evaluation. The tool is easy to hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies. Users can quickly set up PySpur by cloning the repository, creating a .env file, starting docker services, and accessing the portal. PySpur can also work with local models served using Ollama, with steps provided for configuration. The roadmap includes features like canvas, async/batch execution, support for Ollama, new nodes, pipeline optimization, templates, code compilation, multimodal support, and more.

starwhale

Starwhale is an MLOps/LLMOps platform that brings efficiency and standardization to machine learning operations. It streamlines the model development lifecycle, enabling teams to optimize workflows around key areas like model building, evaluation, release, and fine-tuning. Starwhale abstracts Model, Runtime, and Dataset as first-class citizens, providing tailored capabilities for common workflow scenarios including Models Evaluation, Live Demo, and LLM Fine-tuning. It is an open-source platform designed for clarity and ease of use, empowering developers to build customized MLOps features tailored to their needs.

Ivy-Framework

Ivy-Framework is a powerful tool for building internal applications with AI assistance using C# codebase. It provides a CLI for project initialization, authentication integrations, database support, LLM code generation, secrets management, container deployment, hot reload, dependency injection, state management, routing, and external widget framework. Users can easily create data tables for sorting, filtering, and pagination. The framework offers a seamless integration of front-end and back-end development, making it ideal for developing robust internal tools and dashboards.

orbit

ORBIT (Open Retrieval-Based Inference Toolkit) is a middleware platform that provides a unified API for AI inference. It acts as a central gateway, allowing you to connect various local and remote AI models with your private data sources like SQL databases, vector stores, and local files. ORBIT uses a flexible adapter architecture to connect your data to AI models, creating specialized 'agents' for specific tasks. It supports scenarios like Knowledge Base Q&A and Chat with Your SQL Database, enabling users to interact with AI models seamlessly. The tool offers a RESTful API for programmatic access and includes features like authentication, API key management, system prompts, health monitoring, and file management. ORBIT is designed to streamline AI inference tasks and facilitate interactions between users and AI models.

skypilot

SkyPilot is a framework for running LLMs, AI, and batch jobs on any cloud, offering maximum cost savings, highest GPU availability, and managed execution. SkyPilot abstracts away cloud infra burdens: - Launch jobs & clusters on any cloud - Easy scale-out: queue and run many jobs, automatically managed - Easy access to object stores (S3, GCS, R2) SkyPilot maximizes GPU availability for your jobs: * Provision in all zones/regions/clouds you have access to (the _Sky_), with automatic failover SkyPilot cuts your cloud costs: * Managed Spot: 3-6x cost savings using spot VMs, with auto-recovery from preemptions * Optimizer: 2x cost savings by auto-picking the cheapest VM/zone/region/cloud * Autostop: hands-free cleanup of idle clusters SkyPilot supports your existing GPU, TPU, and CPU workloads, with no code changes.

BubbleLab

Bubble Lab is an open-source agentic workflow automation builder designed for developers seeking full control, transparency, and type safety. It compiles workflows into clean, production-ready TypeScript code that can be debugged and deployed anywhere. With features like natural language prompt to workflow generation, full observability, seamless migration from other platforms, and instant export as TypeScript/API, Bubble Lab offers a flexible and code-centric approach to workflow automation.

proxypal

ProxyPal is a desktop app that allows users to utilize multiple AI subscriptions (such as Claude, ChatGPT, Gemini, GitHub Copilot) with any coding tool. It acts as a bridge between various AI providers and coding tools, offering features like connecting to different AI services, GitHub Copilot integration, Antigravity support, usage analytics, request monitoring, and auto-configuration. The app supports multiple platforms and clients, providing a seamless experience for developers to leverage AI capabilities in their coding workflow. ProxyPal simplifies the process of using AI models in coding environments, enhancing productivity and efficiency.

pipecat

Pipecat is an open-source framework designed for building generative AI voice bots and multimodal assistants. It provides code building blocks for interacting with AI services, creating low-latency data pipelines, and transporting audio, video, and events over the Internet. Pipecat supports various AI services like speech-to-text, text-to-speech, image generation, and vision models. Users can implement new services and contribute to the framework. Pipecat aims to simplify the development of applications like personal coaches, meeting assistants, customer support bots, and more by providing a complete framework for integrating AI services.

bytebot

Bytebot is an open-source AI desktop agent that provides a virtual employee with its own computer to complete tasks for users. It can use various applications, download and organize files, log into websites, process documents, and perform complex multi-step workflows. By giving AI access to a complete desktop environment, Bytebot unlocks capabilities not possible with browser-only agents or API integrations, enabling complete task autonomy, document processing, and usage of real applications.

kubewall

kubewall is an open-source, single-binary Kubernetes dashboard with multi-cluster management and AI integration. It provides a simple and rich real-time interface to manage and investigate your clusters. With features like multi-cluster management, AI-powered troubleshooting, real-time monitoring, single-binary deployment, in-depth resource views, browser-based access, search and filter capabilities, privacy by default, port forwarding, live refresh, aggregated pod logs, and clean resource management, kubewall offers a comprehensive solution for Kubernetes cluster management.

ai-flow

AI Flow is an open-source, user-friendly UI application that empowers you to seamlessly connect multiple AI models together, specifically leveraging the capabilities of multiples AI APIs such as OpenAI, StabilityAI and Replicate. In a nutshell, AI Flow provides a visual platform for crafting and managing AI-driven workflows, thereby facilitating diverse and dynamic AI interactions.

Alice

Alice is an open-source AI companion designed to live on your desktop, providing voice interaction, intelligent context awareness, and powerful tooling. More than a chatbot, Alice is emotionally engaging and deeply useful, assisting with daily tasks and creative work. Key features include voice interaction with natural-sounding responses, memory and context management, vision and visual output capabilities, computer use tools, function calling for web search and task scheduling, wake word support, dedicated Chrome extension, and flexible settings interface. Technologies used include Vue.js, Electron, OpenAI, Go, hnswlib-node, and more. Alice is customizable and offers a dedicated Chrome extension, wake word support, and various tools for computer use and productivity tasks.

For similar tasks

bagofwords

Bag of words is an open-source AI platform that helps data teams deploy and manage chat-with-your-data agents in a controlled, reliable, and self-learning environment. It enables users to create charts, tables, and dashboards by chatting with their data, capture AI decisions and user feedback, automatically improve AI quality, integrate with various data sources and APIs, and ensure governance and integrations. The platform supports self-hosting in VPC via VMs, Docker/Compose, or Kubernetes, and offers additional integrations for AI Analyst in Slack, Excel, Google Sheets, and more. Users can start in minutes and scale to org-wide analytics.

free-for-life

A massive list including a huge amount of products and services that are completely free! ⭐ Star on GitHub • 🤝 Contribute # Table of Contents * APIs, Data & ML * Artificial Intelligence * BaaS * Code Editors * Code Generation * DNS * Databases * Design & UI * Domains * Email * Font * For Students * Forms * Linux Distributions * Messaging & Streaming * PaaS * Payments & Billing * SSL

radicalbit-ai-monitoring

The Radicalbit AI Monitoring Platform provides a comprehensive solution for monitoring Machine Learning and Large Language models in production. It helps proactively identify and address potential performance issues by analyzing data quality, model quality, and model drift. The repository contains files and projects for running the platform, including UI, API, SDK, and Spark components. Installation using Docker compose is provided, allowing deployment with a K3s cluster and interaction with a k9s container. The platform documentation includes a step-by-step guide for installation and creating dashboards. Community engagement is encouraged through a Discord server. The roadmap includes adding functionalities for batch and real-time workloads, covering various model types and tasks.

niledatabase

Nile is a serverless Postgres database designed for modern SaaS applications. It virtualizes tenants/customers/organizations into Postgres to enable native tenant data isolation, performance isolation, per-tenant backups, and tenant placement on shared or dedicated compute globally. With Nile, you can manage multiple tenants effortlessly, without complex permissions or buggy scripts. Additionally, it offers opt-in user management capabilities, customer-specific vector embeddings, and instant tenant admin dashboards. Built for the cloud, Nile provides a true serverless experience with effortless scaling.

cube

Cube is a semantic layer for building data applications, helping data engineers and application developers access data from modern data stores, organize it into consistent definitions, and deliver it to every application. It works with SQL-enabled data sources, providing sub-second latency and high concurrency for API requests. Cube addresses SQL code organization, performance, and access control issues in data applications, enabling efficient data modeling, access control, and performance optimizations for various tools like embedded analytics, dashboarding, reporting, and data notebooks.

rill

Rill delivers rapid, self-service dashboards for data engineers and analysts directly on raw data lakes, ensuring reliable, fast-loading dashboards with accurate, real-time metrics. It comes with an embedded in-memory database for lightning-fast performance and supports bringing your own OLAP engine. Rill implements BI-as-code through SQL-based definitions, YAML configuration, Git integration, and CLI tools. Its metrics layer provides a unified way to define, compute, and serve business metrics, while AI agents can access fresh metrics instantly for precise decision-making and intelligent automation.

ai-dev-kit

The AI Dev Kit is a comprehensive toolkit designed to enhance AI-driven development on Databricks. It provides trusted sources for AI coding assistants like Claude Code and Cursor to build faster and smarter on Databricks. The kit includes features such as Spark Declarative Pipelines, Databricks Jobs, AI/BI Dashboards, Unity Catalog, Genie Spaces, Knowledge Assistants, MLflow Experiments, Model Serving, Databricks Apps, and more. Users can choose from different adventures like installing the kit, using the visual builder app, teaching AI assistants Databricks patterns, executing Databricks actions, or building custom integrations with the core library. The kit also includes components like databricks-tools-core, databricks-mcp-server, databricks-skills, databricks-builder-app, and ai-dev-project.

pup

Pup is a Go-based command-line wrapper designed for easy interaction with Datadog APIs. It provides a fast, cross-platform binary with support for OAuth2 authentication and traditional API key authentication. The tool offers simple commands for common Datadog operations, structured JSON output for parsing and automation, and dynamic client registration with unique OAuth credentials per installation. Pup currently implements 38 out of 85+ available Datadog APIs, covering core observability, monitoring & alerting, security & compliance, infrastructure & cloud, incident & operations, CI/CD & development, organization & access, and platform & configuration domains. Users can easily install Pup via Homebrew, Go Install, or manual download, and authenticate using OAuth2 or API key methods. The tool supports various commands for tasks such as testing connection, managing monitors, querying metrics, handling dashboards, working with SLOs, and handling incidents.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.