skypilot

Run, manage, and scale AI workloads on any AI infrastructure. Use one system to access & manage all AI compute (Kubernetes, 20+ clouds, or on-prem).

Stars: 9439

SkyPilot is a framework for running LLMs, AI, and batch jobs on any cloud, offering maximum cost savings, highest GPU availability, and managed execution.

SkyPilot abstracts away cloud infra burdens:

- Launch jobs & clusters on any cloud

- Easy scale-out: queue and run many jobs, automatically managed

- Easy access to object stores (S3, GCS, R2)

SkyPilot maximizes GPU availability for your jobs:

- Provision in all zones/regions/clouds you have access to (the _Sky_), with automatic failover

SkyPilot cuts your cloud costs:

- Managed Spot: 3-6x cost savings using spot VMs, with auto-recovery from preemptions

- Optimizer: 2x cost savings by auto-picking the cheapest VM/zone/region/cloud

- Autostop: hands-free cleanup of idle clusters

SkyPilot supports your existing GPU, TPU, and CPU workloads, with no code changes.

README:

SkyPilot is a system to run, manage, and scale AI workloads on any AI infrastructure.

SkyPilot gives AI teams a simple interface to run jobs on any infra. Infra teams get a unified control plane to manage any AI compute — with advanced scheduling, scaling, and orchestration.

🔥 News 🔥

- [Dec 2025] SkyPilot v0.11 released: Multi-Cloud Pools, Fast Managed Jobs, Enterprise-Readiness at Large Scale, Programmability. Release notes

- [Dec 2025] SkyPilot Pools released: Run batch inference and other jobs on a managed pool of warm workers (across clouds or clusters). blog, docs

- [Dec 2025] Train an agent to use Google Search as a tool with RL on your Kubernetes or clouds: blog, example

- [Nov 2025] Serve Kimi K2 Thinking with reasoning capabilities on your Kubernetes or clouds: example

- [Oct 2025] Run RL training for LLMs with SkyRL on your Kubernetes or clouds: example

- [Oct 2025] Train and serve Andrej Karpathy's nanochat - the best ChatGPT that $100 can buy: example

- [Oct 2025] Run large-scale LLM training with TorchTitan on any AI infra: example

- [Sep 2025] Scaling AI infrastructure at Abridge - 10x faster development with SkyPilot: blog

- [Sep 2025] Network and Storage Benchmarks for LLM training on the cloud: blog

- [Aug 2025] Serve and finetune OpenAI GPT-OSS models (gpt-oss-120b, gpt-oss-20b) with one command on any infra: serve + LoRA and full finetuning

- [Jul 2025] Run distributed RL training for LLMs with Verl (PPO, GRPO) on any cloud: example

SkyPilot is easy to use for AI teams:

- Quickly spin up compute on your own infra

- Environment and job as code — simple and portable

- Easy job management: queue, run, and auto-recover many jobs

SkyPilot makes Kubernetes easy for AI & Infra teams:

- Slurm-like ease of use, cloud-native robustness

- Local dev experience on K8s: SSH into pods, sync code, or connect IDE

- Turbocharge your clusters: gang scheduling, multi-cluster, and scaling

SkyPilot unifies multiple clusters, clouds, and hardware:

- One interface to use reserved GPUs, Kubernetes clusters, Slurm clusters, or 20+ clouds

- Flexible provisioning of GPUs, TPUs, CPUs, with auto-retry

- Team deployment and resource sharing

SkyPilot cuts your cloud costs & maximizes GPU availability:

- Autostop: automatic cleanup of idle resources

- Spot instance support: 3-6x cost savings, with preemption auto-recovery

- Intelligent scheduling: automatically run on the cheapest & most available infra

SkyPilot supports your existing GPU, TPU, and CPU workloads, with no code changes.
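The preemption auto-recovery mentioned above can be pictured as a retry loop that relaunches a job from its last checkpoint. The following is a minimal toy sketch, not SkyPilot's implementation: `Preempted`, `run_job`, and `run_with_recovery` are hypothetical names invented for illustration, and preemptions are simulated with a set of step numbers.

```python
class Preempted(Exception):
    """Simulated spot preemption; carries the last checkpointed step."""

    def __init__(self, last_checkpoint: int):
        super().__init__(f"preempted at step {last_checkpoint}")
        self.last_checkpoint = last_checkpoint


def run_job(start_step: int, total_steps: int, preempt_at: set) -> int:
    """Toy job: advance step by step, raising Preempted at scheduled steps."""
    for step in range(start_step, total_steps):
        if step in preempt_at:
            preempt_at.discard(step)  # each simulated preemption fires once
            raise Preempted(last_checkpoint=step)
    return total_steps


def run_with_recovery(total_steps: int, preempt_at: set,
                      max_recoveries: int = 10) -> int:
    """Relaunch the job from its last checkpoint after every preemption.

    Returns the number of recoveries that were needed.
    """
    step = 0
    recoveries = 0
    while True:
        try:
            run_job(step, total_steps, preempt_at)
            return recoveries
        except Preempted as exc:
            if recoveries >= max_recoveries:
                raise  # give up instead of retrying forever
            recoveries += 1
            step = exc.last_checkpoint  # resume from the checkpoint


if __name__ == "__main__":
    # Two simulated preemptions, at steps 3 and 7.
    print(run_with_recovery(total_steps=10, preempt_at={3, 7}))  # prints 2
```

The sketch assumes the job checkpoints at every step; a real workload would only resume from whatever checkpoint it last wrote to durable storage.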

Install with pip:

    # Choose your clouds:
    pip install -U "skypilot[kubernetes,aws,gcp,azure,oci,nebius,lambda,runpod,fluidstack,paperspace,cudo,ibm,scp,seeweb,shadeform]"

To get the latest features and fixes, use the nightly build or install from source:

    # Choose your clouds:
    pip install "skypilot-nightly[kubernetes,aws,gcp,azure,oci,nebius,lambda,runpod,fluidstack,paperspace,cudo,ibm,scp,seeweb,shadeform]"

Current supported infra: Kubernetes, Slurm, AWS, GCP, Azure, OCI, CoreWeave, Nebius, Lambda Cloud, RunPod, Fluidstack, Cudo, Digital Ocean, Paperspace, Cloudflare, Samsung, IBM, Vast.ai, VMware vSphere, Seeweb, Prime Intellect, Shadeform.

You can find our documentation here.

A SkyPilot task specifies: resource requirements, data to be synced, setup commands, and the task commands.

Once written in this unified interface (YAML or Python API), the task can be launched on any available infra (Kubernetes, Slurm, cloud, etc.). This avoids vendor lock-in, and allows easily moving jobs to a different provider.

Paste the following into a file my_task.yaml:

    resources:
      accelerators: A100:8  # 8x NVIDIA A100 GPU

    num_nodes: 1  # Number of VMs to launch

    # Working directory (optional) containing the project codebase.
    # Its contents are synced to ~/sky_workdir/ on the cluster.
    workdir: ~/torch_examples

    # Commands to be run before executing the job.
    # Typical use: pip install -r requirements.txt, git clone, etc.
    setup: |
      cd mnist
      pip install -r requirements.txt

    # Commands to run as a job.
    # Typical use: launch the main program.
    run: |
      cd mnist
      python main.py --epochs 1

Prepare the workdir by cloning:

    git clone https://github.com/pytorch/examples.git ~/torch_examples

Launch with `sky launch` (note: access to GPU instances is needed for this example):

    sky launch my_task.yaml

SkyPilot then performs the heavy-lifting for you, including:

- Find the cheapest & available infra across your clusters or clouds

- Provision the GPUs (pods or VMs), with auto-failover if the infra returned capacity errors

- Sync your local `workdir` to the provisioned cluster

- Auto-install dependencies by running the task's `setup` commands

- Run the task's `run` commands, and stream logs
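The first two steps above (pick the cheapest available infra, then fail over on capacity errors) can be sketched as a cheapest-first loop. This is a hedged illustration only, not SkyPilot's optimizer or provisioner: `Candidate`, `CapacityError`, `launch_cheapest`, the infra labels, and the prices are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


class CapacityError(Exception):
    """Simulated 'no capacity' response from a provider."""


@dataclass(frozen=True)
class Candidate:
    infra: str           # hypothetical label, e.g. "cloud-a/zone-1"
    hourly_price: float  # hypothetical price in USD per hour


def launch_cheapest(candidates: Iterable[Candidate],
                    provision: Callable[[Candidate], None]) -> Optional[Candidate]:
    """Try candidates from cheapest to most expensive.

    `provision` is expected to raise CapacityError when a location has no
    capacity; in that case the next cheapest candidate is tried.
    Returns the candidate that was provisioned, or None if all failed.
    """
    for cand in sorted(candidates, key=lambda c: c.hourly_price):
        try:
            provision(cand)
            return cand
        except CapacityError:
            continue  # fail over to the next candidate
    return None


if __name__ == "__main__":
    out_of_capacity = {"cloud-a/zone-1"}

    def fake_provision(cand: Candidate) -> None:
        # Stand-in for a real provisioning call.
        if cand.infra in out_of_capacity:
            raise CapacityError(cand.infra)

    pool = [
        Candidate("cloud-b/zone-1", 3.0),
        Candidate("cloud-a/zone-1", 2.0),
        Candidate("k8s/cluster-x", 2.5),
    ]
    chosen = launch_cheapest(pool, fake_provision)
    print(chosen.infra)  # prints k8s/cluster-x
```

Here the cheapest candidate is out of capacity, so the loop falls through to the next cheapest one rather than failing the launch.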

See Quickstart to get started with SkyPilot.

See SkyPilot examples that cover: development, training, serving, LLM models, AI apps, and common frameworks.

Latest featured examples:

| Task | Examples |

|---|---|

| Training | Verl, Finetune Llama 4, TorchTitan, PyTorch, DeepSpeed, NeMo, Ray, Unsloth, Jax/TPU |

| Serving | vLLM, SGLang, Ollama |

| Models | DeepSeek-R1, Llama 4, Llama 3, CodeLlama, Qwen, Kimi-K2, Kimi-K2-Thinking, Mixtral |

| AI apps | RAG, vector databases (ChromaDB, CLIP) |

| Common frameworks | Airflow, Jupyter, marimo |

Source files can be found in llm/ and examples/.

To learn more, see SkyPilot Overview, SkyPilot docs, and SkyPilot blog.

SkyPilot adopters: Testimonials and Case Studies

Partners and integrations: Community Spotlights

Read the research:

- SkyPilot paper and talk (NSDI 2023)

- Sky Computing whitepaper

- Sky Computing vision paper (HotOS 2021)

- SkyServe: AI serving across regions and clouds (EuroSys 2025)

- Managed jobs spot instance policy (NSDI 2024)

SkyPilot was initially started at the Sky Computing Lab at UC Berkeley and has since gained many industry contributors. To read about the project's origin and vision, see Concept: Sky Computing.

We are excited to hear your feedback:

- For issues and feature requests, please open a GitHub issue.

- For questions, please use GitHub Discussions.

For general discussions, join us on the SkyPilot Slack.

We welcome all contributions to the project! See CONTRIBUTING for how to get involved.

Alternative AI tools for skypilot

Similar Open Source Tools

pipecat

Pipecat is an open-source framework designed for building generative AI voice bots and multimodal assistants. It provides code building blocks for interacting with AI services, creating low-latency data pipelines, and transporting audio, video, and events over the Internet. Pipecat supports various AI services like speech-to-text, text-to-speech, image generation, and vision models. Users can implement new services and contribute to the framework. Pipecat aims to simplify the development of applications like personal coaches, meeting assistants, customer support bots, and more by providing a complete framework for integrating AI services.

flow-like

Flow-Like is an enterprise-grade workflow operating system built upon Rust for uncompromising performance, efficiency, and code safety. It offers a modular frontend for apps, a rich set of events, a node catalog, a powerful no-code workflow IDE, and tools to manage teams, templates, and projects within organizations. With typed workflows, users can create complex, large-scale workflows with clear data origins, transformations, and contracts. Flow-Like is designed to automate any process through seamless integration of LLM, ML-based, and deterministic decision-making instances.

logto

Logto is a modern, open-source authentication infrastructure designed for SaaS and AI applications. It simplifies OIDC and OAuth 2.1 implementation, enabling secure, production-ready authentication with features like multi-tenancy, enterprise SSO, and RBAC. Logto offers pre-built sign-in flows, customizable UIs, and SDKs for various frameworks, supporting protocols like OIDC, OAuth 2.1, and SAML. It is suitable for teams scaling SaaS, AI, and agent-based platforms without authentication complexities.

bagofwords

Bag of words is an open-source AI platform that helps data teams deploy and manage chat-with-your-data agents in a controlled, reliable, and self-learning environment. It enables users to create charts, tables, and dashboards by chatting with their data, capture AI decisions and user feedback, automatically improve AI quality, integrate with various data sources and APIs, and ensure governance and integrations. The platform supports self-hosting in VPC via VMs, Docker/Compose, or Kubernetes, and offers additional integrations for AI Analyst in Slack, Excel, Google Sheets, and more. Users can start in minutes and scale to org-wide analytics.

LMCache

LMCache is a serving engine extension designed to reduce time to first token (TTFT) and increase throughput, particularly in long-context scenarios. It stores key-value caches of reusable texts across different locations like GPU, CPU DRAM, and Local Disk, allowing the reuse of any text in any serving engine instance. By combining LMCache with vLLM, significant delay savings and GPU cycle reduction are achieved in various large language model (LLM) use cases, such as multi-round question answering and retrieval-augmented generation (RAG). LMCache provides integration with the latest vLLM version, offering both online serving and offline inference capabilities. It supports sharing key-value caches across multiple vLLM instances and aims to provide stable support for non-prefix key-value caches along with user and developer documentation.

kubewall

kubewall is an open-source, single-binary Kubernetes dashboard with multi-cluster management and AI integration. It provides a simple and rich real-time interface to manage and investigate your clusters. With features like multi-cluster management, AI-powered troubleshooting, real-time monitoring, single-binary deployment, in-depth resource views, browser-based access, search and filter capabilities, privacy by default, port forwarding, live refresh, aggregated pod logs, and clean resource management, kubewall offers a comprehensive solution for Kubernetes cluster management.

chatbox

Chatbox is a desktop client for ChatGPT, Claude, and other LLMs, providing a user-friendly interface for AI copilot assistance on Windows, Mac, and Linux. It offers features like local data storage, multiple LLM provider support, image generation with Dall-E-3, enhanced prompting, keyboard shortcuts, and more. Users can collaborate, access the tool on various platforms, and enjoy multilingual support. Chatbox is constantly evolving with new features to enhance the user experience.

ToolJet

ToolJet is an open-source platform for building and deploying internal tools, workflows, and AI agents. It offers a visual builder with drag-and-drop UI, integrations with databases, APIs, SaaS apps, and object storage. The community edition includes features like a visual app builder, ToolJet database, multi-page apps, collaboration tools, extensibility with plugins, code execution, and security measures. ToolJet AI, the enterprise version, adds AI capabilities for app generation, query building, debugging, agent creation, security compliance, user management, environment management, GitSync, branding, access control, embedded apps, and enterprise support.

Kiln

Kiln is an intuitive tool for fine-tuning LLM models, generating synthetic data, and collaborating on datasets. It offers desktop apps for Windows, MacOS, and Linux, zero-code fine-tuning for various models, interactive data generation, and Git-based version control. Users can easily collaborate with QA, PM, and subject matter experts, generate auto-prompts, and work with a wide range of models and providers. The tool is open-source, privacy-first, and supports structured data tasks in JSON format. Kiln is free to use and helps build high-quality AI products with datasets, facilitates collaboration between technical and non-technical teams, allows comparison of models and techniques without code, ensures structured data integrity, and prioritizes user privacy.

openrl

OpenRL is an open-source general reinforcement learning research framework that supports training for various tasks such as single-agent, multi-agent, offline RL, self-play, and natural language. Developed based on PyTorch, the goal of OpenRL is to provide a simple-to-use, flexible, efficient and sustainable platform for the reinforcement learning research community. It supports a universal interface for all tasks/environments, single-agent and multi-agent tasks, offline RL training with expert dataset, self-play training, reinforcement learning training for natural language tasks, DeepSpeed, Arena for evaluation, importing models and datasets from Hugging Face, user-defined environments, models, and datasets, gymnasium environments, callbacks, visualization tools, unit testing, and code coverage testing. It also supports various algorithms like PPO, DQN, SAC, and environments like Gymnasium, MuJoCo, Atari, and more.

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

pocketpaw

PocketPaw is a lightweight and user-friendly tool designed for managing and organizing your digital assets. It provides a simple interface for users to easily categorize, tag, and search for files across different platforms. With PocketPaw, you can efficiently organize your photos, documents, and other files in a centralized location, making it easier to access and share them. Whether you are a student looking to organize your study materials, a professional managing project files, or a casual user wanting to declutter your digital space, PocketPaw is the perfect solution for all your file management needs.

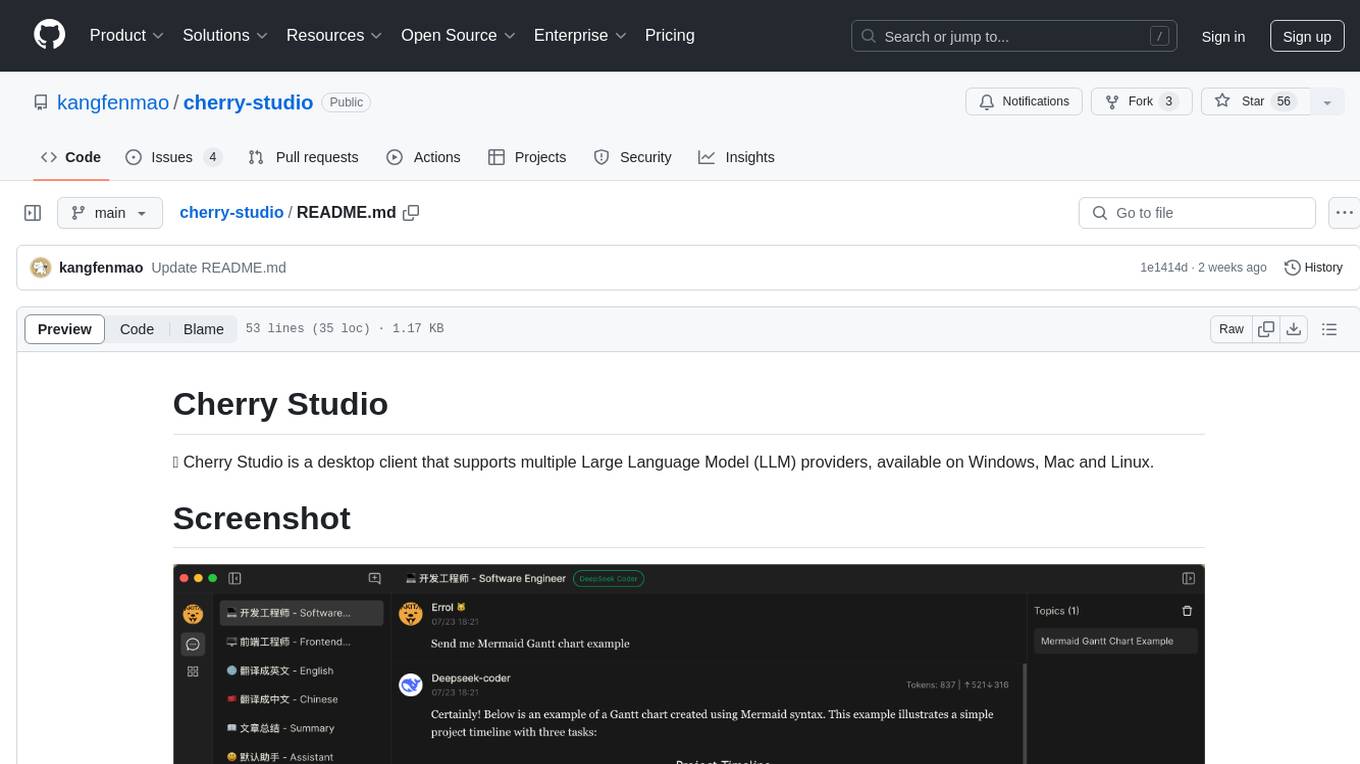

cherry-studio

Cherry Studio is a desktop client that supports multiple Large Language Model (LLM) providers, available on Windows, Mac, and Linux. It allows users to create multiple Assistants and topics, use multiple models to answer questions in the same conversation, and supports drag-and-drop sorting, code highlighting, and Mermaid chart. The tool is designed to enhance productivity and streamline the process of interacting with various language models.

axolotl

Axolotl is a lightweight and efficient tool for managing and analyzing large datasets. It provides a user-friendly interface for data manipulation, visualization, and statistical analysis. With Axolotl, users can easily import, clean, and explore data to gain valuable insights and make informed decisions. The tool supports various data formats and offers a wide range of functions for data processing and modeling. Whether you are a data scientist, researcher, or business analyst, Axolotl can help streamline your data workflows and enhance your data analysis capabilities.

SkyRL

SkyRL is a full-stack RL library that provides components such as 'skyagent' for training long-horizon, real-world agents, 'skyrl-train' for modular RL training, and 'skyrl-gym' for a variety of tool-use tasks. It offers a library of math, coding, search, and SQL environments implemented in the Gymnasium API, optimized for multi-turn tool use LLMs on long-horizon, real-environment tasks.

For similar tasks

agent-os

The Agent OS is an experimental framework and runtime to build sophisticated, long running, and self-coding AI agents. We believe that the most important super-power of AI agents is to write and execute their own code to interact with the world. But for that to work, they need to run in a suitable environment—a place designed to be inhabited by agents. The Agent OS is designed from the ground up to function as a long-term computing substrate for these kinds of self-evolving agents.

AISystem

This open-source project, also known as **Deep Learning System** or **AI System (AISys)**, aims to explore and learn about the system design of artificial intelligence and deep learning. The project is centered around the full-stack content of AI systems that ZOMI has accumulated, organized, and built during his work. The goal is to collaborate with all friends who are interested in AI open-source projects to jointly promote learning and discussion.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

spring-ai-alibaba

Spring AI Alibaba is an AI application framework for Java developers that seamlessly integrates with Alibaba Cloud QWen LLM services and cloud-native infrastructures. It provides features like support for various AI models, high-level AI agent abstraction, function calling, and RAG support. The framework aims to simplify the development, evaluation, deployment, and observability of AI native Java applications. It offers open-source framework and ecosystem integrations to support features like prompt template management, event-driven AI applications, and more.

AimRT

AimRT is a basic runtime framework for modern robotics, written in modern C++ and designed to be lightweight and easy to deploy. It integrates research and development for robot applications in various deployment scenarios, providing debugging tools and observability support. AimRT offers a plug-in development interface compatible with ROS2, HTTP, gRPC, and other ecosystems for progressive system upgrades.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.