chatnio

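🚀 Next Generation AI One-Stop Internationalization Solution. 🚀 Next-generation AI one-stop solution for B-end and C-end business. Supports OpenAI, Midjourney, Claude, iFlytek Spark, Stable Diffusion, DALL·E, ChatGLM, Tongyi Qianwen, Tencent Hunyuan, 360 Zhinao, Baichuan AI, Volcano Ark, New Bing, Gemini, Moonshot, and other models. Supports conversation sharing, custom presets, cloud sync, a model market, elastic billing and subscription plans, image parsing, web search, and model caching, with a rich, attractive admin backend and dashboard statistics.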

Stars: 3078

Chat Nio is a next-generation AIGC one-stop business solution that combines the advantages of frontend-oriented lightweight deployment projects with powerful API distribution systems. It offers rich model support, beautiful UI design, complete Markdown support, multi-theme support, internationalization support, text-to-image support, powerful conversation sync, model market & preset system, rich file parsing, full model internet search, Progressive Web App (PWA) support, comprehensive backend management, multiple billing methods, innovative model caching, and additional features. The project aims to address limitations in conversation synchronization, billing, file parsing, conversation URL sharing, channel management, and API call support found in existing AIGC commercial sites, while also providing a user-friendly interface design and C-end features.

README:


- 🤖️ Rich Model Support: Multi-model service provider support (OpenAI / Anthropic / Gemini / Midjourney and more than ten compatible formats & private LLM support)

- 🤯 Beautiful UI Design: UI compatible with PC / Pad / Mobile, following Shadcn UI & Tremor Charts design standards, rich and beautiful interface design and backend dashboard

- 🎃 Complete Markdown Support: LaTeX formulas / Mermaid mind maps / table rendering / code highlighting / chart drawing / progress bars and other advanced Markdown syntax

- 👀 Multi-theme Support: Switch between multiple themes, including Light Mode and Dark Mode. 👉 Custom Color Scheme

- 📚 Internationalization Support: Multi-language switching 🇨🇳 🇺🇸 🇯🇵 🇷🇺 👉 Translation pull requests are welcome

- 🎨 Text-to-Image Support: Support for multiple text-to-image models: OpenAI DALL-E✅ & Midjourney (support for U/V/R operations)✅ & Stable Diffusion✅ etc.

- 📡 Powerful Conversation Sync: Zero-cost cross-device conversation sync for users, plus conversation sharing (link sharing & save as image & share management), with no WebDav / WebRTC or other dependencies and no complex learning costs

- 🎈 Model Market & Preset System: Support for customizable model market in the backend, providing model introductions, tags, and other parameters. Site owners can customize model introductions according to the situation. Also supports a preset system, including custom presets and cloud synchronization functions.

- 📖 Rich File Parsing: Out-of-the-box, supports file parsing for all models (PDF / Docx / Pptx / Excel / image formats parsing), supports more cloud image storage solutions (S3 / R2 / MinIO etc.), supports OCR image recognition 👉 See project Chat Nio Blob Service for details (supports Vercel / Docker one-click deployment)

- 🌏 Full Model Internet Search: Based on the SearXNG open-source engine, supports rich search engines such as Google / Bing / DuckDuckGo / Yahoo / Wikipedia / Arxiv / Qwant, supports safe search mode, content truncation, image proxy, test search availability, and other functions.

- 💕 Progressive Web App (PWA): Supports PWA applications & desktop support (desktop based on Tauri)

- 🤩 Comprehensive Backend Management: Supports beautiful and rich dashboard, announcement & notification management, user management, subscription management, gift code & redemption code management, price setting, subscription setting, custom model market, custom site name & logo, SMTP email settings, and other functions

- 🤑 Multiple Billing Methods: Supports two billing methods: 💴 Subscription and 💴 Elastic Billing. Elastic billing supports per-request billing / token billing / no billing / anonymous calls, minimum request points detection, and other powerful features

- 🎉 Innovative Model Caching: Model caching can be enabled: if a request with the same parameter hash has been made before, the cached result is returned directly (cache hits are not billed), reducing the number of requests. You can customize which models are cached, the cache time, the number of cached results, and other advanced cache settings

- 🥪 Additional Features (Support Discontinued): 🍎 AI Project Generator Function / 📂 Batch Article Generation Function / 🥪 AI Card Function (Deprecated)

- 😎 Excellent Channel Management: Self-written excellent channel algorithm, supports ⚡ multi-channel management, supports 🥳priority setting for channel call order, supports 🥳weight setting for load balancing probability distribution of channels at the same priority, supports 🥳user grouping, 🥳automatic retry on failure, 🥳model redirection, 🥳built-in upstream hiding, 🥳channel status management and other powerful enterprise-level functions

- ⭐ OpenAI API Distribution & Proxy System: Supports calling various large models in OpenAI API standard format, integrates powerful channel management functions, only needs to deploy one site to achieve simultaneous development of B/C-end business💖

- 👌 Quick Upstream Synchronization: Channel settings, the model market, price settings, and other settings can be synchronized from upstream sites with one click. You can then adjust your own site configuration on that basis, saving time and effort and getting your site launched quickly

- 👋 SEO Optimization: Supports custom site name, site logo, and other SEO settings so that search engines crawl your site faster and it stands out👋

- 🎫 Multiple Redemption Code Systems: Supports both gift codes and redemption codes, with batch generation. Gift codes are suited to promotional distribution and redemption codes to card sales. A user can redeem only one gift code of a given type, which helps limit one user redeeming multiple times in a promotion😀

- 🥰 Business-Friendly License: Adopts the Apache-2.0 open-source license, friendly for commercial secondary development & distribution (please also comply with the provisions of the Apache-2.0 license, do not use for illegal purposes)
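The model caching behavior described in the feature list above (same request parameter hash, cached result returned, no billing on a hit) can be sketched roughly as follows. This is an illustrative sketch only, not the project's implementation: `cache_key`, `ModelCache`, and `get_or_call` are hypothetical names, the hash is assumed to be SHA-256 over key-sorted JSON, and the "multiple cache result numbers" setting is not modeled.

```python
import hashlib
import json
import time


def cache_key(params: dict) -> str:
    """Hash the request parameters in a stable, key-sorted JSON form (assumed scheme)."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ModelCache:
    """Hypothetical in-memory cache keyed by the request-parameter hash."""

    def __init__(self, ttl_seconds: float):
        self.ttl_seconds = ttl_seconds
        self._store = {}  # key -> (expires_at, response)

    def get_or_call(self, params: dict, call_model, now=None):
        """Return (response, billed). A cache hit skips the upstream call and is not billed."""
        now = time.time() if now is None else now
        key = cache_key(params)
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1], False  # hit: cached result, no billing
        response = call_model(params)  # miss or expired: forward to the model
        self._store[key] = (now + self.ttl_seconds, response)
        return response, True
```

Sorting the keys before hashing means two requests with the same parameters in a different order map to the same cache entry.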

- ✅ Beautiful commercial-grade UI, elegant frontend interface and backend management

- ✅ Supports TTS & STT, plugin marketplace, RAG knowledge base and other rich features and modules

- ✅ More payment providers, more billing models and advanced order management

- ✅ Supports more authentication methods, including SMS login, OAuth login, etc.

- ✅ Supports model monitoring, channel health detection, fault alarms, and automatic channel switching

- ✅ Supports multi-tenant API Key distribution system, enterprise-level token permission management and visitor restrictions

- ✅ Supports security auditing, logging, model rate limiting, API Gateway and other advanced features

- ✅ Supports promotion rewards, professional data statistics, user profile analysis and other business analysis capabilities

- ✅ Supports Discord/Telegram/Feishu and other bot integration capabilities (extension modules)

- ...

- OpenAI & Azure OpenAI (✅ Vision ✅ Function Calling)

- Anthropic Claude (✅ Vision ✅ Function Calling)

- Google Gemini & PaLM2 (✅ Vision)

- Midjourney (✅ Mode Toggling ✅ U/V/R Actions)

- iFlytek SparkDesk (✅ Vision ✅ Function Calling)

- Zhipu AI ChatGLM (✅ Vision)

- Alibaba Tongyi Qwen

- Tencent Hunyuan

- Baichuan AI

- Moonshot AI (👉 OpenAI)

- DeepSeek AI (👉 OpenAI)

- ByteDance Skylark (✅ Function Calling)

- Groq Cloud AI

- OpenRouter (👉 OpenAI)

- 360 GPT

- LocalAI / Ollama (👉 OpenAI)

- [x] Chat Completions (/v1/chat/completions)

- [x] Image Generation (/v1/images)

- [x] Model List (/v1/models)

- [x] Dashboard Billing (/v1/billing)
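As a rough illustration of calling the Chat Completions endpoint listed above in the OpenAI API standard format, the sketch below builds (but does not send) a request using only the Python standard library. The base URL, API key, and model name are placeholders, `build_chat_request` is a hypothetical helper, and whether a given deployment mounts the API at the root or under a path prefix is an assumption to check against your own site.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-format chat completion request (not sent here)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # placeholder key
        },
        method="POST",
    )


# Placeholder values; replace them with your own deployment's address, key, and model.
request = build_chat_request("http://localhost:8094", "sk-placeholder", "gpt-4o", "Hello")
```

Passing `request` to `urllib.request.urlopen` would send it. The response shape depends on the deployment and the upstream model.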

[!TIP] After successful deployment, the admin account is root, with the default password chatnio123456

Zeabur provides a certain free quota. You can use non-paid regions for one-click deployment, and plan subscriptions and elastic billing are also supported for flexible expansion.

- Click Deploy to deploy, enter the domain name you wish to bind, and wait for the deployment to complete.

- After deployment is complete, visit your domain name and log in to the backend management using the username root and password chatnio123456. Follow the prompts in the chatnio backend to change the password promptly.

[!NOTE] After successful execution, the host machine mapping address is http://localhost:8000

git clone --depth=1 --branch=main --single-branch https://github.com/Deeptrain-Community/chatnio.git

cd chatnio

docker-compose up -d # Run the service

# To use the stable version, use docker-compose -f docker-compose.stable.yaml up -d instead

# To use Watchtower for automatic updates, use docker-compose -f docker-compose.watch.yaml up -d instead

Version update (If Watchtower automatic updates are enabled, manual updates are not necessary):

docker-compose down

docker-compose pull

docker-compose up -d

- MySQL database mount directory: ~/db

- Redis database mount directory: ~/redis

- Configuration file mount directory: ~/config

[!NOTE] After successful execution, the host machine address is http://localhost:8094. To use the stable version, use programzmh/chatnio:stable instead of programzmh/chatnio:latest

docker run -d --name chatnio \

--network host \

-v ~/config:/config \

-v ~/logs:/logs \

-v ~/storage:/storage \

-e MYSQL_HOST=localhost \

-e MYSQL_PORT=3306 \

-e MYSQL_DB=chatnio \

-e MYSQL_USER=root \

-e MYSQL_PASSWORD=chatnio123456 \

-e REDIS_HOST=localhost \

-e REDIS_PORT=6379 \

-e SECRET=secret \

-e SERVE_STATIC=true \

programzmh/chatnio:latest

- --network host makes the Docker container use the host machine's network. You can modify this as needed.

- SECRET: JWT secret key, generate a random string and modify accordingly

- SERVE_STATIC: Whether to enable static file serving (normally this doesn't need to be changed, see FAQ below for details)

- -v ~/config:/config mounts the configuration file, -v ~/logs:/logs mounts the host machine directory for log files, -v ~/storage:/storage mounts the directory for additional feature generated files

- MySQL and Redis services need to be configured. Please refer to the information above to modify the environment variables accordingly
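For the SECRET value mentioned above, one way to generate a random string is Python's standard `secrets` module. This is only a sketch: the 32-byte length is an assumption, not a documented project requirement, and `generate_secret` is a hypothetical helper name.

```python
import secrets


def generate_secret(num_bytes: int = 32) -> str:
    """Return a URL-safe random string suitable for use as a JWT signing secret."""
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Paste the printed value into the SECRET environment variable.
    print(generate_secret())
```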

Version update (After enabling Watchtower, manual updates are not necessary. After execution, follow the steps above to run again):

docker stop chatnio

docker rm chatnio

docker pull programzmh/chatnio:latest

[!NOTE] After successful deployment, the default port is 8094, and the access address is http://localhost:8094

Config settings (~/config/config.yaml) can be overridden using environment variables. For example, the MYSQL_HOST environment variable can override the mysql.host configuration item
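The override rule can be pictured with the small sketch below. The naming rule used here (uppercase the key and replace dots with underscores) is inferred from the single MYSQL_HOST / mysql.host example and is an assumption, and `env_name` and `resolve` are hypothetical helpers rather than functions from the project.

```python
import os


def env_name(config_key: str) -> str:
    """Map a dotted config key to its assumed environment variable name."""
    return config_key.replace(".", "_").upper()


def resolve(config: dict, config_key: str, environ=None):
    """Return the environment override if present, else the value from the config dict."""
    environ = os.environ if environ is None else environ
    override = environ.get(env_name(config_key))
    if override is not None:
        return override  # environment variable wins over config.yaml
    value = config
    for part in config_key.split("."):
        value = value[part]  # walk the nested config sections
    return value


config = {"mysql": {"host": "db.internal", "port": 3306}}
host = resolve(config, "mysql.host", {"MYSQL_HOST": "localhost"})
```

Note that an overriding value read from the environment is always a string, so numeric settings such as a port would need converting.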

git clone https://github.com/Deeptrain-Community/chatnio.git

cd chatnio

cd app

npm install -g pnpm

pnpm install

pnpm build

cd ..

go build -o chatnio

# e.g. using nohup (you can also use systemd or other service manager)

nohup ./chatnio > output.log & # using nohup to run in background

- 🥗 Frontend: React + Redux + Radix UI + Tailwind CSS

- 🍎 Backend: Golang + Gin + Redis + MySQL

- 🍒 Application Technology: PWA + WebSocket


We found that most AIGC commercial sites on the market are frontend-oriented lightweight deployment projects with beautiful UI designs, such as the commercial version of Next Chat. Because these projects were designed for personal, private deployment, they have limitations in secondary commercial development, such as:

- Difficult conversation synchronization, for example, requiring services like WebDav, high user learning costs, and difficulties in real-time cross-device synchronization.

- Insufficient billing, for example, only supporting elastic billing or only subscription-based, unable to meet the needs of different users.

- Inconvenient file parsing, for example, only supporting uploading images to an image hosting service first, then returning to the site to input the URL direct link in the input box, without built-in file parsing functionality.

- No support for conversation URL sharing, for example, only supporting conversation screenshot sharing, unable to support conversation URL sharing (or only supporting tools like ShareGPT, which cannot promote the site).

- Insufficient channel management, for example, the backend only supports OpenAI format channels, making it difficult to be compatible with other format channels. And only one channel can be filled in, unable to support multi-channel management.

- No API call support, for example, only supporting user interface calls, unable to support API proxying and management.


Another type is API distribution-oriented sites with powerful distribution systems, such as projects based on One API. Although these projects support powerful API proxying and management, they lack interface design and some C-end features, such as:

- Insufficient user interface, for example, only supporting API calls, without built-in user interface chat. User interface chat requires manually copying the key and going to other sites to use, which has a high learning cost for ordinary users.

- No subscription system, for example, only supporting elastic billing, lacking billing design for C-end users, unable to meet different user needs, and making costs hard to grasp for users without a technical background.

- Insufficient C-end features, for example, only supporting API calls, not supporting conversation synchronization, conversation sharing, file parsing, and other functions.

- Insufficient load balancing, the open-source version does not support the weight parameter, unable to achieve balanced load distribution probability for channels at the same priority (New API also solves this pain point, with a more beautiful UI).
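The priority and weight behavior discussed above (priority decides call order, weight decides the probability distribution among channels at the same priority, with automatic retry on failure) can be sketched as follows. This is not the project's channel algorithm: `Channel`, `pick_channel`, and `call_with_retry` are hypothetical names, and two points are assumptions: that a larger priority number is tried first, and that a failed channel is dropped so that retries eventually fall through to lower priorities.

```python
import random
from dataclasses import dataclass


@dataclass
class Channel:
    name: str
    priority: int  # assumption: larger value is tried first
    weight: int    # must be positive; relative share within a priority group


def pick_channel(channels, rng=random):
    """Pick from the highest-priority group with probability proportional to weight."""
    top = max(c.priority for c in channels)
    group = [c for c in channels if c.priority == top]
    return rng.choices(group, weights=[c.weight for c in group], k=1)[0]


def call_with_retry(channels, send, rng=random):
    """Try channels until one succeeds, dropping each channel that fails."""
    remaining = list(channels)
    while remaining:
        channel = pick_channel(remaining, rng)
        try:
            return send(channel)
        except Exception:
            remaining.remove(channel)  # retry on another channel
    raise RuntimeError("all channels failed")
```

With two channels at the same priority and weights 3 and 1, the first would receive roughly three quarters of the requests over many calls.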

Therefore, we hope to combine the advantages of these two types of projects to create a project that has both a powerful API distribution system and a rich user interface design, thus meeting the needs of C-end users while developing B-end business, improving user experience, reducing user learning costs, and increasing user stickiness.

Thus, Chat Nio was born. We hope to create a project that has both a powerful API distribution system and a rich user interface design, becoming the next-generation open-source AIGC project's one-stop commercial solution.

If you find this project helpful, you can give it a Star to show your support!


Alternative AI tools for chatnio

Similar Open Source Tools


ai

Jetify's AI SDK for Go is a unified interface for interacting with multiple AI providers including OpenAI, Anthropic, and more. It addresses the challenges of fragmented ecosystems, vendor lock-in, poor Go developer experience, and complex multi-modal handling by providing a unified interface, Go-first design, production-ready features, multi-modal support, and extensible architecture. The SDK supports language models, embeddings, image generation, multi-provider support, multi-modal inputs, tool calling, and structured outputs.

kelivo

Kelivo is a Flutter LLM Chat Client with modern design, dark mode, multi-language support, multi-provider support, custom assistants, multimodal input, markdown rendering, voice functionality, MCP support, web search integration, prompt variables, QR code sharing, data backup, and custom requests. It is built with Flutter and Dart, utilizes Provider for state management, Hive for local data storage, and supports dynamic theming and Markdown rendering. Kelivo is a versatile tool for creating and managing personalized AI assistants, supporting various input formats, and integrating with multiple search engines and AI providers.

clearml-server

ClearML Server is a backend service infrastructure for ClearML, facilitating collaboration and experiment management. It includes a web app, RESTful API, and file server for storing images and models. Users can deploy ClearML Server using Docker, AWS EC2 AMI, or Kubernetes. The system design supports single IP or sub-domain configurations with specific open ports. ClearML-Agent Services container allows launching long-lasting jobs and various use cases like auto-scaler service, controllers, optimizer, and applications. Advanced functionality includes web login authentication and non-responsive experiments watchdog. Upgrading ClearML Server involves stopping containers, backing up data, downloading the latest docker-compose.yml file, configuring ClearML-Agent Services, and spinning up docker containers. Community support is available through ClearML FAQ, Stack Overflow, GitHub issues, and email contact.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

inngest

Inngest is a platform that offers durable functions to replace queues, state management, and scheduling for developers. It allows writing reliable step functions faster without dealing with infrastructure. Developers can create durable functions using various language SDKs, run a local development server, deploy functions to their infrastructure, sync functions with the Inngest Platform, and securely trigger functions via HTTPS. Inngest Functions support retrying, scheduling, and coordinating operations through triggers, flow control, and steps, enabling developers to build reliable workflows with robust support for various operations.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

Alice

Alice is an open-source AI companion designed to live on your desktop, providing voice interaction, intelligent context awareness, and powerful tooling. More than a chatbot, Alice is emotionally engaging and deeply useful, assisting with daily tasks and creative work. Key features include voice interaction with natural-sounding responses, memory and context management, vision and visual output capabilities, computer use tools, function calling for web search and task scheduling, wake word support, dedicated Chrome extension, and flexible settings interface. Technologies used include Vue.js, Electron, OpenAI, Go, hnswlib-node, and more. Alice is customizable and offers a dedicated Chrome extension, wake word support, and various tools for computer use and productivity tasks.

Foxel

Foxel is a highly extensible private cloud storage solution for individuals and teams, featuring AI-powered semantic search. It offers unified file management, pluggable storage backends, semantic search capabilities, built-in file preview, permissions and sharing options, and a task processing center. Users can easily manage files, search content within unstructured data, preview various file types, share files, and process tasks asynchronously. Foxel is designed to centralize file management and enhance search capabilities for users.

kestra

Kestra is an open-source event-driven orchestration platform that simplifies building scheduled and event-driven workflows. It offers Infrastructure as Code best practices for data, process, and microservice orchestration, allowing users to create reliable workflows using YAML configuration. Key features include everything as code with Git integration, event-driven and scheduled workflows, rich plugin ecosystem for data extraction and script running, intuitive UI with syntax highlighting, scalability for millions of workflows, version control friendly, and various features for structure and resilience. Kestra ensures declarative orchestration logic management even when workflows are modified via UI, API calls, or other methods.

WaterCrawl

WaterCrawl is a powerful web application that uses Python, Django, Scrapy, and Celery to crawl web pages and extract relevant data. It provides advanced web crawling and scraping capabilities, a powerful search engine, multi-language support, asynchronous processing, REST API with OpenAPI, rich ecosystem integrations, self-hosted and open-source options, and advanced results handling. The tool allows users to crawl websites with customizable options, search for relevant content across the web, monitor real-time progress of crawls, and process search results with customizable parameters.

open-webui

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our Open WebUI Documentation.

curiso

Curiso AI is an infinite canvas platform that connects nodes and AI services to explore ideas without repetition. It empowers advanced users to unlock richer AI interactions. Features include multi OS support, infinite canvas, multiple AI provider integration, local AI inference provider integration, custom model support, model metrics, RAG support, local Transformers.js embedding models, inference parameters customization, multiple boards, vision model support, customizable interface, node-based conversations, and secure local encrypted storage. Curiso also offers a Solana token for exclusive access to premium features and enhanced AI capabilities.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

Ivy-Framework

Ivy-Framework is a powerful tool for building internal applications with AI assistance using C# codebase. It provides a CLI for project initialization, authentication integrations, database support, LLM code generation, secrets management, container deployment, hot reload, dependency injection, state management, routing, and external widget framework. Users can easily create data tables for sorting, filtering, and pagination. The framework offers a seamless integration of front-end and back-end development, making it ideal for developing robust internal tools and dashboards.

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

For similar tasks

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

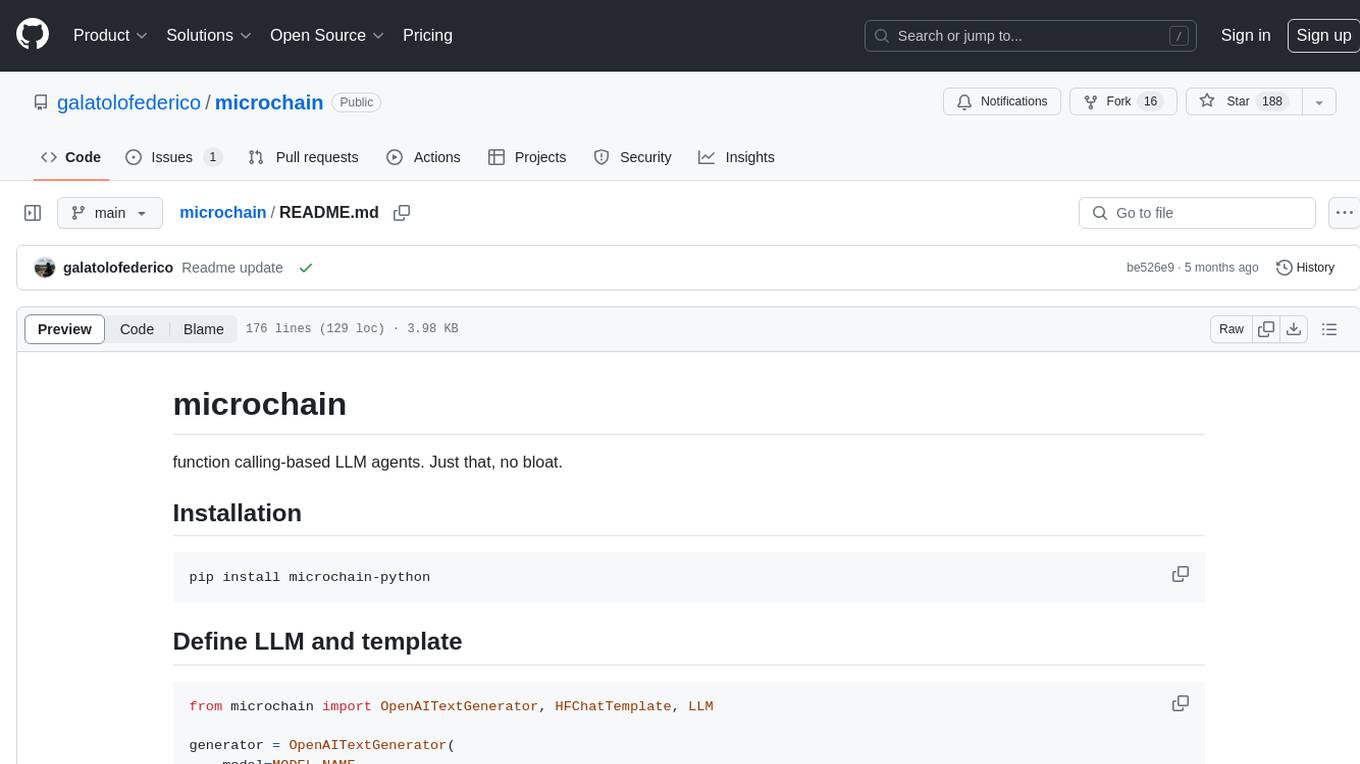

microchain

Microchain is a function calling-based LLM agents tool with no bloat. It allows users to define LLM and templates, use various functions like Sum and Product, and create LLM agents for specific tasks. The tool provides a simple and efficient way to interact with OpenAI models and create conversational agents for various applications.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

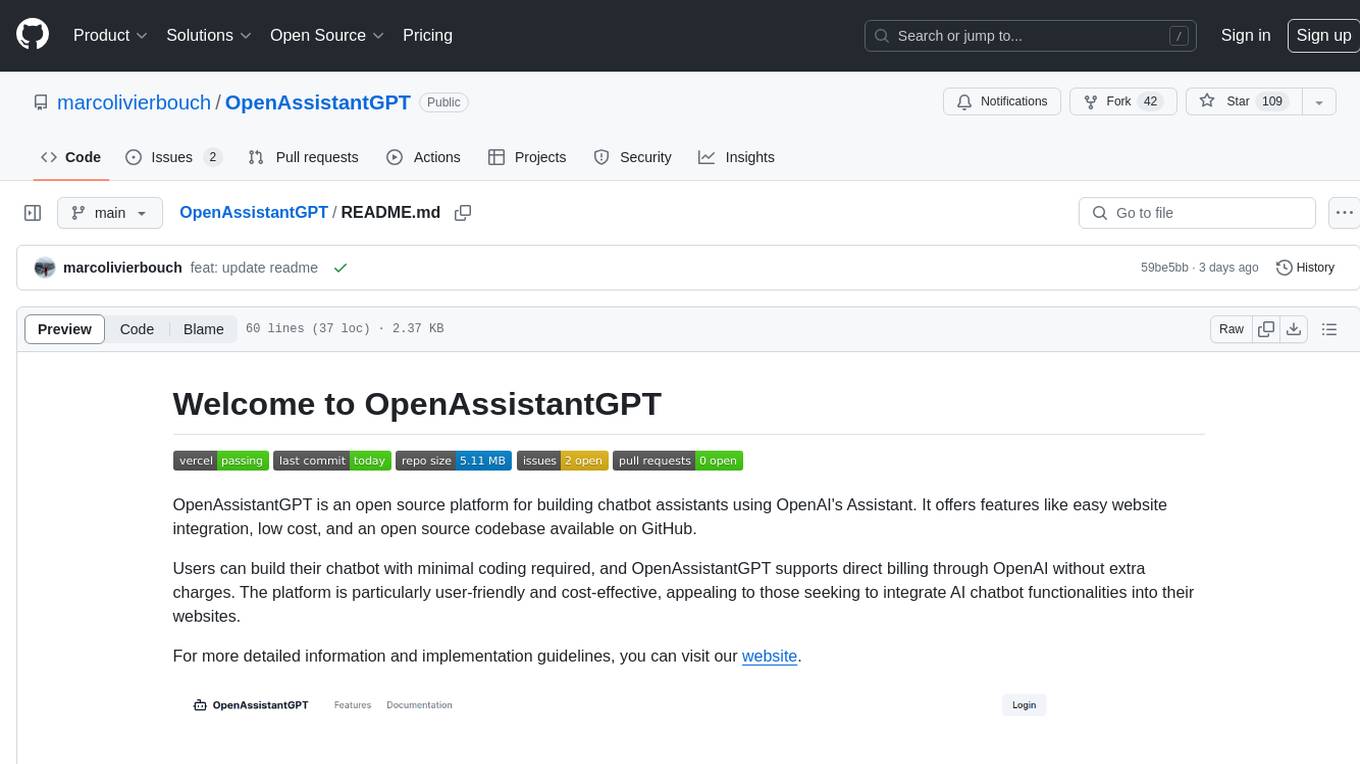

OpenAssistantGPT

OpenAssistantGPT is an open source platform for building chatbot assistants using OpenAI's Assistant. It offers features like easy website integration, low cost, and an open source codebase available on GitHub. Users can build their chatbot with minimal coding required, and OpenAssistantGPT supports direct billing through OpenAI without extra charges. The platform is user-friendly and cost-effective, appealing to those seeking to integrate AI chatbot functionalities into their websites.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.