aiwechat-vercel

使用vercel的functions,将ai功能加入微信公众号

Stars: 645

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

README:

使用vercel的functions,将ai功能加入微信公众号

无需服务器,门槛低,只需一个可以绑定到vercel的域名(无需备案)即可,基本0成本

-

提前到Vercel创建Redis数据库

-

Fork本Github项目,到Vercel点击构建,环境变量填写参数

-

在vercel该项目详情页面的Storage选择连接前面创建的redis数据库

- 数据库链接成功后,Vercel会自动配置KV_URL环境变量

图片步骤:

更多配置config

GPT_TOKEN=sk-*** 你的gpt token

GPT_URL=https://xxx/v1 代理gpt服务器(选填,默认openai官网api 例如https://api.openai.com/v1)

gptModel=gpt-3.5-turbo gpt模型(选填,默认gpt-3.5-turbo)

WX_TOKEN=*** 微信公众号开发平台设置的token

botType=** 机器人类型 目前支持(gpt,echo,spark,qwen,gemini)例如botType=gpt如何检查是否配置成功

部署后访问 vercel提供的域名/api/check 页面返回check ok即可

到域名提供商,域名增加cname解析到cname-china.vercel-dns.com

到vercel的该项目添加自定义域名(使用国内网络在访问你的域名/api/check看看能否访问)

微信公众号配置:

微信公众号。微信公众平台后台管理页面上找到

设置与开发-基本配置-服务器配置,修改服务器地址url为https://你的域名/api/wx消息加解密选择明文模式(后续添加支持加密)

录制了一期简单的视频教程供参考b站

也有大佬写了自己在cloudflare部署的教程discussions

- 支持接入gpt,星火,通义千问,gemini

- 超时回复(go协程很好用)

- 支持连续问答(只需要在vercel创建一个redis实例,在本项目下的Storage设置连接即可,vercel会自动配置KV_URL环境变量,默认记忆对话30分钟内的内容)

- 隐藏功能 你的域名/api/chat?msg=你的问题 (仅用于测试是否配置gpt成功,也可用作于简单的接口api,中文乱码问题已修复)

- 检查配置:你的域名/api/check (显示当前bot的配置信息是否正确)

- 支持图床功能,即发送图片给公众号,返回图片url

- 被关注自定义回复

- 支持设置system prompt

- 支持指令

- /help:查看帮助

- /gpt:切换与GPT对话

- /spark:切换与星火对话

- /qwen:切换与通义千问对话

- /gemini:切换与gemini对话

- /prompt: 你的prompt: 设置system prompt

- /getpt: 获取当前设置prompt

- /cpt: 清除当前设置prompt

- /setmodel model_name:设置当前bot使用的模型

- /setmodel:重置当前bot的模型为默认值

- /getmodel:获取当前bot自定义的模型名

- /clear:清除对话列表

有其它想要支持的指令欢迎提issue或者pr (例如查看天气啥的)

- /fy: 翻译文本

- /wec: 查看天气

- todolist管理: /ta: 添加待办事项 /td: 删除待办事项 /tl: 查看待办事项列表

- 支持国内大部分可以白嫖的ai 如星火(已支持,感谢大佬pr),通义千问(已支持,感谢大佬pr)等(有想要添加的可以提个issue)

- 增加指令控制(已支持),增加管理员设置

- 关键词自定义回复

- 支持限制问答次数

- 支持企业微信群机器人

- todolist功能,用户可以在机器人管理待办事件

- 查看股票和币价

项目起因:偶然看到网上有人使用vercel实现了,但是功能比较单一,看了一下文档,支持go所以就想自己开发下,支持接入多一点ai和自定义功能,项目仅供学习参考 也欢迎各位大佬pr,来个免费的star

- 为啥要使用域名? 答: vercel提供的域名国内被墙了,微信无法访问

- 为啥有时候可以回复,有时候没有回复?答: 微信公众号限制答复500多字,超过回复会失败,可以增加限制字数的提示词解决。还有一个原因是答复太久,接口超时了免费版vercel的functions限制接口10s

- 域名需要备案吗?答:不需要,另外也可以在cloudflare托管域名(白嫖一些2级域名,托管上去,可以达到0成本)

- 我的是订阅号支持吗?答:无论是公众号还是订阅号,自动回复都是一个机制,所以都支持

- 发送信息返回错误error, status code: 403, message: invalid character '<' looking for beginning of value怎么回事?答:检查GPT_URL是不是漏了/v1或者cf开了盾,墙之类的

- 支持接入deepseek吗?答:支持,不过有一点要注意deepseek支持的模型为deepseek-coder,deepseek-chat要正常使用,需要改gptModel为这两个模型之一

- 修改环境变量后,还是不成功?答:在修改环境变量后要重新部署下配置才后生效,因为vercel原来的实例没有被销毁读取的还是未修改的环境变量。建议每次修改环境变量后手动重新部署一下

- 微信字数限制如何解决?答:已经有大佬提pr了,可以通过设置最大token解决,设置环境变量maxOutput即可,一般设置到500,回答没有完整可以和ai说继续即可,pr详情pr

更多功能探讨discussions

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiwechat-vercel

Similar Open Source Tools

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

dify-plus

Dify-Plus is a project that extends and adds management center functionality to the original Dify project. It includes features such as user quota management, key quota settings, web page login authentication, and more. The project aims to address pain points in enterprise scenarios and is open for collaboration and discussion with the community.

FeedCraft

FeedCraft is a powerful tool to process your rss feeds as a middleware. Use it to translate your feed, extract fulltext, emulate browser to render js-heavy page, use llm such as google gemini to generate brief for your rss article, use natural language to filter your rss feed, and more! It is an open-source tool that can be self-deployed and used with any RSS reader. It supports AI-powered processing using Open AI compatible LLMs, custom prompt, saving rules to apply to different RSS sources, portable mode for on-the-go usage, and dock mode for advanced customization of RSS sources and processing parameters.

ai-paint-today-BE

AI Paint Today is an API server repository that allows users to record their emotions and daily experiences, and based on that, AI generates a beautiful picture diary of their day. The project includes features such as generating picture diaries from written entries, utilizing DALL-E 2 model for image generation, and deploying on AWS and Cloudflare. The project also follows specific conventions and collaboration strategies for development.

AIBotPublic

AIBotPublic is an open-source version of AIBotPro, a comprehensive AI tool that provides various features such as knowledge base construction, AI drawing, API hosting, and more. It supports custom plugins and parallel processing of multiple files. The tool is built using bootstrap4 for the frontend, .NET6.0 for the backend, and utilizes technologies like SqlServer, Redis, and Milvus for database and vector database functionalities. It integrates third-party dependencies like Baidu AI OCR, Milvus C# SDK, Google Search, and more to enhance its capabilities.

geekai

GeekAI is an open-source AI assistant solution based on AI large language model API, featuring a complete system with ready-to-use front-end and back-end management, providing a seamless typing experience via Websocket. It integrates various pre-trained character applications like Xiaohongshu writing assistant, English translation master, Socrates, Confucius, Steve Jobs, and weekly report assistant. The tool supports multiple large language models from platforms like OpenAI, Azure, Wenxin Yanyan, Xunfei Xinghuo, and Tsinghua ChatGLM. Additionally, it includes MidJourney and Stable Diffusion AI drawing functionalities for creating various artworks such as text-based images, face swapping, and blending images. Users can utilize personal WeChat QR codes for payment without the need for enterprise payment channels, and the tool offers integrated payment options like Alipay and WeChat Pay with support for multiple membership packages and point card purchases. It also features a plugin API for developing powerful plugins using large language model functions, including built-in plugins for Weibo hot search, today's headlines, morning news, and AI drawing functions.

knowledge

This repository serves as a personal knowledge base for the owner's reference and use. It covers a wide range of topics including cloud-native operations, Kubernetes ecosystem, networking, cloud services, telemetry, CI/CD, electronic engineering, hardware projects, operating systems, homelab setups, high-performance computing applications, openwrt router usage, programming languages, music theory, blockchain, distributed systems principles, and various other knowledge domains. The content is periodically refined and published on the owner's blog for maintenance purposes.

magic-resume

Magic Resume is a modern online resume editor that makes creating professional resumes simple and fun. Built on Next.js and Framer Motion, it supports real-time preview and custom themes. Features include Next.js 14+ based construction, smooth animation effects (Framer Motion), custom theme support, responsive design, dark mode, export to PDF, real-time preview, auto-save, and local storage. The technology stack includes Next.js 14+, TypeScript, Framer Motion, Tailwind CSS, Shadcn/ui, and Lucide Icons.

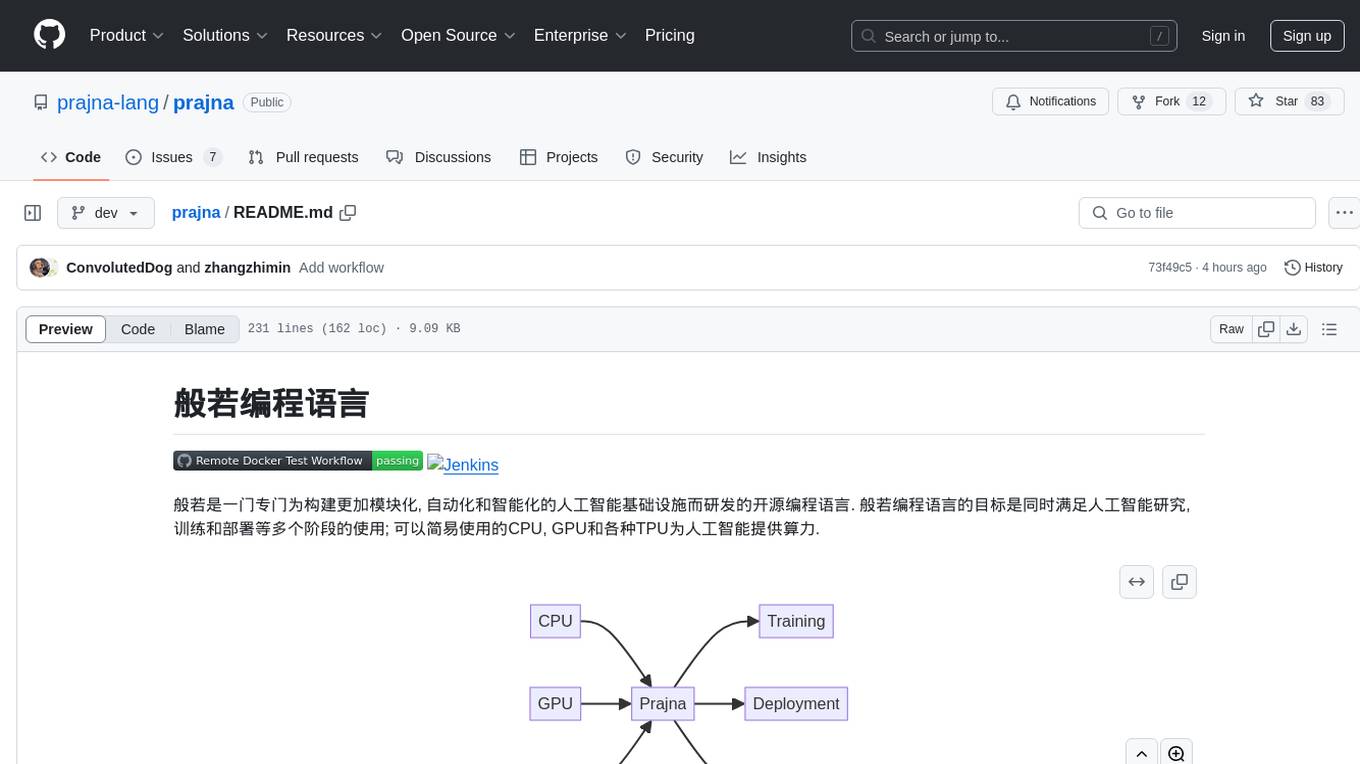

prajna

Prajna is an open-source programming language specifically developed for building more modular, automated, and intelligent artificial intelligence infrastructure. It aims to cater to various stages of AI research, training, and deployment by providing easy access to CPU, GPU, and various TPUs for AI computing. Prajna features just-in-time compilation, GPU/heterogeneous programming support, tensor computing, syntax improvements, and user-friendly interactions through main functions, Repl, and Jupyter, making it suitable for algorithm development and deployment in various scenarios.

chatgpt-plus

ChatGPT-PLUS is an open-source AI assistant solution based on AI large language model API, with a built-in operational management backend for easy deployment. It integrates multiple large language models from platforms like OpenAI, Azure, ChatGLM, Xunfei Xinghuo, and Wenxin Yanyan. Additionally, it includes MidJourney and Stable Diffusion AI drawing features. The system offers a complete open-source solution with ready-to-use frontend and backend applications, providing a seamless typing experience via Websocket. It comes with various pre-trained role applications such as Xiaohongshu writer, English translation master, Socrates, Confucius, Steve Jobs, and weekly report assistant to meet various chat and application needs. Users can enjoy features like Suno Wensheng music, integration with MidJourney/Stable Diffusion AI drawing, personal WeChat QR code for payment, built-in Alipay and WeChat payment functions, support for various membership packages and point card purchases, and plugin API integration for developing powerful plugins using large language model functions.

ChatGPT-Next-Web

ChatGPT Next Web is a well-designed cross-platform ChatGPT web UI tool that supports Claude, GPT4, and Gemini Pro models. It allows users to deploy their private ChatGPT applications with ease. The tool offers features like one-click deployment, compact client for Linux/Windows/MacOS, compatibility with self-deployed LLMs, privacy-first approach with local data storage, markdown support, responsive design, fast loading speed, prompt templates, awesome prompts, chat history compression, multilingual support, and more.

MathModelAgent

MathModelAgent is an agent designed specifically for mathematical modeling tasks. It automates the process of mathematical modeling and generates a complete paper that can be directly submitted. The tool features automatic problem analysis, code writing, error correction, and paper writing. It supports various models, offers low costs, and allows customization through prompt inject. The tool is ideal for individuals or teams working on mathematical modeling projects.

himarket

HiMarket is an out-of-the-box AI open platform solution that can be used to build enterprise-level AI capability markets and developer ecosystem centers. It consists of three core components tailored to different roles within the enterprise: 1. AI open platform management backend (for administrators/operators) for easy packaging of diverse AI capabilities such as model services, MCP Server, Agent, etc., into standardized 'AI products' in API form with comprehensive documentation and examples for one-click publishing to the portal. 2. AI open platform portal (for developers/internal users) as a 'storefront' for developers to complete registration, create consumers, obtain credentials, browse and subscribe to AI products, test online, and monitor their own call status and costs clearly. 3. AI Gateway: As a subproject of the Higress community, the Higress AI Gateway carries out all AI call authentication, security, flow control, protocol conversion, and observability capabilities.

midjourney-proxy

Midjourney-proxy is a proxy for the Discord channel of MidJourney, enabling API-based calls for AI drawing. It supports Imagine instructions, adding image base64 as a placeholder, Blend and Describe commands, real-time progress tracking, Chinese prompt translation, prompt sensitive word pre-detection, user-token connection to WSS, multi-account configuration, and more. For more advanced features, consider using midjourney-proxy-plus, which includes Shorten, focus shifting, image zooming, local redrawing, nearly all associated button actions, Remix mode, seed value retrieval, account pool persistence, dynamic maintenance, /info and /settings retrieval, account settings configuration, Niji bot robot, InsightFace face replacement robot, and an embedded management dashboard.

HyperChat

HyperChat is an open Chat client that utilizes various LLM APIs to enhance the Chat experience and offer productivity tools through the MCP protocol. It supports multiple LLMs like OpenAI, Claude, Qwen, Deepseek, GLM, Ollama. The platform includes a built-in MCP plugin market for easy installation and also allows manual installation of third-party MCPs. Features include Windows and MacOS support, resource support, tools support, English and Chinese language support, built-in MCP client 'hypertools', 'fetch' + 'search', Bot support, Artifacts rendering, KaTeX for mathematical formulas, WebDAV synchronization, and a MCP plugin market. Future plans include permission pop-up, scheduled tasks support, Projects + RAG support, tools implementation by LLM, and a local shell + nodejs + js on web runtime environment.

Juggle

Juggle is a low-code tool for interface orchestration, which can quickly orchestrate simple APIs into a complex interface. The orchestrated interface can be directly used by the front end, greatly improving development efficiency and reducing development costs.

For similar tasks

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

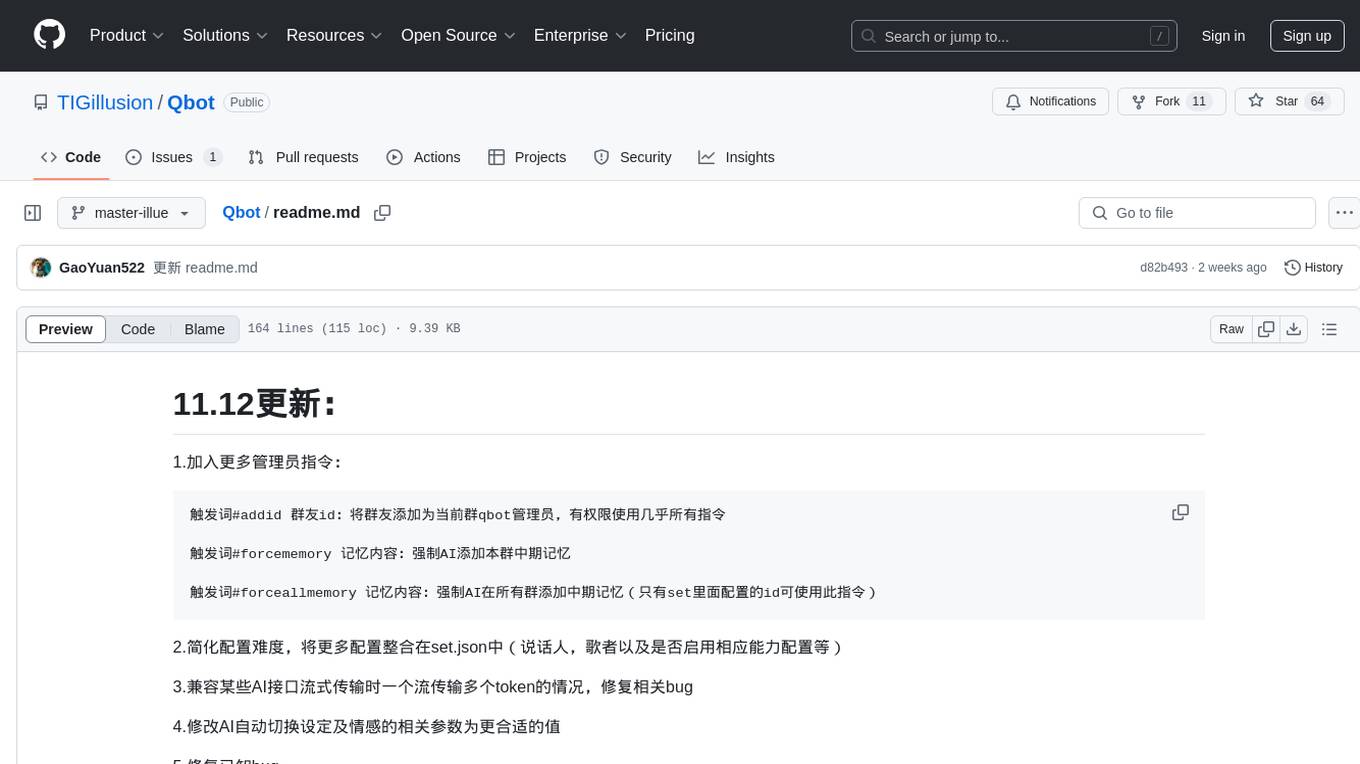

Qbot

Qbot is an open-source project designed to help users quickly build their own QQ chatbot. The bot deployed using this project has various capabilities, including intelligent sentence segmentation, intent recognition, voice and drawing replies, autonomous selection of when to play local music, and decision-making on sending emojis. Qbot leverages other open-source projects and allows users to customize triggers, system prompts, chat models, and more through configuration files. Users can modify the Qbot.py source code to tailor the bot's behavior. The project requires NTQQ and LLonebot's NTQQ plugin for deployment, along with additional configurations for triggers, system prompts, and chat models. Users can start the bot by running Qbot.py after installing necessary libraries and ensuring the NTQQ is running. Qbot also supports features like sending music from the data/smusic folder and emojis based on emotions. Local voice synthesis can be deployed for voice outputs. Qbot provides commands like #reset to clear short-term memory and addresses common issues like program crashes due to encoding format, message sending/receiving failures, voice synthesis failures, and connection issues. Users are encouraged to give the project a star if they find it useful.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

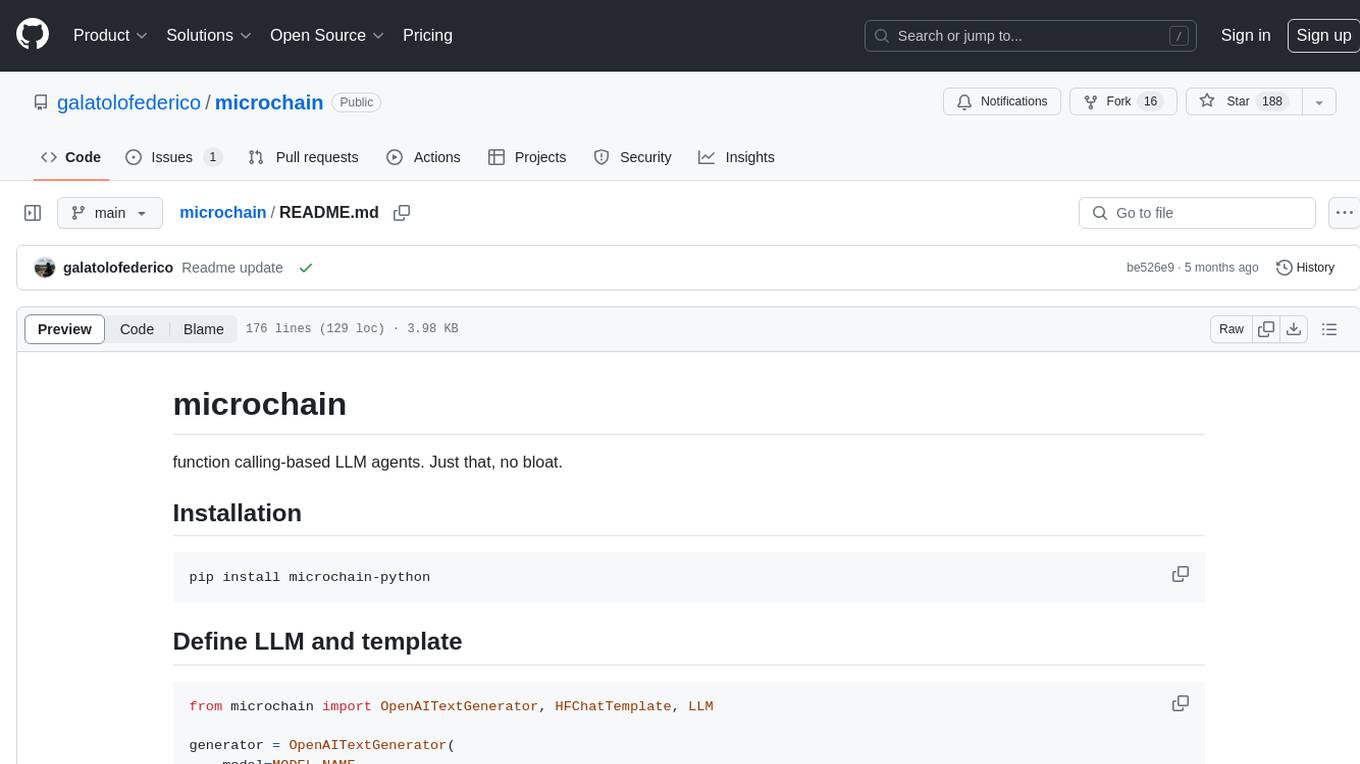

microchain

Microchain is a function calling-based LLM agents tool with no bloat. It allows users to define LLM and templates, use various functions like Sum and Product, and create LLM agents for specific tasks. The tool provides a simple and efficient way to interact with OpenAI models and create conversational agents for various applications.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.