Qbot

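Quickly deploy a large-language-model QQ bot that gives the AI a very high degree of self-directed control. It comes with web access, AI-driven prompt switching, AI-driven mid-term memory, long-term memory, and AI-decided segmented replies, and can be extended with voice, drawing, and full-catalog song covers.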

Stars: 64

Qbot is an open-source project designed to help users quickly build their own QQ chatbot. The bot deployed using this project has various capabilities, including intelligent sentence segmentation, intent recognition, voice and drawing replies, autonomous selection of when to play local music, and decision-making on sending emojis. Qbot leverages other open-source projects and allows users to customize triggers, system prompts, chat models, and more through configuration files. Users can modify the Qbot.py source code to tailor the bot's behavior. The project requires NTQQ and LLonebot's NTQQ plugin for deployment, along with additional configurations for triggers, system prompts, and chat models. Users can start the bot by running Qbot.py after installing the necessary libraries and ensuring that NTQQ is running. Qbot also supports features like sending music from the data/smusic folder and emojis based on emotions. Local voice synthesis can be deployed for voice outputs. Qbot provides commands like #reset to clear short-term memory and addresses common issues like program crashes due to encoding format, message sending/receiving failures, voice synthesis failures, and connection issues. Users are encouraged to give the project a star if they find it useful.

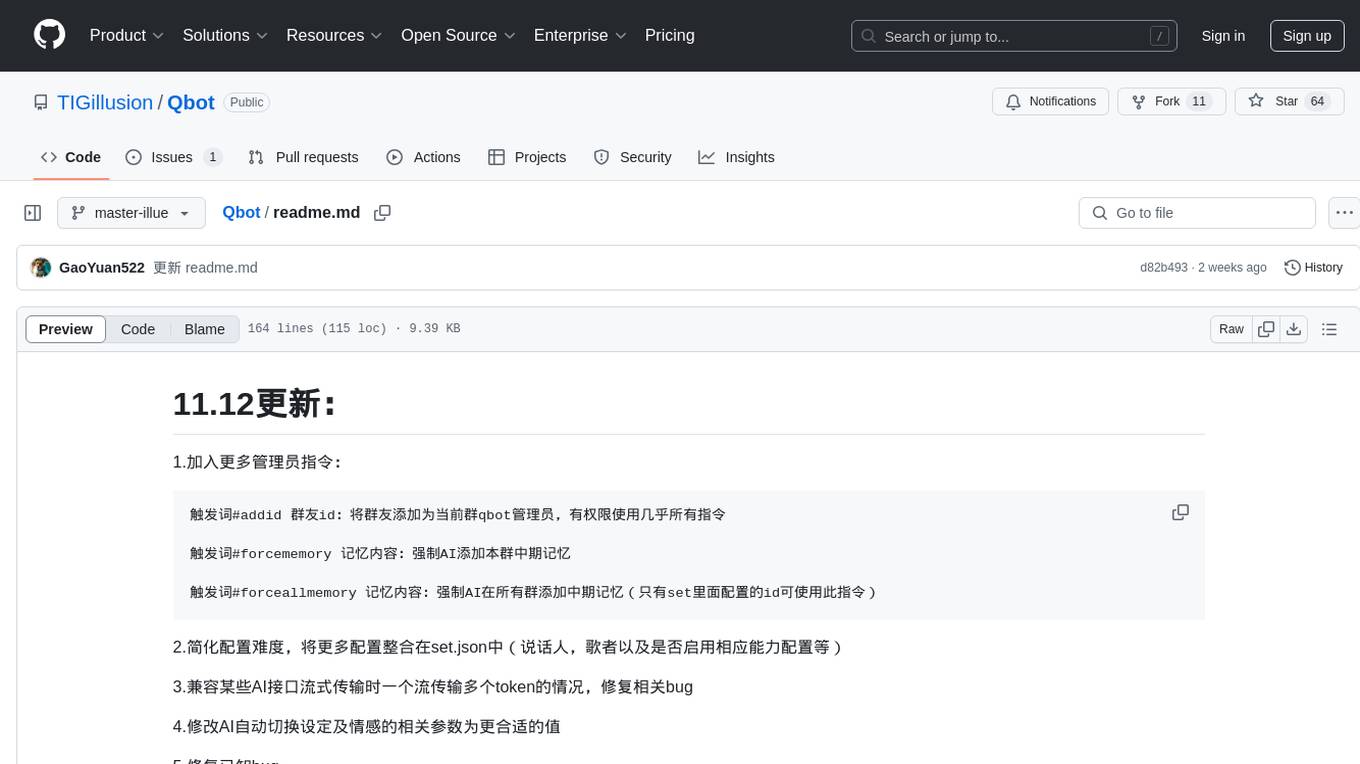

README:

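1. Added more administrator commands:

[trigger word]#addid [member id]: adds a group member as a Qbot administrator for the current group, with permission to use almost all commands

[trigger word]#forcememory [memory content]: forces the AI to add a mid-term memory for this group

[trigger word]#forceallmemory [memory content]: forces the AI to add a mid-term memory in all groups (only the ids configured in set can use this command)

2. Simplified configuration by consolidating more settings into set.json (speaker, singer, whether each capability is enabled, and so on)

3. Added compatibility with AI APIs whose streaming responses carry several tokens in a single chunk, and fixed the related bugs

4. Tuned the parameters for the AI's automatic persona and emotion switching to more suitable values

5. Fixed known bugs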
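The administrator commands above share one shape: a trigger word, `#`, a command name, and an argument. A minimal sketch of parsing that shape follows. The command names are taken from the changelog; the function name, the dataclass, and the assumption that the message must begin with the trigger word are illustrative and not taken from Qbot.py.

```python
from dataclasses import dataclass
from typing import Optional

# Command names come from the changelog; everything else here is illustrative.
ADMIN_COMMANDS = ("addid", "forcememory", "forceallmemory")


@dataclass
class AdminCommand:
    name: str      # e.g. "addid"
    argument: str  # text after the command name, e.g. a member id


def parse_admin_command(message: str, triggers: list[str]) -> Optional[AdminCommand]:
    """Parse '<trigger>#<name> <argument>'; return None if the message does not match."""
    for trigger in triggers:
        prefix = trigger + "#"
        if not message.startswith(prefix):
            continue
        # Split the remainder at the first space: command name, then its argument.
        name, _, argument = message[len(prefix):].partition(" ")
        if name in ADMIN_COMMANDS:
            return AdminCommand(name=name, argument=argument.strip())
    return None
```

For example, `parse_admin_command("qbot#addid 123456", ["qbot"])` yields a command named `addid` with argument `123456`. Permission checks (who is an administrator, and who may use `#forceallmemory`) would sit on top of this and are not shown.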

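1. Added administrator features and commands: fill in the list of administrator QQ numbers in set.json. The commands are "[trigger word]#mood [emotion]" (sets the AI's emotion for this group; emotions can be edited or added in the system persona section of set) and "[trigger word]#random [integer]" (changes the probability that the AI in this group replies without a trigger word; an integer less than or equal to 0 turns the AI off for the current group)

2. Added multi-emotion system prompts. The AI can switch emotions on its own, or an administrator can switch a single group's AI emotion mode manually with the "[trigger word]#mood [emotion]" command

3. Added a command for changing the per-group probability of replying without a trigger word. Setting it to 0 or a negative value turns off all AI capabilities in that group; setting it back to a positive value later resumes normal operation. This is the "[trigger word]#random [integer]" command

4. Slightly increased memory capacity and the number of context turns, and capped the total amount of long-term memory

5. Fixed a bug when switching emotions in private chat

6. In private chat, the singing feature now retrieves song files from the audio produced by covers in group chats, so any song already sung in a group can also be sung in private chat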
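The `#random` rule above can be read as a small decision function. The sketch below is one way to express it, not the project's code: it assumes the integer is a percentage between 0 and 100 (the changelog does not state the unit), and the function name and parameters are hypothetical.

```python
import random


def should_reply(message: str, triggers: list[str], probability: int,
                 rng: random.Random) -> bool:
    """Decide whether the bot answers a group message.

    `probability` is ASSUMED to be a percentage (0-100) set via '#random'.
    """
    if probability <= 0:
        # Zero or negative switches the AI off for this group entirely.
        return False
    if any(trigger in message for trigger in triggers):
        # A message containing a trigger word always gets a reply.
        return True
    # No trigger word: reply with the configured chance.
    return rng.random() * 100 < probability
```

Passing the random generator in as a parameter keeps the function easy to test with a fixed seed.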

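1. Added compatibility with the drawing model from 智谱清言 (Zhipu Qingyan), which can be used for free

2. Added a fallback mechanism for the model team: the first model in the team serves as the fallback, covering cases where a faulty model in the team occasionally leaves the AI without a reply. The first model should therefore be chosen for stability

3. New feature, AI song covers: a one-click AI cover pipeline in which the AI automatically fetches a track from NetEase Cloud Music, preprocesses it, and covers it with svc (the AI also decides when to trigger this; it applies only to group chats, but you can modify the code to extend it to private chats). The feature requires downloading a tool bundle: https://pan.quark.cn/s/ecae6ee123b4 (After downloading, configure or train it following the usual svc configuration or training procedure, then run 启动服务端.bat in the folder, and finally change the singer in the qbot source code to the matching svc singer name. Trained models go in the logs folder of the cover bundle; see illue under logs for the directory structure.) [If you get an error about the missing "wcwidth" library, open cmd in workenv inside the cover bundle and run python -m pip install wcwidth]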

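1. Adopted a model team: you can list several models, which are called according to their weights. One high-performance model plus two cheap ones is recommended; this keeps capability up while saving cost, and it also avoids the templated replies that a single large model tends to produce

2. The AI can send songs from the data/smusic folder when it judges the moment appropriate [replaced by the AI cover feature in the 10.25 update]

3. Multiple trigger words are allowed (configured in set.json)

4. Multiple blocked names are allowed (configured in set.json)

5. More options are configurable in set.json

6. Added AI-driven, self-summarized mid-term memory (noticeably effective) [写入记忆]

7. The AI may decline to answer irrelevant questions [pass]

8. file.py is integrated into Qbot and no longer needs to be started manually

9. The AI sends sticker images on its own, based on need and the matching emotion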
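The model team idea (weighted selection, with the first model as a fallback) can be sketched as follows. This is an illustration under assumptions, not the code in Qbot.py: the `weight` field name, the `call_model` callback, and the rule that an empty reply counts as a failure are all hypothetical.

```python
import random
from typing import Callable


def ask_model_team(models: list[dict], prompt: str,
                   call_model: Callable[[dict, str], str],
                   rng: random.Random) -> str:
    """Pick one model by weight; if it fails or returns nothing, retry with models[0]."""
    weights = [m["weight"] for m in models]  # "weight" is an assumed field name
    chosen = rng.choices(models, weights=weights, k=1)[0]
    try:
        reply = call_model(chosen, prompt)
        if reply:
            return reply
    except Exception:
        pass  # fall through to the fallback model
    # The first model in the list is the fallback, so it should be the most stable one.
    return call_model(models[0], prompt)
```

With one strong model at weight 1 and two cheaper models at higher weights, most requests go to the cheap models while the stable first model catches their failures.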

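Thank you for using this open-source project. It aims to help you quickly build a QQ bot of your own.

A QQ bot deployed with this project has the following capabilities:

1. Intelligent segmentation: the AI tags its own output with segmentation markers so that replies are split sensibly. This keeps long replies complete while avoiding a single long block of text

2. Intelligent intent detection: the AI tags its output so that different kinds of reply content are handled by dedicated functions, for example text-to-speech or AI drawing

3. Voice replies, AI drawing replies, and other common reply forms, for more varied responses

4. The AI chooses or decides on its own when to play local songs

5. The AI decides on its own when to send stickers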
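Capabilities 1 and 2 both rely on the model marking up its own output with tags that the program then interprets. A minimal sketch of that pattern is shown here. The tag strings `[break]`, `[voice]`, and `[draw]` are hypothetical placeholders, not the tags Qbot actually uses, and the function name is invented for illustration.

```python
import re

SEGMENT_TAG = "[break]"          # hypothetical segmentation tag
INTENT_TAGS = ("voice", "draw")  # hypothetical intent tags


def split_reply(raw: str) -> list[tuple[str, str]]:
    """Split a tagged model reply into (kind, content) pieces.

    kind is 'text' for plain segments, or the intent tag name otherwise.
    """
    pieces = []
    for segment in raw.split(SEGMENT_TAG):
        segment = segment.strip()
        if not segment:
            continue
        # A leading "[tag]" marks a segment that needs special handling.
        match = re.match(r"\[(\w+)\]\s*(.*)", segment, flags=re.DOTALL)
        if match and match.group(1) in INTENT_TAGS:
            pieces.append((match.group(1), match.group(2).strip()))
        else:
            pieces.append(("text", segment))
    return pieces
```

Each piece can then be sent as its own message: plain text directly, `voice` pieces through speech synthesis, and `draw` pieces through the drawing model.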

This project stands on the shoulders of giants and uses several other open-source projects. Consider giving those projects a star too~

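- First, download and install NTQQ (a new-architecture QQ desktop client): NTQQ download link

- Install LLonebot's NTQQ plugin: NTQQ plugin link. Installation instructions: click to view. On Windows, make sure to download a recent exe with the following name:

- Configure the LLonebot plugin: once the plugin is installed, open NTQQ and go to the plugin settings. Fill in all the fields (do not forget the port number), and remember to save!

- Configure: open this file

Explanation:

- triggers: the list of trigger words. Several can be set; a message containing any of them triggers a reply.

- system_prompt: the AI persona. Following the format of the default persona is recommended and gives better results.

- chat_models: the model team list. Each model entry needs a request API, a model name, and a request key. Do not make these up: check the model provider's official developer documentation and enter the official model name exactly, because this field determines which model is requested.

- The remaining options should be self-explanatory; the drawing model is configured the same way as the text models.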
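To make the three settings above concrete, here is a sketch of a configuration with a basic sanity check. Only the top-level keys `triggers`, `system_prompt`, and `chat_models` come from the description above; the field names inside each model entry (`api`, `model`, `key`, `weight`), the sample values, and the shape of `system_prompt` are assumptions, so check the set.json shipped with the project for the real layout.

```python
import json

# Inner field names and all values are placeholders for this sketch.
EXAMPLE_SET_JSON = """
{
  "triggers": ["qbot", "小Q"],
  "system_prompt": "You are a friendly group-chat assistant.",
  "chat_models": [
    {"api": "https://example.com/v1/chat/completions",
     "model": "example-model",
     "key": "sk-REPLACE-ME",
     "weight": 1}
  ]
}
"""


def validate_settings(settings: dict) -> list[str]:
    """Return a list of problems; an empty list means the basics are present."""
    problems = []
    if not settings.get("triggers"):
        problems.append("triggers must be a non-empty list")
    if not settings.get("system_prompt"):
        problems.append("system_prompt must not be empty")
    chat_models = settings.get("chat_models") or []
    if not chat_models:
        problems.append("chat_models must list at least one model")
    for i, model in enumerate(chat_models):
        for field in ("api", "model", "key"):
            if not model.get(field):
                problems.append(f"chat_models[{i}] is missing '{field}'")
    return problems


settings = json.loads(EXAMPLE_SET_JSON)
print(validate_settings(settings))  # prints []
```

Running a check like this before starting the bot catches a missing key or an empty model list early, instead of surfacing as a failed request later.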

- Modify:

  Open the Qbot.py source code editing page.

- Open

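- Start:

Open a command prompt (Win+R, type cmd, press Enter) and cd to the Qbot-main root directory.

Optional, recommended for experienced users and developers: use a venv to avoid polluting the global environment.

cmd:

Create the venv: python -m venv .venv

Activate the venv:

.venv\Scripts\activate.bat

PowerShell:

Activate the venv:

.venv/Scripts/activate.ps1

(If PowerShell reports that running scripts is not allowed) change the script execution policy for this PowerShell instance:

Set-ExecutionPolicy RemoteSigned -Scope Process

Required: install the necessary libraries:

python -m pip install -r requirements.txt

Keep NTQQ running, then run

python Qbot.py

to complete startup.

(If you use a venv, activate it first.)

(If you run into problems, contact the developer 幻日, QQ: 2141073363; for problems with the optional venv setup, contact 观赏鱼, QQ: 2082895869)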

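Put music in the data/smusic folder and the AI will pick a suitable song from it and send it when appropriate. Keep the collection small (fewer than 20 tracks), otherwise the AI may not pick songs as instructed. [Replaced by the AI cover feature in the 10.25 update]

Put stickers in the folder under data/image that matches the emotion. There are currently five emotions: happy, angry, sad, bored, and fear. You can add your own stickers to these folders; some file formats may not be supported.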
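The sticker layout described above (one folder per emotion under data/image) lends itself to a simple lookup. The sketch below is an illustration, not the project's implementation: the five emotion names come from the text, while the function name and the choice to pick uniformly at random are assumptions.

```python
import random
from pathlib import Path
from typing import Optional

# The five emotion folder names listed in the README.
EMOTIONS = ("happy", "angry", "sad", "bored", "fear")


def pick_sticker(emotion: str, image_root: Path, rng: random.Random) -> Optional[Path]:
    """Return a random file from image_root/<emotion>, or None if unknown or empty."""
    if emotion not in EMOTIONS:
        return None
    folder = image_root / emotion
    if not folder.is_dir():
        return None
    # Sort first so the result depends only on the seed, not on directory order.
    files = sorted(p for p in folder.iterdir() if p.is_file())
    return rng.choice(files) if files else None
```

A call such as `pick_sticker("happy", Path("data/image"), random.Random())` returns one file from data/image/happy, or `None` when the folder is missing or empty.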

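My server may not be able to handle a large volume of speech synthesis requests, so you need to deploy local speech synthesis yourself. You can use the speech synthesis service from the AI desktop pet (the 箱庭GPT-Sovits bundle with the 花火 model; double-click starttts.bat). The two use the same API format.

箱庭GPT-Sovits bundle project · 箱庭GPT-Sovits bundle documentation

Once it is deployed, start its backend and create an empty folder at [Qbot root]\data\voice. The project will then automatically use local speech synthesis for voice output.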
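Creating the data\voice folder by hand is enough, but it can also be scripted. A minimal sketch, assuming you pass in the Qbot root directory yourself; the function name is hypothetical:

```python
from pathlib import Path


def ensure_voice_dir(qbot_root: Path) -> Path:
    """Create <qbot_root>/data/voice if it does not exist and return its path."""
    voice_dir = qbot_root / "data" / "voice"
    # parents=True also creates data/ if needed; exist_ok=True makes reruns harmless.
    voice_dir.mkdir(parents=True, exist_ok=True)
    return voice_dir
```

For example, `ensure_voice_dir(Path("."))` run from the Qbot root creates the folder on first use and does nothing on later runs.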

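When drawing, speech synthesis, or another service that sends files to QQ is enabled, you do not need to start the separate file.py program alongside it; it is already built into Qbot.

Note: wait until Qbot.py has finished loading its memories before starting, otherwise Qbot.py may be unable to send or receive messages!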

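- #reset: clears the short-term memory of the current chat (group or private).

- Program exits immediately on launch: most likely set.json has the wrong encoding, probably because frequent editing in Windows Notepad converted it to UTF-8 with BOM. Install VS Code, open the JSON file, click "UTF-8 with BOM" in the bottom-right corner, choose "Save with Encoding" from the options at the top, and select UTF-8.

- Messages cannot be sent or received, with no error: check that the QQ plugin settings are complete, especially the request address.

- Speech synthesis fails: no known cause; closing and restarting is recommended.

- Speech synthesis reports that it does not need to be started again (common for desktop pet users): terminate all background Python processes and retry (recommended), or edit starttts.bat and remove its background process check (not recommended).

- "No connection could be made because the target machine actively refused it": the request count may have exceeded the per-minute or per-day limit. Wait a while and try again.

- break limitless turn: normal behavior. This is code that stops AIs from flooding the chat with each other.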
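For the set.json encoding crash in the list above, the byte order mark can also be removed with a few lines of Python instead of an editor. This is a sketch using only the standard library; the function names are hypothetical, and nothing here is taken from Qbot.py.

```python
import codecs
import json
from pathlib import Path


def strip_bom(path: Path) -> bool:
    """Rewrite the file without a leading UTF-8 BOM. Return True if a BOM was removed."""
    data = path.read_bytes()
    if not data.startswith(codecs.BOM_UTF8):
        return False
    path.write_bytes(data[len(codecs.BOM_UTF8):])
    return True


def load_json_any_utf8(path: Path) -> dict:
    """Load JSON whether or not the file begins with a BOM.

    The 'utf-8-sig' codec skips a leading BOM if present and otherwise
    behaves like plain UTF-8.
    """
    return json.loads(path.read_text(encoding="utf-8-sig"))
```

Calling `strip_bom(Path("set.json"))` once fixes the file on disk. Reading with `utf-8-sig` is the alternative if you control the loading code.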

If you find this project useful, give it a star~


Alternative AI tools for Qbot

Similar Open Source Tools

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

screenpipe

Screenpipe is an open source application that turns your computer into a personal AI, capturing screen and audio to create a searchable memory of your activities. It allows you to remember everything, search with AI, and keep your data 100% local. The tool is designed for knowledge workers, developers, researchers, people with ADHD, remote workers, and anyone looking for a private, local-first alternative to cloud-based AI memory tools.

ccprompts

ccprompts is a collection of ~70 Claude Code commands for software development workflows with agent generation capabilities. It includes safety validation and can be used directly with Claude Code or adapted for specific needs. The agent template system provides a wizard for creating specialized sub-agents (e.g., security auditors, systems architects) with standardized formatting and proper tool access. The repository is under active development, so caution is advised when using it in production environments.

chitu

Chitu is a high-performance inference framework for large language models, focusing on efficiency, flexibility, and availability. It supports various mainstream large language models, including DeepSeek, LLaMA series, Mixtral, and more. Chitu integrates latest optimizations for large language models, provides efficient operators with online FP8 to BF16 conversion, and is deployed for real-world production. The framework is versatile, supporting various hardware environments beyond NVIDIA GPUs. Chitu aims to enhance output speed per unit computing power, especially in decoding processes dependent on memory bandwidth.

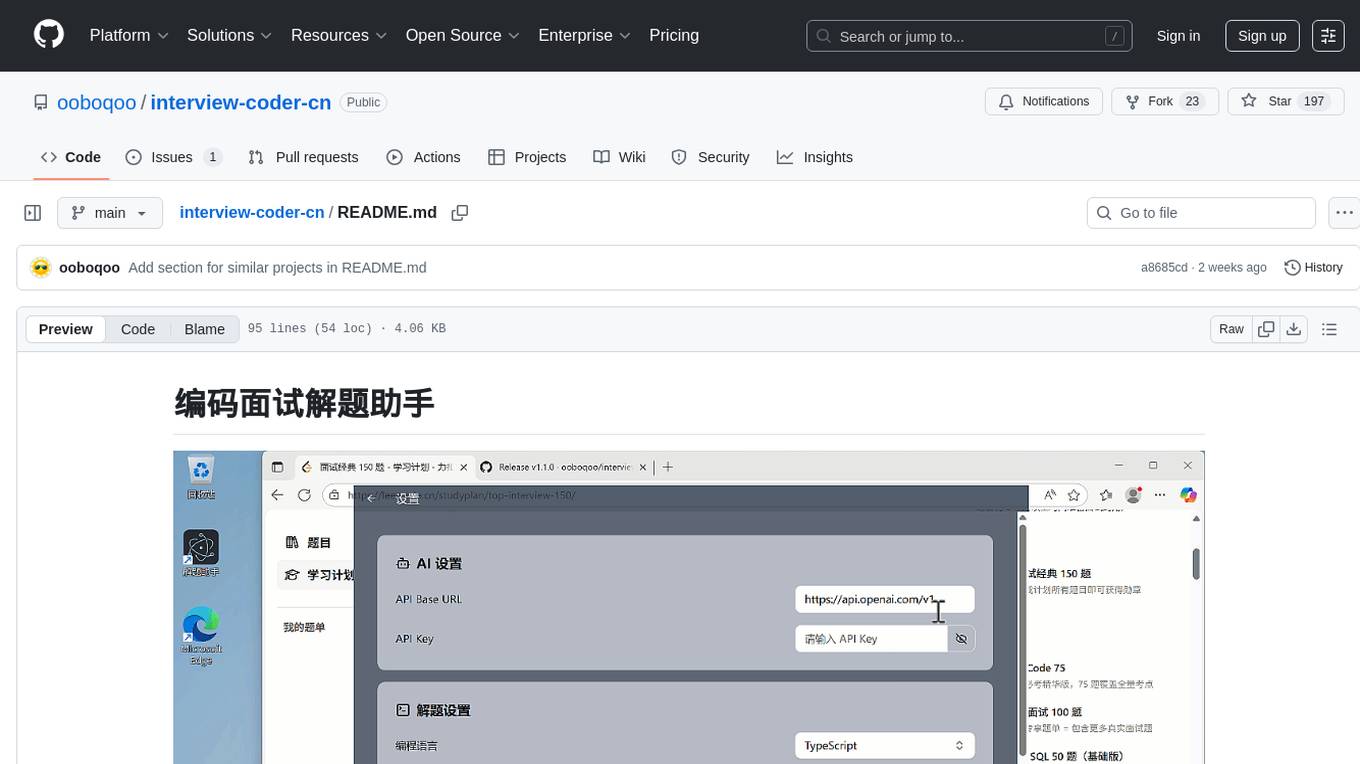

interview-coder-cn

This is a coding problem-solving assistant for Chinese users, tailored to the domestic AI ecosystem, simple and easy to use. It provides real-time problem-solving ideas and code analysis for coding interviews, avoiding detection during screen sharing. Users can also extend its functionality for other scenarios by customizing prompt words. The tool supports various programming languages and has stealth capabilities to hide its interface from interviewers even when screen sharing.

LlamaBot

LlamaBot is an open-source AI coding agent that rapidly builds MVPs, prototypes, and internal tools. It works for non-technical founders, product teams, and engineers by generating working prototypes, embedding AI directly into the app, and running real workflows. Unlike typical codegen tools, LlamaBot can embed directly in your app and run real workflows, making it ideal for collaborative software building where founders guide the vision, engineers stay in control, and AI fills the gap. LlamaBot is built for moving ideas fast, allowing users to prototype an AI MVP in a weekend, experiment with workflows, and collaborate with teammates to bridge the gap between non-technical founders and engineering teams.

OpenManus

OpenManus is an open-source project aiming to replicate the capabilities of the Manus AI agent, known for autonomously executing complex tasks like travel planning and stock analysis. The project provides a modular, containerized framework using Docker, Python, and JavaScript, allowing developers to build, deploy, and experiment with a multi-agent AI system. Features include collaborative AI agents, Dockerized environment, task execution support, tool integration, modular design, and community-driven development. Users can interact with OpenManus via CLI, API, or web UI, and the project welcomes contributions to enhance its capabilities.

Trellis

Trellis is an all-in-one AI framework and toolkit designed for Claude Code, Cursor, and iFlow. It offers features such as auto-injection of required specs and workflows, auto-updated spec library, parallel sessions for running multiple agents simultaneously, team sync for sharing specs, and session persistence. Trellis helps users educate their AI, work on multiple features in parallel, define custom workflows, and provides a structured project environment with workflow guides, spec library, personal journal, task management, and utilities. The tool aims to enhance code review, introduce skill packs, integrate with broader tools, improve session continuity, and visualize progress for each agent.

ai-flow

AI Flow is an open-source, user-friendly UI application that empowers you to seamlessly connect multiple AI models together, specifically leveraging the capabilities of multiple AI APIs such as OpenAI, StabilityAI and Replicate. In a nutshell, AI Flow provides a visual platform for crafting and managing AI-driven workflows, thereby facilitating diverse and dynamic AI interactions.

PageTalk

PageTalk is a browser extension that enhances web browsing by integrating Google's Gemini API. It allows users to select text on any webpage for AI analysis, translation, contextual chat, and customization. The tool supports multi-agent system, image input, rich content rendering, PDF parsing, URL context extraction, personalized settings, chat export, text selection helper, and proxy support. Users can interact with web pages, chat contextually, manage AI agents, and perform various tasks seamlessly.

Alice

Alice is an open-source AI companion designed to live on your desktop, providing voice interaction, intelligent context awareness, and powerful tooling. More than a chatbot, Alice is emotionally engaging and deeply useful, assisting with daily tasks and creative work. Key features include voice interaction with natural-sounding responses, memory and context management, vision and visual output capabilities, computer use tools, function calling for web search and task scheduling, wake word support, dedicated Chrome extension, and flexible settings interface. Technologies used include Vue.js, Electron, OpenAI, Go, hnswlib-node, and more. Alice is customizable and offers a dedicated Chrome extension, wake word support, and various tools for computer use and productivity tasks.

replexica

Replexica is an i18n toolkit for React, to ship multi-language apps fast. It doesn't require extracting text into JSON files, and uses AI-powered API for content processing. It comes in two parts: 1. Replexica Compiler - an open-source compiler plugin for React; 2. Replexica API - an i18n API in the cloud that performs translations using LLMs. (Usage based, has a free tier.) Replexica supports several i18n formats: 1. JSON-free Replexica compiler format; 2. .md files for Markdown content; 3. Legacy JSON and YAML-based formats.

OpenOutreach

OpenOutreach is a self-hosted, open-source LinkedIn automation tool designed for B2B lead generation. It automates the entire outreach process in a stealthy, human-like way by discovering and enriching target profiles, ranking profiles using ML for smart prioritization, sending personalized connection requests, following up with custom messages after acceptance, and tracking everything in a built-in CRM with web UI. It offers features like undetectable behavior, fully customizable Python-based campaigns, local execution with CRM, easy deployment with Docker, and AI-ready templating for hyper-personalized messages.

DeepSeekAI

DeepSeekAI is a browser extension plugin that allows users to interact with AI by selecting text on web pages and invoking the DeepSeek large model to provide AI responses. The extension enhances browsing experience by enabling users to get summaries or answers for selected text directly on the webpage. It features context text selection, API key integration, draggable and resizable window, AI streaming replies, Markdown rendering, one-click copy, re-answer option, code copy functionality, language switching, and multi-turn dialogue support. Users can install the extension from Chrome Web Store or Edge Add-ons, or manually clone the repository, install dependencies, and build the extension. Configuration involves entering the DeepSeek API key in the extension popup window to start using the AI-driven responses.

For similar tasks

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

unsloth

Unsloth is a tool that allows users to fine-tune large language models (LLMs) 2-5x faster with 80% less memory. It is a free and open-source tool that can be used to fine-tune LLMs such as Gemma, Mistral, Llama 2-5, TinyLlama, and CodeLlama 34b. Unsloth supports 4-bit and 16-bit QLoRA / LoRA fine-tuning via bitsandbytes. It also supports DPO (Direct Preference Optimization), PPO, and Reward Modelling. Unsloth is compatible with Hugging Face's TRL, Trainer, Seq2SeqTrainer, and Pytorch code. It is also compatible with NVIDIA GPUs since 2018+ (minimum CUDA Capability 7.0).

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

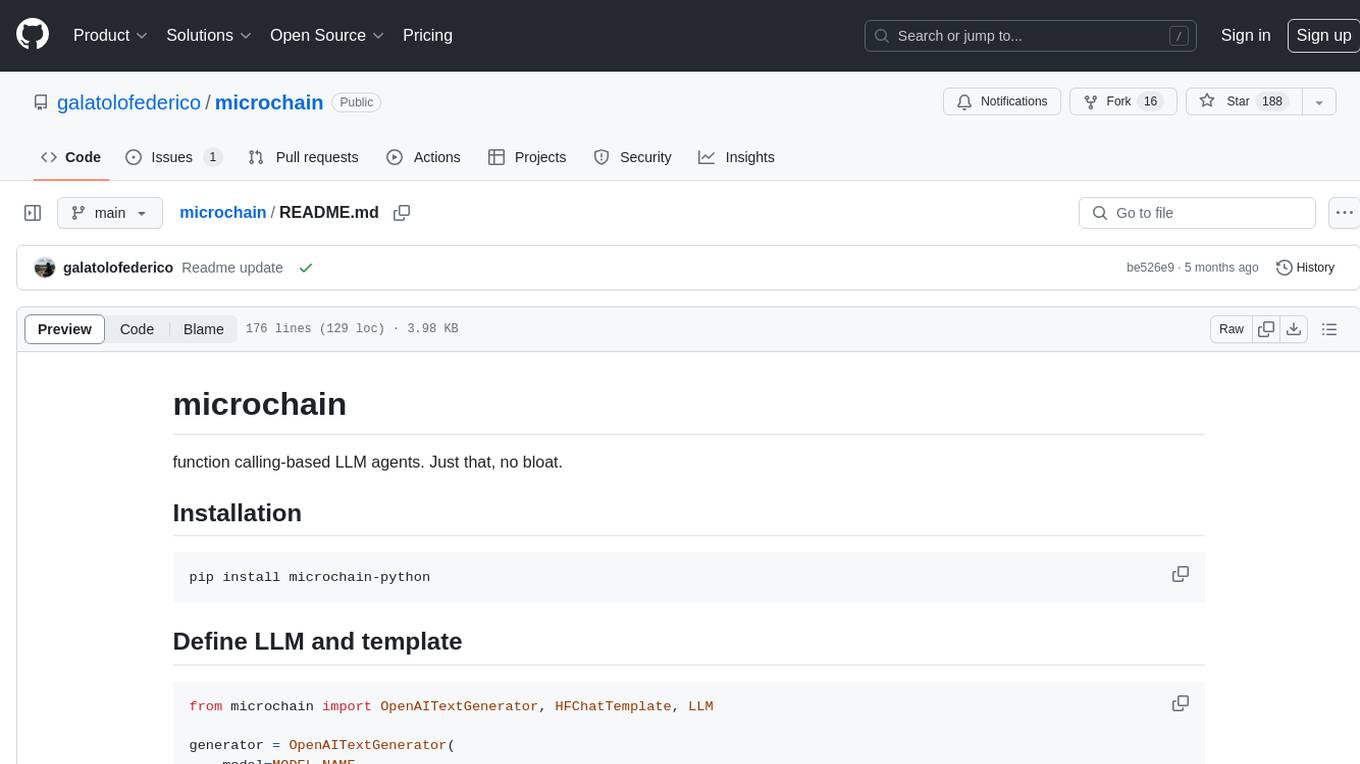

microchain

Microchain is a function calling-based LLM agents tool with no bloat. It allows users to define LLM and templates, use various functions like Sum and Product, and create LLM agents for specific tasks. The tool provides a simple and efficient way to interact with OpenAI models and create conversational agents for various applications.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.