knowledge

(Chinese Only) Everything I know: DevOps & CloudNative, Linux, Embedded, Homelab, Music, Blockchain, AI, etc.

Stars: 479

This repository serves as a personal knowledge base for the owner's reference and use. It covers a wide range of topics including cloud-native operations, Kubernetes ecosystem, networking, cloud services, telemetry, CI/CD, electronic engineering, hardware projects, operating systems, homelab setups, high-performance computing applications, openwrt router usage, programming languages, music theory, blockchain, distributed systems principles, and various other knowledge domains. To simplify maintenance, content is periodically refined and then published on the owner's blog.

README:

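A personal knowledge base, mainly for my own reference. It is not a tutorial, and correctness is not guaranteed!

For ease of maintenance, content in this repository may be reorganized and polished once it matures, then published on my blog at https://thiscute.world/. Only a link will be kept here.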

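Main contents (roughly ordered by how much material each topic has):

- Cloud-native operations

  - Kubernetes ecosystem: deployment, configuration, components, and usage notes

  - Networking: Kubernetes cluster networking, Linux networking (still learning)

  - Cloud services: usage notes for AWS/GCP/Alibaba Cloud/...

  - Telemetry: monitoring (Prometheus+Grafana), logging (ELK/Loki), distributed tracing

  - CI/CD: Jenkins/GitLabCI/ArgoWorkflow, etc.

- Electronic engineering (a strong recent interest; currently climbing the tech tree)

  - Things to do with various chips/boards: Raspberry Pi, RK3588, STM32, ESP32

  - Various fun projects: drones, smart cars, smart robotic arms, even robots

- Operating systems: Linux, NixOS, KVM virtualization, etc.

- Homelab: notes on how I use my Homelab

  - Hardware configuration, network topology, purchase dates, purchase channels and prices

  - Things to do with a PVE cluster

  - What all this compute can be used for: K3s clusters, distributed monitoring, HomeAssistant, NAS, testing new projects in the cloud-native space...

  - openwrt router usage

- Programming language study notes: Go/Python/C/Rust/...

- Music: music theory, harmonica/bamboo flute, singing voice synthesis, arrangement (Reaper)

- Blockchain, distributed systems and their principles

- Machine learning/deep learning (apparently not started yet...)

- Various other topics I have dabbled in

The folder structure is the table of contents, so no separate index is listed here -_-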

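For well-known reasons, we often need to configure mirror sources for various systems, applications, and package managers to speed up downloads.

The main mirror sites are:

- Alibaba Cloud open source mirror site: in my experience, the fastest mirror for downloads within China.

  - Provides mirrors for ubuntu/debian/centos/alpine, as well as pypi/goproxy and other mainstream OSes and programming languages. Fairly complete.

- Tsinghua open source mirror: very complete and promptly updated.

  - However, it is slower than Alibaba Cloud and is sometimes down for maintenance.

  - Beijing Foreign Studies University mirror site: a sister site of the Tsinghua mirror. Because few people use it at the moment, it feels much faster than the Tsinghua mirror.

- USTC open source mirror: also very complete and quickly updated, but not stable enough.

  - A bit faster than the Tsinghua mirror, but down for maintenance more often. Some time ago it also took its pypi mirror offline because of funding issues.

- Tencent mirror: launched only recently; I have not used it yet.

My top recommendation is the Beijing Foreign Studies University mirror site: stable, reliable, and fast.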
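As a rough illustration of pointing a package manager at one of these mirrors, the sketch below builds a `pip install` command that uses pip's `--index-url` option. The helper name `pip_install_command` and the `PYPI_MIRRORS` table are hypothetical, and the index URLs are assumptions that should be checked against each mirror's own help page before use.

```python
import sys

# Assumed PyPI index URLs for the mirrors discussed above.
# Verify them on each mirror's help page; they may change over time.
PYPI_MIRRORS = {
    "bfsu": "https://mirrors.bfsu.edu.cn/pypi/web/simple",
    "tuna": "https://pypi.tuna.tsinghua.edu.cn/simple",
    "aliyun": "https://mirrors.aliyun.com/pypi/simple/",
}


def pip_install_command(package, mirror="bfsu"):
    """Return an argv list that installs `package` through the chosen mirror.

    Hypothetical helper: it only builds the command and does not run it.
    """
    if mirror not in PYPI_MIRRORS:
        raise KeyError(f"unknown mirror: {mirror!r}")
    return [
        sys.executable, "-m", "pip", "install",
        "--index-url", PYPI_MIRRORS[mirror],
        package,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch has no side effects.
    print(" ".join(pip_install_command("requests")))
```

The resulting list can be passed to `subprocess.run` to perform the install. Building the command as a list rather than a shell string avoids quoting problems.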

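For the DevOps/SRE field, you can mostly refer directly to the CNCF landscape: CNCF Cloud Native Interactive Landscape

A complete CS self-study guide (collecting the very best courses from around the world):

Low-level-oriented personal blogs (CSAPP notes):

- 不周山作品集: Knowledge is like Mount Buzhou; the day it becomes "complete" (周全) never comes, so keep learning for as long as you live.

Systematic SRE/DevOps documentation:

SRE/DevOps article collections:

Distributed systems design: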

- https://github.com/binhnguyennus/awesome-scalability

- https://github.com/Vonng/ddia

- https://github.com/donnemartin/system-design-primer

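Company/team blogs, covering all kinds of topics:

- 极客时间 (Geek Time) course 《10x 程序员工作法》

I need to learn how to collaborate efficiently in a team and improve productivity. (Work less overtime while still completing tasks at higher quality.)

- Domain-Driven Design

  - Method: Event Storming

- The Mythical Man-Month: on software project management

- The Pragmatic Programmer

- Peopleware

- Crucial Conversations: mastering how to communicate

  - Always take care to protect the other person's sense of safety, and always keep the purpose of the conversation in mind.

- Refactoring

- MacTalk-池建强的随想录: founder of 极客时间 (Geek Time), 45+

- 李凡希的 Blog:

- paste-markdown: a small official tool from GitHub; copy a sheet/table in and it is automatically converted to markdown

- domchristie/turndown: converts an entire HTML page to markdown, though table support seems to have some problems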

Ryan Yin's Knowledge © by Ryan Yin is licensed under CC BY-SA 4.0

Alternative AI tools for knowledge

Similar Open Source Tools

aiwechat-vercel

aiwechat-vercel is a tool that integrates AI capabilities into WeChat public accounts using Vercel functions. It requires minimal server setup, low entry barriers, and only needs a domain name that can be bound to Vercel, with almost zero cost. The tool supports various AI models, continuous Q&A sessions, chat functionality, system prompts, and custom commands. It aims to provide a platform for learning and experimentation with AI integration in WeChat public accounts.

hdu-cs-wiki

The HDU Computer Science Lecture Notes is a comprehensive guide designed to help students navigate through various challenges in the field of computer science. It covers topics such as programming languages, artificial intelligence, software development, and more. The notes provide insights on how to effectively utilize university time, balance grades with project experience, and make informed decisions regarding career paths. Created by a collaborative effort involving students, teachers, and industry experts, the lecture notes aim to serve as a guiding tool for individuals seeking guidance in the computer science domain.

jd_scripts

jd_scripts is a repository containing scripts for automating various tasks on the JD platform. The scripts provide instructions for setting up and using the tools to enhance user experience and efficiency in managing JD accounts and assets. Users can automate processes such as receiving notifications, redeeming rewards, participating in group purchases, and monitoring ticket availability. The repository also includes resources for optimizing performance and security measures to safeguard user accounts. With a focus on simplifying interactions with the JD platform, jd_scripts offers a comprehensive solution for maximizing benefits and convenience for JD users.

AI-Notes

AI-Notes is a repository dedicated to practical applications of artificial intelligence and deep learning. It covers concepts such as data mining, machine learning, natural language processing, and AI. The repository contains Jupyter Notebook examples for hands-on learning and experimentation. It explores the development stages of AI, from narrow artificial intelligence to general artificial intelligence and superintelligence. The content delves into machine learning algorithms, deep learning techniques, and the impact of AI on various industries like autonomous driving and healthcare. The repository aims to provide a comprehensive understanding of AI technologies and their real-world applications.

Awesome-Lists-and-CheatSheets

Awesome-Lists is a curated index of selected resources spanning various fields including programming languages and theories, web and frontend development, server-side development and infrastructure, cloud computing and big data, data science and artificial intelligence, product design, etc. It includes articles, books, courses, examples, open-source projects, and more. The repository categorizes resources according to the knowledge system of different domains, aiming to provide valuable and concise material indexes for readers. Users can explore and learn from a wide range of high-quality resources in a systematic way.

MaterialSearch

MaterialSearch is a tool for searching local images and videos using natural language. It provides functionalities such as text search for images, image search for images, text search for videos (providing matching video clips), image search for videos (searching for the segment in a video through a screenshot), image-text similarity calculation, and Pexels video search. The tool can be deployed through the source code or Docker image, and it supports GPU acceleration. Users can configure the tool through environment variables or a .env file. The tool is still under development, and configurations may change frequently. Users can report issues or suggest improvements through issues or pull requests.

Awesome-Lists

Awesome-Lists is a curated list of awesome lists across various domains of computer science and beyond, including programming languages, web development, data science, and more. It provides a comprehensive index of articles, books, courses, open source projects, and other resources. The lists are organized by topic and subtopic, making it easy to find the information you need. Awesome-Lists is a valuable resource for anyone looking to learn more about a particular topic or to stay up-to-date on the latest developments in the field.

Verbiverse

Verbiverse is a tool that uses a large language model to assist in reading PDFs and watching videos, aimed at improving language proficiency. It provides a more convenient and efficient way to use large models through predefined prompts, designed for those looking to enhance their language skills. The tool analyzes unfamiliar words and sentences in foreign language PDFs or video subtitles, providing better contextual understanding compared to traditional dictionary translations or ambiguous meanings. It offers features such as automatic loading of subtitles, word analysis by clicking or double-clicking, and a word database for collecting words. Users can run the tool on Windows x86_64 or ubuntu_22.04 x86_64 platforms by downloading the precompiled packages or by cloning the source code and setting up a virtual environment with Python. It is recommended to use a local model or smaller PDF files for testing due to potential token consumption issues with large files.

wp-ai-chat

The 'wp-ai-chat' repository is an open-source and free WordPress AI assistant plugin that enables various AI functionalities such as AI chat conversations, AI voice playback, AI article generation, AI article summarization, AI article translation, AI PPT generation, AI document analysis, and article content voice playback. It supports integration with multiple AI text interfaces and intelligent applications from platforms like Alibaba, Tencent, and ByteDance. Users can generate articles, summarize articles, translate articles, play article content via text-to-speech services, and customize AI models and prompts. The plugin requires WordPress 6.7.1 and PHP 8.0, and provides a front-end chat interface for logged-in users.

aidea

AIdea is an app that integrates mainstream large language models and drawing models, developed using Flutter. The code is completely open-source and supports various functions such as GPT-3.5, GPT-4 from OpenAI, Claude instant, Claude 2.1 from Anthropic, Gemini Pro and visual language models from Google, as well as various Chinese and open-source models. It also supports features like text-to-image, super-resolution, coloring black and white images, artistic fonts, artistic QR codes, and more.

YaneuraOu

YaneuraOu is the World's Strongest Shogi engine (AI player), winner of WCSC29 and other prestigious competitions. It is an educational and USI compliant engine that supports various features such as Ponder, MultiPV, and ultra-parallel search. The engine is known for its compatibility with different platforms like Windows, Ubuntu, macOS, and ARM. Additionally, YaneuraOu offers a standard opening book format, on-the-fly opening book support, and various maintenance commands for opening books. With a massive transposition table size of up to 33TB, YaneuraOu is a powerful and versatile tool for Shogi enthusiasts and developers.

WatchAlert

WatchAlert is a lightweight monitoring and alerting engine tailored for cloud-native environments, focusing on observability and stability themes. It provides comprehensive monitoring and alerting support, including AI-powered alert analysis for efficient troubleshooting. WatchAlert integrates with various data sources such as Prometheus, VictoriaMetrics, Loki, Elasticsearch, AliCloud SLS, Jaeger, Kubernetes, and different network protocols for monitoring and supports alert notifications via multiple channels like Feishu, DingTalk, WeChat Work, email, and custom hooks. It is optimized for cloud-native environments, easy to use, offers flexible alert rule configurations, and specializes in stability scenarios to help users quickly identify and resolve issues, providing a reliable monitoring and alerting solution to enhance operational efficiency and reduce maintenance costs.

poco-agent

Poco Agent is a cloud-based tool that provides a secure sandbox environment for running tasks without affecting the host machine. It offers a modern UI with mobile adaptability, easy configuration through Docker, and extensive capabilities with support for MCP protocol and custom skills. Users can run tasks asynchronously and schedule them, even when the web interface is closed. Additional features include a built-in browser for internet research and GitHub repository integration. Poco Agent aims to be a more secure, visually appealing, and user-friendly alternative to OpenClaw.

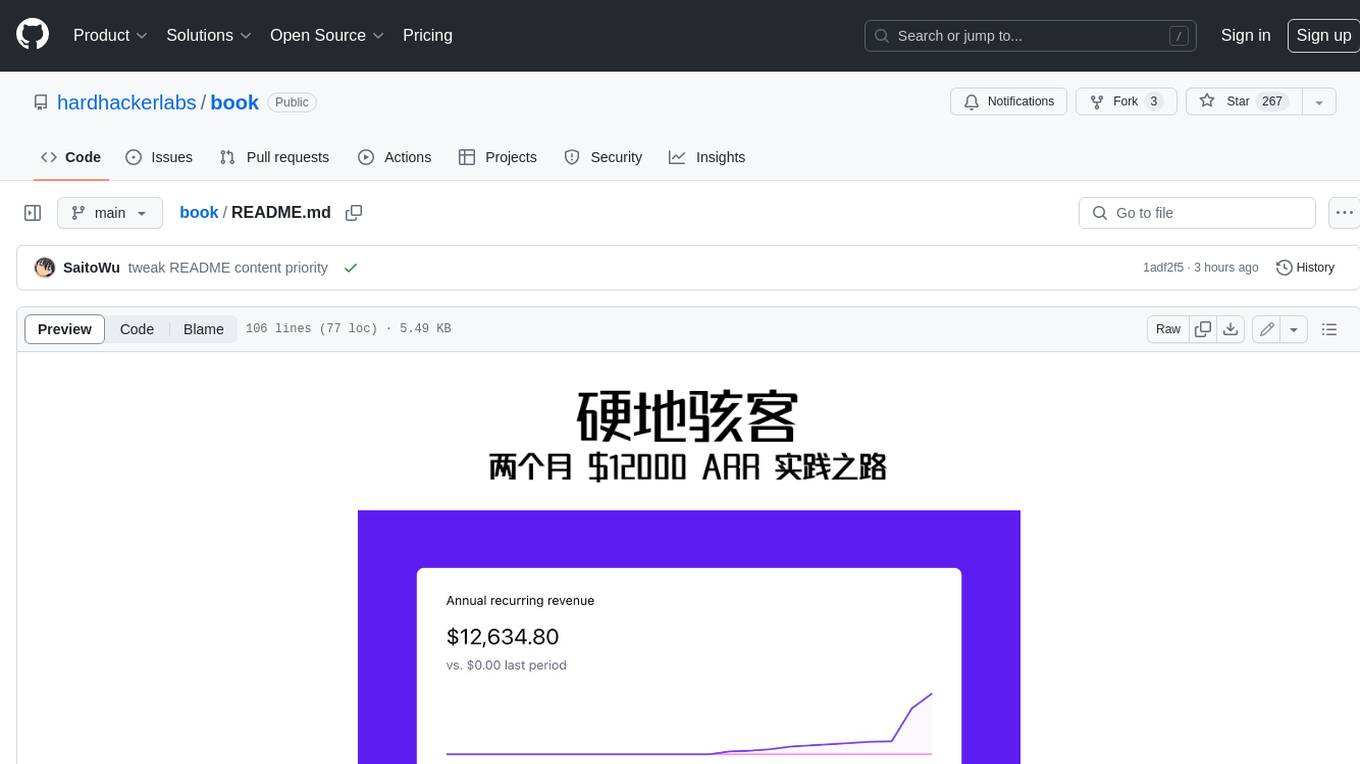

book

Podwise is an AI knowledge management app designed specifically for podcast listeners. With the Podwise platform, you only need to follow your favorite podcasts, such as "Hardcore Hackers". When a program is released, Podwise will use AI to transcribe, extract, summarize, and analyze the podcast content, helping you to break down the hard-core podcast knowledge. At the same time, it is connected to platforms such as Notion, Obsidian, Logseq, and Readwise, embedded in your knowledge management workflow, and integrated with content from other channels including news, newsletters, and blogs, helping you to improve your second brain 🧠.

chitu

Chitu is a high-performance inference framework for large language models, focusing on efficiency, flexibility, and availability. It supports various mainstream large language models, including DeepSeek, LLaMA series, Mixtral, and more. Chitu integrates latest optimizations for large language models, provides efficient operators with online FP8 to BF16 conversion, and is deployed for real-world production. The framework is versatile, supporting various hardware environments beyond NVIDIA GPUs. Chitu aims to enhance output speed per unit computing power, especially in decoding processes dependent on memory bandwidth.

For similar tasks

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

askui

AskUI is a reliable end-to-end automation tool that depends only on what is shown on your screen, not on the technology or platform you are running on.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

For similar jobs

AirGo

AirGo is a simple, easy-to-use proxy service management system with separate frontend and backend, supporting multiple users and multiple protocols. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O

- **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing

- **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back

- **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads

- **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems

- **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.