IntelliQ

Advanced Multi-Turn QA System with LLM and Intent Recognition. 基于LLM大语言模型意图识别、参数抽取结合slot词槽技术实现多轮问答、NL2API. 打造Function Call多轮问答最佳实践

Stars: 418

IntelliQ is an open-source project aimed at providing a multi-turn question-answering system based on a large language model (LLM). The system combines advanced intent recognition and slot filling technology to enhance the depth of understanding and accuracy of responses in conversation systems. It offers a flexible and efficient solution for developers to build and optimize various conversational applications. The system features multi-turn dialogue management, intent recognition, slot filling, interface slot technology for real-time data retrieval and processing, adaptive learning for improving response accuracy and speed, and easy integration with detailed API documentation supporting multiple programming languages and platforms.

README:

IntelliQ 是一个开源项目,旨在提供一个基于大型语言模型(LLM)的多轮问答系统。该系统结合了先进的意图识别和词槽填充(Slot Filling)技术,致力于提升对话系统的理解深度和响应精确度。本项目为开发者社区提供了一个灵活、高效的解决方案,用于构建和优化各类对话型应用。

- 多轮对话管理:能够处理复杂的对话场景,支持连续多轮交互。

- 意图识别:准确判定用户输入的意图,支持自定义意图扩展。

- 词槽填充:动态识别并填充关键信息(如时间、地点、对象等)。

- 接口槽技术:直接与外部APIs对接,实现数据的实时获取和处理。

- 自适应学习:不断学习用户交互,优化回答准确性和响应速度。

- 易于集成:提供了详细的API文档,支持多种编程语言和平台集成。

确保您已安装 git、python3。然后执行以下步骤:

# 安装步骤

git clone https://github.com/answerlink/IntelliQ.git

cd IntelliQ

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

# 修改配置

配置项在 config/__init__.py

GPT_URL: 可修改为OpenAI的代理地址

API_KEY: 修改为ChatGPT的ApiKey

# 启动

python app.py

# 可视化调试可以浏览器打开 demo/user_input.html 或 127.0.0.1:5000

查阅详细的API文档和使用说明,请访问 [文档链接]。

非常欢迎和鼓励社区贡献。如果您想贡献代码,请遵循以下步骤:

Fork 仓库

创建新的特性分支 (git checkout -b feature/AmazingFeature)

提交更改 (git commit -m 'Add some AmazingFeature')

推送到分支 (git push origin feature/AmazingFeature)

开启Pull Request

查看 CONTRIBUTING.md 了解更多信息。

Apache License, Version 2.0

v1.3 2024-1-15 集成通义千问线上模型

v1.2 2023-12-24 支持Qwen私有化模型

v1.1 2023-12-21 改造通用场景处理器;完成高度抽象封装;提示词调优

v1.0 2023-12-17 首次可用更新;框架完成

v0.1 2023-11-23 首次更新;流程设计

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for IntelliQ

Similar Open Source Tools

IntelliQ

IntelliQ is an open-source project aimed at providing a multi-turn question-answering system based on a large language model (LLM). The system combines advanced intent recognition and slot filling technology to enhance the depth of understanding and accuracy of responses in conversation systems. It offers a flexible and efficient solution for developers to build and optimize various conversational applications. The system features multi-turn dialogue management, intent recognition, slot filling, interface slot technology for real-time data retrieval and processing, adaptive learning for improving response accuracy and speed, and easy integration with detailed API documentation supporting multiple programming languages and platforms.

Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

agents-towards-production

Agents Towards Production is an open-source playbook for building production-ready GenAI agents that scale from prototype to enterprise. Tutorials cover stateful workflows, vector memory, real-time web search APIs, Docker deployment, FastAPI endpoints, security guardrails, GPU scaling, browser automation, fine-tuning, multi-agent coordination, observability, evaluation, and UI development.

MaxKB

MaxKB is a knowledge base Q&A system based on the LLM large language model. MaxKB = Max Knowledge Base, which aims to become the most powerful brain of the enterprise.

LiveDevAgents

LiveDevAgents is a multi-agent danmaku game engine built using CAMEL. It was developed for the 2024.12 CAMEL-AI Hackathon project. The engine allows for the creation of games in real-time through live bullet comments during streaming, enabling interaction with AI hosts. The project aims to expand and deconstruct simple ideas with a team of agents of different expertise, continuously updating and self-correcting during runtime. It also supports workforce enhancement, migration of anchor agents to a new framework, improvement of bullet comment processing logic, expansion of live control for more platforms, integration of art and music agents, and VR shared workspace for collaborative development.

xpert

Xpert is a powerful tool for data analysis and visualization. It provides a user-friendly interface to explore and manipulate datasets, perform statistical analysis, and create insightful visualizations. With Xpert, users can easily import data from various sources, clean and preprocess data, analyze trends and patterns, and generate interactive charts and graphs. Whether you are a data scientist, analyst, researcher, or student, Xpert simplifies the process of data analysis and visualization, making it accessible to users with varying levels of expertise.

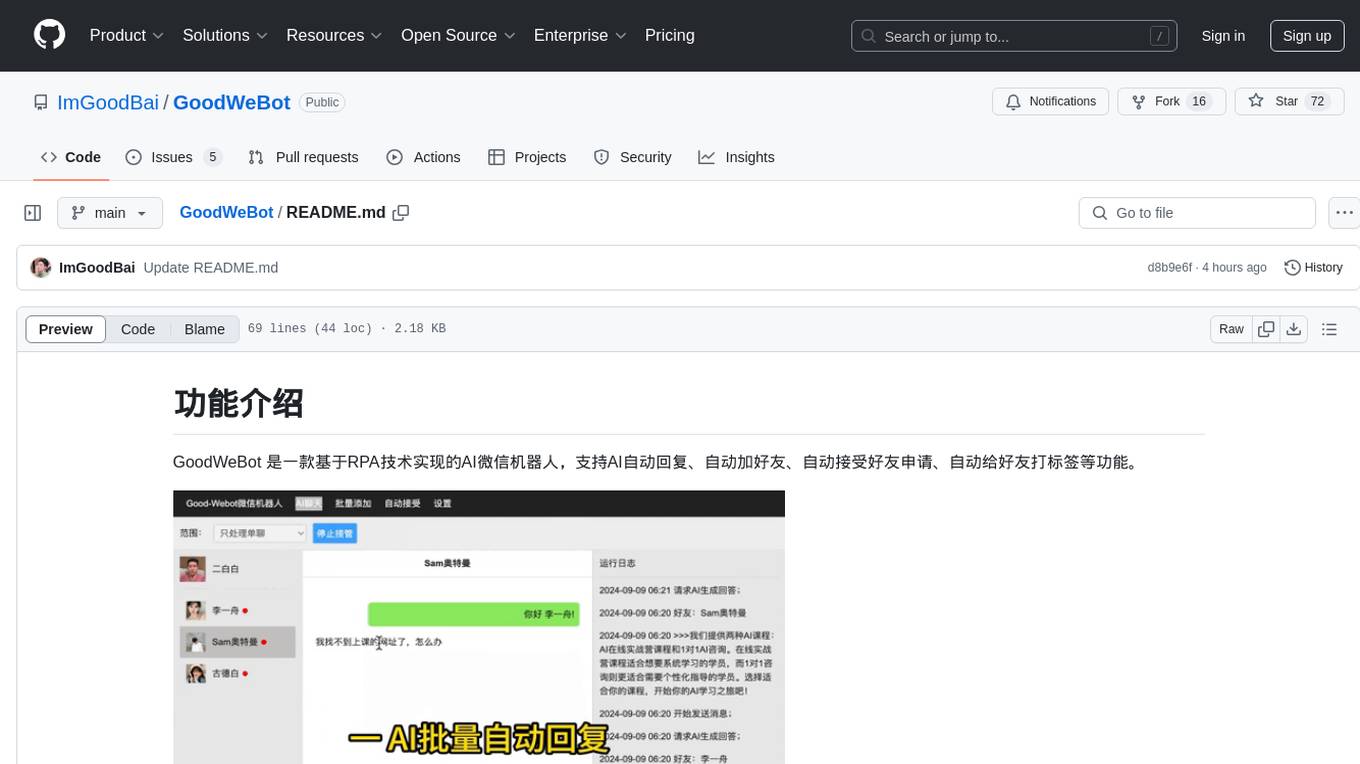

GoodWeBot

GoodWeBot is an AI WeChat robot based on RPA technology, supporting AI automatic replies, automatic friend adding, automatic friend request acceptance, automatic friend tagging, and more. It is fully compliant with RPA technology, easy to use with one-click download and run without installation, and integrates with mainstream AI services like coze. The tool is free to use and provides features like AI chat support, contact synchronization, group messaging, and coze API testing. Users should comply with GPL 3.0 open-source license and use the tool for technical research and learning purposes only, following local laws and regulations. The tool should not be used for any illegal or infringing activities, and users are responsible for the consequences of their usage.

enhance_llm

The enhance_llm repository contains three main parts: 1. Vector model domain fine-tuning based on llama_index and qwen fine-tuning BGE vector model. 2. Large model domain fine-tuning based on PEFT fine-tuning qwen1.5-7b-chat, with sft and dpo. 3. High-order retrieval enhanced generation (RAG) system based on the above domain work, implementing a two-stage RAG system. It includes query rewriting, recall reordering, retrieval reordering, multi-turn dialogue, and more. The repository also provides hardware and environment configurations along with star history and licensing information.

ai-self-coding-book

The 'ai-self-coding-book' repository is a guidebook that aims to teach how to create complex applications with commercial value using natural language and AI, rather than simple toy projects. It provides insights on AI programming concepts and practical applications, emphasizing real-world use cases and best practices for development.

brain4j

Brain4J is a lightweight, performant, and open-source machine learning framework for Java. Designed with portability and speed in mind, it is optimized for high performance and ideal for those looking to implement machine learning solutions in pure Java. The framework provides tools and functionalities to facilitate the development of machine learning models within Java applications, offering ease of use and efficiency.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

BabelDuck

BabelDuck is a highly customizable AI oral conversation practice application for language learners at all levels, with a focus on being more beginner-friendly. It aims to minimize the threshold and mental burden of oral expression practice. The tool supports various AI conversation features such as managing multiple dialogues, customizing system prompts, and providing suggestions for grammar, translation, or expression refinement without interrupting the current conversation. Users can seek further discussion through sub-dialogues when in doubt about AI suggestions, seamlessly returning to the original conversation afterward. BabelDuck also offers voice input and output, integrates browser-built text-to-speech, and Azure TTS, and supports different dialogue preferences, data stored locally for user privacy, multilingual interface, and built-in tutorials.

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

agentic-radar

The Agentic Radar is a security scanner designed to analyze and assess agentic systems for security and operational insights. It helps users understand how agentic systems function, identify potential vulnerabilities, and create security reports. The tool includes workflow visualization, tool identification, and vulnerability mapping, providing a comprehensive HTML report for easy reviewing and sharing. It simplifies the process of assessing complex workflows and multiple tools used in agentic systems, offering a structured view of potential risks and security frameworks.

xpander.ai

xpander.ai is a Backend-as-a-Service for autonomous agents that abstracts the ops layer, allowing AI engineers to focus on behavior and outcomes. It provides managed agent hosting with version control and CI/CD, a fully managed PostgreSQL memory layer, and a library of 2,000+ functions. The platform features an AI native triggering system that processes inputs from various sources and delivers unified messages to agents. With support for any agent framework or SDK, including Agno and OpenAI, xpander.ai enables users to build intelligent, production-ready AI agents without dealing with infrastructure complexity.

For similar tasks

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in Typescript and Javascript.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

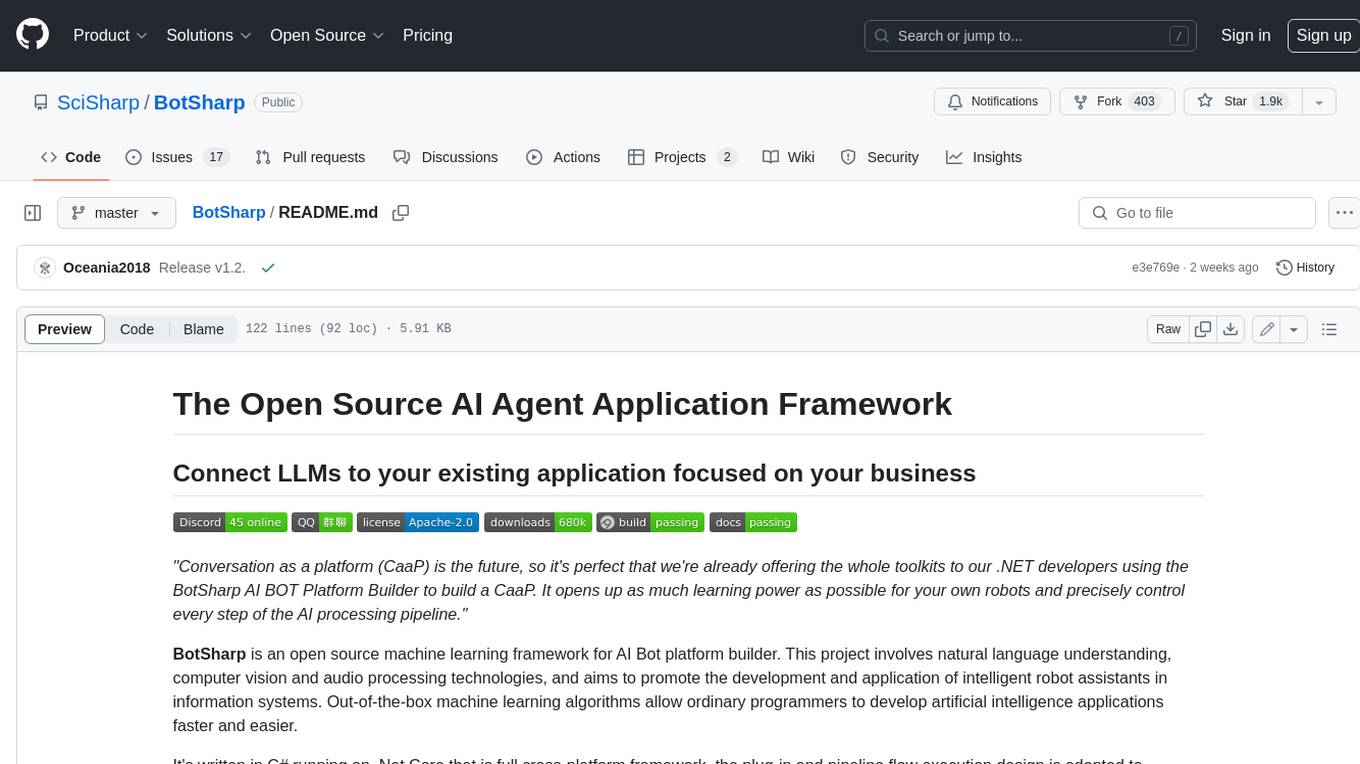

BotSharp

BotSharp is an open-source machine learning framework for building AI bot platforms. It provides a comprehensive set of tools and components for developing and deploying intelligent virtual assistants. BotSharp is designed to be modular and extensible, allowing developers to easily integrate it with their existing systems and applications. With BotSharp, you can quickly and easily create AI-powered chatbots, virtual assistants, and other conversational AI applications.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including: * Semantic search * Image search * Product recommendations * Chatbots * Anomaly detection Qdrant offers a variety of features, including: * Payload storage and filtering * Hybrid search with sparse vectors * Vector quantization and on-disk storage * Distributed deployment * Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.