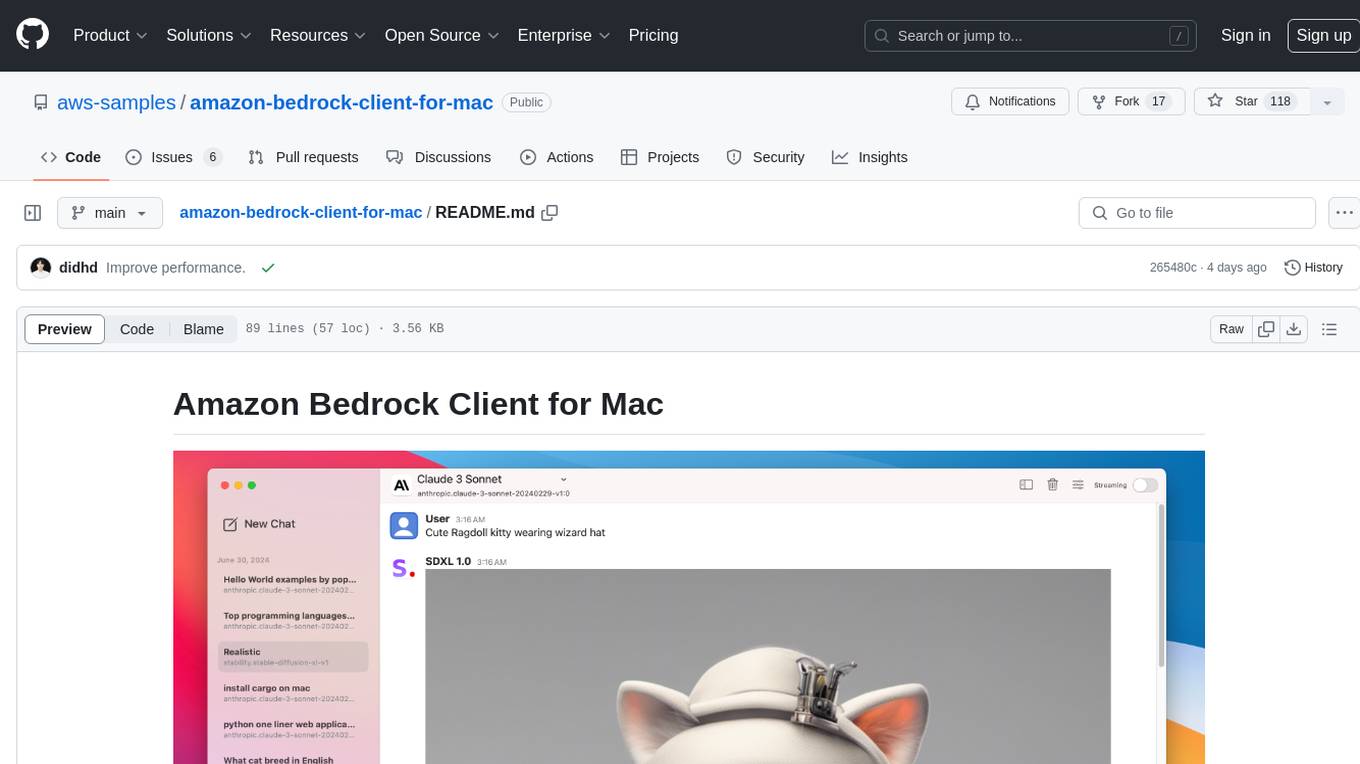

amazon-bedrock-client-for-mac

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop

Stars: 153

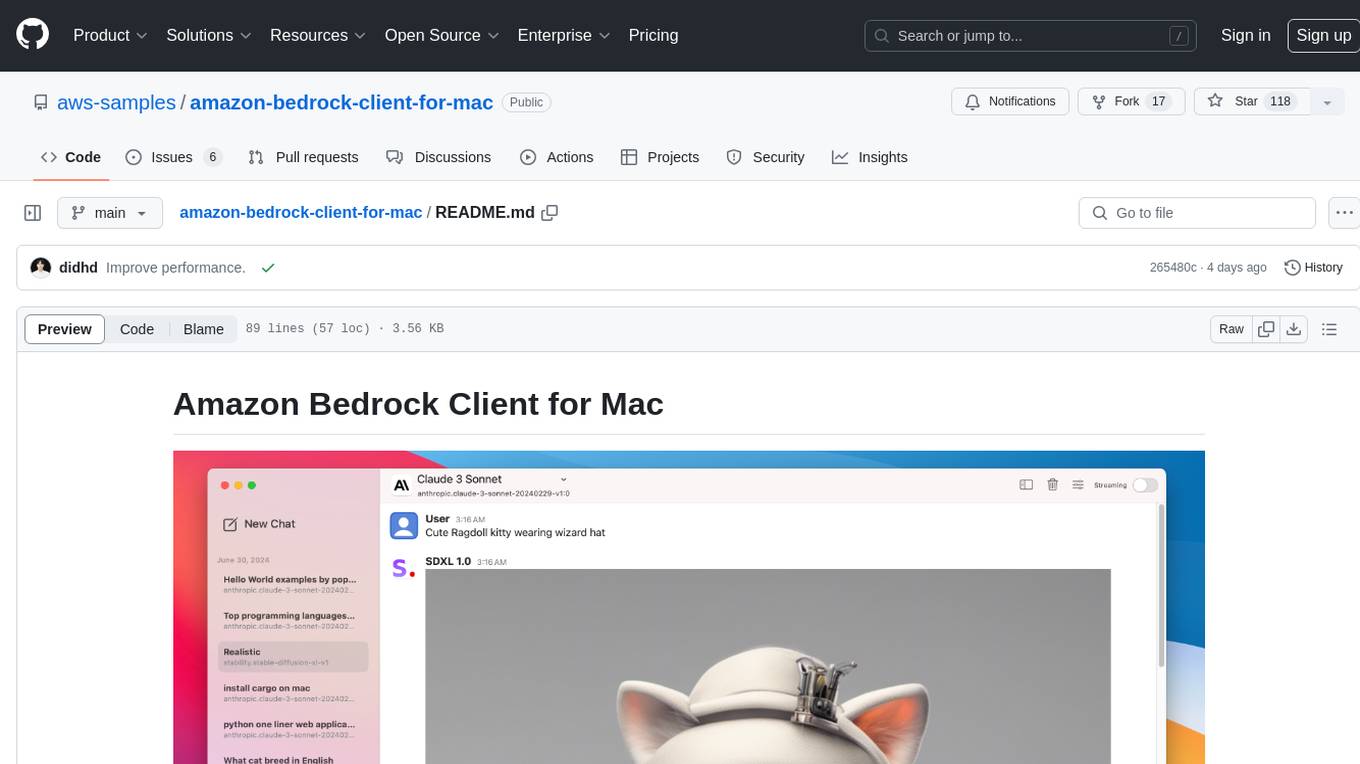

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop. It provides seamless interaction with multiple Amazon Bedrock models, real-time chat interface, easy model switching, support for various AI tasks, and native Dark Mode support. Built with SwiftUI for optimal performance and modern UI.

README:

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop.

Download • Features • Getting Started • Troubleshooting • Contributing

- 🤖 Seamless interaction with multiple Amazon Bedrock models including Claude 3 Sonnet

- 💬 Real-time chat interface with message history

- 🔄 Easy model switching and streaming toggle

- 📊 Support for various AI tasks including code generation and explanation

- 🌓 Native Dark Mode support

- 🚀 Built with SwiftUI for optimal performance and modern UI

Get the latest version of Amazon Bedrock Client for Mac:

- macOS 13 or later

- AWS Account with Bedrock access

- Download the latest release

- Open the DMG file and drag the app to your Applications folder

- Launch the app and configure your AWS credentials

If you see "'Amazon Bedrock Client for Mac.app' can't be opened because Apple cannot check it for malicious software":

- In Finder, locate "Amazon Bedrock Client for Mac.app"

- Right-click (or Control-click) and select "Open"

- Click "Open" in the dialog

- Select a model from the dropdown menu (e.g., Claude 3 Sonnet)

- Type your message or query in the input field

- Press Enter or click the send button to interact with the AI

- View the AI's response in the chat interface

- Toggle streaming on/off for real-time or batch responses

For common issues and their solutions, please refer to our Troubleshooting Guide.

This application is for demonstration and educational purposes only, not for production use. Refer to the AWS Well-Architected Framework Security Pillar for production deployment guidance.

We welcome contributions! Here's how you can help:

- Fork the project

- Create your feature branch (

git checkout -b feature/AmazingFeature) - Commit your changes (

git commit -am 'Add some AmazingFeature') - Push to the branch (

git push origin feature/AmazingFeature) - Open a Pull Request

This project is licensed under the MIT License - see the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for amazon-bedrock-client-for-mac

Similar Open Source Tools

amazon-bedrock-client-for-mac

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop. It provides seamless interaction with multiple Amazon Bedrock models, real-time chat interface, easy model switching, support for various AI tasks, and native Dark Mode support. Built with SwiftUI for optimal performance and modern UI.

RWKV-Runner

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

macai

Macai is a native macOS client for interacting with modern AI tools, such as ChatGPT and Ollama. It features organized chats with custom system messages, system-defined light/dark themes, backup and restore functionality, customizable context size, support for any model with a compatible API, formatted code blocks and tables, multiple chat tabs, CoreData data storage, streamed responses, and automatic chat name generation. Macai is in active development, with contributions welcome.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

esp-ai

ESP-AI provides a complete AI conversation solution for your development board, including IAT+LLM+TTS integration solutions for ESP32 series development boards. It can be injected into projects without affecting existing ones. By providing keys from platforms like iFlytek, Jiling, and local services, you can run the services without worrying about interactions between services or between development boards and services. The project's server-side code is based on Node.js, and the hardware code is based on Arduino IDE.

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

gemini-next-chat

Gemini Next Chat is an open-source, extensible high-performance Gemini chatbot framework that supports one-click free deployment of private Gemini web applications. It provides a simple interface with image recognition and voice conversation, supports multi-modal models, talk mode, visual recognition, assistant market, support plugins, conversation list, full Markdown support, privacy and security, PWA support, well-designed UI, fast loading speed, static deployment, and multi-language support.

Open-Interface

Open Interface is a self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language models. The tool supports MacOS, Linux, and Windows, and requires setting up the OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, setting up custom language model backends, and more. Open Interface is currently not efficient in accurate spatial reasoning, tracking itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 - $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

Ollamac

Ollamac is a macOS app designed for interacting with Ollama models. It is optimized for macOS, allowing users to easily use any model from the Ollama library. The app features a user-friendly interface, chat archive for saving interactions, and real-time communication using HTTP streaming technology. Ollamac is open-source, enabling users to contribute to its development and enhance its capabilities. It requires macOS 14 or later and the Ollama system to be installed on the user's Mac with at least one Ollama model downloaded.

serverless-rag-demo

The serverless-rag-demo repository showcases a solution for building a Retrieval Augmented Generation (RAG) system using Amazon Opensearch Serverless Vector DB, Amazon Bedrock, Llama2 LLM, and Falcon LLM. The solution leverages generative AI powered by large language models to generate domain-specific text outputs by incorporating external data sources. Users can augment prompts with relevant context from documents within a knowledge library, enabling the creation of AI applications without managing vector database infrastructure. The repository provides detailed instructions on deploying the RAG-based solution, including prerequisites, architecture, and step-by-step deployment process using AWS Cloudshell.

enchanted

Enchanted is an open-source, Ollama-compatible app for macOS and iOS that allows users to work with privately hosted models such as Llama 2, Mistral, Vicuna, Starling, and more. It provides a user-friendly interface for interacting with these models, making it easy to generate text, translate languages, write different kinds of creative content, and more. The app is designed to be secure and private, ensuring that user data is protected. It also offers a range of features such as dark/light mode, conversation history, markdown support, voice prompts, and image attachments.

Flare

Flare is an open-source AI-powered decentralized social network client for Android/iOS/macOS, consolidating multiple social networks into one platform. It allows cross-posting content, ensures privacy, and plans to implement features like mixed timeline, AI-powered functions, and support for various platforms. The project is in active development and aims to provide a seamless social networking experience for users.

coreply

Coreply is an open-source Android app that provides texting suggestions while typing, enhancing the typing experience with intelligent, context-aware suggestions. It supports various texting apps and offers real-time AI suggestions, customizable LLM settings, and ensures no data collection. Users can install the app, configure it with an API key, and start receiving suggestions while typing in messaging apps. The tool supports different AI models from providers like OpenAI, Google AI Studio, Openrouter, Groq, and Codestral for chat completion and fill-in-the-middle tasks.

gpt_mobile

GPT Mobile is a chat assistant for Android that allows users to chat with multiple models at once. It supports various platforms such as OpenAI GPT, Anthropic Claude, and Google Gemini. Users can customize temperature, top p (Nucleus sampling), and system prompt. The app features local chat history, Material You style UI, dark mode support, and per app language setting for Android 13+. It is built using 100% Kotlin, Jetpack Compose, and follows a modern app architecture for Android developers.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

GeminiChatUp

Gemini ChatUp is a chat application utilizing the Google GeminiPro API Key. It supports responsive layout and can store multiple sets of conversations with customizable parameters for each set. Users can log in with a test account or provide their own API Key to deploy the feature. The application also offers user authentication through Edge config in Vercel, allowing users to add usernames and passwords in JSON format. Local deployment is possible by installing dependencies, setting up environment variables, and running the application locally.

For similar tasks

amazon-bedrock-client-for-mac

A sleek and powerful macOS client for Amazon Bedrock, bringing AI models to your desktop. It provides seamless interaction with multiple Amazon Bedrock models, real-time chat interface, easy model switching, support for various AI tasks, and native Dark Mode support. Built with SwiftUI for optimal performance and modern UI.

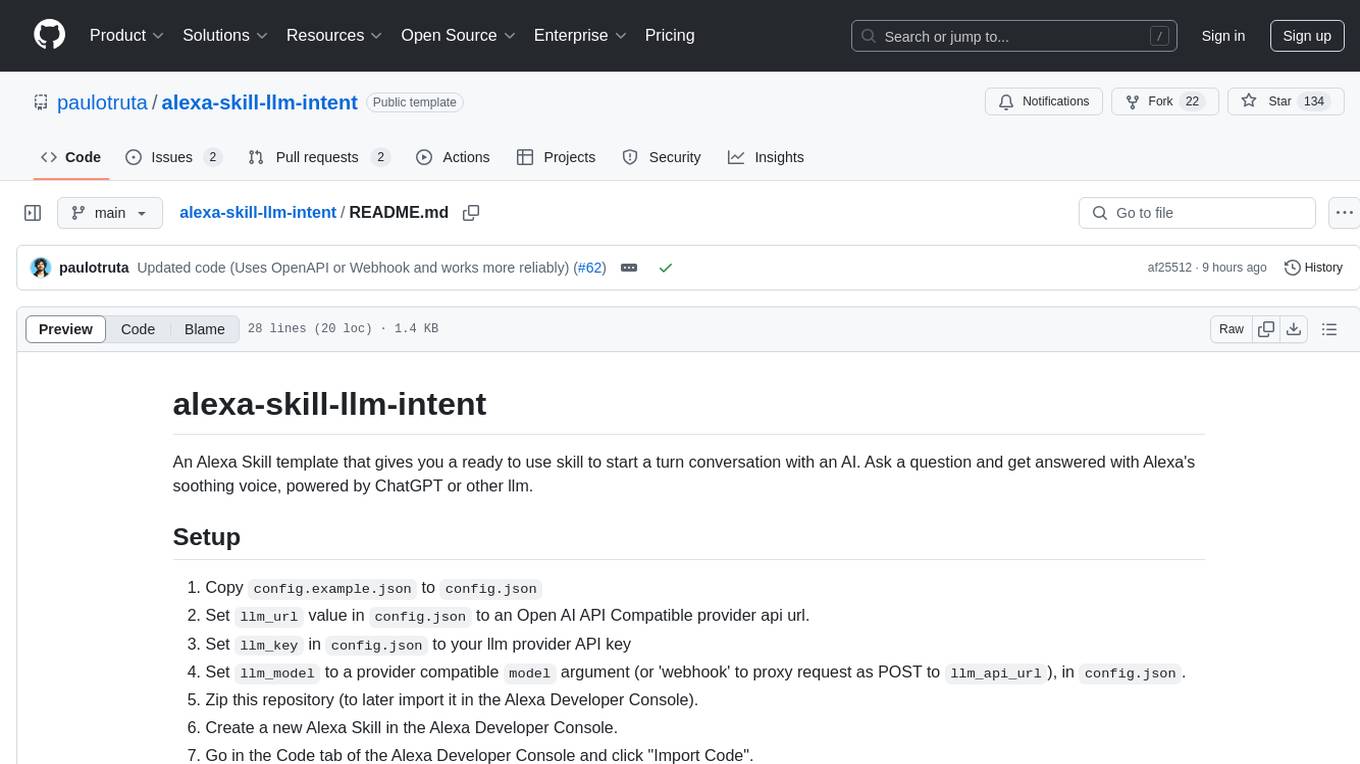

alexa-skill-llm-intent

An Alexa Skill template that provides a ready-to-use skill for starting a conversation with an AI. Users can ask questions and receive answers in Alexa's voice, powered by ChatGPT or other llm. The template includes setup instructions for configuring the AI provider API and model, as well as usage commands for interacting with the skill. It serves as a starting point for creating custom Alexa Skills and should be used at the user's own risk.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. Demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. Model learns basic world knowledge and conversational patterns. Features custom tokenization, greedy decoding, gradient clipping, modular layer system, and comprehensive test coverage. Ideal for understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. Follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.