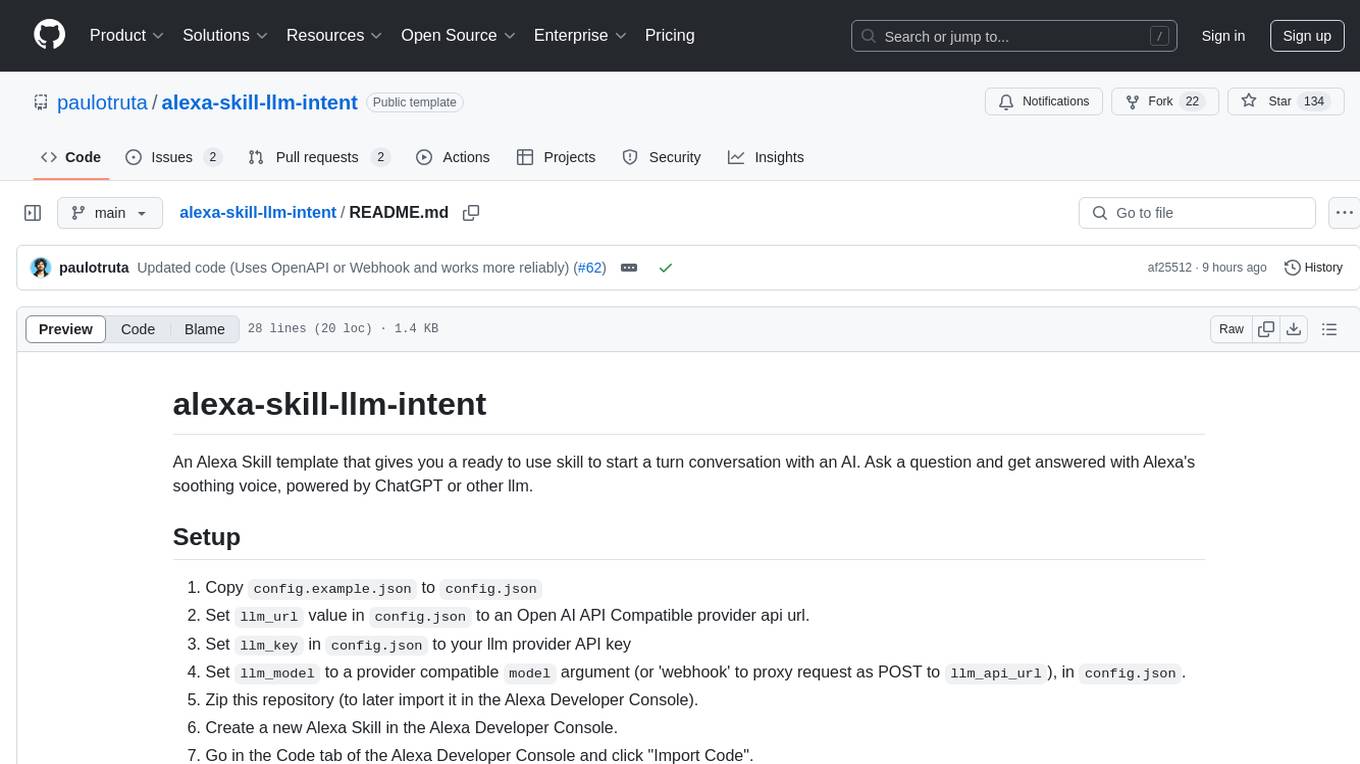

alexa-skill-llm-intent

Alexa Skill that provides turn based conversations with an AI LLM. Bringing AI to your Alexa, because Amazon doesn't.

Stars: 134

An Alexa Skill template that provides a ready-to-use skill for starting a conversation with an AI. Users can ask questions and receive answers in Alexa's voice, powered by ChatGPT or another LLM. The template includes setup instructions for configuring the AI provider API and model, as well as usage commands for interacting with the skill. It serves as a starting point for creating custom Alexa Skills and should be used at the user's own risk.

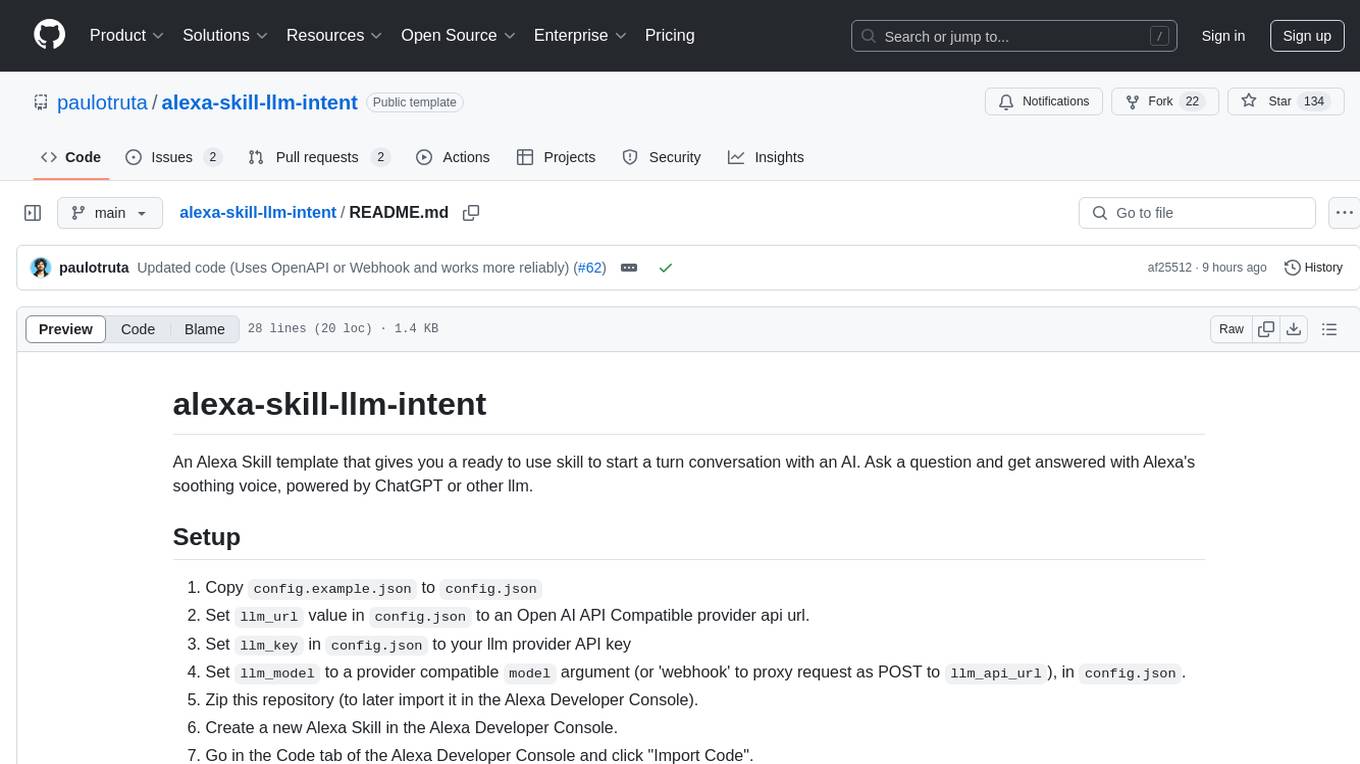

README:

An Alexa Skill template that gives you a ready-to-use skill for starting a turn-based conversation with an AI. Ask a question and get an answer in Alexa's soothing voice, powered by ChatGPT or another LLM.

Requirements:

- Alexa Developer Account

- ASK CLI

- URL, key, and model name for an LLM provider API compatible with the OpenAI API schema: OpenAI / Anthropic / OpenRouter

- Python 3.8 (optional for local development)

- AWS Account (optional for advanced deployment)

Set up your configuration file by copying `config.example.json` to `config.json` and filling in the required fields:

- `llm_url` -> OpenAI API schema compatible provider API URL, for example: https://openrouter.ai/api/v1/chat/completions

- `llm_key` -> Provider API key.

- `llm_model` -> Model name/version to use with the provider API, for example: `google/gemini-2.0-flash-exp:free`. Set to `webhook` to proxy the request as a POST to `llm_api_url`, sending `llm_key` as the `token` key of the JSON body.

- `invocation_name` -> The name you want to call your skill by. For example, if you set it to "gemini flash", you invoke the skill like this: "Alexa, ask gemini flash a question". (Note: This configuration variable is only used to set the skill invocation name when deploying your skill with the Makefile command. If you're deploying manually or via AWS, you still need to edit your `interactionModels` file by hand. See the instructions further below.)
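As a rough illustration of how these fields fit together, the sketch below loads a `config.json` and builds an OpenAI-style chat completions request from it. The helper names (`load_config`, `build_request`), the `question` key used in webhook mode, and reading the webhook URL from `llm_url` are assumptions made for this example, not the template's actual code. The `messages` body and the `Authorization: Bearer` header follow the OpenAI chat completions schema.

```python
import json
from pathlib import Path

REQUIRED_FIELDS = ("llm_url", "llm_key", "llm_model", "invocation_name")


def load_config(path):
    """Read config.json and fail early if a required field is missing or empty."""
    config = json.loads(Path(path).read_text(encoding="utf-8"))
    missing = [name for name in REQUIRED_FIELDS if not config.get(name)]
    if missing:
        raise ValueError(f"Missing config fields: {', '.join(missing)}")
    return config


def build_request(config, question):
    """Return (url, headers, body) for one question.

    Hypothetical helper: the real skill may shape its requests differently.
    """
    headers = {"Content-Type": "application/json"}
    if config["llm_model"] == "webhook":
        # Webhook mode: the key travels in the JSON body as "token".
        # The "question" key is an assumption made for this sketch.
        body = {"token": config["llm_key"], "question": question}
    else:
        headers["Authorization"] = f"Bearer {config['llm_key']}"
        body = {
            "model": config["llm_model"],
            "messages": [{"role": "user", "content": question}],
        }
    return config["llm_url"], headers, body
```

The returned tuple can be handed to any HTTP client, for example `urllib.request` from the standard library.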

To use this template, you need at least an account set up in the Alexa Developer Console.

There are three ways you can use this template in a skill:

- Automated -> Using the `Makefile` to create and manage a new or imported Alexa Hosted Skill project

- Manual -> In the `Alexa Developer Console` itself, by uploading a build package

- Advanced -> Using the `ask` CLI to create and manage a new AWS-hosted or self-hosted skill project using this repository as a template.

ℹ️ The automated (Makefile) method is the recommended way to create a new Alexa Skill using this template. It leverages the ASK CLI to create a new project and deploy it to your Alexa Developer Console. You can have multiple targets and deploy the template to different skills.

This method supports version control, testing, and debugging, and integrates with the Alexa Developer Console seamlessly.

⚠️ Make sure you have the `ask` CLI installed and configured with your Amazon Developer account before running this command. If not, install it by running `npm install -g ask-cli` and configure it by running `ask configure`.

Run the following command in your terminal:

`make new`

Then follow the wizard to create a new Alexa Skill project as a target, choosing the following options:

- ? Choose a modeling stack for your skill: `Interaction Model`

- ? Choose the programming language you will use to code your skill: `Python`

- ? Choose a method to host your skill's backend resources: `Alexa Hosted`

⚠️ If you don't choose the specified options on the New Skill Wizard, the process could fail as this template is made to run an Interaction Model skill in Python, while the Makefile method currently only supports Alexa Hosted skills.

The skill will start being created:

🎯 Creating a new hosted skill target

Please follow the wizard to start your Alexa skill project ->

? Choose a modeling stack for your skill: Interaction Model

The Interaction Model stack enables you to define the user interactions with a combination of utterances, intents, and slots.

? Choose the programming language you will use to code your skill: Python

? Choose a method to host your skill's backend resources: Alexa-hosted skills

Host your skill code by Alexa (free).

? Choose the default region for your skill: eu-west-1

? Please type in your skill name: gemini flash

? Please type in your folder name for the skill project (alphanumeric): geminiflash

⠧ Creating your Alexa hosted skill. It will take about a minute.

(...)

Lambda code for gemini flash created at

./lambda

Skill schema and interactionModels for gemini flash created at

./skill-package

The skill has been enabled.

Hosted skill provisioning finished. Skill-Id: amzn1.ask.skill.b9198cd2-7e05-4119-bc9b-fe264d2b7fe0

Please follow the instructions at https://developer.amazon.com/en-US/docs/alexa/hosted-skills/alexa-hosted-skills-ask-cli.html to learn more about the usage of "git" for Hosted skill.

🔗 Finished. Current targets:

geminiflash perplexitysearch testapplication

✅ Hosted skill created. To push repo code, run 'make update'

A new "hello world" Alexa Hosted Skill target will show up in your Alexa Developer account, and is now ready to be updated with the template code.

⚠️ Due to instabilities on Amazon's infrastructure side, this process can sometimes hang while the skill is being created. This can result in the skill showing up in the developer console but not on your machine. If that happens, give it an hour, delete the skill, and create a new one.

If you already have an existing Alexa Skill and want to import this template to it (overriding any previous code, model interactions, and actions), you can run:

`make init id=<skill_id>`

This will import your skill as an Alexa Hosted Skill target, which you can then use to update the skill to use this template.

You can list all the existing Alexa Hosted Skill targets being managed by this project by running:

`make list`

This will return a list of `<skill_slug>` entries and the date they were created or imported, for example:

🔗 Available Targets:

perplexitysearch -> Created on Jan 13 02:12

testapplication -> Created on Jan 13 02:45

ℹ️ These are available in the `build/hosted` folder and are the target hosted repositories, which can be managed individually by navigating to the respective folder and using the `ask` CLI.

When your skill is created or imported, it automatically uses the `config.json` in the `lambda` directory as its configuration. You might want a different configuration for each target hosted skill. Use the following command to set a target configuration file:

`make config skill=<skill_slug> file=<config_file_path>`

This will make a copy of this file at `/build/hosted/<skill_slug>_config.json`, which will be used by the skill when it is updated. The invocation words for the skill are set at update time using the `invocation_name` value in the `config.json` file.
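In Python terms, the copy step described above amounts to something like the following. This is only a sketch of the behaviour, not the Makefile's actual recipe. The function name `set_target_config` and the `build_root` parameter are hypothetical.

```python
import json
import shutil
from pathlib import Path


def set_target_config(skill_slug, config_file, build_root="build/hosted"):
    """Copy a config file to <build_root>/<skill_slug>_config.json."""
    source = Path(config_file)
    # Parse first so a malformed file is rejected before it is copied.
    json.loads(source.read_text(encoding="utf-8"))
    target = Path(build_root) / f"{skill_slug}_config.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(source, target)
    return target
```

Validating the JSON before copying is a design choice of this sketch: it surfaces a broken config at configuration time rather than at the next update.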

After creating a new skill or importing an existing one, you can update the skill to use this template.

You can do this by running:

`make update skill=<skill_slug>`

This will deploy the code to the Alexa Developer Console and trigger a model and lambda function build. Once the deployment finishes, the skill will be ready to use.

You should also run this every time you make changes to the skill package or the lambda function code, to update the skill in the Alexa Developer Console.

⚠️ Currently this project only allows sync in one direction, from the local repository to the Alexa Developer Console. Any changes made in the Alexa Developer Console will be overwritten by the local repository when you run the update command.

You can debug the dialog model (using `ask dialog`) for a skill target project by running:

`make dialog skill=<skill_slug> locale=<locale>`

You can debug the lambda function (using `ask run`) for a skill target project by running:

`make debug skill=<skill_slug>`

ℹ️ The manual method is recommended for beginners, as it requires less configuration and fewer manual steps. Follow this method if you are not familiar with the ASK CLI and want to use the Alexa Developer Console directly.

- Make sure the `config.json` file and the invocation name value in `skill-package/interactionModels/custom/en-US.json` are set up correctly.

- Build the upload package by running `make package` (to later import it in the Alexa Developer Console).

- Create a new Alexa Skill in the Alexa Developer Console.

- Go to the Code tab of the Alexa Developer Console and click "Import Code".

- Select the zip file with the contents of this repository.

- Click "Save" and "Build Model". The skill should be ready to use.
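The first step above involves editing the invocation name by hand. A small script can make the same edit. The sketch below is an illustration, not part of the template: `set_invocation_name` is a hypothetical helper, and it assumes the standard Alexa interaction model layout, where the name lives at `interactionModel.languageModel.invocationName`.

```python
import json
from pathlib import Path


def set_invocation_name(model_path, invocation_name):
    """Write a new invocation name into an interaction model file.

    Returns the previous value so the caller can report what changed.
    """
    path = Path(model_path)
    model = json.loads(path.read_text(encoding="utf-8"))
    language_model = model["interactionModel"]["languageModel"]
    previous = language_model.get("invocationName")
    # Alexa expects invocation names in lowercase.
    language_model["invocationName"] = invocation_name.strip().lower()
    path.write_text(json.dumps(model, indent=2) + "\n", encoding="utf-8")
    return previous
```

For example, `set_invocation_name("skill-package/interactionModels/custom/en-US.json", "gemini flash")` would update the English (US) model in place.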

For more information, check the documentation here: Importing a Skill into the Alexa Developer Console.

ℹ️ The advanced method is not recommended for beginners, as it requires more manual steps and configuration, and it requires an AWS account you own to host the lambda function. Only follow this method if you know what you're doing and have previous experience with Alexa Skills development using AWS.

Choose a location for your new skill project (not this repository, as it will be cloned). Run the following command in your terminal (at your chosen location) to start a new skill project using this template:

`ask new --template-url https://github.com/paulotruta/alexa-skill-llm-intent.git`

This will use the contents of this repository to create a new Alexa Skill project in your account. Fill in the required information in the wizard, and the project will be created.

After the project is created, you can deploy it to your Alexa Developer Console by running:

cd llm-intent

ask deploy

⚠️ Before running deploy, make sure you modify the `config.json` file and the invocation name value in `skill-package/interactionModels/custom/en-US.json` with the required configuration for the skill to work.

Full Documentation on the Ask CLI can be found here.

Once the skill is created, you can test it in the Alexa Developer Console or via your Alexa device directly!

- Alexa, I want to ask <invocation_name> a question

- Alexa, ask <invocation_name> about our solar system

- Alexa, ask <invocation_name> to explain the NP theorem

To develop the skill locally, you should activate the virtual environment and install the required dependencies. You can do this by running:

`make dev`

You can modify the skill package by changing the `skill-package/interactionModels/custom/en-US.json` file. This file contains the intents, slots and utterances that the skill will use to interact with the user.
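When editing the interaction model, it can help to see at a glance which intents and sample utterances it defines. The sketch below reads a model file and summarizes it. `summarize_model` is a hypothetical helper, and it assumes the standard Alexa interaction model layout (`interactionModel.languageModel` with `invocationName` and an `intents` list whose entries have `name` and optional `samples`).

```python
import json
from pathlib import Path


def summarize_model(model_path):
    """Return the invocation name and a {intent name: sample utterances} mapping."""
    model = json.loads(Path(model_path).read_text(encoding="utf-8"))
    language_model = model["interactionModel"]["languageModel"]
    intents = {
        intent["name"]: intent.get("samples", [])
        for intent in language_model.get("intents", [])
    }
    return language_model.get("invocationName"), intents


if __name__ == "__main__":
    path = Path("skill-package/interactionModels/custom/en-US.json")
    if path.exists():
        name, intents = summarize_model(path)
        print(f"Invocation name: {name}")
        for intent_name, samples in intents.items():
            print(f"  {intent_name}: {len(samples)} sample utterance(s)")
```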

`skill-package/skill.json` contains the skill metadata, such as the name, description, and invocation name. You do not need to change it to run the skill in development mode, but you will need to if you ever want to use it in a live environment as a published skill.

For more information about the skill-package structure, check the Skill Package Format documentation.

When using the `(Automated) Makefile` method to manage Alexa Hosted Skill targets, you can debug their dialog model by using the `make dialog skill=<skill_slug>` command, which will open the dialog model test CLI for that specific skill.

The skill code is a Python lambda function located in the `lambda/` folder. The main file is `lambda_function.py`, which contains the Lambda handler for the supported intents and is the entry point for the rest of the code.
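To make the request/response cycle concrete, here is a dependency-free sketch of a Lambda handler for an Alexa request. It is not the template's `lambda_function.py`: the intent name `QuestionIntent`, the slot name `question`, and the `ask_llm` stub are hypothetical. Only the request and response envelopes follow the documented Alexa JSON format.

```python
def ask_llm(question):
    """Stub standing in for the call to the configured LLM provider."""
    return f"You asked: {question}"


def build_response(speech, end_session=False):
    """Wrap plain text in the Alexa response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event, context):
    """Route an incoming Alexa request to a spoken reply."""
    request = event.get("request", {})
    if request.get("type") == "LaunchRequest":
        return build_response("What would you like to ask?")
    if request.get("type") == "IntentRequest":
        intent = request.get("intent", {})
        if intent.get("name") == "QuestionIntent":  # hypothetical intent name
            slots = intent.get("slots", {})
            question = slots.get("question", {}).get("value")  # hypothetical slot
            if question:
                # Keep the session open so the user can ask a follow-up.
                return build_response(ask_llm(question))
            return build_response("Sorry, I did not catch the question.")
    return build_response("Goodbye.", end_session=True)
```

Leaving `shouldEndSession` as `False` after an answer is what allows a turn-based exchange: Alexa keeps listening for the next question instead of closing the skill.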

ℹ️ When using the `(Automated) Makefile` method to manage Alexa Hosted Skill targets, you can debug the lambda function by using the `make debug skill=<skill_slug>` command, which lets you test your skill code locally against your skill invocations by routing requests to your developer machine. This lets you verify changes to skill code quickly, as you can test without deploying the code to Lambda.

⚠️ Because of Alexa hosted skills limitations, debugging using `make debug skill=<skill_slug>` (or the `ask run` CLI command) is currently only available to customers in the NA region. You will only be able to use the debugger this way if your skill is hosted in one of the US regions.

Use at your own risk. This is a template and should be used as a starting point for your own Alexa Skill. The code is provided as is and I am not responsible for any misuse or damages caused by this code.


Similar Open Source Tools

alexa-skill-llm-intent

An Alexa Skill template that provides a ready-to-use skill for starting a conversation with an AI. Users can ask questions and receive answers in Alexa's voice, powered by ChatGPT or other llm. The template includes setup instructions for configuring the AI provider API and model, as well as usage commands for interacting with the skill. It serves as a starting point for creating custom Alexa Skills and should be used at the user's own risk.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 in 2019.

reai-ghidra

The RevEng.AI Ghidra Plugin by RevEng.ai allows users to interact with their API within Ghidra for Binary Code Similarity analysis to aid in Reverse Engineering stripped binaries. Users can upload binaries, rename functions above a confidence threshold, and view similar functions for a selected function.

openui

OpenUI is a tool designed to simplify the process of building UI components by allowing users to describe UI using their imagination and see it rendered live. It supports converting HTML to React, Svelte, Web Components, etc. The tool is open source and aims to make UI development fun, fast, and flexible. It integrates with various AI services like OpenAI, Groq, Gemini, Anthropic, Cohere, and Mistral, providing users with the flexibility to use different models. OpenUI also supports LiteLLM for connecting to various LLM services and allows users to create custom proxy configs. The tool can be run locally using Docker or Python, and it offers a development environment for quick setup and testing.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

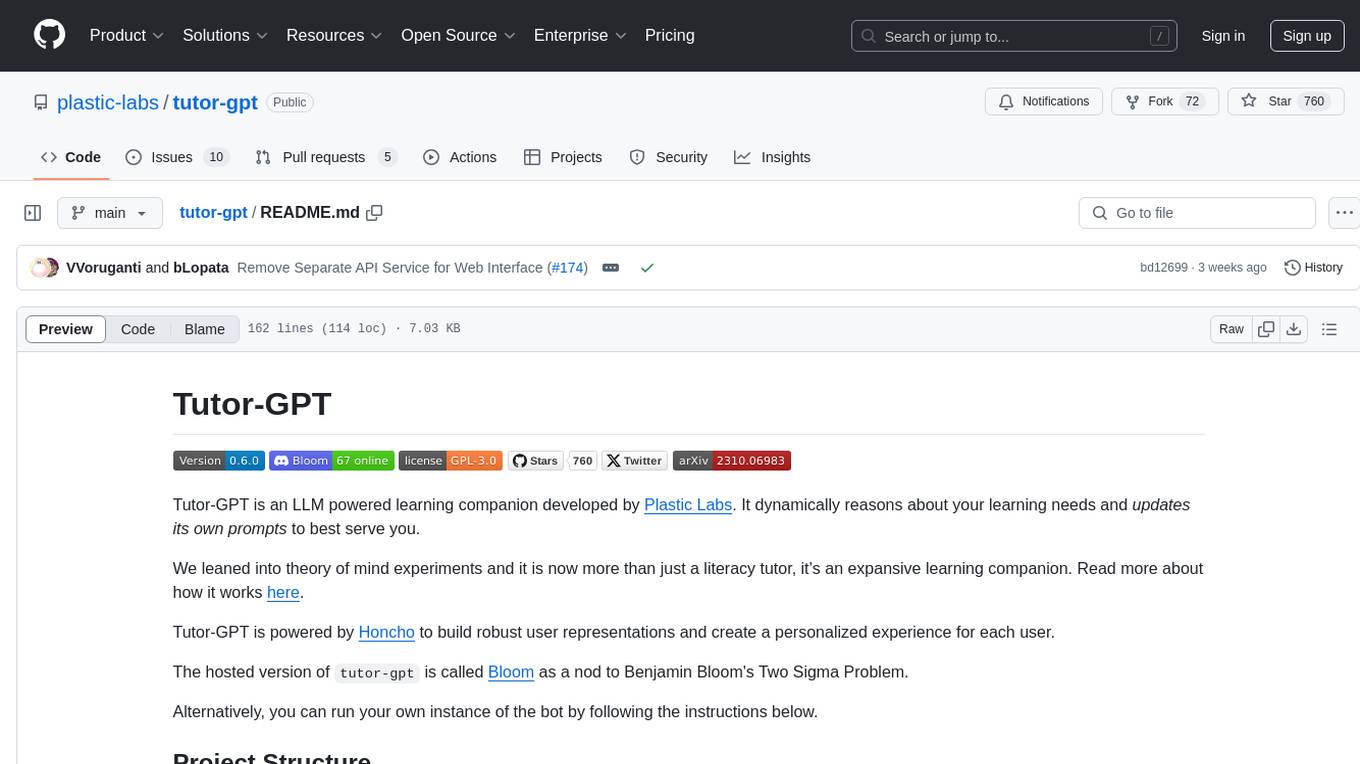

tutor-gpt

Tutor-GPT is an LLM powered learning companion developed by Plastic Labs. It dynamically reasons about your learning needs and updates its own prompts to best serve you. It is an expansive learning companion that uses theory of mind experiments to provide personalized learning experiences. The project is split into different modules for backend logic, including core logic, discord bot implementation, FastAPI API interface, NextJS web front end, common utilities, and SQL scripts for setting up local supabase. Tutor-GPT is powered by Honcho to build robust user representations and create personalized experiences for each user. Users can run their own instance of the bot by following the provided instructions.

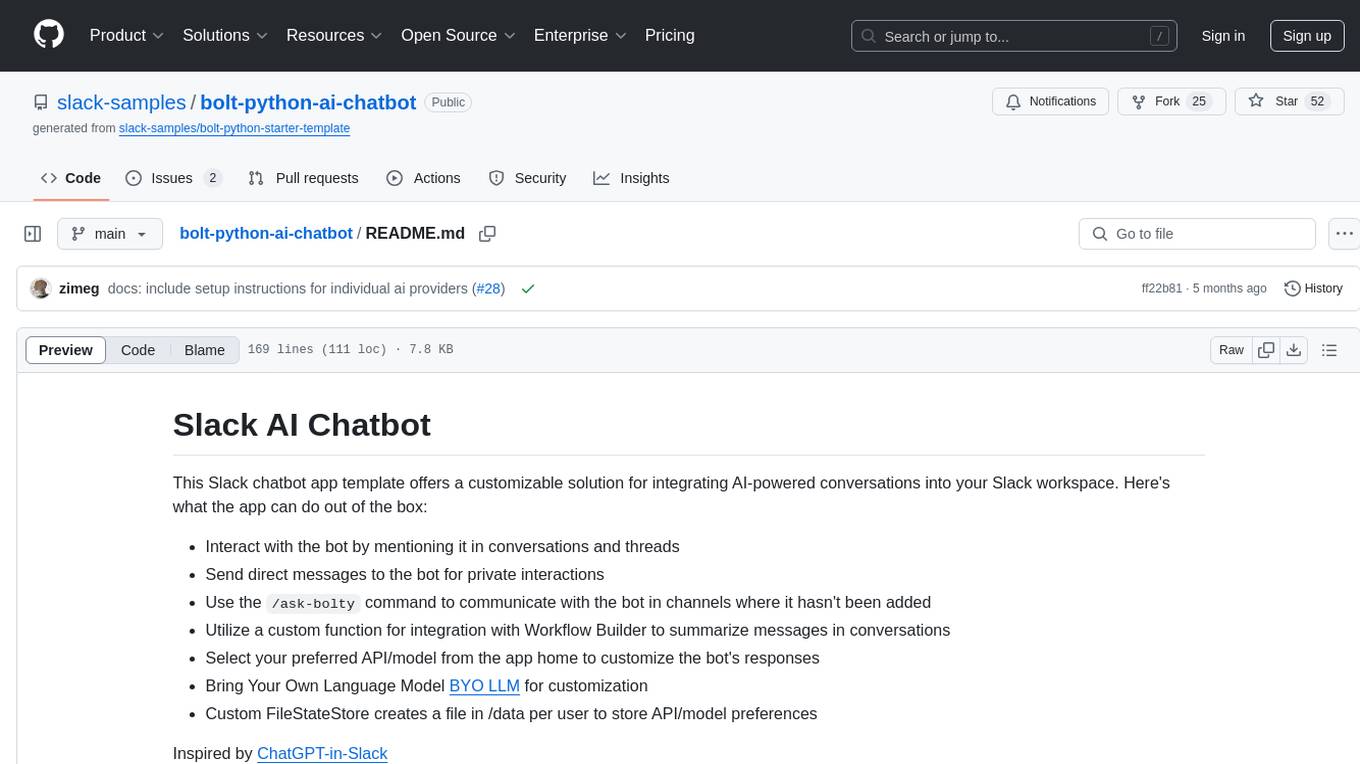

bolt-python-ai-chatbot

The 'bolt-python-ai-chatbot' is a Slack chatbot app template that allows users to integrate AI-powered conversations into their Slack workspace. Users can interact with the bot in conversations and threads, send direct messages for private interactions, use commands to communicate with the bot, customize bot responses, and store user preferences. The app supports integration with Workflow Builder, custom language models, and different AI providers like OpenAI, Anthropic, and Google Cloud Vertex AI. Users can create user objects, manage user states, and select from various AI models for communication.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

micro-agent

Micro Agent is an AI tool designed to write and fix code for users by generating code that passes specified tests or matches design screenshots. It aims to streamline the code generation process by leveraging AI capabilities to iterate and improve code until desired outcomes are achieved. The tool focuses on test-driven development and provides interactive features for user feedback. Micro Agent is not intended to be a comprehensive development tool but rather a specialized agent for code generation and iteration.

civitai

Civitai is a platform where people can share their stable diffusion models (textual inversions, hypernetworks, aesthetic gradients, VAEs, and any other crazy stuff people do to customize their AI generations), collaborate with others to improve them, and learn from each other's work. The platform allows users to create an account, upload their models, and browse models that have been shared by others. Users can also leave comments and feedback on each other's models to facilitate collaboration and knowledge sharing.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

dockershrink

Dockershrink is an AI-powered Commandline Tool designed to help reduce the size of Docker images. It combines traditional Rule-based analysis with Generative AI techniques to optimize Image configurations. The tool supports NodeJS applications and aims to save costs on storage, data transfer, and build times while increasing developer productivity. By automatically applying advanced optimization techniques, Dockershrink simplifies the process for engineers and organizations, resulting in significant savings and efficiency improvements.

cog-comfyui

Cog-comfyui allows users to run ComfyUI workflows on Replicate. ComfyUI is a visual programming tool for creating and sharing generative art workflows. With cog-comfyui, users can access a variety of pre-trained models and custom nodes to create their own unique artworks. The tool is easy to use and does not require any coding experience. Users simply need to upload their API JSON file and any necessary input files, and then click the "Run" button. Cog-comfyui will then generate the output image or video file.

cog-comfyui

Cog-ComfyUI is a tool designed to run ComfyUI workflows on Replicate. It allows users to easily integrate their own workflows into their app or website using the Replicate API. The tool includes popular model weights and custom nodes, with the option to request more custom nodes or models. Users can get their API JSON, gather input files, and use custom LoRAs from CivitAI or HuggingFace. Additionally, users can run their workflows and set up their own dedicated instances for better performance and control. The tool provides options for private deployments, forking using Cog, or creating new models from the train tab on Replicate. It also offers guidance on developing locally and running the Web UI from a Cog container.

LLM_AppDev-HandsOn

This repository showcases how to build a simple LLM-based chatbot for answering questions based on documents using retrieval augmented generation (RAG) technique. It also provides guidance on deploying the chatbot using Podman or on the OpenShift Container Platform. The workshop associated with this repository introduces participants to LLMs & RAG concepts and demonstrates how to customize the chatbot for specific purposes. The software stack relies on open-source tools like streamlit, LlamaIndex, and local open LLMs via Ollama, making it accessible for GPU-constrained environments.

For similar tasks

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

chat-ollama

ChatOllama is an open-source chatbot based on LLMs (Large Language Models). It supports a wide range of language models, including Ollama served models, OpenAI, Azure OpenAI, and Anthropic. ChatOllama supports multiple types of chat, including free chat with LLMs and chat with LLMs based on a knowledge base. Key features of ChatOllama include Ollama models management, knowledge bases management, chat, and commercial LLMs API keys management.

ChatIDE

ChatIDE is an AI assistant that integrates with your IDE, allowing you to converse with OpenAI's ChatGPT or Anthropic's Claude within your development environment. It provides a seamless way to access AI-powered assistance while coding, enabling you to get real-time help, generate code snippets, debug errors, and brainstorm ideas without leaving your IDE.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.

xiaogpt

xiaogpt is a tool that allows you to play ChatGPT and other LLMs with Xiaomi AI Speaker. It supports ChatGPT, New Bing, ChatGLM, Gemini, Doubao, and Tongyi Qianwen. You can use it to ask questions, get answers, and have conversations with AI assistants. xiaogpt is easy to use and can be set up in a few minutes. It is a great way to experience the power of AI and have fun with your Xiaomi AI Speaker.

googlegpt

GoogleGPT is a browser extension that brings the power of ChatGPT to Google Search. With GoogleGPT, you can ask ChatGPT questions and get answers directly in your search results. You can also use GoogleGPT to generate text, translate languages, and more. GoogleGPT is compatible with all major browsers, including Chrome, Firefox, Edge, and Safari.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.