xiaogpt

Play ChatGPT and other LLMs with a Xiaomi AI Speaker

Stars: 6499

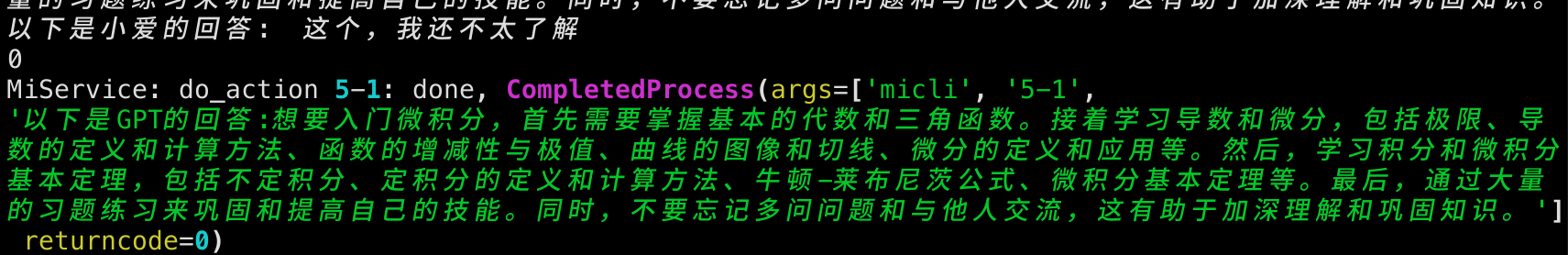

xiaogpt is a tool that allows you to play ChatGPT and other LLMs with Xiaomi AI Speaker. It supports ChatGPT, New Bing, ChatGLM, Gemini, Doubao, and Tongyi Qianwen. You can use it to ask questions, get answers, and have conversations with AI assistants. xiaogpt is easy to use and can be set up in a few minutes. It is a great way to experience the power of AI and have fun with your Xiaomi AI Speaker.

README:


| System and shell | Linux *sh | Windows CMD | Windows PowerShell |
|---|---|---|---|
| 1. Install the package | `pip install miservice_fork` | `pip install miservice_fork` | `pip install miservice_fork` |
| 2. Set the variables | `export MI_USER=xxx`<br>`export MI_PASS=xxx` | `set MI_USER=xxx`<br>`set MI_PASS=xxx` | `$env:MI_USER="xxx"`<br>`$env:MI_PASS="xxx"` |
| 3. Get MI_DID | `micli list` | `micli list` | `micli list` |
| 4. Set MI_DID | `export MI_DID=xxx` | `set MI_DID=xxx` | `$env:MI_DID="xxx"` |

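- Different shells handle environment variables differently. In PowerShell in particular, you may need to wrap the value in double quotes when assigning it.

- If fetching the DID fails, try switching to a different Wi-Fi network; this very often solves the problem.

- ChatGPT id

- A Xiao Ai speaker (小爱音响)

- A network environment with working internet access, or a proxy

- Python 3.8+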
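Before going further, it can help to confirm that the prerequisites above are actually in place. The following is a minimal sketch and not part of xiaogpt: `preflight` is a hypothetical helper that checks the Python version requirement and the `MI_USER`, `MI_PASS`, and `MI_DID` variables from the setup table, assuming you use the environment-variable route.

```python
import os
import sys

# Variable names taken from the setup table; the helper itself is hypothetical.
REQUIRED_VARS = ("MI_USER", "MI_PASS", "MI_DID")


def preflight(env, version_info):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if tuple(version_info[:2]) < (3, 8):
        problems.append("Python 3.8+ is required")
    for name in REQUIRED_VARS:
        if not env.get(name):
            problems.append(f"environment variable {name} is not set")
    return problems


if __name__ == "__main__":
    for problem in preflight(os.environ, sys.version_info):
        print(problem)
```

An empty result only means the variables exist; it does not verify that the account can log in or that the DID is correct.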

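Install with pip:

    pip install -U --force-reinstall xiaogpt[locked]

- Follow the README of my MiService fork and run `micli list` in a local terminal to get your speaker's DID. Once that succeeds, don't forget to set `export MI_DID=xxx`; this is the MI_DID that gets used.

- Run `xiaogpt --hardware ${your_hardware} --use_chatgpt_api`. The hardware model is printed on the bottom of the Xiao Ai speaker; enter it here. If you cannot find it there, or the model turns out to be wrong, use `micli mina` to find the model.

- Once it is running, you can ask Xiao Ai questions. Questions that begin with "帮我" ("help me") are also sent to ChatGPT, and Xiao Ai then reads the answer aloud via TTS.

- If the above does not work, try capturing traffic on your phone for https://userprofile.mina.mi.com/device_profile/v2/conversation, find the cookie, and pass it with `--cookie '${cookie}'`. Don't forget to wrap the cookie in single quotes.

- ubus is used by default. If your device does not support ubus, use `--use_command` to drive TTS through command instead.

- Use the `--mute_xiaoai` option to quickly stop Xiao Ai's own answer.

- Use `--account ${account} --password ${password}` to pass your account and password.

- If you are able to, you can replace the wake word yourself, or remove it.

- Use `--use_chatgpt_api` to talk through the OpenAI API. Conversation is smoother and very fast, close to a real dialogue experience.

- If you are blocked by the firewall and need to replace api_base with Cloudflare Workers, use `--api_base ${url}`. Note that the API you enter should look like 'https://xxxx/v1', and the domain must be wrapped in quotes.

- For `--use_moonshot_api` and other models, please refer to the examples below.

- You can say "开始持续对话" ("start continuous conversation") to Xiao Ai to enter continuous conversation mode, and "结束持续对话" ("end continuous conversation") to leave it.

- Use `--tts edge` for better TTS.

- Use `--tts fish --fish_api_key <your-fish-key> --fish_voice_key <fish-voice>` for fish-audio TTS (see below for how to get a fish voice).

- Use `--tts openai` for OpenAI TTS.

- Use `--tts azure --azure_tts_speech_key <your-speech-key>` for Azure TTS.

- Use `--use_langchain` instead of `--use_chatgpt_api` to call the LangChain service (ChatGPT by default), which adds web search, math, and more.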
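The options above combine freely on one command line. As an illustration only, the sketch below assembles such a command in Python; `build_command` is a hypothetical helper, not part of xiaogpt, and it uses only flags that appear in this README (`--hardware`, `--mute_xiaoai`, `--stream`, `--tts`, and a bot flag such as `--use_chatgpt_api`).

```python
import shlex


def build_command(hardware, bot_flag="--use_chatgpt_api", mute=False,
                  stream=False, tts=None):
    """Assemble an xiaogpt argv list from a few of the options listed above."""
    argv = ["xiaogpt", "--hardware", hardware]
    if mute:
        argv.append("--mute_xiaoai")
    if stream:
        argv.append("--stream")
    if tts:
        argv += ["--tts", tts]
    argv.append(bot_flag)
    return argv


if __name__ == "__main__":
    argv = build_command("LX06", mute=True, stream=True, tts="edge")
    # shlex.join quotes each argument so the line can be pasted into a POSIX shell
    print(shlex.join(argv))
```

Keeping the command as a list also means it can be handed to `subprocess.run(argv)` directly, which sidesteps shell quoting for values such as cookies.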

For example:

    export OPENAI_API_KEY=${your_api_key}
    xiaogpt --hardware LX06 --use_chatgpt_api
    # or
    xiaogpt --hardware LX06 --cookie ${cookie} --use_chatgpt_api
    # If you want to pass your account and password directly
    xiaogpt --hardware LX06 --account ${your_xiaomi_account} --password ${your_password} --use_chatgpt_api
    # If you want to mute Xiao Ai's own answer
    xiaogpt --hardware LX06 --mute_xiaoai --use_chatgpt_api
    # Use streaming responses for faster replies
    xiaogpt --hardware LX06 --mute_xiaoai --stream
    # If you want to use Google's Gemini
    xiaogpt --hardware LX06 --mute_xiaoai --use_gemini --gemini_key ${gemini_key}
    # If you want to use your own Google Gemini service
    xiaogpt --hardware LX06 --mute_xiaoai --use_gemini --gemini_key ${gemini_key} --gemini_api_domain ${gemini_api_domain}
    # If you want to use Alibaba's Tongyi Qianwen
    xiaogpt --hardware LX06 --mute_xiaoai --use_qwen --qwen_key ${qwen_key}
    # If you want to use Kimi
    xiaogpt --hardware LX06 --mute_xiaoai --use_moonshot_api --moonshot_api_key ${moonshot_api_key}
    # If you want to use Llama 3
    xiaogpt --hardware LX06 --mute_xiaoai --use_llama --llama_api_key ${llama_api_key}
    # If you want to use 01
    xiaogpt --hardware LX06 --mute_xiaoai --use_yi_api --yi_api_key ${yi_api_key}
    # If you want to use LangChain + SerpApi for web search or other local services (currently stream mode only)
    export OPENAI_API_KEY=${your_api_key}
    export SERPAPI_API_KEY=${your_serpapi_key}
    xiaogpt --hardware LX06 --use_langchain --mute_xiaoai --stream --openai_key ${your_api_key} --serpapi_api_key ${your_serpapi_key}

Running from a git clone:

    export OPENAI_API_KEY=${your_api_key}
    python3 xiaogpt.py --hardware LX06
    # or
    python3 xiaogpt.py --hardware LX06 --cookie ${cookie}
    # If you want to pass your account and password directly
    python3 xiaogpt.py --hardware LX06 --account ${your_xiaomi_account} --password ${your_password} --use_chatgpt_api
    # If you want to mute Xiao Ai's own answer
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai
    # Use streaming responses for faster replies
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --stream
    # If you want to use the ChatGLM API
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_glm --glm_key ${glm_key}
    # If you want to use Google's Gemini
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_gemini --gemini_key ${gemini_key}
    # If you want to use your own Google Gemini service
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_gemini --gemini_key ${gemini_key} --gemini_api_domain ${gemini_api_domain}
    # If you want to use Alibaba's Tongyi Qianwen
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_qwen --qwen_key ${qwen_key}
    # If you want to use Kimi
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_moonshot_api --moonshot_api_key ${moonshot_api_key}
    # If you want to use 01
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_yi_api --yi_api_key ${yi_api_key}
    # If you want to use Doubao
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_doubao --stream --volc_access_key xxxx --volc_secret_key xxx
    # If you want to use Llama 3
    python3 xiaogpt.py --hardware LX06 --mute_xiaoai --use_llama --llama_api_key ${llama_api_key}
    # If you want to use LangChain + SerpApi for web search or other local services (currently stream mode only)
    export OPENAI_API_KEY=${your_api_key}
    export SERPAPI_API_KEY=${your_serpapi_key}
    python3 xiaogpt.py --hardware LX06 --use_langchain --mute_xiaoai --stream --openai_key ${your_api_key} --serpapi_api_key ${your_serpapi_key}

You can also start from a single configuration file by passing it with the `--config` option. The config file must be valid YAML or JSON.

Parameter priority: cli args > default > config

    python3 xiaogpt.py --config xiao_config.yaml
    # or
    xiaogpt --config xiao_config.yaml

or

    cp xiao_config.yaml.example xiao_config.yaml
    python3 xiaogpt.py

To set OpenAI model parameters such as model, temperature, and top_p, specify them in config.yaml:

    gpt_options:
      temperature: 0.9
      top_p: 0.9

For what each parameter does, see the OpenAI API documentation and the ChatGLM documentation.
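Because the config file may be JSON as well as YAML, one way to produce it is from a Python dict using only the standard library. This is a sketch under assumptions: `build_config` and `to_json` are hypothetical helpers, the values are placeholders, and the key names (`hardware`, `mute_xiaoai`, `stream`, `gpt_options`) are the option names used in this README.

```python
import json


def build_config(hardware, gpt_options=None, **extra):
    """Collect settings into a plain dict that mirrors the config file layout."""
    config = {"hardware": hardware, "gpt_options": dict(gpt_options or {})}
    config.update(extra)  # e.g. mute_xiaoai=True, stream=True
    return config


def to_json(config):
    """Serialize the config; ensure_ascii=False keeps Chinese values readable."""
    return json.dumps(config, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    config = build_config(
        "LX06",
        gpt_options={"temperature": 0.9, "top_p": 0.9},
        mute_xiaoai=True,
        stream=True,
    )
    print(to_json(config))
```

Redirecting the output to a file such as `xiao_config.json` (an illustrative name) should give something that can be passed to `--config`, since JSON is one of the two accepted formats.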

| Parameter | Description | Default | Options |
|---|---|---|---|
| hardware | Device model | | |
| account | Xiao Ai account | | |
| password | Xiao Ai account password | | |
| openai_key | OpenAI API key | | |
| moonshot_api_key | Moonshot (Kimi) API key | | |
| yi_api_key | 01 wanwu API key | | |
| llama_api_key | Groq Llama 3 API key | | |
| serpapi_api_key | SerpAPI key; see SerpAPI | | |
| glm_key | ChatGLM API key | | |
| gemini_key | Gemini API key (see reference) | | |
| gemini_api_domain | Custom domain for Gemini (see reference) | | |
| qwen_key | Qwen API key (see reference) | | |
| cookie | Xiao Ai account cookie (optional if you log in with the password above) | | |
| mi_did | Device DID | | |
| use_command | Use MI command to interact with Xiao Ai | `false` | |
| mute_xiaoai | Quickly stop Xiao Ai's own answer | `true` | |
| verbose | Print verbose logs | `false` | |
| bot | Bot type to use; currently supports chatgptapi, newbing, qwen, gemini | `chatgptapi` | |
| tts | TTS type to use | `mi` | edge, openai, azure, volc, baidu, google, minimax |
| tts_options | Dictionary of TTS options; see tetos for the available options | | |
| prompt | Custom prompt | `请用100字以内回答` ("Please answer in 100 characters or fewer") | |
| keyword | List of custom trigger words | `["请"]` | |
| change_prompt_keyword | List of phrases that trigger a prompt change | `["更改提示词"]` | |
| start_conversation | Keyword that starts continuous conversation | `开始持续对话` | |
| end_conversation | Keyword that ends continuous conversation | `结束持续对话` | |
| stream | Use streaming responses for faster replies | `true` | |
| proxy | HTTP proxy support; pass the HTTP proxy URL | `""` | |
| gpt_options | Dictionary of OpenAI API parameters | `{}` | |
| deployment_id | Deployment ID of the Azure OpenAI service | See the reference on how to find the deployment_id | |
| api_base | Set this to replace the default API or to use the Azure OpenAI service | e.g. https://abc-def.openai.azure.com/ | |
| volc_access_key | Volcengine access key (obtain it here) | | |
| volc_secret_key | Volcengine secret key (obtain it here) | | |
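The `keyword`, `start_conversation`, and `end_conversation` parameters suggest a simple routing rule: a query goes to the LLM if it starts with a trigger word, or if continuous conversation is currently active. The sketch below illustrates that idea only. `Router` is a hypothetical class, not xiaogpt's actual implementation, and its defaults are copied from the table.

```python
class Router:
    """Decide whether a spoken query should be forwarded to the LLM."""

    def __init__(self, keywords=("请",), start="开始持续对话", end="结束持续对话"):
        self.keywords = tuple(keywords)
        self.start = start
        self.end = end
        self.in_conversation = False

    def should_ask_llm(self, query):
        if query.startswith(self.start):
            self.in_conversation = True
            return False  # control phrase, not a question
        if query.startswith(self.end):
            self.in_conversation = False
            return False
        # str.startswith accepts a tuple and matches any of its entries
        return self.in_conversation or query.startswith(self.keywords)


if __name__ == "__main__":
    router = Router()
    for query in ["请讲个笑话", "今天天气", "开始持续对话", "今天天气"]:
        print(query, router.should_ask_llm(query))
```

Treating the start and end phrases as control commands that are never forwarded is a design choice of this sketch; the real tool may handle them differently.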

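- Please turn on Bluetooth on your Xiao Ai speaker.

- To change the prompt words and PROMPT, edit them at the top of the code.

- LX04, X10A, L05B, and L05C are currently known to possibly need `--use_command`; otherwise the terminal may print GPT's reply while Xiao Ai never speaks it. These models also support only Xiao Ai's native TTS.

- When running under WSL, set the proxy to `http://<WSL IP>:<port>` (the VPN's proxy port); otherwise connections time out. For details, see the issue "Error communicating with OpenAI".

- Do I need to jailbreak the speaker? No.

- Isn't this thing useless? True... but it's fun. It may be useless to you, but not necessarily to us.

- Want to make it better? PRs and issues are always welcome.

- Still have questions? Open an issue, haha.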

- Exception: Error https://api2.mina.mi.com/admin/v2/device_list?master=0&requestId=app_ios_xxx: Login failed @KJZH001

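This is caused by Xiaomi's risk control: accounts from mainland China cannot log in from overseas. Try logging in with a cookie instead. If you cannot capture traffic, deploy the project locally first, find `.mi.token` in your user folder `C:\Users\<username>`, and copy it to the server that cannot log in.

On Linux, put the file in the current user's home directory. You can then rerun the earlier command and, barring surprises, log in normally (the cookie may expire after a while and need to be fetched again).

For details, see https://github.com/yihong0618/xiaogpt/issues/332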
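The workaround above amounts to copying `.mi.token` from the home directory of a machine that can log in to the home directory of the one that cannot. Here is a minimal standard-library sketch, assuming the file name and location described above. `token_path` and `install_token` are hypothetical helpers, and moving the file between machines (scp, USB stick, and so on) is left to you.

```python
import shutil
from pathlib import Path

TOKEN_NAME = ".mi.token"  # file name as given in the note above


def token_path(home=None):
    """Path of the token file in a home directory (default: current user)."""
    base = Path(home) if home else Path.home()
    return base / TOKEN_NAME


def install_token(src, home=None):
    """Copy a token file obtained on another machine into the home directory."""
    dest = token_path(home)
    shutil.copy(src, dest)
    return dest


if __name__ == "__main__":
    # Path.home() resolves to C:\Users\<username> on Windows and $HOME on Linux
    print(token_path())
```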

https://www.youtube.com/watch?v=K4YA8YwzOOA

X86/ARM Docker Image: yihong0618/xiaogpt

    docker run -e OPENAI_API_KEY=<your-openapi-key> yihong0618/xiaogpt <command-line arguments>

For example:

    docker run -e OPENAI_API_KEY=<your-openapi-key> yihong0618/xiaogpt --account=<your-xiaomi-account> --password=<your-xiaomi-password> --hardware=<your-xiaomi-hardware> --use_chatgpt_api

The xiaogpt config file can be supplied by mounting a volume at /config and passing the --config option, for example:

    docker run -v <your-config-dir>:/config yihong0618/xiaogpt --config=/config/config.yaml

With host networking:

    docker run -v <your-config-dir>:/config --network=host yihong0618/xiaogpt --config=/config/config.yaml

To build the image locally:

    docker build -t xiaogpt .

If the build fails or is slow while installing dependencies, use --build-arg when building the Docker image to point pip at a mirror in mainland China:

    docker build --build-arg PIP_INDEX_URL=https://pypi.tuna.tsinghua.edu.cn/simple -t xiaogpt .

To build an x86 image on Apple M1/M2:

    docker buildx build --platform=linux/amd64 -t xiaogpt-x86 .

We currently support the following third-party TTS engines: edge/openai/azure/volc/baidu/google

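- edge-tts provides TTS similar to Microsoft's
- azure-tts provides Microsoft Azure TTS
- openai-tts provides TTS similar to OpenAI's
- fish-tts provides fish TTS

You can enable one with the `tts` parameter:

    tts: edge

For edge, list the supported voices and languages and pick one:

    edge-tts --list-voices

If you want to use fish-tts: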

- Register at https://fish.audio/zh-CN/go-api/ to get an API key.

- Choose the voice you want, either one you create yourself or a popular one, for example https://fish.audio/zh-CN/text-to-speech/?modelId=e80ea225770f42f79d50aa98be3cedfc, where `e80ea225770f42f79d50aa98be3cedfc` is the voice key id.

- Run `python3 xiaogpt.py --hardware LX06 --account xxxx --password xxxxx --use_chatgpt_api --mute_xiaoai --stream --tts fish --fish_api_key xxxxx --fish_voice_key xxxxx`

- Or configure it in xiao_config.yaml:

      tts: fish
      # Dictionary of TTS options; see https://github.com/frostming/tetos for the available options
      tts_options: {
        "api_key": "xxxxx",
        "voice": "xxxxxx"
      }
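The voice key in the fish-tts steps above is simply the `modelId` query parameter of the voice page URL. A small sketch of extracting it with the standard library; `voice_key_from_url` is a hypothetical helper name, and the URL is the one quoted in the steps.

```python
from urllib.parse import parse_qs, urlparse


def voice_key_from_url(url):
    """Return the modelId query parameter of a fish.audio voice URL, or None."""
    values = parse_qs(urlparse(url).query).get("modelId")
    return values[0] if values else None


if __name__ == "__main__":
    url = ("https://fish.audio/zh-CN/text-to-speech/"
           "?modelId=e80ea225770f42f79d50aa98be3cedfc")
    print(voice_key_from_url(url))  # prints e80ea225770f42f79d50aa98be3cedfc
```

The returned value is what goes into `--fish_voice_key` on the command line, or into `voice` under `tts_options` in the config file.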

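Because Edge TTS starts a local HTTP service, you need to map the container's port to the host and specify the local machine's hostname:

    docker run -v <your-config-dir>:/config -p 9527:9527 -e XIAOGPT_HOSTNAME=<your ip> yihong0618/xiaogpt --config=/config/config.yaml

Note that the port must be mapped to the same number as inside the container, and XIAOGPT_HOSTNAME must be set to the host's IP address; otherwise Xiao Ai cannot play the audio.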
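The two constraints in that note (identical port on both sides, and the host's IP in `XIAOGPT_HOSTNAME`) are easy to get wrong when typing the command by hand. As an illustration, the sketch below builds the same command from two inputs. `edge_tts_docker_command` is a hypothetical helper, and the port, image name, and config path are copied from the command above.

```python
import shlex

EDGE_TTS_PORT = 9527  # port used in the docker command above
IMAGE = "yihong0618/xiaogpt"


def edge_tts_docker_command(config_dir, host_ip):
    """Build the docker run argv, keeping host and container ports identical."""
    return [
        "docker", "run",
        "-v", f"{config_dir}:/config",
        "-p", f"{EDGE_TTS_PORT}:{EDGE_TTS_PORT}",  # same number on both sides
        "-e", f"XIAOGPT_HOSTNAME={host_ip}",       # must be the host's IP address
        IMAGE,
        "--config=/config/config.yaml",
    ]


if __name__ == "__main__":
    # Example values; replace them with your own config directory and host IP
    print(shlex.join(edge_tts_docker_command("/home/me/xiaogpt", "192.168.1.10")))
```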

A thank-you is enough.


Alternative AI tools for xiaogpt

Similar Open Source Tools


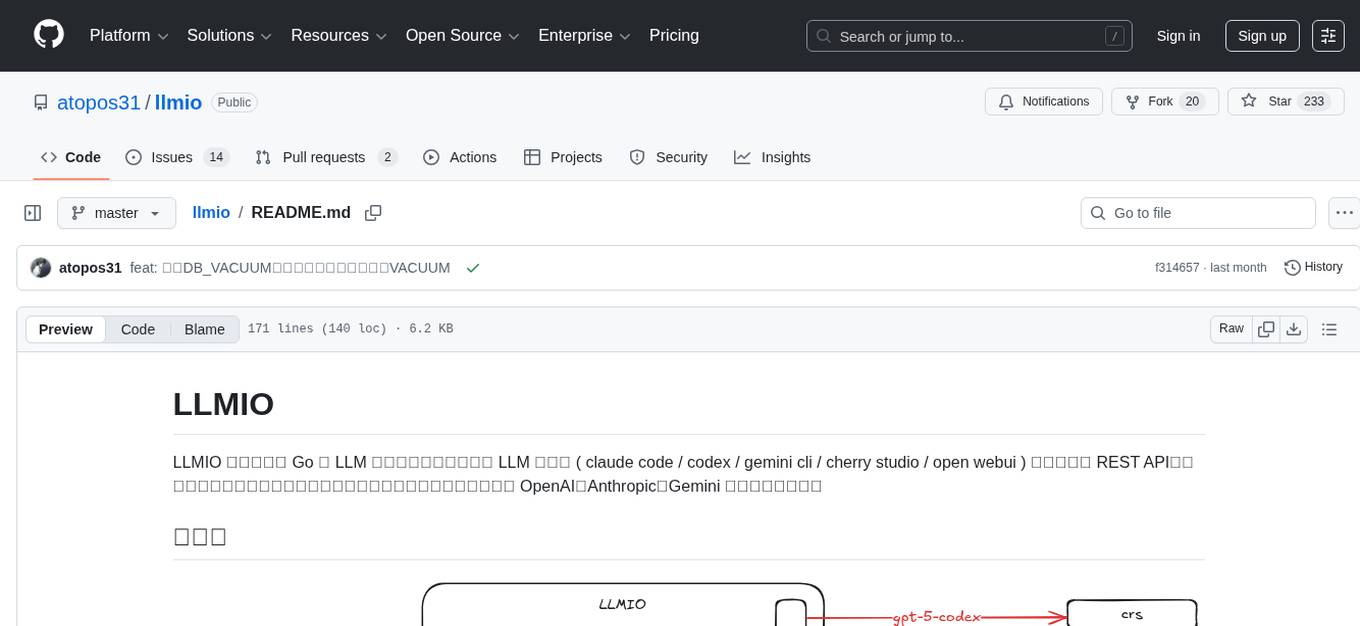

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

daily_stock_analysis

The daily_stock_analysis repository is an intelligent stock analysis system based on AI large models for A-share/Hong Kong stock/US stock selection. It automatically analyzes and pushes a 'decision dashboard' to WeChat Work/Feishu/Telegram/email daily. The system features multi-dimensional analysis, global market support, market review, AI backtesting validation, multi-channel notifications, and scheduled execution using GitHub Actions. It utilizes AI models like Gemini, OpenAI, DeepSeek, and data sources like AkShare, Tushare, Pytdx, Baostock, YFinance for analysis. The system includes built-in trading disciplines like risk warning, trend trading, precise entry/exit points, and checklist marking for conditions.

pi-browser

Pi-Browser is a CLI tool for automating browsers based on multiple AI models. It supports various AI models like Google Gemini, OpenAI, Anthropic Claude, and Ollama. Users can control the browser using natural language commands and perform tasks such as web UI management, Telegram bot integration, Notion integration, extension mode for maintaining Chrome login status, parallel processing with multiple browsers, and offline execution with the local AI model Ollama.

ChatTTS-Forge

ChatTTS-Forge is a powerful text-to-speech generation tool that supports generating rich, long-form audio from text using an SSML-like syntax and provides comprehensive API services, suitable for various scenarios. It offers features such as batch generation, support for generating super long texts, style prompt injection, full API services, user-friendly debugging GUI, OpenAI-style API, Google-style API, support for SSML-like syntax, speaker management, style management, independent refine API, text normalization optimized for ChatTTS, and automatic detection and processing of markdown format text. The tool can be experienced and deployed online through HuggingFace Spaces, launched with one click on Colab, deployed using containers, or locally deployed after cloning the project, preparing models, and installing necessary dependencies.

react-native-nitro-mlx

The react-native-nitro-mlx repository allows users to run LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift. It provides functionalities for downloading models, loading and generating responses, streaming audio, text-to-speech, and speech-to-text capabilities. Users can interact with various MLX-compatible models from Hugging Face, with pre-defined models available for convenience. The repository supports iOS 26.0+ and offers detailed API documentation for each feature.

we-mp-rss

We-MP-RSS is a tool for subscribing to and managing WeChat official account content, providing RSS subscription functionality. It allows users to fetch and parse WeChat official account content, generate RSS feeds, manage subscriptions via a user-friendly web interface, automatically update content on a schedule, support multiple databases (default SQLite, optional MySQL), various fetching methods, multiple RSS clients, and expiration reminders for authorizations.

Chinese-Mixtral-8x7B

Chinese-Mixtral-8x7B is an open-source project based on Mistral's Mixtral-8x7B model for incremental pre-training of Chinese vocabulary, aiming to advance research on MoE models in the Chinese natural language processing community. The expanded vocabulary significantly improves the model's encoding and decoding efficiency for Chinese, and the model is pre-trained incrementally on a large-scale open-source corpus, enabling it with powerful Chinese generation and comprehension capabilities. The project includes a large model with expanded Chinese vocabulary and incremental pre-training code.

petercat

Peter Cat is an intelligent Q&A chatbot solution designed for community maintainers and developers. It provides a conversational Q&A agent configuration system, self-hosting deployment solutions, and a convenient integrated application SDK. Users can easily create intelligent Q&A chatbots for their GitHub repositories and quickly integrate them into various official websites or projects to provide more efficient technical support for the community.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

Langchain-Chatchat

LangChain-Chatchat is an open-source, offline-deployable retrieval-enhanced generation (RAG) large model knowledge base project based on large language models such as ChatGLM and application frameworks such as Langchain. It aims to establish a knowledge base Q&A solution that is friendly to Chinese scenarios, supports open-source models, and can run offline.

devops-gpt

DevOpsGPT is a revolutionary tool designed to streamline your workflow and empower you to build systems and automate tasks with ease. Tired of spending hours on repetitive DevOps tasks? DevOpsGPT is here to help! Whether you're setting up infrastructure, speeding up deployments, or tackling any other DevOps challenge, our app can make your life easier and more productive. With DevOpsGPT, you can expect faster task completion, simplified workflows, and increased efficiency. Ready to experience the DevOpsGPT difference? Visit our website, sign in or create an account, start exploring the features, and share your feedback to help us improve. DevOpsGPT will become an essential tool in your DevOps toolkit.

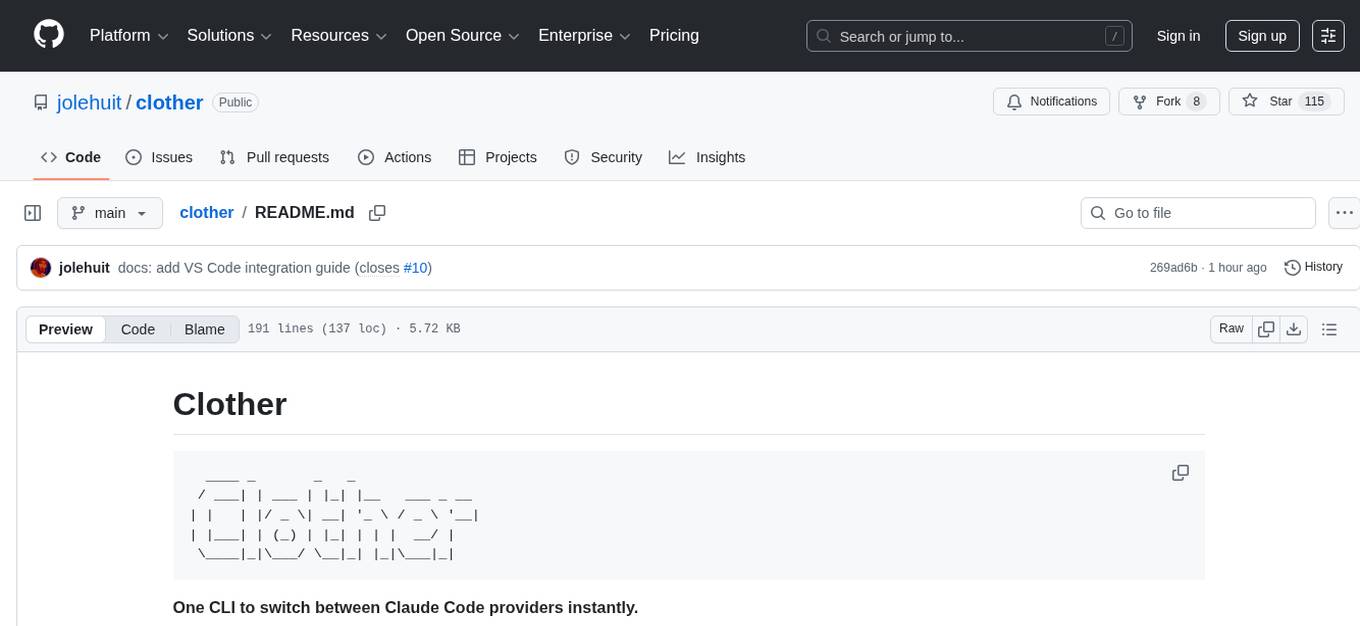

clother

Clother is a command-line tool that allows users to switch between different Claude Code providers instantly. It provides launchers for various cloud, open router, China endpoints, local, and custom providers, enabling users to configure, list profiles, test connectivity, check installation status, and uninstall. Users can also change the default model for each provider and troubleshoot common issues. Clother simplifies the management of API keys and installation directories, supporting macOS, Linux, and Windows (WSL) platforms. It is designed to streamline the workflow of interacting with different AI models and services.

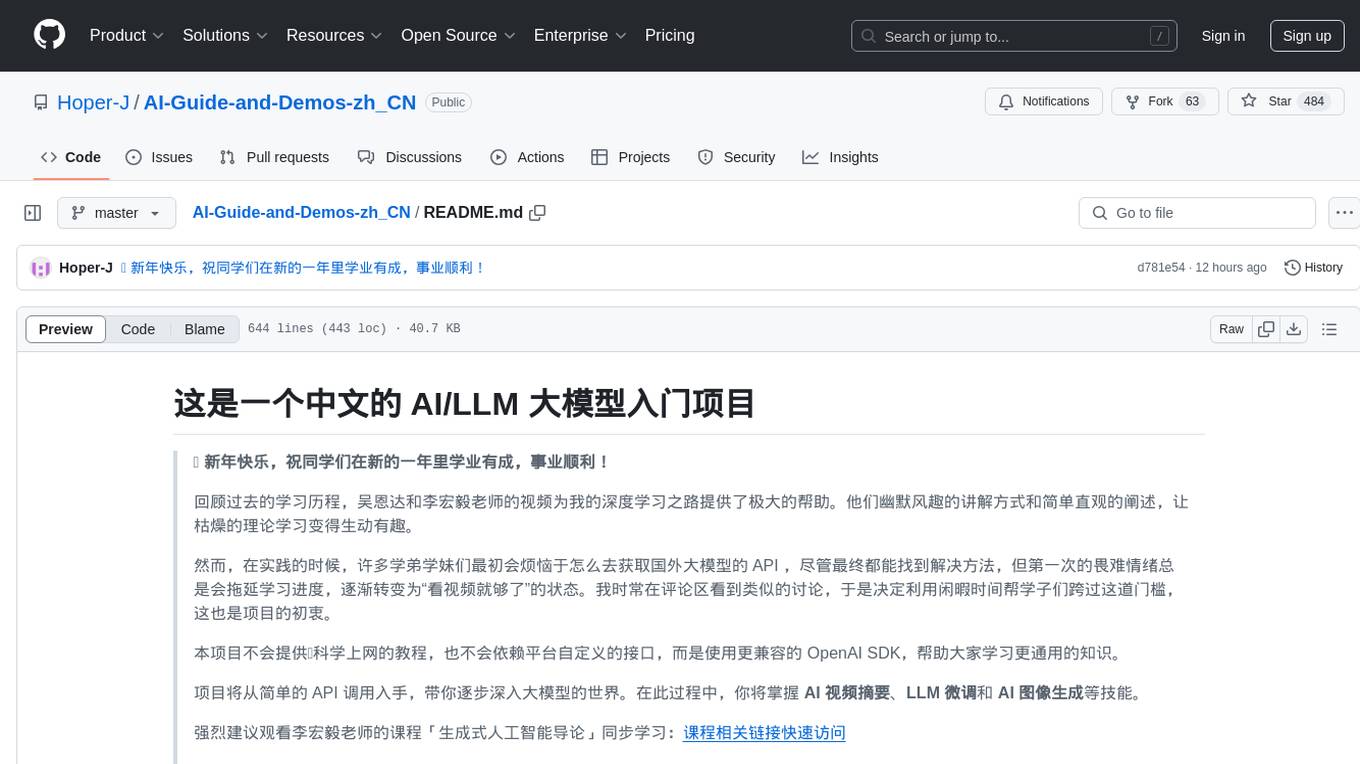

AI-Guide-and-Demos-zh_CN

This is a Chinese AI/LLM introductory project that aims to help students overcome the initial difficulties of accessing foreign large models' APIs. The project uses the OpenAI SDK to provide a more compatible learning experience. It covers topics such as AI video summarization, LLM fine-tuning, and AI image generation. The project also offers a CodePlayground for easy setup and one-line script execution to experience the charm of AI. It includes guides on API usage, LLM configuration, building AI applications with Gradio, customizing prompts for better model performance, understanding LoRA, and more.

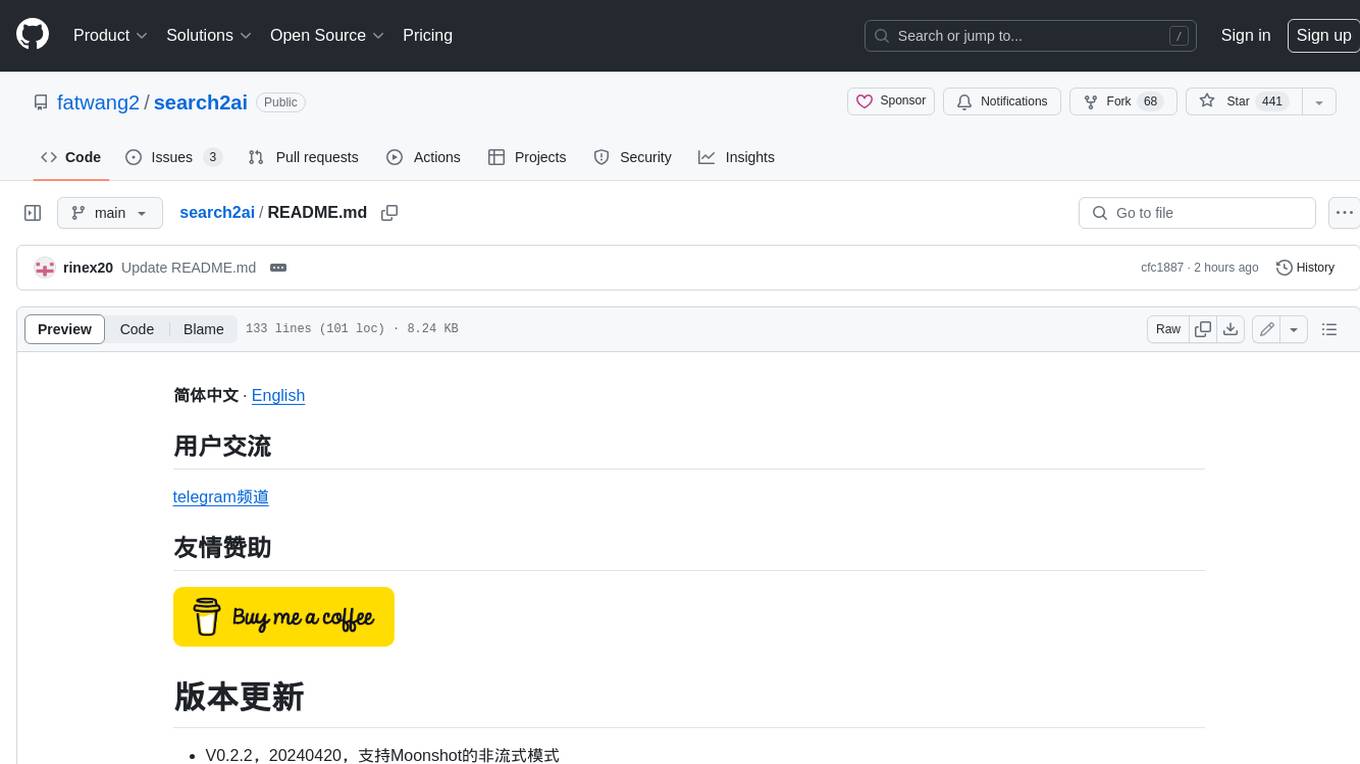

search2ai

S2A allows your large model API to support networking, searching, news, and web page summarization. It currently supports OpenAI, Gemini, and Moonshot (non-streaming). The large model will determine whether to connect to the network based on your input, and it will not connect to the network for searching every time. You don't need to install any plugins or replace keys. You can directly replace the custom address in your commonly used third-party client. You can also deploy it yourself, which will not affect other functions you use, such as drawing and voice.

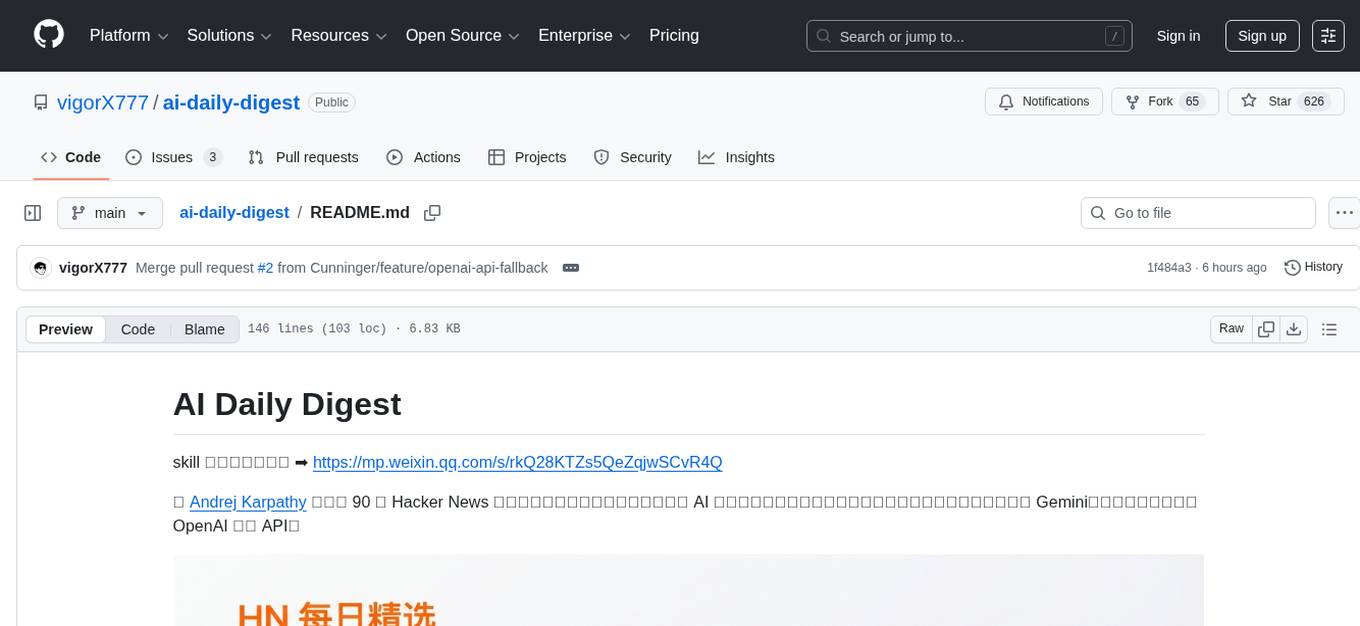

ai-daily-digest

AI Daily Digest is a tool that fetches the latest articles from the top 90 Hacker News technology blogs recommended by Andrej Karpathy. It uses AI multi-dimensional scoring to curate a structured daily digest. The tool supports Gemini by default and can automatically degrade to OpenAI compatible API. It offers a five-step processing pipeline including RSS fetching, time filtering, AI scoring and classification, AI summarization and translation, and trend summarization. The generated daily digest includes sections like today's highlights, must-read articles, data overview, and categorized article lists. The tool is designed to be dependency-free, bilingual, with structured summaries, visual statistics, intelligent categorization, trend insights, and persistent configuration memory.

For similar tasks

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Both paradigms benefit from the integration: Chat BI's Text2SQL is augmented with context retrieval from semantic models, and Headless BI's query interface is extended with a natural-language API. SuperSonic provides a Chat BI interface that lets users query data in natural language and visualize the results with suitable charts. The only requirement is to build logical semantic models (definitions of metrics, dimensions, and tags, along with their meanings and relationships) through a Headless BI interface. SuperSonic is also designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI can enhance Text2SQL generation in two ways: (1) incorporating data semantics (such as business terms and column values) into the prompt, so that the LLM better understands the semantics and hallucinates less; and (2) offloading the generation of advanced SQL syntax (such as joins and formulas) from the LLM to the semantic layer to reduce complexity. With these ideas in mind, we developed SuperSonic as a practical reference implementation and use it to power our real-world products. To facilitate further development, we decided to open-source SuperSonic as an extensible framework.

chat-ollama

ChatOllama is an open-source chatbot based on LLMs (Large Language Models). It supports a wide range of language models, including Ollama served models, OpenAI, Azure OpenAI, and Anthropic. ChatOllama supports multiple types of chat, including free chat with LLMs and chat with LLMs based on a knowledge base. Key features of ChatOllama include Ollama models management, knowledge bases management, chat, and commercial LLMs API keys management.

ChatIDE

ChatIDE is an AI assistant that integrates with your IDE, allowing you to converse with OpenAI's ChatGPT or Anthropic's Claude within your development environment. It provides a seamless way to access AI-powered assistance while coding, enabling you to get real-time help, generate code snippets, debug errors, and brainstorm ideas without leaving your IDE.

azure-search-openai-javascript

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access the ChatGPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval.


googlegpt

GoogleGPT is a browser extension that brings the power of ChatGPT to Google Search. With GoogleGPT, you can ask ChatGPT questions and get answers directly in your search results. You can also use GoogleGPT to generate text, translate languages, and more. GoogleGPT is compatible with all major browsers, including Chrome, Firefox, Edge, and Safari.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g. a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.