dbhub

Universal database MCP server connecting to MySQL, PostgreSQL, SQL Server, SQLite, and more.

Stars: 76

DBHub is a universal database gateway that implements the Model Context Protocol (MCP) server interface. It allows MCP-compatible clients to connect to and explore different databases. The gateway supports various database resources and tools, providing capabilities such as executing queries, listing connectors, generating SQL, and explaining database elements. Users can easily configure their database connections and choose between different transport modes like stdio and sse. DBHub also offers a demo mode with a sample employee database for testing purposes.

README:

DBHub is a universal database gateway implementing the Model Context Protocol (MCP) server interface. This gateway allows MCP-compatible clients to connect to and explore different databases.

+------------------+ +--------------+ +------------------+

| | | | | |

| | | | | |

| Claude Desktop +--->+ +--->+ PostgreSQL |

| | | | | |

| Cursor +--->+ DBHub +--->+ SQL Server |

| | | | | |

| Other MCP +--->+ +--->+ SQLite |

| Clients | | | | |

| | | +--->+ MySQL |

| | | | | |

| | | +--->+ Other Databases |

| | | | | |

+------------------+ +--------------+ +------------------+

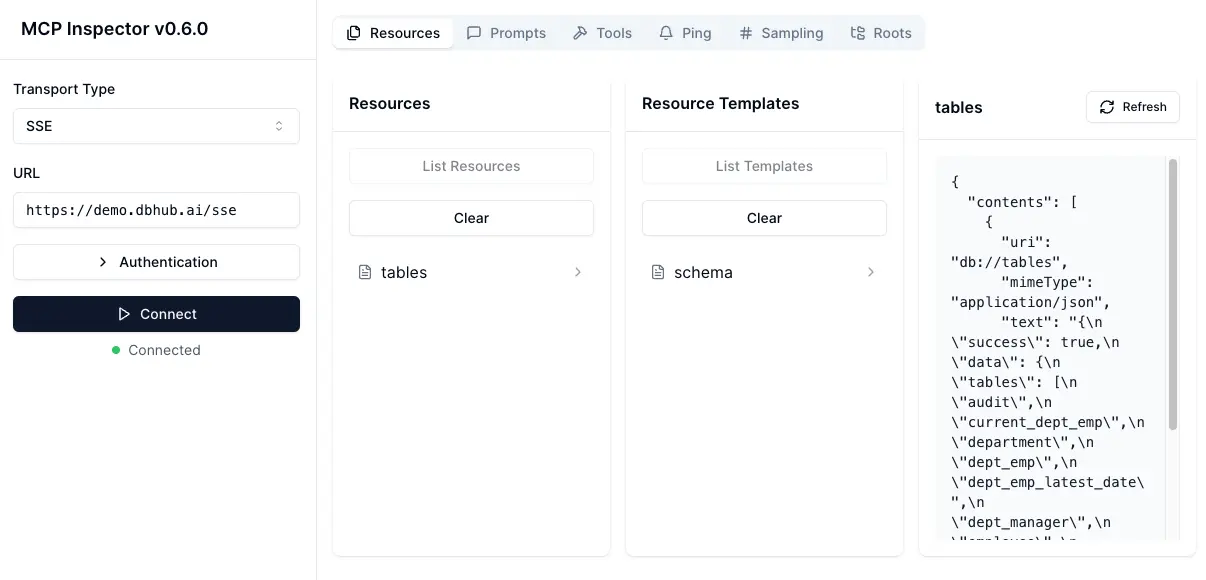

MCP Clients MCP Server Databaseshttps://demo.dbhub.ai/sse connects a sample employee database. You can point Cursor or MCP Inspector to it to see it in action.

| Resource Name | URI Format | PostgreSQL | MySQL | SQL Server | SQLite |
|---|---|---|---|---|---|
| schemas | db://schemas | ✅ | ✅ | ✅ | ✅ |
| tables_in_schema | db://schemas/{schemaName}/tables | ✅ | ✅ | ✅ | ✅ |
| table_structure_in_schema | db://schemas/{schemaName}/tables/{tableName} | ✅ | ✅ | ✅ | ✅ |
| indexes_in_table | db://schemas/{schemaName}/tables/{tableName}/indexes | ✅ | ✅ | ✅ | ✅ |
| procedures_in_schema | db://schemas/{schemaName}/procedures | ✅ | ✅ | ✅ | ❌ |
| procedure_details_in_schema | db://schemas/{schemaName}/procedures/{procedureName} | ✅ | ✅ | ✅ | ❌ |
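The URI formats in the resource table are templates with `{placeholder}` segments. As a minimal sketch of how a client might fill one in, the helper below substitutes named values into a template. `expandResourceUri` is a hypothetical name used only for illustration and is not part of DBHub; the schema and table names are example values.

```typescript
// Hypothetical helper (not part of DBHub): fills {placeholders} in a resource URI template.
function expandResourceUri(template: string, params: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, function (_match: string, name: string): string {
    const value: string | undefined = params[name];
    if (value === undefined) {
      // Fail loudly rather than emit a URI with an unfilled placeholder.
      throw new Error("Missing value for placeholder: " + name);
    }
    return value;
  });
}

// "public" and "employees" are example values chosen for illustration.
const indexesUri: string = expandResourceUri(
  "db://schemas/{schemaName}/tables/{tableName}/indexes",
  { schemaName: "public", tableName: "employees" }
);
```

Here `indexesUri` holds the URI for the indexes of the `employees` table in the `public` schema. Whether names containing special characters need escaping is not stated in the README, so the sketch leaves values untouched.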

| Tool | Command Name | PostgreSQL | MySQL | SQL Server | SQLite |
|---|---|---|---|---|---|
| Execute Query | run_query | ✅ | ✅ | ✅ | ✅ |
| List Connectors | list_connectors | ✅ | ✅ | ✅ | ✅ |

| Prompt | Command Name | PostgreSQL | MySQL | SQL Server | SQLite |
|---|---|---|---|---|---|
| Generate SQL | generate_sql | ✅ | ✅ | ✅ | ✅ |
| Explain DB Elements | explain_db | ✅ | ✅ | ✅ | ✅ |

# PostgreSQL example
docker run --rm --init \
   --name dbhub \
   --publish 8080:8080 \
   bytebase/dbhub \
   --transport sse \
   --port 8080 \
   --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

# Demo mode with sample employee database
docker run --rm --init \
   --name dbhub \
   --publish 8080:8080 \
   bytebase/dbhub \
   --transport sse \
   --port 8080 \
   --demo

# PostgreSQL example
npx @bytebase/dbhub --transport sse --port 8080 --dsn "postgres://user:password@localhost:5432/dbname"

# Demo mode with sample employee database
npx @bytebase/dbhub --transport sse --port 8080 --demo

Note: The demo mode includes a bundled SQLite sample "employee" database with tables for employees, departments, salaries, and more.

- Claude Desktop only supports

stdiotransport https://github.com/orgs/modelcontextprotocol/discussions/16

// claude_desktop_config.json

{

"mcpServers": {

"dbhub-postgres-docker": {

"command": "docker",

"args": [

"run",

"-i",

"--rm",

"bytebase/dbhub",

"--transport",

"stdio",

"--dsn",

// Use host.docker.internal as the host if connecting to the local db

"postgres://user:[email protected]:5432/dbname?sslmode=disable"

]

},

"dbhub-postgres-npx": {

"command": "npx",

"args": [

"-y",

"@bytebase/dbhub",

"--transport",

"stdio",

"--dsn",

"postgres://user:password@localhost:5432/dbname?sslmode=disable"

]

},

"dbhub-demo": {

"command": "npx",

"args": ["-y", "@bytebase/dbhub", "--transport", "stdio", "--demo"]

}

}

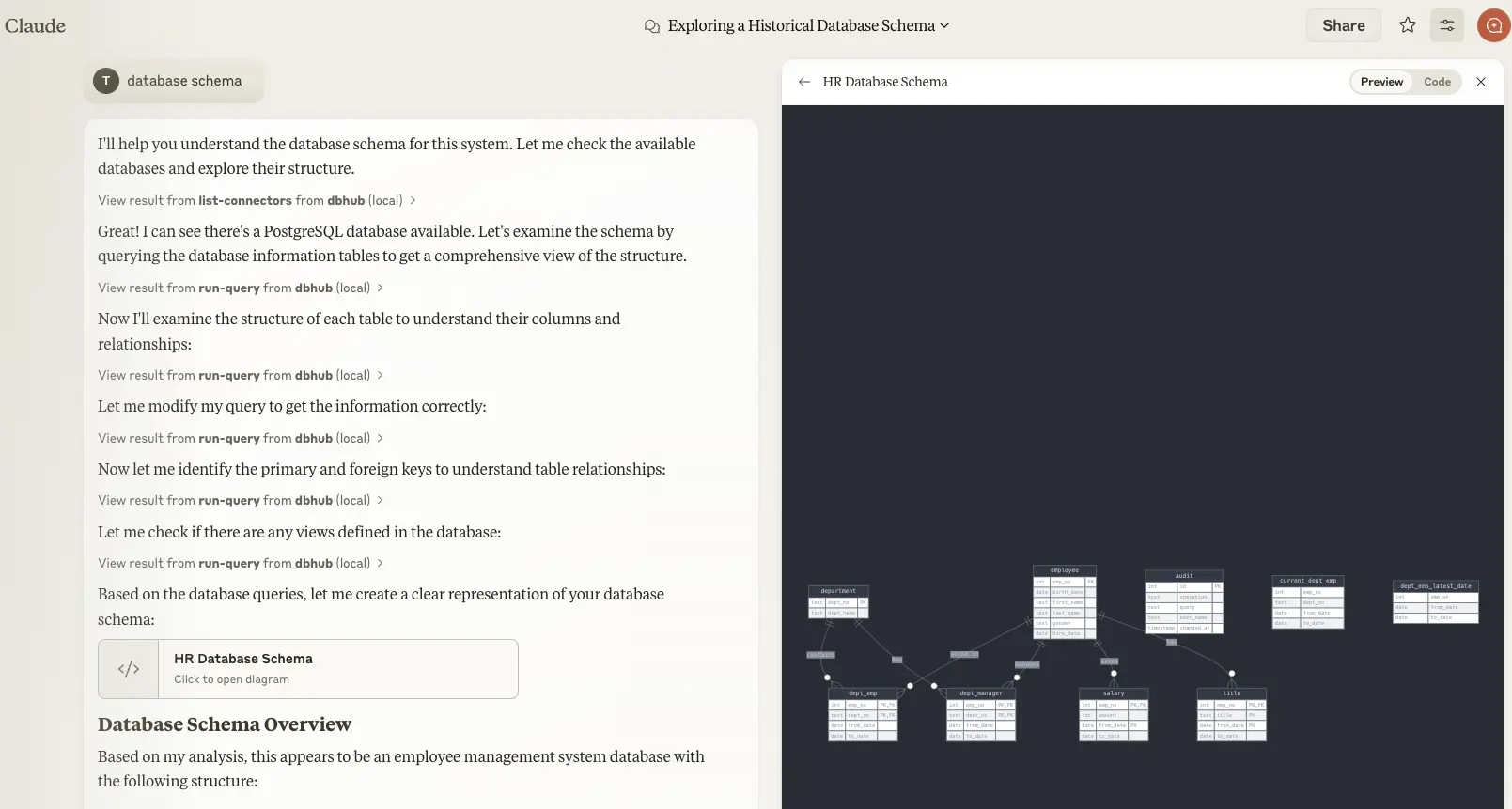

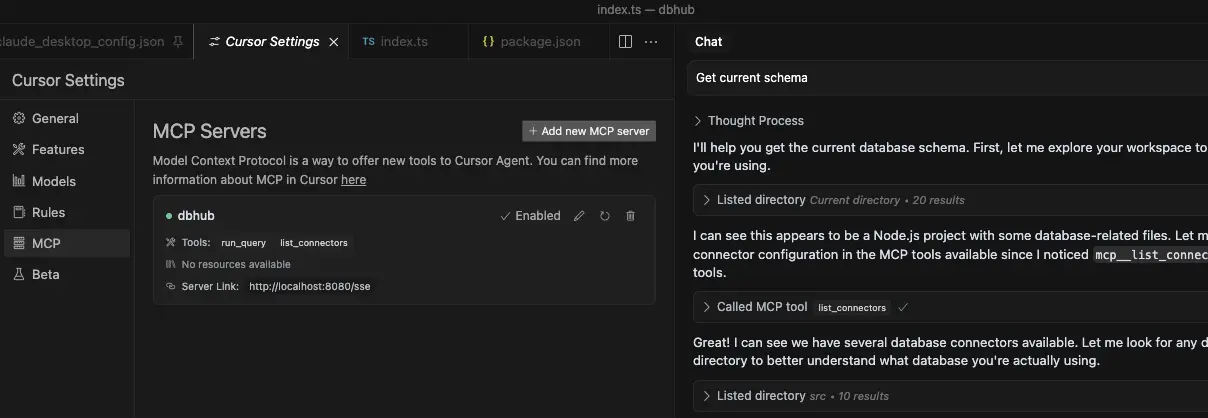

}- Cursor supports both

stdioandsse. - Follow Cursor MCP guide and make sure to use Agent mode.

You can use DBHub in demo mode with a sample employee database for testing:

pnpm dev --demo

For real databases, a Data Source Name (DSN) is required. You can provide this in several ways:

- Command line argument (highest priority):

  pnpm dev --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

- Environment variable (second priority):

  export DSN="postgres://user:password@localhost:5432/dbname?sslmode=disable"
  pnpm dev

- Environment file (third priority):

  - For development: Create .env.local with your DSN
  - For production: Create .env with your DSN

  DSN=postgres://user:password@localhost:5432/dbname?sslmode=disable
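The priority order above (command line argument, then environment variable, then environment file) can be sketched as a small lookup. This is an illustration of the documented order only, not DBHub's actual implementation; `DsnSources` and `resolveDsn` are hypothetical names, and treating blank strings as unset is an assumption.

```typescript
// Hypothetical shape (not DBHub's actual API): the three places a DSN may come from.
interface DsnSources {
  cliArg?: string;  // value passed via --dsn
  envVar?: string;  // value of the DSN environment variable
  envFile?: string; // DSN entry read from .env.local or .env
}

// Returns the highest-priority DSN that is set, or undefined if none is.
function resolveDsn(sources: DsnSources): string | undefined {
  const byPriority: Array<string | undefined> = [
    sources.cliArg,
    sources.envVar,
    sources.envFile
  ];
  for (let i = 0; i < byPriority.length; i++) {
    const candidate = byPriority[i];
    // Assumption: an empty or whitespace-only value counts as "not provided".
    if (candidate !== undefined && candidate.trim() !== "") {
      return candidate;
    }
  }
  return undefined;
}

// No command line argument is given, so the environment variable wins over the file.
const resolvedDsn = resolveDsn({
  envVar: "postgres://user:password@localhost:5432/dbname?sslmode=disable",
  envFile: "sqlite::memory:"
});
```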

Warning: When running in Docker, use host.docker.internal instead of localhost to connect to databases running on your host machine. For example: mysql://user:password@host.docker.internal:3306/dbname
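As a rough sketch of the substitution the warning describes, the helper below rewrites a loopback host in a DSN to host.docker.internal. `toDockerHostDsn` is a hypothetical name, not a DBHub function, and the sketch assumes the DSN carries credentials, so that the host follows an `@`.

```typescript
// Hypothetical helper (not part of DBHub): swaps a loopback host for host.docker.internal.
// Assumption: the DSN has the form scheme://user:password@host..., so the host follows "@".
function toDockerHostDsn(dsn: string): string {
  // The lookahead requires ":", "/", "?" or end of string after the host,
  // so names such as "localhost.example.com" are left alone.
  return dsn.replace(/@(localhost|127\.0\.0\.1)(?=[:\/?]|$)/, "@host.docker.internal");
}

const dockerDsn = toDockerHostDsn("mysql://user:password@localhost:3306/dbname");
```

DSNs that point at a remote host pass through unchanged, so the helper can be applied unconditionally before starting the container.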

DBHub supports the following database connection string formats:

| Database | DSN Format | Example |
|---|---|---|
| PostgreSQL | postgres://[user]:[password]@[host]:[port]/[database] | postgres://user:password@localhost:5432/dbname?sslmode=disable |
| SQLite | sqlite:///[path/to/file] or sqlite::memory: | sqlite:///path/to/database.db or sqlite::memory: |
| SQL Server | sqlserver://[user]:[password]@[host]:[port]/[database] | sqlserver://user:password@localhost:1433/dbname |
| MySQL | mysql://[user]:[password]@[host]:[port]/[database] | mysql://user:password@localhost:3306/dbname |
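Each format in the table starts with a distinct scheme, so the database type can be read off the text before the first colon. The following is a minimal sketch under that assumption; `DatabaseKind` and `databaseKindFromDsn` are hypothetical names and do not reflect how DBHub selects a connector internally.

```typescript
// Hypothetical type (not DBHub's API): the four schemes listed in the table above.
type DatabaseKind = "postgres" | "sqlite" | "sqlserver" | "mysql";

// Returns the database kind implied by the DSN scheme, or undefined for unknown schemes.
function databaseKindFromDsn(dsn: string): DatabaseKind | undefined {
  // Capture the lowercase scheme before the first ":"; this also covers "sqlite::memory:".
  const match = /^([a-z]+):/.exec(dsn);
  if (match === null) {
    return undefined;
  }
  const scheme = match[1];
  switch (scheme) {
    case "postgres":
      return "postgres";
    case "sqlite":
      return "sqlite";
    case "sqlserver":
      return "sqlserver";
    case "mysql":
      return "mysql";
    default:
      return undefined;
  }
}

const inMemoryKind = databaseKindFromDsn("sqlite::memory:");
```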

- stdio (default) - for direct integration with tools like Claude Desktop:

  npx @bytebase/dbhub --transport stdio --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

- sse - for browser and network clients:

  npx @bytebase/dbhub --transport sse --port 5678 --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

| Option | Description | Default |
|---|---|---|
| demo | Run in demo mode with sample employee database | false |
| dsn | Database connection string | Required if not in demo mode |
| transport | Transport mode: stdio or sse | stdio |
| port | HTTP server port (only applicable when using --transport=sse) | 8080 |
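The defaults and constraints in the options table can be summarised in code. The sketch below is one possible reading of the table, not DBHub's actual option handling: `DbhubCliOptions`, `ResolvedOptions`, and `applyOptionDefaults` are hypothetical names, and leaving the port unset for stdio is an assumption drawn from the "only applicable" note.

```typescript
// Hypothetical types (not DBHub's API), mirroring the rows of the options table.
interface DbhubCliOptions {
  demo?: boolean;
  dsn?: string;
  transport?: string;
  port?: number;
}

interface ResolvedOptions {
  demo: boolean;
  dsn: string | undefined;
  transport: "stdio" | "sse";
  port: number | undefined;
}

// Applies the documented defaults and rejects combinations the table rules out.
function applyOptionDefaults(options: DbhubCliOptions): ResolvedOptions {
  const demo: boolean = options.demo === true; // default: false

  let transport: "stdio" | "sse";
  if (options.transport === undefined || options.transport === "stdio") {
    transport = "stdio"; // default transport
  } else if (options.transport === "sse") {
    transport = "sse";
  } else {
    throw new Error("transport must be stdio or sse, got: " + options.transport);
  }

  // A DSN is required unless demo mode is on.
  if (!demo && (options.dsn === undefined || options.dsn === "")) {
    throw new Error("dsn is required when not in demo mode");
  }

  // Assumption: the port is only meaningful for sse, where it defaults to 8080.
  let port: number | undefined = undefined;
  if (transport === "sse") {
    port = options.port === undefined ? 8080 : options.port;
  }

  return { demo: demo, dsn: options.dsn, transport: transport, port: port };
}

const demoDefaults = applyOptionDefaults({ demo: true });
```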

The demo mode uses an in-memory SQLite database loaded with the sample employee database that includes tables for employees, departments, titles, salaries, department employees, and department managers. The sample database includes SQL scripts for table creation, data loading, and testing.

- Install dependencies:

  pnpm install

- Run in development mode:

  pnpm dev

- Build for production:

  pnpm build
  pnpm start --transport stdio --dsn "postgres://user:password@localhost:5432/dbname?sslmode=disable"

Debug with MCP Inspector

# PostgreSQL example
TRANSPORT=stdio DSN="postgres://user:password@localhost:5432/dbname?sslmode=disable" npx @modelcontextprotocol/inspector node /path/to/dbhub/dist/index.js

# Start DBHub with SSE transport
pnpm dev --transport=sse --port=8080

# Start the MCP Inspector in another terminal
npx @modelcontextprotocol/inspector

Connect to the DBHub server /sse endpoint.

Alternative AI tools for dbhub

Similar Open Source Tools

clother

Clother is a command-line tool that allows users to switch between different Claude Code providers instantly. It provides launchers for various cloud, open router, China endpoints, local, and custom providers, enabling users to configure, list profiles, test connectivity, check installation status, and uninstall. Users can also change the default model for each provider and troubleshoot common issues. Clother simplifies the management of API keys and installation directories, supporting macOS, Linux, and Windows (WSL) platforms. It is designed to streamline the workflow of interacting with different AI models and services.

LightMem

LightMem is a lightweight and efficient memory management framework designed for Large Language Models and AI Agents. It provides a simple yet powerful memory storage, retrieval, and update mechanism to help you quickly build intelligent applications with long-term memory capabilities. The framework is minimalist in design, ensuring minimal resource consumption and fast response times. It offers a simple API for easy integration into applications with just a few lines of code. LightMem's modular architecture supports custom storage engines and retrieval strategies, making it flexible and extensible. It is compatible with various cloud APIs like OpenAI and DeepSeek, as well as local models such as Ollama and vLLM.

pup

Pup is a Go-based command-line wrapper designed for easy interaction with Datadog APIs. It provides a fast, cross-platform binary with support for OAuth2 authentication and traditional API key authentication. The tool offers simple commands for common Datadog operations, structured JSON output for parsing and automation, and dynamic client registration with unique OAuth credentials per installation. Pup currently implements 38 out of 85+ available Datadog APIs, covering core observability, monitoring & alerting, security & compliance, infrastructure & cloud, incident & operations, CI/CD & development, organization & access, and platform & configuration domains. Users can easily install Pup via Homebrew, Go Install, or manual download, and authenticate using OAuth2 or API key methods. The tool supports various commands for tasks such as testing connection, managing monitors, querying metrics, handling dashboards, working with SLOs, and handling incidents.

shodh-memory

Shodh-Memory is a cognitive memory system designed for AI agents to persist memory across sessions, learn from experience, and run entirely offline. It features Hebbian learning, activation decay, and semantic consolidation, packed into a single ~17MB binary. Users can deploy it on cloud, edge devices, or air-gapped systems to enhance the memory capabilities of AI agents.

apidash

API Dash is an open-source cross-platform API Client that allows users to easily create and customize API requests, visually inspect responses, and generate API integration code. It supports various HTTP methods, GraphQL requests, and multimedia API responses. Users can organize requests in collections, preview data in different formats, and generate code for multiple languages. The tool also offers dark mode support, data persistence, and various customization options.

llmio

LLMIO is a Go-based LLM load balancing gateway that provides a unified REST API, weight scheduling, logging, and modern management interface for your LLM clients. It helps integrate different model capabilities from OpenAI, Anthropic, Gemini, and more in a single service. Features include unified API compatibility, weight scheduling with two strategies, visual management dashboard, rate and failure handling, and local persistence with SQLite. The tool supports multiple vendors' APIs and authentication methods, making it versatile for various AI model integrations.

react-native-nitro-mlx

The react-native-nitro-mlx repository allows users to run LLMs, Text-to-Speech, and Speech-to-Text on-device in React Native using MLX Swift. It provides functionalities for downloading models, loading and generating responses, streaming audio, text-to-speech, and speech-to-text capabilities. Users can interact with various MLX-compatible models from Hugging Face, with pre-defined models available for convenience. The repository supports iOS 26.0+ and offers detailed API documentation for each feature.

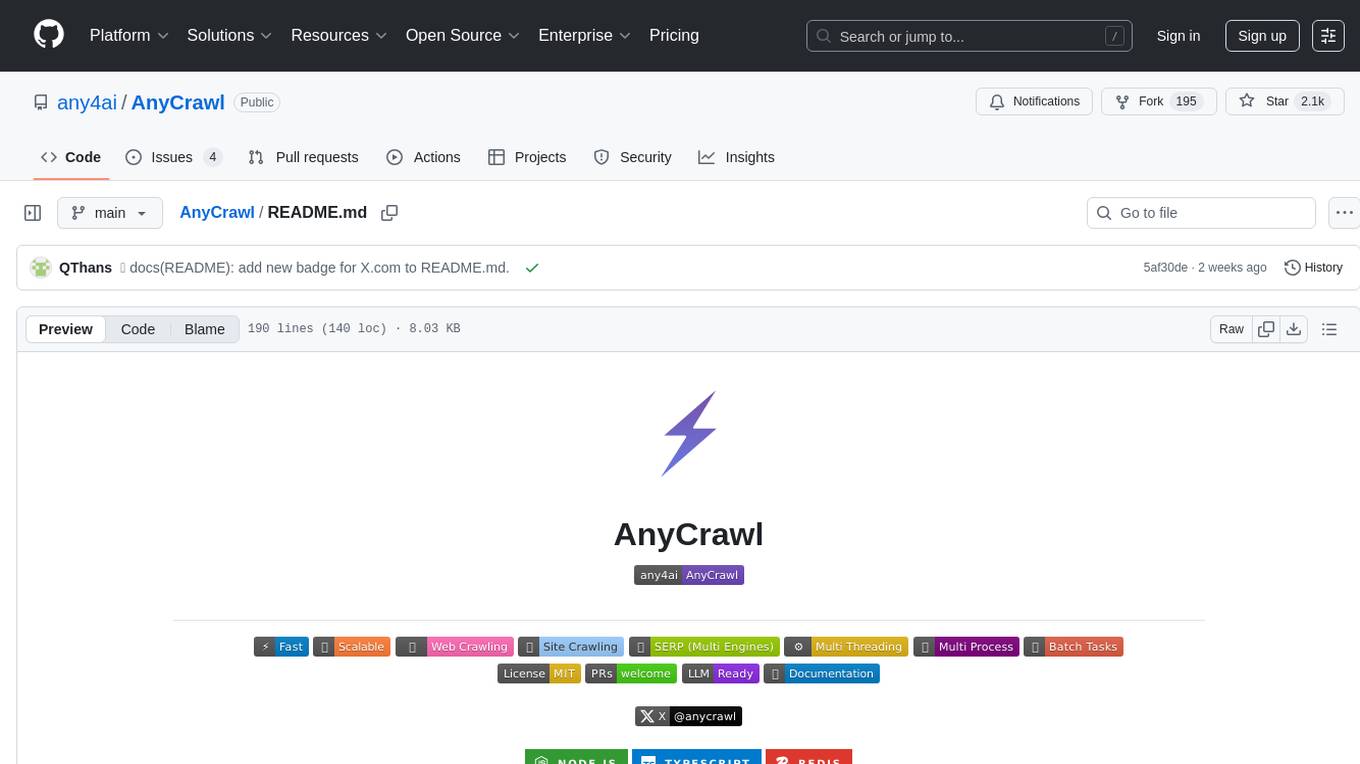

AnyCrawl

AnyCrawl is a high-performance crawling and scraping toolkit designed for SERP crawling, web scraping, site crawling, and batch tasks. It offers multi-threading and multi-process capabilities for high performance. The tool also provides AI extraction for structured data extraction from pages, making it LLM-friendly and easy to integrate and use.

aio-dynamic-push

Aio-dynamic-push is a tool that integrates multiple platforms for dynamic/live streaming alerts detection and push notifications. It currently supports platforms such as Bilibili, Weibo, Xiaohongshu, and Douyin. Users can configure different tasks and push channels in the config file to receive notifications. The tool is designed to simplify the process of monitoring and receiving alerts from various social media platforms, making it convenient for users to stay updated on their favorite content creators or accounts.

llm.nvim

llm.nvim is a universal plugin for a large language model (LLM) designed to enable users to interact with LLM within neovim. Users can customize various LLMs such as gpt, glm, kimi, and local LLM. The plugin provides tools for optimizing code, comparing code, translating text, and more. It also supports integration with free models from Cloudflare, Github models, siliconflow, and others. Users can customize tools, chat with LLM, quickly translate text, and explain code snippets. The plugin offers a flexible window interface for easy interaction and customization.

ReGraph

ReGraph is a decentralized AI compute marketplace that connects hardware providers with developers who need inference and training resources. It democratizes access to AI computing power by creating a global network of distributed compute nodes. It is cost-effective, decentralized, easy to integrate, supports multiple models, and offers pay-as-you-go pricing.

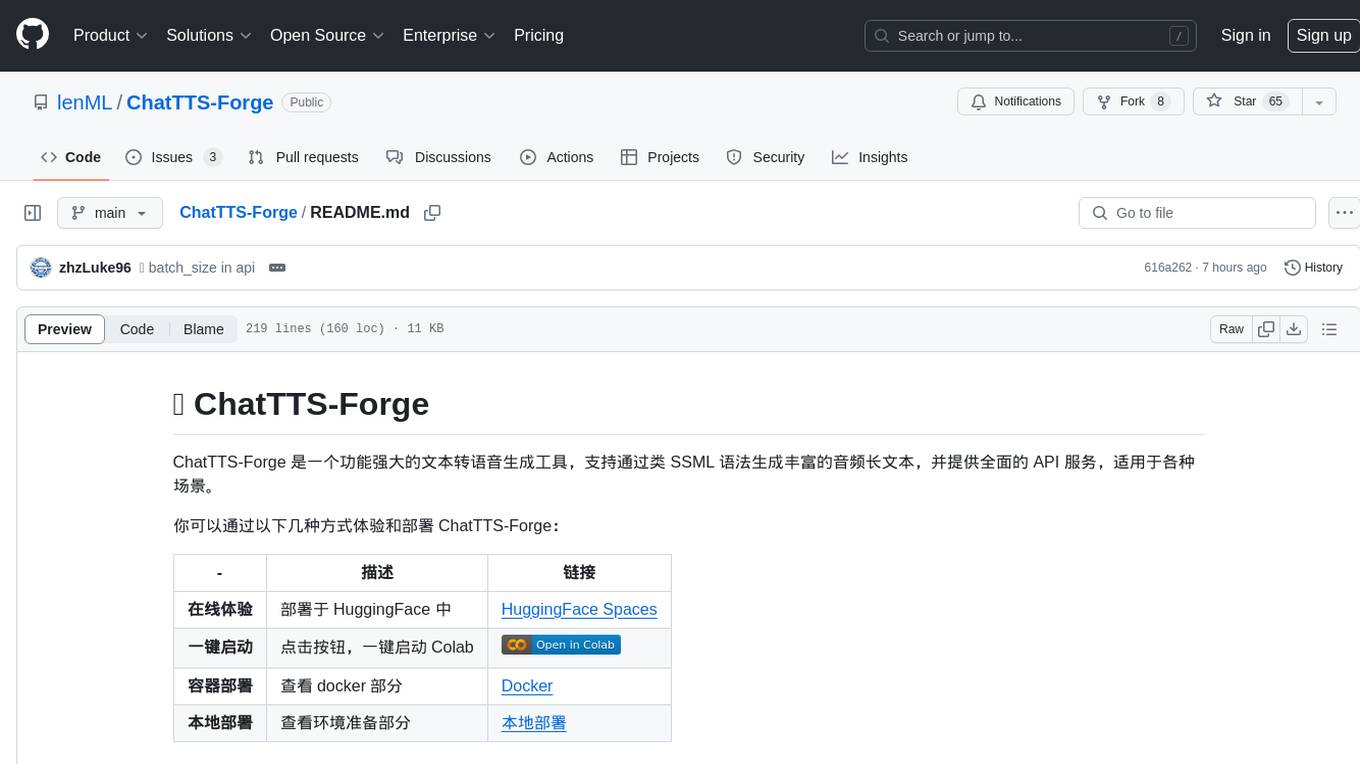

ChatTTS-Forge

ChatTTS-Forge is a powerful text-to-speech generation tool that supports generating rich long-form audio using an SSML-like syntax and provides comprehensive API services, suitable for various scenarios. It offers features such as batch generation, support for generating super long texts, style prompt injection, full API services, user-friendly debugging GUI, OpenAI-style API, Google-style API, support for SSML-like syntax, speaker management, style management, independent refine API, text normalization optimized for ChatTTS, and automatic detection and processing of markdown format text. The tool can be experienced and deployed online through HuggingFace Spaces, launched with one click on Colab, deployed using containers, or locally deployed after cloning the project, preparing models, and installing necessary dependencies.

airport

The 'airport' repository provides free Clash Meta nodes sourced from the internet, with testing every 6 hours to ensure quality and low latency. It includes features such as node deduplication, regional renaming, and geographical grouping.

aikit

AIKit is a comprehensive platform for hosting, deploying, building, and fine-tuning large language models (LLMs). It offers inference using LocalAI, extensible fine-tuning interface, and OCI packaging for distributing models. AIKit supports various models, multi-modal model and image generation, Kubernetes deployment, and supply chain security. It can run on AMD64 and ARM64 CPUs, NVIDIA GPUs, and Apple Silicon (experimental). Users can quickly get started with AIKit without a GPU and access pre-made models. The platform is OpenAI API compatible and provides easy-to-use configuration for inference and fine-tuning.

fittencode.nvim

Fitten Code AI Programming Assistant for Neovim provides fast completion using AI, asynchronous I/O, and support for various actions like document code, edit code, explain code, find bugs, generate unit test, implement features, optimize code, refactor code, start chat, and more. It offers features like accepting suggestions with Tab, accepting line with Ctrl + Down, accepting word with Ctrl + Right, undoing accepted text, automatic scrolling, and multiple HTTP/REST backends. It can run as a coc.nvim source or nvim-cmp source.

For similar tasks

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

aiosql

aiosql is a Python module that allows you to organize SQL statements in .sql files and load them into your Python application as methods to call. It supports various database drivers like SQLite, PostgreSQL, MySQL, MariaDB, and DuckDB. The project is an implementation of Kris Jenkins' yesql library to the Python ecosystem, allowing users to easily reuse SQL code in SQL GUIs or CLI tools. With aiosql, you can write, version control, comment, and run SQL code using files without losing the ability to use them as you would any other SQL file. It provides support for PEP 249 and asyncio based drivers, enabling users to execute parametric SQL queries from Python methods.

mcp-llm-bridge

The MCP LLM Bridge is a tool that acts as a bridge connecting Model Context Protocol (MCP) servers to OpenAI-compatible LLMs. It provides a bidirectional protocol translation layer between MCP and OpenAI's function-calling interface, enabling any OpenAI-compatible language model to leverage MCP-compliant tools through a standardized interface. The tool supports primary integration with the OpenAI API and offers additional compatibility for local endpoints that implement the OpenAI API specification. Users can configure the tool for different endpoints and models, facilitating the execution of complex queries and tasks using cloud-based or local models like Ollama and LM Studio.

mysql_mcp_server

A Model Context Protocol (MCP) server that enables secure interaction with MySQL databases. This server allows AI assistants to list tables, read data, and execute SQL queries through a controlled interface, making database exploration and analysis safer and more structured. It provides features such as listing available MySQL tables as resources, reading table contents, executing SQL queries with proper error handling, secure database access through environment variables, and comprehensive logging. The tool ensures security best practices by never committing environment variables or credentials, using a database user with minimal required permissions, implementing query whitelisting for production use, and monitoring and logging all database operations.

mcp-graphql

mcp-graphql is a Model Context Protocol server that enables Large Language Models (LLMs) to interact with GraphQL APIs. It provides schema introspection and query execution capabilities, allowing models to dynamically discover and use GraphQL APIs. The server offers tools for retrieving the GraphQL schema and executing queries against the endpoint. Mutations are disabled by default for security reasons. Users can install mcp-graphql via Smithery or manually to Claude Desktop. It is recommended to carefully consider enabling mutations in production environments to prevent unauthorized data modifications.

boost

Laravel Boost accelerates AI-assisted development by providing essential context and structure for generating high-quality, Laravel-specific code. It includes an MCP server with specialized tools, AI guidelines, and a Documentation API. Boost is designed to streamline AI-assisted coding workflows by offering precise, context-aware results and extensive Laravel-specific information.

AskDB

AskDB is a revolutionary application that simplifies the way users interact with SQL databases. It allows users to query databases in plain English, provides instant answers, and offers AI-assisted query writing and database exploration. AskDB benefits business analysts, data scientists, managers, developers, and database administrators by making querying databases intuitive, effortless, and safe. It offers features like natural language querying, instant insight from data, multi-database connectivity, intelligent query suggestions, data privacy, and easy data export.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.