OneClickLLAMA

一键运行 Qwen2.5 SakuraLLM 等本地 LLM 模型

Stars: 175

OneClickLLAMA is a tool designed to run local LLM models such as Qwen2.5 and SakuraLLM with ease. It can be used in conjunction with various OpenAI format translators and analyzers, including LinguaGacha and KeywordGacha. By following the setup guides provided on the page, users can optimize performance and achieve a 3-5 times speed improvement compared to default settings. The tool requires a minimum of 8GB dedicated graphics memory, preferably NVIDIA, and the latest version of graphics drivers installed. Users can download the tool from the release page, choose the appropriate model based on usage and memory size, and start the tool by selecting the corresponding launch script.

README:

- 一键运行 Qwen2.5 SakuraLLM 等本地 LLM 模型

- 可与众多支持 OpenAI 格式的翻译器、分析器应用搭配使用,包括但是不限于:

-

LinguaGacha

使用 AI 能力一键翻译小说、游戏、字幕的次世代翻译器推荐👈👈 -

KeywordGacha

使用 AI 能力一键生成术语表的次世代翻译辅助工具推荐👈👈 - AiNiee

- GalTransl

- 绿站(轻小说翻译机器人)

-

LinguaGacha

- 配合本页中的各应用的设置指南,可以得到最优化的性能,相较于默认设置可提升 3-5 倍

- 至少 8G 显存的独立显卡,NVIDIA 显卡最佳,其他显卡很慢

- 确保安装了

最新版本的显卡驱动程序

-

从 发布页 下载最新版本的

OneClickLLAMA并解压缩-

OneClickLLAMA_NV是 NVIDIA 专用的版本 -

OneClickLLAMA_VULKAN是 所有显卡 通用的版本

-

-

根据用途和显存大小下载适合的模型并放入

OneClickLLAMA文件夹 -

日文翻译到中文

| 显存大小 | 模型规模 | 启动脚本 | 下载链接 |

|---|---|---|---|

| 8G/10G | 7B | 01_1280_NP16.bat | sakura-7b-qwen2.5-v1.0-iq4xs.gguf |

| 11G | 14B | 01_1280_NP4.bat | sakura-14b-qwen2.5-v1.0-iq4xs.gguf |

| 12G | 14B | 01_1280_NP6.bat | sakura-14b-qwen2.5-v1.0-iq4xs.gguf |

| 16G | 14B | 01_1280_NP16.bat | sakura-14b-qwen2.5-v1.0-iq4xs.gguf |

| 24G | 14B | 01_1280_NP16.bat | sakura-14b-qwen2.5-v1.0-q6k.gguf |

- 其他语言翻译到中文(7B 效果很差,14B 勉勉强强,最好使用在线接口)

| 显存大小 | 模型规模 | 启动脚本 | 下载链接 |

|---|---|---|---|

| 8G/10G | 7B | 01_1280_NP16.bat | Qwen2.5-7B-Instruct-IQ4_XS.gguf |

| 11G | 14B | 01_1280_NP4.bat | Qwen2.5-14B-Instruct-IQ4_XS.gguf |

| 12G | 14B | 01_1280_NP6.bat | Qwen2.5-14B-Instruct-IQ4_XS.gguf |

| 16G | 14B | 01_1280_NP16.bat | Qwen2.5-14B-Instruct-IQ4_XS.gguf |

| 24G | 14B | 01_1280_NP16.bat | Qwen2.5-14B-Instruct-Q6_K.gguf |

- 搭配 KeywordGacha 抓取实体词语表

| 显存大小 | 模型规模 | 启动脚本 | 下载链接 |

|---|---|---|---|

| 8G/10G/11G/12G/16G/24G | 7B | 01_2k_NP16.bat | Qwen2.5-7B-Instruct-IQ4_XS.gguf |

- 现在你的文件结构应该类似于:

OneClickLLAMA\llama\...

\00_Core.bat

\01_1280_NP16.bat

\sakura-14b-qwen2.5-v1.0-iq4xs.gguf

\...

- 根据

你的显存和模型的搭配组合选择对应的启动脚本,双击启动即可

- 根据你的需求和使用的应用查看对应设置教程

- 搭配 LinguaGacha 进行日中翻译 Wiki - LinguaGacha_Sakura

推荐👈👈 - 搭配 LinguaGacha 进行其他语言翻译 Wiki - LinguaGacha

推荐👈👈 - 搭配 KeywordGacha 进行文本分析 Wiki - KeywordGacha

推荐👈👈 - 搭配 AiNiee 进行日中翻译 Wiki - AiNiee_Sakura

- 搭配 轻小说翻译机器人(绿站) 进行日中翻译 Wiki - AutoNovel_Sakura

- 搭配 LinguaGacha 进行日中翻译 Wiki - LinguaGacha_Sakura

-

什么是

爆显存,会导致什么问题?- 系统需求的显存超过了显卡实际的物理显存大小,称之为

爆显存 -

爆显存时,翻译的速度和结果都会出现异常,基本丧失可用性,所以要避免这种情况

- 系统需求的显存超过了显卡实际的物理显存大小,称之为

-

如何判断是否

爆显存- 如果爆的比较厉害,程序会直接报错或者退出

- 爆了一点又没有完全爆比较难判断

- 一个可参考的方式是通过第三方软件监测显卡功耗

- 满载执行任务时,显卡实际功耗应为最大功耗的

70%-80%或者更高 - 如果显存接近用完,但是显卡实际功耗很低,则大概率是爆显存了

-

如何避免

爆显存- 在模型启动后,模型占用的显存大小是固定的,不会变化,但是系统中的其他应用也会占用显存

- 本项目中的脚本都预留了一定的冗余空间,但如果开启过多应用,依然可能导致显存消耗完

- 所以在使用时,应尽量减少开启其他消耗显存的应用

- 比如

浏览器、动态壁纸、视频播放器或QQNT、VSCODE等基于浏览器内核的应用

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for OneClickLLAMA

Similar Open Source Tools

OneClickLLAMA

OneClickLLAMA is a tool designed to run local LLM models such as Qwen2.5 and SakuraLLM with ease. It can be used in conjunction with various OpenAI format translators and analyzers, including LinguaGacha and KeywordGacha. By following the setup guides provided on the page, users can optimize performance and achieve a 3-5 times speed improvement compared to default settings. The tool requires a minimum of 8GB dedicated graphics memory, preferably NVIDIA, and the latest version of graphics drivers installed. Users can download the tool from the release page, choose the appropriate model based on usage and memory size, and start the tool by selecting the corresponding launch script.

devops-gpt

DevOpsGPT is a revolutionary tool designed to streamline your workflow and empower you to build systems and automate tasks with ease. Tired of spending hours on repetitive DevOps tasks? DevOpsGPT is here to help! Whether you're setting up infrastructure, speeding up deployments, or tackling any other DevOps challenge, our app can make your life easier and more productive. With DevOpsGPT, you can expect faster task completion, simplified workflows, and increased efficiency. Ready to experience the DevOpsGPT difference? Visit our website, sign in or create an account, start exploring the features, and share your feedback to help us improve. DevOpsGPT will become an essential tool in your DevOps toolkit.

search2ai

S2A allows your large model API to support networking, searching, news, and web page summarization. It currently supports OpenAI, Gemini, and Moonshot (non-streaming). The large model will determine whether to connect to the network based on your input, and it will not connect to the network for searching every time. You don't need to install any plugins or replace keys. You can directly replace the custom address in your commonly used third-party client. You can also deploy it yourself, which will not affect other functions you use, such as drawing and voice.

agentkit-samples

AgentKit Samples is a repository containing a series of examples and tutorials to help users understand, implement, and integrate various functionalities of AgentKit into their applications. The platform offers a complete solution for building, deploying, and maintaining AI agents, significantly reducing the complexity of developing intelligent applications. The repository provides different levels of examples and tutorials, including basic tutorials for understanding AgentKit's concepts and use cases, as well as more complex examples for experienced developers.

apidash

API Dash is an open-source cross-platform API Client that allows users to easily create and customize API requests, visually inspect responses, and generate API integration code. It supports various HTTP methods, GraphQL requests, and multimedia API responses. Users can organize requests in collections, preview data in different formats, and generate code for multiple languages. The tool also offers dark mode support, data persistence, and various customization options.

flute

FLUTE (Flexible Lookup Table Engine for LUT-quantized LLMs) is a tool designed for uniform quantization and lookup table quantization of weights in lower-precision intervals. It offers flexibility in mapping intervals to arbitrary values through a lookup table. FLUTE supports various quantization formats such as int4, int3, int2, fp4, fp3, fp2, nf4, nf3, nf2, and even custom tables. The tool also introduces new quantization algorithms like Learned Normal Float (NFL) for improved performance and calibration data learning. FLUTE provides benchmarks, model zoo, and integration with frameworks like vLLM and HuggingFace for easy deployment and usage.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

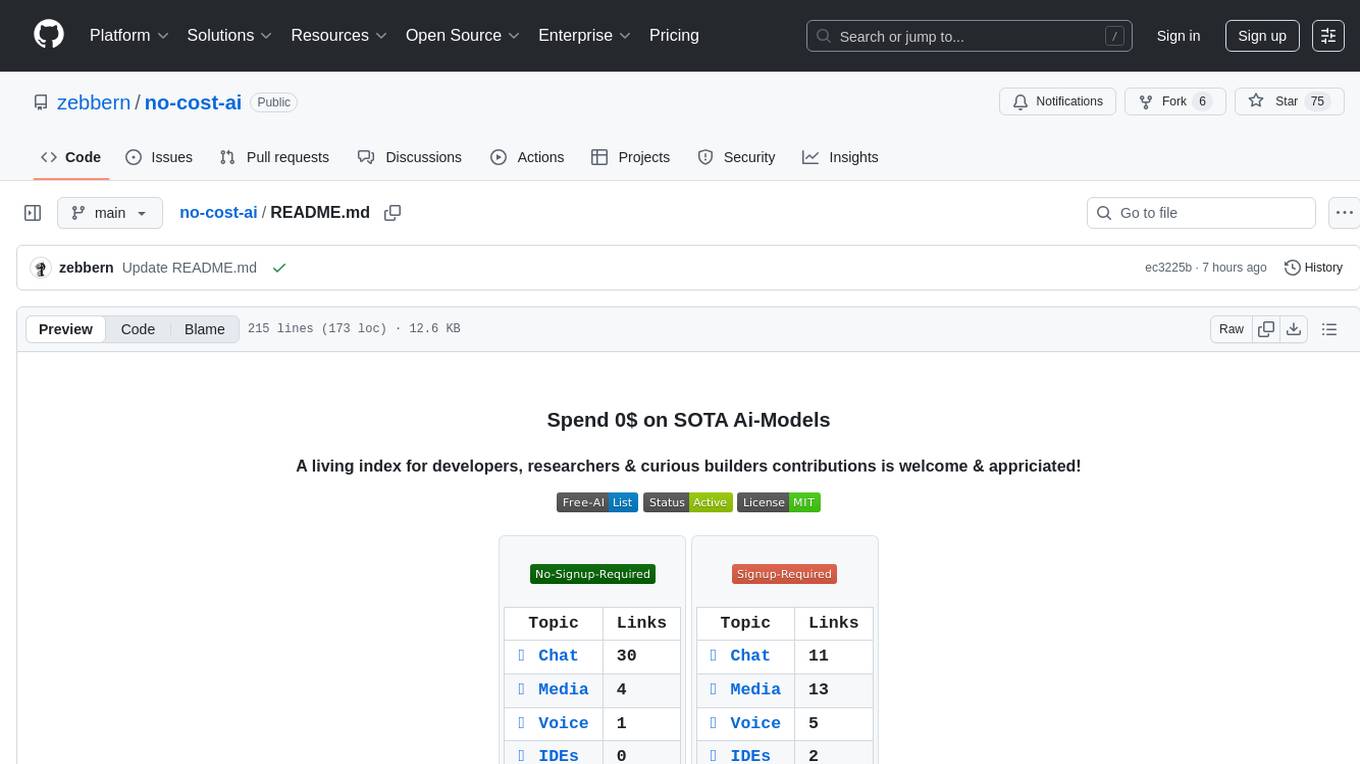

no-cost-ai

No-cost-ai is a repository dedicated to providing a comprehensive list of free AI models and tools for developers, researchers, and curious builders. It serves as a living index for accessing state-of-the-art AI models without any cost. The repository includes information on various AI applications such as chat interfaces, media generation, voice and music tools, AI IDEs, and developer APIs and platforms. Users can find links to free models, their limits, and usage instructions. Contributions to the repository are welcome, and users are advised to use the listed services at their own risk due to potential changes in models, limitations, and reliability of free services.

Free-LLM-Collection

Free-LLM-Collection is a curated list of free resources for mastering the Legal Language Model (LLM) technology. It includes datasets, research papers, tutorials, and tools to help individuals learn and work with LLM models. The repository aims to provide a comprehensive collection of materials to support researchers, developers, and enthusiasts interested in exploring and leveraging LLM technology for various applications in the legal domain.

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

beet

Beet is a collection of crates for authoring and running web pages, games and AI behaviors. It includes crates like `beet_flow` for scenes-as-control-flow bevy library, `beet_spatial` for spatial behaviors, `beet_ml` for machine learning, `beet_sim` for simulation tooling, `beet_rsx` for authoring tools for html and bevy, and `beet_router` for file-based router for web docs. The `beet` crate acts as a base crate that re-exports sub-crates based on feature flags, similar to the `bevy` crate structure.

YuLan-Mini

YuLan-Mini is a lightweight language model with 2.4 billion parameters that achieves performance comparable to industry-leading models despite being pre-trained on only 1.08T tokens. It excels in mathematics and code domains. The repository provides pre-training resources, including data pipeline, optimization methods, and annealing approaches. Users can pre-train their own language models, perform learning rate annealing, fine-tune the model, research training dynamics, and synthesize data. The team behind YuLan-Mini is AI Box at Renmin University of China. The code is released under the MIT License with future updates on model weights usage policies. Users are advised on potential safety concerns and ethical use of the model.

AI0x0.com

AI 0x0 is a versatile AI query generation desktop floating assistant application that supports MacOS and Windows. It allows users to utilize AI capabilities in any desktop software to query and generate text, images, audio, and video data, helping them work more efficiently. The application features a dynamic desktop floating ball, floating dialogue bubbles, customizable presets, conversation bookmarking, preset packages, network acceleration, query mode, input mode, mouse navigation, deep customization of ChatGPT Next Web, support for full-format libraries, online search, voice broadcasting, voice recognition, voice assistant, application plugins, multi-model support, online text and image generation, image recognition, frosted glass interface, light and dark theme adaptation for each language model, and free access to all language models except Chat0x0 with a key.

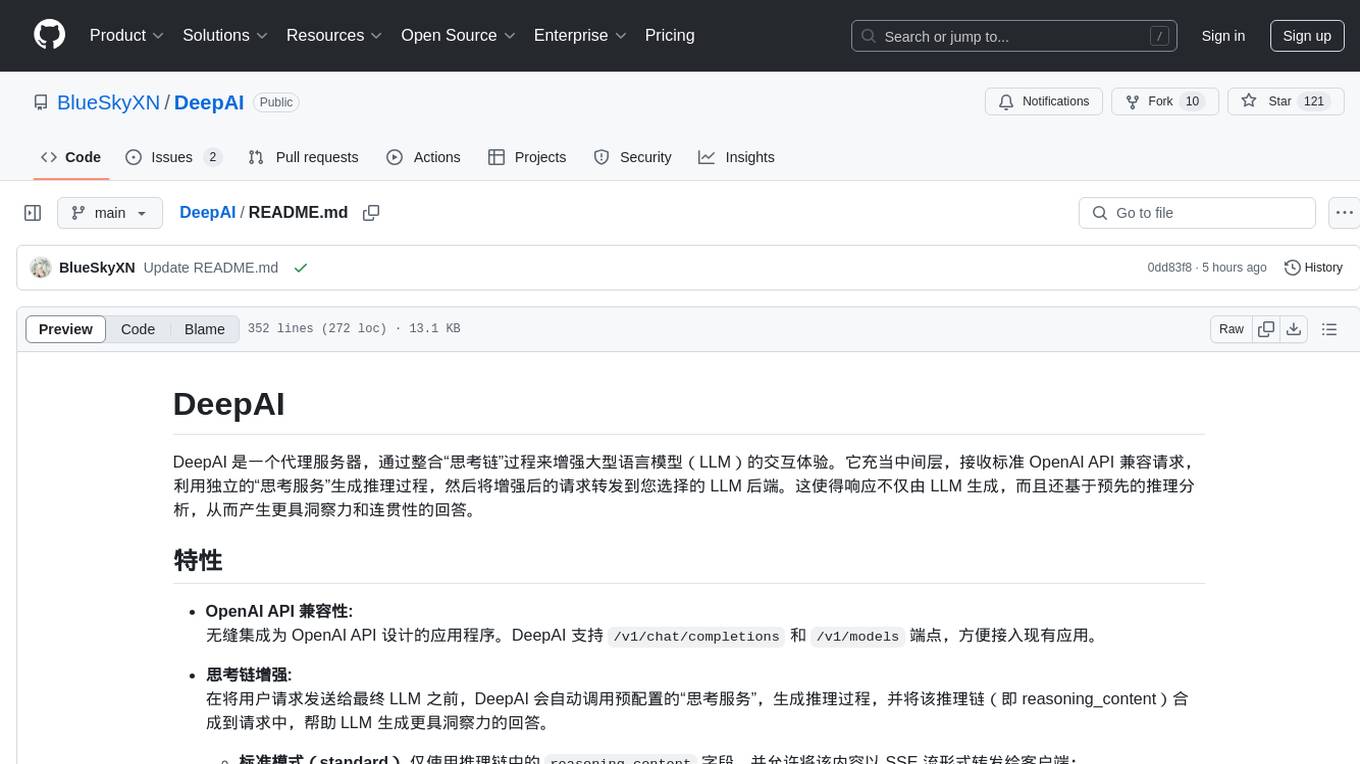

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

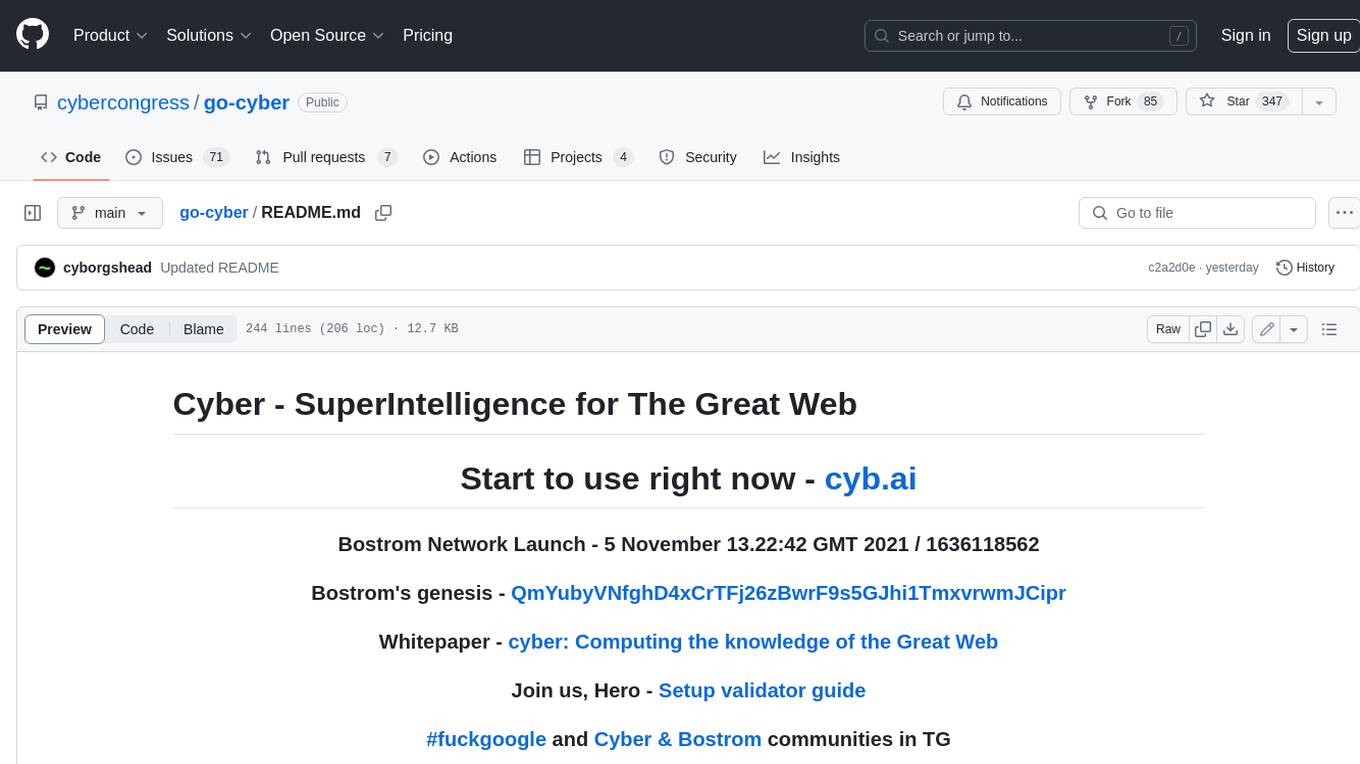

go-cyber

Cyber is a superintelligence protocol that aims to create a decentralized and censorship-resistant internet. It uses a novel consensus mechanism called CometBFT and a knowledge graph to store and process information. Cyber is designed to be scalable, secure, and efficient, and it has the potential to revolutionize the way we interact with the internet.

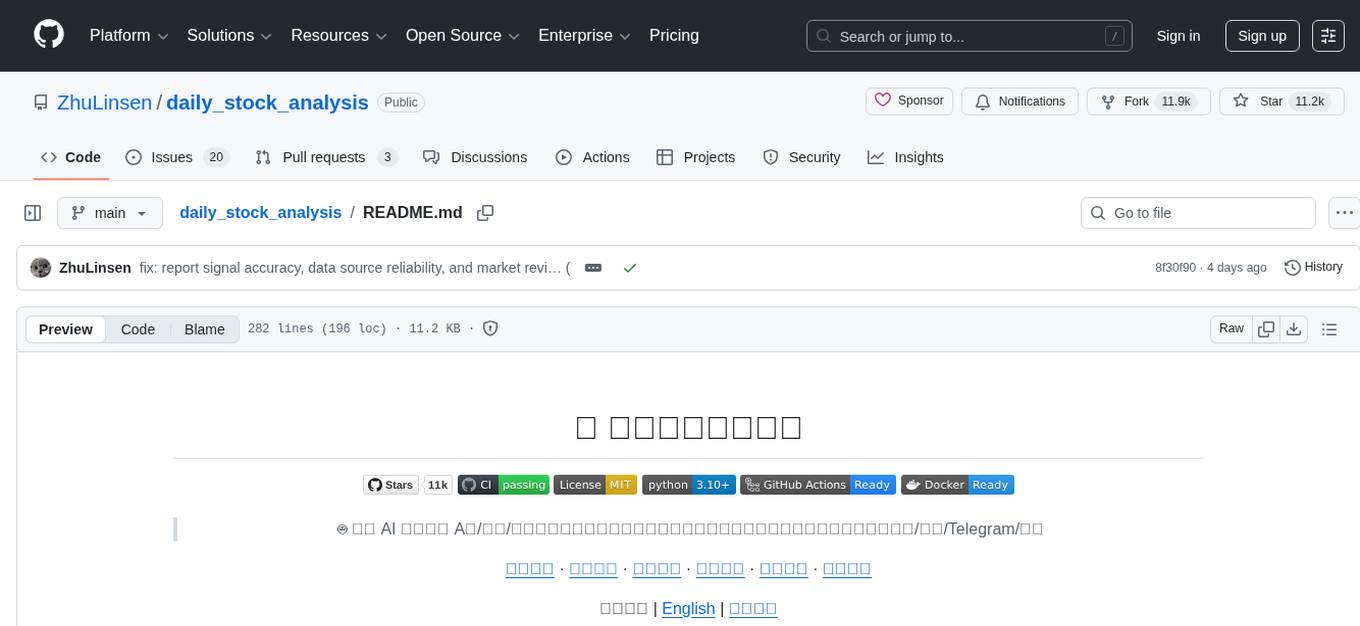

daily_stock_analysis

The daily_stock_analysis repository is an intelligent stock analysis system based on AI large models for A-share/Hong Kong stock/US stock selection. It automatically analyzes and pushes a 'decision dashboard' to WeChat Work/Feishu/Telegram/email daily. The system features multi-dimensional analysis, global market support, market review, AI backtesting validation, multi-channel notifications, and scheduled execution using GitHub Actions. It utilizes AI models like Gemini, OpenAI, DeepSeek, and data sources like AkShare, Tushare, Pytdx, Baostock, YFinance for analysis. The system includes built-in trading disciplines like risk warning, trend trading, precise entry/exit points, and checklist marking for conditions.

For similar tasks

phospho

Phospho is a text analytics platform for LLM apps. It helps you detect issues and extract insights from text messages of your users or your app. You can gather user feedback, measure success, and iterate on your app to create the best conversational experience for your users.

OpenFactVerification

Loki is an open-source tool designed to automate the process of verifying the factuality of information. It provides a comprehensive pipeline for dissecting long texts into individual claims, assessing their worthiness for verification, generating queries for evidence search, crawling for evidence, and ultimately verifying the claims. This tool is especially useful for journalists, researchers, and anyone interested in the factuality of information.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

spaCy

spaCy is an industrial-strength Natural Language Processing (NLP) library in Python and Cython. It incorporates the latest research and is designed for real-world applications. The library offers pretrained pipelines supporting 70+ languages, with advanced neural network models for tasks such as tagging, parsing, named entity recognition, and text classification. It also facilitates multi-task learning with pretrained transformers like BERT, along with a production-ready training system and streamlined model packaging, deployment, and workflow management. spaCy is commercial open-source software released under the MIT license.

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

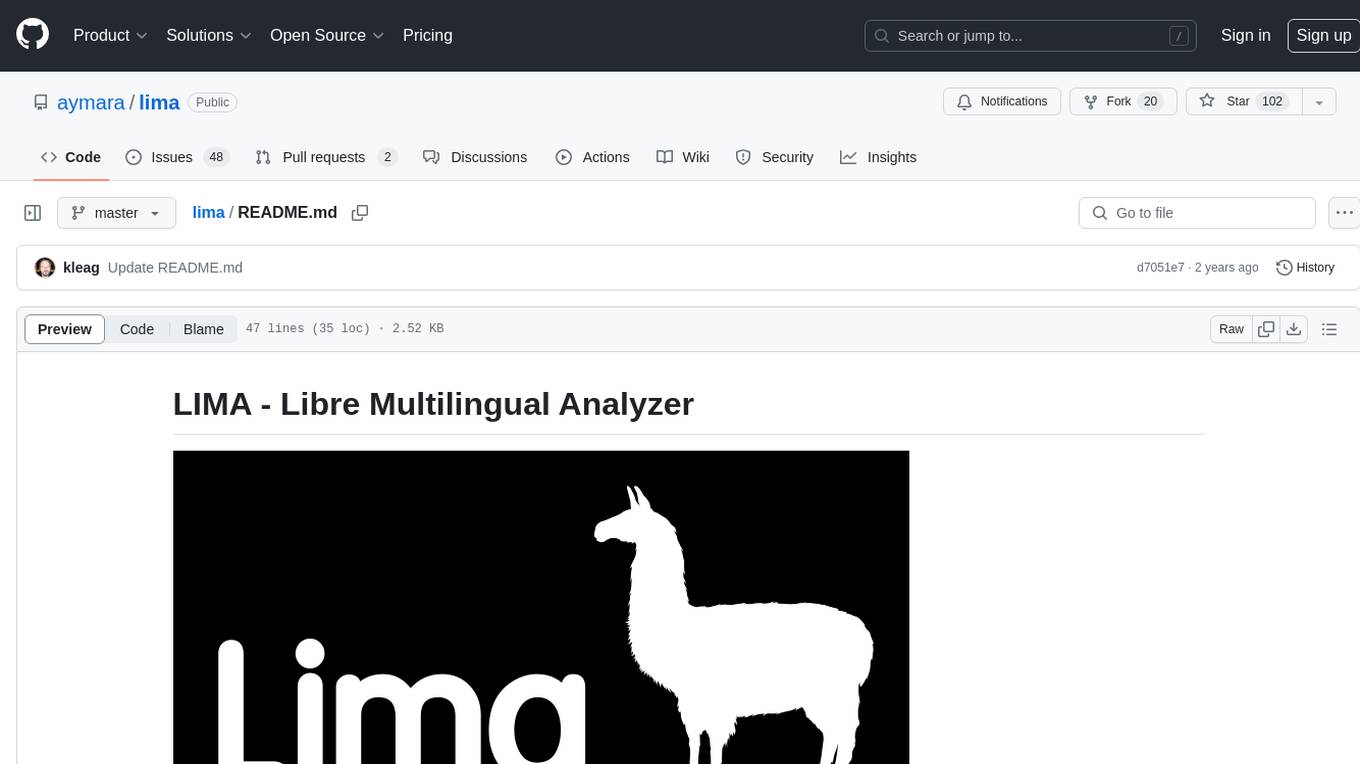

lima

LIMA is a multilingual linguistic analyzer developed by the CEA LIST, LASTI laboratory. It is Free Software available under the MIT license. LIMA has state-of-the-art performance for more than 60 languages using deep learning modules. It also includes a powerful rules-based mechanism called ModEx for extracting information in new domains without annotated data.

liboai

liboai is a simple C++17 library for the OpenAI API, providing developers with access to OpenAI endpoints through a collection of methods and classes. It serves as a spiritual port of OpenAI's Python library, 'openai', with similar structure and features. The library supports various functionalities such as ChatGPT, Audio, Azure, Functions, Image DALL·E, Models, Completions, Edit, Embeddings, Files, Fine-tunes, Moderation, and Asynchronous Support. Users can easily integrate the library into their C++ projects to interact with OpenAI services.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.