commands

A collection of production-ready slash commands for Claude Code

Stars: 774

Production-ready slash commands for Claude Code that accelerate development through intelligent automation and multi-agent orchestration. Contains 56 commands organized into workflows and tools categories. Workflows orchestrate complex tasks with multiple agents, while tools provide focused functionality for specific development tasks. Commands can be used with prefixes for organization or flattened for convenience. Best practices include using workflows for complex tasks and tools for specific scopes, chaining commands strategically, and providing detailed context for effective usage.

README:

A comprehensive collection of production-ready slash commands for Claude Code that provides intelligent automation and multi-agent orchestration capabilities for modern software development.

This repository provides 56 production-ready slash commands (15 workflows, 41 tools) that extend Claude Code's capabilities through:

- Workflows: Multi-agent orchestration systems that coordinate complex, multi-step operations across different domains

- Tools: Specialized single-purpose utilities for focused development tasks

Requirements:

- Claude Code installed and configured

- Claude Code Subagents collection for workflow orchestration capabilities

- Git for repository management

Installation:

    # Navigate to Claude configuration directory
    cd ~/.claude

    # Clone the commands repository
    git clone https://github.com/wshobson/commands.git

    # Clone the agents repository (required for workflow execution)
    git clone https://github.com/wshobson/agents.git

Commands are organized in `tools/` and `workflows/` directories and invoked using directory prefixes:

    # Workflow invocation
    /workflows:feature-development implement OAuth2 authentication

    # Tool invocation
    /tools:security-scan perform vulnerability assessment

    # Multiple argument example
    /tools:api-scaffold create user management endpoints with RBAC

To invoke commands without directory prefixes, copy the files to the root of the cloned `commands` directory (run from inside that directory):

    cp tools/*.md .
    cp workflows/*.md .

    # Then invoke directly
    /api-scaffold create REST endpoints
    /feature-development implement payment system

Workflows implement multi-agent orchestration patterns for complex, cross-domain tasks. Each workflow analyzes requirements, delegates to specialized agents, and coordinates execution across multiple subsystems.

| Command | Purpose | Agent Coordination |
|---|---|---|
| `feature-development` | End-to-end feature implementation | Backend, frontend, testing, deployment |
| `full-review` | Multi-perspective code analysis | Architecture, security, performance, quality |
| `smart-fix` | Intelligent problem resolution | Dynamic agent selection based on issue type |
| `tdd-cycle` | Test-driven development orchestration | Test writer, implementer, refactoring specialist |

| Command | Purpose | Scope |
|---|---|---|
| `git-workflow` | Version control process automation | Branching strategies, commit standards, PR templates |
| `improve-agent` | Agent optimization | Prompt engineering, performance tuning |
| `legacy-modernize` | Codebase modernization | Architecture migration, dependency updates, pattern refactoring |
| `ml-pipeline` | Machine learning pipeline construction | Data engineering, model training, deployment |
| `multi-platform` | Cross-platform development | Web, mobile, desktop coordination |
| `workflow-automate` | CI/CD pipeline automation | Build, test, deploy, monitor |

| Command | Primary Focus | Specialized Agents |
|---|---|---|
| `full-stack-feature` | Multi-tier implementation | Backend API, frontend UI, mobile, database |
| `security-hardening` | Security-first development | Threat modeling, vulnerability assessment, remediation |
| `data-driven-feature` | ML-powered functionality | Data science, feature engineering, model deployment |
| `performance-optimization` | System-wide optimization | Profiling, caching, query optimization, load testing |
| `incident-response` | Production issue resolution | Diagnostics, root cause analysis, hotfix deployment |

Tools provide focused, single-purpose utilities for specific development operations. Each tool is optimized for its domain with production-ready implementations.

| Command | Functionality | Key Features |
|---|---|---|
| `ai-assistant` | AI assistant implementation | LLM integration, conversation management, context handling |
| `ai-review` | ML code review | Model architecture validation, training pipeline review |
| `langchain-agent` | LangChain agent creation | RAG patterns, tool integration, memory management |
| `ml-pipeline` | ML pipeline construction | Data processing, training, evaluation, deployment |
| `prompt-optimize` | Prompt engineering | Performance testing, cost optimization, quality metrics |

| Command | Purpose | Capabilities |
|---|---|---|
| `code-explain` | Code documentation | AST analysis, complexity metrics, flow diagrams |
| `code-migrate` | Migration automation | Framework upgrades, language porting, API migrations |
| `refactor-clean` | Code improvement | Pattern detection, dead code removal, structure optimization |
| `tech-debt` | Debt assessment | Complexity analysis, risk scoring, remediation planning |

| Command | Focus Area | Technologies |
|---|---|---|
| `data-pipeline` | ETL/ELT architecture | Apache Spark, Airflow, dbt, streaming platforms |
| `data-validation` | Data quality | Schema validation, anomaly detection, constraint checking |
| `db-migrate` | Database migrations | Schema versioning, zero-downtime strategies, rollback plans |

| Command | Domain | Implementation |
|---|---|---|
| `deploy-checklist` | Deployment preparation | Pre-flight checks, rollback procedures, monitoring setup |
| `docker-optimize` | Container optimization | Multi-stage builds, layer caching, size reduction |
| `k8s-manifest` | Kubernetes configuration | Deployments, services, ingress, autoscaling, security policies |
| `monitor-setup` | Observability | Metrics, logging, tracing, alerting rules |
| `slo-implement` | SLO/SLI definition | Error budgets, monitoring, automated responses |
| `workflow-automate` | Pipeline automation | CI/CD, GitOps, infrastructure as code |

| Command | Testing Focus | Framework Support |
|---|---|---|
| `api-mock` | Mock generation | REST, GraphQL, gRPC, WebSocket |
| `api-scaffold` | Endpoint creation | CRUD operations, authentication, validation |
| `test-harness` | Test suite generation | Unit, integration, e2e, performance |
| `tdd-red` | Test-first development | Failing test creation, edge case coverage |
| `tdd-green` | Implementation | Minimal code to pass tests |
| `tdd-refactor` | Code improvement | Optimization while maintaining green tests |

| Command | Security Domain | Standards |
|---|---|---|
| `accessibility-audit` | WCAG compliance | ARIA, keyboard navigation, screen reader support |
| `compliance-check` | Regulatory compliance | GDPR, HIPAA, SOC2, PCI-DSS |
| `security-scan` | Vulnerability assessment | OWASP, CVE scanning, dependency audits |

| Command | Analysis Type | Output |
|---|---|---|
| `debug-trace` | Runtime analysis | Stack traces, memory profiles, execution paths |
| `error-analysis` | Error patterns | Root cause analysis, frequency analysis, impact assessment |
| `error-trace` | Production debugging | Log correlation, distributed tracing, error reproduction |
| `issue` | Issue tracking | Standardized templates, reproduction steps, acceptance criteria |

| Command | Management Area | Features |
|---|---|---|
| `config-validate` | Configuration management | Schema validation, environment variables, secrets handling |
| `deps-audit` | Dependency analysis | Security vulnerabilities, license compliance, version conflicts |
| `deps-upgrade` | Version management | Breaking change detection, compatibility testing, rollback support |

| Command | Documentation Type | Format |
|---|---|---|
| `doc-generate` | API documentation | OpenAPI, JSDoc, TypeDoc, Sphinx |
| `pr-enhance` | Pull request optimization | Description generation, checklist creation, review suggestions |
| `standup-notes` | Status reporting | Progress tracking, blocker identification, next steps |

| Command | Operational Focus | Use Case |
|---|---|---|
| `cost-optimize` | Resource optimization | Cloud spend analysis, right-sizing, reserved capacity |
| `onboard` | Environment setup | Development tools, access configuration, documentation |
| `context-save` | State persistence | Architecture decisions, configuration snapshots |
| `context-restore` | State recovery | Context reload, decision history, configuration restore |
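Two command names in the tables above, `ml-pipeline` and `workflow-automate`, are listed both as a workflow and as a tool. If the files are flattened into one directory with the two `cp` commands shown earlier, the second copy would overwrite the first for those names. A minimal sketch for spotting such collisions before flattening, assuming the `tools/` and `workflows/` layout described in this README (the function name `find_collisions` is hypothetical and not part of the repository):

```python
from pathlib import Path


def find_collisions(root: Path) -> list[str]:
    """Return command filenames that exist in both tools/ and workflows/."""
    tools = {p.name for p in (root / "tools").glob("*.md")}
    workflows = {p.name for p in (root / "workflows").glob("*.md")}
    # Names present in both sets would clash once copied into one directory.
    return sorted(tools & workflows)
```

Calling `find_collisions(Path.home() / ".claude" / "commands")` would list the clashing filenames for the install location used in this README. For those names, keeping the directory prefixes avoids the ambiguity.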

    # Complete feature with multi-agent orchestration
    /workflows:feature-development OAuth2 authentication with JWT tokens

    # API-first development
    /tools:api-scaffold REST endpoints for user management with RBAC

    # Test-driven approach
    /workflows:tdd-cycle shopping cart with discount calculation logic

    # Intelligent issue resolution
    /workflows:smart-fix high memory consumption in production workers

    # Targeted error analysis
    /tools:error-trace investigate Redis connection timeouts

    # Performance optimization
    /workflows:performance-optimization optimize database query performance

    # Security assessment
    /tools:security-scan OWASP Top 10 vulnerability scan

    # Compliance verification
    /tools:compliance-check GDPR data handling requirements

    # Security hardening workflow
    /workflows:security-hardening implement zero-trust architecture

    # Complete TDD cycle with orchestration
    /workflows:tdd-cycle payment processing with Stripe integration

    # Manual TDD phases for granular control
    /tools:tdd-red create failing tests for order validation
    /tools:tdd-green implement minimal order validation logic
    /tools:tdd-refactor optimize validation performance

    # Feature implementation pipeline
    /workflows:feature-development real-time notifications with WebSockets
    /tools:security-scan WebSocket implementation vulnerabilities
    /workflows:performance-optimization WebSocket connection handling
    /tools:deploy-checklist notification service deployment requirements
    /tools:k8s-manifest WebSocket service with session affinity

    # Legacy system upgrade
    /workflows:legacy-modernize migrate monolith to microservices
    /tools:deps-audit check dependency vulnerabilities
    /tools:deps-upgrade update to latest stable versions
    /tools:refactor-clean remove deprecated patterns
    /tools:test-harness generate comprehensive test coverage
    /tools:docker-optimize create optimized container images
    /tools:k8s-manifest deploy with rolling update strategy

| Criteria | Use Workflows | Use Tools |
|---|---|---|
| Problem Complexity | Multi-domain, cross-cutting concerns | Single domain, focused scope |
| Solution Clarity | Exploratory, undefined approach | Clear implementation path |
| Agent Coordination | Multiple specialists required | Single expertise sufficient |
| Implementation Scope | End-to-end features | Specific components |
| Control Level | Automated orchestration preferred | Manual control required |
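The five criteria in the table above can be treated as a simple checklist. The sketch below is one possible way to encode them; the `Task` fields, the `recommend` function, and the majority-vote rule are all assumptions made for illustration and do not come from the repository:

```python
from dataclasses import dataclass


@dataclass
class Task:
    multi_domain: bool          # Problem Complexity
    unclear_approach: bool      # Solution Clarity
    multiple_specialists: bool  # Agent Coordination
    end_to_end: bool            # Implementation Scope
    prefers_automation: bool    # Control Level


def recommend(task: Task) -> str:
    """Return "workflow" when most criteria point that way, else "tool"."""
    votes = sum([
        task.multi_domain,
        task.unclear_approach,
        task.multiple_specialists,
        task.end_to_end,
        task.prefers_automation,
    ])
    # Majority vote is an assumption of this sketch, not a rule from the table.
    return "workflow" if votes >= 3 else "tool"
```

A task that is single-domain with a clear implementation path scores low and maps to a tool, while an exploratory, cross-cutting task maps to a workflow.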

| Requirement | Recommended Workflow | Rationale |
|---|---|---|
| "Build complete authentication system" | `/workflows:feature-development` | Multi-tier implementation required |
| "Debug production performance issues" | `/workflows:smart-fix` | Unknown root cause, needs analysis |
| "Modernize legacy application" | `/workflows:legacy-modernize` | Complex refactoring across stack |
| "Implement ML-powered feature" | `/workflows:data-driven-feature` | Requires data science expertise |

| Task | Recommended Tool | Output |
|---|---|---|
| "Generate Kubernetes configs" | `/tools:k8s-manifest` | YAML manifests with best practices |
| "Audit security vulnerabilities" | `/tools:security-scan` | Vulnerability report with fixes |
| "Create API documentation" | `/tools:doc-generate` | OpenAPI/Swagger specifications |
| "Optimize Docker images" | `/tools:docker-optimize` | Multi-stage Dockerfile |

- Technology Stack Specification: Include framework versions, database systems, deployment targets

- Constraint Definition: Specify performance requirements, security standards, compliance needs

- Integration Requirements: Define external services, APIs, authentication methods

- Output Preferences: Indicate coding standards, testing frameworks, documentation formats

- Progressive Enhancement: Start with workflows for foundation, refine with tools

- Pipeline Construction: Chain commands in logical sequence for complete solutions

- Iterative Refinement: Use tool outputs as inputs for subsequent commands

- Parallel Execution: Run independent tools simultaneously when possible

- Workflows typically require 30-90 seconds for complete orchestration

- Tools execute in 5-30 seconds for focused operations

- Provide detailed requirements upfront to minimize iteration cycles

- Use saved context (`context-save`/`context-restore`) for multi-session projects
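The typical durations quoted above give a rough bound on how long a chained pipeline takes when its commands run one after another. A minimal sketch of that arithmetic follows. It assumes sequential execution, treats every command without the `/workflows:` prefix as a tool, and uses the quoted ranges as estimates rather than guarantees; `estimate_chain` is a hypothetical helper:

```python
# Typical duration ranges in seconds, as quoted in this README.
WORKFLOW_SECONDS = (30, 90)
TOOL_SECONDS = (5, 30)


def estimate_chain(commands: list[str]) -> tuple[int, int]:
    """Return (best, worst) total seconds for commands run one after another."""
    best = worst = 0
    for command in commands:
        is_workflow = command.startswith("/workflows:")
        low, high = WORKFLOW_SECONDS if is_workflow else TOOL_SECONDS
        best += low
        worst += high
    return best, worst


chain = [
    "/workflows:feature-development",
    "/tools:security-scan",
    "/tools:test-harness",
    "/tools:docker-optimize",
    "/tools:k8s-manifest",
    "/tools:monitor-setup",
]
print(estimate_chain(chain))  # (55, 240)
```

One workflow plus five tools therefore lands between roughly one and four minutes, which is why running independent tools in parallel can be worthwhile.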

Each slash command is a markdown file with the following characteristics:

| Component | Description | Example |
|---|---|---|
| Filename | Determines command name | `api-scaffold.md` → `/tools:api-scaffold` |
| Content | Execution instructions | Agent prompts and orchestration logic |
| Variables | `$ARGUMENTS` placeholder | Captures and processes user input |
| Directory | Command category | `tools/` for utilities, `workflows/` for orchestration |
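To make the `$ARGUMENTS` row concrete, the sketch below shows the idea with plain string replacement: whatever follows the command name is dropped into the file's text wherever the placeholder appears. This only illustrates the concept. How Claude Code performs the substitution internally is not described here, and both `render_command` and the sample template are hypothetical:

```python
def render_command(template: str, arguments: str) -> str:
    """Replace every $ARGUMENTS placeholder with the user's input text."""
    return template.replace("$ARGUMENTS", arguments)


# Hypothetical command file content, for illustration only.
template = "Scaffold an API for the following request: $ARGUMENTS"
print(render_command(template, "create user management endpoints with RBAC"))
```

A command file that never mentions `$ARGUMENTS` would ignore the text typed after the command name under this model, so new commands generally want the placeholder somewhere in their prompt.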

    ~/.claude/commands/
    ├── workflows/          # Multi-agent orchestration commands
    │   ├── feature-development.md
    │   ├── smart-fix.md
    │   └── ...
    ├── tools/              # Single-purpose utility commands
    │   ├── api-scaffold.md
    │   ├── security-scan.md
    │   └── ...
    └── README.md           # This documentation
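Under the layout above, each markdown file maps to a prefixed command name built from its directory and filename. A minimal sketch of that mapping, assuming the `category:name` convention used throughout this README (`command_name` and `list_commands` are hypothetical helpers, not part of the repository):

```python
from pathlib import Path


def command_name(path: Path, root: Path) -> str:
    """Map tools/api-scaffold.md under root to /tools:api-scaffold."""
    relative = path.relative_to(root).with_suffix("")
    return "/" + ":".join(relative.parts)


def list_commands(root: Path) -> list[str]:
    """List prefixed command names for the files in workflows/ and tools/."""
    names = []
    for category in ("workflows", "tools"):
        # Only the two category directories are scanned, so README.md is skipped.
        for path in sorted((root / category).glob("*.md")):
            names.append(command_name(path, root))
    return names
```

Running `list_commands(Path.home() / ".claude" / "commands")` gives a quick inventory of what is installed.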

- File Creation: Place in `workflows/` directory with descriptive naming
- Agent Orchestration: Define delegation logic for multiple specialists
- Error Handling: Include fallback strategies and error recovery
- Output Coordination: Specify how agent outputs should be combined

- File Creation: Place in `tools/` directory with single-purpose naming
- Implementation: Provide complete, production-ready code generation
- Framework Detection: Auto-detect and adapt to project stack
- Best Practices: Include security, performance, and scalability considerations

- Use lowercase with hyphens: `feature-name.md`
- Be descriptive but concise: `security-scan` not `scan`
- Indicate action clearly: `deps-upgrade` not `dependencies`
- Maintain consistency with existing commands
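The lowercase-with-hyphens rule is mechanical enough to check automatically. The sketch below is one possible validator. Allowing digits is an assumption made so that existing names such as `k8s-manifest.md` pass, and the check cannot judge whether a name is descriptive or action-oriented:

```python
import re

# Lowercase words (letters or digits) joined by single hyphens, ending in .md.
NAME_PATTERN = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*\.md")


def is_valid_command_filename(filename: str) -> bool:
    """Check a filename against the lowercase-with-hyphens convention."""
    return NAME_PATTERN.fullmatch(filename) is not None
```

For example, `deps-upgrade.md` passes, while `Security_Scan.md` fails on both the uppercase letters and the underscore.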

| Issue | Cause | Resolution |
|---|---|---|
| Command not recognized | File missing or misnamed | Verify file exists in correct directory |
| Slow execution | Normal workflow behavior | Workflows coordinate multiple agents (30-90s typical) |
| Incomplete output | Insufficient context | Provide technology stack and requirements |
| Integration failures | Path or configuration issues | Check file paths and dependencies |
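For the "Command not recognized" row, it can help to work out which file an invocation is expected to resolve to. A minimal sketch, assuming the prefix-to-directory convention described in this README (`resolve_command` is a hypothetical helper and does not reflect how Claude Code itself looks up commands):

```python
from pathlib import Path


def resolve_command(invocation: str, root: Path) -> tuple[Path, str]:
    """Split an invocation into the expected file path and the argument text."""
    command, _, arguments = invocation.strip().partition(" ")
    if not command.startswith("/") or len(command) == 1:
        raise ValueError("a slash command must start with '/' followed by a name")
    parts = command[1:].split(":")
    # /tools:api-scaffold -> root/tools/api-scaffold.md
    # /api-scaffold       -> root/api-scaffold.md (flattened layout)
    path = root.joinpath(*parts).with_suffix(".md")
    return path, arguments.strip()
```

If `path.exists()` is false for the returned path, the file is missing or misnamed, which matches the first row of the table.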

- Context Caching: Use `context-save` for multi-session projects
- Batch Operations: Combine related tasks in single workflow
- Tool Selection: Use tools for known problems, workflows for exploration
- Requirement Clarity: Detailed specifications reduce iteration cycles

| Command | Type | Capabilities |
|---|---|---|
| `tdd-cycle` | Workflow | Complete red-green-refactor orchestration with test coverage analysis |
| `tdd-red` | Tool | Failing test generation with edge case coverage and mocking |
| `tdd-green` | Tool | Minimal implementation to achieve test passage |
| `tdd-refactor` | Tool | Code optimization while maintaining test integrity |

Framework Support: Jest, Mocha, PyTest, RSpec, JUnit, Go testing, Rust tests

| Command | Specialization | Key Features |
|---|---|---|
| `security-scan` | Vulnerability detection | SAST/DAST analysis, dependency scanning, secret detection |
| `docker-optimize` | Container optimization | Multi-stage builds, layer caching, size reduction (50-90% typical) |
| `k8s-manifest` | Kubernetes deployment | HPA, NetworkPolicy, PodSecurityPolicy, service mesh ready |
| `monitor-setup` | Observability | Prometheus metrics, Grafana dashboards, alert rules |

Security Tools Integration: Bandit, Safety, Trivy, Semgrep, Snyk, GitGuardian

| Command | Database Support | Migration Strategies |
|---|---|---|
| `db-migrate` | PostgreSQL, MySQL, MongoDB, DynamoDB | Blue-green, expand-contract, versioned schemas |
| `data-pipeline` | Batch and streaming | Apache Spark, Kafka, Airflow, dbt integration |
| `data-validation` | Schema and quality | Great Expectations, Pandera, custom validators |

Zero-Downtime Patterns: Rolling migrations, feature flags, dual writes, backfill strategies

| Command | Analysis Type | Optimization Techniques |
|---|---|---|
| `performance-optimization` | Full-stack profiling | Query optimization, caching strategies, CDN configuration |
| `cost-optimize` | Cloud resource analysis | Right-sizing, spot instances, reserved capacity planning |
| `docker-optimize` | Container performance | Build cache optimization, minimal base images, layer reduction |

    # Complete feature development pipeline
    /workflows:feature-development user authentication system
    /tools:security-scan authentication implementation
    /tools:test-harness authentication test suite
    /tools:docker-optimize authentication service
    /tools:k8s-manifest authentication deployment
    /tools:monitor-setup authentication metrics

MIT License - See LICENSE file for complete terms.

- Issues: GitHub Issues

- Contributions: Pull requests welcome following the development guidelines

- Questions: Open a discussion in the repository

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for commands

Similar Open Source Tools

commands

Production-ready slash commands for Claude Code that accelerate development through intelligent automation and multi-agent orchestration. Contains 52 commands organized into workflows and tools categories. Workflows orchestrate complex tasks with multiple agents, while tools provide focused functionality for specific development tasks. Commands can be used with prefixes for organization or flattened for convenience. Best practices include using workflows for complex tasks and tools for specific scopes, chaining commands strategically, and providing detailed context for effective usage.

agents

The 'agents' repository is a comprehensive collection of 83 specialized AI subagents for Claude Code, providing domain-specific expertise across software development, infrastructure, and business operations. Each subagent incorporates current industry best practices, production-ready patterns, deep domain expertise, modern technology stacks, and optimized model selection based on task complexity.

squirrelscan

Squirrelscan is a website audit tool designed for SEO, performance, and security audits. It offers 230+ rules across 21 categories, AI-native design for Claude Code and AI workflows, smart incremental crawling, and multiple output formats. It provides E-E-A-T auditing, crawl history tracking, and is developer-friendly with a CLI. Users can run audits in the terminal, integrate with AI coding agents, or pipe output to AI assistants. The tool is available for macOS, Linux, Windows, npm, and npx installations, and is suitable for autonomous AI workflows.

rag-web-ui

RAG Web UI is an intelligent dialogue system based on RAG (Retrieval-Augmented Generation) technology. It helps enterprises and individuals build intelligent Q&A systems based on their own knowledge bases. By combining document retrieval and large language models, it delivers accurate and reliable knowledge-based question-answering services. The system is designed with features like intelligent document management, advanced dialogue engine, and a robust architecture. It supports multiple document formats, async document processing, multi-turn contextual dialogue, and reference citations in conversations. The architecture includes a backend stack with Python FastAPI, MySQL + ChromaDB, MinIO, Langchain, JWT + OAuth2 for authentication, and a frontend stack with Next.js, TypeScript, Tailwind CSS, Shadcn/UI, and Vercel AI SDK for AI integration. Performance optimization includes incremental document processing, streaming responses, vector database performance tuning, and distributed task processing. The project is licensed under the Apache-2.0 License and is intended for learning and sharing RAG knowledge only, not for commercial purposes.

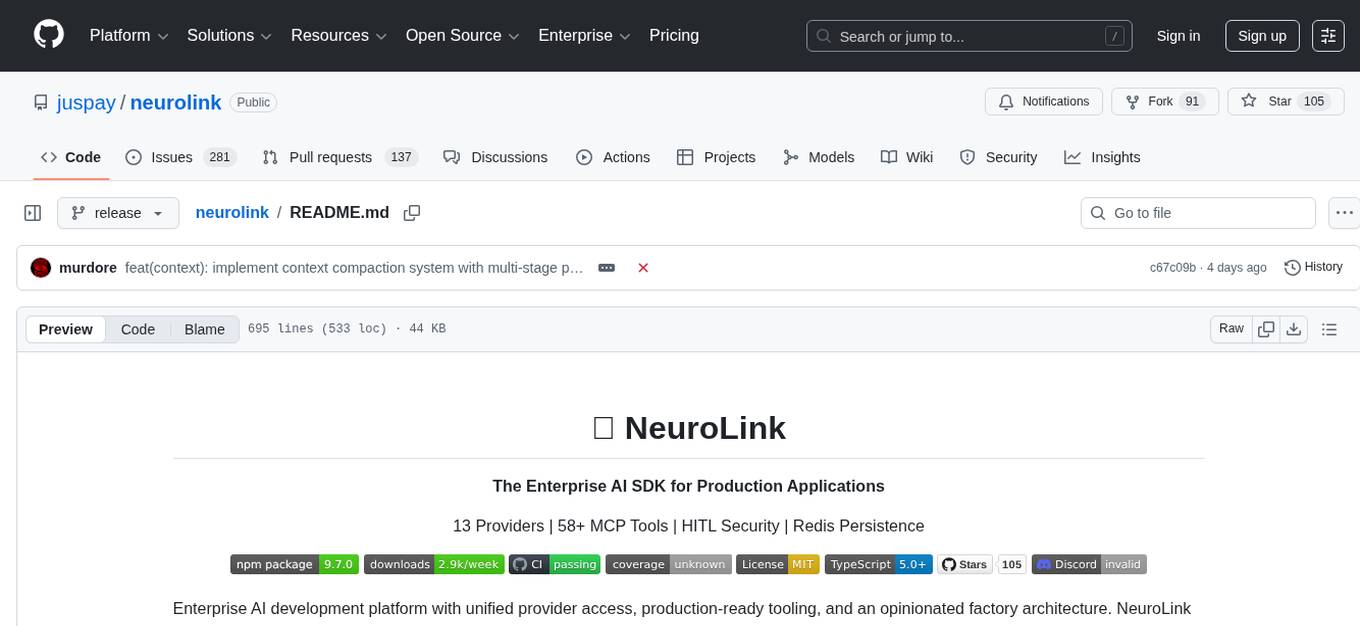

neurolink

NeuroLink is an Enterprise AI SDK for Production Applications that serves as a universal AI integration platform unifying 13 major AI providers and 100+ models under one consistent API. It offers production-ready tooling, including a TypeScript SDK and a professional CLI, for teams to quickly build, operate, and iterate on AI features. NeuroLink enables switching providers with a single parameter change, provides 64+ built-in tools and MCP servers, supports enterprise features like Redis memory and multi-provider failover, and optimizes costs automatically with intelligent routing. It is designed for the future of AI with edge-first execution and continuous streaming architectures.

PredictorLLM

PredictorLLM is an advanced trading agent framework that utilizes large language models to automate trading in financial markets. It includes a profiling module to establish agent characteristics, a layered memory module for retaining and prioritizing financial data, and a decision-making module to convert insights into trading strategies. The framework mimics professional traders' behavior, surpassing human limitations in data processing and continuously evolving to adapt to market conditions for superior investment outcomes.

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

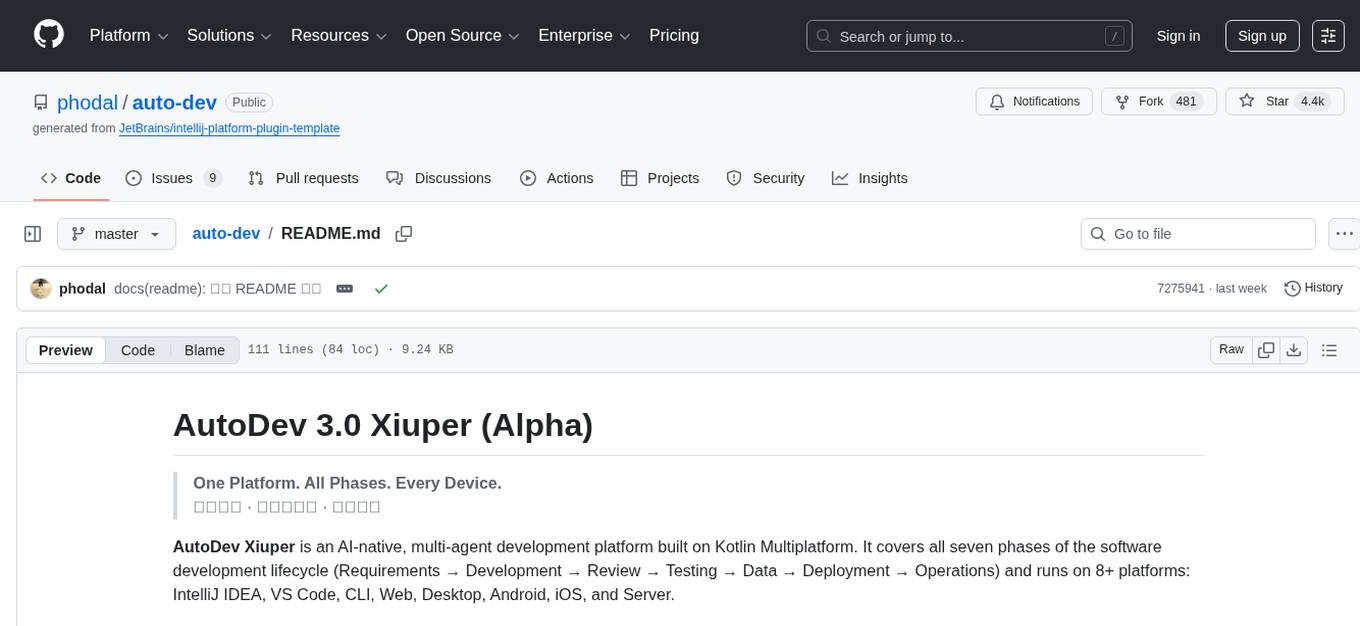

auto-dev

AutoDev Xiuper is an AI-native, multi-agent development platform built on Kotlin Multiplatform. It covers all seven phases of the software development lifecycle and runs on 8+ platforms. The platform provides a unified architecture for writing code once and running it anywhere, with specialized agents for each phase of development. It supports various devices including IntelliJ IDEA, VS Code, CLI, Web, Desktop, Android, iOS, and Server. The platform also offers features like Multi-LLM support, DevIns language for workflow automation, MCP Protocol for extensible tool ecosystem, and code intelligence for multiple programming languages.

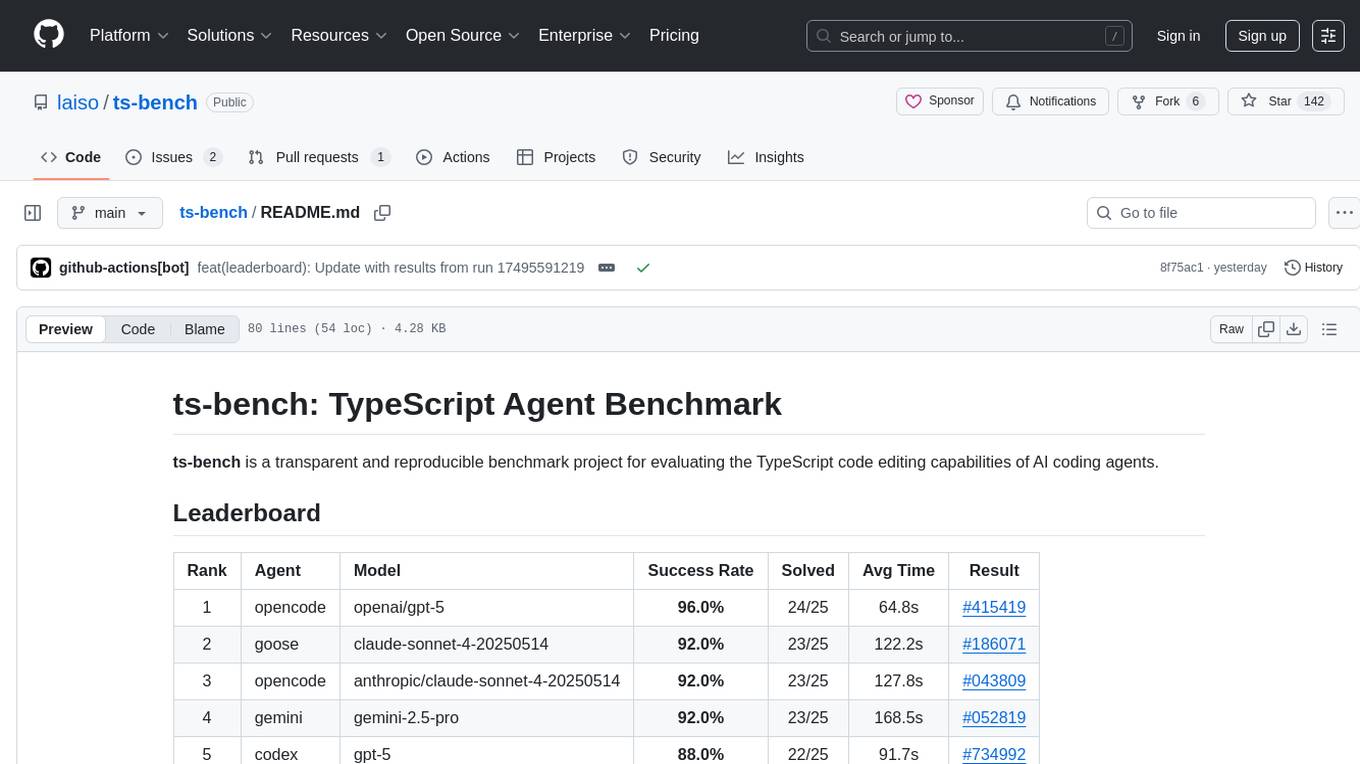

ts-bench

TS-Bench is a performance benchmarking tool for TypeScript projects. It provides detailed insights into the performance of TypeScript code, helping developers optimize their projects. With TS-Bench, users can measure and compare the execution time of different code snippets, functions, or modules. The tool offers a user-friendly interface for running benchmarks and analyzing the results. TS-Bench is a valuable asset for developers looking to enhance the performance of their TypeScript applications.

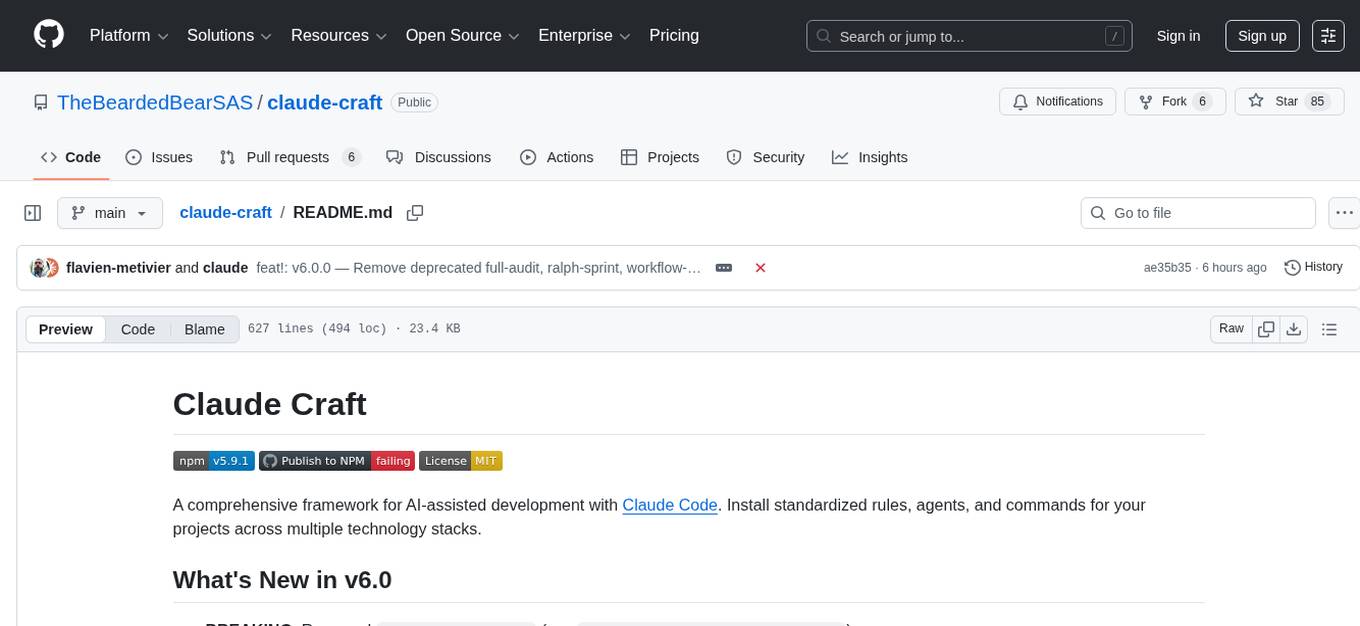

claude-craft

Claude Craft is a comprehensive framework for AI-assisted development with Claude Code, providing standardized rules, agents, and commands across multiple technology stacks. It includes autonomous sprint capabilities, documentation accuracy improvements, CI hardening, and test coverage enhancements. With support for 10 technology stacks, 5 languages, 40 AI agents, 157 slash commands, and various project management features like BMAD v6 framework, Ralph Wiggum loop execution, skills, templates, checklists, and hooks system, Claude Craft offers a robust solution for project development and management. The tool also supports workflow methodology, development tracks, document generation, BMAD v6 project management, quality gates, batch processing, backlog migration, and Claude Code hooks integration.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

agentic-qe

Agentic Quality Engineering Fleet (Agentic QE) is a comprehensive tool designed for quality engineering tasks. It offers a Domain-Driven Design architecture with 13 bounded contexts and 60 specialized QE agents. The tool includes features like TinyDancer intelligent model routing, ReasoningBank learning with Dream cycles, HNSW vector search, Coherence Verification, and integration with other tools like Claude Flow and Agentic Flow. It provides capabilities for test generation, coverage analysis, quality assessment, defect intelligence, requirements validation, code intelligence, security compliance, contract testing, visual accessibility, chaos resilience, learning optimization, and enterprise integration. The tool supports various protocols, LLM providers, and offers a vast library of QE skills for different testing scenarios.

azure-agentic-infraops

Agentic InfraOps is a multi-agent orchestration system for Azure infrastructure development that transforms how you build Azure infrastructure with AI agents. It provides a structured 7-step workflow that coordinates specialized AI agents through a complete infrastructure development cycle: Requirements → Architecture → Design → Plan → Code → Deploy → Documentation. The system enforces Azure Well-Architected Framework (WAF) alignment and Azure Verified Modules (AVM) at every phase, combining the speed of AI coding with best practices in cloud engineering.

sf-skills

sf-skills is a collection of reusable skills for Agentic Salesforce Development, enabling AI-powered code generation, validation, testing, debugging, and deployment. It includes skills for development, quality, foundation, integration, AI & automation, DevOps & tooling. The installation process is newbie-friendly and includes an installer script for various CLIs. The skills are compatible with platforms like Claude Code, OpenCode, Codex, Gemini, Amp, Droid, Cursor, and Agentforce Vibes. The repository is community-driven and aims to strengthen the Salesforce ecosystem.

Athena-Public

Project Athena is a Linux OS designed for AI Agents, providing memory, persistence, scheduling, and governance for AI models. It offers a comprehensive memory layer that survives across sessions, models, and IDEs, allowing users to own their data and port it anywhere. The system is built bottom-up through 1,079+ sessions, focusing on depth and compounding knowledge. Athena features a trilateral feedback loop for cross-model validation, a Model Context Protocol server with 9 tools, and a robust security model with data residency options. The repository structure includes an SDK package, examples for quickstart, scripts, protocols, workflows, and deep documentation. Key concepts cover architecture, knowledge graph, semantic memory, and adaptive latency. Workflows include booting, reasoning modes, planning, research, and iteration. The project has seen significant content expansion, viral validation, and metrics improvements.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

For similar tasks

commands

Production-ready slash commands for Claude Code that accelerate development through intelligent automation and multi-agent orchestration. Contains 52 commands organized into workflows and tools categories. Workflows orchestrate complex tasks with multiple agents, while tools provide focused functionality for specific development tasks. Commands can be used with prefixes for organization or flattened for convenience. Best practices include using workflows for complex tasks and tools for specific scopes, chaining commands strategically, and providing detailed context for effective usage.

apicat

ApiCat is an API documentation management tool that is fully compatible with the OpenAPI specification. With ApiCat, you can freely and efficiently manage your APIs. It integrates the capabilities of LLM, which not only helps you automatically generate API documentation and data models but also creates corresponding test cases based on the API content. Using ApiCat, you can quickly accomplish anything outside of coding, allowing you to focus your energy on the code itself.

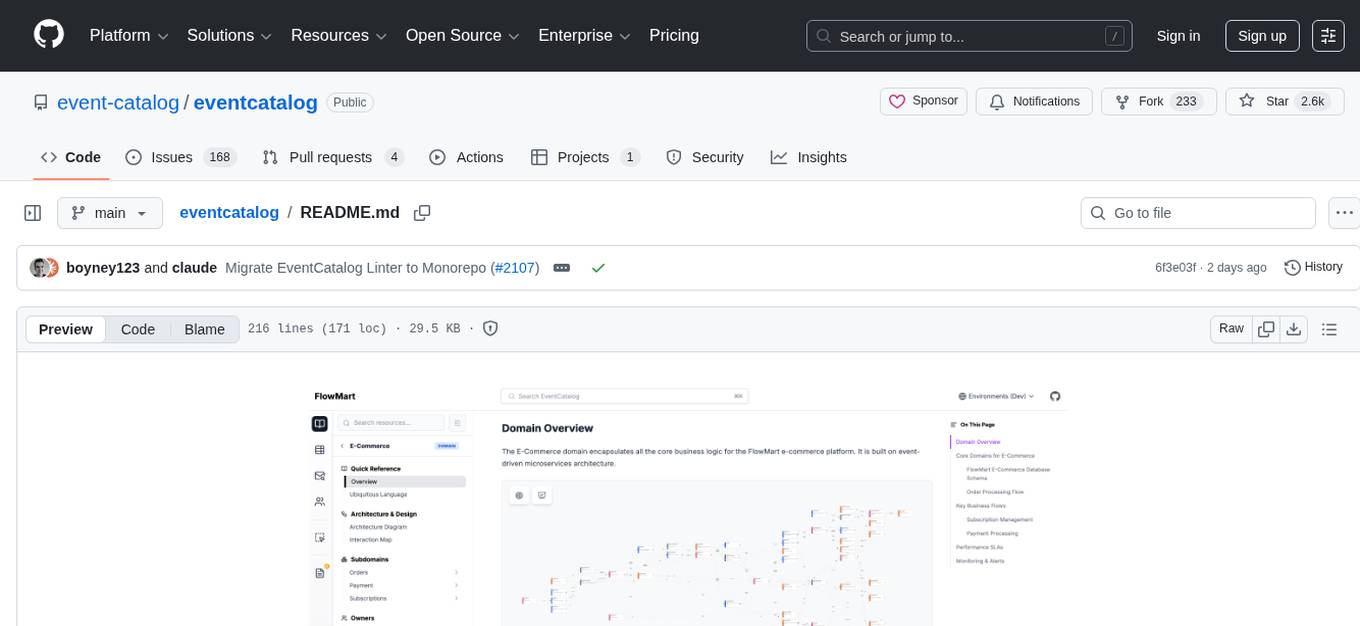

eventcatalog

EventCatalog is an architecture catalog for distributed systems that allows users to document events, services, domains, and flows with AI-powered discovery. It provides features such as AI-native discovery, visual documentation, multi-platform support, enterprise readiness, and customization. The tool is organized as a Turborepo monorepo with different modules for the main catalog application, Node.js SDK, and CLI scaffolding tool. EventCatalog is purpose-built for distributed systems and event-driven architectures, offering advantages over generic documentation tools, vendor-specific tools, and service catalogs.

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

mscclpp

MSCCL++ is a GPU-driven communication stack for scalable AI applications. It redefines inter-GPU communication interfaces, delivering a highly efficient and customizable communication stack for distributed GPU applications. Its design is tailored to the diverse performance optimization scenarios encountered in state-of-the-art AI applications. MSCCL++ provides communication abstractions both at the lowest level, close to the hardware, and at the highest level, close to the application API. The lowest level of abstraction is ultra-lightweight, which lets a user implement the data-movement logic of a collective operation such as AllReduce inside a GPU kernel efficiently, without worrying about the memory ordering of different operations. The modularity of MSCCL++ lets a user construct its building blocks through a high-level abstraction in Python and feed them to a CUDA kernel, which improves productivity. MSCCL++ provides fine-grained synchronous and asynchronous zero-copy, one-sided abstractions for communication primitives such as `put()`, `get()`, `signal()`, `flush()`, and `wait()`. The one-sided abstractions allow a user to asynchronously `put()` data on the remote GPU as soon as it is ready, without requiring the remote side to issue a receive instruction. This makes it easy to implement flexible communication logic, such as overlapping communication with computation or implementing customized collective communication algorithms, without worrying about potential deadlocks. Additionally, the zero-copy capability lets MSCCL++ transfer data directly between user buffers without intermediate internal buffers, which saves GPU bandwidth and memory capacity.
MSCCL++ provides consistent abstractions regardless of the location of the remote GPU (on the local node or on a remote node) or the underlying link (NVLink/xGMI or InfiniBand). This simplifies inter-GPU communication code, which is often complex and therefore error-prone because of the memory ordering of GPU/CPU reads and writes.
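
To make the one-sided `put()` / `signal()` / `wait()` pattern described above more concrete, here is a toy model in plain Python. It is only a sketch of the idea and does not use the MSCCL++ API: `ToyChannel`, `sender`, `receiver`, and `run_demo` are hypothetical names, threads stand in for GPUs, and a Python list stands in for the remote GPU buffer. The point it illustrates is that the sender writes straight into the peer's buffer and then signals, while the receiver never posts a receive and only waits for the signal before reading.

```python
import threading


class ToyChannel:
    """Toy stand-in for a one-sided channel (hypothetical, not the MSCCL++ API)."""

    def __init__(self, size):
        # Memory that conceptually lives on the "remote" peer.
        self.remote_buffer = [0] * size
        # Counts signals that have been sent but not yet waited on.
        self._signals = threading.Semaphore(0)

    def put(self, offset, data):
        # One-sided write: copy directly into the peer's buffer (no staging copy).
        self.remote_buffer[offset:offset + len(data)] = data

    def signal(self):
        # Tell the peer that the preceding put() is complete.
        self._signals.release()

    def wait(self):
        # Block until the peer has signalled one more completed put().
        self._signals.acquire()


def sender(channel, chunks):
    # Push each chunk as soon as it is "ready", then signal.
    for i, chunk in enumerate(chunks):
        channel.put(i * len(chunk), chunk)
        channel.signal()


def receiver(channel, num_chunks, chunk_len, partial_sums):
    # No receive call is issued; the receiver only waits, then reads its own buffer.
    for i in range(num_chunks):
        channel.wait()
        start = i * chunk_len
        partial_sums.append(sum(channel.remote_buffer[start:start + chunk_len]))


def run_demo():
    chunks = [[1, 2], [3, 4], [5, 6]]
    channel = ToyChannel(size=6)
    partial_sums = []
    recv = threading.Thread(target=receiver, args=(channel, 3, 2, partial_sums))
    send = threading.Thread(target=sender, args=(channel, chunks))
    recv.start()
    send.start()
    send.join()
    recv.join()
    return channel.remote_buffer, partial_sums
```

Because the receiver starts consuming chunk `i` as soon as its signal arrives, its per-chunk work overlaps with the sender's remaining transfers, which is the communication/computation overlap the description mentions.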

mlir-air

This repository contains tools and libraries for building AIR platforms, runtimes and compilers.

free-for-life

A massive list of products and services that are completely free.

Table of Contents:

* APIs, Data & ML
* Artificial Intelligence
* BaaS
* Code Editors
* Code Generation
* DNS
* Databases
* Design & UI
* Domains
* Email
* Font
* For Students
* Forms
* Linux Distributions
* Messaging & Streaming
* PaaS
* Payments & Billing
* SSL

AIMr

AIMr is an AI aimbot tool written in Python that leverages modern technologies to achieve an undetected system with a pleasing appearance. It works on any game that uses human-shaped models. To optimize its performance, users should build OpenCV with CUDA. For Valorant, additional perks in the Discord and an Arduino Leonardo R3 are required.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students
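
The idea of an API key that carries both a rate limit and a cost limit can be pictured with a small sketch. This is not BricksLLM code or its configuration format: `KeyPolicy`, `admit`, and all field names are hypothetical, and the one-minute sliding window is just one assumed way a gateway might enforce a rate limit.

```python
from dataclasses import dataclass, field


@dataclass
class KeyPolicy:
    """Hypothetical per-key policy; field names are illustrative only."""
    max_requests_per_minute: int
    max_cost_usd: float
    spent_usd: float = 0.0
    request_times: list = field(default_factory=list)


def admit(policy, now, estimated_cost_usd):
    """Decide whether a request may pass; returns (allowed, reason)."""
    # Keep only the requests made within the last 60 seconds.
    policy.request_times = [t for t in policy.request_times if now - t < 60.0]
    if len(policy.request_times) >= policy.max_requests_per_minute:
        return False, "rate limit exceeded"
    if policy.spent_usd + estimated_cost_usd > policy.max_cost_usd:
        return False, "cost limit exceeded"
    # Admit the request and record its time and cost against the key.
    policy.request_times.append(now)
    policy.spent_usd += estimated_cost_usd
    return True, "ok"
```

For example, a key created with `KeyPolicy(max_requests_per_minute=2, max_cost_usd=1.0)` would reject a third request in the same minute, and would reject any request whose estimated cost pushes total spend above one dollar, regardless of how much time has passed.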

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.