mlir-air

Stars: 77

This repository contains tools and libraries for building AIR platforms, runtimes and compilers.

Copyright© 2019-2023 Advanced Micro Devices, Inc.

Alternative AI tools for mlir-air

Similar Open Source Tools

mlir-air

This repository contains tools and libraries for building AIR platforms, runtimes and compilers.

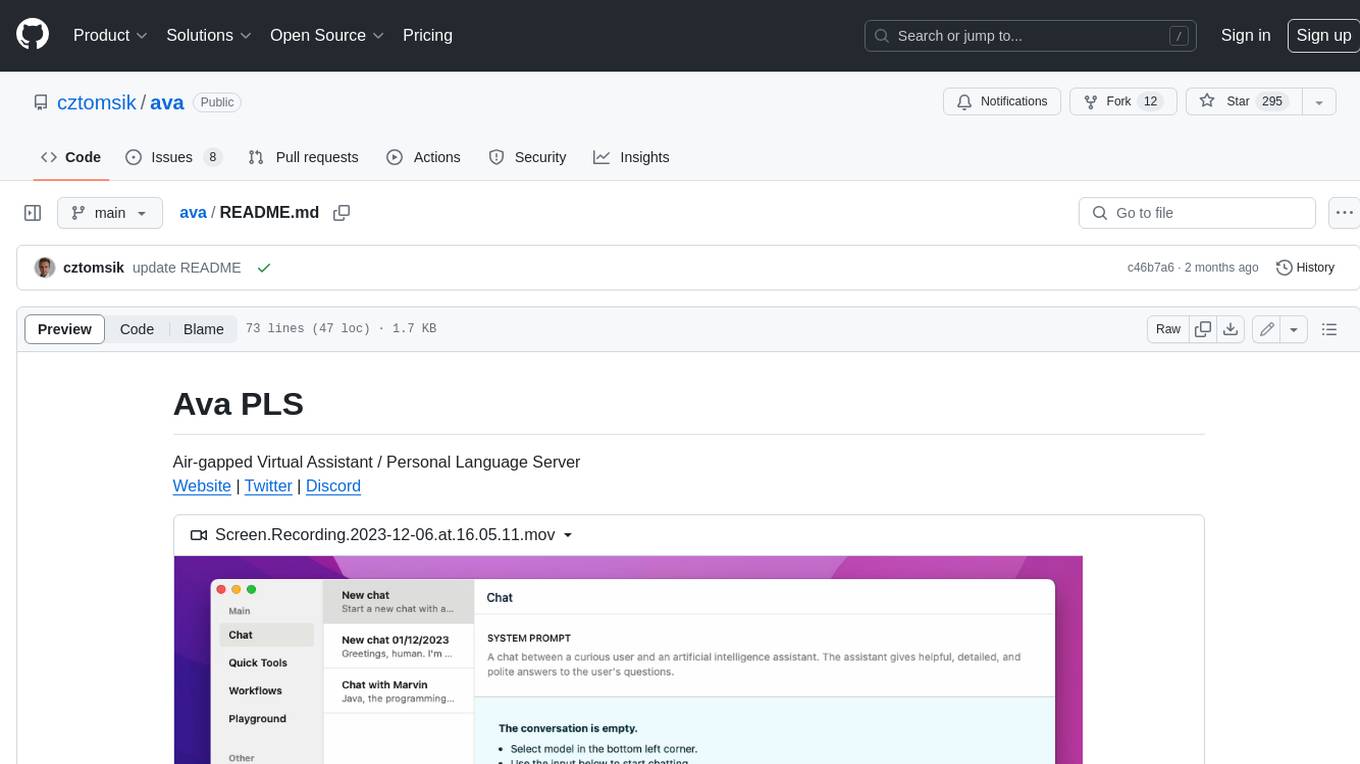

learning-ai

This repository is a collection of notes and code examples related to AI, covering topics such as Tokenization, Architectures, GGML, Llama.cpp, Position Embeddings, GPUs, Vector Databases, and Vision. It also includes in-progress work on Model Context Protocol (MCP) and Voice Activity Detection (VAD) for whisper.cpp. The repository offers exploration code for various AI-related concepts and tools like GGML, Llama.cpp, GPU technologies (CUDA, Kompute, Metal, OpenCL, ROCm, Vulkan), Word embeddings, Huggingface API, and Qdrant Vector Database in both Rust and Python.

jean

Jean is an AI assistant application that helps manage multiple projects, worktrees, and sessions with Claude CLI. It provides automated worktree management, context loading from sessions and GitHub, magic Git commands/prompts, merge conflict resolution, worktree/archiving, and more. The tool is designed to streamline project management and version control tasks.

commandkit

Commandkit is a discord.js meta-framework designed for building powerful, modular, and extensible Discord bots with ease. It provides a range of packages for various functionalities such as AI, caching, devtools, i18n, legacy support, queuing, Redis integration, and task management. The framework aims to simplify the process of creating and managing Discord bots by offering a structured and versatile solution.

ToolJet

ToolJet is an open-source platform for building and deploying internal tools, workflows, and AI agents. It offers a visual builder with drag-and-drop UI, integrations with databases, APIs, SaaS apps, and object storage. The community edition includes features like a visual app builder, ToolJet database, multi-page apps, collaboration tools, extensibility with plugins, code execution, and security measures. ToolJet AI, the enterprise version, adds AI capabilities for app generation, query building, debugging, agent creation, security compliance, user management, environment management, GitSync, branding, access control, embedded apps, and enterprise support.

graphbit

GraphBit is an industry-grade agentic AI framework built for developers and AI teams that demand stability, scalability, and low resource usage. It is written in Rust for maximum performance and safety, delivering significantly lower CPU usage and memory footprint compared to leading alternatives. The framework is designed to run multi-agent workflows in parallel, persist memory across steps, recover from failures, and ensure 100% task success under load. With lightweight architecture, observability, and concurrency support, GraphBit is suitable for deployment in high-scale enterprise environments and low-resource edge scenarios.

flowdeer-dist

FlowDeer Tree is an AI tool designed for managing complex workflows and facilitating deep thoughts. It provides features such as displaying thinking chains, assigning tasks to AI members, utilizing task conclusions as context, copying and importing AI members in JSON format, adjusting node sequences, calling external APIs as plugins, and customizing default task splitting, execution, summarization, and output rewriting prompts. The tool aims to streamline workflow processes and enhance productivity by leveraging artificial intelligence capabilities.

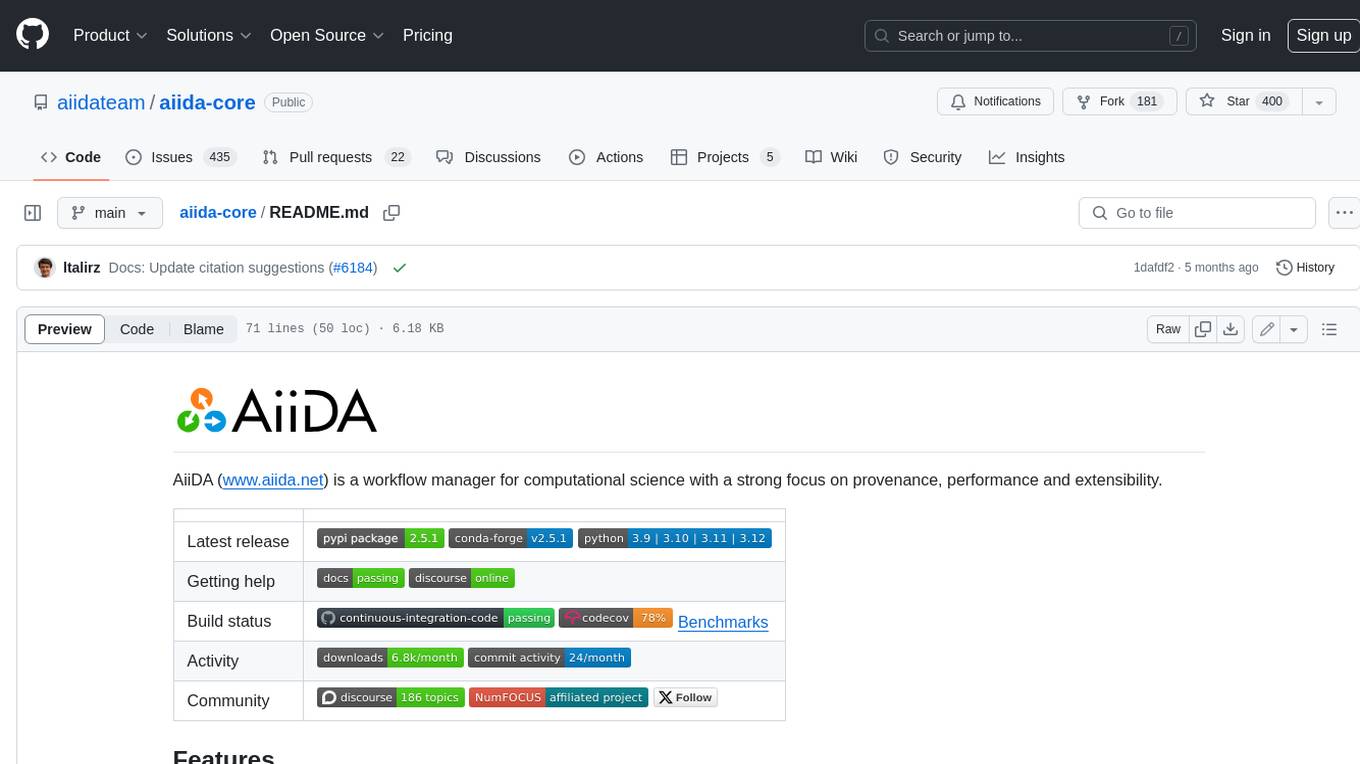

aiida-core

AiiDA (www.aiida.net) is a workflow manager for computational science with a strong focus on provenance, performance and extensibility.

**Features**

* **Workflows:** Write complex, auto-documenting workflows in python, linked to arbitrary executables on local and remote computers. The event-based workflow engine supports tens of thousands of processes per hour with full checkpointing.
* **Data provenance:** Automatically track inputs, outputs & metadata of all calculations in a provenance graph for full reproducibility. Perform fast queries on graphs containing millions of nodes.
* **HPC interface:** Move your calculations to a different computer by changing one line of code. AiiDA is compatible with schedulers like SLURM, PBS Pro, torque, SGE or LSF out of the box.
* **Plugin interface:** Extend AiiDA with plugins for new simulation codes (input generation & parsing), data types, schedulers, transport modes and more.
* **Open Science:** Export subsets of your provenance graph and share them with peers or make them available online for everyone on the Materials Cloud.
* **Open source:** AiiDA is released under the MIT open source license.

quarkus-langchain4j

This repository contains Quarkus extensions that integrate Quarkus with LangChain4j, making it easy to incorporate Large Language Models (LLMs) into your Quarkus applications. Here is a non-exhaustive list of currently supported features: declarative AI services, integration with diverse LLMs (OpenAI GPTs, Hugging Face, Ollama...), tool support, embedding support, document store integration (Redis, Chroma, Infinispan...), native compilation support, and integration with the Quarkus observability stack (metrics, tracing...).

1Panel

1Panel is an open-source, modern web-based control panel for Linux server management. It provides efficient management through a user-friendly web graphical interface, enabling users to effortlessly manage their Linux servers. Key features include host monitoring, file management, database administration, container management, rapid website deployment with WordPress integration, an application store for easy installation and updates, security and reliability through containerization and secure application deployment practices, integrated firewall management, log auditing capabilities, and one-click backup & restore functionality supporting various cloud storage solutions.

MaxKB

MaxKB is a knowledge base Q&A system built on large language models (LLMs). MaxKB stands for Max Knowledge Base, and it aims to become the most powerful brain of the enterprise.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

DataDreamer

DataDreamer is a powerful open-source Python library designed for prompting, synthetic data generation, and training workflows. It is simple, efficient, and research-grade, allowing users to create prompting workflows, generate synthetic datasets, and train models with ease. The library is built for researchers, by researchers, focusing on correctness, best practices, and reproducibility. It offers features like aggressive caching, resumability, support for bleeding-edge techniques, and easy sharing of datasets and models. DataDreamer enables users to run multi-step prompting workflows, generate synthetic datasets for various tasks, and train models by aligning, fine-tuning, instruction-tuning, and distilling them using existing or synthetic data.

CR-Mentor

CR-Mentor is a project that leverages Knowledge Base + LLM to improve development efficiency in Code Review. It provides comprehensive code context understanding, customizable code standards, global code analysis, and risk code identification. The tool aims to enhance code review processes by automating tracking of related files, supporting custom code review standards, generating comprehensive review reports, and identifying potentially risky changes with improvement suggestions.

For similar tasks

awesome-code-ai

A curated list of AI coding tools, including code completion, refactoring, and assistants. This list includes both open-source and commercial tools, as well as tools that are still in development. Some of the most popular AI coding tools include GitHub Copilot, CodiumAI, Codeium, Tabnine, and Replit Ghostwriter.

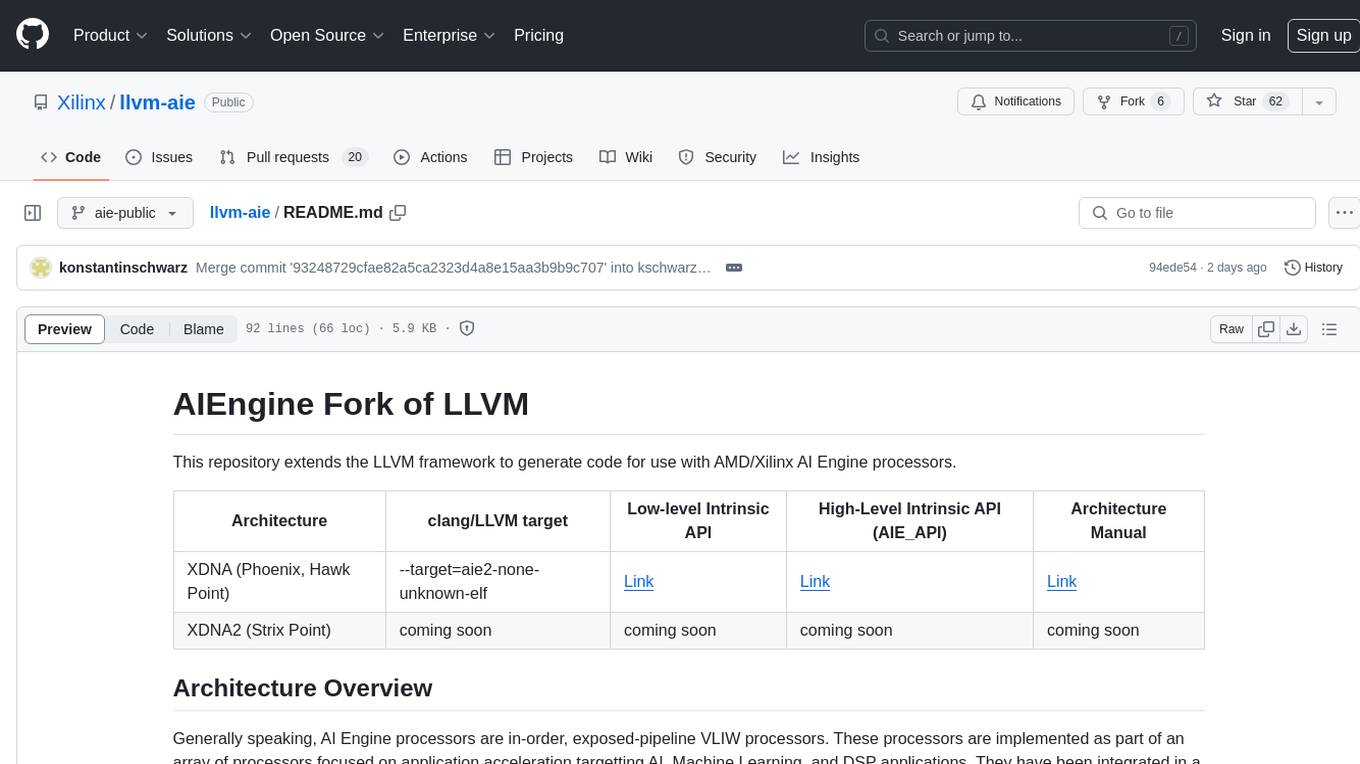

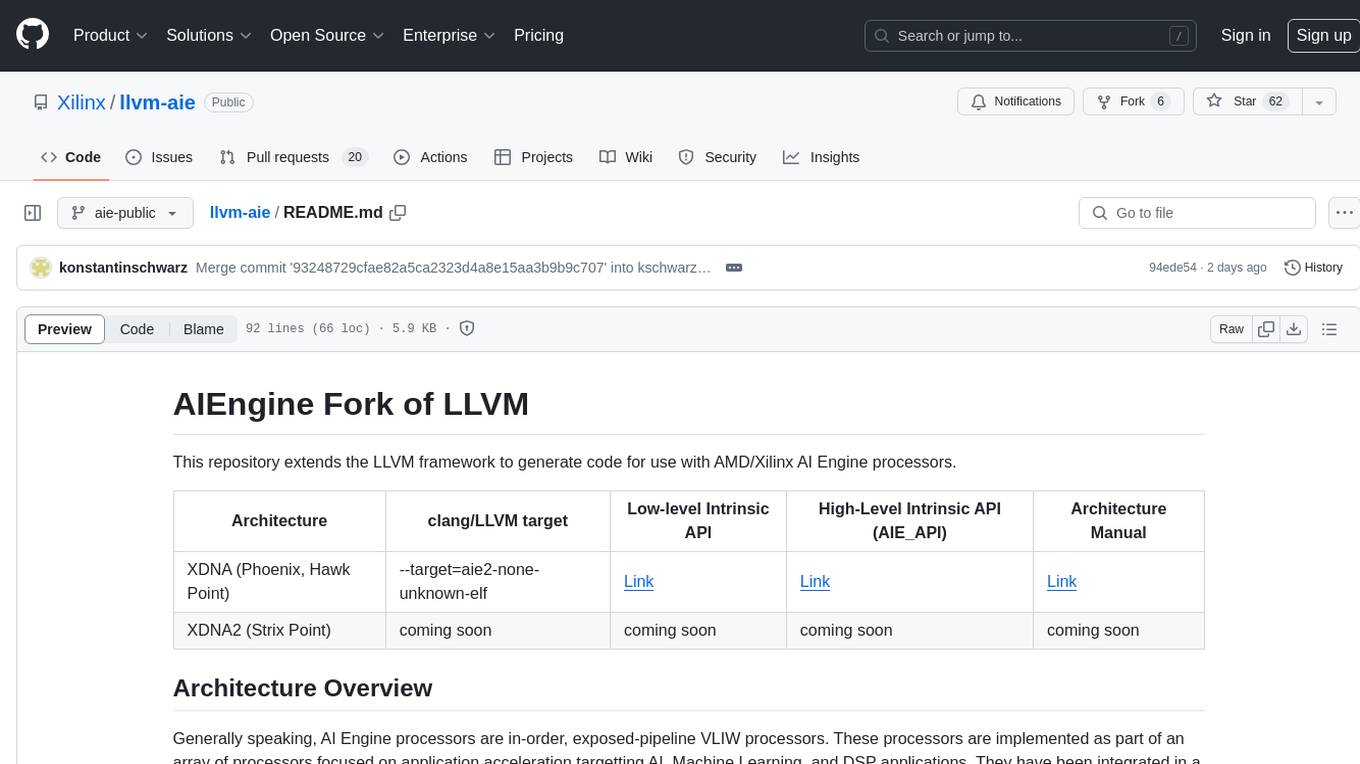

llvm-aie

This repository extends the LLVM framework to generate code for use with AMD/Xilinx AI Engine processors. AI Engine processors are in-order, exposed-pipeline VLIW processors focused on application acceleration for AI, Machine Learning, and DSP applications. The repository adds LLVM support for specific features like non-power of 2 pointers, operand latencies, resource conflicts, negative operand latencies, slot assignment, relocations, code alignment restrictions, and register allocation. It includes support for Clang, LLD, binutils, Compiler-RT, and LLVM-LIBC.

labs-ai-tools-vscode

AI Prompt Runner for VSCode is a research prototype project that provides a VSCode extension to run prompts. Users can install the extension, set a secret key, and run prompts to get results for any project. The tool is designed for developers and researchers to experiment with AI prompts within the VSCode environment.

aily-blockly

Aily Blockly is a blockly IDE under the Aily Project, providing AI-assisted programming capabilities for non-professional users. It aims to integrate numerous AI capabilities to help hardware developers develop more smoothly, ultimately achieving natural language programming. The software offers features like Engineering Project Management, Library Manager, Serial Debug Tool, AI Project Generation, AI Code Generation, AI Library Conversion, Development Board Configuration Generation, and Lightning Compilation Tool. It is currently in the alpha stage, suitable for prototype verification and educational teaching.

langtrace

Langtrace is open-source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that leverage LLM APIs, vector databases, and LLM-based frameworks. It supports the OpenTelemetry (OTEL) standards, and the traces it generates adhere to them. Langtrace offers both a managed SaaS version (Langtrace Cloud) and a self-hosted option. SDKs are available for both TypeScript/JavaScript and Python, making it easy to integrate Langtrace into your applications. Langtrace automatically captures traces from various vendors, including OpenAI, Anthropic, Azure OpenAI, Langchain, LlamaIndex, Pinecone, and ChromaDB.

askui

AskUI is a reliable end-to-end automation tool that depends only on what is shown on your screen, not on the technology or platform you are running on.

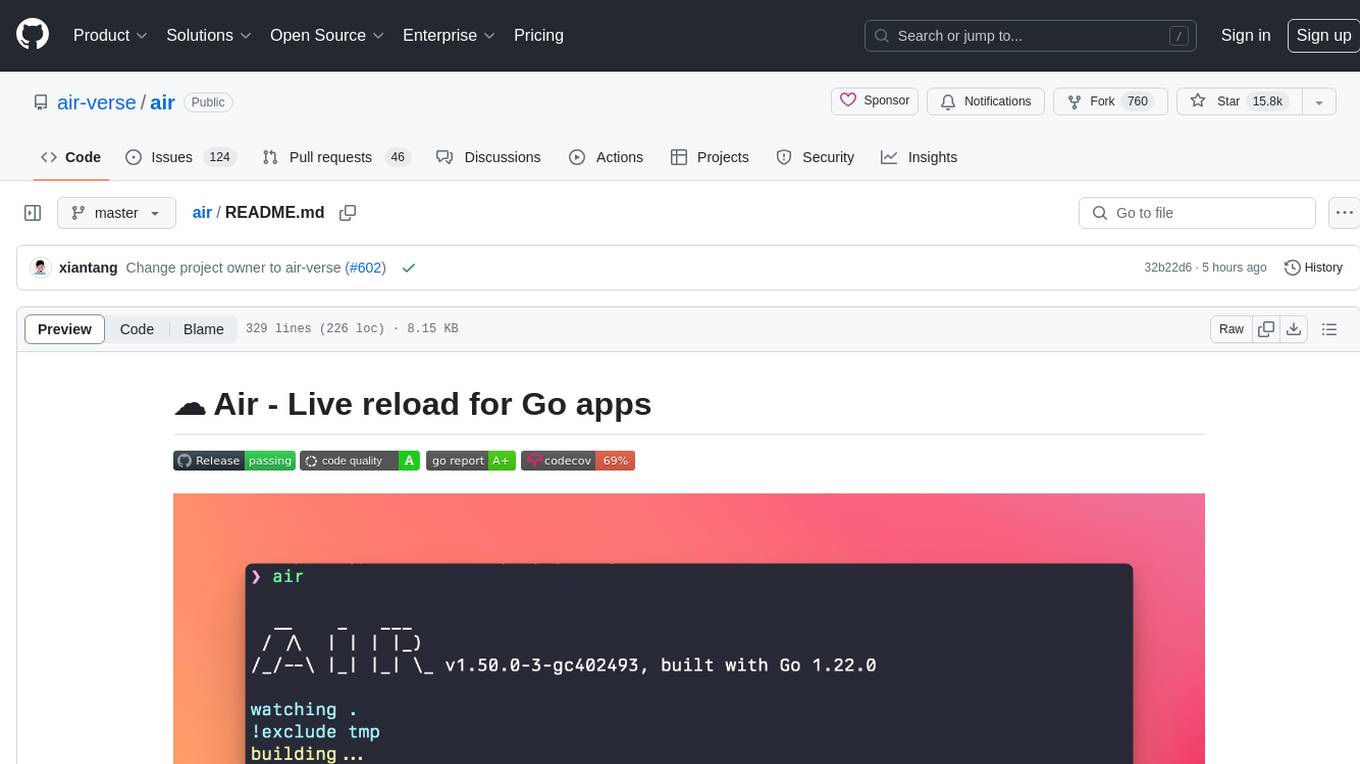

air

Air is a live-reloading command line utility for developing Go applications. It provides colorful log output, a customizable build command (or any other command), support for excluding subdirectories, and the ability to watch new directories created after Air has started. Users can override specific configuration values from command-line arguments and pass runtime arguments to the built binary. Air can be installed via `go install`, `install.sh`, or `goblin.run`, and can also be used with Docker/Podman. It supports debugging and Docker Compose, and its documentation includes a Q&A section for common issues. The tool requires Go 1.16+ for development and welcomes pull requests. Air is released under the GNU General Public License v3.0.

For similar jobs

byteir

The ByteIR Project is a ByteDance model compilation solution. ByteIR includes a compiler, a runtime, and frontends, which together provide an end-to-end model compilation solution. Although all ByteIR components (compiler/runtime/frontends) live in the same repository, each component can technically work independently. The name ByteIR comes from a legacy internal purpose. The ByteIR project is NOT an IR spec definition project. Instead, in most scenarios, ByteIR directly uses several upstream MLIR dialects and Google Mhlo. Most ByteIR compiler passes are compatible with the selected upstream MLIR dialects and Google Mhlo.

husky

Husky is a research-focused programming language designed for next-generation computing. It aims to provide a powerful and ergonomic development experience for various tasks, including system level programming, web/native frontend development, parser/compiler tasks, game development, formal verification, machine learning, and more. With a strong type system and support for human-in-the-loop programming, Husky enables users to tackle complex tasks such as explainable image classification, natural language processing, and reinforcement learning. The language prioritizes debugging, visualization, and human-computer interaction, offering agile compilation and evaluation, multiparadigm support, and a commitment to a good ecosystem.

Aiwnios

Aiwnios is a HolyC Compiler/Runtime designed for 64-bit ARM, RISCV, and x86 machines, including Apple M1 Macs, with plans for supporting other architectures in the future. The project is currently a work in progress, with regular updates and improvements planned. Aiwnios includes a sockets API (currently tested on FreeBSD) and a HolyC assembler accessible through AARCH64. The heart of Aiwnios lies in `arm_backend.c`, where the compiler is located, and a powerful AARCH64 assembler in `arm64_asm.c`. The compiler uses reverse Polish notation and statements are reversed. The developer manual is intended for developers working on the C side, providing detailed explanations of the source code.

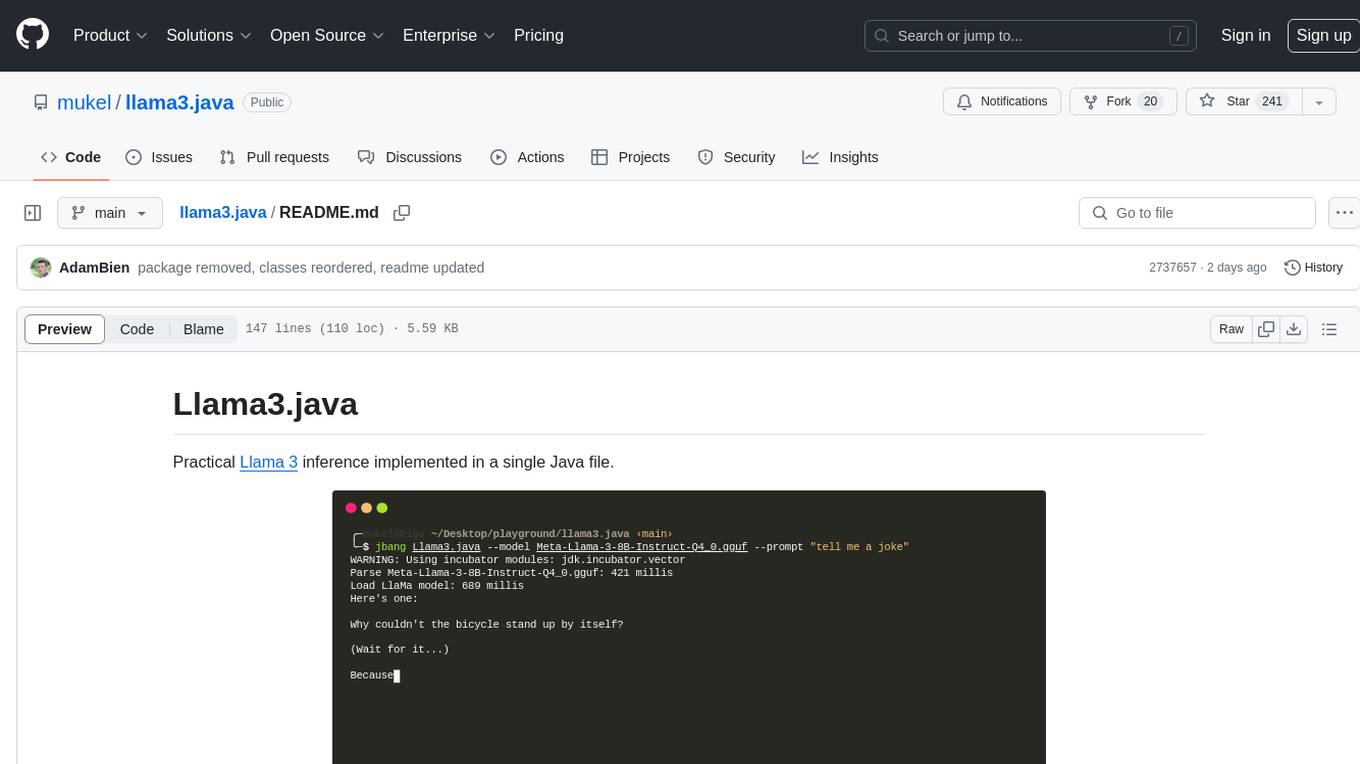

llama3.java

Llama3.java is a practical Llama 3 inference tool implemented in a single Java file. It serves as the successor of llama2.java and is designed for testing and tuning compiler optimizations and features on the JVM, especially for the Graal compiler. The tool features a GGUF format parser, Llama 3 tokenizer, Grouped-Query Attention inference, support for Q8_0 and Q4_0 quantizations, fast matrix-vector multiplication routines using Java's Vector API, and a simple CLI with 'chat' and 'instruct' modes. Users can download quantized .gguf files from huggingface.co for model usage and can also manually quantize to pure 'Q4_0'. The tool requires Java 21+ and supports running from source or building a JAR file for execution. Performance benchmarks show varying tokens/s rates for different models and implementations on different hardware setups.

llvm-aie

This repository extends the LLVM framework to generate code for use with AMD/Xilinx AI Engine processors. AI Engine processors are in-order, exposed-pipeline VLIW processors focused on application acceleration for AI, Machine Learning, and DSP applications. The repository adds LLVM support for specific features like non-power of 2 pointers, operand latencies, resource conflicts, negative operand latencies, slot assignment, relocations, code alignment restrictions, and register allocation. It includes support for Clang, LLD, binutils, Compiler-RT, and LLVM-LIBC.

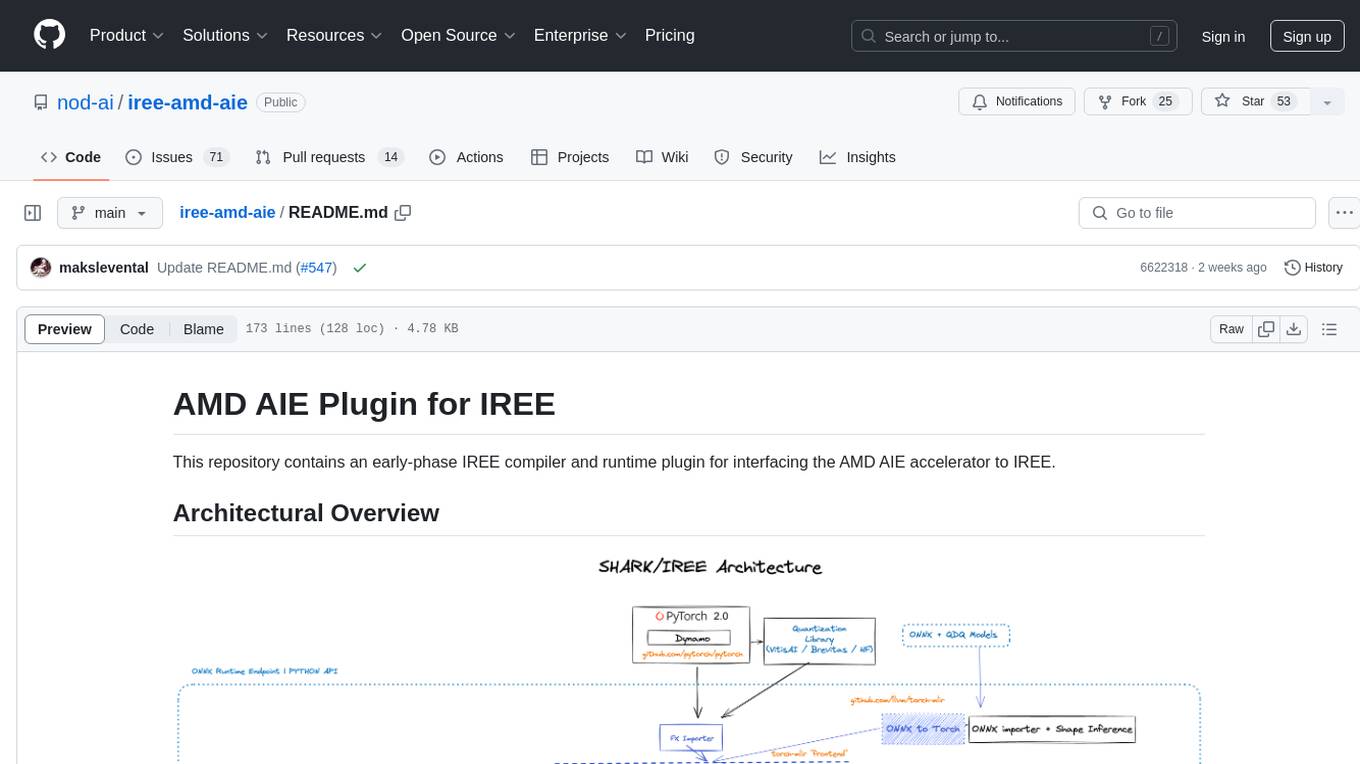

iree-amd-aie

This repository contains an early-phase IREE compiler and runtime plugin for interfacing the AMD AIE accelerator to IREE. It provides architectural overview, developer setup instructions, building guidelines, and runtime driver setup details. The repository focuses on enabling the integration of the AMD AIE accelerator with IREE, offering developers the tools and resources needed to build and run applications leveraging this technology.

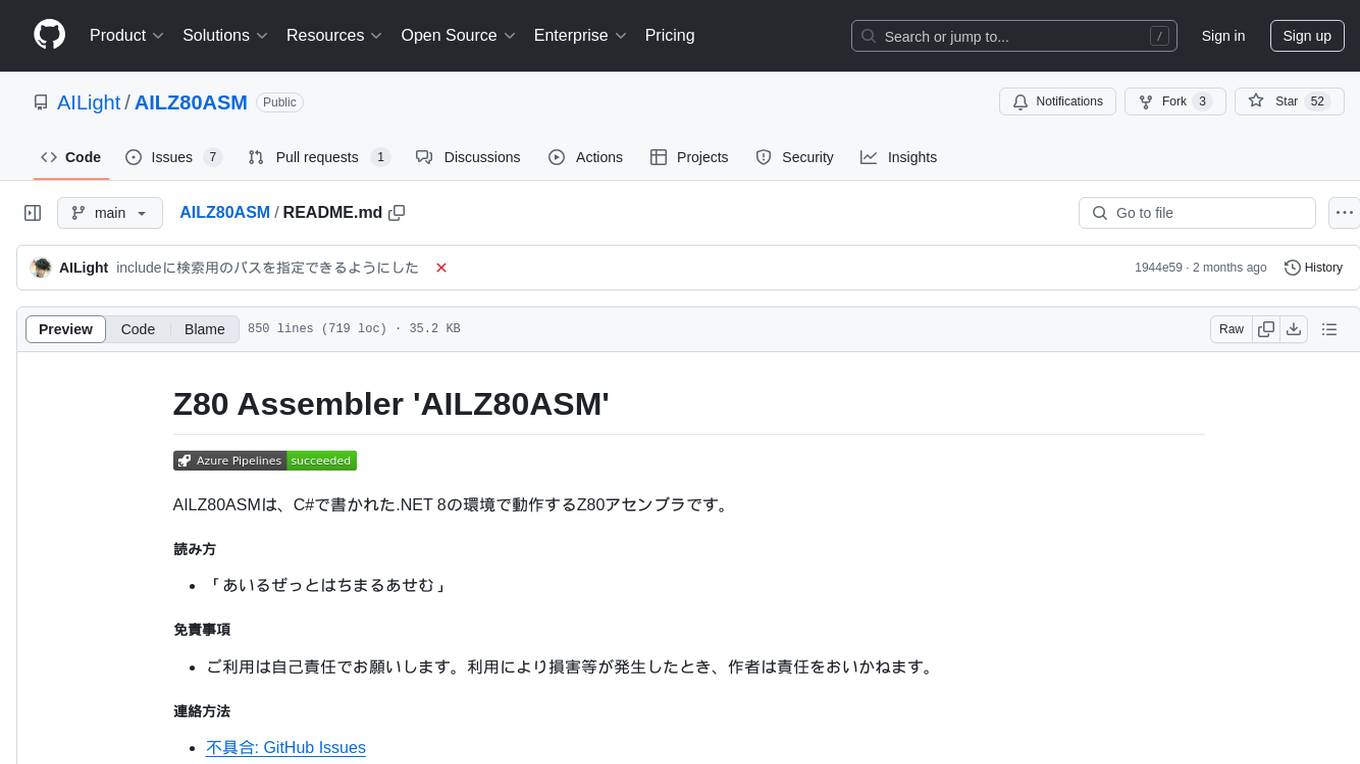

AILZ80ASM

AILZ80ASM is a Z80 assembler written in C# that runs on .NET 8. It assembles Z80 assembly code and generates output files in various formats. The tool supports command-line options for customization and provides features like macros, conditional assembly, and error checking. AILZ80ASM offers fast assembly times and compact output files. It also handles different file encodings and provides a range of built-in functions for working with labels, expressions, and data types.