labs-ai-tools-vscode

Run & debug workflows for AI agents running Dockerized tools in VSCode

Stars: 74

AI Prompt Runner for VSCode is a research prototype project that provides a VSCode extension to run prompts. Users can install the extension, set a secret key, and run prompts to get results for any project. The tool is designed for developers and researchers to experiment with AI prompts within the VSCode environment.

README:

Docker Labs

If you aren't familiar with our experiments and work, please check out https://github.com/docker/labs-ai-tools-for-devs

If you are familiar with our projects, then this is simply a VSCode extension to run prompts.

This project is a research prototype. It is ready to try and will give results for any project you try it on.

Docker internal users: You must be opted-out of mandatory sign-in.

- Install latest VSIX file https://github.com/docker/labs-ai-tools-vscode/releases

- Execute command

>Docker AI: Set Secret Key...to enter the api key for your model provider. This stop is optional if your pompt specifies a local model viaurl:andmodel:attributes. - Run a prompt

Create file test.md

test.md

---

extractors:

- name: project-facts

functions:

- name: write_files

---

# Improv Test

This is a test prompt...

# Prompt system

You are Dwight Schrute.

# Prompt user

Tell me about my project.

My project uses the following languages:

{{project-facts.languages}}

My project has the following files:

{{project-facts.files}}

Run command >Docker AI: Run this prompt

https://vonwig.github.io/prompts.docs

# docker:command=build-and-install

yarn run compile

yarn run package

# Outputs vsix file

code --install-extension your-file.vsixFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for labs-ai-tools-vscode

Similar Open Source Tools

labs-ai-tools-vscode

AI Prompt Runner for VSCode is a research prototype project that provides a VSCode extension to run prompts. Users can install the extension, set a secret key, and run prompts to get results for any project. The tool is designed for developers and researchers to experiment with AI prompts within the VSCode environment.

buildware-ai

Buildware is a tool designed to help developers accelerate their code shipping process by leveraging AI technology. Users can build a code instruction system, submit an issue, and receive an AI-generated pull request. The tool is created by Mckay Wrigley and Tyler Bruno at Takeoff AI. Buildware offers a simple setup process involving cloning the repository, installing dependencies, setting up environment variables, configuring a database, and obtaining a GitHub Personal Access Token (PAT). The tool is currently being updated to include advanced features such as Linear integration, local codebase mode, and team support.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

AilyticMinds

AilyticMinds Chatbot UI is an open-source AI chat app designed for easy deployment and improved backend compatibility. It provides a user-friendly interface for creating and hosting chatbots, with features like mobile layout optimization and support for various providers. The tool utilizes Supabase for data storage and management, offering a secure and scalable solution for chatbot development. Users can quickly set up their own instances locally or in the cloud, with detailed instructions provided for installation and configuration.

LLM-Engineers-Handbook

The LLM Engineer's Handbook is an official repository containing a comprehensive guide on creating an end-to-end LLM-based system using best practices. It covers data collection & generation, LLM training pipeline, a simple RAG system, production-ready AWS deployment, comprehensive monitoring, and testing and evaluation framework. The repository includes detailed instructions on setting up local and cloud dependencies, project structure, installation steps, infrastructure setup, pipelines for data processing, training, and inference, as well as QA, tests, and running the project end-to-end.

PrAIvateSearch

PrAIvateSearch is a NextJS web application that aims to implement similar features to SearchGPT in an open-source, local, and private way. It allows users to search the web using their own AI model. The application provides a user-friendly interface for interacting with the AI model and accessing search results. PrAIvateSearch is designed to be easy to install and use, with detailed instructions provided in the readme file. The project is in beta stage and welcomes contributions from the community to improve and enhance its functionality. Users are encouraged to support the project through funding to help it grow and continue to be maintained as an open-source tool under the MIT license.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

SWELancer-Benchmark

SWE-Lancer is a benchmark repository containing datasets and code for the paper 'SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?'. It provides instructions for package management, building Docker images, configuring environment variables, and running evaluations. Users can use this tool to assess the performance of language models in real-world freelance software engineering tasks.

seer

Seer is a service that provides AI capabilities to Sentry by running inference on Sentry issues and providing user insights. It is currently in early development and not yet compatible with self-hosted Sentry instances. The tool requires access to internal Sentry resources and is intended for internal Sentry employees. Users can set up the environment, download model artifacts, integrate with local Sentry, run evaluations for Autofix AI agent, and deploy to a sandbox staging environment. Development commands include applying database migrations, creating new migrations, running tests, and more. The tool also supports VCRs for recording and replaying HTTP requests.

dravid

Dravid (DRD) is an advanced, AI-powered CLI coding framework designed to follow user instructions until the job is completed, including fixing errors. It can generate code, fix errors, handle image queries, manage file operations, integrate with external APIs, and provide a development server with error handling. Dravid is extensible and requires Python 3.7+ and CLAUDE_API_KEY. Users can interact with Dravid through CLI commands for various tasks like creating projects, asking questions, generating content, handling metadata, and file-specific queries. It supports use cases like Next.js project development, working with existing projects, exploring new languages, Ruby on Rails project development, and Python project development. Dravid's project structure includes directories for source code, CLI modules, API interaction, utility functions, AI prompt templates, metadata management, and tests. Contributions are welcome, and development setup involves cloning the repository, installing dependencies with Poetry, setting up environment variables, and using Dravid for project enhancements.

shinkai-node

Shinkai Node is a tool that enables users to create AI agents without coding. Users can define tasks, schedule actions, and let Shinkai generate custom code. The tool also provides native crypto support. It has a companion repo called Shinkai Apps for the frontend. The tool offers easy local compilation and OpenAPI support for generating schemas and Swagger UI. Users can run tests and dockerized tests for the tool. It also provides guidance on releasing a new version.

CoML

CoML (formerly MLCopilot) is an interactive coding assistant for data scientists and machine learning developers, empowered on large language models. It offers an out-of-the-box interactive natural language programming interface for data mining and machine learning tasks, integration with Jupyter lab and Jupyter notebook, and a built-in large knowledge base of machine learning to enhance the ability to solve complex tasks. The tool is designed to assist users in coding tasks related to data analysis and machine learning using natural language commands within Jupyter environments.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

docetl

DocETL is a tool for creating and executing data processing pipelines, especially suited for complex document processing tasks. It offers a low-code, declarative YAML interface to define LLM-powered operations on complex data. Ideal for maximizing correctness and output quality for semantic processing on a collection of data, representing complex tasks via map-reduce, maximizing LLM accuracy, handling long documents, and automating task retries based on validation criteria.

sagentic-af

Sagentic.ai Agent Framework is a tool for creating AI agents with hot reloading dev server. It allows users to spawn agents locally by calling specific endpoint. The framework comes with detailed documentation and supports contributions, issues, and feature requests. It is MIT licensed and maintained by Ahyve Inc.

CLI

Bito CLI provides a command line interface to the Bito AI chat functionality, allowing users to interact with the AI through commands. It supports complex automation and workflows, with features like long prompts and slash commands. Users can install Bito CLI on Mac, Linux, and Windows systems using various methods. The tool also offers configuration options for AI model type, access key management, and output language customization. Bito CLI is designed to enhance user experience in querying AI models and automating tasks through the command line interface.

For similar tasks

awesome-code-ai

A curated list of AI coding tools, including code completion, refactoring, and assistants. This list includes both open-source and commercial tools, as well as tools that are still in development. Some of the most popular AI coding tools include GitHub Copilot, CodiumAI, Codeium, Tabnine, and Replit Ghostwriter.

mlir-air

This repository contains tools and libraries for building AIR platforms, runtimes and compilers.

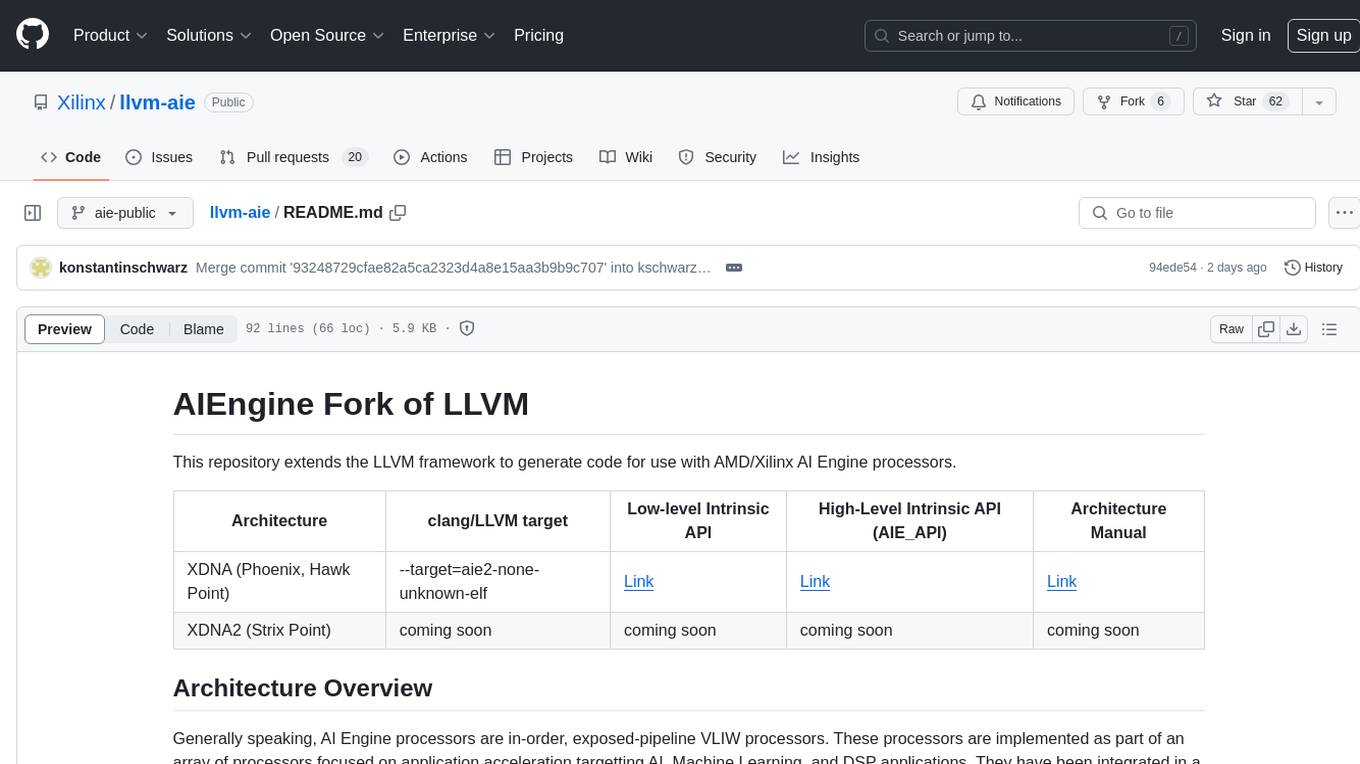

llvm-aie

This repository extends the LLVM framework to generate code for use with AMD/Xilinx AI Engine processors. AI Engine processors are in-order, exposed-pipeline VLIW processors focused on application acceleration for AI, Machine Learning, and DSP applications. The repository adds LLVM support for specific features like non-power of 2 pointers, operand latencies, resource conflicts, negative operand latencies, slot assignment, relocations, code alignment restrictions, and register allocation. It includes support for Clang, LLD, binutils, Compiler-RT, and LLVM-LIBC.

labs-ai-tools-vscode

AI Prompt Runner for VSCode is a research prototype project that provides a VSCode extension to run prompts. Users can install the extension, set a secret key, and run prompts to get results for any project. The tool is designed for developers and researchers to experiment with AI prompts within the VSCode environment.

aily-blockly

Aily Blockly is a blockly IDE under the Aily Project, providing AI-assisted programming capabilities for non-professional users. It aims to integrate numerous AI capabilities to help hardware developers develop more smoothly, ultimately achieving natural language programming. The software offers features like Engineering Project Management, Library Manager, Serial Debug Tool, AI Project Generation, AI Code Generation, AI Library Conversion, Development Board Configuration Generation, and Lightning Compilation Tool. It is currently in the alpha stage, suitable for prototype verification and educational teaching.

ai

Leverage AI to generate pull request descriptions based on the diff & commit messages. Install the Chrome Extension to get started. The project uses Node.js and NPM. It provides developer documentation and usage guide. The extension can be installed on Chromium-based browsers by loading the unpacked `dist` directory. The core team includes Brian Douglas, Divyansh Singh, and Anush Shetty. Contributors can open issues and find good first issues in the Discord channel. The project uses @open-sauced/conventional-commit for commit utility and semantic-release for generating changelogs and releases. Join the community in Discord, watch videos on the YouTube Channel, and find resources on the Dev.to org. Licensed under MIT © Open Sauced.

llm-ollama

LLM-ollama is a plugin that provides access to models running on an Ollama server. It allows users to query the Ollama server for a list of models, register them with LLM, and use them for prompting, chatting, and embedding. The plugin supports image attachments, embeddings, JSON schemas, async models, model aliases, and model options. Users can interact with Ollama models through the plugin in a seamless and efficient manner.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.