air

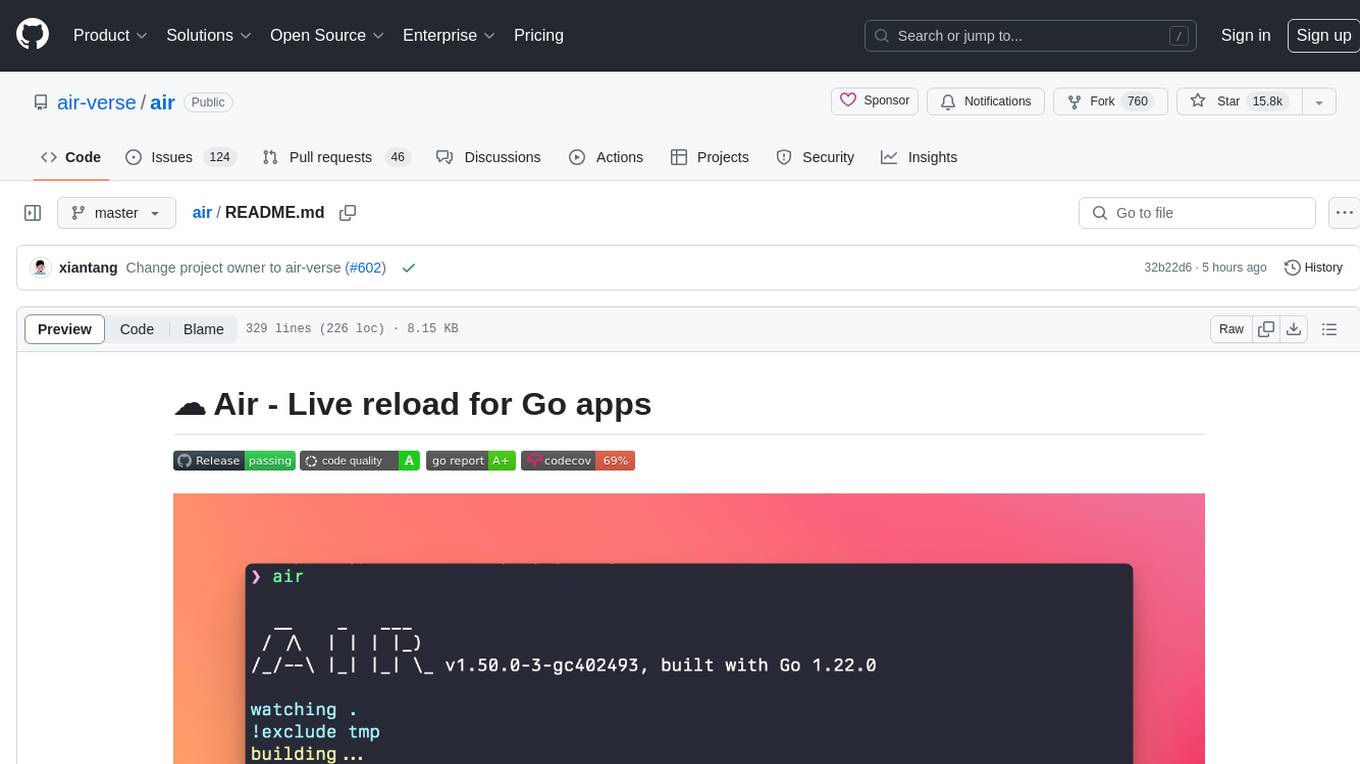

☁️ Live reload for Go apps

Stars: 19553

Air is a live-reloading command line utility for developing Go applications. It provides colorful log output, customizable build or any command, support for excluding subdirectories, and allows watching new directories after Air started. Users can overwrite specific configuration from arguments and pass runtime arguments for running the built binary. Air can be installed via `go install`, `install.sh`, or `goblin.run`, and can also be used with Docker/Podman. It supports debugging, Docker Compose, and provides a Q&A section for common issues. The tool requires Go 1.16+ for development and welcomes pull requests. Air is released under the GNU General Public License v3.0.

README:

When I started developing websites in Go with the gin framework, it was a pity that gin lacked a live-reloading function. So I searched around and tried fresh, but it did not seem flexible enough, so I decided to rewrite it in a better way. And so Air was born. Many thanks also to pilu: no fresh, no air :)

Air is yet another live-reloading command line utility for developing Go applications. Run air in your project root directory, leave it alone, and focus on your code.

Note: This tool has nothing to do with hot-deploy for production.

- Colorful log output

- Customize build or any command

- Support excluding subdirectories

- Allow watching new directories after Air started

- Better building process

Support air config fields as arguments:

You can view the available command-line arguments by running the following commands:

air -h

or

air --help

To set the build command and the run command without a config file, pass them as arguments:

air --build.cmd "go build -o bin/api cmd/run.go" --build.bin "./bin/api"

Use a comma to separate items for arguments that take a list as input:

air --build.cmd "go build -o bin/api cmd/run.go" --build.bin "./bin/api" --build.exclude_dir "templates,build"

With go 1.23 or higher:

go install github.com/air-verse/air@latest

# binary will be $(go env GOPATH)/bin/air

curl -sSfL https://raw.githubusercontent.com/air-verse/air/master/install.sh | sh -s -- -b $(go env GOPATH)/bin

# or install it into ./bin/

curl -sSfL https://raw.githubusercontent.com/air-verse/air/master/install.sh | sh -s

air -v

Via goblin.run

# binary will be /usr/local/bin/air

curl -sSfL https://goblin.run/github.com/air-verse/air | sh

# to put to a custom path

curl -sSfL https://goblin.run/github.com/air-verse/air | PREFIX=/tmp sh

Pull the Docker image cosmtrek/air.

docker/podman run -it --rm \
    -w "<PROJECT>" \
    -e "air_wd=<PROJECT>" \
    -v $(pwd):<PROJECT> \
    -p <PORT>:<APP SERVER PORT> \
    cosmtrek/air \
    -c <CONF>

If you want to use air continuously like a normal app, you can create a function in your ${SHELL}rc (Bash, Zsh, etc…)

air() {
    podman/docker run -it --rm \
        -w "$PWD" -v "$PWD":"$PWD" \
        -p "$AIR_PORT":"$AIR_PORT" \
        docker.io/cosmtrek/air "$@"
}

<PROJECT> is your project path in the container, e.g. /go/example

If you want to enter the container, add --entrypoint=bash.

For example

One of my projects runs in Docker:

docker run -it --rm \
    -w "/go/src/github.com/cosmtrek/hub" \
    -v $(pwd):/go/src/github.com/cosmtrek/hub \
    -p 9090:9090 \
    cosmtrek/air

Another example:

cd /go/src/github.com/cosmtrek/hub

AIR_PORT=8080 air -c "config.toml"

This replaces $PWD with the current directory; $AIR_PORT is the port to publish, and $@ passes along the arguments of the application itself, for example -c.

For less typing, you could add alias air='~/.air' to your .bashrc or .zshrc.

First, enter your project directory:

cd /path/to/your_project

The simplest usage is to run

# first look for `.air.toml` in the current directory; if not found, use defaults

air -c .air.toml

You can initialize the .air.toml configuration file in the current directory with the default settings by running the following command.

air init

After this, you can just run the air command without additional arguments, and it will use the .air.toml file for configuration.

air

To modify the configuration, refer to the air_example.toml file.
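To try the commands above on something concrete, here is a minimal sketch of a project Air could watch. The file name main.go, the `greeting` helper, and port 8080 are assumptions made for this illustration; none of them come from Air itself.

```go
// main.go: a hypothetical sample app for trying out Air; not part of Air.
package main

import (
	"fmt"
	"log"
	"net/http"
)

// greeting builds the response body. Edit the text while Air is running
// and save the file to trigger a rebuild and restart.
func greeting(name string) string {
	if name == "" {
		name = "world"
	}
	return fmt.Sprintf("Hello, %s!\n", name)
}

func main() {
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, greeting(r.URL.Query().Get("name")))
	})
	// Port 8080 is an arbitrary choice for this sketch.
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

With this file in the root of a Go module, running air there should rebuild and restart the server each time the file is saved.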

You can pass arguments for running the built binary by adding them after the air command.

# Will run ./tmp/main bench

air bench

# Will run ./tmp/main server --port 8080

air server --port 8080

You can separate the arguments for the air command from those for the built binary with the -- argument.

# Will run ./tmp/main -h

air -- -h

# Will run air with custom config and pass -h argument to the built binary

air -c .air.toml -- -h

services:
  my-project-with-air:
    image: cosmtrek/air
    # working_dir value has to be the same as the mapped volume
    working_dir: /project-package
    ports:
      - <any>:<any>
    environment:
      - ENV_A=${ENV_A}
      - ENV_B=${ENV_B}
      - ENV_C=${ENV_C}
    volumes:
      - ./project-relative-path/:/project-package/

air -d prints all logs.
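To illustrate the runtime arguments described earlier, here is a minimal sketch of how a built binary might receive them. The `server` subcommand and `--port` flag mirror the example commands; `parseArgs` and the default port 3000 are hypothetical choices for this sketch and are not part of Air.

```go
// A hypothetical program showing how arguments forwarded by Air arrive.
package main

import (
	"flag"
	"fmt"
	"os"
)

// parseArgs interprets the arguments the binary receives (os.Args[1:]).
// The first argument is treated as a subcommand; the rest are its flags.
func parseArgs(args []string) (cmd string, port int, err error) {
	if len(args) == 0 {
		return "", 0, fmt.Errorf("expected a subcommand")
	}
	cmd = args[0]
	fs := flag.NewFlagSet(cmd, flag.ContinueOnError)
	// Go's flag package accepts both -port and --port.
	fs.IntVar(&port, "port", 3000, "port to listen on")
	if err = fs.Parse(args[1:]); err != nil {
		return "", 0, err
	}
	return cmd, port, nil
}

func main() {
	cmd, port, err := parseArgs(os.Args[1:])
	if err != nil {
		fmt.Fprintln(os.Stderr, "error:", err)
		os.Exit(2)
	}
	fmt.Printf("command=%s port=%d\n", cmd, port)
}
```

Assuming Air builds this program to ./tmp/main, running air server --port 8080 would start it with the arguments server --port 8080, and it would print command=server port=8080.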

Dockerfile

# Choose whatever you want, version >= 1.16

FROM golang:1.23-alpine

WORKDIR /app

RUN go install github.com/air-verse/air@latest

COPY go.mod go.sum ./

RUN go mod download

CMD ["air", "-c", ".air.toml"]

docker-compose.yaml

version: "3.8"
services:
  web:
    build:
      context: .
      # Correct the path to your Dockerfile
      dockerfile: Dockerfile
    ports:
      - 8080:3000
    # Important to bind/mount your codebase dir to /app dir for live reload
    volumes:
      - ./:/app

export GOPATH=$HOME/xxxxx

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

export PATH=$PATH:$(go env GOPATH)/bin # Confirm this line in your .profile and make sure to source the .profile if you add it!

Use \ to escape the ' in the bin. Related issue: #305

[build]

cmd = "/usr/bin/true"

Refer to issue #512 for additional details.

- Ensure your static files are covered by include_dir, include_ext, or include_file.

- Ensure your HTML has a </body> tag.

- Activate the proxy with the following config:

[proxy]

enabled = true

proxy_port = <air proxy port>

app_port = <your server port>

Please note that it requires Go 1.16+ since I use go mod to manage dependencies.

# Fork this project

# Clone it

mkdir -p $GOPATH/src/github.com/cosmtrek

cd $GOPATH/src/github.com/cosmtrek

git clone git@github.com:<YOUR USERNAME>/air.git

# Install dependencies

cd air

make ci

# Explore it and happy hacking!

make install

Pull requests are welcome.

# Checkout to master

git checkout master

# Add the version that needs to be released

git tag v1.xx.x

# Push to remote

git push origin v1.xx.x

# The CI will process and release a new version. Wait about 5 min, and you can fetch the latest version

Huge thanks to the many supporters. I will always remember your kindness.


Alternative AI tools for air

Similar Open Source Tools

air

Air is a live-reloading command line utility for developing Go applications. It provides colorful log output, allows customization of build or any command, supports excluding subdirectories, and allows watching new directories after Air has started. Air can be installed via `go install`, `install.sh`, `goblin.run`, or Docker/Podman. To use Air, simply run `air` in your project root directory and leave it alone to focus on your code. Air has nothing to do with hot-deploy for production.

shell-pilot

Shell-pilot is a simple, lightweight shell script designed to interact with various AI models such as OpenAI, Ollama, Mistral AI, LocalAI, ZhipuAI, Anthropic, Moonshot, and Novita AI from the terminal. It enhances intelligent system management without any dependencies, offering features like setting up a local LLM repository, using official models and APIs, viewing history and session persistence, passing input prompts with pipe/redirector, listing available models, setting request parameters, generating and running commands in the terminal, easy configuration setup, system package version checking, and managing system aliases.

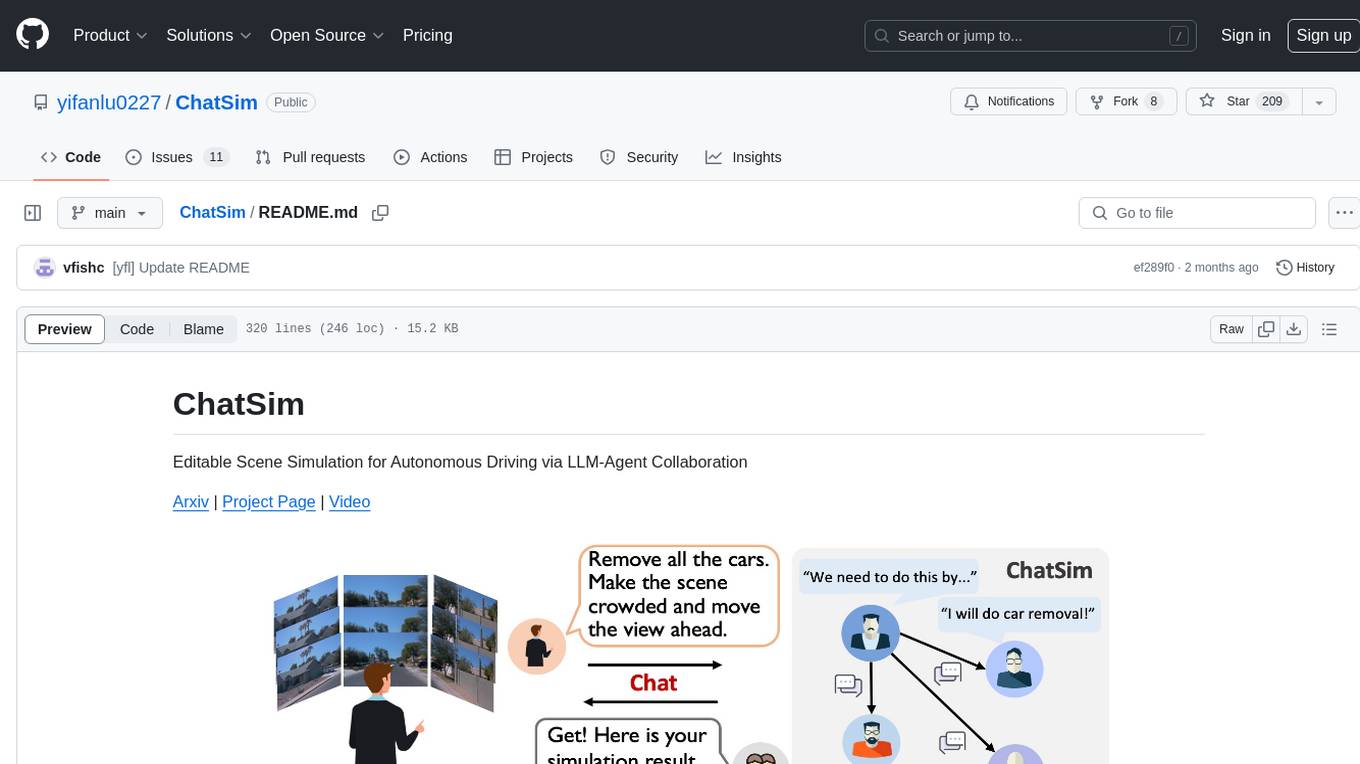

ChatSim

ChatSim is a tool designed for editable scene simulation for autonomous driving via LLM-Agent collaboration. It provides functionalities for setting up the environment, installing necessary dependencies like McNeRF and Inpainting tools, and preparing data for simulation. Users can train models, simulate scenes, and track trajectories for smoother and more realistic results. The tool integrates with Blender software and offers options for training McNeRF models and McLight's skydome estimation network. It also includes a trajectory tracking module for improved trajectory tracking. ChatSim aims to facilitate the simulation of autonomous driving scenarios with collaborative LLM-Agents.

fish-ai

fish-ai is a tool that adds AI functionality to Fish shell. It can be integrated with various AI providers like OpenAI, Azure OpenAI, Google, Hugging Face, Mistral, or a self-hosted LLM. Users can transform comments into commands, autocomplete commands, and suggest fixes. The tool allows customization through configuration files and supports switching between contexts. Data privacy is maintained by redacting sensitive information before submission to the AI models. Development features include debug logging, testing, and creating releases.

nosia

Nosia is a platform that allows users to run an AI model on their own data. It is designed to be easy to install and use. Users can follow the provided guides for quickstart, API usage, upgrading, starting, stopping, and troubleshooting. The platform supports custom installations with options for remote Ollama instances, custom completion models, and custom embeddings models. Advanced installation instructions are also available for macOS with a Debian or Ubuntu VM setup. Users can access the platform at 'https://nosia.localhost' and troubleshoot any issues by checking logs and job statuses.

raycast_api_proxy

The Raycast AI Proxy is a tool that acts as a proxy for the Raycast AI application, allowing users to utilize the application without subscribing. It intercepts and forwards Raycast requests to various AI APIs, then reformats the responses for Raycast. The tool supports multiple AI providers and allows for custom model configurations. Users can generate self-signed certificates, add them to the system keychain, and modify DNS settings to redirect requests to the proxy. The tool is designed to work with providers like OpenAI, Azure OpenAI, Google, and more, enabling tasks such as AI chat completions, translations, and image generation.

tenere

Tenere is a TUI interface for large language models (LLMs), written in Rust. It provides syntax highlighting, chat history, saving chats to files, Vim keybindings, copying text from/to clipboard, and supports multiple backends. Users can configure Tenere using a TOML configuration file, set key bindings, and use different LLMs such as ChatGPT, llama.cpp, and ollama. Tenere offers default key bindings for global and prompt modes, with features like starting a new chat, saving chats, scrolling, showing chat history, and quitting the app. Users can interact with the prompt in different modes like Normal, Visual, and Insert, with various key bindings for navigation, editing, and text manipulation.

mods

AI for the command line, built for pipelines. LLM-based AI is really good at interpreting the output of commands and returning the results in CLI-friendly text formats like Markdown. Mods is a simple tool that makes it super easy to use AI on the command line and in your pipelines. Mods works with OpenAI, Groq, Azure OpenAI, and LocalAI. To get started, install Mods and check out some of the examples below. Since Mods has built-in Markdown formatting, you may also want to grab Glow to give the output some _pizzazz_.

k8sgpt

K8sGPT is a tool for scanning your Kubernetes clusters, diagnosing, and triaging issues in simple English. It has SRE experience codified into its analyzers and helps to pull out the most relevant information to enrich it with AI.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

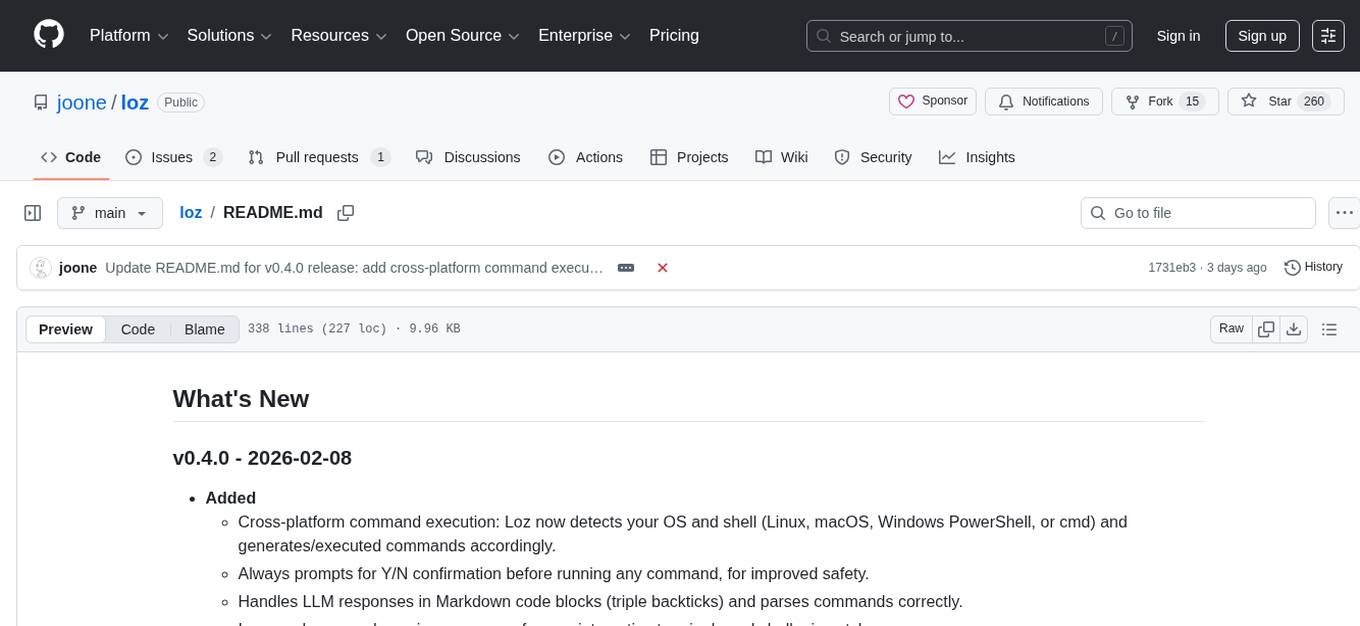

loz

Loz is a command-line tool that integrates AI capabilities with Unix tools, enabling users to execute system commands and utilize Unix pipes. It supports multiple LLM services like OpenAI API, Microsoft Copilot, and Ollama. Users can run Linux commands based on natural language prompts, enhance Git commit formatting, and interact with the tool in safe mode. Loz can process input from other command-line tools through Unix pipes and automatically generate Git commit messages. It provides features like chat history access, configurable LLM settings, and contribution opportunities.

alcless

Alcoholless is a lightweight security sandbox for macOS programs, originally designed for securing Homebrew but can be used for any CLI programs. It allows AI agents to run shell commands with reduced risk of breaking the host OS. The tool creates a separate environment for executing commands, syncing changes back to the host directory upon command exit. It uses utilities like sudo, su, pam_launchd, and rsync, with potential future integration of FSKit for file syncing. The tool also generates a sudo configuration for user-specific sandbox access, enabling users to run commands as the sandbox user without a password.

1.5-Pints

1.5-Pints is a repository that provides a recipe to pre-train models in 9 days, aiming to create AI assistants comparable to Apple OpenELM and Microsoft Phi. It includes model architecture, training scripts, and utilities for 1.5-Pints and 0.12-Pint developed by Pints.AI. The initiative encourages replication, experimentation, and open-source development of Pint by sharing the model's codebase and architecture. The repository offers installation instructions, dataset preparation scripts, model training guidelines, and tools for model evaluation and usage. Users can also find information on finetuning models, converting lit models to HuggingFace models, and running Direct Preference Optimization (DPO) post-finetuning. Additionally, the repository includes tests to ensure code modifications do not disrupt the existing functionality.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

ChatDBG

ChatDBG is an AI-based debugging assistant for C/C++/Python/Rust code that integrates large language models into a standard debugger (`pdb`, `lldb`, `gdb`, and `windbg`) to help debug your code. With ChatDBG, you can engage in a dialog with your debugger, asking open-ended questions about your program, like `why is x null?`. ChatDBG will _take the wheel_ and steer the debugger to answer your queries. ChatDBG can provide error diagnoses and suggest fixes. As far as we are aware, ChatDBG is the _first_ debugger to automatically perform root cause analysis and to provide suggested fixes.

For similar tasks

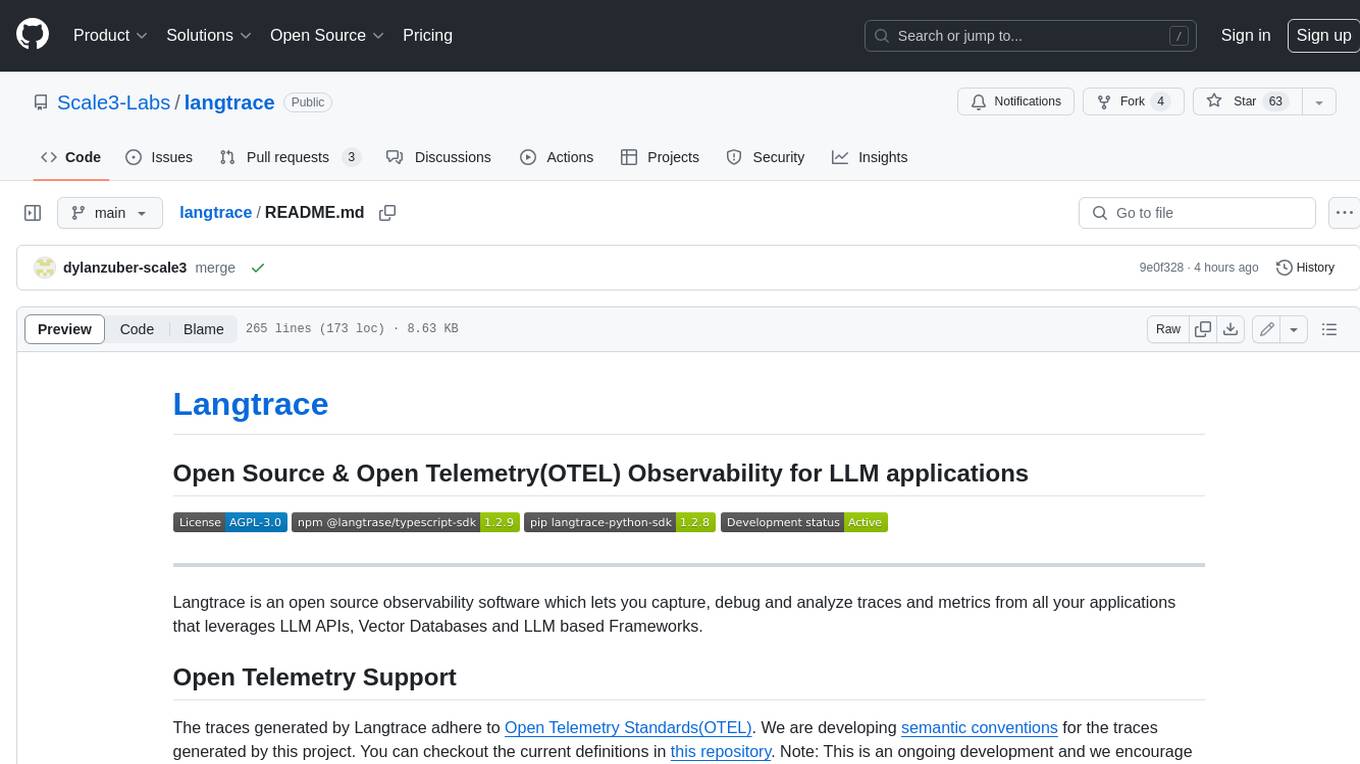

langtrace

Langtrace is an open source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that leverage LLM APIs, Vector Databases, and LLM-based Frameworks. It supports Open Telemetry Standards (OTEL), and the traces generated adhere to these standards. Langtrace offers both a managed SaaS version (Langtrace Cloud) and a self-hosted option. The SDKs for both Typescript/Javascript and Python are available, making it easy to integrate Langtrace into your applications. Langtrace automatically captures traces from various vendors, including OpenAI, Anthropic, Azure OpenAI, Langchain, LlamaIndex, Pinecone, and ChromaDB.

askui

AskUI is a reliable, automated end-to-end automation tool that only depends on what is shown on your screen instead of the technology or platform you are running on.

mlir-air

This repository contains tools and libraries for building AIR platforms, runtimes and compilers.

AgentNeo

AgentNeo is an advanced, open-source Agentic AI Application Observability, Monitoring, and Evaluation Framework designed to provide deep insights into AI agents, Large Language Model (LLM) calls, and tool interactions. It offers robust logging, visualization, and evaluation capabilities to help debug and optimize AI applications with ease. With features like tracing LLM calls, monitoring agents and tools, tracking interactions, detailed metrics collection, flexible data storage, simple instrumentation, interactive dashboard, project management, execution graph visualization, and evaluation tools, AgentNeo empowers users to build efficient, cost-effective, and high-quality AI-driven solutions.

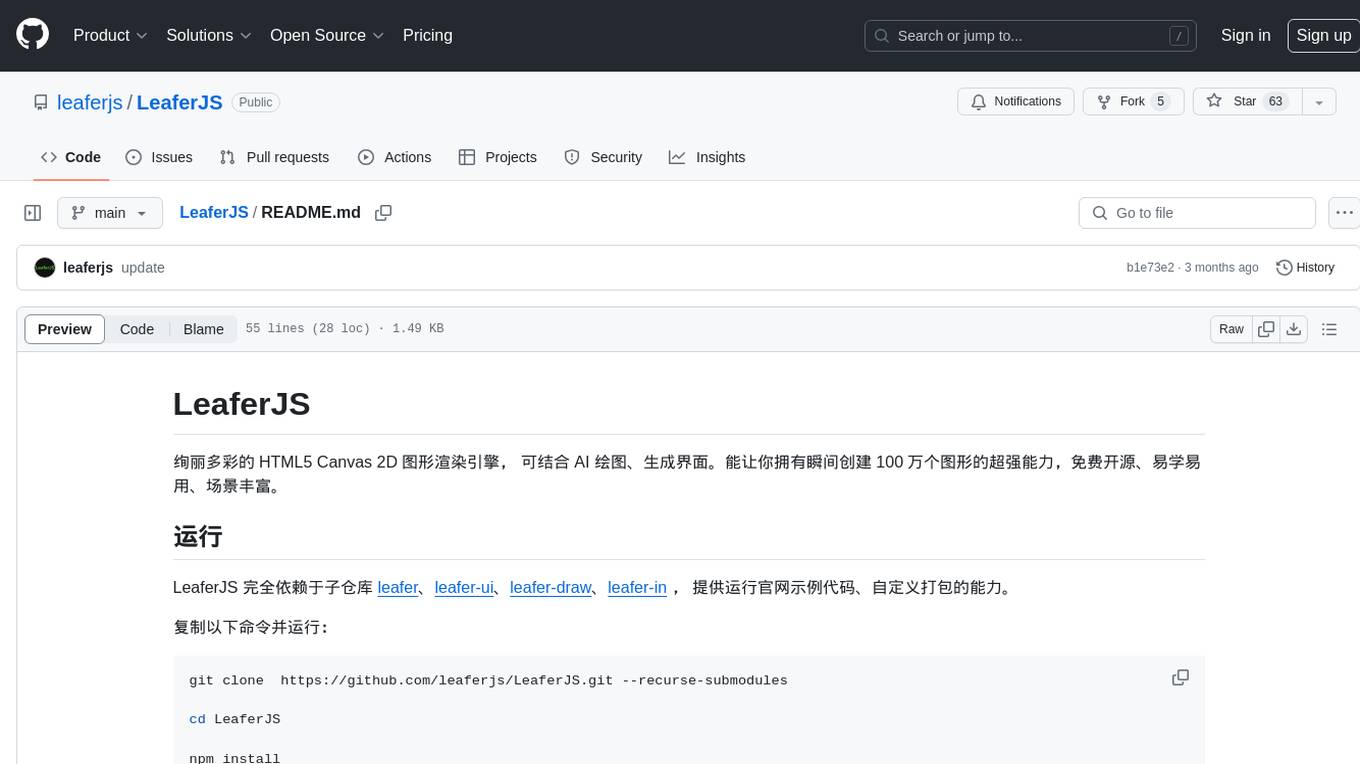

LeaferJS

LeaferJS is a colorful HTML5 Canvas 2D graphics rendering engine that can be combined with AI drawing to generate interfaces. It gives you the superpower to instantly create 1 million graphics, free and open source, easy to learn and use, with rich scenes.

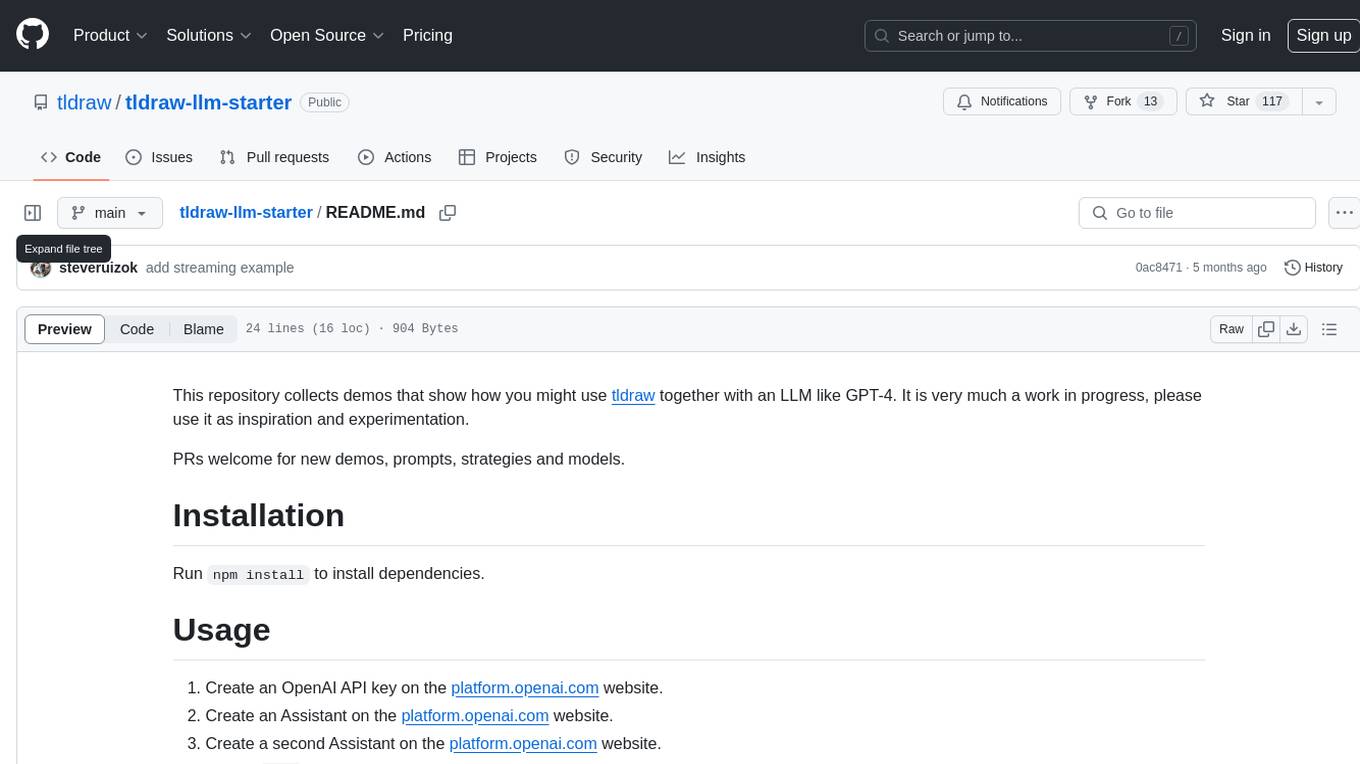

tldraw-llm-starter

This repository is a collection of demos showcasing how to integrate tldraw with an LLM like GPT-4. It serves as a work in progress for inspiration and experimentation. Users can contribute new demos, prompts, strategies, and models. The installation process involves running 'npm install' to install dependencies. Usage instructions include creating OpenAI API keys and assistants on the platform.openai.com website, as well as setting up a '.env' file with necessary credentials. The server can be started with 'npm run dev'. The repository aims to demonstrate the potential synergy between tldraw and GPT-4 for various applications.

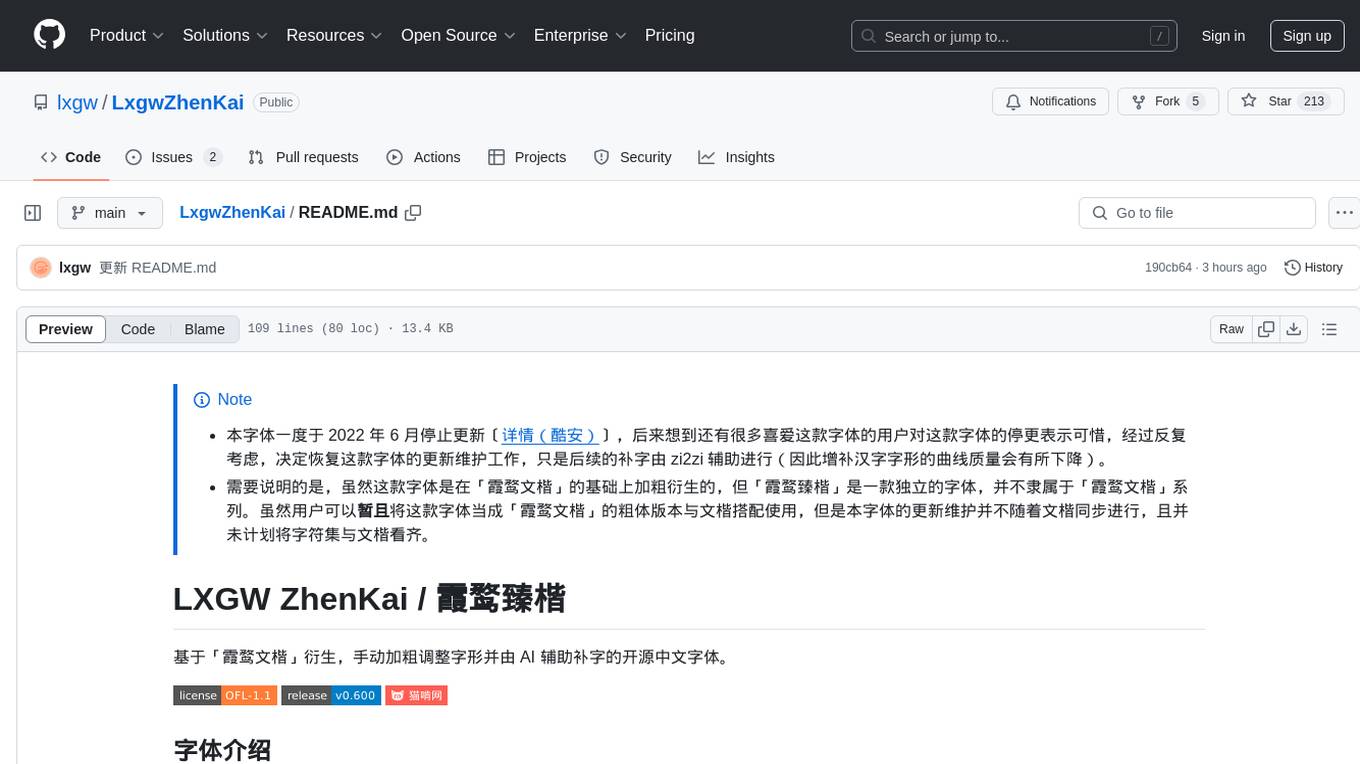

LxgwZhenKai

LxgwZhenKai is a Chinese font derived from LXGW WenKai, manually adjusted for boldness and supplemented with AI assistance for character additions. The font aims to provide a comfortable reading experience on screens while also serving as a bold version of LXGW WenKai for temporary use. It contains over 13,000 characters, including common simplified and traditional Chinese characters, and is licensed under SIL Open Font License 1.1. Users are allowed to freely use, distribute, modify, and create derivative fonts based on LxgwZhenKai.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale; flexible integration with distributed computing and data processing frameworks; and support for multiple teams on the same infrastructure to maximize utilization of resources.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.