Best AI tools for Debug Applications

20 - AI tool Sites

LangChain

LangChain is a framework for developing applications powered by large language models (LLMs). It simplifies every stage of the LLM application lifecycle, including development, productionization, and deployment. LangChain consists of open-source libraries such as langchain-core, langchain-community, and partner packages. It also includes LangGraph for building stateful agents and LangSmith for debugging and monitoring LLM applications.

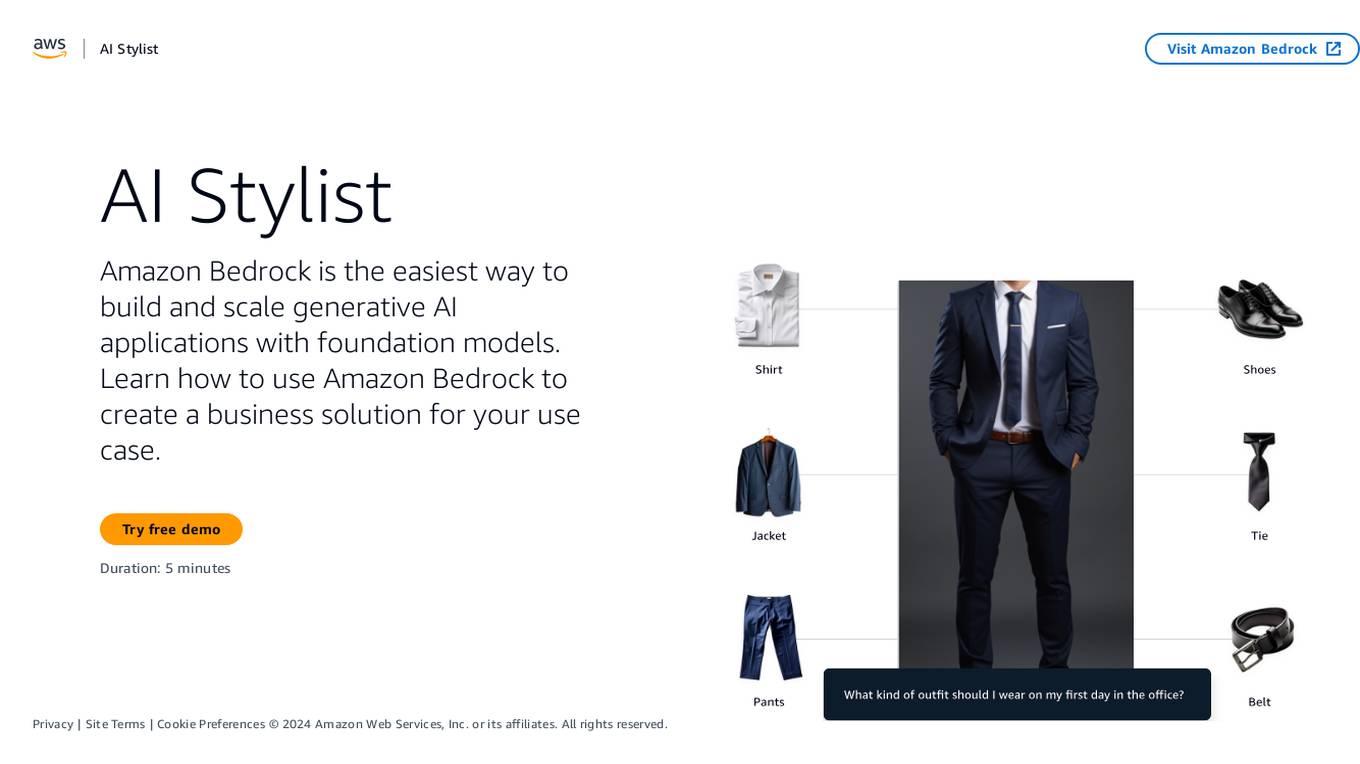

Amazon Bedrock

Amazon Bedrock is a fully managed AWS service for building generative AI applications. It provides access to foundation models from Amazon and third-party providers through a single API. Because the service is serverless, AWS handles the infrastructure and operations, so developers can focus on building their applications.

New Relic

New Relic is an AI monitoring platform that offers an all-in-one observability solution for monitoring, debugging, and improving the entire technology stack. With over 30 capabilities and 750+ integrations, New Relic provides the power of AI to help users gain insights and optimize performance across various aspects of their infrastructure, applications, and digital experiences.

Haystack

Haystack is a production-ready open-source AI framework designed to facilitate building AI applications. It offers a flexible components and pipelines architecture, allowing users to customize and build applications according to their specific requirements. With partnerships with leading LLM providers and AI tools, Haystack provides freedom of choice for users. The framework is built for production, with fully serializable pipelines, logging, monitoring integrations, and deployment guides for full-scale deployments on various platforms. Users can build Haystack apps faster using deepset Studio, a platform for drag-and-drop construction of pipelines, testing, debugging, and sharing prototypes.

Helicone

Helicone is an open-source platform designed for developers, offering observability solutions for logging, monitoring, and debugging. It provides sub-millisecond latency impact, 100% log coverage, industry-leading query times, and is ready for production-level workloads. Trusted by thousands of companies and developers, Helicone leverages Cloudflare Workers for low latency and high reliability, offering features such as prompt management, uptime of 99.99%, scalability, and reliability. It allows risk-free experimentation, prompt security, and various tools for monitoring, analyzing, and managing requests.

Vite

Vite is a fast frontend build tool that originated in the Vue.js ecosystem. It pairs a development server built on native ES modules and hot module replacement with optimized, bundled production builds. Vite is framework-agnostic: it works with Vue.js as well as React, Svelte, and other frameworks.

Langfuse

Langfuse is a platform for building and debugging large language model (LLM) applications, with SDKs that include the Langfuse TypeScript SDK v4. It provides tracing, prompt management, evaluation, and metrics to help improve the performance of LLM applications. Langfuse integrates with various platforms and SDKs and aims to simplify the development of complex LLM applications.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

Debug Sage

Debug Sage is a website designed to help users understand and troubleshoot errors in their software applications. The platform provides detailed insights into various types of errors, allowing users to identify and resolve issues efficiently. With a user-friendly interface, Debug Sage aims to streamline the debugging process for developers and software engineers. The website also offers resources and tools to enhance the overall debugging experience. By leveraging advanced technologies, Debug Sage empowers users to tackle complex errors with ease.

Pythagora

Pythagora is the world's first all-in-one AI development platform that offers a secure and comprehensive solution for building web applications. It combines frontend, backend, debugging, and deployment features in a single platform, enabling users to create apps without heavy coding requirements. Pythagora is powered by specialized AI agents and top-tier language models from OpenAI and Anthropic, providing users with tools for planning, writing, testing, and deploying full-stack web apps. The platform is designed to streamline the development process, offering enterprise-grade security, role-based authentication, and transparent control over projects.

Galileo AI

Galileo AI is a platform for automated evaluation of AI applications, aimed at helping teams ship with confidence. It claims to eliminate 80% of evaluation time by replacing manual reviews with high-accuracy metrics, and it supports rapid iteration, real-time protection, and end-to-end visibility into agent completions. Galileo also helps developers manage AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and favored by developers for its accuracy, low latency, and ability to run on L4 GPUs.

Portkey

Portkey is a control panel for production AI applications that offers an AI Gateway, Prompts, Guardrails, and Observability Suite. It enables teams to ship reliable, cost-efficient, and fast apps by providing tools for prompt engineering, enforcing reliable LLM behavior, integrating with major agent frameworks, and building AI agents with access to real-world tools. Portkey also offers seamless AI integrations for smarter decisions, with features like managed hosting, smart caching, and edge compute layers to optimize app performance.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Bugpilot

Bugpilot is an error monitoring tool specifically designed for React applications. It offers a comprehensive platform for error tracking, debugging, and user communication. With Bugpilot, developers can easily integrate error tracking into their React applications without any code changes or dependencies. The tool provides a user-friendly dashboard that helps developers quickly identify and prioritize errors, understand their root causes, and plan fixes. Bugpilot also includes features such as AI-assisted debugging, session recordings, and customizable error pages to enhance the user experience and reduce support requests.

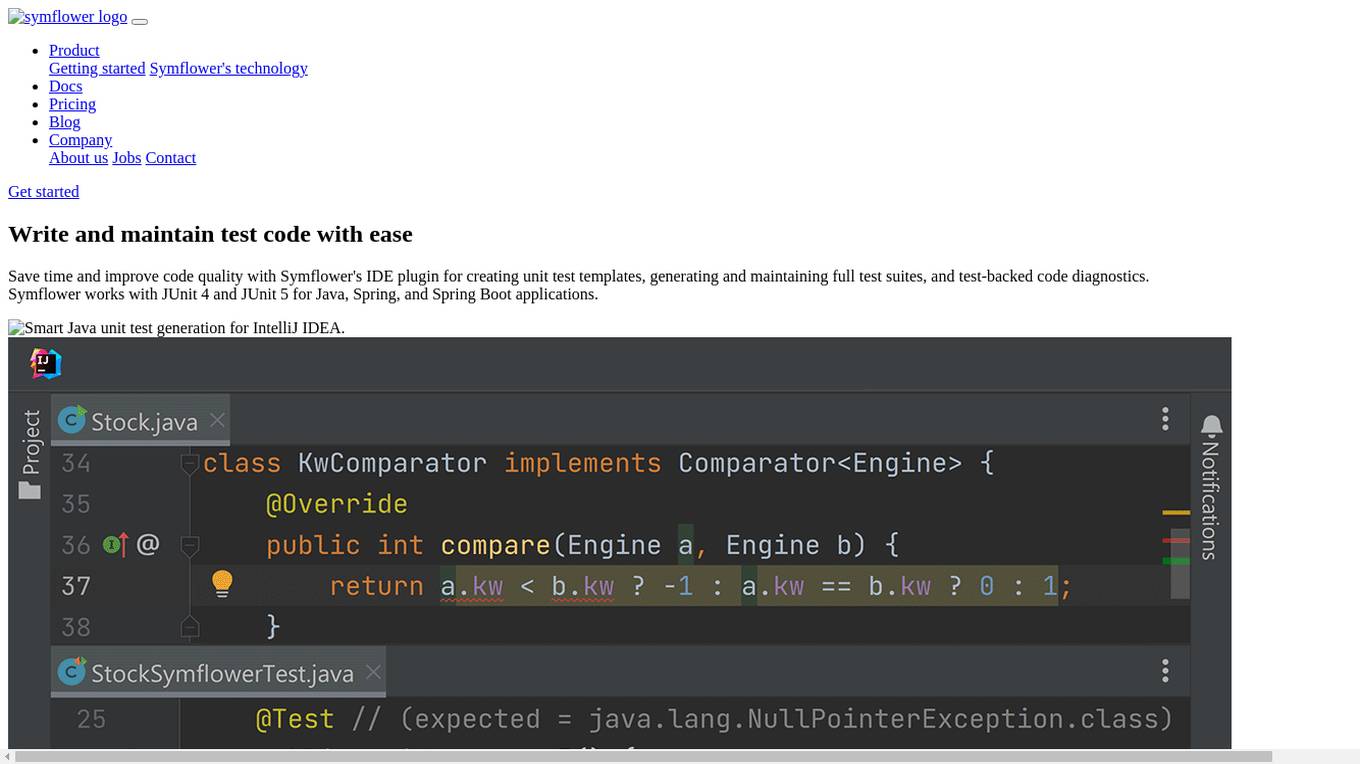

Symflower

Symflower is an AI-powered unit test generator for Java applications. It helps developers write and maintain test code with ease, saving time and improving code quality. Symflower works with JUnit 4 and JUnit 5 for Java, Spring, and Spring Boot applications.

Octomind

Octomind is an AI-powered QA platform that provides automated end-to-end testing for web applications. It offers features such as self-healing tests, visual debugging, and stable test runs. Octomind is designed for early-stage and fast-growing SaaS or AI startups with small engineering teams, aiming to improve product quality and speed by catching regressions before they reach users. The platform is trusted by thousands of engineering teams worldwide and is SOC 2 certified.

AI Tool Hub

The website features a variety of AI tools and applications, including GPTs, AI tutors, code assistants, diagram creators, and more. Users can explore and discover top GPTs, get assistance in programming, design, finance, and even fortune-telling. The platform offers a range of AI-powered solutions for different tasks and industries, aiming to enhance productivity and creativity through advanced AI technologies.

Aim

Aim is an open-source, self-hosted AI metadata tracking tool designed to handle hundreds of thousands of tracked metadata sequences. The two best-known applications of AI metadata are experiment tracking and prompt engineering. Aim provides a performant, polished UI for exploring and comparing training runs and prompt sessions.

Agenta.ai

Agenta.ai is a platform designed to provide prompt management, evaluation, and observability for large language model (LLM) applications. It aims to address the challenges faced by AI development teams in managing prompts, collaborating effectively, and ensuring reliable product outcomes. By centralizing prompts, evaluations, and traces, Agenta.ai helps teams streamline their workflows and follow best practices in LLMOps. The platform offers features such as a unified playground for prompt comparison, automated evaluation, human evaluation integration, observability tools for debugging AI systems, and collaborative workflows for PMs, experts, and developers.

Testsigma

Testsigma is a cloud-based test automation platform that enables teams to create, execute, and maintain automated tests for web, mobile, and API applications. It offers a range of features including natural language processing (NLP)-based scripting, record-and-playback capabilities, data-driven testing, and AI-driven test maintenance. Testsigma integrates with popular CI/CD tools and provides a marketplace for add-ons and extensions. It is designed to simplify and accelerate the test automation process, making it accessible to testers of all skill levels.

5 - Open Source AI Tools

langtrace

Langtrace is open-source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that use LLM APIs, vector databases, and LLM-based frameworks. The traces it generates adhere to OpenTelemetry (OTel) standards. Langtrace offers both a managed SaaS version (Langtrace Cloud) and a self-hosted option. SDKs are available for both TypeScript/JavaScript and Python, making it easy to integrate Langtrace into your applications. Langtrace automatically captures traces from various vendors, including OpenAI, Anthropic, Azure OpenAI, LangChain, LlamaIndex, Pinecone, and ChromaDB.
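To make the idea of capturing a trace concrete, here is a minimal sketch in plain Python. It does not use the Langtrace SDK or OpenTelemetry; `Span`, `SPANS`, `traced`, and `fake_llm_call` are hypothetical names invented for illustration. It only shows the kind of data a tracing tool records around each call: a name, timing, and a status.

```python
import time
from dataclasses import dataclass, field
from functools import wraps


@dataclass
class Span:
    """One timed unit of work, loosely modeled on a tracing span."""
    name: str
    start: float
    end: float = 0.0
    attributes: dict = field(default_factory=dict)

    @property
    def duration(self) -> float:
        return self.end - self.start


# Hypothetical in-memory collector; a real tool would export spans to a backend.
SPANS: list[Span] = []


def traced(name):
    """Decorator that records a Span for every call to the wrapped function."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            span = Span(name=name, start=time.perf_counter())
            try:
                result = fn(*args, **kwargs)
                span.attributes["status"] = "ok"
                return result
            except Exception as exc:
                span.attributes["status"] = "error"
                span.attributes["error"] = repr(exc)
                raise
            finally:
                # Runs on success and failure, so every call leaves a span.
                span.end = time.perf_counter()
                SPANS.append(span)
        return wrapper
    return decorator


@traced("fake_llm_call")
def fake_llm_call(prompt: str) -> str:
    # Stand-in for a model call; no network access is made.
    return prompt.upper()
```

After calling `fake_llm_call("hi")`, the `SPANS` list holds one entry whose `attributes["status"]` is `"ok"`. Observability tools of this kind typically attach such instrumentation automatically rather than requiring a decorator on each function.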

askui

AskUI is a reliable end-to-end automation tool that depends only on what is shown on your screen, not on the technology or platform you are running on.

mlir-air

This repository contains tools and libraries for building AIR platforms, runtimes and compilers.

air

Air is a live-reloading command-line utility for developing Go applications. It provides colorful log output, a customizable build command (or any other command), support for excluding subdirectories, and the ability to watch new directories created after Air has started. Users can override specific configuration values from arguments and pass runtime arguments to the built binary. Air can be installed via `go install`, `install.sh`, or `goblin.run`, and can also be used with Docker/Podman. It supports debugging and Docker Compose, and its documentation includes a Q&A section for common issues. Developing Air itself requires Go 1.16+, and pull requests are welcome. Air is released under the GNU General Public License v3.0.
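The general idea behind live reloading can be sketched as comparing snapshots of file modification times and rebuilding when something changes. The sketch below is in Python for brevity and is not Air's implementation (Air is written in Go); `snapshot` and `changed_paths` are hypothetical helper names, and the `exclude_dirs` parameter only mirrors the notion of excluded subdirectories.

```python
import os


def snapshot(root, exclude_dirs=()):
    """Map each file under root to its modification time, skipping excluded directory names."""
    state = {}
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune excluded directories in place so os.walk does not descend into them.
        dirnames[:] = [d for d in dirnames if d not in exclude_dirs]
        for name in filenames:
            path = os.path.join(dirpath, name)
            state[path] = os.stat(path).st_mtime
    return state


def changed_paths(old, new):
    """Return paths that were added, removed, or modified between two snapshots."""
    return {p for p in old.keys() | new.keys() if old.get(p) != new.get(p)}
```

A watcher loop would take a snapshot, sleep briefly, take another, and trigger a rebuild whenever `changed_paths` returns a non-empty set. Polling is the simplest approach to show; tools in this space may instead rely on operating-system file notifications.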

AgentNeo

AgentNeo is an advanced, open-source Agentic AI Application Observability, Monitoring, and Evaluation Framework designed to provide deep insights into AI agents, Large Language Model (LLM) calls, and tool interactions. It offers robust logging, visualization, and evaluation capabilities to help debug and optimize AI applications with ease. With features like tracing LLM calls, monitoring agents and tools, tracking interactions, detailed metrics collection, flexible data storage, simple instrumentation, interactive dashboard, project management, execution graph visualization, and evaluation tools, AgentNeo empowers users to build efficient, cost-effective, and high-quality AI-driven solutions.

20 - OpenAI GPTs

The Dock - Your Docker Assistant

Technical assistant specializing in Docker and Docker Compose. Let's debug!

React Senior Web Crafter Copilot ⚛️

Expert in React development, offering advanced solutions and best practices. v1.1

World Class React Redux Expert

Guides to optimal React, Redux, MUI solutions and avoids common pitfalls.

DirectX 11 Graphics Programming Helper

Helps beginners understand DirectX 11 concepts and terminology

Software development front-end GPT - Senior AI

Solves problems in front-end application development - AI 100% PRO - 500+ Guides trainer

LUA Expert Code Creator

Expert in Lua code creation and review for applications such as Roblox games

Deluge Developer by TechBloom

Zoho Deluge expert developer who is trained to write and debug Deluge Functions for Zoho CRM

Raspberry Pi Pico Master

Expert in MicroPython, C, and C++ for Raspberry Pi Pico, RP2040, and other microcontroller-oriented applications.