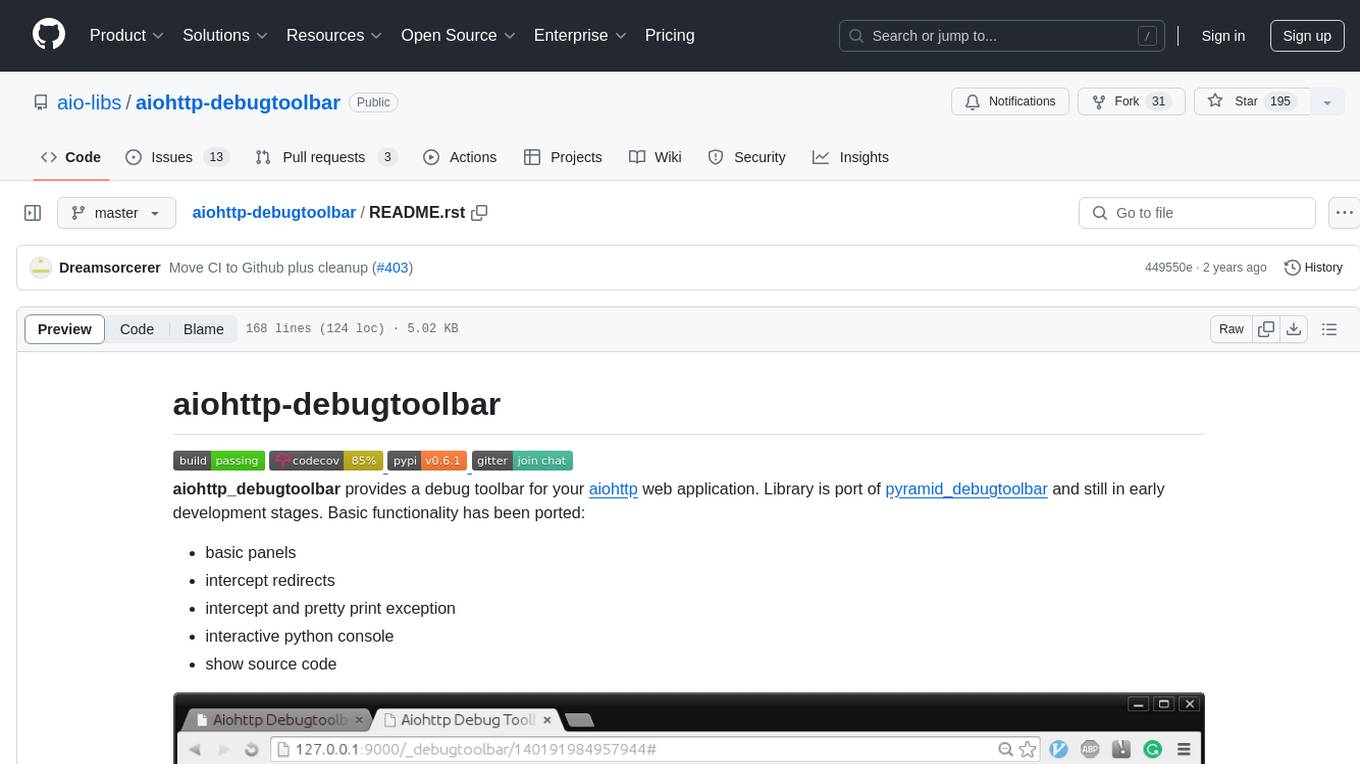

aiohttp-debugtoolbar

aiohttp_debugtoolbar is library for debugtoolbar support for aiohttp

Stars: 197

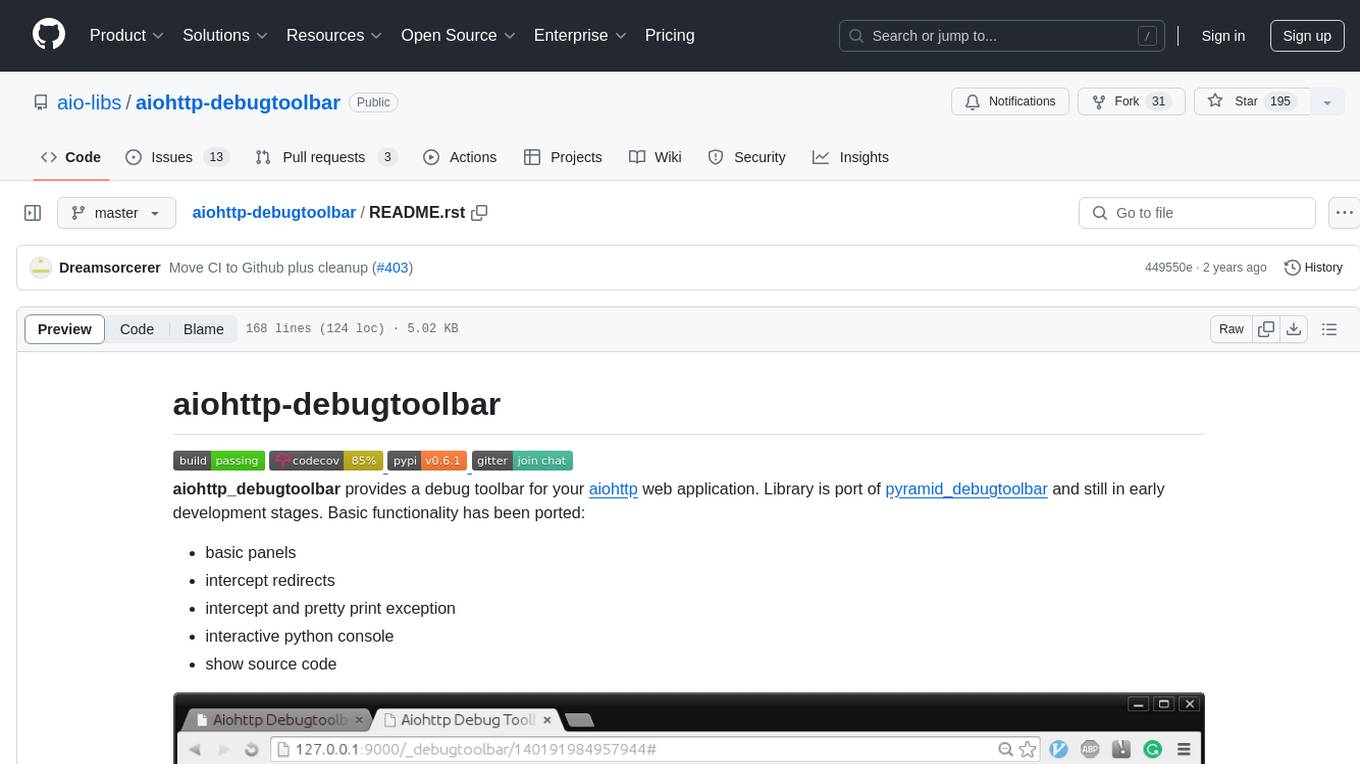

aiohttp_debugtoolbar provides a debug toolbar for aiohttp web applications. It is a port of pyramid_debugtoolbar and offers basic functionality such as basic panels, intercepting redirects, pretty printing exceptions, an interactive python console, and showing source code. The library is still in early development stages and offers various debug panels for monitoring different aspects of the web application. It is a useful tool for developers working with aiohttp to debug and optimize their applications.

README:

.. image:: https://travis-ci.org/aio-libs/aiohttp-debugtoolbar.svg?branch=master :target: https://travis-ci.org/aio-libs/aiohttp-debugtoolbar :alt: |Build status| .. image:: https://codecov.io/gh/aio-libs/aiohttp-debugtoolbar/branch/master/graph/badge.svg :target: https://codecov.io/gh/aio-libs/aiohttp-debugtoolbar :alt: |Coverage status| .. image:: https://img.shields.io/pypi/v/aiohttp-debugtoolbar.svg :target: https://pypi.python.org/pypi/aiohttp-debugtoolbar :alt: PyPI .. image:: https://badges.gitter.im/Join%20Chat.svg :target: https://gitter.im/aio-libs/Lobby :alt: Chat on Gitter

aiohttp_debugtoolbar provides a debug toolbar for your aiohttp_ web application. Library is port of pyramid_debugtoolbar_ and still in early development stages. Basic functionality has been ported:

- basic panels

- intercept redirects

- intercept and pretty print exception

- interactive python console

- show source code

HeaderDebugPanel, PerformanceDebugPanel, TracebackPanel,

SettingsDebugPanel, MiddlewaresDebugPanel, VersionDebugPanel,

RoutesDebugPanel, RequestVarsDebugPanel, LoggingPanel

Are you coder looking for a project to contribute to python/asyncio libraries? This is the project for you!

::

$ pip install aiohttp_debugtoolbar

In order to plug in aiohttp_debugtoolbar, call

aiohttp_debugtoolbar.setup on your app.

.. code:: python

import aiohttp_debugtoolbar

app = web.Application(loop=loop)

aiohttp_debugtoolbar.setup(app)

.. code:: python

import asyncio

import jinja2

import aiohttp_debugtoolbar

import aiohttp_jinja2

from aiohttp import web

@aiohttp_jinja2.template('index.html')

async def basic_handler(request):

return {'title': 'example aiohttp_debugtoolbar!',

'text': 'Hello aiohttp_debugtoolbar!',

'app': request.app}

async def exception_handler(request):

raise NotImplementedError

async def init(loop):

# add aiohttp_debugtoolbar middleware to you application

app = web.Application(loop=loop)

# install aiohttp_debugtoolbar

aiohttp_debugtoolbar.setup(app)

template = """

<html>

<head>

<title>{{ title }}</title>

</head>

<body>

<h1>{{ text }}</h1>

<p>

<a href="{{ app.router['exc_example'].url() }}">

Exception example</a>

</p>

</body>

</html>

"""

# install jinja2 templates

loader = jinja2.DictLoader({'index.html': template})

aiohttp_jinja2.setup(app, loader=loader)

# init routes for index page, and page with error

app.router.add_route('GET', '/', basic_handler, name='index')

app.router.add_route('GET', '/exc', exception_handler,

name='exc_example')

return app

loop = asyncio.get_event_loop()

app = loop.run_until_complete(init(loop))

web.run_app(app, host='127.0.0.1', port=9000)

.. code:: python

aiohttp_debugtoolbar.setup(app, hosts=['172.19.0.1', ])

Supported options

- enabled: The debugtoolbar is disabled if False. By default is set to True.

- intercept_redirects: If True, intercept redirect and display an intermediate page with a link to the redirect page. By default is set to True.

- hosts: The list of allow hosts. By default is set to ['127.0.0.1', '::1'].

- exclude_prefixes: The list of forbidden hosts. By default is set to [].

- check_host: If False, disable the host check and display debugtoolbar for any host. By default is set to True.

- max_request_history: The max value for storing requests. By default is set to 100.

- max_visible_requests: The max value of display requests. By default is set to 10.

- path_prefix: The prefix of path to debugtoolbar. By default is set to '/_debugtoolbar'.

I've borrowed a lot of code from following projects. I highly recommend to check them out:

- pyramid_debugtoolbar_

- django-debug-toolbar_

- flask-debugtoolbar_

https://github.com/aio-libs/aiohttp_debugtoolbar/tree/master/demo

- aiohttp_

- aiohttp_jinja2_

.. _Python: https://www.python.org .. _asyncio: http://docs.python.org/3/library/asyncio.html .. _aiohttp: https://github.com/KeepSafe/aiohttp .. _aiopg: https://github.com/aio-libs/aiopg .. _aiomysql: https://github.com/aio-libs/aiomysql .. _aiohttp_jinja2: https://github.com/aio-libs/aiohttp_jinja2 .. _pyramid_debugtoolbar: https://github.com/Pylons/pyramid_debugtoolbar .. _django-debug-toolbar: https://github.com/django-debug-toolbar/django-debug-toolbar .. _flask-debugtoolbar: https://github.com/mgood/flask-debugtoolbar

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiohttp-debugtoolbar

Similar Open Source Tools

aiohttp-debugtoolbar

aiohttp_debugtoolbar provides a debug toolbar for aiohttp web applications. It is a port of pyramid_debugtoolbar and offers basic functionality such as basic panels, intercepting redirects, pretty printing exceptions, an interactive python console, and showing source code. The library is still in early development stages and offers various debug panels for monitoring different aspects of the web application. It is a useful tool for developers working with aiohttp to debug and optimize their applications.

aiosql

aiosql is a Python module that allows you to organize SQL statements in .sql files and load them into your Python application as methods to call. It supports various database drivers like SQLite, PostgreSQL, MySQL, MariaDB, and DuckDB. The project is an implementation of Kris Jenkins' yesql library to the Python ecosystem, allowing users to easily reuse SQL code in SQL GUIs or CLI tools. With aiosql, you can write, version control, comment, and run SQL code using files without losing the ability to use them as you would any other SQL file. It provides support for PEP 249 and asyncio based drivers, enabling users to execute parametric SQL queries from Python methods.

aiocache

Aiocache is an asyncio cache library that supports multiple backends such as memory, redis, and memcached. It provides a simple interface for functions like add, get, set, multi_get, multi_set, exists, increment, delete, clear, and raw. Users can easily install and use the library for caching data in Python applications. Aiocache allows for easy instantiation of caches and setup of cache aliases for reusing configurations. It also provides support for backends, serializers, and plugins to customize cache operations. The library offers detailed documentation and examples for different use cases and configurations.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

aio-pika

Aio-pika is a wrapper around aiormq for asyncio and humans. It provides a completely asynchronous API, object-oriented API, transparent auto-reconnects with complete state recovery, Python 3.7+ compatibility, transparent publisher confirms support, transactions support, and complete type-hints coverage.

mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

mcp

Semgrep MCP Server is a beta server under active development for using Semgrep to scan code for security vulnerabilities. It provides a Model Context Protocol (MCP) for various coding tools to get specialized help in tasks. Users can connect to Semgrep AppSec Platform, scan code for vulnerabilities, customize Semgrep rules, analyze and filter scan results, and compare results. The tool is published on PyPI as semgrep-mcp and can be installed using pip, pipx, uv, poetry, or other methods. It supports CLI and Docker environments for running the server. Integration with VS Code is also available for quick installation. The project welcomes contributions and is inspired by core technologies like Semgrep and MCP, as well as related community projects and tools.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

Tutel

Tutel MoE is an optimized Mixture-of-Experts implementation that offers a parallel solution with 'No-penalty Parallism/Sparsity/Capacity/Switching' for modern training and inference. It supports Pytorch framework (version >= 1.10) and various GPUs including CUDA and ROCm. The tool enables Full Precision Inference of MoE-based Deepseek R1 671B on AMD MI300. Tutel provides features like all-to-all benchmarking, tensorcore option, NCCL timeout settings, Megablocks solution, and dynamic switchable configurations. Users can run Tutel in distributed mode across multiple GPUs and machines. The tool allows for custom MoE implementations and offers detailed usage examples and reference documentation.

mcp-ui

mcp-ui is a collection of SDKs that bring interactive web components to the Model Context Protocol (MCP). It allows servers to define reusable UI snippets, render them securely in the client, and react to their actions in the MCP host environment. The SDKs include @mcp-ui/server (TypeScript) for generating UI resources on the server, @mcp-ui/client (TypeScript) for rendering UI components on the client, and mcp_ui_server (Ruby) for generating UI resources in a Ruby environment. The project is an experimental community playground for MCP UI ideas, with rapid iteration and enhancements.

genai-toolbox

Gen AI Toolbox for Databases is an open source server that simplifies building Gen AI tools for interacting with databases. It handles complexities like connection pooling, authentication, and more, enabling easier, faster, and more secure tool development. The toolbox sits between the application's orchestration framework and the database, providing a control plane to modify, distribute, or invoke tools. It offers simplified development, better performance, enhanced security, and end-to-end observability. Users can install the toolbox as a binary, container image, or compile from source. Configuration is done through a 'tools.yaml' file, defining sources, tools, and toolsets. The project follows semantic versioning and welcomes contributions.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

For similar tasks

aiohttp-debugtoolbar

aiohttp_debugtoolbar provides a debug toolbar for aiohttp web applications. It is a port of pyramid_debugtoolbar and offers basic functionality such as basic panels, intercepting redirects, pretty printing exceptions, an interactive python console, and showing source code. The library is still in early development stages and offers various debug panels for monitoring different aspects of the web application. It is a useful tool for developers working with aiohttp to debug and optimize their applications.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

holoinsight

HoloInsight is a cloud-native observability platform that provides low-cost and high-performance monitoring services for cloud-native applications. It offers deep insights through real-time log analysis and AI integration. The platform is designed to help users gain a comprehensive understanding of their applications' performance and behavior in the cloud environment. HoloInsight is easy to deploy using Docker and Kubernetes, making it a versatile tool for monitoring and optimizing cloud-native applications. With a focus on scalability and efficiency, HoloInsight is suitable for organizations looking to enhance their observability and monitoring capabilities in the cloud.

awesome-AIOps

awesome-AIOps is a curated list of academic researches and industrial materials related to Artificial Intelligence for IT Operations (AIOps). It includes resources such as competitions, white papers, blogs, tutorials, benchmarks, tools, companies, academic materials, talks, workshops, papers, and courses covering various aspects of AIOps like anomaly detection, root cause analysis, incident management, microservices, dependency tracing, and more.

OpenLLM

OpenLLM is a platform that helps developers run any open-source Large Language Models (LLMs) as OpenAI-compatible API endpoints, locally and in the cloud. It supports a wide range of LLMs, provides state-of-the-art serving and inference performance, and simplifies cloud deployment via BentoML. Users can fine-tune, serve, deploy, and monitor any LLMs with ease using OpenLLM. The platform also supports various quantization techniques, serving fine-tuning layers, and multiple runtime implementations. OpenLLM seamlessly integrates with other tools like OpenAI Compatible Endpoints, LlamaIndex, LangChain, and Transformers Agents. It offers deployment options through Docker containers, BentoCloud, and provides a community for collaboration and contributions.

laravel-slower

Laravel Slower is a powerful package designed for Laravel developers to optimize the performance of their applications by identifying slow database queries and providing AI-driven suggestions for optimal indexing strategies and performance improvements. It offers actionable insights for debugging and monitoring database interactions, enhancing efficiency and scalability.

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides unified API for generation, context-aware AI features, evaluation of AI workflow, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

llmops-workshop

LLMOps Workshop is a course designed to help users build, evaluate, monitor, and deploy Large Language Model solutions efficiently using Azure AI, Azure Machine Learning Prompt Flow, Content Safety, and Azure OpenAI. The workshop covers various aspects of LLMOps to help users master the process.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.