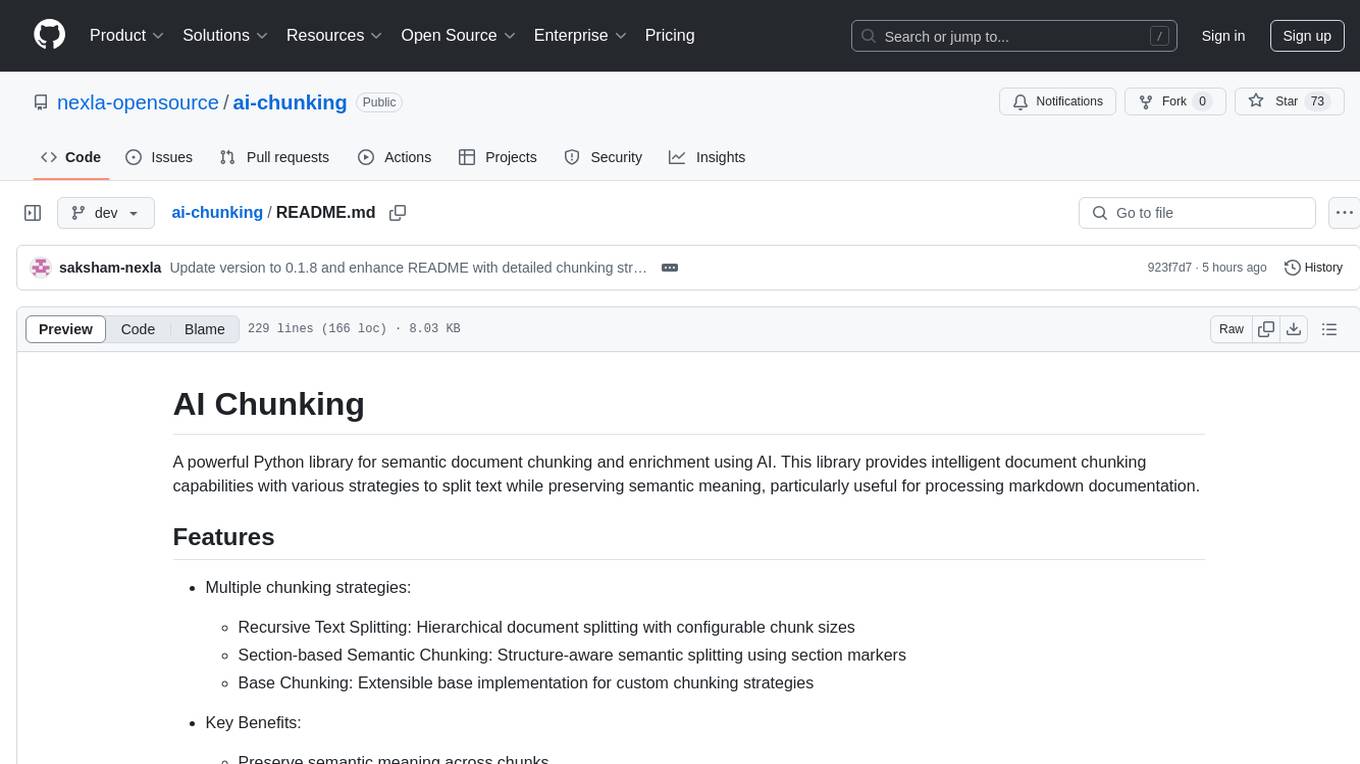

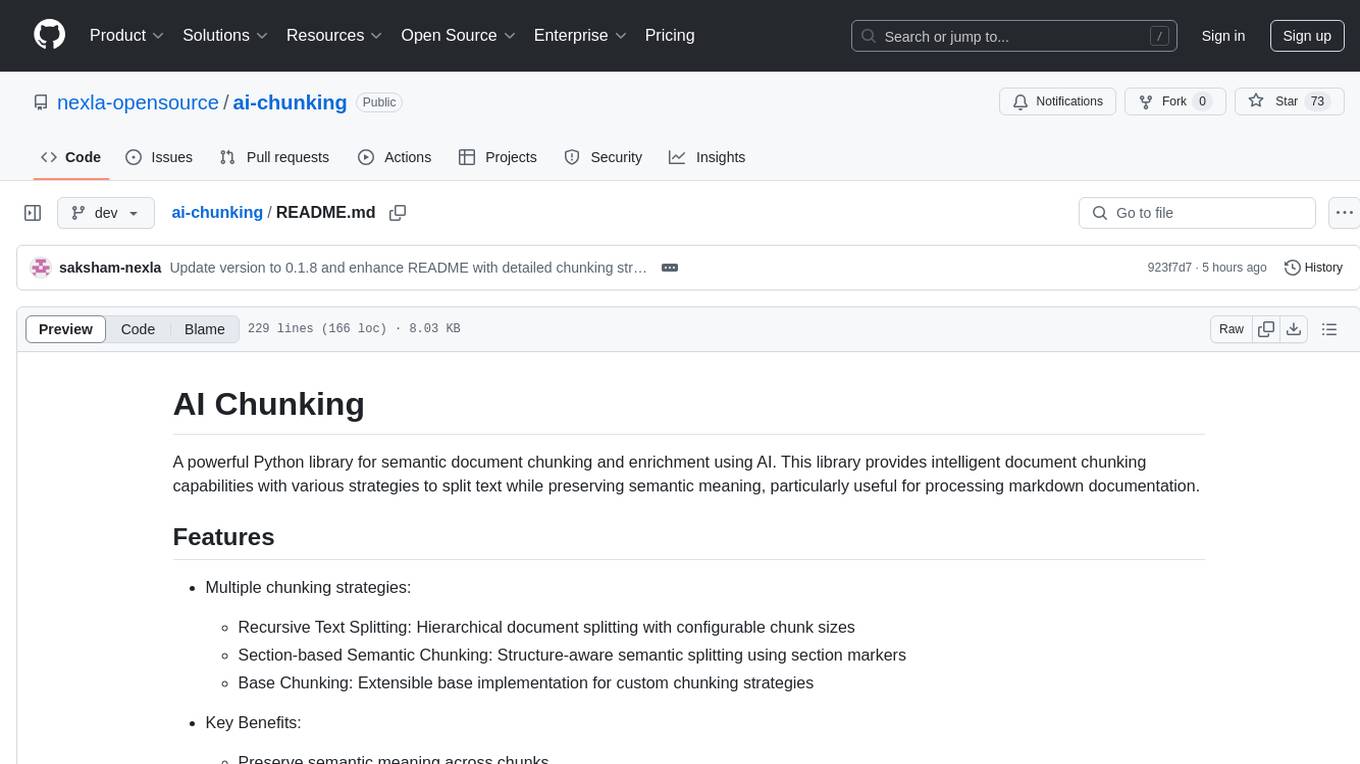

ai-chunking

None

Stars: 67

AI Chunking is a powerful Python library for semantic document chunking and enrichment using AI. It provides intelligent document chunking capabilities with various strategies to split text while preserving semantic meaning, particularly useful for processing markdown documentation. The library offers multiple chunking strategies such as Recursive Text Splitting, Section-based Semantic Chunking, and Base Chunking. Users can configure chunk sizes, overlap, and support various text formats. The tool is easy to extend with custom chunking strategies, making it versatile for different document processing needs.

README:

A powerful Python library for semantic document chunking and enrichment using AI. This library provides intelligent document chunking capabilities with various strategies to split text while preserving semantic meaning, particularly useful for processing markdown documentation.

-

Multiple chunking strategies:

- Recursive Text Splitting: Hierarchical document splitting with configurable chunk sizes

- Section-based Semantic Chunking: Structure-aware semantic splitting using section markers

- Base Chunking: Extensible base implementation for custom chunking strategies

-

Key Benefits:

- Preserve semantic meaning across chunks

- Configurable chunk sizes and overlap

- Support for various text formats

- Easy to extend with custom chunking strategies

Uses a hierarchical approach to split documents at natural breakpoints while maintaining context. It works by recursively splitting text using a list of separators in order of priority (headings, paragraphs, sentences, words).

- Use when: You have structured text with clear headings and sections and want simple, reliable chunking.

- Benefits: Fast, deterministic, maintains hierarchical structure.

Uses embeddings to find natural semantic breakpoints in text, creating chunks that preserve meaning regardless of formatting or explicit structure markers.

- Use when: Working with documents without clear section markers or when meaning preservation is critical.

- Benefits: Creates cohesive chunks based on semantic similarity rather than arbitrary length.

Processes documents by first identifying logical sections, then creating semantically meaningful chunks within each section. Enriches chunks with section and document summaries, providing rich context.

- Use when: You need deeper semantic understanding of document structure and relationships between sections.

- Benefits: Preserves document structure, adds rich metadata including section summaries and contextual relationships.

The most advanced chunker that uses LLMs to intelligently analyze document structure, creating optimal chunks with rich metadata and summaries.

- Use when: You need maximum semantic understanding and are willing to trade processing time for quality.

- Benefits: Superior chunk quality, rich metadata generation, deep semantic understanding.

pip install ai-chunkingTo use the semantic chunking capabilities, you need to set up the following environment variables:

# Required for semantic chunkers (SemanticTextChunker, SectionBasedSemanticChunker, AutoAIChunker)

export OPENAI_API_KEY=your_openai_api_key_hereYou can also set these environment variables in your Python code:

import os

os.environ["OPENAI_API_KEY"] = "your_openai_api_key_here"Each chunker type supports processing multiple documents together:

from ai_chunking import RecursiveTextSplitter

chunker = RecursiveTextSplitter(

chunk_size=1000,

chunk_overlap=100

)

# List of markdown files to process

files = ['document1.md', 'document2.md', 'document3.md']

# Process all documents at once

all_chunks = chunker.chunk_documents(files)

print(f"Processed {len(files)} documents into {len(all_chunks)} chunks")from ai_chunking import SectionBasedSemanticChunker, SemanticTextChunker

# Choose the chunker based on your needs

chunker = SemanticTextChunker(

chunk_size=1000,

similarity_threshold=0.85

)

# Or use the more advanced section-based chunker

# chunker = SectionBasedSemanticChunker(

# min_chunk_size=100,

# max_chunk_size=1000

# )

# List of files to process

markdown_files = ['user_guide.md', 'api_reference.md', 'tutorials.md']

# Process all documents, getting enriched chunks with metadata

all_chunks = chunker.chunk_documents(markdown_files)

# Access metadata for each chunk

for i, chunk in enumerate(all_chunks):

print(f"Chunk {i}:")

print(f" Source: {chunk.metadata.get('filename')}")

print(f" Tokens: {chunk.metadata.get('tokens_count')}")

if 'summary' in chunk.metadata:

print(f" Summary: {chunk.metadata.get('summary')}")

print(f" Text snippet: {chunk.text[:100]}...\n")You can create your own markdown-specific chunking strategy:

from ai_chunking import BaseChunker

import re

class MarkdownChunker(BaseChunker):

def __init__(self, **kwargs):

super().__init__(**kwargs)

self.heading_pattern = re.compile(r'^#{1,6}\s+', re.MULTILINE)

def chunk_documents(self, files: list[str]) -> list[str]:

chunks = []

for file in files:

with open(file, 'r') as f:

content = f.read()

# Split on markdown headings while preserving them

sections = self.heading_pattern.split(content)

# Remove empty sections and trim whitespace

chunks.extend([section.strip() for section in sections if section.strip()])

return chunks

# Usage

chunker = MarkdownChunker()

chunks = chunker.chunk_documents(['README.md', 'CONTRIBUTING.md'])Each chunker accepts different configuration parameters:

-

chunk_size: Maximum size of each chunk (default: 500) -

chunk_overlap: Number of characters to overlap between chunks (default: 50) -

separators: List of separators to use for splitting (default: ["\n\n", "\n", " ", ""])

-

chunk_size: Maximum chunk size in tokens (default: 1024) -

min_chunk_size: Minimum chunk size in tokens (default: 128) -

similarity_threshold: Threshold for semantic similarity when determining breakpoints (default: 0.9)

The chunker uses OpenAI's text-embedding-3-small model for embeddings to determine semantic breakpoints in the text. If chunks are too large, they are further split using recursive semantic chunking. If chunks are too small, they are merged with adjacent chunks when possible.

Each chunk includes metadata:

- Source filename

- Token count

- Source path

-

min_section_chunk_size: Minimum size for section chunks in tokens (default: 5000) -

max_section_chunk_size: Maximum size for section chunks in tokens (default: 50000) -

min_chunk_size: Minimum size for individual chunks in tokens (default: 128) -

max_chunk_size: Maximum size for individual chunks in tokens (default: 1000) -

page_content_similarity_threshold: Threshold for content similarity between pages (default: 0.8)

The chunker uses OpenAI's text-embedding-3-small model for embeddings with dynamic threshold settings:

- For chunks ≤ 500 tokens: 80% threshold (more aggressive splitting)

- For chunks 501-1000 tokens: 85% threshold (moderate splitting)

- For chunks > 1000 tokens: 90% threshold (less aggressive splitting)

Each chunk includes rich metadata:

- Unique chunk ID

- Text content and token count

- Page numbers

- Summary with metadata

- Global context

- Section and document summaries

- Previous/Next chunk summaries

- List of questions the excerpt can answer

We welcome contributions! Please follow these steps:

- Fork the repository

- Create a new branch for your feature

- Write tests for your changes

- Submit a pull request

For more details, see our Contributing Guidelines.

This project is licensed under the MIT License - see the LICENSE file for details.

- Issue Tracker: GitHub Issues

- Documentation: Coming soon

If you use this software in your research, please cite:

@software{ai_chunking2024,

title = {AI Chunking: A Python Library for Semantic Document Processing},

author = {Desai, Amey},

year = {2024},

publisher = {GitHub},

url = {https://github.com/nexla-opensource/ai-chunking}

}For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-chunking

Similar Open Source Tools

ai-chunking

AI Chunking is a powerful Python library for semantic document chunking and enrichment using AI. It provides intelligent document chunking capabilities with various strategies to split text while preserving semantic meaning, particularly useful for processing markdown documentation. The library offers multiple chunking strategies such as Recursive Text Splitting, Section-based Semantic Chunking, and Base Chunking. Users can configure chunk sizes, overlap, and support various text formats. The tool is easy to extend with custom chunking strategies, making it versatile for different document processing needs.

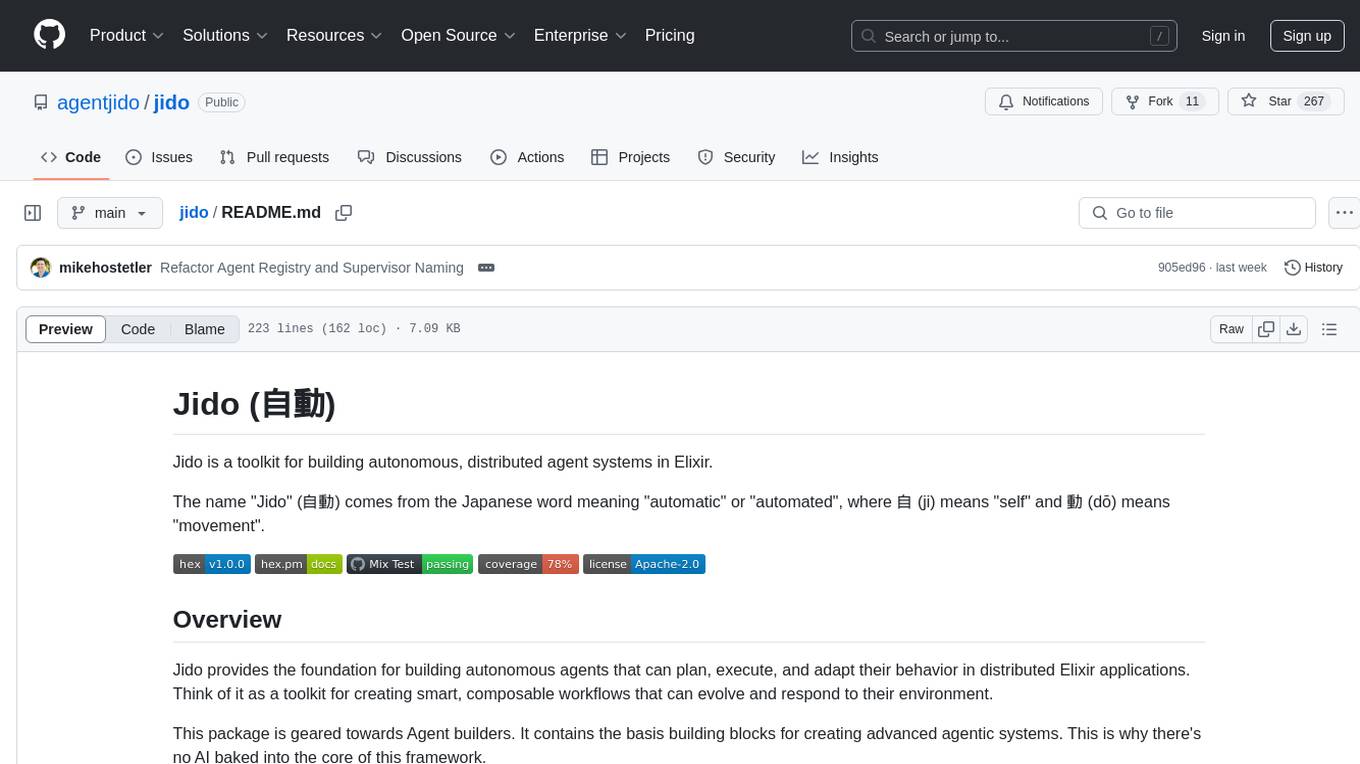

jido

Jido is a toolkit for building autonomous, distributed agent systems in Elixir. It provides the foundation for creating smart, composable workflows that can evolve and respond to their environment. Geared towards Agent builders, it contains core state primitives, composable actions, agent data structures, real-time sensors, signal system, skills, and testing tools. Jido is designed for multi-node Elixir clusters and offers rich helpers for unit and property-based testing.

effective_llm_alignment

This is a super customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It provides support for various methods such as SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, Special prompts basket format, Rejection Sampling, Scoring using RM, Effective FAISS Map-Reduce Deduplication, LLM scoring using RM, NER, CLIP, Classification, and STS. The toolkit offers key libraries like PyTorch, Transformers, TRL, Accelerate, FSDP, DeepSpeed, and tools for result logging with wandb or clearml. It allows mixing datasets, generation and logging in wandb/clearml, vLLM batched generation, and aligns models using the SMPO method.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

GraphRAG-SDK

Build fast and accurate GenAI applications with GraphRAG SDK, a specialized toolkit for building Graph Retrieval-Augmented Generation (GraphRAG) systems. It integrates knowledge graphs, ontology management, and state-of-the-art LLMs to deliver accurate, efficient, and customizable RAG workflows. The SDK simplifies the development process by automating ontology creation, knowledge graph agent creation, and query handling, enabling users to interact and query their knowledge graphs effectively. It supports multi-agent systems and orchestrates agents specialized in different domains. The SDK is optimized for FalkorDB, ensuring high performance and scalability for large-scale applications. By leveraging knowledge graphs, it enables semantic relationships and ontology-driven queries that go beyond standard vector similarity, enhancing retrieval-augmented generation capabilities.

lionagi

LionAGI is a powerful intelligent workflow automation framework that introduces advanced ML models into any existing workflows and data infrastructure. It can interact with almost any model, run interactions in parallel for most models, produce structured pydantic outputs with flexible usage, automate workflow via graph based agents, use advanced prompting techniques, and more. LionAGI aims to provide a centralized agent-managed framework for "ML-powered tools coordination" and to dramatically lower the barrier of entries for creating use-case/domain specific tools. It is designed to be asynchronous only and requires Python 3.10 or higher.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

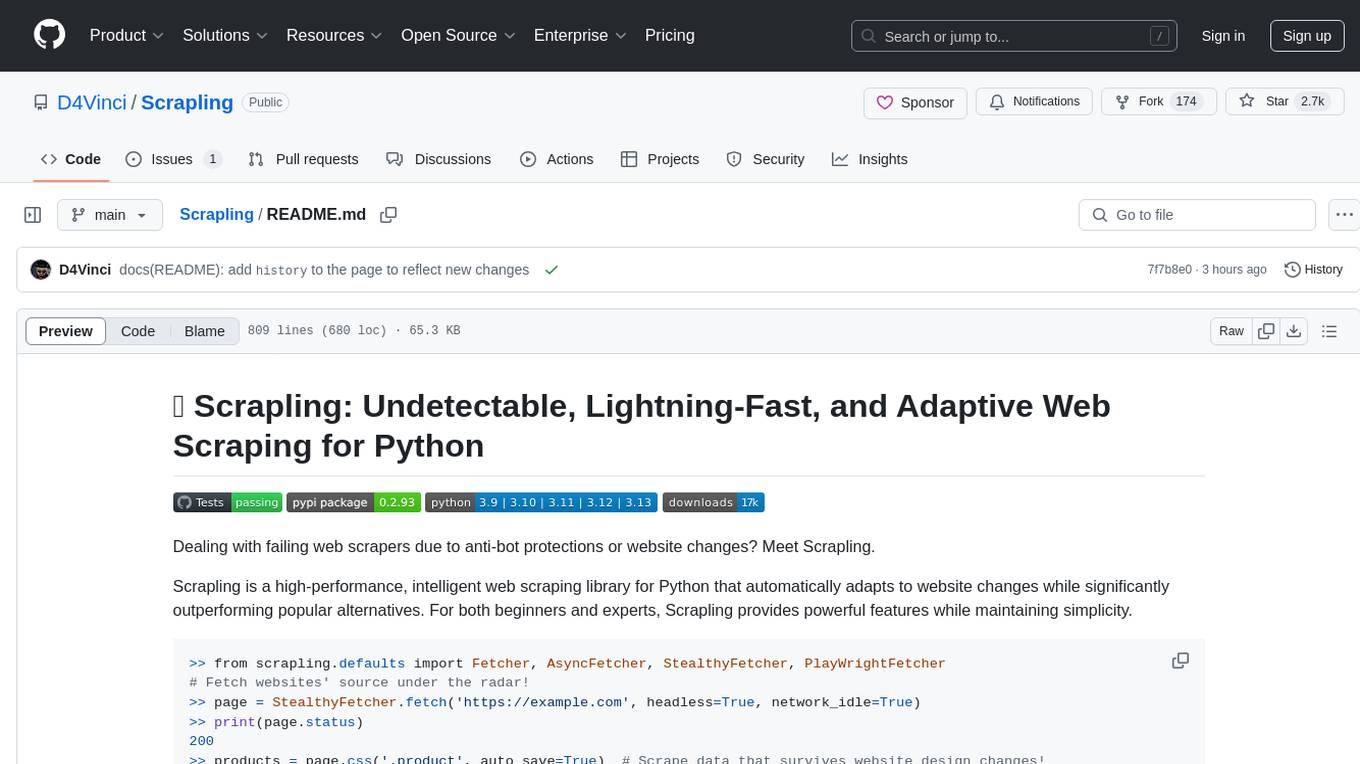

Scrapling

Scrapling is a high-performance, intelligent web scraping library for Python that automatically adapts to website changes while significantly outperforming popular alternatives. For both beginners and experts, Scrapling provides powerful features while maintaining simplicity. It offers features like fast and stealthy HTTP requests, adaptive scraping with smart element tracking and flexible selection, high performance with lightning-fast speed and memory efficiency, and developer-friendly navigation API and rich text processing. It also includes advanced parsing features like smart navigation, content-based selection, handling structural changes, and finding similar elements. Scrapling is designed to handle anti-bot protections and website changes effectively, making it a versatile tool for web scraping tasks.

videokit

VideoKit is a full-featured user-generated content solution for Unity Engine, enabling video recording, camera streaming, microphone streaming, social sharing, and conversational interfaces. It is cross-platform, with C# source code available for inspection. Users can share media, save to camera roll, pick from camera roll, stream camera preview, record videos, remove background, caption audio, and convert text commands. VideoKit requires Unity 2022.3+ and supports Android, iOS, macOS, Windows, and WebGL platforms.

Agentarium

Agentarium is a powerful Python framework for managing and orchestrating AI agents with ease. It provides a flexible and intuitive way to create, manage, and coordinate interactions between multiple AI agents in various environments. The framework offers advanced agent management, robust interaction management, a checkpoint system for saving and restoring agent states, data generation through agent interactions, performance optimization, flexible environment configuration, and an extensible architecture for customization.

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, XAI, Google Gemini, Meta AI, Deepseek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

rag-chat

The `@upstash/rag-chat` package simplifies the development of retrieval-augmented generation (RAG) chat applications by providing Next.js compatibility with streaming support, built-in vector store, optional Redis compatibility for fast chat history management, rate limiting, and disableRag option. Users can easily set up the environment variables and initialize RAGChat to interact with AI models, manage knowledge base, chat history, and enable debugging features. Advanced configuration options allow customization of RAGChat instance with built-in rate limiting, observability via Helicone, and integration with Next.js route handlers and Vercel AI SDK. The package supports OpenAI models, Upstash-hosted models, and custom providers like TogetherAi and Replicate.

gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

genaiscript

GenAIScript is a scripting environment designed to facilitate file ingestion, prompt development, and structured data extraction. Users can define metadata and model configurations, specify data sources, and define tasks to extract specific information. The tool provides a convenient way to analyze files and extract desired content in a structured format. It offers a user-friendly interface for working with data and automating data extraction processes, making it suitable for various data processing tasks.

For similar tasks

ai-chunking

AI Chunking is a powerful Python library for semantic document chunking and enrichment using AI. It provides intelligent document chunking capabilities with various strategies to split text while preserving semantic meaning, particularly useful for processing markdown documentation. The library offers multiple chunking strategies such as Recursive Text Splitting, Section-based Semantic Chunking, and Base Chunking. Users can configure chunk sizes, overlap, and support various text formats. The tool is easy to extend with custom chunking strategies, making it versatile for different document processing needs.

anylabeling

AnyLabeling is a tool for effortless data labeling with AI support from YOLO and Segment Anything. It combines features from LabelImg and Labelme with an improved UI and auto-labeling capabilities. Users can annotate images with polygons, rectangles, circles, lines, and points, as well as perform auto-labeling using YOLOv5 and Segment Anything. The tool also supports text detection, recognition, and Key Information Extraction (KIE) labeling, with multiple language options available such as English, Vietnamese, and Chinese.

basdonax-ai-rag

Basdonax AI RAG v1.0 is a repository that contains all the necessary resources to create your own AI-powered secretary using the RAG from Basdonax AI. It leverages open-source models from Meta and Microsoft, namely 'Llama3-7b' and 'Phi3-4b', allowing users to upload documents and make queries. This tool aims to simplify life for individuals by harnessing the power of AI. The installation process involves choosing between different data models based on GPU capabilities, setting up Docker, pulling the desired model, and customizing the assistant prompt file. Once installed, users can access the RAG through a local link and enjoy its functionalities.

Local-File-Organizer

The Local File Organizer is an AI-powered tool designed to help users organize their digital files efficiently and securely on their local device. By leveraging advanced AI models for text and visual content analysis, the tool automatically scans and categorizes files, generates relevant descriptions and filenames, and organizes them into a new directory structure. All AI processing occurs locally using the Nexa SDK, ensuring privacy and security. With support for multiple file types and customizable prompts, this tool aims to simplify file management and bring order to users' digital lives.

uwazi

Uwazi is a flexible database application designed for capturing and organizing collections of information, with a focus on document management. It is developed and supported by HURIDOCS, benefiting human rights organizations globally. The tool requires NodeJs, ElasticSearch, ICU Analysis Plugin, MongoDB, Yarn, and pdftotext for installation. It offers production and development installation guides, including Docker setup. Uwazi supports hot reloading, unit and integration testing with JEST, and end-to-end testing with Nightmare or Puppeteer. The system requirements include RAM, CPU, and disk space recommendations for on-premises and development usage.

Notate

Notate is a powerful desktop research assistant that combines AI-driven analysis with advanced vector search technology. It streamlines research workflow by processing, organizing, and retrieving information from documents, audio, and text. Notate offers flexible AI capabilities with support for various LLM providers and local models, ensuring data privacy. Built for researchers, academics, and knowledge workers, it features real-time collaboration, accessible UI, and cross-platform compatibility.

suna

Kortix is an open-source platform designed to build, manage, and train AI agents for various tasks. It allows users to create autonomous agents, from general-purpose assistants to specialized automation tools. The platform offers capabilities such as browser automation, file management, web intelligence, system operations, API integrations, and agent building tools. Users can create custom agents tailored to specific domains, workflows, or business needs, enabling tasks like research & analysis, browser automation, file & document management, data processing & analysis, and system administration.

OpenContracts

OpenContracts is a free and open-source document analytics platform designed to empower knowledge owners and subject matter experts. It supports multiple document formats, ingestion pipelines, and custom document analytics tools. Users can manage documents, define metadata schemas, extract layout features, generate vector embeddings, deploy custom analyzers, support new document formats, annotate documents, extract bulk data, and create bespoke data extraction workflows. The tool aims to provide a standardized architecture for analyzing contracts and making data portable, with a focus on PDF and text-based formats. It includes features like document management, layout parsing, pluggable architectures, human annotation interface, and a custom LLM framework for conversation management and real-time streaming.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.