MCPSharp

MCPSharp is a .NET library that helps you build servers and clients for the Model Context Protocol (MCP), the standardized API protocol used by AI assistants and models.

Stars: 142

MCPSharp is a .NET library that helps build Model Context Protocol (MCP) servers and clients for AI assistants and models. It allows creating MCP-compliant tools, connecting to existing MCP servers, exposing .NET methods as MCP endpoints, and handling MCP protocol details seamlessly. With features like attribute-based API, JSON-RPC support, parameter validation, and type conversion, MCPSharp simplifies the development of AI capabilities in applications through standardized interfaces.

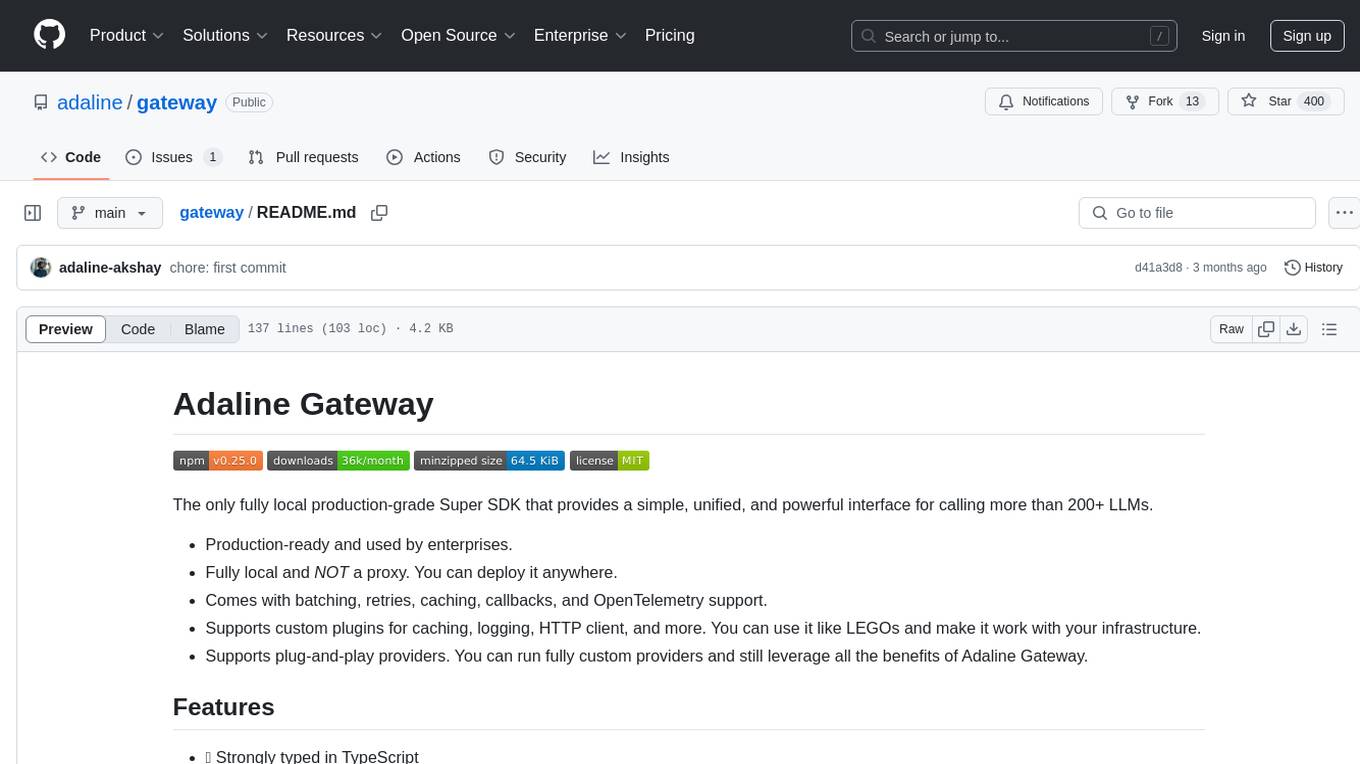

README:

MCPSharp is a .NET library that helps you build servers and clients for the Model Context Protocol (MCP), the standardized API protocol used by AI assistants and models. With MCPSharp, you can:

- Create MCP-compliant tools and functions that AI models can discover and use

- Connect directly to existing MCP servers from C# code with an easy-to-use client

- Expose your .NET methods as MCP endpoints with simple attributes

- Handle MCP protocol details and JSON-RPC communication seamlessly

- Microsoft.Extensions.AI Integration: MCPSharp now integrates with Microsoft.Extensions.AI, allowing tools to be exposed as AIFunctions

- Semantic Kernel Support: Add tools using Semantic Kernel's KernelFunctionAttribute

- Dynamic Tool Registration: Register tools on-the-fly with custom implementation logic

- Tool Change Notifications: Server now notifies clients when tools are added, updated, or removed

- Complex Object Parameter Support: Better handling of complex objects in tool parameters

- Better Error Handling: Improved error handling with detailed stack traces

Use MCPSharp when you want to:

- Create tools that AI assistants like Anthropic's Claude Desktop can use

- Build MCP-compliant APIs without dealing with the protocol details

- Expose existing .NET code as MCP endpoints

- Add AI capabilities to your applications through standardized interfaces

- Integrate with Microsoft.Extensions.AI and/or Semantic Kernel without locking into a single vendor

- Easy-to-use attribute-based API ([McpTool], [McpResource])

- Built-in JSON-RPC support with automatic request/response handling

- Automatic parameter validation and type conversion

- Rich documentation support through XML comments

- Near zero configuration required for basic usage

- Works with any version of .NET that supports .NET Standard 2.0

Install the package from NuGet:

    dotnet add package MCPSharp

Create a class and mark your method(s) with the [McpTool] attribute:

    using MCPSharp;

    public class Calculator
    {
        [McpTool("add", "Adds two numbers")] // Note: [McpFunction] is deprecated, use [McpTool] instead
        public static int Add([McpParameter(true)] int a, [McpParameter(true)] int b)
        {
            return a + b;
        }
    }

Then start the server:

    await MCPServer.StartAsync("CalculatorServer", "1.0.0");

StartAsync() automatically finds every method in the base assembly that is marked with the [McpTool] attribute. To add methods from a referenced library, register them manually by calling MCPServer.Register<T>(), where T is the class containing the desired methods. This also works for methods marked with Semantic Kernel attributes. Clients that support list-changed notifications are notified when additional tools are registered.
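Manual registration of a class from another assembly might look like the following minimal sketch. StringTools is a hypothetical class standing in for a type from a referenced library (it is defined inline only so the snippet is complete), and calling Register<T>() before StartAsync() is an assumption about ordering rather than a documented requirement.

```csharp
using System;
using MCPSharp;

// Register the class that holds the tools, then start the server.
// Assumption: registration is done before the server starts.
MCPServer.Register<StringTools>();
await MCPServer.StartAsync("CalculatorServer", "1.0.0");

// Hypothetical tool class; imagine it lives in a referenced library
// rather than in the base assembly.
public class StringTools
{
    [McpTool("reverse", "Reverses a string")]
    public static string Reverse([McpParameter(true)] string text)
    {
        // Copy the characters, reverse them in place, and build a new string.
        char[] chars = text.ToCharArray();
        Array.Reverse(chars);
        return new string(chars);
    }
}
```

Because the tool methods are ordinary static methods, they can still be called and unit-tested directly without a running server.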

Register tools dynamically with custom implementation:

    MCPServer.AddToolHandler(new Tool()
    {
        Name = "dynamicTool",
        Description = "A dynamic tool",
        InputSchema = new InputSchema
        {
            Type = "object",
            Required = ["input"],
            Properties = new Dictionary<string, ParameterSchema>
            {
                { "input", new ParameterSchema { Type = "string", Description = "Input value" } }
            }
        }
    }, (string input) => { return $"You provided: {input}"; });

To use MCP tools through Microsoft.Extensions.AI, fetch them from a client as AIFunctions:

    // Client-side integration
    MCPClient client = new("AIClient", "1.0", "path/to/mcp/server");
    IList<AIFunction> functions = await client.GetFunctionsAsync();

This list can be plugged into the ChatOptions.Tools property for an IChatClient, allowing MCP servers to be used seamlessly with any IChatClient implementation.
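Plugging the function list into ChatOptions.Tools could be sketched as follows. This assumes the Microsoft.Extensions.AI package is referenced and that a server executable exists at the placeholder path; the call to the chat client itself is left out because its method names differ between Microsoft.Extensions.AI versions.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.Extensions.AI;
using MCPSharp;

// "path/to/mcp/server" is the same placeholder used above, not a real path.
MCPClient client = new("AIClient", "1.0", "path/to/mcp/server");
IList<AIFunction> functions = await client.GetFunctionsAsync();

// ChatOptions.Tools is a list of AITool. AIFunction derives from AITool,
// so the MCP functions are cast to the base type before assignment.
var options = new ChatOptions
{
    Tools = functions.Cast<AITool>().ToList()
};

// Pass `options` along with the chat messages to the IChatClient of your choice.
```

Keeping the tools in ChatOptions rather than in the client means the same list can be reused with any IChatClient implementation.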

To expose Semantic Kernel functions, mark them with [KernelFunction] and register the containing class:

    using System.ComponentModel; // provides [Description]
    using Microsoft.SemanticKernel;
    using MCPSharp;

    public class MySkillClass
    {
        [KernelFunction("MyFunction")]
        [Description("Description of my function")]
        public string MyFunction(string input) => $"Processed: {input}";
    }

    // Register with MCPServer
    MCPServer.Register<MySkillClass>();

Currently, this is the only way to register a Semantic Kernel method with the MCP server. If you have a use case that is not covered here, please reach out!

Attributes:

- [McpTool] - Marks a class or method as an MCP tool

  - Optional parameters:

    - Name - The tool name (default: class/method name)

    - Description - Description of the tool

- [McpParameter] - Provides metadata for function parameters

  - Optional parameters:

    - Description - Parameter description

    - Required - Whether the parameter is required (default: false)

- [McpResource] - Marks a property or method as an MCP resource

  - Parameters:

    - Name - Resource name

    - Uri - Resource URI (can include templates)

    - MimeType - MIME type of the resource

    - Description - Resource description

Server methods:

- MCPServer.StartAsync(string serverName, string version) - Starts the MCP server

- MCPServer.Register<T>() - Registers a class containing tools or resources

- MCPServer.AddToolHandler(Tool tool, Delegate func) - Registers a dynamic tool

Client methods:

- new MCPClient(string name, string version, string server, string args = null, IDictionary<string, string> env = null) - Creates a client instance

- client.GetToolsAsync() - Gets available tools

- client.CallToolAsync(string name, Dictionary<string, object> parameters) - Calls a tool

- client.GetResourcesAsync() - Gets available resources

- client.GetFunctionsAsync() - Gets tools as AIFunctions
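As a rough illustration of the client calls listed above, the sketch below lists the tools on a server and invokes the "add" tool from the Calculator example. The server path is a placeholder, the argument keys "a" and "b" are assumed to match the C# parameter names, and the return values are not inspected because their types are not described here.

```csharp
using System.Collections.Generic;
using MCPSharp;

// "path/to/mcp/server" is a placeholder for the server executable.
MCPClient client = new("AIClient", "1.0", "path/to/mcp/server");

// Ask the server which tools it exposes.
var tools = await client.GetToolsAsync();

// Call the "add" tool. Assumption: argument keys mirror the
// parameter names of the Calculator.Add method shown earlier.
var result = await client.CallToolAsync("add", new Dictionary<string, object>
{
    ["a"] = 2,
    ["b"] = 3,
});
```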

MCPSharp automatically extracts documentation from XML comments:

    /// <summary>
    /// Provides mathematical operations
    /// </summary>
    public class Calculator
    {
        /// <summary>
        /// Adds two numbers together
        /// </summary>
        /// <param name="a">The first number to add</param>
        /// <param name="b">The second number to add</param>
        /// <returns>The sum of the two numbers</returns>
        [McpTool]
        public static int Add(
            [McpParameter(true)] int a,
            [McpParameter(true)] int b)
        {
            return a + b;
        }
    }

Enable XML documentation in your project file:

    <PropertyGroup>
        <GenerateDocumentationFile>true</GenerateDocumentationFile>
        <NoWarn>$(NoWarn);1591</NoWarn>
    </PropertyGroup>

This lets you quickly change the names and descriptions of your MCP tools without recompiling, for example when you find the model is having trouble understanding how to use a tool correctly.

- [McpFunction] is deprecated and replaced with [McpTool] for better alignment with MCP standards

- Use MCPServer.Register<T>() instead of MCPServer.RegisterTool<T>() for consistency (the old method still works but is deprecated)

We welcome contributions! Please feel free to submit a Pull Request.

This project is licensed under the MIT License.


Alternative AI tools for MCPSharp

Similar Open Source Tools


mcphub.nvim

MCPHub.nvim is a powerful Neovim plugin that integrates MCP (Model Context Protocol) servers into your workflow. It offers a centralized config file for managing servers and tools, with an intuitive UI for testing resources. Ideal for LLM integration, it provides programmatic API access and interactive testing through the `:MCPHub` command.

IntelliNode

IntelliNode is a JavaScript module that integrates cutting-edge AI models like ChatGPT, LLaMA, WaveNet, Gemini, and Stable Diffusion into projects. It offers functions for generating text, speech, and images, as well as semantic search, multi-model evaluation, and chatbot capabilities. The module provides a wrapper layer for low-level model access, a controller layer for unified input handling, and a function layer for abstract functionality tailored to various use cases.

pentagi

PentAGI is an innovative tool for automated security testing that leverages cutting-edge artificial intelligence technologies. It is designed for information security professionals, researchers, and enthusiasts who need a powerful and flexible solution for conducting penetration tests. The tool provides secure and isolated operations in a sandboxed Docker environment, fully autonomous AI-powered agent for penetration testing steps, a suite of 20+ professional security tools, smart memory system for storing research results, web intelligence for gathering information, integration with external search systems, team delegation system, comprehensive monitoring and reporting, modern interface, API integration, persistent storage, scalable architecture, self-hosted solution, flexible authentication, and quick deployment through Docker Compose.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

a2a-java

A2A Java SDK is a Java library that helps run agentic applications as A2AServers following the Agent2Agent (A2A) Protocol. It provides a Java server implementation of the A2A Protocol, allowing users to create A2A server agents and execute tasks. The SDK also includes a Java client implementation for communication with A2A servers using various transports like JSON-RPC 2.0, gRPC, and HTTP+JSON/REST. Users can configure different transport protocols, handle messages, tasks, push notifications, and interact with server agents. The SDK supports streaming and non-streaming responses, error handling, and task management functionalities.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

gateway

Adaline Gateway is a fully local, production-grade Super SDK that offers a unified interface for calling more than 200 LLMs. It is production-ready and supports batching, retries, caching, callbacks, and OpenTelemetry. Users can create custom plugins and providers for seamless integration with their infrastructure.

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

agentpress

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. It includes core components for managing threads, registering tools, processing responses, state management, and utilizing LLMs. The tool provides a modular architecture for handling messages, LLM API calls, response processing, tool execution, and results management. Users can easily set up the environment, create custom tools with OpenAPI or XML schema, and manage conversation threads with real-time interaction. AgentPress aims to be agnostic, simple, and flexible, allowing users to customize and extend functionalities as needed.

agents-starter

A starter template for building AI-powered chat agents using Cloudflare's Agent platform, powered by agents-sdk. It provides a foundation for creating interactive chat experiences with AI, complete with a modern UI and tool integration capabilities. Features include interactive chat interface with AI, built-in tool system with human-in-the-loop confirmation, advanced task scheduling, dark/light theme support, real-time streaming responses, state management, and chat history. Prerequisites include a Cloudflare account and OpenAI API key. The project structure includes components for chat UI implementation, chat agent logic, tool definitions, and helper functions. Customization guide covers adding new tools, modifying the UI, and example use cases for customer support, development assistant, data analysis assistant, personal productivity assistant, and scheduling assistant.

metis

Metis is an open-source, AI-driven tool for deep security code review, created by Arm's Product Security Team and named after Metis, the Greek goddess of wisdom. It helps engineers detect subtle vulnerabilities, improve secure coding practices, and reduce review fatigue. Metis uses LLMs for semantic understanding and reasoning, RAG for context-aware reviews, and supports multiple languages and vector store backends. It provides a plugin-friendly and extensible architecture. The tool is designed for large, complex, or legacy codebases where traditional tooling falls short.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

fast-mcp

Fast MCP is a Ruby gem that simplifies the integration of AI models with your Ruby applications. It provides a clean implementation of the Model Context Protocol, eliminating complex communication protocols, integration challenges, and compatibility issues. With Fast MCP, you can easily connect AI models to your servers, share data resources, choose from multiple transports, integrate with frameworks like Rails and Sinatra, and secure your AI-powered endpoints. The gem also offers real-time updates and authentication support, making AI integration a seamless experience for developers.

Google_GenerativeAI

Google GenerativeAI (Gemini) is an unofficial C# .NET SDK based on REST APIs for accessing Google Gemini models. It is a complete rewrite of the previous SDK with improved performance, flexibility, and ease of use. The SDK integrates with LangChain.net and provides easy methods for JSON-based interactions and function calling with Google Gemini models. Features include enhanced JSON mode handling, function calling with a code generator, multi-modal functionality, Vertex AI support, the multimodal live API, image generation and captioning, and retrieval-augmented generation with Vertex RAG Engine and Google AQA.

For similar tasks


chrome-extension

Mem0 Chrome Extension lets you own your memory and preferences across Gen AI apps such as ChatGPT, Claude, and Perplexity, and get personalized, relevant responses. It allows users to store memories from conversations, retrieve relevant memories during chats, manage and organize stored information, and seamlessly integrate with the Claude AI interface. The extension requires an API key and user ID for connecting to the Mem0 API, and it stores this information locally in the browser. Users can troubleshoot common issues, and contributions to improve the extension are welcome under the MIT License.

astron-rpa

AstronRPA is an enterprise-grade Robotic Process Automation (RPA) desktop application that supports low-code/no-code development. It enables users to rapidly build workflows and automate desktop software and web pages. The tool offers comprehensive automation support for various applications, highly component-based design, enterprise-grade security and collaboration features, developer-friendly experience, native agent empowerment, and multi-channel trigger integration. It follows a frontend-backend separation architecture with components for system operations, browser automation, GUI automation, AI integration, and more. The tool is deployed via Docker and designed for complex RPA scenarios.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.