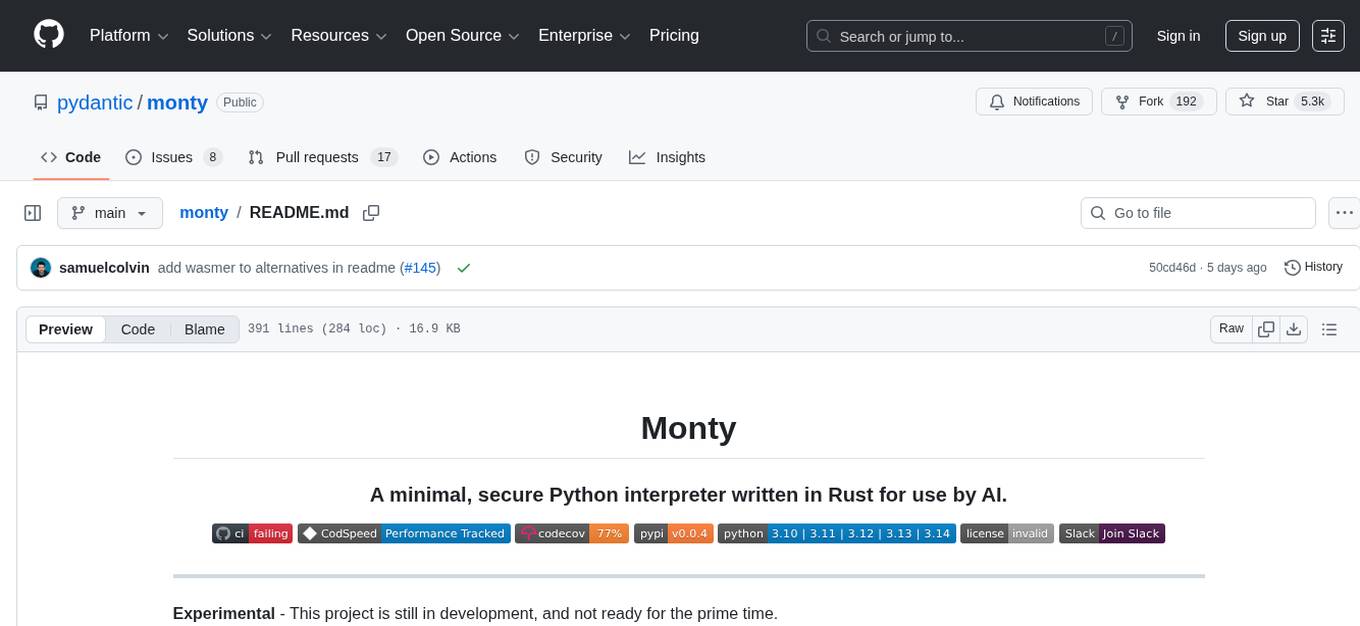

Scrapling

🕷️ An undetectable, powerful, flexible, high-performance Python library to make Web Scraping Easy and Effortless as it should be!

Stars: 7307

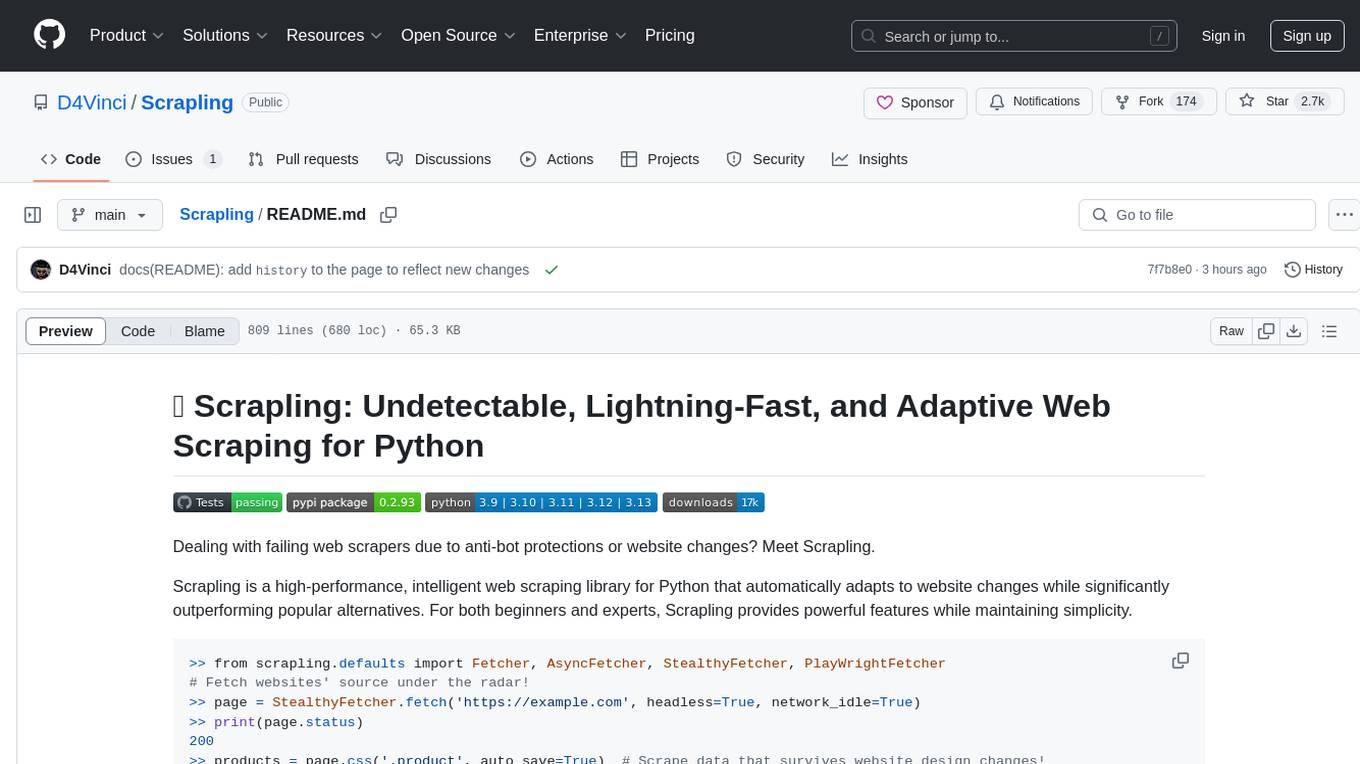

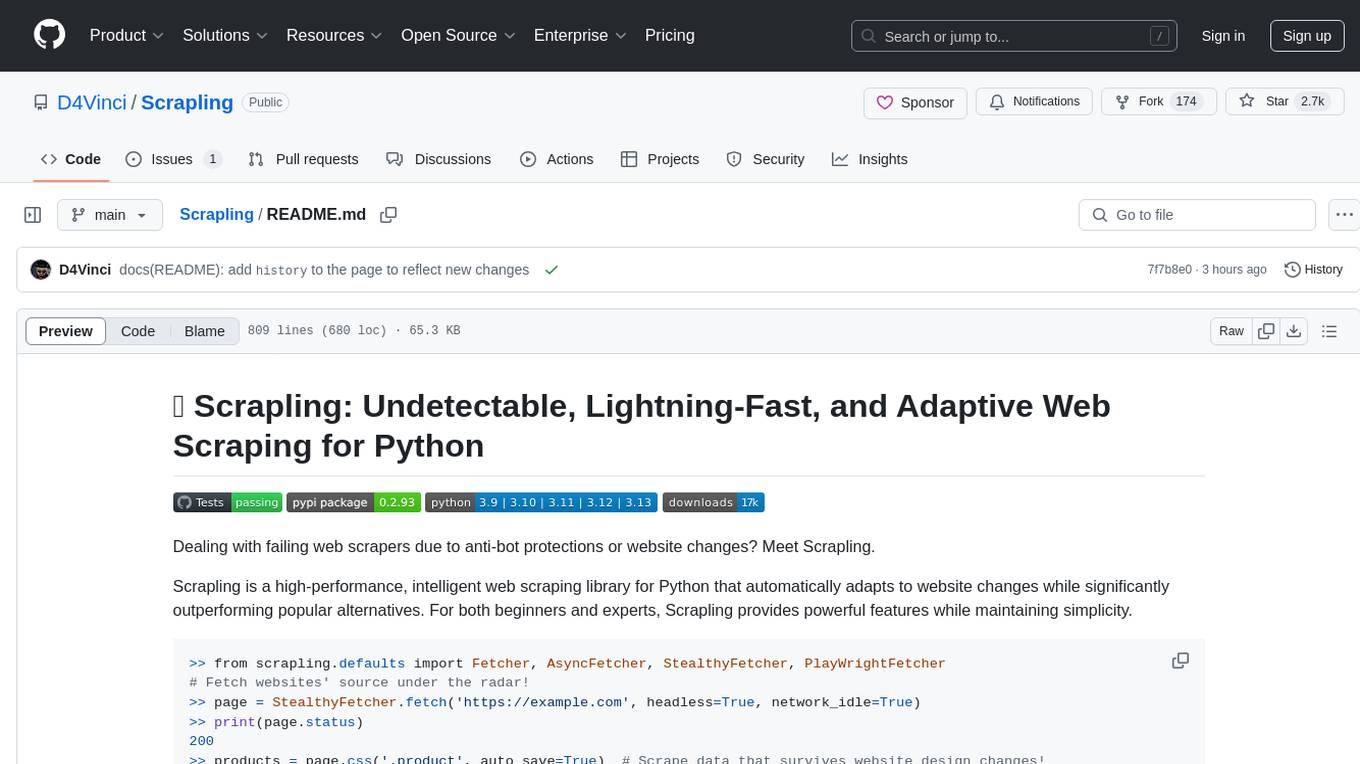

Scrapling is a high-performance, intelligent web scraping library for Python that automatically adapts to website changes while significantly outperforming popular alternatives. For both beginners and experts, Scrapling provides powerful features while maintaining simplicity. It offers fast and stealthy HTTP requests, adaptive scraping with smart element tracking and flexible selection, high performance with lightning-fast speed and memory efficiency, a developer-friendly navigation API, and rich text processing. It also includes advanced parsing features like smart navigation, content-based selection, handling structural changes, and finding similar elements. Scrapling is designed to handle anti-bot protections and website changes effectively, making it a versatile tool for web scraping tasks.

README:

Easy, effortless Web Scraping as it should be!


Stop fighting anti-bot systems. Stop rewriting selectors after every website update.

Scrapling isn't just another Web Scraping library. It's the first adaptive scraping library that learns from website changes and evolves with them. While other libraries break when websites update their structure, Scrapling automatically relocates your elements and keeps your scrapers running.

Built for the modern Web, Scrapling has its own rapid parsing engine and its fetchers to handle all Web Scraping challenges you are facing or will face. Built by Web Scrapers for Web Scrapers and regular users, there's something for everyone.

```python
>>> from scrapling.fetchers import Fetcher, AsyncFetcher, StealthyFetcher, DynamicFetcher
>>> StealthyFetcher.adaptive = True
>>> # Fetch websites' source under the radar!
>>> page = StealthyFetcher.fetch('https://example.com', headless=True, network_idle=True)
>>> print(page.status)
200
>>> products = page.css('.product', auto_save=True)  # Scrape data that survives website design changes!
>>> # Later, if the website structure changes, pass `adaptive=True`
>>> products = page.css('.product', adaptive=True)  # and Scrapling still finds them!
```

- HTTP Requests: Fast and stealthy HTTP requests with the `Fetcher` class. It can impersonate browsers' TLS fingerprints and headers, and use HTTP/3.

- Dynamic Loading: Fetch dynamic websites with full browser automation through the `DynamicFetcher` class, supporting Playwright's Chromium, real Chrome, and a custom stealth mode.

- Anti-bot Bypass: Advanced stealth capabilities with `StealthyFetcher`, using a modified version of Firefox and fingerprint spoofing. It can bypass all levels of Cloudflare's Turnstile automatically.

- Session Management: Persistent session support with the `FetcherSession`, `StealthySession`, and `DynamicSession` classes for cookie and state management across requests.

- Async Support: Complete async support across all fetchers and dedicated async session classes.

- 🔄 Smart Element Tracking: Relocate elements after website changes using intelligent similarity algorithms.

- 🎯 Smart Flexible Selection: CSS selectors, XPath selectors, filter-based search, text search, regex search, and more.

- 🔍 Find Similar Elements: Automatically locate elements similar to found elements.

- 🤖 MCP Server to be used with AI: Built-in MCP server for AI-assisted Web Scraping and data extraction. The MCP server features custom, powerful capabilities that use Scrapling to extract targeted content before passing it to the AI (Claude, Cursor, etc.), thereby speeding up operations and reducing costs by minimizing token usage.

- 🚀 Lightning Fast: Optimized performance outperforming most Python scraping libraries.

- 🔋 Memory Efficient: Optimized data structures and lazy loading for a minimal memory footprint.

- ⚡ Fast JSON Serialization: 10x faster than the standard library's `json` module.

- 🏗️ Battle tested: Not only does Scrapling have 92% test coverage and full type hints coverage, but it has been used daily by hundreds of Web Scrapers over the past year.

- 🎯 Interactive Web Scraping Shell: Optional built-in IPython shell with Scrapling integration, shortcuts, and new tools to speed up the development of Web Scraping scripts, like converting curl requests to Scrapling requests and viewing request results in your browser.

- 🚀 Use it directly from the Terminal: Optionally, you can use Scrapling to scrape a URL without writing a single line of code!

- 🛠️ Rich Navigation API: Advanced DOM traversal with parent, sibling, and child navigation methods.

- 🧬 Enhanced Text Processing: Built-in regex, cleaning methods, and optimized string operations.

- 📝 Auto Selector Generation: Generate robust CSS/XPath selectors for any element.

- 🔌 Familiar API: Similar to Scrapy/BeautifulSoup with the same pseudo-elements used in Scrapy/Parsel.

- 📘 Complete Type Coverage: Full type hints for excellent IDE support and code completion.
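The README does not spell out how Smart Element Tracking scores candidates, so what follows is only a minimal, hypothetical sketch of the general idea of similarity-based relocation, not Scrapling's algorithm or API. It assumes an element can be summarized by a `Fingerprint` (tag, attributes, text, parent tag) saved while the selector still works; `similarity`, its weights, `relocate`, and the 0.5 threshold are illustrative choices invented for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from difflib import SequenceMatcher


@dataclass
class Fingerprint:
    """Hypothetical summary of an element, saved while the original selector still works."""
    tag: str
    attrs: dict[str, str] = field(default_factory=dict)
    text: str = ""
    parent_tag: str = ""


def _ratio(a: str, b: str) -> float:
    """Standard-library string similarity in [0, 1]."""
    return SequenceMatcher(None, a, b).ratio()


def similarity(old: Fingerprint, new: Fingerprint) -> float:
    """Weighted score in [0, 1]; the weights are arbitrary illustrative choices."""
    tag_score = 1.0 if old.tag == new.tag else 0.0
    parent_score = 1.0 if old.parent_tag == new.parent_tag else 0.0
    keys = set(old.attrs) | set(new.attrs)
    attr_score = (
        sum(_ratio(old.attrs.get(k, ""), new.attrs.get(k, "")) for k in keys) / len(keys)
        if keys
        else 1.0
    )
    text_score = _ratio(old.text, new.text)
    return 0.2 * tag_score + 0.1 * parent_score + 0.3 * attr_score + 0.4 * text_score


def relocate(saved: Fingerprint, candidates: list[Fingerprint], threshold: float = 0.5) -> Fingerprint | None:
    """Return the candidate most similar to `saved`, or None if none clears `threshold`."""
    best = max(candidates, key=lambda c: similarity(saved, c), default=None)
    if best is None or similarity(saved, best) < threshold:
        return None
    return best


if __name__ == "__main__":
    # The product card was renamed and moved, but its text survived the redesign.
    saved = Fingerprint("div", {"class": "product"}, "Blue Mug $12", "section")
    after_redesign = [
        Fingerprint("div", {"class": "nav"}, "Home About", "header"),
        Fingerprint("article", {"class": "product-card"}, "Blue Mug $12", "main"),
    ]
    print(relocate(saved, after_redesign))
```

Weighting text and attributes most heavily reflects the assumption that class names and wrappers change during redesigns while visible content tends to stay put; a more robust version would likely also consider the element's position in the DOM tree.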

Scrapling 0.3 introduces a completely revamped session system:

- Persistent Sessions: Maintain cookies, headers, and authentication across multiple requests

- Automatic Session Management: Smart session lifecycle handling with proper cleanup

- Session Inheritance: All fetchers support both one-off requests and persistent session usage

- Concurrent Session Support: Run multiple isolated sessions simultaneously

```python
from scrapling.fetchers import Fetcher, StealthyFetcher, DynamicFetcher
from scrapling.fetchers import FetcherSession, StealthySession, DynamicSession

# HTTP requests with session support
with FetcherSession(impersonate='chrome') as session:  # Use latest version of Chrome's TLS fingerprint
    page = session.get('https://quotes.toscrape.com/', stealthy_headers=True)
    quotes = page.css('.quote .text::text')

# Or use one-off requests
page = Fetcher.get('https://quotes.toscrape.com/')
quotes = page.css('.quote .text::text')

# Advanced stealth mode (Keep the browser open until you finish)
with StealthySession(headless=True, solve_cloudflare=True) as session:
    page = session.fetch('https://nopecha.com/demo/cloudflare', google_search=False)
    data = page.css('#padded_content a')

# Or use one-off request style, it opens the browser for this request, then closes it after finishing
page = StealthyFetcher.fetch('https://nopecha.com/demo/cloudflare')
data = page.css('#padded_content a')

# Full browser automation (Keep the browser open until you finish)
with DynamicSession(headless=True, disable_resources=False, network_idle=True) as session:
    page = session.fetch('https://quotes.toscrape.com/', load_dom=False)
    data = page.xpath('//span[@class="text"]/text()')  # XPath selector if you prefer it

# Or use one-off request style, it opens the browser for this request, then closes it after finishing
page = DynamicFetcher.fetch('https://quotes.toscrape.com/')
data = page.css('.quote .text::text')
```

```python
from scrapling.fetchers import Fetcher

# Rich element selection and navigation
page = Fetcher.get('https://quotes.toscrape.com/')

# Get quotes with multiple selection methods
quotes = page.css('.quote')  # CSS selector
quotes = page.xpath('//div[@class="quote"]')  # XPath
quotes = page.find_all('div', {'class': 'quote'})  # BeautifulSoup-style
# Same as
quotes = page.find_all('div', class_='quote')
quotes = page.find_all(['div'], class_='quote')
quotes = page.find_all(class_='quote')  # and so on...

# Find element by text content
quotes = page.find_by_text('quote', tag='div')

# Advanced navigation
first_quote = page.css_first('.quote')
quote_text = first_quote.css('.text::text')
quote_text = page.css('.quote').css_first('.text::text')  # Chained selectors
quote_text = page.css_first('.quote .text').text  # Using `css_first` is faster than `css` if you want the first element
author = first_quote.next_sibling.css('.author::text')
parent_container = first_quote.parent

# Element relationships and similarity
similar_elements = first_quote.find_similar()
below_elements = first_quote.below_elements()
```

You can use the parser right away if you don't want to fetch websites, like below:

```python
from scrapling.parser import Selector

page = Selector("<html>...</html>")
```

And it works precisely the same way!

```python
import asyncio

from scrapling.fetchers import FetcherSession, AsyncStealthySession, AsyncDynamicSession


async def main():
    async with FetcherSession(http3=True) as session:  # `FetcherSession` is context-aware and can work in both sync/async patterns
        page1 = await session.get('https://quotes.toscrape.com/')
        page2 = await session.get('https://quotes.toscrape.com/', impersonate='firefox135')

    # Async session usage
    async with AsyncStealthySession(max_pages=2) as session:
        tasks = []
        urls = ['https://example.com/page1', 'https://example.com/page2']

        for url in urls:
            task = session.fetch(url)
            tasks.append(task)

        print(session.get_pool_stats())  # Optional - The status of the browser tabs pool (busy/free/error)
        results = await asyncio.gather(*tasks)
        print(session.get_pool_stats())


# `async with` is only valid inside a coroutine, so run everything through an event loop
asyncio.run(main())
```

Scrapling v0.3 includes a powerful command-line interface:

```bash
# Launch interactive Web Scraping shell
scrapling shell

# Extract pages to a file directly without programming (extracts the content inside the `body` tag by default)
# If the output file ends with `.txt`, the text content of the target is extracted.
# If it ends with `.md`, the output is a Markdown representation of the HTML content; if it ends with `.html`, the HTML content is written as-is.
scrapling extract get 'https://example.com' content.md
scrapling extract get 'https://example.com' content.txt --css-selector '#fromSkipToProducts' --impersonate 'chrome'  # All elements matching the CSS selector '#fromSkipToProducts'
scrapling extract fetch 'https://example.com' content.md --css-selector '#fromSkipToProducts' --no-headless
scrapling extract stealthy-fetch 'https://nopecha.com/demo/cloudflare' captchas.html --css-selector '#padded_content a' --solve-cloudflare
```

[!NOTE] There are many additional features, like the MCP server and the interactive Web Scraping Shell, but we want to keep this page short. Check out the full documentation here.
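The `extract` comments describe a simple rule: the output file's extension decides what gets written. A tiny helper mirroring that documented rule makes the mapping explicit. `output_mode` is a hypothetical name, not part of Scrapling, and rejecting other extensions is an assumption made for this sketch; the real CLI may behave differently.

```python
from pathlib import PurePath

# Extension -> output kind, as described in the CLI comments:
# .txt -> text content, .md -> Markdown, .html -> raw HTML.
OUTPUT_MODES = {".txt": "text", ".md": "markdown", ".html": "html"}


def output_mode(output_file: str) -> str:
    """Hypothetical helper: infer what `scrapling extract` writes from the output file name."""
    suffix = PurePath(output_file).suffix
    if suffix not in OUTPUT_MODES:
        # Assumption: unknown extensions are rejected here; the real CLI may differ.
        raise ValueError(f"unsupported output extension: {suffix or '(none)'}")
    return OUTPUT_MODES[suffix]


for name in ("content.md", "content.txt", "captchas.html"):
    print(f"{name} -> {output_mode(name)}")
```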

Scrapling isn't just powerful—it's also blazing fast, and the updates since version 0.3 deliver exceptional performance improvements across all operations!

| # | Library | Time (ms) | vs Scrapling |
|---|---|---|---|
| 1 | Scrapling | 1.92 | 1.0x |
| 2 | Parsel/Scrapy | 1.99 | 1.036x |
| 3 | Raw Lxml | 2.33 | 1.214x |
| 4 | PyQuery | 20.61 | ~11x |
| 5 | Selectolax | 80.65 | ~42x |
| 6 | BS4 with Lxml | 1283.21 | ~668x |
| 7 | MechanicalSoup | 1304.57 | ~679x |
| 8 | BS4 with html5lib | 3331.96 | ~1735x |

Scrapling's adaptive element finding capabilities significantly outperform alternatives:

| Library | Time (ms) | vs Scrapling |
|---|---|---|
| Scrapling | 1.87 | 1.0x |
| AutoScraper | 10.24 | 5.476x |

All benchmarks represent averages of 100+ runs. See benchmarks.py for methodology.
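The "vs Scrapling" column is each library's time divided by Scrapling's time. A short script that recomputes it from the reported timings (copied from the text-extraction table) is a quick sanity check whenever these figures are updated; `relative_to` is just an illustrative helper name.

```python
# Average times in milliseconds, copied from the text-extraction benchmark table.
timings_ms = {
    "Scrapling": 1.92,
    "Parsel/Scrapy": 1.99,
    "Raw Lxml": 2.33,
    "PyQuery": 20.61,
    "Selectolax": 80.65,
    "BS4 with Lxml": 1283.21,
    "MechanicalSoup": 1304.57,
    "BS4 with html5lib": 3331.96,
}


def relative_to(baseline: str, timings: dict[str, float]) -> dict[str, float]:
    """Slowdown factor of each library relative to the baseline (the baseline itself is 1.0)."""
    base = timings[baseline]
    return {name: t / base for name, t in timings.items()}


for name, factor in relative_to("Scrapling", timings_ms).items():
    print(f"{name:<18} {factor:9.3f}x")
```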

Scrapling requires Python 3.10 or higher:

```bash
pip install scrapling
```

Starting with v0.3.2, this installation only includes the parser engine and its dependencies, without any fetchers or command-line dependencies.

- If you are going to use any of the extra features below, the fetchers, or their classes, you need to install the fetchers' dependencies and then their browser dependencies:

  ```bash
  pip install "scrapling[fetchers]"
  scrapling install
  ```

  This downloads all browsers with their system dependencies and fingerprint manipulation dependencies.

- Extra features:
  - Install the MCP server feature: `pip install "scrapling[ai]"`
  - Install shell features (Web Scraping shell and the `extract` command): `pip install "scrapling[shell]"`
  - Install everything: `pip install "scrapling[all]"`

  Don't forget that you need to install the browser dependencies with `scrapling install` after any of these extras (if you didn't already).

We welcome contributions! Please read our contributing guidelines before getting started.

[!CAUTION] This library is provided for educational and research purposes only. By using this library, you agree to comply with local and international data scraping and privacy laws. The authors and contributors are not responsible for any misuse of this software. Always respect website terms of service and robots.txt files.

This work is licensed under the BSD-3-Clause License.

This project includes code adapted from:

- Parsel (BSD License)—Used for translator submodule

- Daijro's brilliant work on BrowserForge and Camoufox

- Vinyzu's work on Botright

- brotector for browser detection bypass techniques

- fakebrowser for fingerprinting research

- rebrowser-patches for stealth improvements


Alternative AI tools for Scrapling

Similar Open Source Tools

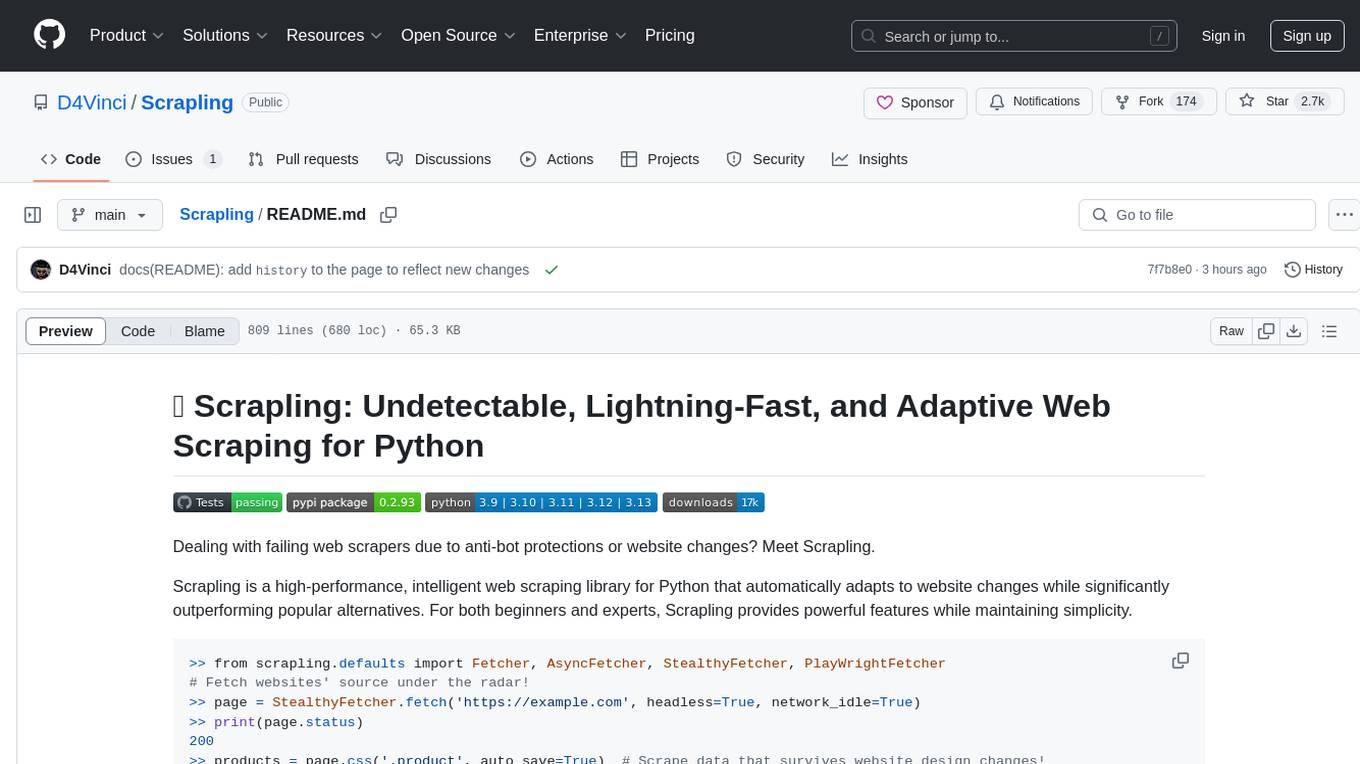

Scrapling

Scrapling is a high-performance, intelligent web scraping library for Python that automatically adapts to website changes while significantly outperforming popular alternatives. For both beginners and experts, Scrapling provides powerful features while maintaining simplicity. It offers features like fast and stealthy HTTP requests, adaptive scraping with smart element tracking and flexible selection, high performance with lightning-fast speed and memory efficiency, and developer-friendly navigation API and rich text processing. It also includes advanced parsing features like smart navigation, content-based selection, handling structural changes, and finding similar elements. Scrapling is designed to handle anti-bot protections and website changes effectively, making it a versatile tool for web scraping tasks.

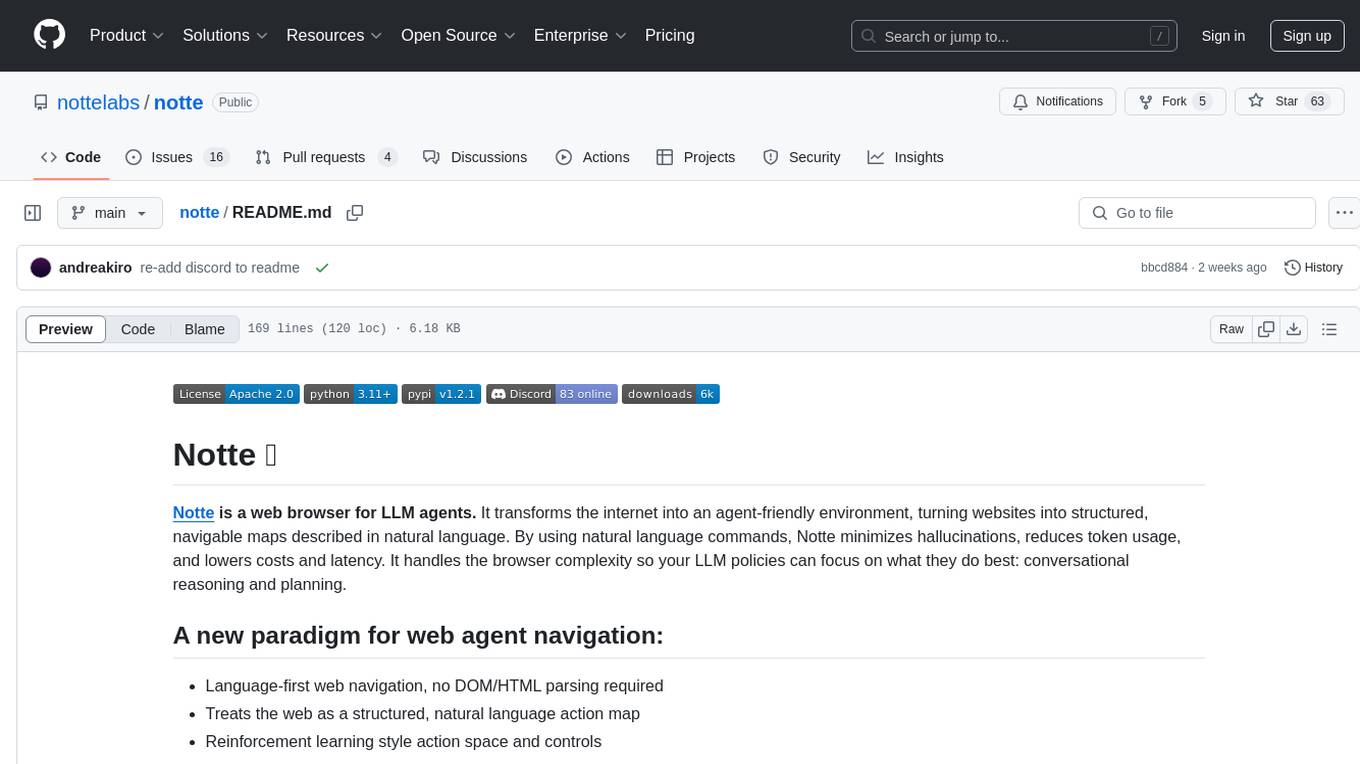

notte

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement learning style action space and controls for full navigation control.

rl

TorchRL is an open-source Reinforcement Learning (RL) library for PyTorch. It provides PyTorch- and **Python-first**, low- and high-level abstractions for RL that are intended to be **efficient**, **modular**, **documented**, and properly **tested**. The code is aimed at supporting research in RL. Most of it is written in Python in a highly modular way, such that researchers can easily swap components, transform them, or write new ones with little effort.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

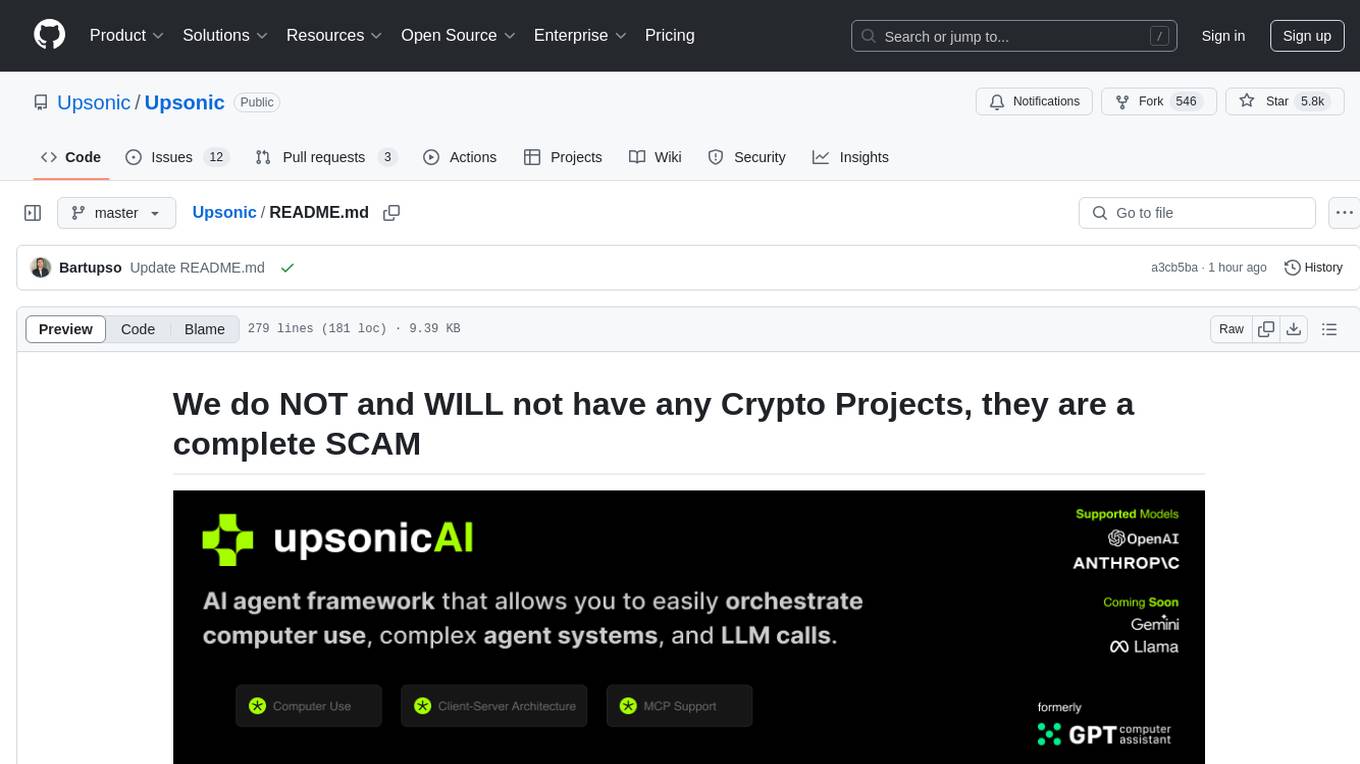

monty

Monty is a minimal, secure Python interpreter written in Rust for use by AI. It allows safe execution of Python code written by an LLM embedded in your agent, with fast startup times and performance similar to CPython. Monty supports running a subset of Python code, blocking access to the host environment, calling host functions, typechecking, snapshotting interpreter state, controlling resource usage, collecting stdout and stderr, and running async or sync code. It is designed for running code written by agents, providing a sandboxed environment without the complexity of a full container-based solution.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

req_llm

ReqLLM is a Req-based library for LLM interactions, offering a unified interface to AI providers through a plugin-based architecture. It brings composability and middleware advantages to LLM interactions, with features like auto-synced providers/models, typed data structures, ergonomic helpers, streaming capabilities, usage & cost extraction, and a plugin-based provider system. Users can easily generate text, structured data, embeddings, and track usage costs. The tool supports various AI providers like Anthropic, OpenAI, Groq, Google, and xAI, and allows for easy addition of new providers. ReqLLM also provides API key management, detailed documentation, and a roadmap for future enhancements.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

effective_llm_alignment

This is a super customizable, concise, user-friendly, and efficient toolkit for training and aligning LLMs. It provides support for various methods such as SFT, Distillation, DPO, ORPO, CPO, SimPO, SMPO, Non-pair Reward Modeling, Special prompts basket format, Rejection Sampling, Scoring using RM, Effective FAISS Map-Reduce Deduplication, LLM scoring using RM, NER, CLIP, Classification, and STS. The toolkit offers key libraries like PyTorch, Transformers, TRL, Accelerate, FSDP, DeepSpeed, and tools for result logging with wandb or clearml. It allows mixing datasets, generation and logging in wandb/clearml, vLLM batched generation, and aligns models using the SMPO method.

DB-GPT

DB-GPT is a personal database administrator that can solve database problems by reading documents, using various tools, and writing analysis reports. It is currently undergoing an upgrade. **Features:**

* **Online Demo:**
  * Import documents into the knowledge base
  * Utilize the knowledge base for well-founded Q&A and diagnosis analysis of abnormal alarms
  * Send feedback to refine the intermediate diagnosis results
  * Edit the diagnosis result
  * Browse all historical diagnosis results, used metrics, and detailed diagnosis processes
* **Language Support:**
  * English (default)
  * Chinese (add "language: zh" in config.yaml)
* **New Frontend:**
  * Knowledgebase + Chat Q&A + Diagnosis + Report Replay
* **Extreme Speed Version for localized llms:**
  * 4-bit quantized LLM (reducing inference time by 1/3)
  * vllm for fast inference (qwen)
  * Tiny LLM
* **Multi-path extraction of document knowledge:**
  * Vector database (ChromaDB)
  * RESTful Search Engine (Elasticsearch)
* **Expert prompt generation using document knowledge**
* **Upgrade the LLM-based diagnosis mechanism:**
  * Task Dispatching -> Concurrent Diagnosis -> Cross Review -> Report Generation
  * Synchronous Concurrency Mechanism during LLM inference
* **Support monitoring and optimization tools at multiple levels:**
  * Monitoring metrics (Prometheus)
  * Flame graph at code level
  * Diagnosis knowledge retrieval (dbmind)
  * Logical query transformations (Calcite)
  * Index optimization algorithms (for PostgreSQL)
  * Physical operator hints (for PostgreSQL)
  * Backup and Point-in-time Recovery (Pigsty)
* **Continuously updated papers and experimental reports**

This project is constantly evolving with new features. Don't forget to star ⭐ and watch 👀 to stay up to date.

gpt-computer-assistant

GPT Computer Assistant (GCA) is an open-source framework designed to build vertical AI agents that can automate tasks on Windows, macOS, and Ubuntu systems. It leverages the Model Context Protocol (MCP) and its own modules to mimic human-like actions and achieve advanced capabilities. With GCA, users can empower themselves to accomplish more in less time by automating tasks like updating dependencies, analyzing databases, and configuring cloud security settings.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

py-llm-core

PyLLMCore is a lightweight interface to Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucination reduction, context size management, and tokenizing.

For similar tasks


bookmark-summary

The 'bookmark-summary' repository reads bookmarks from 'bookmark-collection', extracts text content using Jina Reader, and then summarizes the text using LLM. The detailed implementation can be found in 'process_changes.py'. It needs to be used together with the Github Action in 'bookmark-collection'.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

For similar jobs

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, and files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), with upcoming support for websites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk uses a Vector Database (Qdrant), OpenAI's text-embedding-ada-002 for embeddings, and a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

OAD

OAD is a powerful open-source tool for analyzing and visualizing data. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With OAD, users can easily import data from various sources, clean and preprocess data, perform statistical analysis, and create customizable visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, OAD can help you streamline your data analysis workflow and uncover valuable insights from your data.

sqlcoder

Defog's SQLCoder is a family of state-of-the-art large language models (LLMs) designed for converting natural language questions into SQL queries. It outperforms popular models like gpt-4 and gpt-4-turbo on SQL generation tasks. SQLCoder has been trained on more than 20,000 human-curated questions based on 10 different schemas, and the model weights are licensed under CC BY-SA 4.0. Users can interact with SQLCoder through the 'transformers' library and run queries using the 'sqlcoder launch' command in the terminal. The tool has been tested on NVIDIA GPUs with more than 16GB VRAM and on Apple Silicon devices with some limitations. SQLCoder offers a demo on their website and supports quantized versions of the model for consumer GPUs with sufficient memory.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

data-scientist-roadmap2024

The Data Scientist Roadmap2024 provides a comprehensive guide to mastering essential tools for data science success. It includes programming languages, machine learning libraries, cloud platforms, and concepts categorized by difficulty. The roadmap covers a wide range of topics from programming languages to machine learning techniques, data visualization tools, and DevOps/MLOps tools. It also includes web development frameworks and specific concepts like supervised and unsupervised learning, NLP, deep learning, reinforcement learning, and statistics. Additionally, it delves into DevOps tools like Airflow and MLFlow, data visualization tools like Tableau and Matplotlib, and other topics such as ETL processes, optimization algorithms, and financial modeling.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

quadratic

Quadratic is a modern multiplayer spreadsheet application that integrates Python, AI, and SQL functionalities. It aims to streamline team collaboration and data analysis by enabling users to pull data from various sources and utilize popular data science tools. The application supports building dashboards, creating internal tools, mixing data from different sources, exploring data for insights, visualizing Python workflows, and facilitating collaboration between technical and non-technical team members. Quadratic is built with Rust + WASM + WebGL to ensure seamless performance in the browser, and it offers features like WebGL Grid, local file management, Python and Pandas support, Excel formula support, multiplayer capabilities, charts and graphs, and team support. The tool is currently in Beta with ongoing development for additional features like JS support, SQL database support, and AI auto-complete.