mcp-ui

SDK for UI over MCP. Create next-gen UI experiences!

Stars: 2365

mcp-ui is a collection of SDKs that bring interactive web components to the Model Context Protocol (MCP). It allows servers to define reusable UI snippets, render them securely in the client, and react to their actions in the MCP host environment. The SDKs include @mcp-ui/server (TypeScript) for generating UI resources on the server, @mcp-ui/client (TypeScript) for rendering UI components on the client, and mcp_ui_server (Ruby) and mcp-ui-server (Python) for generating UI resources in Ruby and Python environments. The project is an experimental community playground for MCP UI ideas, with rapid iteration and enhancements.

README:


mcp-ui brings interactive web components to the Model Context Protocol (MCP). Deliver rich, dynamic UI resources directly from your MCP server to be rendered by the client. Take AI interaction to the next level!

This project is an experimental community playground for MCP UI ideas. Expect rapid iteration and enhancements!

mcp-ui is a collection of SDKs comprising:

- @mcp-ui/server (TypeScript): Utilities to generate UI resources (UIResource) on your MCP server.
- @mcp-ui/client (TypeScript): UI components (e.g., <UIResourceRenderer />) to render the UI resources and handle their events.
- mcp_ui_server (Ruby): Utilities to generate UI resources on your MCP server in a Ruby environment.
- mcp-ui-server (Python): Utilities to generate UI resources on your MCP server in a Python environment.

Together, they let you define reusable UI snippets on the server side, seamlessly and securely render them in the client, and react to their actions in the MCP host environment.

In essence, by using mcp-ui SDKs, servers and hosts can agree on contracts that enable them to create and render interactive UI snippets (as a path to a standardized UI approach in MCP).

The primary payload returned from the server to the client is the UIResource:

interface UIResource {
  type: 'resource';
  resource: {
    uri: string; // e.g., ui://component/id
    mimeType: 'text/html' | 'text/uri-list' | 'application/vnd.mcp-ui.remote-dom'; // text/html for HTML content, text/uri-list for URL content, application/vnd.mcp-ui.remote-dom for remote-dom content (Javascript)
    text?: string; // Inline HTML, external URL, or remote-dom script
    blob?: string; // Base64-encoded HTML, URL, or remote-dom script
  };
}

- uri: Unique identifier for caching and routing
  - ui://…: UI resources (rendering method determined by mimeType)
- mimeType: text/html for HTML content (iframe srcDoc), text/uri-list for URL content (iframe src), application/vnd.mcp-ui.remote-dom for remote-dom content (Javascript)
  - MCP-UI requires a single URL: While the text/uri-list format supports multiple URLs, MCP-UI uses only the first valid http/s URL and warns if additional URLs are found.
- text vs. blob: Choose text for simple strings; use blob for larger or encoded content.
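To make the field rules concrete, here is a minimal sketch of how a host might check a UIResource and decide how to render it. The interface is copied locally from the one above rather than imported, and `renderMethodFor` and the `RenderMethod` labels are hypothetical names introduced for illustration; the real `<UIResourceRenderer />` performs its own detection.

```typescript
// Local copy of the UIResource shape shown above (not imported from @mcp-ui/client).
interface UIResource {
  type: 'resource';
  resource: {
    uri: string;
    mimeType: 'text/html' | 'text/uri-list' | 'application/vnd.mcp-ui.remote-dom';
    text?: string;
    blob?: string;
  };
}

// Hypothetical labels for the rendering method tied to each mimeType.
type RenderMethod = 'iframe-srcdoc' | 'iframe-src' | 'remote-dom';

// Hypothetical helper: checks the basic contract, then picks a rendering method.
function renderMethodFor(res: UIResource): RenderMethod {
  const { uri, mimeType, text, blob } = res.resource;
  if (!uri.startsWith('ui://')) {
    throw new Error(`expected a ui:// URI, got ${uri}`);
  }
  if (text === undefined && blob === undefined) {
    throw new Error('content must be provided in text or blob');
  }
  switch (mimeType) {
    case 'text/html':
      return 'iframe-srcdoc'; // inline HTML rendered via iframe srcDoc
    case 'text/uri-list':
      return 'iframe-src'; // external URL rendered via iframe src
    case 'application/vnd.mcp-ui.remote-dom':
      return 'remote-dom'; // script rendered with the host's component library
    default: {
      const unreachable: never = mimeType;
      throw new Error(`unsupported mimeType: ${String(unreachable)}`);
    }
  }
}

const html: UIResource = {
  type: 'resource',
  resource: { uri: 'ui://greeting/1', mimeType: 'text/html', text: '<p>Hello, MCP UI!</p>' },
};
console.log(renderMethodFor(html)); // prints "iframe-srcdoc"
```

The `never` assignment in the default branch makes the compiler complain if a new mimeType is added to the union without a matching case.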

The UI Resource is rendered in the <UIResourceRenderer /> component. It automatically detects the resource type and renders the appropriate component.

It is available as a React component and as a Web Component.

React Component

It accepts the following props:

- resource: The resource object from an MCP Tool response. It must include uri, mimeType, and content (text, blob).
- onUIAction: Optional callback for handling UI actions from the resource:
  { type: 'tool', payload: { toolName: string, params: Record<string, unknown> }, messageId?: string } |
  { type: 'intent', payload: { intent: string, params: Record<string, unknown> }, messageId?: string } |
  { type: 'prompt', payload: { prompt: string }, messageId?: string } |
  { type: 'notify', payload: { message: string }, messageId?: string } |
  { type: 'link', payload: { url: string }, messageId?: string }
  When actions include a messageId, the iframe automatically receives response messages for asynchronous handling.
- supportedContentTypes: Optional array to restrict which content types are allowed (['rawHtml', 'externalUrl', 'remoteDom'])
- htmlProps: Optional props for the internal <HTMLResourceRenderer>
  - style: Optional custom styles for the iframe
  - iframeProps: Optional props passed to the iframe element
  - iframeRenderData: Optional Record<string, unknown> to pass data to the iframe upon rendering. This enables advanced use cases where the parent application needs to provide initial state or configuration to the sandboxed iframe content.
  - autoResizeIframe: Optional boolean | { width?: boolean; height?: boolean } to automatically resize the iframe to the size of the content.
- remoteDomProps: Optional props for the internal <RemoteDOMResourceRenderer>
  - library: Optional component library for Remote DOM resources (defaults to basicComponentLibrary)
  - remoteElements: Remote element definitions for Remote DOM resources.
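The onUIAction payload is a discriminated union on `type`, so a host can narrow each case with a `switch`. A minimal sketch, assuming you mirror the union locally as `UIAction` (a name introduced here, not necessarily one exported by @mcp-ui/client); `describeAction` is a hypothetical handler you might call from inside your onUIAction callback.

```typescript
// Local mirror of the action union listed under onUIAction.
type UIAction =
  | { type: 'tool'; payload: { toolName: string; params: Record<string, unknown> }; messageId?: string }
  | { type: 'intent'; payload: { intent: string; params: Record<string, unknown> }; messageId?: string }
  | { type: 'prompt'; payload: { prompt: string }; messageId?: string }
  | { type: 'notify'; payload: { message: string }; messageId?: string }
  | { type: 'link'; payload: { url: string }; messageId?: string };

// Hypothetical host-side handler: narrows on `type` and describes what it would do.
function describeAction(action: UIAction): string {
  switch (action.type) {
    case 'tool':
      return `call tool ${action.payload.toolName}`;
    case 'intent':
      return `handle intent ${action.payload.intent}`;
    case 'prompt':
      return `send prompt: ${action.payload.prompt}`;
    case 'notify':
      return `notify: ${action.payload.message}`;
    case 'link':
      return `open ${action.payload.url}`;
    default: {
      // Compile-time check that every action type above is handled.
      const unreachable: never = action;
      throw new Error(`unknown action ${JSON.stringify(unreachable)}`);
    }
  }
}

console.log(
  describeAction({ type: 'tool', payload: { toolName: 'uiInteraction', params: { action: 'button-click' } } }),
); // prints "call tool uiInteraction"
```

In a real host the `tool` branch would invoke the MCP tool rather than return a string; the switch structure is the part that carries over.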

Web Component

The Web Component is available as <ui-resource-renderer>. It accepts the same props as the React component, but they must be passed as strings.

Example:

<ui-resource-renderer
  resource='{ "mimeType": "text/html", "text": "<h2>Hello from the Web Component!</h2>" }'
></ui-resource-renderer>

The onUIAction prop can be handled by attaching an event listener to the component:

const renderer = document.querySelector('ui-resource-renderer');
renderer.addEventListener('onUIAction', (event) => {
  console.log('Action:', event.detail);
});

The Web Component is available in the @mcp-ui/client package at dist/ui-resource-renderer.wc.js.
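Because the Web Component only accepts strings, object-valued props such as `resource` must be serialized before they reach the element. The sketch below uses a hypothetical `toAttributes` helper that JSON-encodes every non-string value. It assumes attribute names match the prop names, as `resource` does in the example above; check the package for the exact attribute naming of the other props.

```typescript
// Hypothetical helper: turn renderer props into string attribute values.
// Strings pass through unchanged; everything else is JSON-encoded.
function toAttributes(props: Record<string, unknown>): Record<string, string> {
  const attrs: Record<string, string> = {};
  for (const name of Object.keys(props)) {
    const value = props[name];
    if (value === undefined) continue; // leave unset props off the element
    attrs[name] = typeof value === 'string' ? value : JSON.stringify(value);
  }
  return attrs;
}

const attrs = toAttributes({
  resource: { mimeType: 'text/html', text: '<h2>Hello from the Web Component!</h2>' },
});
console.log(attrs.resource);
// prints {"mimeType":"text/html","text":"<h2>Hello from the Web Component!</h2>"}
// In a browser, each entry could then be applied with element.setAttribute(name, value).
```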

HTML (text/html and text/uri-list)

Rendered using the internal <HTMLResourceRenderer /> component, which displays content inside an <iframe>. This is suitable for self-contained HTML or embedding external apps.

- mimeType:
  - text/html: Renders inline HTML content.
  - text/uri-list: Renders an external URL. MCP-UI uses the first valid http/s URL.
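The "first valid http/s URL" rule can be sketched as a small pure function. This is an illustration only, not mcp-ui's implementation: `pickIframeUrl` is a hypothetical name, a simple regular expression stands in for full URL validation, and comment handling follows the text/uri-list format (RFC 2483), where lines starting with `#` are comments.

```typescript
// Result of reading a text/uri-list body.
interface UriListResult {
  url: string | undefined; // first valid http(s) URL, if any
  warnings: string[]; // messages a renderer might log
}

// Hypothetical helper mirroring the rule above: use the first http(s) URL,
// and warn when the list contains more than one URL.
function pickIframeUrl(uriList: string): UriListResult {
  const entries = uriList
    .split(/\r?\n/)
    .map((line) => line.trim())
    // text/uri-list treats lines starting with '#' as comments.
    .filter((line) => line !== '' && !line.startsWith('#'));
  // A simple syntactic check stands in for full URL validation here.
  const url = entries.find((entry) => /^https?:\/\/\S+$/i.test(entry));
  const warnings: string[] = [];
  if (entries.length > 1) {
    warnings.push(`found ${entries.length} URLs; only the first valid http(s) URL is used`);
  }
  return { url, warnings };
}

const result = pickIframeUrl('# fallback list\nhttps://example.com\nhttps://example.org');
console.log(result.url); // prints "https://example.com"
console.log(result.warnings.length); // prints 1
```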

Remote DOM (application/vnd.mcp-ui.remote-dom)

Rendered using the internal <RemoteDOMResourceRenderer /> component, which utilizes Shopify's remote-dom. The server responds with a script that describes the UI and events. On the host, the script is securely rendered in a sandboxed iframe, and the UI changes are communicated to the host in JSON, where they're rendered using the host's component library. This is more flexible than iframes and allows for UIs that match the host's look-and-feel.

- mimeType: application/vnd.mcp-ui.remote-dom+javascript; framework={react | webcomponents}
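The remote-dom mimeType carries the target framework as a MIME parameter. A minimal sketch of how a host might read it, assuming standard `name=value` parameter syntax (optionally quoted). `remoteDomFramework` and `isFramework` are hypothetical names, and the sketch matches only the `+javascript` form written on the line above.

```typescript
type RemoteDomFramework = 'react' | 'webcomponents';

// Type guard for the two framework values named above.
function isFramework(value: string | undefined): value is RemoteDomFramework {
  return value === 'react' || value === 'webcomponents';
}

// Hypothetical parser: returns the framework parameter of a remote-dom mimeType,
// or undefined when the type is not remote-dom or the parameter is missing.
function remoteDomFramework(mimeType: string): RemoteDomFramework | undefined {
  const [essence = '', ...params] = mimeType.split(';').map((part) => part.trim());
  if (essence.toLowerCase() !== 'application/vnd.mcp-ui.remote-dom+javascript') {
    return undefined;
  }
  for (const param of params) {
    const [key = '', rawValue = ''] = param.split('=').map((s) => s.trim());
    const value = rawValue.replace(/^"(.*)"$/, '$1'); // accept framework="react" too
    if (key.toLowerCase() === 'framework' && isFramework(value)) {
      return value;
    }
  }
  return undefined;
}

console.log(remoteDomFramework('application/vnd.mcp-ui.remote-dom+javascript; framework=react')); // prints "react"
```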

UI snippets must be able to interact with the agent. In mcp-ui, this is done by hooking into events sent from the UI snippet and reacting to them in the host (see the onUIAction prop). For example, an HTML snippet may trigger a tool call when a button is clicked by sending an event that is caught and handled by the client.
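From the snippet's side, "sending an event" means posting an action message to the parent window, as the remote-dom examples in this README do with `window.parent.postMessage(..., '*')`. A minimal sketch of building that message: `toolCall` is a hypothetical helper, the object shape follows the `tool` action listed under onUIAction, and the `postMessage` call is left as a comment so the block runs outside a browser.

```typescript
// Tool-call action shape, as listed under onUIAction.
interface ToolAction {
  type: 'tool';
  payload: { toolName: string; params: Record<string, unknown> };
  messageId?: string;
}

// Hypothetical helper for code running inside a UI snippet.
function toolCall(
  toolName: string,
  params: Record<string, unknown>,
  messageId?: string,
): ToolAction {
  const action: ToolAction = { type: 'tool', payload: { toolName, params } };
  if (messageId !== undefined) {
    action.messageId = messageId; // lets the host send back a response for async handling
  }
  return action;
}

const action = toolCall('uiInteraction', { action: 'button-click', from: 'html' });
console.log(JSON.stringify(action));
// prints {"type":"tool","payload":{"toolName":"uiInteraction","params":{"action":"button-click","from":"html"}}}
// Inside the iframe, the snippet would hand it to the host:
// window.parent.postMessage(action, '*');
```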

For TypeScript

# using npm
npm install @mcp-ui/server @mcp-ui/client
# or pnpm
pnpm add @mcp-ui/server @mcp-ui/client
# or yarn
yarn add @mcp-ui/server @mcp-ui/client

For Ruby

gem install mcp_ui_server

For Python

# using pip
pip install mcp-ui-server
# or uv
uv add mcp-ui-server

You can use GitMCP to give your IDE access to mcp-ui's latest documentation!

TypeScript

Server-side: Build your UI resources

import { createUIResource } from '@mcp-ui/server';

// Inline HTML
const htmlResource = createUIResource({
  uri: 'ui://greeting/1',
  content: { type: 'rawHtml', htmlString: '<p>Hello, MCP UI!</p>' },
  encoding: 'text',
});

// External URL
const externalUrlResource = createUIResource({
  uri: 'ui://greeting/2',
  content: { type: 'externalUrl', iframeUrl: 'https://example.com' },
  encoding: 'text',
});

// remote-dom
const remoteDomResource = createUIResource({
  uri: 'ui://remote-component/action-button',
  content: {
    type: 'remoteDom',
    script: `
      const button = document.createElement('ui-button');
      button.setAttribute('label', 'Click me for a tool call!');
      button.addEventListener('press', () => {
        window.parent.postMessage({ type: 'tool', payload: { toolName: 'uiInteraction', params: { action: 'button-click', from: 'remote-dom' } } }, '*');
      });
      root.appendChild(button);
    `,
    framework: 'react', // or 'webcomponents'
  },
  encoding: 'text',
});

Client-side: Render in your MCP host

import React from 'react';
import { UIResourceRenderer } from '@mcp-ui/client';

function App({ mcpResource }) {
  // Only render embedded resources whose URI uses the ui:// scheme
  if (
    mcpResource.type === 'resource' &&
    mcpResource.resource.uri?.startsWith('ui://')
  ) {
    return (
      <UIResourceRenderer
        resource={mcpResource.resource}
        onUIAction={(result) => {
          console.log('Action:', result);
        }}
      />
    );
  }
  return <p>Unsupported resource</p>;
}
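On the server, the resources built above are returned from a tool as part of its result content. A minimal sketch, assuming the standard MCP tool-result shape (`{ content: [...] }`) and hand-building the same object that `createUIResource` is expected to produce for `rawHtml` with `encoding: 'text'`, following the UIResource interface earlier in this README. `greetTool` and `escapeHtml` are hypothetical names; in a real server you would call `createUIResource` instead of writing the literal.

```typescript
// Minimal local types: an MCP tool result whose content holds one UIResource.
interface UIResource {
  type: 'resource';
  resource: { uri: string; mimeType: 'text/html'; text: string };
}
interface ToolResult {
  content: UIResource[];
}

// Escape user-provided text before interpolating it into HTML.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Hypothetical tool handler that returns a greeting as a UI resource.
function greetTool(name: string): ToolResult {
  return {
    content: [
      {
        type: 'resource',
        resource: {
          uri: 'ui://greeting/1',
          mimeType: 'text/html',
          text: `<p>Hello, ${escapeHtml(name)}!</p>`,
        },
      },
    ],
  };
}

console.log(greetTool('<b>Ada</b>').content[0]?.resource.text);
// prints <p>Hello, &lt;b&gt;Ada&lt;/b&gt;!</p>
```

Escaping matters here because the client renders `text` as HTML, so unescaped input could inject markup into the snippet.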

Python

Server-side: Build your UI resources

from mcp_ui_server import create_ui_resource

# Inline HTML
html_resource = create_ui_resource({
    "uri": "ui://greeting/1",
    "content": { "type": "rawHtml", "htmlString": "<p>Hello, from Python!</p>" },
    "encoding": "text",
})

# External URL
external_url_resource = create_ui_resource({
    "uri": "ui://greeting/2",
    "content": { "type": "externalUrl", "iframeUrl": "https://example.com" },
    "encoding": "text",
})

Ruby

Server-side: Build your UI resources

require 'mcp_ui_server'

# Inline HTML
html_resource = McpUiServer.create_ui_resource(
  uri: 'ui://greeting/1',
  content: { type: :raw_html, htmlString: '<p>Hello, from Ruby!</p>' },
  encoding: :text
)

# External URL
external_url_resource = McpUiServer.create_ui_resource(
  uri: 'ui://greeting/2',
  content: { type: :external_url, iframeUrl: 'https://example.com' },
  encoding: :text
)

# remote-dom
remote_dom_resource = McpUiServer.create_ui_resource(
  uri: 'ui://remote-component/action-button',
  content: {
    type: :remote_dom,
    script: "
      const button = document.createElement('ui-button');
      button.setAttribute('label', 'Click me from Ruby!');
      button.addEventListener('press', () => {
        window.parent.postMessage({ type: 'tool', payload: { toolName: 'uiInteraction', params: { action: 'button-click', from: 'ruby-remote-dom' } } }, '*');
      });
      root.appendChild(button);
    ",
    framework: :react,
  },
  encoding: :text
)

For a detailed, simple, step-by-step guide on how to integrate mcp-ui into your own server, check out the full server walkthroughs on the mcp-ui documentation site.

These guides show you how to add an mcp-ui endpoint to an existing server, create tools that return UI resources, and test your setup with the ui-inspector!

Client Examples

- ui-inspector - inspect local mcp-ui-enabled servers.
- MCP-UI Chat - interactive chat built with the mcp-ui client. Check out the hosted version!
- MCP-UI RemoteDOM Playground (examples/remote-dom-demo) - local demo app to test RemoteDOM resources (intended for hosts)
- MCP-UI Web Component Demo (examples/wc-demo) - local demo app to test the Web Component

Server Examples

- TypeScript: A full-featured server that is deployed to a hosted environment for easy testing.
  - typescript-server-demo: A simple TypeScript server that demonstrates how to generate UI resources.
  - server: A full-featured TypeScript server that is deployed to a hosted Cloudflare environment for easy testing.
    - HTTP Streaming: https://remote-mcp-server-authless.idosalomon.workers.dev/mcp
    - SSE: https://remote-mcp-server-authless.idosalomon.workers.dev/sse
- Ruby: A barebones demo server that shows how to use the mcp_ui_server and mcp gems together.
- Python: A simple demo server that shows how to use the mcp-ui-server Python package.

Drop those URLs into any MCP-compatible host to see mcp-ui in action. For a supported local inspector, see the ui-inspector.

mcp-ui is supported by a growing number of MCP-compatible clients. Feature support varies by host:

| Host | Rendering | UI Actions |
|---|---|---|
| Postman | ✅ | |
| Goose | ✅ | |
| Smithery | ✅ | ❌ |
| MCPJam | ✅ | ❌ |
| fast-agent | ✅ | ❌ |
| VSCode (TBA) | ? | ? |

Legend:

- ✅: Supported
- ⚠️: Partial Support
- ❌: Not Supported (yet)

Host and user security is one of mcp-ui's primary concerns. In all content types, the remote code is executed in a sandboxed iframe.

- [X] Add online playground

- [X] Expand UI Action API (beyond tool calls)

- [X] Support Web Components

- [X] Support Remote-DOM

- [ ] Add component libraries (in progress)

- [ ] Add SDKs for additional programming languages (in progress; Ruby and Python available)

- [ ] Support additional frontend frameworks

- [ ] Add declarative UI content type

- [ ] Support generative UI?

mcp-ui is a project by Ido Salomon, in collaboration with Liad Yosef.

Contributions, ideas, and bug reports are welcome! See the contribution guidelines to get started.

Apache License 2.0 © The MCP-UI Authors

This project is provided "as is", without warranty of any kind. The mcp-ui authors and contributors shall not be held liable for any damages, losses, or issues arising from the use of this software. Use at your own risk.


Alternative AI tools for mcp-ui

Similar Open Source Tools


lingo.dev

Replexica AI automates software localization end-to-end, producing authentic translations instantly across 60+ languages. Teams can do localization 100x faster with state-of-the-art quality, reaching more paying customers worldwide. The tool offers a GitHub Action for CI/CD automation and supports various formats like JSON, YAML, CSV, and Markdown. With lightning-fast AI localization, auto-updates, native quality translations, developer-friendly CLI, and scalability for startups and enterprise teams, Replexica is a top choice for efficient and effective software localization.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

laravel-mcp-server

Laravel MCP Server is a tool that allows users to build a route-first MCP server in Laravel and Lumen. It provides route-based MCP endpoint registration, streamable HTTP transport, and supports tool, resource, resource template, and prompt registration per endpoint. The server metadata is compatible with route cache, and it requires PHP version 8.2 or higher along with Laravel (Illuminate) version 9.x or Lumen version 9.x. Users can quickly install the tool, register endpoints, and verify functionality. Additionally, the tool offers advanced features like creating tools, resources, resource templates, prompts, notifications, and generating tools from OpenAPI specs.

probe

Probe is an AI-friendly, fully local, semantic code search tool designed to power the next generation of AI coding assistants. It combines the speed of ripgrep with the code-aware parsing of tree-sitter to deliver precise results with complete code blocks, making it perfect for large codebases and AI-driven development workflows. Probe supports various features like AI-friendly code extraction, fully local operation without external APIs, fast scanning of large codebases, accurate code structure parsing, re-rankers and NLP methods for better search results, multi-language support, interactive AI chat mode, and flexibility to run as a CLI tool, MCP server, or interactive AI chat.

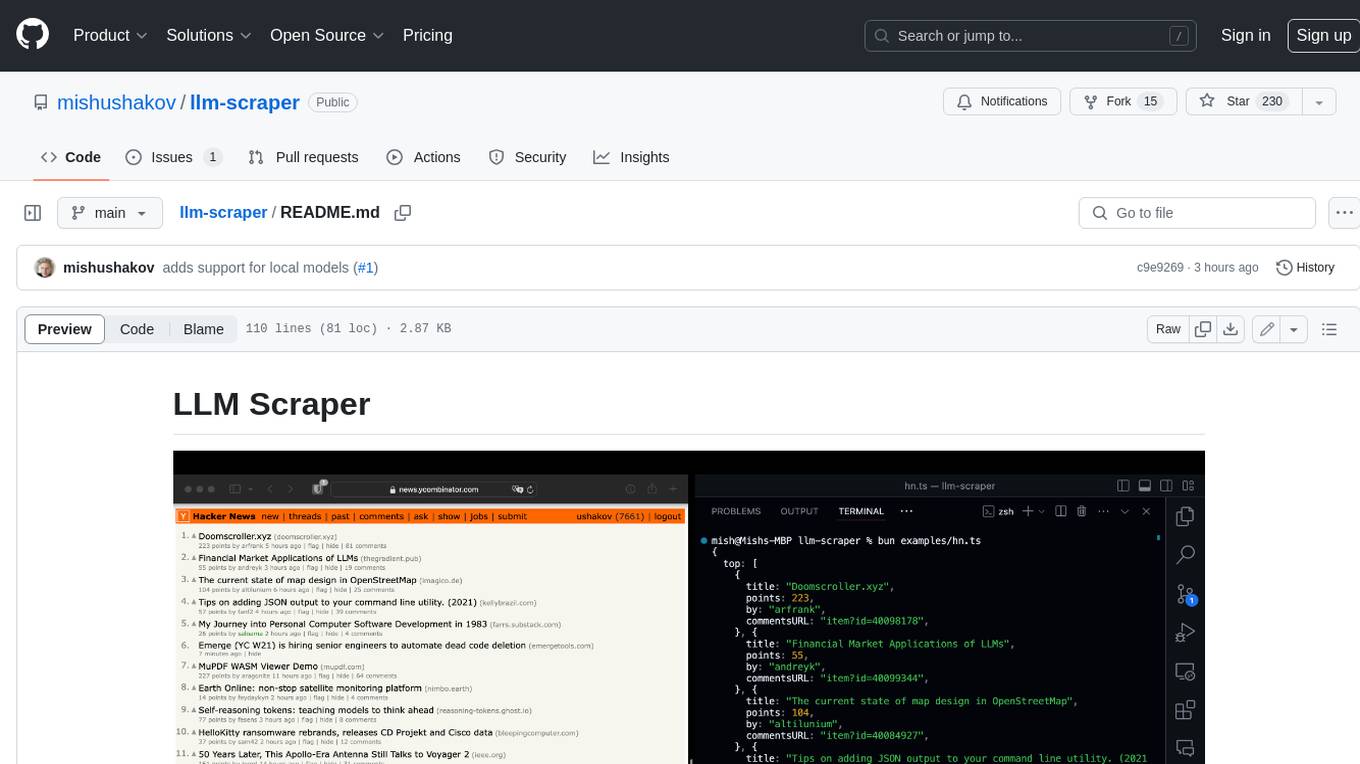

llm-scraper

LLM Scraper is a TypeScript library that allows you to convert any webpages into structured data using LLMs. It supports Local (GGUF), OpenAI, Groq chat models, and schemas defined with Zod. With full type-safety in TypeScript and based on the Playwright framework, it offers streaming when crawling multiple pages and supports four input modes: html, markdown, text, and image.

mdream

Mdream is a lightweight and user-friendly markdown editor designed for developers and writers. It provides a simple and intuitive interface for creating and editing markdown files with real-time preview. The tool offers syntax highlighting, markdown formatting options, and the ability to export files in various formats. Mdream aims to streamline the writing process and enhance productivity for individuals working with markdown documents.

react-native-rag

React Native RAG is a library that enables private, local RAGs to supercharge LLMs with a custom knowledge base. It offers modular and extensible components like `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter`, with multiple integration options. The library supports on-device inference, vector store persistence, and semantic search implementation. Users can easily generate text responses, manage documents, and utilize custom components for advanced use cases.

hud-python

hud-python is a Python library for creating interactive heads-up displays (HUDs) in video games. It provides a simple and flexible way to overlay information on the screen, such as player health, score, and notifications. The library is designed to be easy to use and customizable, allowing game developers to enhance the user experience by adding dynamic elements to their games. With hud-python, developers can create engaging HUDs that improve gameplay and provide important feedback to players.

mcphost

MCPHost is a CLI host application that enables Large Language Models (LLMs) to interact with external tools through the Model Context Protocol (MCP). It acts as a host in the MCP client-server architecture, allowing language models to access external tools and data sources, maintain consistent context across interactions, and execute commands safely. The tool supports interactive conversations with Claude 3.5 Sonnet and Ollama models, multiple concurrent MCP servers, dynamic tool discovery and integration, configurable server locations and arguments, and a consistent command interface across model types.

unity-mcp

MCP for Unity is a tool that acts as a bridge, enabling AI assistants to interact with the Unity Editor via a local MCP Client. Users can instruct their LLM to manage assets, scenes, scripts, and automate tasks within Unity. The tool offers natural language control, powerful tools for asset management, scene manipulation, and automation of workflows. It is extensible and designed to work with various MCP Clients, providing a range of functions for precise text edits, script management, GameObject operations, and more.

superagent

Superagent is an open-source AI assistant framework and API that allows developers to add powerful AI assistants to their applications. These assistants use large language models (LLMs), retrieval augmented generation (RAG), and generative AI to help users with a variety of tasks, including question answering, chatbot development, content generation, data aggregation, and workflow automation. Superagent is backed by Y Combinator and is part of YC W24.

twick

Twick is a comprehensive video editing toolkit built with modern web technologies. It is a monorepo containing multiple packages for video and image manipulation. The repository includes core utilities for media handling, a React-based canvas library for video and image editing, a video visualization and animation toolkit, a React component for video playback and control, timeline management and editing capabilities, a React-based video editor, and example implementations and usage demonstrations. Twick provides detailed API documentation and module information for developers. It offers easy integration with existing projects and allows users to build videos using the Twick Studio. The project follows a comprehensive style guide for naming conventions and code style across all packages.

browser4

Browser4 is a lightning-fast, coroutine-safe browser designed for AI integration with large language models. It offers ultra-fast automation, deep web understanding, and powerful data extraction APIs. Users can automate the browser, extract data at scale, and perform tasks like summarizing products, extracting product details, and finding specific links. The tool is developer-friendly, supports AI-powered automation, and provides advanced features like X-SQL for precise data extraction. It also offers RPA capabilities, browser control, and complex data extraction with X-SQL. Browser4 is suitable for web scraping, data extraction, automation, and AI integration tasks.

llm-metadata

LLM Metadata is a lightweight static API designed for discovering and integrating LLM metadata. It provides a high-throughput friendly, static-by-default interface that serves static JSON via GitHub Pages. The sources for the metadata include models.dev/api.json and contributions from the basellm community. The tool allows for easy rebuilding on change and offers various scripts for compiling TypeScript, building the API, and managing the project. It also supports internationalization for both documentation and API, enabling users to add new languages and localize capability labels and descriptions. The tool follows an auto-update policy based on a configuration file and allows for directory-based overrides for providers and models, facilitating customization and localization of metadata.

agent-prism

AgentPrism is an open source library of React components designed for visualizing traces from AI agents. It helps in turning complex JSON data into clear and visual diagrams for debugging AI agents. By plugging in OpenTelemetry data, users can visualize LLM calls, tool executions, and agent workflows in a hierarchical timeline. The library is currently in alpha release and under active development, with APIs subject to change. Users can try out AgentPrism live at agent-prism.evilmartians.io to visualize and debug their own agent traces.


For similar jobs

Protofy

Protofy is a full-stack, batteries-included low-code enabled web/app and IoT system with an API system and real-time messaging. It is based on Protofy (protoflow + visualui + protolib + protodevices) + Expo + Next.js + Tamagui + Solito + Express + Aedes + Redbird + Many other amazing packages. Protofy can be used to fast prototype Apps, webs, IoT systems, automations, or APIs. It is an ultra-extensible CMS with supercharged capabilities, mobile support, and IoT support (esp32 thanks to esphome).

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

dev-conf-replay

This repository contains information about various IT seminars and developer conferences in South Korea, allowing users to watch replays of past events. It covers a wide range of topics such as AI, big data, cloud, infrastructure, devops, blockchain, mobility, games, security, mobile development, frontend, programming languages, open source, education, and community events. Users can explore upcoming and past events, view related YouTube channels, and access additional resources like free programming ebooks and data structures and algorithms tutorials.

OpenDevin

OpenDevin is an open-source project aiming to replicate Devin, an autonomous AI software engineer capable of executing complex engineering tasks and collaborating actively with users on software development projects. The project aspires to enhance and innovate upon Devin through the power of the open-source community. Users can contribute to the project by developing core functionalities, frontend interface, or sandboxing solutions, participating in research and evaluation of LLMs in software engineering, and providing feedback and testing on the OpenDevin toolset.

polyfire-js

Polyfire is an all-in-one managed backend for AI apps that allows users to build AI applications directly from the frontend, eliminating the need for a separate backend. It simplifies the process by providing most backend services in just a few lines of code. With Polyfire, users can easily create chatbots, transcribe audio files, generate simple text, manage long-term memory, and generate images. The tool also offers starter guides and tutorials to help users get started quickly and efficiently.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

aimeos-laravel

Aimeos Laravel is a professional, full-featured, and ultra-fast Laravel ecommerce package that can be easily integrated into existing Laravel applications. It offers a wide range of features including multi-vendor, multi-channel, and multi-warehouse support, fast performance, support for various product types, subscriptions with recurring payments, multiple payment gateways, full RTL support, flexible pricing options, admin backend, REST and GraphQL APIs, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. The package is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and online shops with millions of vendors.

llm-ui

llm-ui is a React library designed for LLMs, providing features such as removing broken markdown syntax, adding custom components to LLM output, smoothing out pauses in streamed output, rendering at native frame rate, supporting code blocks for every language with Shiki, and being headless to allow for custom styles. The library aims to enhance the user experience and flexibility when working with LLMs.