docutranslate

Document (Novel, Thesis, Subtitle) Translation Tool (Supports pdf/word/excel/json/epub/srt...)

Stars: 87

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it suitable for businesses and individuals who need quick, accurate translations. It uses large language models to translate while preserving the original document's formatting. With its web interface, Docutranslate simplifies the translation process and saves users time. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate aims to cover your document translation needs.

README:

A lightweight local file translation tool based on Large Language Models

- ✅ Multiple Format Support: Translates various files including `pdf`, `docx`, `xlsx`, `md`, `txt`, `json`, `epub`, `srt`, `ass`, and more.
- ✅ Automatic Glossary Generation: Supports automatic generation of glossaries for term alignment.
- ✅ PDF Table, Formula, and Code Recognition: Recognizes and translates tables, formulas, and code often found in academic papers, powered by the `docling` and `mineru` PDF parsing engines.
- ✅ JSON Translation: Supports specifying values to be translated in JSON using JSON paths (following `jsonpath-ng` syntax).
- ✅ Word/Excel Format Preservation: Translates `docx` and `xlsx` files while preserving their original formatting (`doc` and `xls` files are not yet supported).
- ✅ Multi-AI Platform Support: Compatible with most AI platforms, enabling high-performance, concurrent AI translation with custom prompts.
- ✅ Asynchronous Support: Designed for high-performance scenarios with full asynchronous support, offering service interfaces for parallel tasks.
- ✅ LAN and Multi-user Support: Can be used by multiple people simultaneously on a local area network.
- ✅ Interactive Web Interface: Provides an out-of-the-box Web UI and RESTful API for easy integration and use.
- ✅ Small, Multi-platform Standalone Packages: Windows and Mac standalone packages under 40MB (for versions not using the `docling` local PDF parser).

When translating

QQ Discussion Group: 1047781902

For users who want to get started quickly, we provide all-in-one packages on GitHub Releases. Simply download, unzip, and enter your AI platform API Key to begin.

- `DocuTranslate`: Standard version. It uses the online `minerU` engine to parse PDF documents. Choose this version if you don't need local PDF parsing (recommended).
- `DocuTranslate_full`: Full version. It includes the built-in `docling` local PDF parsing engine. Choose this version if you need local PDF parsing.

# Basic installation

pip install docutranslate

# To use docling for local PDF parsing

pip install "docutranslate[docling]"

# Initialize environment

uv init

# Basic installation

uv add docutranslate

# Install docling extension

uv add "docutranslate[docling]"

# Initialize environment

git clone https://github.com/xunbu/docutranslate.git

cd docutranslate

uv sync
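If you aren't sure whether the `[docling]` extra was installed in the current environment, you can check with the standard library and choose a `convert_engine` value to match. This is a minimal sketch. It assumes the extra makes the `docling` package importable, and `choose_convert_engine` is a hypothetical helper that is not part of DocuTranslate:

```python
import importlib.util


def docling_available() -> bool:
    """Return True if the optional `docling` package can be imported."""
    # find_spec() returns None when no top-level module with that name exists
    return importlib.util.find_spec("docling") is not None


def choose_convert_engine() -> str:
    """Pick a value for convert_engine based on what is installed."""
    # "docling" parses locally; "mineru" is the online engine and needs a token
    return "docling" if docling_available() else "mineru"


print("convert_engine =", choose_convert_engine())
```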

The core of the new DocuTranslate is the Workflow. Each workflow is a complete, end-to-end translation pipeline designed for a specific file type. Instead of interacting with a single large class, you select and configure a workflow based on your file type.

The basic usage flow is as follows:

1. Select a Workflow: Choose a workflow based on your input file type (e.g., PDF/Word or TXT), such as `MarkdownBasedWorkflow` or `TXTWorkflow`.
2. Build Configuration: Create the corresponding configuration object for the selected workflow (e.g., `MarkdownBasedWorkflowConfig`). This object contains all necessary sub-configurations, such as:
   - Converter Config: Defines how to convert the original file (like a PDF) to Markdown.
   - Translator Config: Defines which LLM, API key, target language, etc., to use.
   - Exporter Config: Defines specific options for the output format (like HTML).
3. Instantiate the Workflow: Create an instance of the workflow using the configuration object.
4. Execute Translation: Call the workflow's `.read_*()` and `.translate()`/`.translate_async()` methods.
5. Export/Save Results: Call the `.export_to_*()` or `.save_as_*()` methods to get or save the translation results.

| Workflow | Use Case | Input Formats | Output Formats | Core Config Class |
|---|---|---|---|---|
| `MarkdownBasedWorkflow` | Processes rich text documents like PDF, Word, images. Flow: File -> Markdown -> Translate -> Export. | .pdf, .docx, .md, .png, .jpg, etc. | .md, .zip, .html | `MarkdownBasedWorkflowConfig` |
| `TXTWorkflow` | Processes plain text documents. Flow: txt -> Translate -> Export. | .txt and other plain text formats | .txt, .html | `TXTWorkflowConfig` |
| `JsonWorkflow` | Processes JSON files. Flow: json -> Translate -> Export. | .json | .json, .html | `JsonWorkflowConfig` |
| `DocxWorkflow` | Processes docx files. Flow: docx -> Translate -> Export. | .docx | .docx, .html | `DocxWorkflowConfig` |
| `XlsxWorkflow` | Processes xlsx files. Flow: xlsx -> Translate -> Export. | .xlsx, .csv | .xlsx, .html | `XlsxWorkflowConfig` |
| `SrtWorkflow` | Processes srt files. Flow: srt -> Translate -> Export. | .srt | .srt, .html | `SrtWorkflowConfig` |
| `EpubWorkflow` | Processes epub files. Flow: epub -> Translate -> Export. | .epub | .epub, .html | `EpubWorkflowConfig` |
| `HtmlWorkflow` | Processes html files. Flow: html -> Translate -> Export. | .html, .htm | .html | `HtmlWorkflowConfig` |
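To apply the workflow table in a batch script, you only need a lookup from file extension to workflow name. This minimal sketch returns class names as strings, so it runs without DocuTranslate installed. `pick_workflow` and `WORKFLOW_BY_EXT` are hypothetical names. The mapping covers only the extensions the table lists, and for `.docx` it assumes you want the format-preserving `DocxWorkflow`:

```python
from pathlib import Path

# Extension -> workflow class name, transcribed from the workflow table.
# ".docx" is accepted by both MarkdownBasedWorkflow and DocxWorkflow; this
# sketch prefers DocxWorkflow because it preserves the original formatting.
WORKFLOW_BY_EXT = {
    ".pdf": "MarkdownBasedWorkflow",
    ".md": "MarkdownBasedWorkflow",
    ".png": "MarkdownBasedWorkflow",
    ".jpg": "MarkdownBasedWorkflow",
    ".txt": "TXTWorkflow",
    ".json": "JsonWorkflow",
    ".docx": "DocxWorkflow",
    ".xlsx": "XlsxWorkflow",
    ".csv": "XlsxWorkflow",
    ".srt": "SrtWorkflow",
    ".epub": "EpubWorkflow",
    ".html": "HtmlWorkflow",
    ".htm": "HtmlWorkflow",
}


def pick_workflow(path: str) -> str:
    """Return the workflow class name suggested for a file path."""
    ext = Path(path).suffix.lower()  # case-insensitive: ".PDF" -> ".pdf"
    if ext not in WORKFLOW_BY_EXT:
        raise ValueError(f"no workflow mapped for extension {ext!r}")
    return WORKFLOW_BY_EXT[ext]


print(pick_workflow("thesis.PDF"))     # MarkdownBasedWorkflow
print(pick_workflow("episode01.srt"))  # SrtWorkflow
```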

You can export to PDF format in the interactive interface.

For ease of use, DocuTranslate provides a full-featured Web interface and RESTful API.

Start the service:

# Start the service, listening on port 8010 by default

docutranslate -i

# Start on a specific port

docutranslate -i -p 8011

# You can also specify the port via an environment variable

export DOCUTRANSLATE_PORT=8011

docutranslate -i

- Interactive Interface: After starting the service, visit http://127.0.0.1:8010 (or your specified port) in your browser.
- API Documentation: The complete API documentation (Swagger UI) is available at http://127.0.0.1:8010/docs.
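With the service running, you can list its REST endpoints from a script instead of browsing Swagger UI. This is a sketch under two assumptions: the service serves its OpenAPI schema at `/openapi.json`, which is the FastAPI default behind a `/docs` Swagger page, and the helper names are hypothetical. A port passed with `-p` is not visible to `service_base_url`, so pass it explicitly:

```python
from __future__ import annotations

import json
import os
import urllib.request
from typing import Optional

DEFAULT_PORT = 8010  # default port of `docutranslate -i`
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def service_base_url(host: str = "127.0.0.1", port: Optional[int] = None) -> str:
    """Base URL of a locally running service; falls back to DOCUTRANSLATE_PORT."""
    if port is None:
        port = int(os.environ.get("DOCUTRANSLATE_PORT", DEFAULT_PORT))
    return f"http://{host}:{port}"


def fetch_openapi(base_url: str) -> dict:
    """Download the OpenAPI schema (assumed to live at /openapi.json)."""
    with urllib.request.urlopen(f"{base_url}/openapi.json", timeout=5) as resp:
        return json.load(resp)


def list_endpoints(spec: dict) -> list[str]:
    """Flatten an OpenAPI 'paths' object into sorted 'METHOD /path' strings."""
    return sorted(
        f"{method.upper()} {path}"
        for path, item in spec.get("paths", {}).items()
        for method in item
        if method in HTTP_METHODS  # skip path-level keys such as "parameters"
    )
```

Usage while the service is up: `print("\n".join(list_endpoints(fetch_openapi(service_base_url()))))`.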

Translating a PDF is the most common use case. The example below uses the minerU engine to convert the PDF to Markdown and then uses an LLM to translate it. It uses the asynchronous method.

import asyncio

from docutranslate.workflow.md_based_workflow import MarkdownBasedWorkflow, MarkdownBasedWorkflowConfig

from docutranslate.converter.x2md.converter_mineru import ConverterMineruConfig

from docutranslate.translator.ai_translator.md_translator import MDTranslatorConfig

from docutranslate.exporter.md.md2html_exporter import MD2HTMLExporterConfig

async def main():
    # 1. Build translator configuration
    translator_config = MDTranslatorConfig(
        base_url="https://open.bigmodel.cn/api/paas/v4",  # AI Platform Base URL
        api_key="YOUR_ZHIPU_API_KEY",  # AI Platform API Key
        model_id="glm-4-air",  # Model ID
        to_lang="English",  # Target language
        chunk_size=3000,  # Text chunk size
        concurrent=10,  # Concurrency level
        # glossary_generate_enable=True,  # Enable automatic glossary generation
        # glossary_dict={"Jobs": "乔布斯"},  # Pass in a glossary
        # system_proxy_enable=True,  # Enable system proxy
    )

    # 2. Build converter configuration (using minerU)
    converter_config = ConverterMineruConfig(
        mineru_token="YOUR_MINERU_TOKEN",  # Your minerU Token
        formula_ocr=True,  # Enable formula recognition
    )

    # 3. Build main workflow configuration
    workflow_config = MarkdownBasedWorkflowConfig(
        convert_engine="mineru",  # Specify the parsing engine
        converter_config=converter_config,  # Pass the converter config
        translator_config=translator_config,  # Pass the translator config
        html_exporter_config=MD2HTMLExporterConfig(cdn=True),  # HTML export configuration
    )

    # 4. Instantiate the workflow
    workflow = MarkdownBasedWorkflow(config=workflow_config)

    # 5. Read the file and execute translation
    print("Reading and translating the file...")
    workflow.read_path("path/to/your/document.pdf")
    await workflow.translate_async()
    # Or use the synchronous method
    # workflow.translate()
    print("Translation complete!")

    # 6. Save the results
    workflow.save_as_html(name="translated_document.html")
    workflow.save_as_markdown_zip(name="translated_document.zip")
    workflow.save_as_markdown(name="translated_document.md")  # Markdown with embedded images
    print("Files saved to the ./output folder.")

    # Or get the content strings directly
    html_content = workflow.export_to_html()
    markdown_content = workflow.export_to_markdown()
    # print(html_content)


if __name__ == "__main__":
    asyncio.run(main())

For plain text files, the process is simpler because no document parsing (conversion) step is needed. This example also uses the asynchronous method.

import asyncio

from docutranslate.workflow.txt_workflow import TXTWorkflow, TXTWorkflowConfig

from docutranslate.translator.ai_translator.txt_translator import TXTTranslatorConfig

from docutranslate.exporter.txt.txt2html_exporter import TXT2HTMLExporterConfig

async def main():
    # 1. Build translator configuration
    translator_config = TXTTranslatorConfig(
        base_url="https://api.openai.com/v1/",
        api_key="YOUR_OPENAI_API_KEY",
        model_id="gpt-4o",
        to_lang="Chinese",
    )

    # 2. Build main workflow configuration
    workflow_config = TXTWorkflowConfig(
        translator_config=translator_config,
        html_exporter_config=TXT2HTMLExporterConfig(cdn=True),
    )

    # 3. Instantiate the workflow
    workflow = TXTWorkflow(config=workflow_config)

    # 4. Read the file and execute translation
    workflow.read_path("path/to/your/notes.txt")
    await workflow.translate_async()
    # Or use the synchronous method
    # workflow.translate()

    # 5. Save the result
    workflow.save_as_txt(name="translated_notes.txt")
    print("TXT file saved.")

    # You can also export the translated plain text
    text = workflow.export_to_txt()


if __name__ == "__main__":
    asyncio.run(main())

The following example translates a JSON file using the asynchronous method. The `json_paths` parameter of `JsonTranslatorConfig` lists the JSON paths to translate, using `jsonpath-ng` syntax. Only values matching these paths are translated.
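To see which keys the example paths `"$.*"` and `"$.name"` select, the toy below evaluates just those two path forms against a flat JSON object. It is a hypothetical helper, deliberately much smaller than `jsonpath-ng`. To test real paths, use `jsonpath_ng.parse(path).find(data)`. For a flat object, the wildcard already covers `"$.name"`, so listing both selects nothing extra:

```python
from __future__ import annotations


def toy_selected_keys(data: dict, json_paths: list[str]) -> set[str]:
    """Top-level keys selected by '$.*' or '$.<name>' paths.

    A toy covering only these two path forms; real matching is done by
    jsonpath-ng, which supports far more syntax.
    """
    keys: set[str] = set()
    for path in json_paths:
        if path == "$.*":
            keys.update(data)  # wildcard: every top-level key
        elif path.startswith("$.") and path[2:].isidentifier():
            if path[2:] in data:
                keys.add(path[2:])  # a single named top-level key
        else:
            raise NotImplementedError(f"toy does not handle {path!r}")
    return keys


doc = {"name": "Apple", "description": "A crisp fruit", "color": "red"}
print(sorted(toy_selected_keys(doc, ["$.name"])))         # ['name']
print(sorted(toy_selected_keys(doc, ["$.*", "$.name"])))  # ['color', 'description', 'name']
```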

import asyncio

from docutranslate.exporter.js.json2html_exporter import Json2HTMLExporterConfig

from docutranslate.translator.ai_translator.json_translator import JsonTranslatorConfig

from docutranslate.workflow.json_workflow import JsonWorkflowConfig, JsonWorkflow

async def main():
    # 1. Build translator configuration
    translator_config = JsonTranslatorConfig(
        base_url="https://api.openai.com/v1/",
        api_key="YOUR_OPENAI_API_KEY",
        model_id="gpt-4o",
        to_lang="Chinese",
        json_paths=["$.*", "$.name"],  # jsonpath-ng syntax; values at matching paths will be translated
    )

    # 2. Build main workflow configuration
    workflow_config = JsonWorkflowConfig(
        translator_config=translator_config,
        html_exporter_config=Json2HTMLExporterConfig(cdn=True),
    )

    # 3. Instantiate the workflow
    workflow = JsonWorkflow(config=workflow_config)

    # 4. Read the file and execute translation
    workflow.read_path("path/to/your/notes.json")
    await workflow.translate_async()
    # Or use the synchronous method
    # workflow.translate()

    # 5. Save the result
    workflow.save_as_json(name="translated_notes.json")
    print("JSON file saved.")

    # You can also export the translated JSON text
    json_text = workflow.export_to_json()


if __name__ == "__main__":
    asyncio.run(main())

The following example translates a Word (`.docx`) file using the asynchronous method.
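The Word (`.docx`) and Excel (`.xlsx`) examples both set `insert_mode` and `separator`. The hypothetical helper below shows one way to read the three modes for a single text run. It assumes "append" puts the translation after the original and "prepend" puts it before, with `separator` between them. It is a sketch, not DocuTranslate's code:

```python
def combine(original: str, translated: str,
            insert_mode: str = "replace", separator: str = "\n") -> str:
    """Hypothetical illustration of the three insert_mode options."""
    if insert_mode == "replace":
        # Only the translation is kept
        return translated
    if insert_mode == "append":
        # Assumed: translation goes after the original text
        return original + separator + translated
    if insert_mode == "prepend":
        # Assumed: translation goes before the original text
        return translated + separator + original
    raise ValueError(f"unknown insert_mode: {insert_mode!r}")


for mode in ("replace", "append", "prepend"):
    print(mode, repr(combine("Hello", "Bonjour", mode)))
```

The bilingual modes keep the source text next to its translation, which helps with review. "replace" produces a clean target-language document.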

import asyncio

from docutranslate.exporter.docx.docx2html_exporter import Docx2HTMLExporterConfig

from docutranslate.translator.ai_translator.docx_translator import DocxTranslatorConfig

from docutranslate.workflow.docx_workflow import DocxWorkflowConfig, DocxWorkflow

async def main():
    # 1. Build translator configuration
    translator_config = DocxTranslatorConfig(
        base_url="https://api.openai.com/v1/",
        api_key="YOUR_OPENAI_API_KEY",
        model_id="gpt-4o",
        to_lang="Chinese",
        insert_mode="replace",  # Options: "replace", "append", "prepend"
        separator="\n",  # Separator used in "append" and "prepend" modes
    )

    # 2. Build main workflow configuration
    workflow_config = DocxWorkflowConfig(
        translator_config=translator_config,
        html_exporter_config=Docx2HTMLExporterConfig(cdn=True),
    )

    # 3. Instantiate the workflow
    workflow = DocxWorkflow(config=workflow_config)

    # 4. Read the file and execute translation
    workflow.read_path("path/to/your/notes.docx")
    await workflow.translate_async()
    # Or use the synchronous method
    # workflow.translate()

    # 5. Save the result
    workflow.save_as_docx(name="translated_notes.docx")
    print("DOCX file saved.")

    # You can also export the translated DOCX as bytes
    docx_bytes = workflow.export_to_docx()


if __name__ == "__main__":
    asyncio.run(main())

The following example translates an Excel (`.xlsx`) file using the asynchronous method.

import asyncio

from docutranslate.exporter.xlsx.xlsx2html_exporter import Xlsx2HTMLExporterConfig

from docutranslate.translator.ai_translator.xlsx_translator import XlsxTranslatorConfig

from docutranslate.workflow.xlsx_workflow import XlsxWorkflowConfig, XlsxWorkflow

async def main():
    # 1. Build translator configuration
    translator_config = XlsxTranslatorConfig(
        base_url="https://api.openai.com/v1/",
        api_key="YOUR_OPENAI_API_KEY",
        model_id="gpt-4o",
        to_lang="Chinese",
        insert_mode="replace",  # Options: "replace", "append", "prepend"
        separator="\n",  # Separator used in "append" and "prepend" modes
    )

    # 2. Build main workflow configuration
    workflow_config = XlsxWorkflowConfig(
        translator_config=translator_config,
        html_exporter_config=Xlsx2HTMLExporterConfig(cdn=True),
    )

    # 3. Instantiate the workflow
    workflow = XlsxWorkflow(config=workflow_config)

    # 4. Read the file and execute translation
    workflow.read_path("path/to/your/notes.xlsx")
    await workflow.translate_async()
    # Or use the synchronous method
    # workflow.translate()

    # 5. Save the result
    workflow.save_as_xlsx(name="translated_notes.xlsx")
    print("XLSX file saved.")

    # You can also export the translated XLSX as bytes
    xlsx_bytes = workflow.export_to_xlsx()


if __name__ == "__main__":
    asyncio.run(main())

The translation feature relies on large language models. You need to obtain a `base_url`, `api_key`, and `model_id` from the respective AI platform.

Recommended models: Volcengine's `doubao-seed-1-6-flash` and `doubao-seed-1-6` series, Zhipu's `glm-4-flash`, Alibaba Cloud's `qwen-plus` and `qwen-flash`, Deepseek's `deepseek-chat`, etc.
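Platforms differ in rate limits and context length, so the `chunk_size` and `concurrent` settings from the PDF example are worth tuning per model. The sketch below shows the general pattern those two settings suggest. It packs paragraphs into chunks of at most about `chunk_size` characters, then allows at most `concurrent` requests in flight using an `asyncio.Semaphore`. This is an illustration under assumptions and not DocuTranslate's chunker, which may count tokens or split along Markdown structure. `fake_translate` is a hypothetical stand-in for an LLM call:

```python
from __future__ import annotations

import asyncio


def split_into_chunks(text: str, chunk_size: int) -> list[str]:
    """Greedily pack blank-line-separated paragraphs into chunks of at most
    chunk_size characters; an oversized paragraph becomes its own chunk."""
    chunks: list[str] = []
    current = ""
    for para in text.split("\n\n"):
        candidate = f"{current}\n\n{para}" if current else para
        if current and len(candidate) > chunk_size:
            chunks.append(current)  # current chunk is full, start a new one
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


async def fake_translate(chunk: str) -> str:
    """Hypothetical stand-in for one LLM request."""
    await asyncio.sleep(0.01)
    return chunk.upper()


async def translate_all(text: str, chunk_size: int = 3000, concurrent: int = 10) -> str:
    semaphore = asyncio.Semaphore(concurrent)  # caps requests in flight

    async def worker(chunk: str) -> str:
        async with semaphore:
            return await fake_translate(chunk)

    chunks = split_into_chunks(text, chunk_size)
    # gather() returns results in the same order as the input chunks
    results = await asyncio.gather(*(worker(c) for c in chunks))
    return "\n\n".join(results)


print(asyncio.run(translate_all("first paragraph\n\nsecond paragraph", chunk_size=20)))
```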

| Platform Name | Get API Key | Base URL |
|---|---|---|
| ollama | | http://127.0.0.1:11434/v1 |
| lm studio | | http://127.0.0.1:1234/v1 |
| openrouter | Click to get | https://openrouter.ai/api/v1 |
| openai | Click to get | https://api.openai.com/v1/ |
| gemini | Click to get | https://generativelanguage.googleapis.com/v1beta/openai/ |
| deepseek | Click to get | https://api.deepseek.com/v1 |
| Zhipu AI (智谱ai) | Click to get | https://open.bigmodel.cn/api/paas/v4 |
| Tencent Hunyuan (腾讯混元) | Click to get | https://api.hunyuan.cloud.tencent.com/v1 |
| Alibaba Cloud Bailian (阿里云百炼) | Click to get | https://dashscope.aliyuncs.com/compatible-mode/v1 |
| Volcengine (火山引擎) | Click to get | https://ark.cn-beijing.volces.com/api/v3 |
| SiliconFlow (硅基流动) | Click to get | https://api.siliconflow.cn/v1 |
| DMXAPI | Click to get | https://www.dmxapi.cn/v1 |
| Juguang AI (聚光AI) | Click to get | https://ai.juguang.chat/v1 |
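Before starting a long job, you may want to confirm that a `base_url`, `api_key`, and `model_id` combination works with a single request. This standard-library sketch assumes the platform exposes the OpenAI-style `<base_url>/chat/completions` endpoint. The Base URLs in the table follow that convention. The prompt and helper names are hypothetical, and `send` makes a real, billable call:

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model_id: str,
                       text: str, to_lang: str) -> urllib.request.Request:
    """Build one OpenAI-style chat completion request (not DocuTranslate's own)."""
    # Strip a trailing slash so ".../v1/" and ".../v1" give the same URL
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model_id,
        "messages": [
            # Hypothetical prompt for a smoke test only
            {"role": "system", "content": f"Translate the user's message into {to_lang}."},
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the first reply's text."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


req = build_chat_request("https://api.openai.com/v1/", "YOUR_OPENAI_API_KEY",
                         "gpt-4o", "Hello, world", "Chinese")
print(req.full_url)  # https://api.openai.com/v1/chat/completions
```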

If you choose mineru as your document parsing engine (convert_engine="mineru"), you need to apply for a free token.

- Visit the minerU official website to register and apply for an API.

- Create a new API Token in the API Token Management interface.

Note: minerU Tokens are valid for 14 days. Please create a new one after expiration.
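If you run scheduled jobs, you can track the 14-day lifetime so that an expired token doesn't fail a batch partway through. This is a tiny date-arithmetic sketch. It assumes the token stops working 14 days after creation, and the helper names are hypothetical:

```python
from datetime import date, timedelta
from typing import Optional

TOKEN_LIFETIME = timedelta(days=14)  # minerU token validity stated above


def token_expires_on(created: date) -> date:
    """Date from which a token created on `created` is assumed unusable."""
    return created + TOKEN_LIFETIME


def needs_new_token(created: date, today: Optional[date] = None) -> bool:
    # Assumption: the token is unusable from `created + 14 days` onward
    today = today or date.today()
    return today >= token_expires_on(created)


print(token_expires_on(date(2025, 3, 1)))  # 2025-03-15
```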

If you choose docling as your document parsing engine (convert_engine="docling"), it will download the required models from Hugging Face upon first use.

A better option is to download `docling_artifact.zip` from GitHub Releases and extract it to your working directory.

Solutions for network issues when downloading docling models:

1. Set a Hugging Face mirror (Recommended):
   - Method A (Environment Variable): Set the system environment variable `HF_ENDPOINT=https://hf-mirror.com` and restart your IDE or terminal.
   - Method B (Set in code): Add the following code at the beginning of your Python script, before importing docutranslate or docling.

import os

os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com'  # must be set before Hugging Face libraries are imported

2. Offline Usage (Download the model package in advance):
   - Download `docling_artifact.zip` from GitHub Releases.
   - Extract it into your project directory.
   - Specify the model path in your configuration (if the model is not in the same directory as the script):

from docutranslate.converter.x2md.converter_docling import ConverterDoclingConfig

converter_config = ConverterDoclingConfig(
    artifact="./docling_artifact",  # Path to the extracted folder
    code_ocr=True,
    formula_ocr=True,
)

Q: Why is the translated text still in the original language?

A: Check the logs for errors. The usual causes are an unpaid balance on the AI platform account or network issues (check whether you need to enable the system proxy).

Q: Port 8010 is already in use. What should I do?

A: Use the -p parameter to specify a new port, or set the DOCUTRANSLATE_PORT environment variable.
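To find a free port for `-p`, you can probe ports with the standard library. The helper names in this small sketch are hypothetical. It checks `127.0.0.1` by default. A port can still be taken between the check and the moment the service starts, so treat the result as a suggestion:

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """True if nothing on this machine is currently bound to host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))  # raises OSError if the port is taken
        except OSError:
            return False
    return True


def first_free_port(start: int = 8010, attempts: int = 20) -> int:
    """Scan upward from the default port and return the first free one."""
    for port in range(start, start + attempts):
        if port_is_free(port):
            return port
    raise RuntimeError(f"no free port in {start}-{start + attempts - 1}")


print("Try: docutranslate -i -p", first_free_port())
```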

Q: Does it support translating scanned PDFs?

A: Yes. Please use the mineru parsing engine, which has powerful OCR capabilities.

Q: Why is the first PDF translation very slow?

A: If you are using the docling engine, it needs to download models from Hugging Face on its first run. Please refer to the "Network Issues Solutions" section above to speed up this process.

Q: How can I use it in an intranet (offline) environment?

A: It can run fully offline as long as the following conditions are met:

- Local LLM: Deploy a language model locally using tools like Ollama or LM Studio, and fill in the local model's `base_url` in `TranslatorConfig`.
- Local PDF Parsing Engine (only needed for parsing PDFs): Use the `docling` engine and download the model package in advance as described in the "Offline Usage" section above.

Q: How does the PDF parsing cache mechanism work?

A: MarkdownBasedWorkflow automatically caches the results of document parsing (file-to-Markdown conversion) to avoid repetitive, time-consuming parsing. The cache is stored in memory by default and records the last 10 parses. You can change the cache size using the DOCUTRANSLATE_CACHE_NUM environment variable.
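The cache described above (in memory, keeping the last 10 parses, with the size set by `DOCUTRANSLATE_CACHE_NUM`) behaves like a bounded least-recently-used cache. This is a minimal sketch of that idea using `collections.OrderedDict`. The LRU eviction policy and the SHA-256 key over the file bytes are assumptions, since the real cache may also key on the engine and its options. `ParseCache` is a hypothetical name:

```python
import hashlib
import os
from collections import OrderedDict
from typing import Callable, Optional


class ParseCache:
    """Hypothetical in-memory LRU cache of file-to-Markdown results."""

    def __init__(self, maxsize: Optional[int] = None) -> None:
        if maxsize is None:
            # Same knob and default as described above
            maxsize = int(os.environ.get("DOCUTRANSLATE_CACHE_NUM", "10"))
        self.maxsize = maxsize
        self._entries: "OrderedDict[str, str]" = OrderedDict()

    def get_or_parse(self, content: bytes, parse: Callable[[bytes], str]) -> str:
        # Assumption: identical file bytes give an identical parse result
        key = hashlib.sha256(content).hexdigest()
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        markdown = parse(content)  # the slow step the cache avoids
        self._entries[key] = markdown
        if len(self._entries) > self.maxsize:
            self._entries.popitem(last=False)  # evict the least recently used
        return markdown
```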

Q: How can I make the software use a proxy?

A: By default, the software does not use the system proxy. You can enable it by setting system_proxy_enable=True in TranslatorConfig.
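To decide whether `system_proxy_enable=True` is likely to matter on your machine, you can check which proxies Python itself detects. `urllib.request.getproxies()` reads `*_proxy` environment variables and, on Windows and macOS, the OS proxy settings. Whether DocuTranslate reads exactly the same sources is an assumption, and the helper names are hypothetical:

```python
import urllib.request


def detected_proxies() -> dict:
    """Proxies Python can see: *_proxy environment variables, plus the
    OS proxy settings on Windows and macOS."""
    return urllib.request.getproxies()


def looks_like_proxy_needed(proxies=None) -> bool:
    """Heuristic: True if an http(s) or catch-all proxy is configured."""
    if proxies is None:
        proxies = detected_proxies()
    return any(proxies.get(scheme) for scheme in ("https", "http", "all"))


print("system proxy detected:", looks_like_proxy_needed())
```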

Your support is welcome! Please mention the reason for your donation in the memo.


Alternative AI tools for docutranslate

Similar Open Source Tools


RepairAgent

RepairAgent is an autonomous LLM-based agent for automated program repair targeting the Defects4J benchmark. It uses an LLM-driven loop to localize, analyze, and fix Java bugs. The tool requires Docker, VS Code with Dev Containers extension, OpenAI API key, disk space of ~40 GB, and internet access. Users can get started with RepairAgent using either VS Code Dev Container or Docker Image. Running RepairAgent involves checking out the buggy project version, autonomous bug analysis, fix candidate generation, and testing against the project's test suite. Users can configure hyperparameters for budget control, repetition handling, commands limit, and external fix strategy. The tool provides output structure, experiment overview, individual analysis scripts, and data on fixed bugs from the Defects4J dataset.

tokscale

Tokscale is a high-performance CLI tool and visualization dashboard for tracking token usage and costs across multiple AI coding agents. It helps monitor and analyze token consumption from various AI coding tools, providing real-time pricing calculations using LiteLLM's pricing data. Inspired by the Kardashev scale, Tokscale measures token consumption as users scale the ranks of AI-augmented development. It offers interactive TUI mode, multi-platform support, real-time pricing, detailed breakdowns, web visualization, flexible filtering, and social platform features.

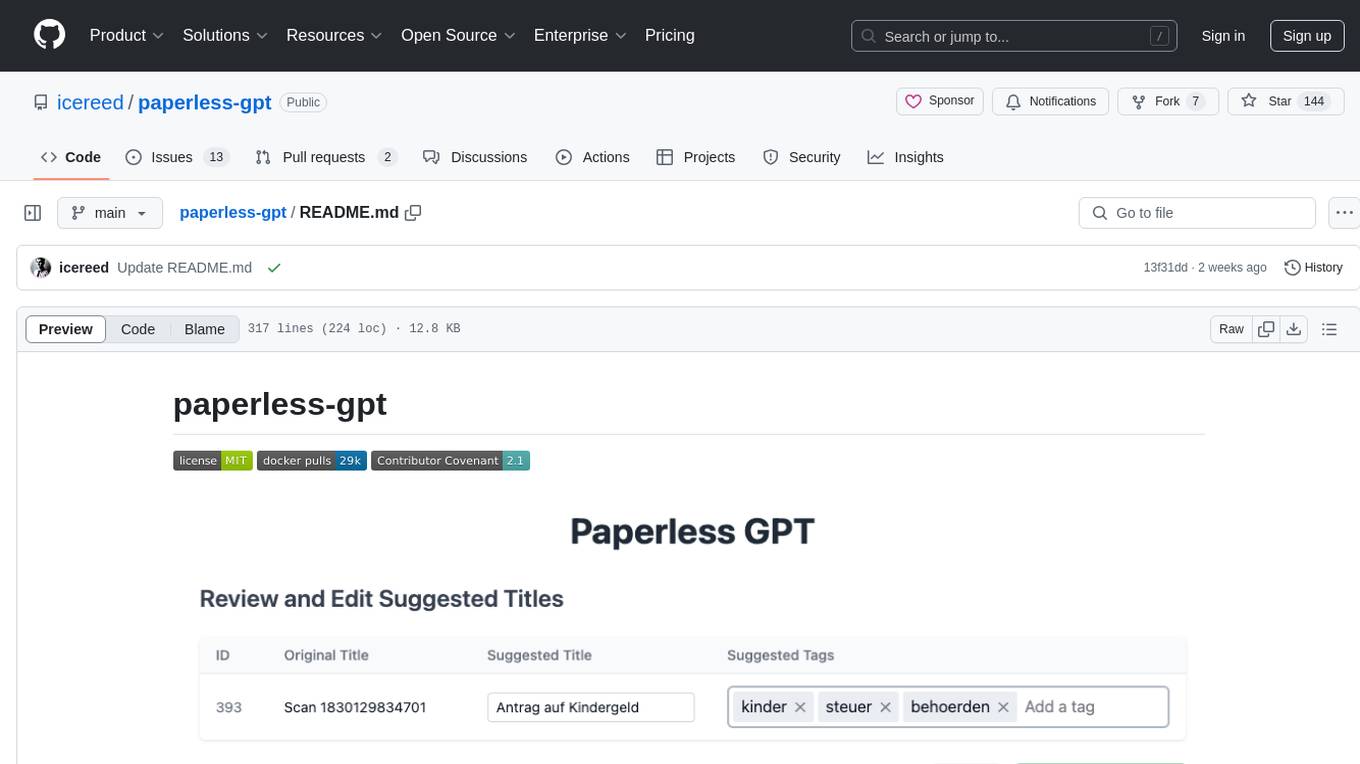

paperless-gpt

paperless-gpt is a tool designed to generate accurate and meaningful document titles and tags for paperless-ngx using Large Language Models (LLMs). It supports multiple LLM providers, including OpenAI and Ollama. With paperless-gpt, you can streamline your document management by automatically suggesting appropriate titles and tags based on the content of your scanned documents. The tool offers features like multiple LLM support, customizable prompts, easy integration with paperless-ngx, user-friendly interface for reviewing and applying suggestions, dockerized deployment, automatic document processing, and an experimental OCR feature.

ruler

Ruler is a tool designed to centralize AI coding assistant instructions, providing a single source of truth for managing instructions across multiple AI coding tools. It helps in avoiding inconsistent guidance, duplicated effort, context drift, onboarding friction, and complex project structures by automatically distributing instructions to the right configuration files. With support for nested rule loading, Ruler can handle complex project structures with context-specific instructions for different components. It offers features like centralised rule management, nested rule loading, automatic distribution, targeted agent configuration, MCP server propagation, .gitignore automation, and a simple CLI for easy configuration management.

lingo.dev

Replexica AI automates software localization end-to-end, producing authentic translations instantly across 60+ languages. Teams can do localization 100x faster with state-of-the-art quality, reaching more paying customers worldwide. The tool offers a GitHub Action for CI/CD automation and supports various formats like JSON, YAML, CSV, and Markdown. With lightning-fast AI localization, auto-updates, native quality translations, developer-friendly CLI, and scalability for startups and enterprise teams, Replexica is a top choice for efficient and effective software localization.

FDAbench

FDABench is a benchmark tool designed for evaluating data agents' reasoning ability over heterogeneous data in analytical scenarios. It offers 2,007 tasks across various data sources, domains, difficulty levels, and task types. The tool provides ready-to-use data agent implementations, a DAG-based evaluation system, and a framework for agent-expert collaboration in dataset generation. Key features include data agent implementations, comprehensive evaluation metrics, multi-database support, different task types, extensible framework for custom agent integration, and cost tracking. Users can set up the environment using Python 3.10+ on Linux, macOS, or Windows. FDABench can be installed with a one-command setup or manually. The tool supports API configuration for LLM access and offers quick start guides for database download, dataset loading, and running examples. It also includes features like dataset generation using the PUDDING framework, custom agent integration, evaluation metrics like accuracy and rubric score, and a directory structure for easy navigation.

mdream

Mdream is a lightweight and user-friendly markdown editor designed for developers and writers. It provides a simple and intuitive interface for creating and editing markdown files with real-time preview. The tool offers syntax highlighting, markdown formatting options, and the ability to export files in various formats. Mdream aims to streamline the writing process and enhance productivity for individuals working with markdown documents.

zeroclaw

ZeroClaw is a fast, small, and fully autonomous AI assistant infrastructure built with Rust. It features a lean runtime, cost-efficient deployment, fast cold starts, and a portable architecture. It is secure by design, fully swappable, and supports OpenAI-compatible providers. The tool is designed for low-cost boards and small cloud instances, with a memory footprint of less than 5MB. It is suitable for deploying AI assistants, swapping providers, channels, and tools, and building setups where every component is pluggable.

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

candle-vllm

Candle-vllm is an efficient and easy-to-use platform designed for inference and serving local LLMs, featuring an OpenAI compatible API server. It offers a highly extensible trait-based system for rapid implementation of new module pipelines, streaming support in generation, efficient management of key-value cache with PagedAttention, and continuous batching. The tool supports chat serving for various models and provides a seamless experience for users to interact with LLMs through different interfaces.

logicstamp-context

LogicStamp Context is a static analyzer that extracts deterministic component contracts from TypeScript codebases, providing structured architectural context for AI coding assistants. It helps AI assistants understand architecture by extracting props, hooks, and dependencies without implementation noise. The tool works with React, Next.js, Vue, Express, and NestJS, and is compatible with various AI assistants like Claude, Cursor, and MCP agents. It offers features like watch mode for real-time updates, breaking change detection, and dependency graph creation. LogicStamp Context is a security-first tool that protects sensitive data, runs locally, and is non-opinionated about architectural decisions.

OSA

OSA (Open-Source-Advisor) is a tool designed to improve the quality of scientific open source projects by automating the generation of README files, documentation, CI/CD scripts, and providing advice and recommendations for repositories. It supports various LLMs accessible via API, local servers, or osa_bot hosted on ITMO servers. OSA is currently under development with features like README file generation, documentation generation, automatic implementation of changes, LLM integration, and GitHub Action Workflow generation. It requires Python 3.10 or higher and tokens for GitHub/GitLab/Gitverse and LLM API key. Users can install OSA using PyPi or build from source, and run it using CLI commands or Docker containers.

pixeltable

Pixeltable is a Python library designed for ML Engineers and Data Scientists to focus on exploration, modeling, and app development without the need to handle data plumbing. It provides a declarative interface for working with text, images, embeddings, and video, enabling users to store, transform, index, and iterate on data within a single table interface. Pixeltable is persistent, acting as a database unlike in-memory Python libraries such as Pandas. It offers features like data storage and versioning, combined data and model lineage, indexing, orchestration of multimodal workloads, incremental updates, and automatic production-ready code generation. The tool emphasizes transparency, reproducibility, cost-saving through incremental data changes, and seamless integration with existing Python code and libraries.

paperbanana

PaperBanana is an automated academic illustration tool designed for AI scientists. It implements an agentic framework for generating publication-quality academic diagrams and statistical plots from text descriptions. The tool utilizes a two-phase multi-agent pipeline with iterative refinement, Gemini-based VLM planning, and image generation. It offers a CLI, Python API, and MCP server for IDE integration, along with Claude Code skills for generating diagrams, plots, and evaluating diagrams. PaperBanana is not affiliated with or endorsed by the original authors or Google Research, and it may differ from the original system described in the paper.

llm-context.py

LLM Context is a tool designed to assist developers in quickly injecting relevant content from code/text projects into Large Language Model chat interfaces. It leverages `.gitignore` patterns for smart file selection and offers a streamlined clipboard workflow using the command line. The tool also provides direct integration with Large Language Models through the Model Context Protocol (MCP). LLM Context is optimized for code repositories and collections of text/markdown/html documents, making it suitable for developers working on projects that fit within an LLM's context window. The tool is under active development and aims to enhance AI-assisted development workflows by harnessing the power of Large Language Models.


For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.