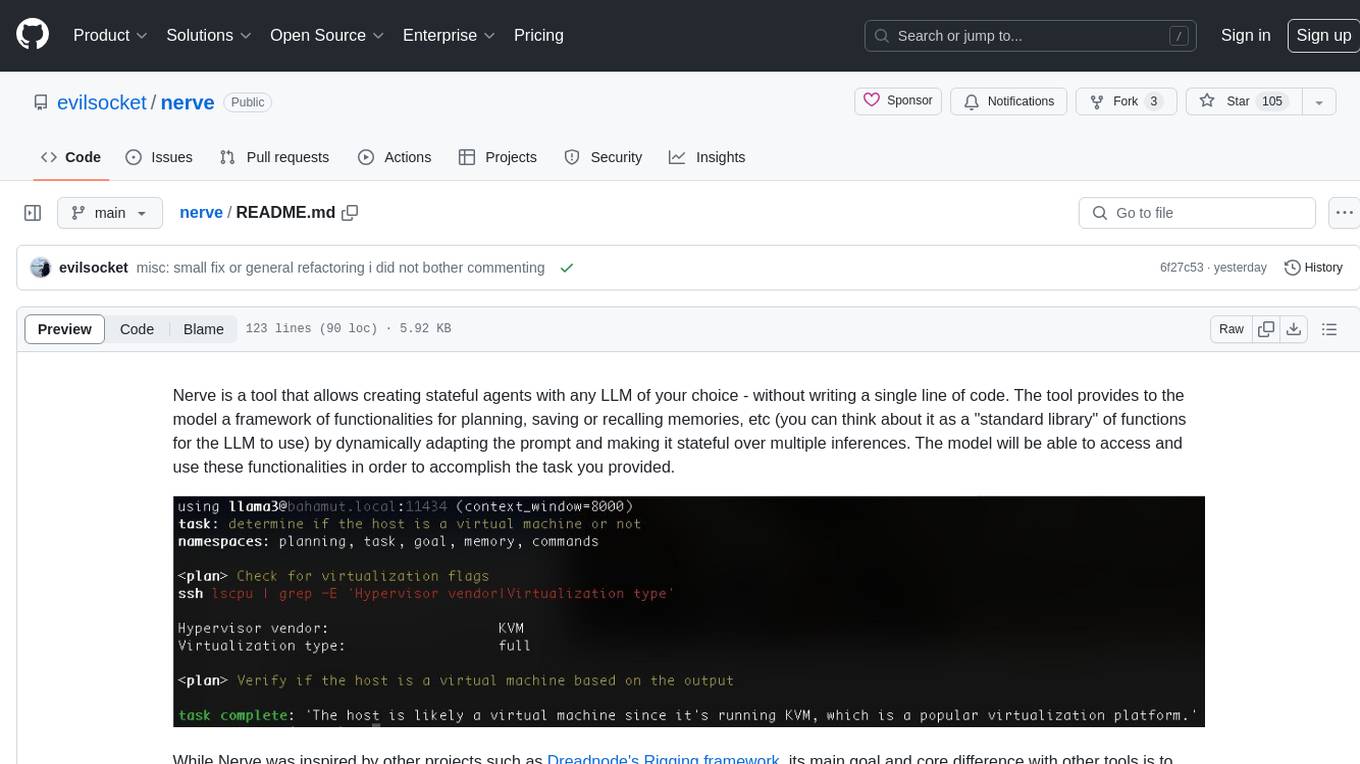

code-graph-rag

The ultimate RAG for your monorepo. Query, understand, and edit multi-language codebases with the power of AI and knowledge graphs

Stars: 1204

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

README:

An accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter, builds comprehensive knowledge graphs, and enables natural language querying of codebase structure and relationships as well as editing capabilities.

https://github.com/user-attachments/assets/2fec9ef5-7121-4e6c-9b68-dc8d8a835115

Use the Makefile for:

- make install: Install project dependencies with full language support.

- make python: Install dependencies for Python only.

- make dev: Setup dev environment (install deps + pre-commit hooks).

- make test: Run all tests.

- make clean: Clean up build artifacts and cache.

- make help: Show available commands.

- 🌍 Multi-Language Support:

| Language | Status | Extensions | Functions | Classes/Structs | Modules | Package Detection | Additional Features |
|---|---|---|---|---|---|---|---|
| ✅ Python | Fully Supported | .py | ✅ | ✅ | ✅ | `__init__.py` | Type inference, decorators, nested functions |
| ✅ JavaScript | Fully Supported | .js, .jsx | ✅ | ✅ | ✅ | - | ES6 modules, CommonJS, prototype methods, object methods, arrow functions |
| ✅ TypeScript | Fully Supported | .ts, .tsx | ✅ | ✅ | ✅ | - | Interfaces, type aliases, enums, namespaces, ES6/CommonJS modules |
| ✅ C++ | Fully Supported | .cpp, .h, .hpp, .cc, .cxx, .hxx, .hh, .ixx, .cppm, .ccm | ✅ | ✅ (classes/structs/unions/enums) | ✅ | CMakeLists.txt, Makefile | Constructors, destructors, operator overloading, templates, lambdas, C++20 modules, namespaces |
| ✅ Lua | Fully Supported | .lua | ✅ | ✅ (tables/modules) | ✅ | - | Local/global functions, metatables, closures, coroutines |
| ✅ Rust | Fully Supported | .rs | ✅ | ✅ (structs/enums) | ✅ | - | impl blocks, associated functions |
| ✅ Java | Fully Supported | .java | ✅ | ✅ (classes/interfaces/enums) | ✅ | package declarations | Generics, annotations, modern features (records/sealed classes), concurrency, reflection |
| 🚧 Go | In Development | .go | ✅ | ✅ (structs) | ✅ | - | Methods, type declarations |
| 🚧 Scala | In Development | .scala, .sc | ✅ | ✅ (classes/objects/traits) | ✅ | package declarations | Case classes, objects |
| 🚧 C# | In Development | .cs | - | - | - | - | Classes, interfaces, generics (planned) |

- 🌳 Tree-sitter Parsing: Uses Tree-sitter for robust, language-agnostic AST parsing

- 📊 Knowledge Graph Storage: Uses Memgraph to store codebase structure as an interconnected graph

- 🗣️ Natural Language Querying: Ask questions about your codebase in plain English

- 🤖 AI-Powered Cypher Generation: Supports cloud models (Google Gemini), local models (Ollama), and OpenAI models for natural language to Cypher translation

- 🤖 OpenAI Integration: Leverage OpenAI models to enhance AI functionalities.

- 📝 Code Snippet Retrieval: Retrieves actual source code snippets for found functions/methods

- ✍️ Advanced File Editing: Surgical code replacement with AST-based function targeting, visual diff previews, and exact code block modifications

- ⚡️ Shell Command Execution: Can execute terminal commands for tasks like running tests or using CLI tools.

- 🚀 Interactive Code Optimization: AI-powered codebase optimization with language-specific best practices and interactive approval workflow

- 📚 Reference-Guided Optimization: Use your own coding standards and architectural documents to guide optimization suggestions

- 🔗 Dependency Analysis: Parses pyproject.toml to understand external dependencies

- 🎯 Nested Function Support: Handles complex nested functions and class hierarchies

- 🔄 Language-Agnostic Design: Unified graph schema across all supported languages

The system consists of two main components:

- Multi-language Parser: Tree-sitter based parsing system that analyzes codebases and ingests data into Memgraph

- RAG System (codebase_rag/): Interactive CLI for querying the stored knowledge graph

- Python 3.12+

- Docker & Docker Compose (for Memgraph)

- cmake (required for building pymgclient dependency)

- For cloud models: Google Gemini API key

- For local models: Ollama installed and running

- uv package manager

On macOS:

brew install cmake

On Linux (Ubuntu/Debian):

sudo apt-get update

sudo apt-get install cmake

On Linux (CentOS/RHEL):

sudo yum install cmake

# or on newer versions:

sudo dnf install cmake

- Clone the repository:

git clone https://github.com/vitali87/code-graph-rag.git

cd code-graph-rag

- Install dependencies:

For basic Python support:

uv sync

For full multi-language support:

uv sync --extra treesitter-full

For development (including tests and pre-commit hooks):

make dev

This installs all dependencies and sets up pre-commit hooks automatically.

This installs Tree-sitter grammars for all supported languages (see Multi-Language Support section).

- Set up environment variables:

cp .env.example .env

# Edit .env with your configuration (see options below)

The new provider-explicit configuration supports mixing different providers for orchestrator and cypher models.

# .env file

ORCHESTRATOR_PROVIDER=ollama

ORCHESTRATOR_MODEL=llama3.2

ORCHESTRATOR_ENDPOINT=http://localhost:11434/v1

CYPHER_PROVIDER=ollama

CYPHER_MODEL=codellama

CYPHER_ENDPOINT=http://localhost:11434/v1

# .env file

ORCHESTRATOR_PROVIDER=openai

ORCHESTRATOR_MODEL=gpt-4o

ORCHESTRATOR_API_KEY=sk-your-openai-key

CYPHER_PROVIDER=openai

CYPHER_MODEL=gpt-4o-mini

CYPHER_API_KEY=sk-your-openai-key

# .env file

ORCHESTRATOR_PROVIDER=google

ORCHESTRATOR_MODEL=gemini-2.5-pro

ORCHESTRATOR_API_KEY=your-google-api-key

CYPHER_PROVIDER=google

CYPHER_MODEL=gemini-2.5-flash

CYPHER_API_KEY=your-google-api-key

# .env file - Google orchestrator + Ollama cypher

ORCHESTRATOR_PROVIDER=google

ORCHESTRATOR_MODEL=gemini-2.5-pro

ORCHESTRATOR_API_KEY=your-google-api-key

CYPHER_PROVIDER=ollama

CYPHER_MODEL=codellama

CYPHER_ENDPOINT=http://localhost:11434/v1

Get your Google API key from Google AI Studio.

Install and run Ollama:

# Install Ollama (macOS/Linux)

curl -fsSL https://ollama.ai/install.sh | sh

# Pull required models

ollama pull llama3.2

# Or try other models like:

# ollama pull llama3

# ollama pull mistral

# ollama pull codellama

# Ollama will automatically start serving on localhost:11434

Note: Local models provide privacy and no API costs, but may have lower accuracy compared to cloud models like Gemini.

- Start Memgraph database:

docker-compose up -d

The Graph-Code system offers five main modes of operation:

- Parse & Ingest: Build knowledge graph from your codebase

- Interactive Query: Ask questions about your code in natural language

- Export & Analyze: Export graph data for programmatic analysis

- AI Optimization: Get AI-powered optimization suggestions for your code.

- Editing: Perform surgical code replacements and modifications with precise targeting.

Parse and ingest a multi-language repository into the knowledge graph:

For the first repository (clean start):

python -m codebase_rag.main start --repo-path /path/to/repo1 --update-graph --clean

For additional repositories (preserve existing data):

python -m codebase_rag.main start --repo-path /path/to/repo2 --update-graph

python -m codebase_rag.main start --repo-path /path/to/repo3 --update-graph

Control Memgraph batch flushing:

# Flush every 5,000 records instead of the default from settings

python -m codebase_rag.main start --repo-path /path/to/repo --update-graph \
  --batch-size 5000

The system automatically detects and processes files for all supported languages (see Multi-Language Support section).
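
As a rough illustration of what a flush batch size controls, the sketch below buffers records and hands them off whenever the buffer reaches `batch_size`. `BatchBuffer` and its `flush_fn` callback are hypothetical names used only for this example; they are not the project's ingestion code.

```python
from typing import Any, Callable


class BatchBuffer:
    """Collects records and passes them to flush_fn in fixed-size batches (hypothetical helper)."""

    def __init__(self, batch_size: int, flush_fn: Callable[[list[Any]], None]) -> None:
        if batch_size < 1:
            raise ValueError("batch_size must be a positive integer")
        self.batch_size = batch_size
        self.flush_fn = flush_fn
        self._pending: list[Any] = []

    def add(self, record: Any) -> None:
        """Queue one record and flush automatically once the batch is full."""
        self._pending.append(record)
        if len(self._pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is pending, then start a fresh batch."""
        if self._pending:
            self.flush_fn(self._pending)
            self._pending = []


if __name__ == "__main__":
    batches: list[list[int]] = []
    buffer = BatchBuffer(batch_size=2, flush_fn=batches.append)
    for record in range(5):
        buffer.add(record)
    buffer.flush()  # flush the final partial batch
    print(batches)
```

A larger batch size means fewer round trips to the database at the cost of more records held in memory between flushes.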

Start the interactive RAG CLI:

python -m codebase_rag.main start --repo-path /path/to/your/repo

Specify Custom Models:

# Use specific local models

python -m codebase_rag.main start --repo-path /path/to/your/repo \
  --orchestrator ollama:llama3.2 \
  --cypher ollama:codellama

# Use specific Gemini models

python -m codebase_rag.main start --repo-path /path/to/your/repo \
  --orchestrator google:gemini-2.0-flash-thinking-exp-01-21 \
  --cypher google:gemini-2.5-flash-lite-preview-06-17

# Use mixed providers

python -m codebase_rag.main start --repo-path /path/to/your/repo \
  --orchestrator google:gemini-2.0-flash-thinking-exp-01-21 \
  --cypher ollama:codellama

Example queries (work across all supported languages):

- "Show me all classes that contain 'user' in their name"

- "Find functions related to database operations"

- "What methods does the User class have?"

- "Show me functions that handle authentication"

- "List all TypeScript components"

- "Find Rust structs and their methods"

- "Show me Go interfaces and implementations"

- "Find all C++ operator overloads in the Matrix class"

- "Show me C++ template functions with their specializations"

- "List all C++ namespaces and their contained classes"

- "Find C++ lambda expressions used in algorithms"

- "Add logging to all database connection functions"

- "Refactor the User class to use dependency injection"

- "Convert these Python functions to async/await pattern"

- "Add error handling to authentication methods"

- "Optimize this function for better performance"

For programmatic access and integration with other tools, you can export the entire knowledge graph to JSON:

Export during graph update:

python -m codebase_rag.main start --repo-path /path/to/repo --update-graph --clean -o my_graph.json

Export existing graph without updating:

python -m codebase_rag.main export -o my_graph.json

Optional: adjust Memgraph batching during export:

python -m codebase_rag.main export -o my_graph.json --batch-size 5000

Working with exported data:

from codebase_rag.graph_loader import load_graph

# Load the exported graph

graph = load_graph("my_graph.json")

# Get summary statistics

summary = graph.summary()

print(f"Total nodes: {summary['total_nodes']}")

print(f"Total relationships: {summary['total_relationships']}")

# Find specific node types

functions = graph.find_nodes_by_label("Function")

classes = graph.find_nodes_by_label("Class")

# Analyze relationships

for func in functions[:5]:
    relationships = graph.get_relationships_for_node(func.node_id)
    print(f"Function {func.properties['name']} has {len(relationships)} relationships")

Example analysis script:

python examples/graph_export_example.py my_graph.json

This provides a reliable, programmatic way to access your codebase structure without LLM restrictions, perfect for:

- Integration with other tools

- Custom analysis scripts

- Building documentation generators

- Creating code metrics dashboards
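
As one example of a custom analysis script, the sketch below counts nodes per label directly from a parsed export. The JSON layout it assumes (a top-level `nodes` list whose entries carry a `labels` list) is a guess for illustration; check the actual contents of my_graph.json before relying on it.

```python
import json
from collections import Counter


def count_labels(graph: dict) -> Counter:
    """Count nodes per label in an exported graph dictionary (assumed layout)."""
    counts: Counter = Counter()
    for node in graph.get("nodes", []):
        for label in node.get("labels", []):
            counts[label] += 1
    return counts


if __name__ == "__main__":
    # A tiny stand-in for the contents of my_graph.json; the real export may differ.
    sample = json.loads(
        '{"nodes": [{"labels": ["Function"]}, {"labels": ["Function"]}, {"labels": ["Class"]}]}'
    )
    for label, total in count_labels(sample).most_common():
        print(f"{label}: {total}")
```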

For AI-powered codebase optimization with best practices guidance:

Basic optimization for a specific language:

python -m codebase_rag.main optimize python --repo-path /path/to/your/repo

Optimization with reference documentation:

python -m codebase_rag.main optimize python \
  --repo-path /path/to/your/repo \
  --reference-document /path/to/best_practices.md

Using specific models for optimization:

python -m codebase_rag.main optimize javascript \
  --repo-path /path/to/frontend \
  --orchestrator google:gemini-2.0-flash-thinking-exp-01-21

# Optional: override Memgraph batch flushing during optimization

python -m codebase_rag.main optimize javascript --repo-path /path/to/frontend \
  --batch-size 5000

Supported Languages for Optimization:

All supported languages: python, javascript, typescript, rust, go, java, scala, cpp

How It Works:

- Analysis Phase: The agent analyzes your codebase structure using the knowledge graph

- Pattern Recognition: Identifies common anti-patterns, performance issues, and improvement opportunities

- Best Practices Application: Applies language-specific best practices and patterns

- Interactive Approval: Presents each optimization suggestion for your approval before implementation

- Guided Implementation: Implements approved changes with detailed explanations
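
The interactive approval step can be pictured as a simple review loop. The sketch below is illustrative only: `Suggestion`, `review`, and the injectable `ask` callback are hypothetical names, and the real agent's prompts and data structures may look quite different.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Suggestion:
    file: str
    issue: str
    proposal: str


def review(suggestions: Iterable[Suggestion], ask: Callable[[str], str] = input) -> list[Suggestion]:
    """Return the approved suggestions; 'exit' or 'quit' ends the session early."""
    approved: list[Suggestion] = []
    for number, suggestion in enumerate(suggestions, start=1):
        prompt = f"#{number} {suggestion.file}: {suggestion.proposal} [y/n] "
        answer = ask(prompt).strip().lower()
        if answer in {"exit", "quit"}:
            break
        if answer == "y":
            approved.append(suggestion)
    return approved
```

Passing `ask` as a parameter keeps the loop testable without a terminal; by default it falls back to the built-in `input`.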

Example Optimization Session:

Starting python optimization session...

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓

┃ The agent will analyze your python codebase and propose specific ┃

┃ optimizations. You'll be asked to approve each suggestion before ┃

┃ implementation. Type 'exit' or 'quit' to end the session. ┃

┗━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┛

🔍 Analyzing codebase structure...

📊 Found 23 Python modules with potential optimizations

💡 Optimization Suggestion #1:

File: src/data_processor.py

Issue: Using list comprehension in a loop can be optimized

Suggestion: Replace with generator expression for memory efficiency

[y/n] Do you approve this optimization?

Reference Document Support: You can provide reference documentation (like coding standards, architectural guidelines, or best practices documents) to guide the optimization process:

# Use company coding standards

python -m codebase_rag.main optimize python \
  --reference-document ./docs/coding_standards.md

# Use architectural guidelines

python -m codebase_rag.main optimize java \
  --reference-document ./ARCHITECTURE.md

# Use performance best practices

python -m codebase_rag.main optimize rust \
  --reference-document ./docs/performance_guide.md

The agent will incorporate the guidance from your reference documents when suggesting optimizations, ensuring they align with your project's standards and architectural decisions.

Common CLI Arguments:

- --orchestrator: Specify provider:model for main operations (e.g., google:gemini-2.0-flash-thinking-exp-01-21, ollama:llama3.2)

- --cypher: Specify provider:model for graph queries (e.g., google:gemini-2.5-flash-lite-preview-06-17, ollama:codellama)

- --repo-path: Path to repository (defaults to current directory)

- --batch-size: Override Memgraph flush batch size (defaults to MEMGRAPH_BATCH_SIZE in settings)

- --reference-document: Path to reference documentation (optimization only)
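
To make the provider:model format concrete, here is a minimal sketch of how such a value could be split. `parse_model_spec` is a hypothetical helper written for this example, not the project's own parser.

```python
def parse_model_spec(spec: str) -> tuple[str, str]:
    """Split a 'provider:model' string on the first colon (hypothetical helper)."""
    provider, separator, model = spec.partition(":")
    if not separator or not provider or not model:
        raise ValueError(f"expected 'provider:model', got {spec!r}")
    return provider, model


if __name__ == "__main__":
    print(parse_model_spec("ollama:codellama"))
    print(parse_model_spec("google:gemini-2.5-flash-lite-preview-06-17"))
```

Splitting on the first colon only means a model ID that itself contains a colon stays intact.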

The knowledge graph uses the following node types and relationships:

- Project: Root node representing the entire repository

- Package: Language packages (Python: __init__.py, etc.)

- Module: Individual source code files (.py, .js, .jsx, .ts, .tsx, .rs, .go, .scala, .sc, .java)

- Class: Class/Struct/Enum definitions across all languages

- Function: Module-level functions and standalone functions

- Method: Class methods and associated functions

- Folder: Regular directories

- File: All files (source code and others)

- ExternalPackage: External dependencies

- Python: function_definition, class_definition

- JavaScript/TypeScript: function_declaration, arrow_function, class_declaration

- C++: function_definition, template_declaration, lambda_expression, class_specifier, struct_specifier, union_specifier, enum_specifier

- Rust: function_item, struct_item, enum_item, impl_item

- Go: function_declaration, method_declaration, type_declaration

- Scala: function_definition, class_definition, object_definition, trait_definition

- Java: method_declaration, class_declaration, interface_declaration, enum_declaration
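
The per-language node types listed above lend themselves to a simple lookup table. The sketch below copies a few of those entries into a dictionary; `DEFINITION_NODE_TYPES` and `is_definition_node` are hypothetical names, and the project's real configuration lives in codebase_rag/language_config.py.

```python
# A subset of the Tree-sitter node types listed above, keyed by language.
DEFINITION_NODE_TYPES: dict[str, frozenset[str]] = {
    "python": frozenset({"function_definition", "class_definition"}),
    "rust": frozenset({"function_item", "struct_item", "enum_item", "impl_item"}),
    "go": frozenset({"function_declaration", "method_declaration", "type_declaration"}),
    "java": frozenset(
        {"method_declaration", "class_declaration", "interface_declaration", "enum_declaration"}
    ),
}


def is_definition_node(language: str, node_type: str) -> bool:
    """True when node_type is one of the definition node types listed for language."""
    return node_type in DEFINITION_NODE_TYPES.get(language, frozenset())


if __name__ == "__main__":
    print(is_definition_node("rust", "impl_item"))
    print(is_definition_node("python", "impl_item"))
```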

- CONTAINS_PACKAGE: Project or Package contains Package nodes

- CONTAINS_FOLDER: Project, Package, or Folder contains Folder nodes

- CONTAINS_FILE: Project, Package, or Folder contains File nodes

- CONTAINS_MODULE: Project, Package, or Folder contains Module nodes

- DEFINES: Module defines classes/functions

- DEFINES_METHOD: Class defines methods

- DEPENDS_ON_EXTERNAL: Project depends on external packages

- CALLS: Function or Method calls other functions/methods
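
Given this schema, a question such as "What methods does the User class have?" could plausibly translate into a Cypher query like the one built below. This is a hand-written sketch: it assumes nodes expose a `name` property, and the Cypher the model actually generates may differ. `methods_of_class_query` is a hypothetical helper.

```python
def methods_of_class_query(class_name: str) -> tuple[str, dict[str, str]]:
    """Build a parameterized Cypher query listing the methods a class defines."""
    query = (
        "MATCH (c:Class {name: $class_name})-[:DEFINES_METHOD]->(m:Method) "
        "RETURN m.name AS method ORDER BY method"
    )
    # The class name travels as a query parameter rather than being spliced into the text.
    return query, {"class_name": class_name}


if __name__ == "__main__":
    cypher, params = methods_of_class_query("User")
    print(cypher)
    print(params)
```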

Configuration is managed through environment variables in .env file:

- ORCHESTRATOR_PROVIDER: Provider name (google, openai, ollama)

- ORCHESTRATOR_MODEL: Model ID (e.g., gemini-2.5-pro, gpt-4o, llama3.2)

- ORCHESTRATOR_API_KEY: API key for the provider (if required)

- ORCHESTRATOR_ENDPOINT: Custom endpoint URL (if required)

- ORCHESTRATOR_PROJECT_ID: Google Cloud project ID (for Vertex AI)

- ORCHESTRATOR_REGION: Google Cloud region (default: us-central1)

- ORCHESTRATOR_PROVIDER_TYPE: Google provider type (gla or vertex)

- ORCHESTRATOR_THINKING_BUDGET: Thinking budget for reasoning models

- ORCHESTRATOR_SERVICE_ACCOUNT_FILE: Path to service account file (for Vertex AI)

- CYPHER_PROVIDER: Provider name (google, openai, ollama)

- CYPHER_MODEL: Model ID (e.g., gemini-2.5-flash, gpt-4o-mini, codellama)

- CYPHER_API_KEY: API key for the provider (if required)

- CYPHER_ENDPOINT: Custom endpoint URL (if required)

- CYPHER_PROJECT_ID: Google Cloud project ID (for Vertex AI)

- CYPHER_REGION: Google Cloud region (default: us-central1)

- CYPHER_PROVIDER_TYPE: Google provider type (gla or vertex)

- CYPHER_THINKING_BUDGET: Thinking budget for reasoning models

- CYPHER_SERVICE_ACCOUNT_FILE: Path to service account file (for Vertex AI)

- MEMGRAPH_HOST: Memgraph hostname (default: localhost)

- MEMGRAPH_PORT: Memgraph port (default: 7687)

- MEMGRAPH_HTTP_PORT: Memgraph HTTP port (default: 7444)

- LAB_PORT: Memgraph Lab port (default: 3000)

- MEMGRAPH_BATCH_SIZE: Batch size for Memgraph operations (default: 1000)

- TARGET_REPO_PATH: Default repository path (default: .)

- LOCAL_MODEL_ENDPOINT: Fallback endpoint for Ollama (default: http://localhost:11434/v1)
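
To show how the role-prefixed variables fit together, the sketch below reads the ORCHESTRATOR_* or CYPHER_* settings from a mapping. `ModelSettings` and `load_model_settings` are hypothetical names, and the way the Ollama fallback endpoint is applied here is an assumption about the behavior described above rather than the project's actual settings loader.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ModelSettings:
    provider: str
    model: str
    api_key: Optional[str] = None
    endpoint: Optional[str] = None


def load_model_settings(role: str, env: Mapping[str, str] = os.environ) -> ModelSettings:
    """Read <ROLE>_PROVIDER, <ROLE>_MODEL, <ROLE>_API_KEY and <ROLE>_ENDPOINT from env."""
    prefix = role.upper()
    try:
        provider = env[f"{prefix}_PROVIDER"]
        model = env[f"{prefix}_MODEL"]
    except KeyError as missing:
        raise ValueError(f"missing required setting {missing}") from None
    endpoint = env.get(f"{prefix}_ENDPOINT")
    if provider == "ollama" and endpoint is None:
        # Assumed behavior: fall back to LOCAL_MODEL_ENDPOINT, then to its documented default.
        endpoint = env.get("LOCAL_MODEL_ENDPOINT", "http://localhost:11434/v1")
    return ModelSettings(provider, model, env.get(f"{prefix}_API_KEY"), endpoint)


if __name__ == "__main__":
    example = {"CYPHER_PROVIDER": "ollama", "CYPHER_MODEL": "codellama"}
    print(load_model_settings("cypher", example))
```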

- tree-sitter: Core Tree-sitter library for language-agnostic parsing

- tree-sitter-{language}: Language-specific grammars (Python, JS, TS, Rust, Go, Scala, Java)

- pydantic-ai: AI agent framework for RAG orchestration

- pymgclient: Memgraph Python client for graph database operations

- loguru: Advanced logging with structured output

- python-dotenv: Environment variable management

The agent is designed with a deliberate workflow to ensure it acts with context and precision, especially when modifying the file system.

The agent has access to a suite of tools to understand and interact with the codebase:

- query_codebase_knowledge_graph: The primary tool for understanding the repository. It queries the graph database to find files, functions, classes, and their relationships based on natural language.

- get_code_snippet: Retrieves the exact source code for a specific function or class.

- read_file_content: Reads the entire content of a specified file.

- create_new_file: Creates a new file with specified content.

- replace_code_surgically: Surgically replaces specific code blocks in files. Requires exact target code and replacement. Only modifies the specified block, leaving rest of file unchanged. True surgical patching.

- execute_shell_command: Executes a shell command in the project's environment.

The agent uses AST-based function targeting with Tree-sitter for precise code modifications. Features include:

- Visual diff preview before changes

- Surgical patching that only modifies target code blocks

- Multi-language support across all supported languages

- Security sandbox preventing edits outside project directory

- Smart function matching with qualified names and line numbers
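
The ideas of exact-block replacement, a diff preview, and a project-directory sandbox can be sketched with the standard library alone. Note that this example uses plain string matching rather than Tree-sitter, and `is_inside_project`, `replace_block`, and `preview_diff` are hypothetical names, not the agent's actual tools.

```python
import difflib
from pathlib import Path


def is_inside_project(project_root: Path, candidate: Path) -> bool:
    """True when candidate resolves to a location under project_root."""
    return candidate.resolve().is_relative_to(project_root.resolve())


def replace_block(source: str, target: str, replacement: str) -> str:
    """Replace target only when it occurs exactly once, leaving the rest untouched."""
    if not target:
        raise ValueError("target must not be empty")
    occurrences = source.count(target)
    if occurrences != 1:
        raise ValueError(f"expected exactly one match, found {occurrences}")
    return source.replace(target, replacement, 1)


def preview_diff(before: str, after: str, name: str = "file") -> str:
    """Render a unified diff so the change can be reviewed before it is written."""
    lines = difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=f"a/{name}",
        tofile=f"b/{name}",
    )
    return "".join(lines)


if __name__ == "__main__":
    original = "def greet():\n    print('hi')\n"
    updated = replace_block(original, "print('hi')", "print('hello')")
    print(preview_diff(original, updated, "greet.py"))
```

Refusing to act when the target appears zero or several times is a conservative choice: an ambiguous match is reported instead of guessed at.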

- Python: Full support including nested functions, methods, classes, decorators, type hints, and package structure

- JavaScript: ES6 modules, CommonJS modules, prototype-based methods, object methods, arrow functions, classes, and JSX support

- TypeScript: All JavaScript features plus interfaces, type aliases, enums, namespaces, generics, and advanced type inference

- C++: Comprehensive support including functions, classes, structs, unions, enums, constructors, destructors, operator overloading, templates, lambdas, namespaces, C++20 modules, inheritance, method calls, and modern C++ features

- Lua: Functions, local/global variables, tables, metatables, closures, coroutines, and object-oriented patterns

- Rust: Functions, structs, enums, impl blocks, traits, and associated functions

- Go: Functions, methods, type declarations, interfaces, and struct definitions

- Scala: Functions, methods, classes, objects, traits, case classes, implicits, and Scala 3 syntax

- Java: Methods, constructors, classes, interfaces, enums, annotations, generics, modern features (records, sealed classes, switch expressions), concurrency patterns, reflection, and enterprise frameworks

Graph-Code makes it easy to add support for any language that has a Tree-sitter grammar. The system automatically handles grammar compilation and integration.

⚠️ Recommendation: While you can add languages yourself, we recommend waiting for official full support to ensure optimal parsing quality, comprehensive feature coverage, and robust integration. The languages marked as "In Development" above will receive dedicated optimization and testing.

💡 Request Support: If you want a specific language to be officially supported, please submit an issue with your language request.

Use the built-in language management tool to add any Tree-sitter supported language:

# Add a language using the standard tree-sitter repository

python -m codebase_rag.tools.language add-grammar <language-name>

# Examples:

python -m codebase_rag.tools.language add-grammar c-sharp

python -m codebase_rag.tools.language add-grammar php

python -m codebase_rag.tools.language add-grammar ruby

python -m codebase_rag.tools.language add-grammar kotlin

For languages hosted outside the standard tree-sitter organization:

# Add a language from a custom repository

python -m codebase_rag.tools.language add-grammar --grammar-url https://github.com/custom/tree-sitter-mylang

When you add a language, the tool automatically:

- Downloads the Grammar: Clones the tree-sitter grammar repository as a git submodule

- Detects Configuration: Auto-extracts language metadata from tree-sitter.json

- Analyzes Node Types: Automatically identifies AST node types for:

  - Functions/methods (method_declaration, function_definition, etc.)

  - Classes/structs (class_declaration, struct_declaration, etc.)

  - Modules/files (compilation_unit, source_file, etc.)

  - Function calls (call_expression, method_invocation, etc.)

- Compiles Bindings: Builds Python bindings from the grammar source

- Updates Configuration: Adds the language to codebase_rag/language_config.py

- Enables Parsing: Makes the language immediately available for codebase analysis

$ python -m codebase_rag.tools.language add-grammar c-sharp

🔍 Using default tree-sitter URL: https://github.com/tree-sitter/tree-sitter-c-sharp

🔄 Adding submodule from https://github.com/tree-sitter/tree-sitter-c-sharp...

✅ Successfully added submodule at grammars/tree-sitter-c-sharp

Auto-detected language: c-sharp

Auto-detected file extensions: ['cs']

Auto-detected node types:

Functions: ['destructor_declaration', 'method_declaration', 'constructor_declaration']

Classes: ['struct_declaration', 'enum_declaration', 'interface_declaration', 'class_declaration']

Modules: ['compilation_unit', 'file_scoped_namespace_declaration', 'namespace_declaration']

Calls: ['invocation_expression']

✅ Language 'c-sharp' has been added to the configuration!

📝 Updated codebase_rag/language_config.py

# List all configured languages

python -m codebase_rag.tools.language list-languages

# Remove a language (this also removes the git submodule unless --keep-submodule is specified)

python -m codebase_rag.tools.language remove-language <language-name>

The system uses a configuration-driven approach for language support. Each language is defined in codebase_rag/language_config.py with the following structure:

"language-name": LanguageConfig(

    name="language-name",

    file_extensions=[".ext1", ".ext2"],

    function_node_types=["function_declaration", "method_declaration"],

    class_node_types=["class_declaration", "struct_declaration"],

    module_node_types=["compilation_unit", "source_file"],

    call_node_types=["call_expression", "method_invocation"],

),

Grammar not found: If the automatic URL doesn't work, use a custom URL:

python -m codebase_rag.tools.language add-grammar --grammar-url https://github.com/custom/tree-sitter-mylang

Version incompatibility: If you get "Incompatible Language version" errors, update your tree-sitter package:

uv add tree-sitter@latest

Missing node types: The tool automatically detects common node patterns, but you can manually adjust the configuration in language_config.py if needed.
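
When adjusting the configuration by hand, it can help to picture the entry as a plain data record. The sketch below is a stand-in dataclass that mirrors the fields shown in the structure earlier; the project's real `LanguageConfig` may carry more fields. The c-sharp values come from the sample tool output, the leading dot on `.cs` is an assumption, and `config_for_file` is a hypothetical helper.

```python
from dataclasses import dataclass, field
from pathlib import Path
from typing import Optional


@dataclass(frozen=True)
class LanguageConfig:
    """Stand-in mirroring the fields shown in language_config.py (illustrative only)."""

    name: str
    file_extensions: list[str]
    function_node_types: list[str] = field(default_factory=list)
    class_node_types: list[str] = field(default_factory=list)
    module_node_types: list[str] = field(default_factory=list)
    call_node_types: list[str] = field(default_factory=list)


LANGUAGE_CONFIGS: dict[str, LanguageConfig] = {
    "c-sharp": LanguageConfig(
        name="c-sharp",
        file_extensions=[".cs"],
        function_node_types=["destructor_declaration", "method_declaration", "constructor_declaration"],
        class_node_types=["struct_declaration", "enum_declaration", "interface_declaration", "class_declaration"],
        module_node_types=["compilation_unit", "file_scoped_namespace_declaration", "namespace_declaration"],
        call_node_types=["invocation_expression"],
    ),
}


def config_for_file(path: str) -> Optional[LanguageConfig]:
    """Pick the configuration whose extensions include the file's suffix."""
    suffix = Path(path).suffix
    for config in LANGUAGE_CONFIGS.values():
        if suffix in config.file_extensions:
            return config
    return None


if __name__ == "__main__":
    print(config_for_file("src/Program.cs"))
```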

You can build a binary of the application using the build_binary.py script. This script uses PyInstaller to package the application and its dependencies into a single executable.

python build_binary.py

The resulting binary will be located in the dist directory.

- Check Memgraph connection:

  - Ensure Docker containers are running: docker-compose ps

  - Verify Memgraph is accessible on port 7687

- View database in Memgraph Lab:

  - Open http://localhost:3000

  - Connect to memgraph:7687

- For local models:

  - Verify Ollama is running: ollama list

  - Check if models are downloaded: ollama pull llama3

  - Test Ollama API: curl http://localhost:11434/v1/models

  - Check Ollama logs: ollama logs
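
A quick way to verify that Memgraph or Ollama is reachable is to attempt a TCP connection to its port. The sketch below uses only the standard library; `is_port_open` is a hypothetical helper, and the ports in the usage section are the defaults mentioned in this document.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True when a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    for service, port in (("Memgraph", 7687), ("Memgraph Lab", 3000), ("Ollama", 11434)):
        state = "reachable" if is_port_open("localhost", port) else "not reachable"
        print(f"{service} on port {port}: {state}")
```

A successful connection only shows that something is listening on the port; it does not confirm that the service behind it is healthy.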

Please see CONTRIBUTING.md for detailed contribution guidelines.

Good first PRs are from TODO issues.

For issues or questions:

- Check the logs for error details

- Verify Memgraph connection

- Ensure all environment variables are set

- Review the graph schema matches your expectations

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for code-graph-rag

Similar Open Source Tools

code-graph-rag

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

VimLM

VimLM is an AI-powered coding assistant for Vim that integrates AI for code generation, refactoring, and documentation directly into your Vim workflow. It offers native Vim integration with split-window responses and intuitive keybindings, offline first execution with MLX-compatible models, contextual awareness with seamless integration with codebase and external resources, conversational workflow for iterating on responses, project scaffolding for generating and deploying code blocks, and extensibility for creating custom LLM workflows with command chains.

routilux

Routilux is a powerful event-driven workflow orchestration framework designed for building complex data pipelines and workflows effortlessly. It offers features like event queue architecture, flexible connections, built-in state management, robust error handling, concurrent execution, persistence & recovery, and simplified API. Perfect for tasks such as data pipelines, API orchestration, event processing, workflow automation, microservices coordination, and LLM agent workflows.

ruler

Ruler is a tool designed to centralize AI coding assistant instructions, providing a single source of truth for managing instructions across multiple AI coding tools. It helps in avoiding inconsistent guidance, duplicated effort, context drift, onboarding friction, and complex project structures by automatically distributing instructions to the right configuration files. With support for nested rule loading, Ruler can handle complex project structures with context-specific instructions for different components. It offers features like centralised rule management, nested rule loading, automatic distribution, targeted agent configuration, MCP server propagation, .gitignore automation, and a simple CLI for easy configuration management.

ck

ck (seek) is a semantic grep tool that finds code by meaning, not just keywords. It replaces traditional grep by understanding the user's search intent. It allows users to search for code based on concepts like 'error handling' and retrieves relevant code even if the exact keywords are not present. ck offers semantic search, drop-in grep compatibility, hybrid search combining keyword precision with semantic understanding, agent-friendly output in JSONL format, smart file filtering, and various advanced features. It supports multiple search modes, relevance scoring, top-K results, and smart exclusions. Users can index projects for semantic search, choose embedding models, and search specific files or directories. The tool is designed to improve code search efficiency and accuracy for developers and AI agents.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

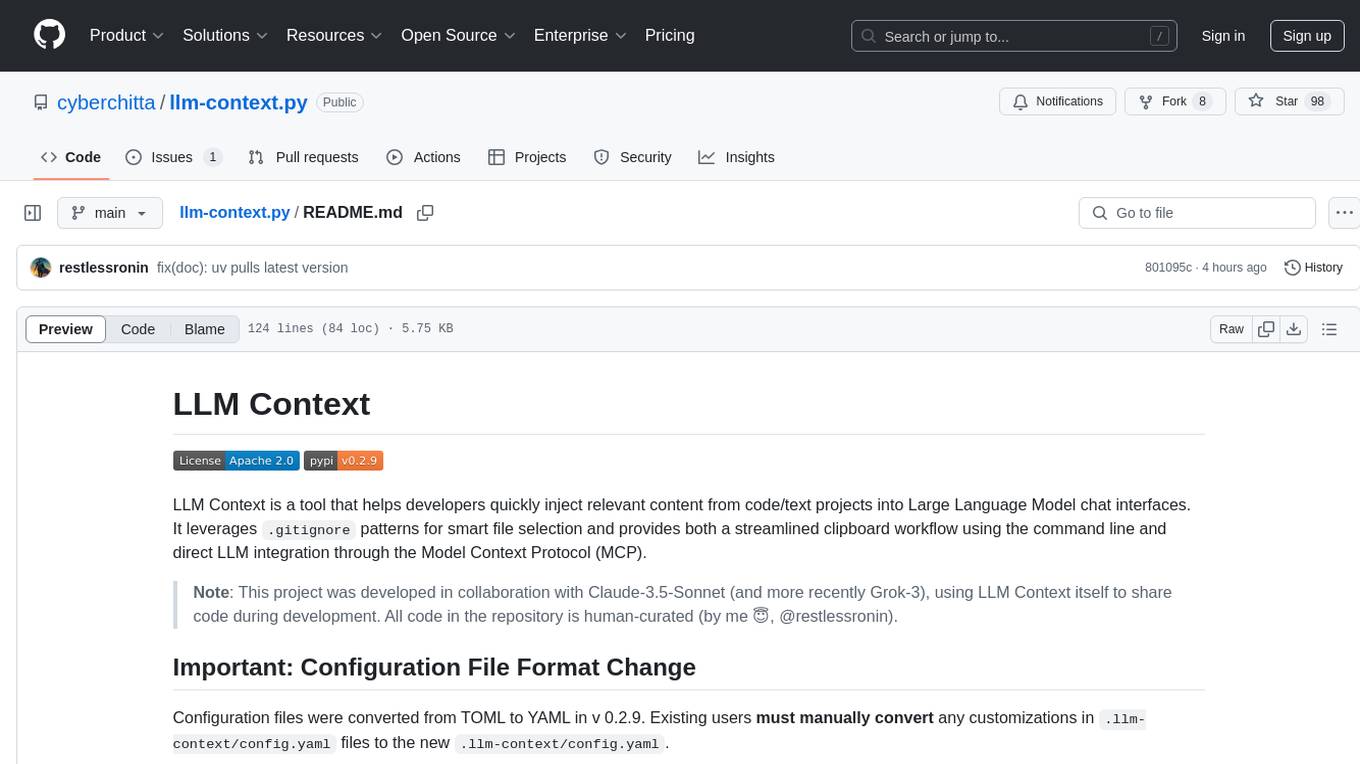

llm-context.py

LLM Context is a tool designed to assist developers in quickly injecting relevant content from code/text projects into Large Language Model chat interfaces. It leverages `.gitignore` patterns for smart file selection and offers a streamlined clipboard workflow using the command line. The tool also provides direct integration with Large Language Models through the Model Context Protocol (MCP). LLM Context is optimized for code repositories and collections of text/markdown/html documents, making it suitable for developers working on projects that fit within an LLM's context window. The tool is under active development and aims to enhance AI-assisted development workflows by harnessing the power of Large Language Models.

git-mcp-server

A secure and scalable Git MCP server providing AI agents with powerful version control capabilities for local and serverless environments. It offers 28 comprehensive Git operations organized into seven functional categories, resources for contextual information about the Git environment, and structured prompt templates for guiding AI agents through complex workflows. The server features declarative tools, robust error handling, pluggable authentication, abstracted storage, full-stack observability, dependency injection, and edge-ready architecture. It also includes specialized features for Git integration such as cross-runtime compatibility, provider-based architecture, optimized Git execution, working directory management, configurable Git identity, safety features, and commit signing.

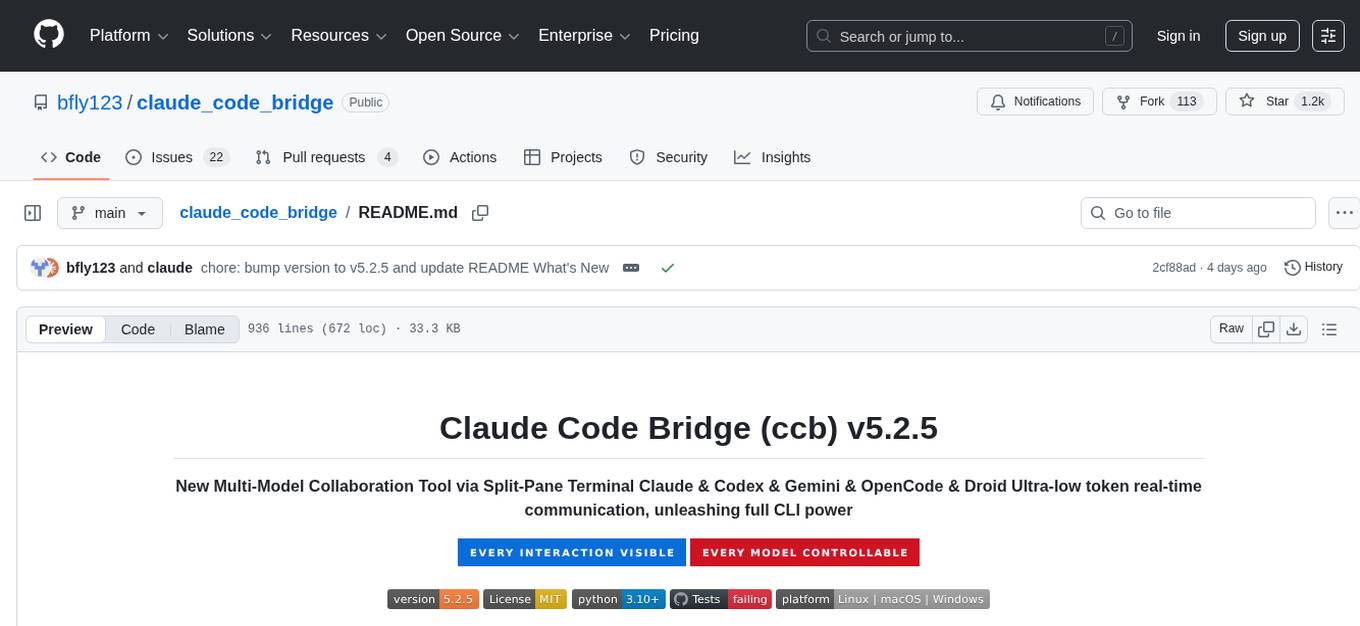

claude_code_bridge

Claude Code Bridge (ccb) is a multi-model collaboration tool that lets multiple AI models work together in a split-pane CLI environment. It offers a visual, controllable interface, persistent context, token savings, and native workflow integration. By combining models, it helps users avoid single-model bias, cognitive blind spots, and context limitations. The split-pane view provides a WYSIWYG way to control and observe multiple AI models simultaneously.

mcp-documentation-server

The mcp-documentation-server is a lightweight server application designed to serve documentation files for projects. It provides a simple and efficient way to host and access project documentation, making it easy for team members and stakeholders to find and reference important information. The server supports various file formats, such as markdown and HTML, and allows for easy navigation through the documentation. With mcp-documentation-server, teams can streamline their documentation process and ensure that project information is easily accessible to all involved parties.

zcf

ZCF (Zero-Config Claude-Code Flow) provides zero-configuration, one-click setup for Claude Code, with bilingual support, an intelligent agent system, and a personalized AI assistant. It offers an interactive menu for easy operation and direct commands for quick execution. The tool supports bilingual operation with automatic language switching and customizable AI output styles. ZCF also includes BMad Workflow, an enterprise-grade workflow system; Spec Workflow for structured feature development; CCR (Claude Code Router) support for proxy routing; and CCometixLine for real-time usage tracking. It provides smart installation, complete configuration management, and core features such as professional agents, a command system, and smart configuration. ZCF is cross-platform, supports Windows and Termux environments, and includes security features such as a confirmation mechanism for dangerous operations.

kiss_ai

KISS AI is a lightweight and powerful multi-agent evolutionary framework that simplifies building AI agents. It uses native function calling for efficiency and accuracy, making building AI agents as straightforward as possible. The framework includes features like multi-agent orchestration, agent evolution and optimization, relentless coding agent for long-running tasks, output formatting, trajectory saving and visualization, GEPA for prompt optimization, KISSEvolve for algorithm discovery, self-evolving multi-agent, Docker integration, multiprocessing support, and support for various models from OpenAI, Anthropic, Gemini, Together AI, and OpenRouter.

FDAbench

FDABench is a benchmark for evaluating data agents' reasoning ability over heterogeneous data in analytical scenarios. It offers 2,007 tasks across various data sources, domains, difficulty levels, and task types. The tool provides ready-to-use data agent implementations, a DAG-based evaluation system, and a framework for agent-expert collaboration in dataset generation. Other key features include evaluation metrics such as accuracy and rubric score, multi-database support, an extensible framework for custom agent integration, and cost tracking. FDABench runs on Python 3.10+ on Linux, macOS, or Windows and can be installed with a one-command setup or manually. It supports API configuration for LLM access and offers quick start guides for database download, dataset loading, and running examples. It also supports dataset generation using the PUDDING framework and has a directory structure laid out for easy navigation.

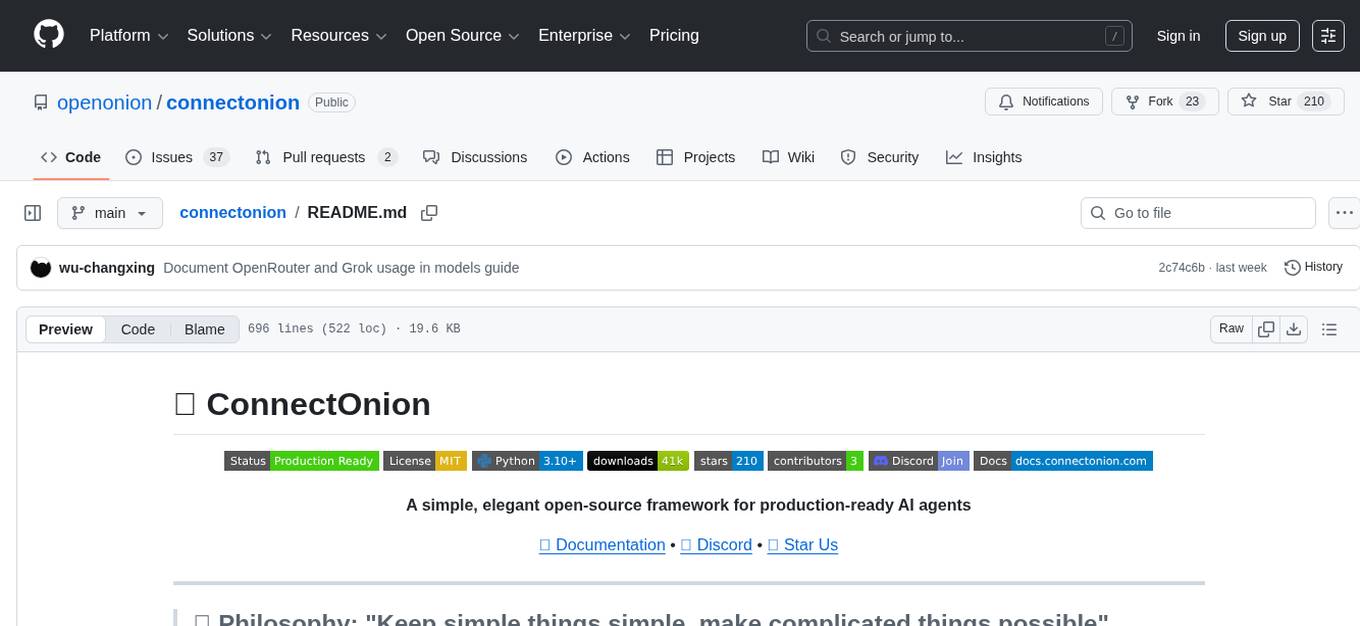

connectonion

ConnectOnion is a simple, elegant open-source framework for production-ready AI agents. It provides a platform for creating and using AI agents with a focus on simplicity and efficiency. The framework allows users to easily add tools, debug agents, make them production-ready, and enable multi-agent capabilities. ConnectOnion offers a simple API, is production-ready with battle-tested models, and is open-source under the MIT license. It features a plugin system for adding reflection and reasoning capabilities, interactive debugging for easy troubleshooting, and no boilerplate code for seamless scaling from prototypes to production systems.

rlama

RLAMA is a powerful AI-driven question-answering tool that seamlessly integrates with local Ollama models. It enables users to create, manage, and interact with Retrieval-Augmented Generation (RAG) systems tailored to their documentation needs. RLAMA follows a clean architecture pattern with clear separation of concerns, focusing on lightweight and portable RAG capabilities with minimal dependencies. The tool processes documents, generates embeddings, stores RAG systems locally, and provides contextually-informed responses to user queries. Supported document formats include text, code, and various document types, with troubleshooting steps available for common issues like Ollama accessibility, text extraction problems, and relevance of answers.
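The pipeline described above (process documents, generate embeddings, store them, then retrieve context for a query) can be sketched in a few lines. This is a toy illustration under stated assumptions, not RLAMA's code: the bag-of-words `embed` function stands in for a real embedding model such as one served by Ollama, and all function names and sample chunks are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks):
    """Store each document chunk alongside its embedding."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, query, k=1):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(index, query, k=1):
    """Assemble the context-augmented prompt that would be sent to the LLM."""
    context = "\n".join(retrieve(index, query, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


index = build_index([
    "install the tool with the setup script",
    "the server listens on port 8080",
    "logs are written to the data directory",
])
print(build_prompt(index, "which port does the server use"))
```

In a real system the prompt would be passed to a local model for generation; only the retrieval half is shown here.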

For similar tasks

nerve

Nerve is a tool for creating stateful agents with any LLM of your choice without writing code. It provides a framework of functionalities for planning, saving, or recalling memories by dynamically adapting the prompt. Nerve is experimental and subject to change. It is valuable for learning and experimenting but not recommended for production environments. The tool aims to instrument smart agents without code, inspired by projects like Dreadnode's Rigging framework.

Windows-Use

Windows-Use is an automation agent that interacts directly with the Windows OS at the GUI layer. It bridges the gap between AI agents and Windows to perform tasks such as opening apps, clicking buttons, typing, executing shell commands, and capturing UI state without relying on traditional computer vision models. As a result, any large language model (LLM) can perform computer automation, rather than only models built specifically for that purpose.

openhands-aci

Agent-Computer Interface (ACI) for OpenHands is a deprecated repository that provided essential tools and interfaces for AI agents to interact with computer systems for software development tasks. It included a code editor interface, code linting capabilities, and utility functions for common operations. The package aimed to enhance software development agents' capabilities in editing code, managing configurations, analyzing code, and executing shell commands.

sandbox

AIO Sandbox is an all-in-one agent sandbox environment that combines Browser, Shell, File, MCP operations, and VSCode Server in a single Docker container. It provides a unified, secure execution environment for AI agents and developers, with features like unified file system, multiple interfaces, secure execution, zero configuration, and agent-ready MCP-compatible APIs. The tool allows users to run shell commands, perform file operations, automate browser tasks, and integrate with various development tools and services.

code-graph-rag

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students
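The rate-limit and cost-limit use cases can be illustrated with a short sketch. It is written in Python for brevity and does not reflect BricksLLM's actual Go implementation or API: `KeyPolicy`, `Gateway`, and `authorize` are hypothetical names, and the sliding 60-second window is an assumed policy.

```python
import time
from dataclasses import dataclass, field


@dataclass
class KeyPolicy:
    """Limits attached to one distributed API key (hypothetical structure)."""
    max_requests_per_minute: int
    max_total_cost_usd: float
    spent_usd: float = 0.0
    request_times: list = field(default_factory=list)


class Gateway:
    def __init__(self):
        self.policies = {}

    def register(self, api_key, policy):
        self.policies[api_key] = policy

    def authorize(self, api_key, estimated_cost_usd, now=None):
        """Return (allowed, reason) and record the request if it is allowed."""
        now = time.time() if now is None else now
        policy = self.policies.get(api_key)
        if policy is None:
            return False, "unknown key"
        # Keep only the requests made within the last 60 seconds.
        policy.request_times = [t for t in policy.request_times if now - t < 60]
        if len(policy.request_times) >= policy.max_requests_per_minute:
            return False, "rate limit exceeded"
        if policy.spent_usd + estimated_cost_usd > policy.max_total_cost_usd:
            return False, "cost limit exceeded"
        policy.request_times.append(now)
        policy.spent_usd += estimated_cost_usd
        return True, "ok"


gateway = Gateway()
gateway.register("student-key", KeyPolicy(max_requests_per_minute=2, max_total_cost_usd=1.0))
print(gateway.authorize("student-key", 0.4, now=0))  # (True, 'ok')
print(gateway.authorize("student-key", 0.4, now=1))  # (True, 'ok')
print(gateway.authorize("student-key", 0.1, now=2))  # (False, 'rate limit exceeded')
```

A production gateway would typically keep these counters in shared storage so that limits hold across multiple gateway instances.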

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.