VASA-1-hack

wip - running some training with overfitting - https://wandb.ai/snoozie/vasa-overfit

Stars: 295

VASA-1-hack is a repository containing the VASA implementation separated from EMOPortraits, with all components properly configured for standalone training. It provides detailed setup instructions, prerequisites, project structure, configuration details, running training modes, troubleshooting tips, monitoring training progress, development information, and acknowledgments. The repository aims to facilitate training volumetric avatar models with configurable parameters and logging levels for efficient debugging and testing.

README:

This repository contains the VASA implementation separated from EMOPortraits, with all components properly configured for standalone training.

- Clone the repository with submodules:

# Clone with submodules included

git clone --recurse-submodules https://github.com/johndpope/VASA-1-hack.git

cd VASA-1-hack

# Or if you already cloned without submodules:

git submodule update --init --recursive# Create conda environment

conda create -n vasa python=3.10

conda activate vasa

# Install PyTorch (adjust for your CUDA version)

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

# Install required packages

pip install omegaconf wandb opencv-python pillow scipy matplotlib tqdm

pip install transformers diffusers accelerate

pip install facenet-pytorch insightface hsemotion-onnx

pip install mediapipe

pip install l2cs memory-profiler rich

# EMOPortaits

cd nemo

bootstrap.sh

- Create necessary symlinks:

# Create symlink for repos (required for relative paths)

ln -s nemo/repos repos- Download pre-trained volumetric avatar model:

The pre-trained model should be placed in:

nemo/logs/Retrain_with_17_V1_New_rand_MM_SEC_4_drop_02_stm_10_CV_05_1_1/checkpoints/328_model.pth

- Prepare your training data:

# Create directories

mkdir -p junk cache checkpoints

# Place your training videos in the junk directory

# Videos should be .mp4 format

cp your_training_videos/*.mp4 junk/VASA-1-hack/

├── nemo/ # Git submodule: nemo repository (base EMOPortraits code)

│ ├── models/ # Model implementations

│ ├── networks/ # Network architectures

│ ├── losses/ # Loss functions

│ ├── datasets/ # Dataset loaders

│ ├── repos/ # External repositories (face_par_off, etc.)

│ └── logs/ # Pre-trained model checkpoints

│

├── vasa_*.py # VASA-specific implementations

│ ├── vasa_trainer.py # Main training script

│ ├── vasa_model.py # VASA model architecture

│ ├── vasa_dataset.py # VASA dataset handler

│ ├── vasa_scheduler.py # Diffusion scheduler

│ └── vasa_lip_normalizer.py # Lip normalization utilities

│

├── vasa_config.yaml # Main configuration file

├── video_tracker.py # Video tracking utilities

├── syncnet.py # Sync network implementation

│

├── data/ # Data files

│ └── aligned_keypoints_3d.npy

├── losses/ # Loss model weights

│ └── loss_model_weights/

├── junk/ # Training videos directory

├── cache/ # Cache for processed data

├── checkpoints/ # Model checkpoints

└── repos/ # Symlink to nemo/repos

Edit vasa_config.yaml to configure paths and training parameters:

paths:

volumetric_model: "nemo/logs/[...]/328_model.pth" # Pre-trained model

volumetric_config: "nemo/models/stage_1/volumetric_avatar/va.yaml"

data_dir: "data"

video_folder: "junk" # Your training videos directory

cache_dir: "cache"

checkpoint_dir: "checkpoints"

train:

batch_size: 1

num_epochs: 4000

lr: 1e-3

# ... other training parameterspython test_vasa_setup.pyExpected output:

✓ Config loaded successfully

✓ All paths exist

✓ All modules import correctly

✓ Setup looks good! You can now run vasa_trainer.py

Use the standard configuration for training on your complete dataset:

# Uses vasa_config.yaml by default

python vasa_trainer.py

# Or explicitly specify the config

python vasa_trainer.py --config vasa_config.yamlKey parameters in vasa_config.yaml:

-

window_size: 50- Full 50-frame windows -

n_layers: 8- Full 8 transformer layers -

num_steps: 1000- Full 1000 diffusion steps -

batch_size: 1- Adjust based on GPU memory -

num_epochs: 4000- Full training schedule

Use the overfitting configuration for rapid testing and debugging:

# Use the overfitting configuration

python vasa_trainer.py --config overfit_config.yamlKey differences in overfit_config.yaml:

-

window_size: 20- Smaller windows for faster processing -

n_layers: 2- Reduced transformer depth (2x-4x faster) -

num_steps: 100- Reduced diffusion steps (10x faster) -

batch_size: 4- Larger batch for better GPU utilization -

num_epochs: 100- Shorter training for quick iteration -

max_videos: 100- Limited dataset size -

num_workers: 8- Multi-threaded data loading - No augmentation - Pure overfitting test

When to use overfitting mode:

- Testing new model architectures

- Debugging training pipeline

- Verifying data loading and caching

- Quick convergence tests

- Checking if model can overfit to small dataset (sanity check)

Both training modes support WandB logging:

# View training progress

# Visit the URL printed at training start, e.g.:

# wandb: 🚀 View run at https://wandb.ai/your-username/vasa/runs/run-idFor overfitting mode, runs are grouped as "overfit-experiments" in WandB for easy comparison.

To use a different dataset (e.g., CelebV-HQ):

# Edit the config file or create a custom one

# Update video_folder path in the config:

# video_folder: "/path/to/your/dataset"

# For example, using CelebV-HQ:

# video_folder: "/media/12TB/Downloads/CelebV-HQ/celebvhq/35666"The trainer will:

- Load the pre-trained volumetric avatar model

- Process videos from the configured directory

- Cache processed windows for faster subsequent epochs

- Save checkpoints periodically based on

save_freq - Save checkpoints to

checkpoints/(orcheckpoints_overfit/for overfitting mode) - Log to Weights & Biases (if enabled)

| Parameter | Vanilla Training | Overfitting Mode | Speedup |

|---|---|---|---|

| Window Size | 50 frames | 20 frames | 2.5x |

| Transformer Layers | 8 | 2 | 4x |

| Diffusion Steps | 1000 | 100 | 10x |

| Batch Size | 1 | 4 | 4x |

| Workers | 0 | 8 | Parallel loading |

| Epoch Time (RTX 5090) | ~5 min | ~1.5 min | 3.3x |

| Convergence | 1000+ epochs | 10-20 epochs | 50x+ |

The project uses Python's logging module with three configurable levels defined in nemo/logger.py:28-30:

# log_level = logging.WARNING # Minimal output - only warnings and errors

log_level = logging.INFO # Standard output - informational messages (default)

# log_level = logging.DEBUG # Verbose output - detailed debugging informationLogging Levels Explained:

-

WARNING (

logging.WARNING)- Shows only warnings, errors, and critical messages

- Use when you want minimal console output during training

- Best for production runs where you only need to know about issues

-

INFO (

logging.INFO) - Currently Active- Shows informational messages, warnings, and errors

- Provides training progress, epoch updates, and key metrics

- Default and recommended level for normal training runs

- Balances visibility with readability

-

DEBUG (

logging.DEBUG)- Shows all messages including detailed debugging information

- Includes tensor shapes, gradient information, and internal state

- Use when troubleshooting model issues or understanding data flow

- Can be verbose - recommended only for debugging sessions

To change the logging level:

- Edit

nemo/logger.pyline 29 - Uncomment the desired level and comment out the others

- The change takes effect on next run

Additional Features:

- Logs are saved to

project.logfile for later review - Rich formatting with color-coded output and timestamps

- Third-party library logging is suppressed to reduce noise

- TorchDebugger class available for advanced PyTorch debugging

-

ModuleNotFoundError: No module named 'logger'

# The logger module is in nemo, paths are already configured # If still having issues, check that nemo is cloned properly

-

FileNotFoundError: './repos/face_par_off/res/cp/79999_iter.pth'

# Ensure the symlink exists: ln -s nemo/repos repos -

ValueError: num_samples should be a positive integer value, but got num_samples=0

# No videos found. Add videos to junk/ directory: cp your_video.mp4 junk/ -

FileNotFoundError: Config file not found at channel_config.yaml

# Copy from EMOPortraits or create a basic one -

CUDA out of memory

- Reduce

batch_sizein vasa_config.yaml - Enable gradient checkpointing

- Reduce

sequence_lengthin dataset config

- Reduce

-

FFmpeg warnings

- These can be safely ignored if not processing audio

- To fix:

pip install ffmpeg-python

If you're missing files, you'll need these from EMOPortraits:

-

channel_config.yaml- Channel configuration -

syncnet.py- Sync network implementation -

data/aligned_keypoints_3d.npy- 3D keypoint alignments -

losses/loss_model_weights/*.pth- Pre-trained loss models - Pre-trained volumetric avatar checkpoint

Training progress is logged to:

- Console: Real-time training metrics

- Weights & Biases: Detailed metrics and visualizations (if enabled)

-

Checkpoints: Saved every N epochs to

checkpoints/

Monitor training:

# Watch training logs

tail -f project.log

# Check W&B dashboard

# https://wandb.ai/YOUR_USERNAME/vasa/-

VASA-specific code: Root directory (

vasa_*.py) -

Base EMOPortraits code:

nemo/directory -

Configuration:

vasa_config.yaml -

Training data:

junk/directory -

Model outputs:

checkpoints/directory

- Separated VASA components from EMOPortraits codebase

- Fixed all hardcoded paths to be relative or configurable

- Proper module imports with sys.path management

- Configurable paths via vasa_config.yaml

- Auto-detection of project directories in nemo code

- Clean separation between VASA-specific and base code

Update nemo to latest version:

cd nemo

git pull origin main

cd ..

git add nemo

git commit -m "Update nemo submodule to latest"Lock to specific nemo version:

cd nemo

git checkout <commit-hash>

cd ..

git add nemo

git commit -m "Lock nemo to specific version"- The volumetric model must be pre-trained (from EMOPortraits)

- Training requires at least one video in the

junk/directory - All paths in configs are relative to the project root

- The

repossymlink is required for backward compatibility

- Training requires significant GPU memory (recommended: 24GB+)

- Some imports show FFmpeg warnings (can be ignored)

- Initial dataset processing can be slow (cached afterward)

This project is licensed under the MIT License - see the LICENSE file for details.

Note: The nemo submodule and other dependencies may have their own licenses.

- EMOPortraits team for the base implementation

- VASA paper authors for the architecture design

- Contributors to the nemo repository

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for VASA-1-hack

Similar Open Source Tools

VASA-1-hack

VASA-1-hack is a repository containing the VASA implementation separated from EMOPortraits, with all components properly configured for standalone training. It provides detailed setup instructions, prerequisites, project structure, configuration details, running training modes, troubleshooting tips, monitoring training progress, development information, and acknowledgments. The repository aims to facilitate training volumetric avatar models with configurable parameters and logging levels for efficient debugging and testing.

code-graph-rag

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

comp

Comp AI is an open-source compliance automation platform designed to assist companies in achieving compliance with standards like SOC 2, ISO 27001, and GDPR. It transforms compliance into an engineering problem solved through code, automating evidence collection, policy management, and control implementation while maintaining data and infrastructure control.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

one

ONE is a modern web and AI agent development toolkit that empowers developers to build AI-powered applications with high performance, beautiful UI, AI integration, responsive design, type safety, and great developer experience. It is perfect for building modern web applications, from simple landing pages to complex AI-powered platforms.

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

VimLM

VimLM is an AI-powered coding assistant for Vim that integrates AI for code generation, refactoring, and documentation directly into your Vim workflow. It offers native Vim integration with split-window responses and intuitive keybindings, offline first execution with MLX-compatible models, contextual awareness with seamless integration with codebase and external resources, conversational workflow for iterating on responses, project scaffolding for generating and deploying code blocks, and extensibility for creating custom LLM workflows with command chains.

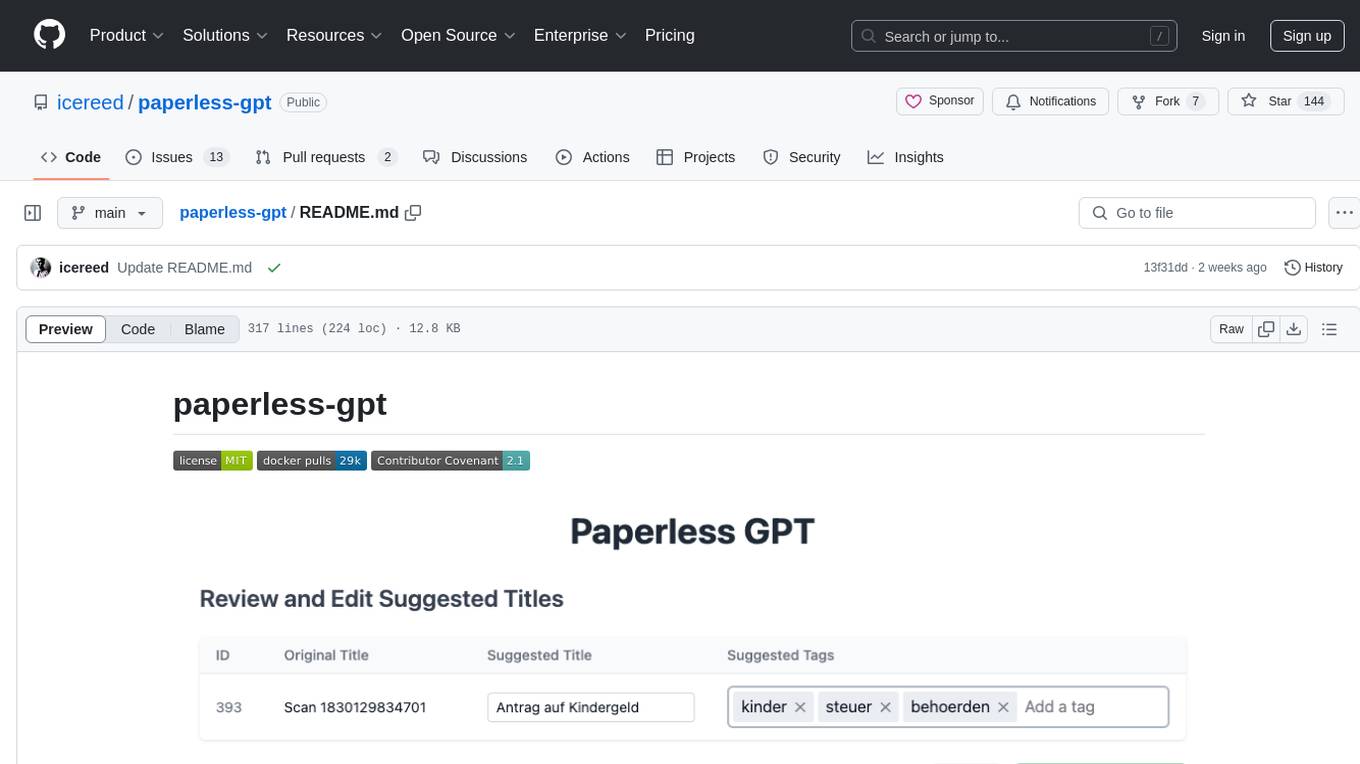

paperless-gpt

paperless-gpt is a tool designed to generate accurate and meaningful document titles and tags for paperless-ngx using Large Language Models (LLMs). It supports multiple LLM providers, including OpenAI and Ollama. With paperless-gpt, you can streamline your document management by automatically suggesting appropriate titles and tags based on the content of your scanned documents. The tool offers features like multiple LLM support, customizable prompts, easy integration with paperless-ngx, user-friendly interface for reviewing and applying suggestions, dockerized deployment, automatic document processing, and an experimental OCR feature.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

TermNet

TermNet is an AI-powered terminal assistant that connects a Large Language Model (LLM) with shell command execution, browser search, and dynamically loaded tools. It streams responses in real-time, executes tools one at a time, and maintains conversational memory across steps. The project features terminal integration for safe shell command execution, dynamic tool loading without code changes, browser automation powered by Playwright, WebSocket architecture for real-time communication, a memory system to track planning and actions, streaming LLM output integration, a safety layer to block dangerous commands, dual interface options, a notification system, and scratchpad memory for persistent note-taking. The architecture includes a multi-server setup with servers for WebSocket, browser automation, notifications, and web UI. The project structure consists of core backend files, various tools like web browsing and notification management, and servers for browser automation and notifications. Installation requires Python 3.9+, Ollama, and Chromium, with setup steps provided in the README. The tool can be used via the launcher for managing components or directly by starting individual servers. Additional tools can be added by registering them in `toolregistry.json` and implementing them in Python modules. Safety notes highlight the blocking of dangerous commands, allowed risky commands with warnings, and the importance of monitoring tool execution and setting appropriate timeouts.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can request multiple AI simultaneously to generate git commit messages without waiting for all AI responses. The tool runs 'git diff' to grab code changes, sends them to configured AI, and returns the AI-generated commit message. Users can set API keys or Cookies for different providers and configure options like locale, generate number of messages, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

ck

ck (seek) is a semantic grep tool that finds code by meaning, not just keywords. It replaces traditional grep by understanding the user's search intent. It allows users to search for code based on concepts like 'error handling' and retrieves relevant code even if the exact keywords are not present. ck offers semantic search, drop-in grep compatibility, hybrid search combining keyword precision with semantic understanding, agent-friendly output in JSONL format, smart file filtering, and various advanced features. It supports multiple search modes, relevance scoring, top-K results, and smart exclusions. Users can index projects for semantic search, choose embedding models, and search specific files or directories. The tool is designed to improve code search efficiency and accuracy for developers and AI agents.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

TranslateBookWithLLM

TranslateBookWithLLM is a Python application designed for large-scale text translation, such as entire books (.EPUB), subtitle files (.SRT), and plain text. It leverages local LLMs via the Ollama API or Gemini API. The tool offers both a web interface for ease of use and a command-line interface for advanced users. It supports multiple format translations, provides a user-friendly browser-based interface, CLI support for automation, multiple LLM providers including local Ollama models and Google Gemini API, and Docker support for easy deployment.

zcf

ZCF (Zero-Config Claude-Code Flow) is a tool that provides zero-configuration, one-click setup for Claude Code with bilingual support, intelligent agent system, and personalized AI assistant. It offers an interactive menu for easy operations and direct commands for quick execution. The tool supports bilingual operation with automatic language switching and customizable AI output styles. ZCF also includes features like BMad Workflow for enterprise-grade workflow system, Spec Workflow for structured feature development, CCR (Claude Code Router) support for proxy routing, and CCometixLine for real-time usage tracking. It provides smart installation, complete configuration management, and core features like professional agents, command system, and smart configuration. ZCF is cross-platform compatible, supports Windows and Termux environments, and includes security features like dangerous operation confirmation mechanism.

For similar tasks

VASA-1-hack

VASA-1-hack is a repository containing the VASA implementation separated from EMOPortraits, with all components properly configured for standalone training. It provides detailed setup instructions, prerequisites, project structure, configuration details, running training modes, troubleshooting tips, monitoring training progress, development information, and acknowledgments. The repository aims to facilitate training volumetric avatar models with configurable parameters and logging levels for efficient debugging and testing.

FinRL_DeepSeek

FinRL-DeepSeek is a project focusing on LLM-infused risk-sensitive reinforcement learning for trading agents. It provides a framework for training and evaluating trading agents in different market conditions using deep reinforcement learning techniques. The project integrates sentiment analysis and risk assessment to enhance trading strategies in both bull and bear markets. Users can preprocess financial news data, add LLM signals, and train agent-ready datasets for PPO and CPPO algorithms. The project offers specific training and evaluation environments for different agent configurations, along with detailed instructions for installation and usage.

For similar jobs

Qwen-TensorRT-LLM

Qwen-TensorRT-LLM is a project developed for the NVIDIA TensorRT Hackathon 2023, focusing on accelerating inference for the Qwen-7B-Chat model using TRT-LLM. The project offers various functionalities such as FP16/BF16 support, INT8 and INT4 quantization options, Tensor Parallel for multi-GPU parallelism, web demo setup with gradio, Triton API deployment for maximum throughput/concurrency, fastapi integration for openai requests, CLI interaction, and langchain support. It supports models like qwen2, qwen, and qwen-vl for both base and chat models. The project also provides tutorials on Bilibili and blogs for adapting Qwen models in NVIDIA TensorRT-LLM, along with hardware requirements and quick start guides for different model types and quantization methods.

dl_model_infer

This project is a c++ version of the AI reasoning library that supports the reasoning of tensorrt models. It provides accelerated deployment cases of deep learning CV popular models and supports dynamic-batch image processing, inference, decode, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend reasoning classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and onnx downloads are available for different models.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.

awesome-RK3588

RK3588 is a flagship 8K SoC chip by Rockchip, integrating Cortex-A76 and Cortex-A55 cores with NEON coprocessor for 8K video codec. This repository curates resources for developing with RK3588, including official resources, RKNN models, projects, development boards, documentation, tools, and sample code.

cl-waffe2

cl-waffe2 is an experimental deep learning framework in Common Lisp, providing fast, systematic, and customizable matrix operations, reverse mode tape-based Automatic Differentiation, and neural network model building and training features accelerated by a JIT Compiler. It offers abstraction layers, extensibility, inlining, graph-level optimization, visualization, debugging, systematic nodes, and symbolic differentiation. Users can easily write extensions and optimize their networks without overheads. The framework is designed to eliminate barriers between users and developers, allowing for easy customization and extension.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

depthai

This repository contains a demo application for DepthAI, a tool that can load different networks, create pipelines, record video, and more. It provides documentation for installation and usage, including running programs through Docker. Users can explore DepthAI features via command line arguments or a clickable QT interface. Supported models include various AI models for tasks like face detection, human pose estimation, and object detection. The tool collects anonymous usage statistics by default, which can be disabled. Users can report issues to the development team for support and troubleshooting.