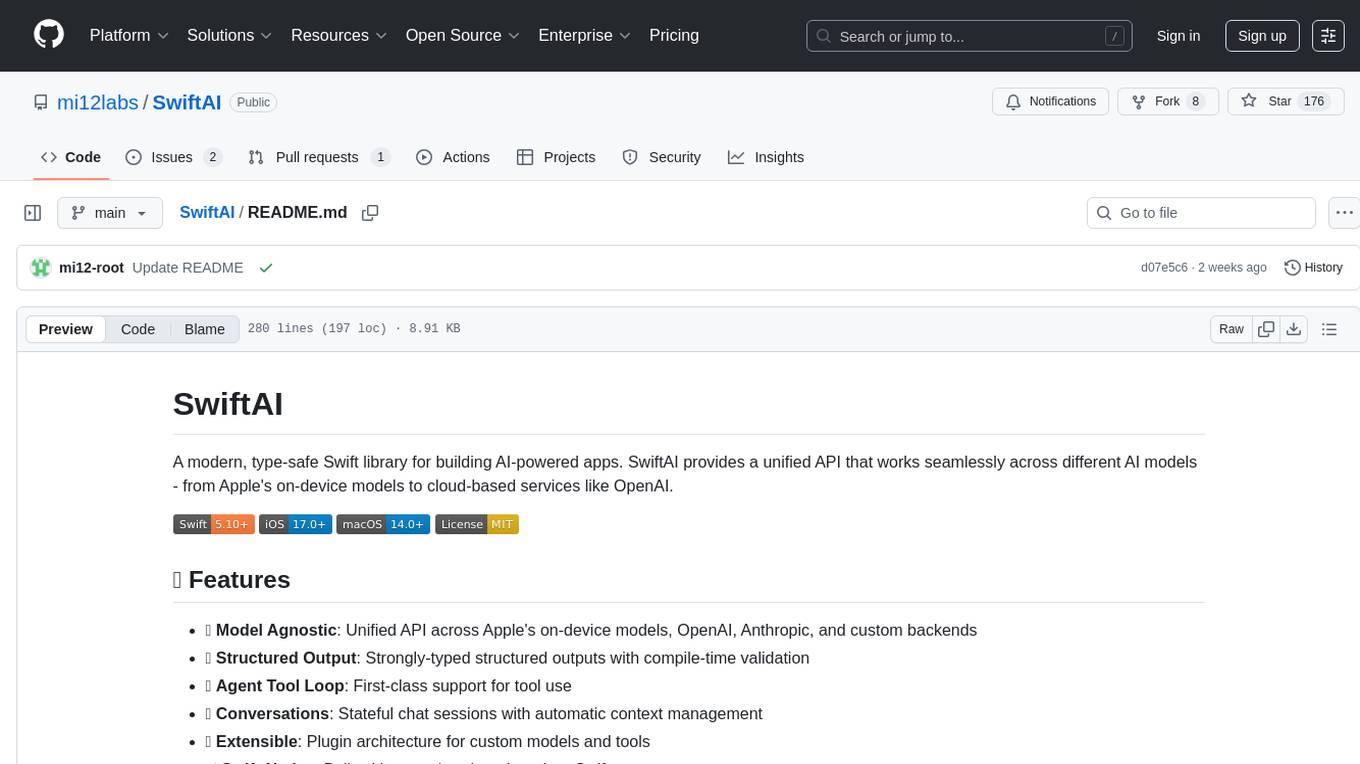

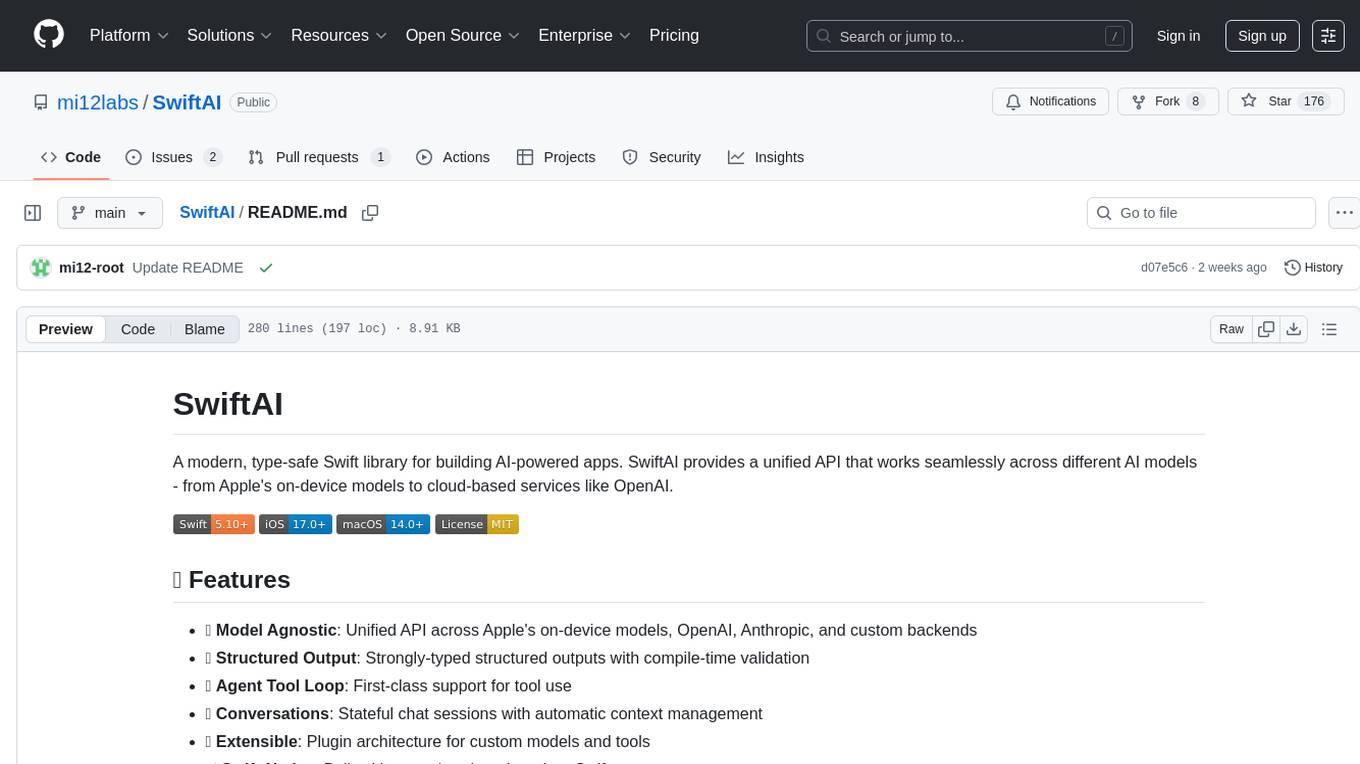

SwiftAI

Build beautiful and reliable LLM apps on iOS and macOS

Stars: 201

SwiftAI is a modern, type-safe Swift library for building AI-powered apps. It provides a unified API that works seamlessly across different AI models, including Apple's on-device models and cloud-based services like OpenAI. With features like model agnosticism, structured output, agent tool loop, conversations, extensibility, and Swift-native design, SwiftAI offers a powerful toolset for developers to integrate AI capabilities into their applications. The library supports easy installation via Swift Package Manager and offers detailed guidance on getting started, structured responses, tool use, model switching, conversations, and advanced constraints. SwiftAI aims to simplify AI integration by providing a type-safe and versatile solution for various AI tasks.

README:

A modern, type-safe Swift library for building AI-powered apps. SwiftAI provides a unified API that works seamlessly across different AI models - from Apple's on-device models to cloud-based services like OpenAI.

- 🤖 Model Agnostic: Unified API across Apple's on-device models, OpenAI, MLX, and custom backends

- 🎯 Structured Output: Strongly-typed structured outputs with compile-time validation

- 🔧 Agent Tool Loop: First-class support for tool use

- 💬 Conversations: Stateful chat sessions with automatic context management

- 🏗️ Extensible: Plugin architecture for custom models and tools

- ⚡ Swift-Native: Built with async/await and modern Swift concurrency

```swift
import SwiftAI

let llm = SystemLLM()
let response = try await llm.reply(to: "What is the capital of France?")
print(response.content) // "Paris"
```

Xcode:

- Go to File → Add Package Dependencies
- Enter: https://github.com/mi12labs/SwiftAI
- Click Add Package

Package.swift (non-Xcode):

```swift
dependencies: [
    // `from:` expects a version number; use `branch:` to track the main branch.
    .package(url: "https://github.com/mi12labs/SwiftAI", branch: "main")
]
```

Start with the simplest possible example. Just ask a question and get an answer:

```swift
import SwiftAI

// Initialize Apple's on-device language model.
let llm = SystemLLM()

// Ask a question and get a response.
let response = try await llm.reply(to: "What is the capital of France?")
print(response.content) // "Paris"
```

What just happened?

- `SystemLLM()` creates Apple's on-device AI model
- `reply(to:)` sends your question and, by default, returns a response whose content is a `String`
- `try await` handles the asynchronous AI processing
- The answer is wrapped in a `response` object; use `.content` to get the actual text

Instead of getting plain text, let's get structured data that your app can use directly:

```swift
// Define the structure you want back
@Generable
struct CityInfo {
    let name: String
    let country: String
    let population: Int
}

let response = try await llm.reply(
    to: "Tell me about Tokyo",
    returning: CityInfo.self // Tell the LLM what to output
)

let cityInfo = response.content
print(cityInfo.name)       // "Tokyo"
print(cityInfo.country)    // "Japan"
print(cityInfo.population) // 13960000
```

What's new here?

- `@Generable` tells SwiftAI this struct can be generated by AI
- `returning: CityInfo.self` specifies you want structured data, not a string
- SwiftAI automatically converts the AI's response into your struct
- No JSON parsing required!

SwiftAI ensures the AI returns data in exactly the format your code expects. If the AI can't generate valid data, you'll get an error instead of broken data.
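To make the "error instead of broken data" contract concrete, here is a minimal sketch of the same idea using Foundation's `Codable`: raw model output is decoded into `CityInfo`, and anything that does not match the struct throws. This is an analogy under stated assumptions, not how SwiftAI works internally (the README does not say, and `@Generable` types may be produced by guided generation rather than JSON decoding). `parseCityInfo` is a hypothetical helper.

```swift
import Foundation

// Mirrors the README's CityInfo, but uses Codable instead of @Generable
// so the sketch runs without SwiftAI.
struct CityInfo: Codable, Equatable {
    let name: String
    let country: String
    let population: Int
}

// Hypothetical helper: turns raw model output into a typed value or throws.
// A malformed or incomplete payload surfaces as a DecodingError,
// never as a half-filled struct.
func parseCityInfo(_ raw: String) throws -> CityInfo {
    let data = Data(raw.utf8)
    return try JSONDecoder().decode(CityInfo.self, from: data)
}

let good = #"{"name": "Tokyo", "country": "Japan", "population": 13960000}"#
let bad = #"{"name": "Tokyo", "population": "lots"}"#  // wrong type, missing field

do {
    let city = try parseCityInfo(good)
    print(city.name, city.population)
} catch {
    print("unexpected:", error)
}

do {
    _ = try parseCityInfo(bad)
} catch {
    print("rejected invalid output")
}
```

The caller only ever sees a complete `CityInfo` or an error, which is the guarantee the paragraph describes.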

Let your AI call functions in your app to get real-time information:

```swift
// Create a tool the AI can use
struct WeatherTool: Tool {
    let description = "Get current weather for a city"

    @Generable
    struct Arguments {
        let city: String
    }

    func call(arguments: Arguments) async throws -> String {
        // Your weather API logic here
        return "It's 72°F and sunny in \(arguments.city)"
    }
}

// Use the tool with your AI
let weatherTool = WeatherTool()
let response = try await llm.reply(
    to: "What's the weather like in San Francisco?",
    tools: [weatherTool]
)
print(response.content) // "Based on current data, it's 72°F and sunny in San Francisco"
```

What's new here?

- The `Tool` protocol lets you create functions the AI can call
- The `Arguments` struct defines what parameters your tool needs (it is also `@Generable`)
- The AI automatically decides when to call your tool
- You get back a natural-language response that incorporates the tool's data

The AI reads your tool's description and automatically decides whether to call it. You don't manually trigger tools - the AI does it when needed.
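The loop behind this behavior can be sketched in plain Swift. The sketch assumes a scripted stand-in for the model (`ScriptedModel`, hypothetical) that either requests a tool call or returns a final answer; the loop runs the requested tool, appends its output to the transcript, and asks the model again. SwiftAI's own loop and types are not shown in the README, so every name here is illustrative.

```swift
// What the (stand-in) model can ask for on each step.
enum ModelStep {
    case callTool(name: String, argument: String)
    case finalAnswer(String)
}

// Hypothetical tool shape: a name the model refers to and a synchronous body.
struct SimpleTool {
    let name: String
    let run: (String) -> String
}

// Scripted stand-in for an LLM: asks for the weather once, then answers
// using whatever the last transcript entry (the tool output) says.
struct ScriptedModel {
    func next(transcript: [String]) -> ModelStep {
        if let toolOutput = transcript.last, transcript.count > 1 {
            return .finalAnswer("Based on current data, \(toolOutput)")
        }
        return .callTool(name: "weather", argument: "San Francisco")
    }
}

// The agent loop: keep dispatching tool calls until the model answers,
// with a step limit so a misbehaving model cannot loop forever.
func runAgent(prompt: String, model: ScriptedModel, tools: [SimpleTool], maxSteps: Int = 5) -> String? {
    var transcript = [prompt]
    for _ in 0..<maxSteps {
        switch model.next(transcript: transcript) {
        case .finalAnswer(let text):
            return text
        case .callTool(let name, let argument):
            guard let tool = tools.first(where: { $0.name == name }) else {
                transcript.append("error: unknown tool \(name)")
                continue
            }
            transcript.append(tool.run(argument))
        }
    }
    return nil  // gave up after maxSteps
}

let weather = SimpleTool(name: "weather") { city in "it's 72°F and sunny in \(city)" }
let answer = runAgent(prompt: "What's the weather like in San Francisco?",
                      model: ScriptedModel(), tools: [weather])
print(answer ?? "no answer")
```

Feeding tool errors back into the transcript, rather than throwing, lets the model recover on the next step; the step limit is a safety net either way.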

Different AI models have different strengths. SwiftAI makes switching seamless:

```swift
// Choose your model based on availability
let llm: any LLM = {
    let systemLLM = SystemLLM()
    return systemLLM.isAvailable ? systemLLM : OpenaiLLM(apiKey: "your-api-key")
}()

// Same code works with any model
let response = try await llm.reply(to: "Write a haiku about Berlin.")
print(response.content)
```

What's new here?

- `SystemLLM` runs on-device (private, fast, free)
- `OpenaiLLM` uses the cloud (more capable, requires an API key)
- `isAvailable` checks whether the on-device model is ready
- The same `reply()` method works with any LLM

Your code doesn't change when you switch models. This lets you optimize for different scenarios (privacy, capabilities, cost) without rewriting your app.
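The `isAvailable` fallback generalizes to any number of backends. Below is a minimal sketch with a hypothetical `TextModel` protocol and two stub models standing in for `SystemLLM` and `OpenaiLLM`; it does not use SwiftAI's real `LLM` protocol, whose requirements the README does not list. Callers depend only on the protocol, so the selection function is the one place that knows which backend is in use.

```swift
// Hypothetical protocol standing in for SwiftAI's `LLM`.
protocol TextModel {
    var name: String { get }
    var isAvailable: Bool { get }
    func reply(to prompt: String) -> String
}

// Stub for an on-device model whose availability varies.
struct OnDeviceStub: TextModel {
    let name = "on-device"
    let isAvailable: Bool
    func reply(to prompt: String) -> String { "[\(name)] \(prompt)" }
}

// Stub for a cloud model that is always reachable.
struct CloudStub: TextModel {
    let name = "cloud"
    let isAvailable = true
    func reply(to prompt: String) -> String { "[\(name)] \(prompt)" }
}

// Pick the first available model in preference order, falling back to the last.
func chooseModel(_ candidates: [any TextModel]) -> any TextModel {
    precondition(!candidates.isEmpty, "need at least one model")
    return candidates.first(where: { $0.isAvailable }) ?? candidates[candidates.count - 1]
}

let selected = chooseModel([OnDeviceStub(isAvailable: false), CloudStub()])
print(selected.reply(to: "Write a haiku about Berlin."))  // prints [cloud] Write a haiku about Berlin.
```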

For multi-turn conversations, use Chat to maintain context across messages:

```swift
// Create a chat with tools
let chat = try Chat(with: llm, tools: [weatherTool])

// Have a conversation
let greeting = try await chat.send("Hello! I'm planning a trip.")
let advice = try await chat.send("What should I pack for Seattle?")
// The AI remembers context from previous messages
```

What's new here?

- `Chat` maintains conversation history automatically
- `send()` is like `reply()` but remembers previous messages
- Tools work in conversations too
- The AI remembers context from earlier in the conversation
- `reply()` is stateless: each call is independent
- `Chat` is stateful: it builds on the previous conversation
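A minimal sketch of the stateless/stateful distinction, assuming a chat session that simply keeps an array of messages and passes the whole transcript to the model on every turn. `MiniChat`, `Message`, and the `CountingModel` stub are hypothetical; the README does not describe how `Chat` stores or trims its history.

```swift
struct Message {
    enum Role { case user, assistant }
    let role: Role
    let text: String
}

// Stub model: its reply reports how much context it was given.
struct CountingModel {
    func reply(history: [Message]) -> String {
        "I have seen \(history.count) message(s)"
    }
}

// Stateful wrapper: every send() appends to the history, and the model
// always receives the full transcript, including its own earlier answers.
final class MiniChat {
    private(set) var history: [Message] = []
    let model: CountingModel

    init(model: CountingModel) { self.model = model }

    func send(_ text: String) -> String {
        history.append(Message(role: .user, text: text))
        let answer = model.reply(history: history)
        history.append(Message(role: .assistant, text: answer))
        return answer
    }
}

let model = CountingModel()
// Stateless: the call sees only the single message it was given.
print(model.reply(history: [Message(role: .user, text: "Hello!")]))  // I have seen 1 message(s)

let chat = MiniChat(model: model)
print(chat.send("Hello! I'm planning a trip."))     // I have seen 1 message(s)
print(chat.send("What should I pack for Seattle?")) // I have seen 3 message(s)
```

Because the transcript grows with every turn, a real implementation eventually has to summarize or drop old messages to fit the model's context window.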

Add validation rules and descriptions to guide AI generation:

```swift
@Generable
struct UserProfile {
    @Guide(description: "A valid username starting with a letter", .pattern("^[a-zA-Z][a-zA-Z0-9_]{2,}$"))
    let username: String

    @Guide(description: "User age in years", .minimum(13), .maximum(120))
    let age: Int

    @Guide(description: "One to three favorite colors", .minimumCount(1), .maximumCount(3))
    let favoriteColors: [String]
}
```

What's new here?

- `@Guide` adds constraints and descriptions to fields, which help the LLM generate good content
- `.pattern()` tells the LLM to follow a regex
- `.minimum()` and `.maximum()` constrain numbers
- `.minimumCount()` and `.maximumCount()` control array sizes

Constraints ensure the AI follows your business rules.
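To see exactly what the three `@Guide` constraints allow, here is a plain-Swift check of the same rules applied after the fact. This is only an illustration: per the list above, SwiftAI hands the constraints to the LLM to guide generation, and the README does not say whether values are also re-validated afterward. `violations(username:age:favoriteColors:)` and `isValidUsername` are hypothetical helpers, and the username check hand-codes the pattern `^[a-zA-Z][a-zA-Z0-9_]{2,}$` for ASCII input.

```swift
// Hand-coded equivalent of ^[a-zA-Z][a-zA-Z0-9_]{2,}$ (ASCII only):
// a letter, then at least two letters, digits, or underscores.
func isValidUsername(_ s: String) -> Bool {
    let chars = Array(s)
    guard chars.count >= 3, let first = chars.first,
          first.isASCII, first.isLetter else { return false }
    return chars.dropFirst().allSatisfy { c in
        c.isASCII && (c.isLetter || c.isNumber || c == "_")
    }
}

// Returns the names of the fields that break their constraint.
func violations(username: String, age: Int, favoriteColors: [String]) -> [String] {
    var problems: [String] = []
    if !isValidUsername(username) { problems.append("username") }
    if !(13...120).contains(age) { problems.append("age") }                          // .minimum(13), .maximum(120)
    if !(1...3).contains(favoriteColors.count) { problems.append("favoriteColors") }  // .minimumCount(1), .maximumCount(3)
    return problems
}

print(violations(username: "swift_fan", age: 30, favoriteColors: ["blue"]))  // []
print(violations(username: "9lives", age: 8, favoriteColors: []))            // ["username", "age", "favoriteColors"]
```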

The MLX backend provides access to local language models through Apple's MLX framework.

Setup:

```swift
// Add SwiftAIMLX to your target in Package.swift
targets: [
    .target(
        name: "YourTarget",
        dependencies: [
            .product(name: "SwiftAI", package: "SwiftAI"),
            .product(name: "SwiftAIMLX", package: "SwiftAI") // 👈 Add this
        ]
    )
]
```

Usage:

```swift
import SwiftAI
import SwiftAIMLX
import MLXLLM

// The model manager handles MLX models within an app instance.
// Responsibilities:
// - Downloading models from Hugging Face (if not already on disk)
// - Caching model weights in memory
// - Sharing model weights across the app instance
let modelManager = MlxModelManager(storageDirectory: .documentsDirectory)

// Create an LLM with a specific configuration.
// Available configurations are listed in `LLMRegistry` (from MLXLLM).
//
// If the model is not yet available locally, it will be automatically
// downloaded from Hugging Face on first use.
let llm = modelManager.llm(withConfiguration: LLMRegistry.gemma3n_E2B_it_lm_4bit)

// Use the same API as with other LLM backends.
let response = try await llm.reply(to: "Hello!")
print(response.content)
```

Note: Structured output generation is not yet supported with MLX models.
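The comments in the usage example describe `MlxModelManager` as loading weights once and sharing them across the app instance. A minimal sketch of that caching policy follows, with a hypothetical `WeightCache` and a loader closure standing in for the real download-and-load step; the actual `MlxModelManager` internals are not shown in the README, and the model ID string is an arbitrary example key.

```swift
// Stand-in for loaded model weights (a class, so instances can be shared).
final class Weights {
    let modelID: String
    init(modelID: String) { self.modelID = modelID }
}

// Hypothetical cache: the loader runs at most once per model ID, and every
// later caller receives the same shared instance.
final class WeightCache {
    private var loaded: [String: Weights] = [:]
    private let load: (String) -> Weights
    private(set) var loadCount = 0

    init(load: @escaping (String) -> Weights) { self.load = load }

    func weights(for modelID: String) -> Weights {
        if let cached = loaded[modelID] { return cached }
        loadCount += 1
        let fresh = load(modelID)  // in a real app: download if missing, then read from disk
        loaded[modelID] = fresh
        return fresh
    }
}

let cache = WeightCache { id in Weights(modelID: id) }
let a = cache.weights(for: "example-model")
let b = cache.weights(for: "example-model")
print(a === b, cache.loadCount)  // true 1
```

This version is not thread-safe; a real app would guard the dictionary with an actor or a lock, since several parts of the app may request the same model concurrently.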

| What You Want | What To Use | Example |
|---|---|---|
| Simple text response | `reply(to:)` | `reply(to: "Hello")` |
| Structured data | `reply(to:returning:)` | `reply(to: "...", returning: MyStruct.self)` |
| Function calling | `reply(to:tools:)` | `reply(to: "...", tools: [myTool])` |
| Conversation | `Chat` | `chat.send("Hello")` |
| Model switching | `any LLM` | `SystemLLM()` or `OpenaiLLM()` |

| Model | Type | Privacy | Capabilities | Cost |
|---|---|---|---|---|
| SystemLLM | On-device | 🔒 Private | Good | 🆓 Free |
| OpenaiLLM | Cloud API | | Excellent | 💰 Paid |
| MlxLLM | On-device | 🔒 Private | Excellent | 🆓 Free |
| CustomLLM | Your choice | Your choice | Your choice | Your choice |

| Feature | Status |

|---|---|

| Structured outputs for enums | ❌ #issue |

We welcome contributions! Please read our Contributing Guidelines.

```bash
git clone https://github.com/your-org/SwiftAI.git
cd SwiftAI
swift build
swift test
```

SwiftAI is released under the MIT License. See LICENSE for details.

SwiftAI is in alpha 🚧: expect rough edges and breaking changes.

Built with ❤️ for the Swift community


Alternative AI tools for SwiftAI

Similar Open Source Tools


quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

oxylabs-mcp

The Oxylabs MCP Server acts as a bridge between AI models and the web, providing clean, structured data from any site. It enables scraping of URLs, rendering JavaScript-heavy pages, content extraction for AI use, bypassing anti-scraping measures, and accessing geo-restricted web data from 195+ countries. The implementation utilizes the Model Context Protocol (MCP) to facilitate secure interactions between AI assistants and web content. Key features include scraping content from any site, automatic data cleaning and conversion, bypassing blocks and geo-restrictions, flexible setup with cross-platform support, and built-in error handling and request management.

dive

Dive is an AI toolkit for Go that enables the creation of specialized teams of AI agents and seamless integration with leading LLMs. It offers a CLI and APIs for easy integration, with features like creating specialized agents, hierarchical agent systems, declarative configuration, multiple LLM support, extended reasoning, model context protocol, advanced model settings, tools for agent capabilities, tool annotations, streaming, CLI functionalities, thread management, confirmation system, deep research, and semantic diff. Dive also provides semantic diff analysis, unified interface for LLM providers, tool system with annotations, custom tool creation, and support for various verified models. The toolkit is designed for developers to build AI-powered applications with rich agent capabilities and tool integrations.

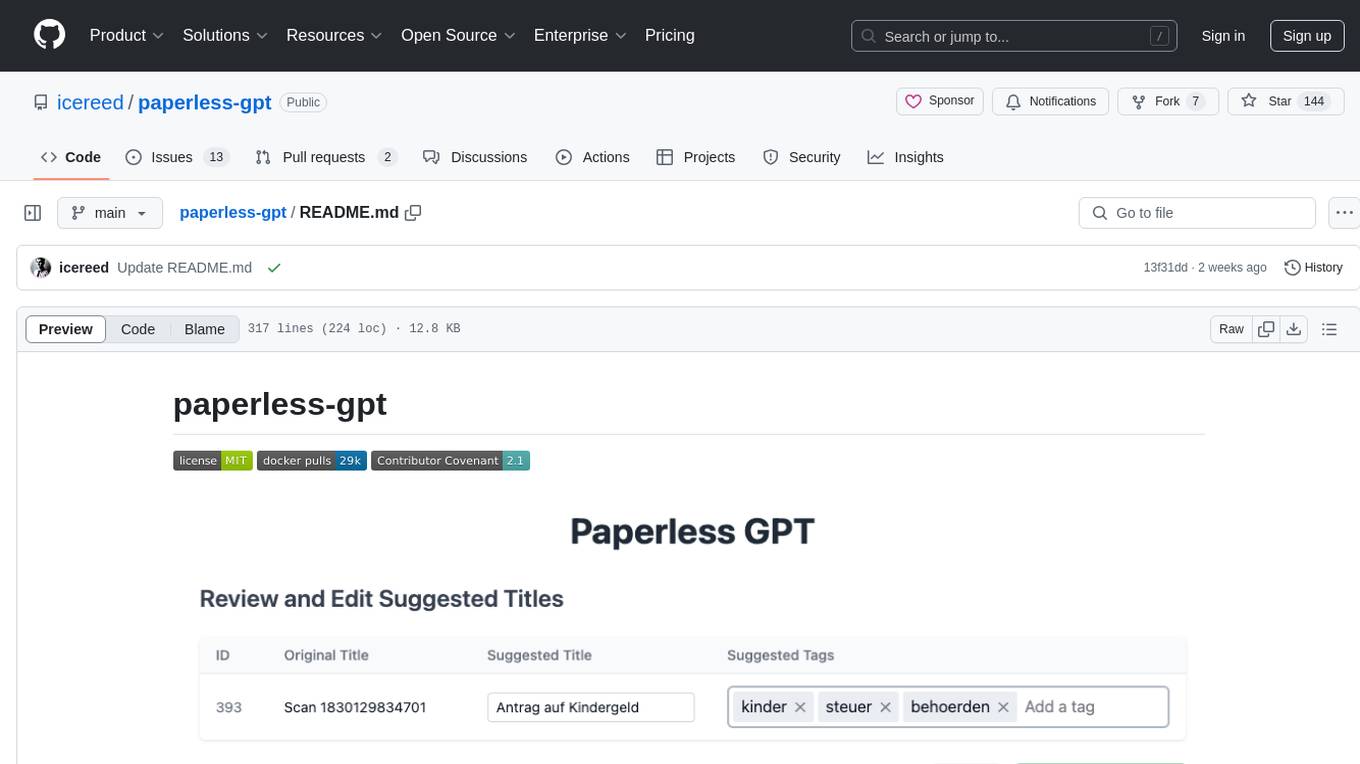

paperless-gpt

paperless-gpt is a tool designed to generate accurate and meaningful document titles and tags for paperless-ngx using Large Language Models (LLMs). It supports multiple LLM providers, including OpenAI and Ollama. With paperless-gpt, you can streamline your document management by automatically suggesting appropriate titles and tags based on the content of your scanned documents. The tool offers features like multiple LLM support, customizable prompts, easy integration with paperless-ngx, user-friendly interface for reviewing and applying suggestions, dockerized deployment, automatic document processing, and an experimental OCR feature.

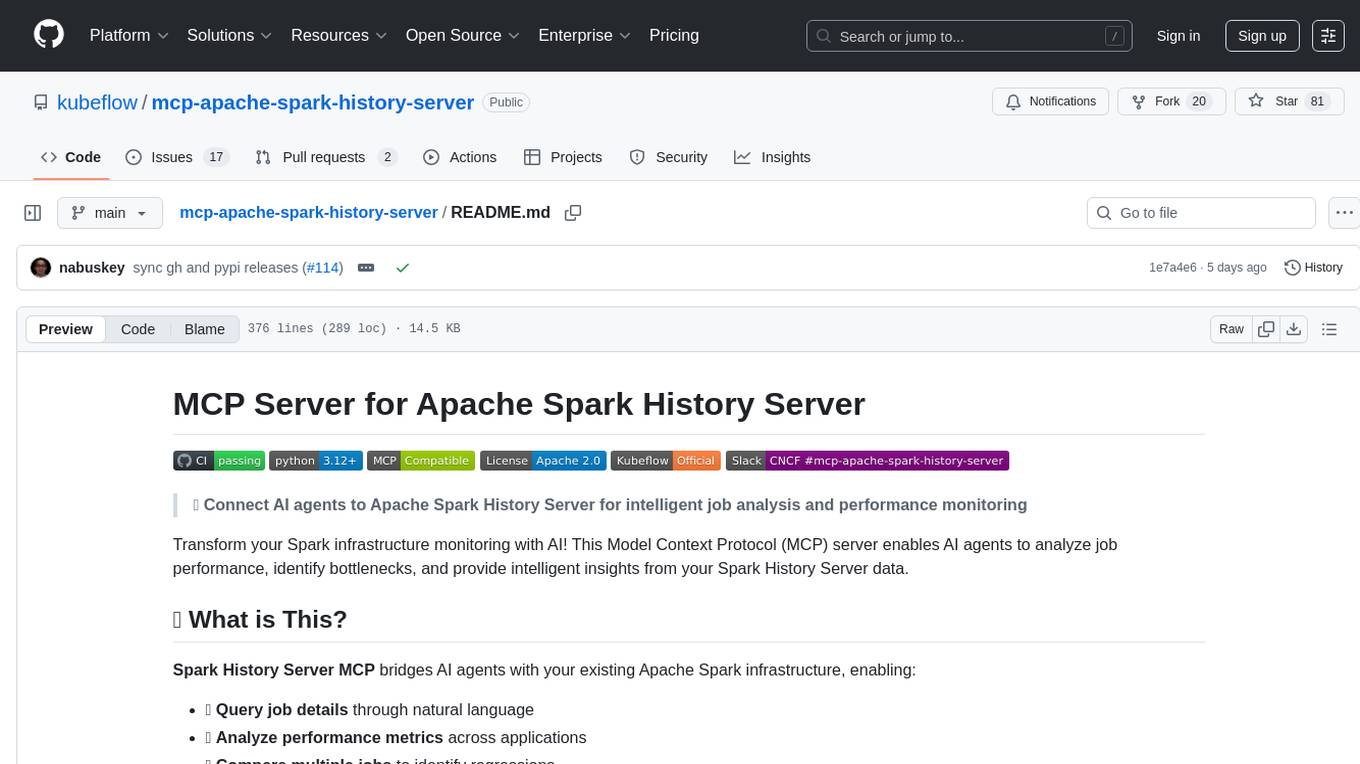

mcp-apache-spark-history-server

The MCP Server for Apache Spark History Server is a tool that connects AI agents to Apache Spark History Server for intelligent job analysis and performance monitoring. It enables AI agents to analyze job performance, identify bottlenecks, and provide insights from Spark History Server data. The server bridges AI agents with existing Apache Spark infrastructure, allowing users to query job details, analyze performance metrics, compare multiple jobs, investigate failures, and generate insights from historical execution data.

VimLM

VimLM is an AI-powered coding assistant for Vim that integrates AI for code generation, refactoring, and documentation directly into your Vim workflow. It offers native Vim integration with split-window responses and intuitive keybindings, offline first execution with MLX-compatible models, contextual awareness with seamless integration with codebase and external resources, conversational workflow for iterating on responses, project scaffolding for generating and deploying code blocks, and extensibility for creating custom LLM workflows with command chains.

prometheus-mcp-server

Prometheus MCP Server is a Model Context Protocol (MCP) server that provides access to Prometheus metrics and queries through standardized interfaces. It allows AI assistants to execute PromQL queries and analyze metrics data. The server supports executing queries, exploring metrics, listing available metrics, viewing query results, and authentication. It offers interactive tools for AI assistants and can be configured to choose specific tools. Installation methods include using Docker Desktop, MCP-compatible clients like Claude Desktop, VS Code, Cursor, and Windsurf, and manual Docker setup. Configuration options include setting Prometheus server URL, authentication credentials, organization ID, transport mode, and bind host/port. Contributions are welcome, and the project uses `uv` for managing dependencies and includes a comprehensive test suite for functionality testing.

llm-context.py

LLM Context is a tool designed to assist developers in quickly injecting relevant content from code/text projects into Large Language Model chat interfaces. It leverages `.gitignore` patterns for smart file selection and offers a streamlined clipboard workflow using the command line. The tool also provides direct integration with Large Language Models through the Model Context Protocol (MCP). LLM Context is optimized for code repositories and collections of text/markdown/html documents, making it suitable for developers working on projects that fit within an LLM's context window. The tool is under active development and aims to enhance AI-assisted development workflows by harnessing the power of Large Language Models.

code-graph-rag

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

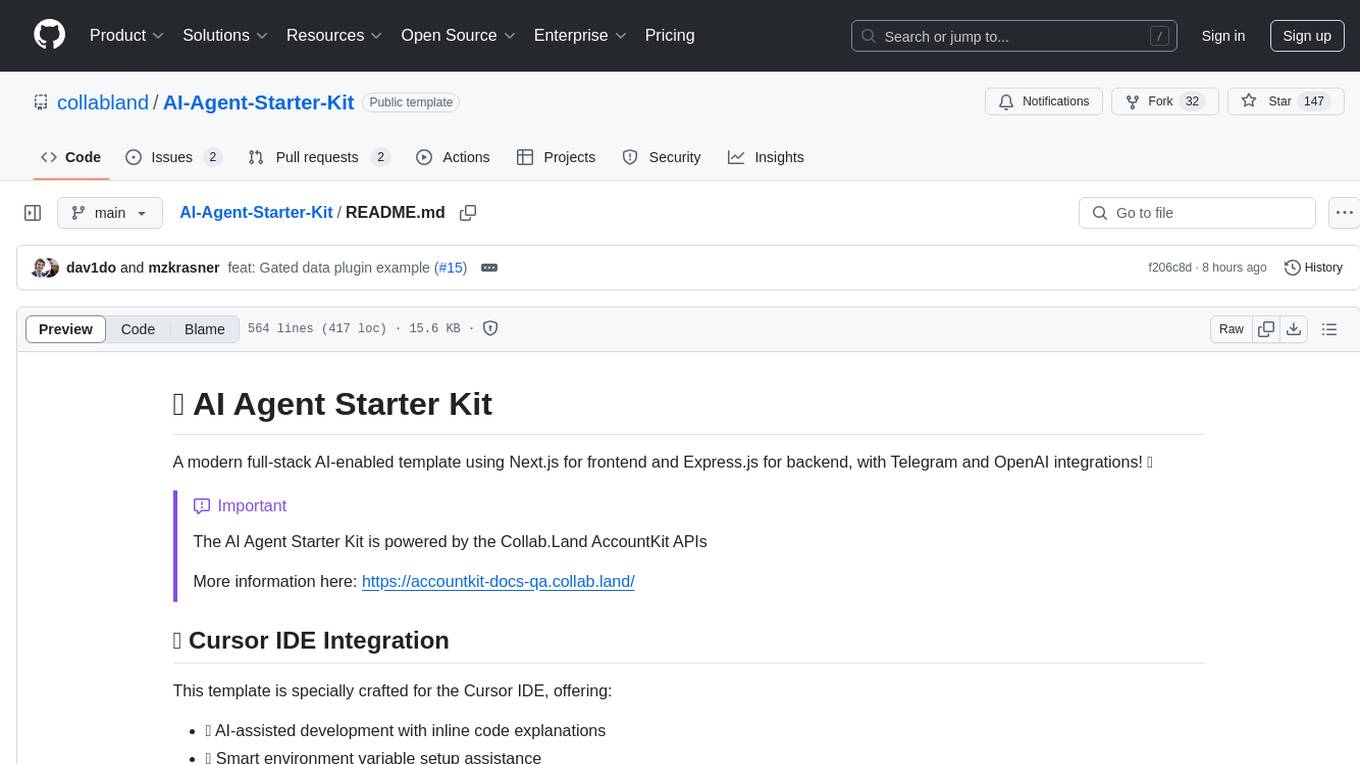

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

ck

ck (seek) is a semantic grep tool that finds code by meaning, not just keywords. It replaces traditional grep by understanding the user's search intent. It allows users to search for code based on concepts like 'error handling' and retrieves relevant code even if the exact keywords are not present. ck offers semantic search, drop-in grep compatibility, hybrid search combining keyword precision with semantic understanding, agent-friendly output in JSONL format, smart file filtering, and various advanced features. It supports multiple search modes, relevance scoring, top-K results, and smart exclusions. Users can index projects for semantic search, choose embedding models, and search specific files or directories. The tool is designed to improve code search efficiency and accuracy for developers and AI agents.

LocalAGI

LocalAGI is a powerful, self-hostable AI Agent platform that allows you to design AI automations without writing code. It provides a complete drop-in replacement for OpenAI's Responses APIs with advanced agentic capabilities. With LocalAGI, you can create customizable AI assistants, automations, chat bots, and agents that run 100% locally, without the need for cloud services or API keys. The platform offers features like no-code agents, web-based interface, advanced agent teaming, connectors for various platforms, comprehensive REST API, short & long-term memory capabilities, planning & reasoning, periodic tasks scheduling, memory management, multimodal support, extensible custom actions, fully customizable models, observability, and more.

ruler

Ruler is a tool designed to centralize AI coding assistant instructions, providing a single source of truth for managing instructions across multiple AI coding tools. It helps in avoiding inconsistent guidance, duplicated effort, context drift, onboarding friction, and complex project structures by automatically distributing instructions to the right configuration files. With support for nested rule loading, Ruler can handle complex project structures with context-specific instructions for different components. It offers features like centralised rule management, nested rule loading, automatic distribution, targeted agent configuration, MCP server propagation, .gitignore automation, and a simple CLI for easy configuration management.

For similar tasks

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

awesome-langchain-zh

The awesome-langchain-zh repository is a collection of resources related to LangChain, a framework for building AI applications using large language models (LLMs). The repository includes sections on the LangChain framework itself, other language ports of LangChain, tools for low-code development, services, agents, templates, platforms, open-source projects related to knowledge management and chatbots, as well as learning resources such as notebooks, videos, and articles. It also covers other LLM frameworks and provides additional resources for exploring and working with LLMs. The repository serves as a comprehensive guide for developers and AI enthusiasts interested in leveraging LangChain and LLMs for various applications.

Large-Language-Model-Notebooks-Course

This practical free hands-on course focuses on Large Language models and their applications, providing a hands-on experience using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

awesome-local-llms

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open weights LLMs. The repository categorizes these tools into LLM inference backend engines, LLM front end UIs, and all-in-one desktop applications. It collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged, and users can suggest additional open-source repositories through the Issues section or by running a provided script to update the README and make a pull request. The repository aims to provide a comprehensive resource for exploring and utilizing local LLM tools.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.