react-native-rag

Private, local RAGs. Supercharge LLMs with your own knowledge base.

Stars: 154

React Native RAG is a library that enables private, local RAGs to supercharge LLMs with a custom knowledge base. It offers modular and extensible components like `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter`, with multiple integration options. The library supports on-device inference, vector store persistence, and semantic search implementation. Users can easily generate text responses, manage documents, and utilize custom components for advanced use cases.

README:


- 🚀 Features

- 🌍 Real-World Example

- 📦 Installation

- 📱 Quickstart - Example App

- 📚 Usage

- 📖 API Reference

- 🧩 Using Custom Components

- 🔌 Plugins

- 🤝 Contributing

- 📄 License

- Modular: Use only the components you need. Choose from `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter`.

- Extensible: Create your own components by implementing the `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter` interfaces.

- Multiple Integration Options: Whether you prefer a simple hook (`useRAG`), a powerful class (`RAG`), or direct component interaction, the library adapts to your needs.

- On-device Inference: Powered by `@react-native-rag/executorch`, allowing for private and efficient model execution directly on the user's device.

- Vector Store Persistence: Includes support for SQLite with `@react-native-rag/op-sqlite` to save and manage vector stores locally.

- Semantic Search Ready: Easily implement powerful semantic search in your app by using the `VectorStore` and `Embeddings` components directly.

React Native RAG is powering Private Mind, a privacy-first mobile AI app available on App Store and Google Play.

    npm install react-native-rag

You will also need an embeddings model and a large language model. We recommend using `@react-native-rag/executorch` for on-device inference. To use it, install the following packages:

    npm install @react-native-rag/executorch react-native-executorch

For persisting vector stores, you can use `@react-native-rag/op-sqlite`.

For a complete example app that demonstrates how to use the library, check out the example app.

We offer three ways to integrate RAG, depending on your needs.

The easiest way to get started. Good for simple use cases where you want to quickly set up RAG.

    import React, { useState } from 'react';
    import { Text } from 'react-native';
    import { useRAG, MemoryVectorStore } from 'react-native-rag';
    import {
      ALL_MINILM_L6_V2,
      ALL_MINILM_L6_V2_TOKENIZER,
      LLAMA3_2_1B_QLORA,
      LLAMA3_2_1B_TOKENIZER,
      LLAMA3_2_TOKENIZER_CONFIG,
    } from 'react-native-executorch';
    import {
      ExecuTorchEmbeddings,
      ExecuTorchLLM,
    } from '@react-native-rag/executorch';

    const vectorStore = new MemoryVectorStore({
      embeddings: new ExecuTorchEmbeddings({
        modelSource: ALL_MINILM_L6_V2,
        tokenizerSource: ALL_MINILM_L6_V2_TOKENIZER,
      }),
    });

    const llm = new ExecuTorchLLM({
      modelSource: LLAMA3_2_1B_QLORA,
      tokenizerSource: LLAMA3_2_1B_TOKENIZER,
      tokenizerConfigSource: LLAMA3_2_TOKENIZER_CONFIG,
    });

    const app = () => {
      const rag = useRAG({ vectorStore, llm });

      return (
        <Text>{rag.response}</Text>
      );
    };

For more control over components and configuration.

    import React, { useEffect, useState } from 'react';
    import { Text } from 'react-native';
    import { RAG, MemoryVectorStore } from 'react-native-rag';
    import {
      ExecuTorchEmbeddings,
      ExecuTorchLLM,
    } from '@react-native-rag/executorch';
    import {
      ALL_MINILM_L6_V2,
      ALL_MINILM_L6_V2_TOKENIZER,
      LLAMA3_2_1B_QLORA,
      LLAMA3_2_1B_TOKENIZER,
      LLAMA3_2_TOKENIZER_CONFIG,
    } from 'react-native-executorch';

    const app = () => {
      const [rag, setRag] = useState<RAG | null>(null);
      const [response, setResponse] = useState<string | null>(null);

      useEffect(() => {
        const initializeRAG = async () => {
          const embeddings = new ExecuTorchEmbeddings({
            modelSource: ALL_MINILM_L6_V2,
            tokenizerSource: ALL_MINILM_L6_V2_TOKENIZER,
          });

          const llm = new ExecuTorchLLM({
            modelSource: LLAMA3_2_1B_QLORA,
            tokenizerSource: LLAMA3_2_1B_TOKENIZER,
            tokenizerConfigSource: LLAMA3_2_TOKENIZER_CONFIG,
            responseCallback: setResponse,
          });

          const vectorStore = new MemoryVectorStore({ embeddings });

          const ragInstance = new RAG({
            llm: llm,
            vectorStore: vectorStore,
          });

          await ragInstance.load();
          setRag(ragInstance);
        };

        initializeRAG();
      }, []);

      return (
        <Text>{response}</Text>
      );
    }

For advanced use cases requiring fine-grained control.

This is the recommended approach if you want to implement semantic search in your app: use the `VectorStore` and `Embeddings` classes directly.

    import React, { useEffect, useState } from 'react';
    import { Text } from 'react-native';
    import { MemoryVectorStore } from 'react-native-rag';
    import {
      ExecuTorchEmbeddings,
      ExecuTorchLLM,
    } from '@react-native-rag/executorch';
    import {
      ALL_MINILM_L6_V2,
      ALL_MINILM_L6_V2_TOKENIZER,
      LLAMA3_2_1B_QLORA,
      LLAMA3_2_1B_TOKENIZER,
      LLAMA3_2_TOKENIZER_CONFIG,
    } from 'react-native-executorch';

    const app = () => {
      const [embeddings, setEmbeddings] = useState<ExecuTorchEmbeddings | null>(null);
      const [llm, setLLM] = useState<ExecuTorchLLM | null>(null);
      const [vectorStore, setVectorStore] = useState<MemoryVectorStore | null>(null);
      const [response, setResponse] = useState<string | null>(null);

      useEffect(() => {
        const initializeRAG = async () => {
          // Instantiate and load the Embeddings Model
          // NOTE: Calling load on VectorStore will automatically load the embeddings model
          // so loading the embeddings model separately is not necessary in this case.
          const embeddings = await new ExecuTorchEmbeddings({
            modelSource: ALL_MINILM_L6_V2,
            tokenizerSource: ALL_MINILM_L6_V2_TOKENIZER,
          }).load();

          // Instantiate and load the Large Language Model
          const llm = await new ExecuTorchLLM({
            modelSource: LLAMA3_2_1B_QLORA,
            tokenizerSource: LLAMA3_2_1B_TOKENIZER,
            tokenizerConfigSource: LLAMA3_2_TOKENIZER_CONFIG,
            responseCallback: setResponse,
          }).load();

          // Instantiate and initialize the Vector Store
          const vectorStore = await new MemoryVectorStore({ embeddings }).load();

          setEmbeddings(embeddings);
          setLLM(llm);
          setVectorStore(vectorStore);
        };

        initializeRAG();
      }, []);

      return (
        <Text>{response}</Text>
      );
    }

A React hook for Retrieval Augmented Generation (RAG). Manages the RAG system lifecycle, loading, unloading, generation, and document storage.

Parameters:

- `params`: An object containing:
  - `vectorStore`: An instance of a class that implements the `VectorStore` interface.
  - `llm`: An instance of a class that implements the `LLM` interface.
  - `preventLoad` (optional): A boolean to defer loading the RAG system.

Returns: An object with the following properties:

- `response` (string): The current generated text from the LLM.
- `isReady` (boolean): True if the RAG system (Vector Store and LLM) is loaded.
- `isGenerating` (boolean): True if the LLM is currently generating a response.
- `isStoring` (boolean): True if a document operation (add, update, delete) is in progress.
- `error` (string | null): The last error message, if any.
- `generate`: A function to generate text. See `RAG.generate()` for details.
- `interrupt`: A function to stop the current generation. See `RAG.interrupt()` for details.
- `splitAddDocument`: A function to split and add a document. See `RAG.splitAddDocument()` for details.
- `addDocument`: Adds a document. See `RAG.addDocument()` for details.
- `updateDocument`: Updates a document. See `RAG.updateDocument()` for details.
- `deleteDocument`: Deletes a document. See `RAG.deleteDocument()` for details.

The core class for managing the RAG workflow.

`constructor(params: RAGParams)`

- `params`: An object containing:
  - `vectorStore`: An instance that implements the `VectorStore` interface.
  - `llm`: An instance that implements the `LLM` interface.

Methods:

- `async load(): Promise<this>`: Initializes the vector store and loads the LLM.
- `async unload(): Promise<void>`: Unloads the vector store and LLM.
- `async generate(input: Message[] | string, options?: { augmentedGeneration?: boolean; k?: number; predicate?: (value: SearchResult) => boolean; questionGenerator?: Function; promptGenerator?: Function; callback?: (token: string) => void }): Promise<string>`: Generates a response.
  - `input` (Message[] | string): A string or an array of `Message` objects.
  - `options` (object, optional): Generation options.
    - `augmentedGeneration` (boolean, optional): If `true` (default), retrieves context from the vector store to augment the prompt.
    - `k` (number, optional): Number of documents to retrieve (default: `3`).
    - `predicate` (function, optional): Function to filter retrieved documents (default: includes all).
    - `questionGenerator` (function, optional): Custom question generator.
    - `promptGenerator` (function, optional): Custom prompt generator.
    - `callback` (function, optional): A function that receives tokens as they are generated.
- `async splitAddDocument(document: string, metadataGenerator?: (chunks: string[]) => Record<string, any>[], textSplitter?: TextSplitter): Promise<string[]>`: Splits a document into chunks and adds them to the vector store.
- `async addDocument(document: string, metadata?: Record<string, any>): Promise<string>`: Adds a single document to the vector store.
- `async updateDocument(id: string, document?: string, metadata?: Record<string, any>): Promise<void>`: Updates a document in the vector store.
- `async deleteDocument(id: string): Promise<void>`: Deletes a document from the vector store.
- `async interrupt(): Promise<void>`: Interrupts the ongoing LLM generation.
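To make the `k`, `predicate`, and `promptGenerator` options more concrete, here is a minimal sketch of how retrieved documents could be folded into the last user message. It is not the library's implementation: `augmentMessages` and `defaultPrompt` are hypothetical names, the prompt wording is invented, and the search results are assumed to arrive already sorted by similarity.

```typescript
interface Message {
  role: 'user' | 'assistant' | 'system';
  content: string;
}

interface SearchResult {
  id: string;
  content: string;
  metadata?: Record<string, any>;
  similarity: number;
}

interface AugmentOptions {
  k?: number;
  predicate?: (value: SearchResult) => boolean;
  promptGenerator?: (question: string, retrieved: SearchResult[]) => string;
}

// Hypothetical prompt template (assumption): numbered context followed by the question.
function defaultPrompt(question: string, retrieved: SearchResult[]): string {
  const context = retrieved.map((r, i) => `[${i + 1}] ${r.content}`).join('\n');
  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}

// Hypothetical helper (assumption): rewrites the last user message with retrieved context.
// `results` is assumed to be sorted by descending similarity.
function augmentMessages(
  messages: Message[],
  results: SearchResult[],
  options: AugmentOptions = {}
): Message[] {
  const { k = 3, predicate = () => true, promptGenerator = defaultPrompt } = options;
  const last = messages[messages.length - 1];
  if (!last || last.role !== 'user') {
    return messages; // nothing to augment
  }
  const selected = results.filter(predicate).slice(0, k);
  const augmented: Message = {
    role: 'user',
    content: promptGenerator(last.content, selected),
  };
  return [...messages.slice(0, -1), augmented];
}

const exampleResults: SearchResult[] = [
  { id: '1', content: 'Paris is the capital of France.', similarity: 0.9 },
  { id: '2', content: 'Bananas are yellow.', similarity: 0.2 },
];

const exampleMessages: Message[] = [
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: 'What is the capital of France?' },
];

const augmentedMessages = augmentMessages(exampleMessages, exampleResults, {
  predicate: (r) => r.similarity >= 0.5, // drop weak matches
});
console.log(augmentedMessages[1].content);
```

A similarity threshold like the one above is one plausible use of `predicate`; filtering on `metadata` fields would work the same way.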

An in-memory implementation of the VectorStore interface. Useful for development and testing without persistent storage or when you don't need to save documents across app restarts.

`constructor(params: { embeddings: Embeddings })`

- `params`: Requires an `embeddings` instance to generate vectors for documents.
- `async load(): Promise<this>`: Loads the Embeddings model.
- `async unload(): Promise<void>`: Unloads the Embeddings model.
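The idea behind an in-memory store can be sketched in a few lines: keep each document next to its embedding vector and rank by cosine similarity at query time. The code below is an illustration only, not the source of `MemoryVectorStore`. `TinyVectorStore`, `EmbedFn`, and `letterCounts` are hypothetical names, the toy embedding just counts letters, and applying the predicate before taking the top `k` is an assumption.

```typescript
interface SearchResult {
  id: string;
  content: string;
  metadata?: Record<string, any>;
  similarity: number;
}

type EmbedFn = (text: string) => Promise<number[]>;

interface StoredRow {
  content: string;
  metadata?: Record<string, any>;
  vector: number[];
}

function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  const denom = Math.sqrt(normA) * Math.sqrt(normB);
  return denom === 0 ? 0 : dot / denom;
}

// Hypothetical store (assumption): documents and vectors live in a Map.
class TinyVectorStore {
  private readonly rows = new Map<string, StoredRow>();
  private readonly embed: EmbedFn;
  private nextId = 0;

  constructor(embed: EmbedFn) {
    this.embed = embed;
  }

  async add(document: string, metadata?: Record<string, any>): Promise<string> {
    const id = String(this.nextId++);
    this.rows.set(id, { content: document, metadata, vector: await this.embed(document) });
    return id;
  }

  async delete(id: string): Promise<void> {
    this.rows.delete(id);
  }

  async similaritySearch(
    query: string,
    k: number = 3,
    predicate: (value: SearchResult) => boolean = () => true
  ): Promise<SearchResult[]> {
    const queryVector = await this.embed(query);
    return Array.from(this.rows.entries())
      .map(([id, row]) => ({
        id,
        content: row.content,
        metadata: row.metadata,
        similarity: cosineSimilarity(queryVector, row.vector),
      }))
      .filter(predicate) // assumption: filter first, then take the top k
      .sort((x, y) => y.similarity - x.similarity)
      .slice(0, k);
  }
}

// Toy embedding (assumption): a 26-dimensional vector of letter counts.
const letterCounts: EmbedFn = async (text) => {
  const vector = new Array<number>(26).fill(0);
  const lower = text.toLowerCase();
  for (let i = 0; i < lower.length; i++) {
    const index = lower.charCodeAt(i) - 97;
    if (index >= 0 && index < 26) vector[index] += 1;
  }
  return vector;
};

(async () => {
  const store = new TinyVectorStore(letterCounts);
  await store.add('aaa');
  await store.add('bbb');
  await store.add('aab');
  const hits = await store.similaritySearch('aa', 2);
  console.log(hits.map((h) => h.content));
})();
```

Nothing here survives an app restart, which is the trade-off the description above points out.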

These interfaces define the contracts for creating your own custom components.

- `Embeddings`
  - `load: () => Promise<this>`: Loads the embedding model.
  - `unload: () => Promise<void>`: Unloads the model.
  - `embed: (text: string) => Promise<number[]>`: Generates an embedding for a given text.

- `LLM`
  - `load: () => Promise<this>`: Loads the language model.
  - `interrupt: () => Promise<void>`: Stops the current text generation.
  - `unload: () => Promise<void>`: Unloads the model.
  - `generate: (messages: Message[], callback: (token: string) => void) => Promise<string>`: Generates a response from a list of messages, streaming tokens to the callback.

- `VectorStore`
  - `load: () => Promise<this>`: Initializes the vector store.
  - `unload: () => Promise<void>`: Unloads the vector store and releases resources.
  - `add(document: string, metadata?: Record<string, any>): Promise<string>`: Adds a document.
  - `update(id: string, document?: string, metadata?: Record<string, any>): Promise<void>`: Updates a document.
  - `delete(id: string): Promise<void>`: Deletes a document.
  - `similaritySearch(query: string, k?: number, predicate?: (value: SearchResult) => boolean): Promise<SearchResult[]>`: Searches for `k` similar documents, which can be filtered with an optional `predicate` function.

- `TextSplitter`
  - `splitText: (text: string) => Promise<string[]>`: Splits text into an array of chunks.
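As an illustration of the `LLM` contract, the sketch below implements it with a stand-in that echoes the last message back word by word. `EchoLLM` is a hypothetical class with no real model behind it; it only shows how tokens could reach the callback and how `interrupt` might stop a generation that is still running.

```typescript
interface Message {
  role: 'user' | 'assistant' | 'system';
  content: string;
}

interface LLM {
  load: () => Promise<this>;
  interrupt: () => Promise<void>;
  unload: () => Promise<void>;
  generate: (messages: Message[], callback: (token: string) => void) => Promise<string>;
}

// Hypothetical stand-in (assumption): echoes the last message, one word per token.
class EchoLLM implements LLM {
  private interrupted = false;

  async load(): Promise<this> {
    return this; // a real implementation would load model weights here
  }

  async unload(): Promise<void> {
    // nothing to release in this sketch
  }

  async interrupt(): Promise<void> {
    this.interrupted = true;
  }

  async generate(messages: Message[], callback: (token: string) => void): Promise<string> {
    this.interrupted = false;
    const last = messages[messages.length - 1];
    const words = last ? last.content.split(' ') : [];
    let output = '';
    for (const word of words) {
      if (this.interrupted) break;
      const piece = output.length === 0 ? word : ' ' + word;
      callback(piece); // stream the token to the caller
      output += piece;
      await Promise.resolve(); // yield so interrupt() can take effect between tokens
    }
    return output;
  }
}

(async () => {
  const llm = await new EchoLLM().load();
  const streamed: string[] = [];
  const full = await llm.generate(
    [{ role: 'user', content: 'hello on-device world' }],
    (token) => streamed.push(token)
  );
  console.log(streamed.length, full);
})();
```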

The library provides wrappers around common LangChain text splitters. All splitters are initialized with `{ chunkSize: number, chunkOverlap: number }`.

- `RecursiveCharacterTextSplitter`: Splits text recursively by different characters. (Default in the `RAG` class.)
- `CharacterTextSplitter`: Splits text by a fixed character count.
- `TokenTextSplitter`: Splits text by token count.
- `MarkdownTextSplitter`: Splits text while preserving Markdown structure.
- `LatexTextSplitter`: Splits text while preserving LaTeX structure.
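To show what `chunkSize` and `chunkOverlap` mean in practice, here is a minimal custom splitter that satisfies the `TextSplitter` interface. `FixedSizeTextSplitter` is a hypothetical class written for this illustration; it slides a fixed-width character window over the text and is much simpler than the LangChain-based splitters listed above.

```typescript
interface TextSplitter {
  splitText: (text: string) => Promise<string[]>;
}

// Hypothetical splitter (assumption): fixed-size character windows that overlap.
class FixedSizeTextSplitter implements TextSplitter {
  private readonly chunkSize: number;
  private readonly chunkOverlap: number;

  constructor(params: { chunkSize: number; chunkOverlap: number }) {
    if (params.chunkSize <= 0) {
      throw new Error('chunkSize must be positive');
    }
    if (params.chunkOverlap < 0 || params.chunkOverlap >= params.chunkSize) {
      throw new Error('chunkOverlap must be in [0, chunkSize)');
    }
    this.chunkSize = params.chunkSize;
    this.chunkOverlap = params.chunkOverlap;
  }

  async splitText(text: string): Promise<string[]> {
    const chunks: string[] = [];
    // Each window starts (chunkSize - chunkOverlap) characters after the previous one.
    const step = this.chunkSize - this.chunkOverlap;
    for (let start = 0; start < text.length; start += step) {
      chunks.push(text.slice(start, start + this.chunkSize));
      if (start + this.chunkSize >= text.length) break; // last window reached the end
    }
    return chunks;
  }
}

new FixedSizeTextSplitter({ chunkSize: 4, chunkOverlap: 1 })
  .splitText('abcdefghij')
  .then((chunks) => console.log(chunks)); // [ 'abcd', 'defg', 'ghij' ]
```

Going by the `splitAddDocument` signature documented above, an instance like this could be passed as its `textSplitter` argument.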

- `uuidv4(): string`: Generates a compliant Version 4 UUID. Not cryptographically secure.
- `cosine(a: number[], b: number[]): number`: Calculates the cosine similarity between two vectors.
- `dotProduct(a: number[], b: number[]): number`: Calculates the dot product of two vectors.
- `magnitude(a: number[]): number`: Calculates the Euclidean magnitude of a vector.
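The three vector helpers are related: cosine similarity is the dot product divided by the product of the two magnitudes. The sketch below re-implements them from that definition for illustration. The library's own versions may differ in detail, and returning `0` for a zero-length vector is an assumption.

```typescript
/** Sum of element-wise products of two equal-length vectors. */
function dotProduct(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error('Vectors must have the same length');
  }
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    sum += a[i] * b[i];
  }
  return sum;
}

/** Euclidean (L2) norm: the square root of the vector's dot product with itself. */
function magnitude(a: number[]): number {
  return Math.sqrt(dotProduct(a, a));
}

/** Cosine similarity; returns 0 when either vector has zero magnitude (assumption). */
function cosine(a: number[], b: number[]): number {
  const denominator = magnitude(a) * magnitude(b);
  return denominator === 0 ? 0 : dotProduct(a, b) / denominator;
}

console.log(dotProduct([1, 2, 3], [4, 5, 6])); // 32
console.log(magnitude([3, 4])); // 5
console.log(cosine([1, 0], [0, 1])); // 0 (orthogonal vectors)
```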

    interface Message {
      role: 'user' | 'assistant' | 'system';
      content: string;
    }

    type ResourceSource = string | number | object;

    interface SearchResult {
      id: string;
      content: string;
      metadata?: Record<string, any>;
      similarity: number;
    }

Bring your own components by creating classes that implement the `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter` interfaces. This allows you to use any model or service that fits your needs.

    interface Embeddings {
      load: () => Promise<this>;
      unload: () => Promise<void>;
      embed: (text: string) => Promise<number[]>;
    }

    interface LLM {
      load: () => Promise<this>;
      interrupt: () => Promise<void>;
      unload: () => Promise<void>;
      generate: (
        messages: Message[],
        callback: (token: string) => void
      ) => Promise<string>;
    }

    interface TextSplitter {
      splitText: (text: string) => Promise<string[]>;
    }

    interface VectorStore {
      load: () => Promise<this>;
      unload: () => Promise<void>;
      add(document: string, metadata?: Record<string, any>): Promise<string>;
      update(
        id: string,
        document?: string,
        metadata?: Record<string, any>
      ): Promise<void>;
      delete(id: string): Promise<void>;
      similaritySearch(
        query: string,
        k?: number,
        predicate?: (value: SearchResult) => boolean
      ): Promise<SearchResult[]>;
    }
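As a rough example of a custom component, the sketch below implements the `Embeddings` interface without any model. `HashingEmbeddings` is a hypothetical class that hashes each word into a fixed number of buckets and normalizes the result. It captures word overlap only, not meaning, so treat it as a placeholder for wiring and tests rather than a substitute for a real embedding model.

```typescript
interface Embeddings {
  load: () => Promise<this>;
  unload: () => Promise<void>;
  embed: (text: string) => Promise<number[]>;
}

// Hypothetical component (assumption): bag-of-words hashing, no model involved.
class HashingEmbeddings implements Embeddings {
  private readonly dimensions: number;

  constructor(dimensions: number = 64) {
    this.dimensions = dimensions;
  }

  async load(): Promise<this> {
    return this; // nothing to load in this sketch
  }

  async unload(): Promise<void> {
    // nothing to release in this sketch
  }

  async embed(text: string): Promise<number[]> {
    const vector = new Array<number>(this.dimensions).fill(0);
    const words = text.toLowerCase().split(/\W+/).filter((w) => w.length > 0);
    for (const word of words) {
      // Simple polynomial string hash, kept in unsigned 32-bit range.
      let hash = 0;
      for (let i = 0; i < word.length; i++) {
        hash = (hash * 31 + word.charCodeAt(i)) >>> 0;
      }
      vector[hash % this.dimensions] += 1;
    }
    // L2-normalize so that dot products behave like cosine similarity.
    const norm = Math.sqrt(vector.reduce((sum, v) => sum + v * v, 0));
    return norm === 0 ? vector : vector.map((v) => v / norm);
  }
}

(async () => {
  const embeddings = await new HashingEmbeddings(8).load();
  console.log(await embeddings.embed('private local RAG'));
})();
```

Because the class satisfies the interface, it should be usable wherever an `Embeddings` instance is expected, for example as the `embeddings` parameter of a vector store.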

- `@react-native-rag/executorch`: On-device inference with `react-native-executorch`.

- `@react-native-rag/op-sqlite`: Persisting vector stores using SQLite.

Contributions are welcome! See the contributing guide to learn about the development workflow.

MIT

Since 2012, Software Mansion has been a software agency with experience in building web and mobile apps. We are Core React Native Contributors and experts in dealing with all kinds of React Native issues. We can help you build your next dream product – Hire us.


Alternative AI tools for react-native-rag

Similar Open Source Tools


openrouter-kit

OpenRouter Kit is a powerful TypeScript/JavaScript library for interacting with the OpenRouter API. It simplifies working with LLMs by providing a high-level API for chats, dialogue history management, tool calls with error handling, security module, and cost tracking. Ideal for building chatbots, AI agents, and integrating LLMs into applications.

rust-genai

genai is a multi-provider AI library for Rust that aims to provide a common and ergonomic single API to various generative AI providers such as OpenAI, Anthropic, Cohere, Ollama, and Gemini. It focuses on standardizing chat completion APIs across major AI services, prioritizing ergonomics and commonality. The library initially focuses on text chat APIs and plans to expand to support images, function calling, and more in future versions. Version 0.1.x will have breaking changes in patches, while version 0.2.x will follow semver more strictly. genai does not provide a full representation of a given AI provider but aims to simplify the differences at a lower layer for ease of use.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

mcp-ui

mcp-ui is a collection of SDKs that bring interactive web components to the Model Context Protocol (MCP). It allows servers to define reusable UI snippets, render them securely in the client, and react to their actions in the MCP host environment. The SDKs include @mcp-ui/server (TypeScript) for generating UI resources on the server, @mcp-ui/client (TypeScript) for rendering UI components on the client, and mcp_ui_server (Ruby) for generating UI resources in a Ruby environment. The project is an experimental community playground for MCP UI ideas, with rapid iteration and enhancements.

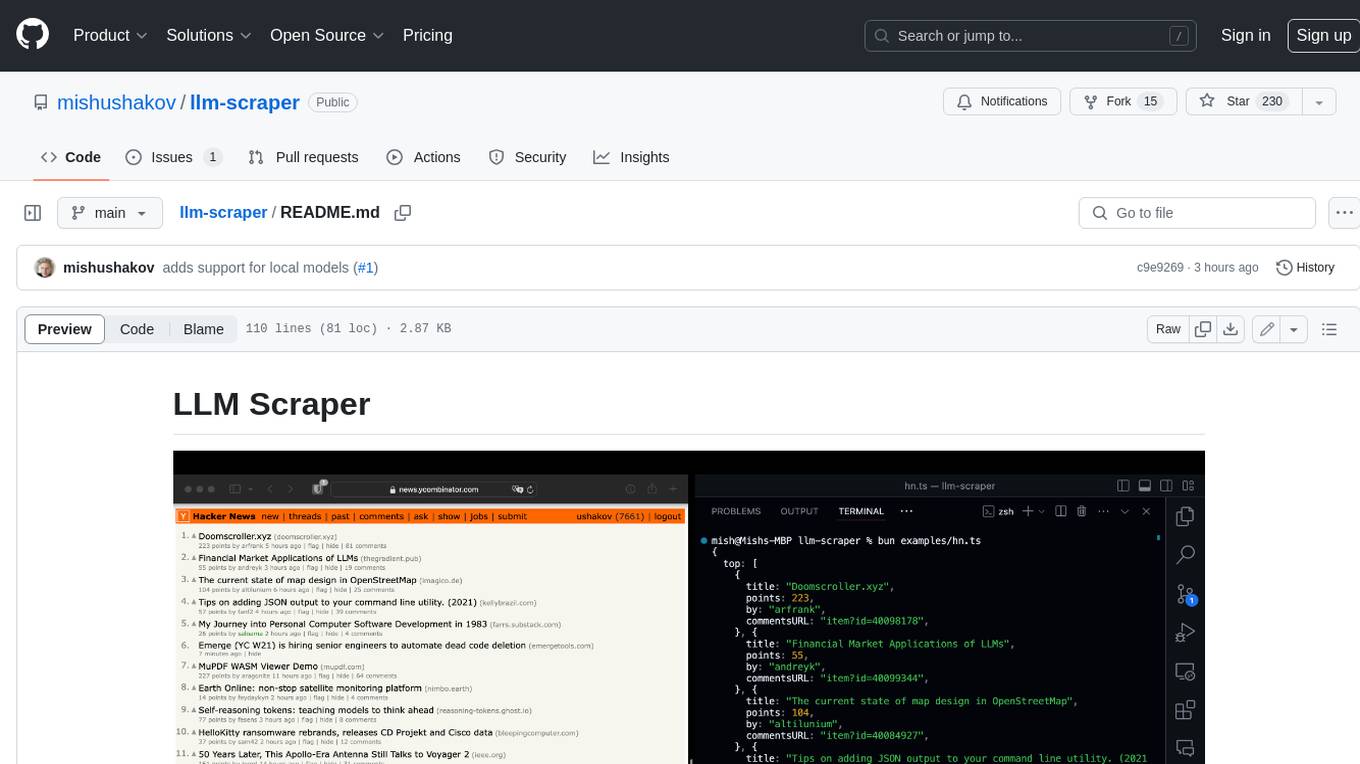

llm-scraper

LLM Scraper is a TypeScript library that allows you to convert any webpages into structured data using LLMs. It supports Local (GGUF), OpenAI, Groq chat models, and schemas defined with Zod. With full type-safety in TypeScript and based on the Playwright framework, it offers streaming when crawling multiple pages and supports four input modes: html, markdown, text, and image.

pocketgroq

PocketGroq is a tool that provides advanced functionalities for text generation, web scraping, web search, and AI response evaluation. It includes features like an Autonomous Agent for answering questions, web crawling and scraping capabilities, enhanced web search functionality, and flexible integration with Ollama server. Users can customize the agent's behavior, evaluate responses using AI, and utilize various methods for text generation, conversation management, and Chain of Thought reasoning. The tool offers comprehensive methods for different tasks, such as initializing RAG, error handling, and tool management. PocketGroq is designed to enhance development processes and enable the creation of AI-powered applications with ease.

openapi

The `@samchon/openapi` repository is a collection of OpenAPI types and converters for various versions of OpenAPI specifications. It includes an 'emended' OpenAPI v3.1 specification that enhances clarity by removing ambiguous and duplicated expressions. The repository also provides an application composer for LLM (Large Language Model) function calling from OpenAPI documents, allowing users to easily perform LLM function calls based on the Swagger document. Conversions to different versions of OpenAPI documents are also supported, all based on the emended OpenAPI v3.1 specification. Users can validate their OpenAPI documents using the `typia` library with `@samchon/openapi` types, ensuring compliance with standard specifications.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

aiocache

Aiocache is an asyncio cache library that supports multiple backends such as memory, redis, and memcached. It provides a simple interface for functions like add, get, set, multi_get, multi_set, exists, increment, delete, clear, and raw. Users can easily install and use the library for caching data in Python applications. Aiocache allows for easy instantiation of caches and setup of cache aliases for reusing configurations. It also provides support for backends, serializers, and plugins to customize cache operations. The library offers detailed documentation and examples for different use cases and configurations.

ax

Ax is a TypeScript library that allows users to build intelligent agents inspired by agentic workflows and the Stanford DSP paper. It seamlessly integrates with multiple Large Language Models (LLMs) and VectorDBs to create RAG pipelines or collaborative agents capable of solving complex problems. The library offers advanced features such as streaming validation, multi-modal DSP, and automatic prompt tuning using optimizers. Users can easily convert documents of any format to text, perform smart chunking, embedding, and querying, and ensure output validation while streaming. Ax is production-ready, written in TypeScript, and has zero dependencies.

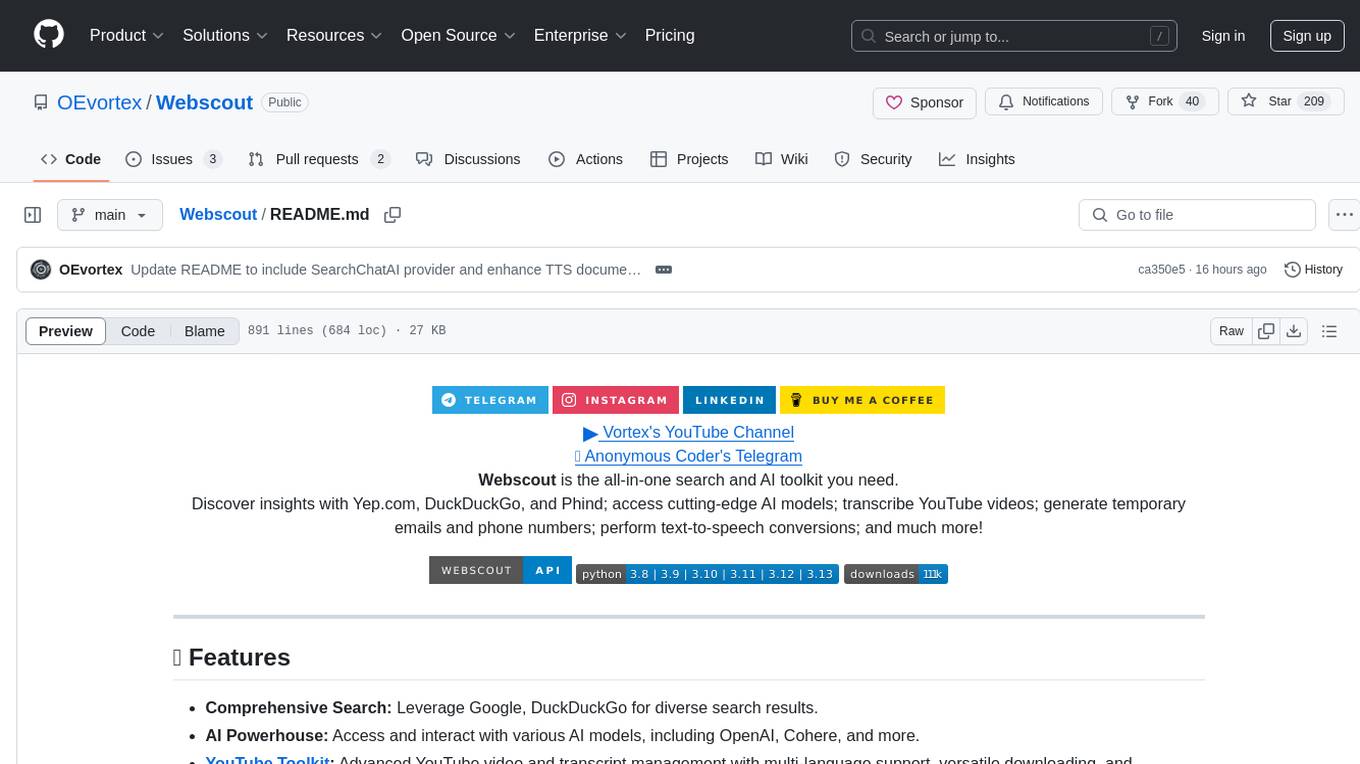

Webscout

Webscout is an all-in-one Python toolkit for web search, AI interaction, digital utilities, and more. It provides access to diverse search engines, cutting-edge AI models, temporary communication tools, media utilities, developer helpers, and powerful CLI interfaces through a unified library. With features like comprehensive search leveraging Google and DuckDuckGo, AI powerhouse for accessing various AI models, YouTube toolkit for video and transcript management, GitAPI for GitHub data extraction, Tempmail & Temp Number for privacy, Text-to-Speech conversion, GGUF conversion & quantization, SwiftCLI for CLI interfaces, LitPrinter for styled console output, LitLogger for logging, LitAgent for user agent generation, Text-to-Image generation, Scout for web parsing and crawling, Awesome Prompts for specialized tasks, Weather Toolkit, and AI Search Providers.

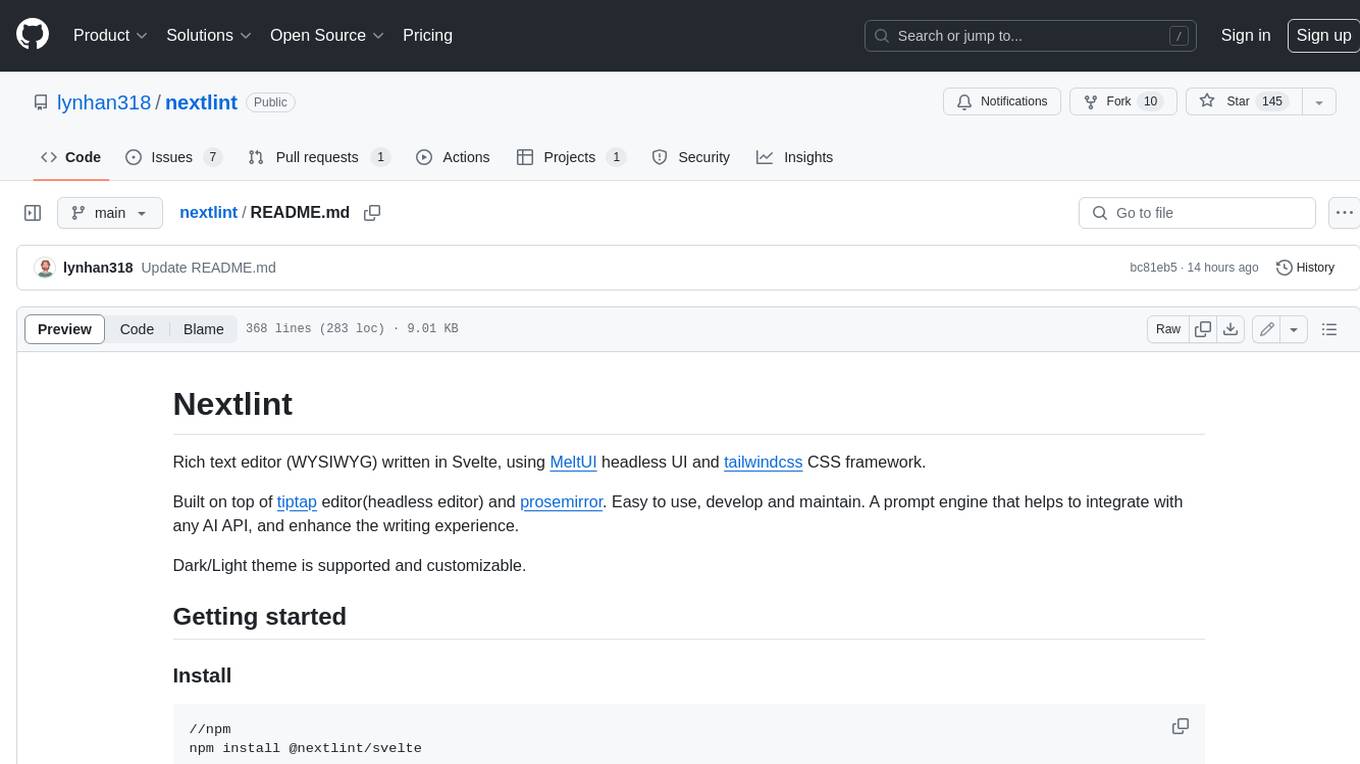

nextlint

Nextlint is a rich text editor (WYSIWYG) written in Svelte, using MeltUI headless UI and tailwindcss CSS framework. It is built on top of tiptap editor (headless editor) and prosemirror. Nextlint is easy to use, develop, and maintain. It has a prompt engine that helps to integrate with any AI API and enhance the writing experience. Dark/Light theme is supported and customizable.

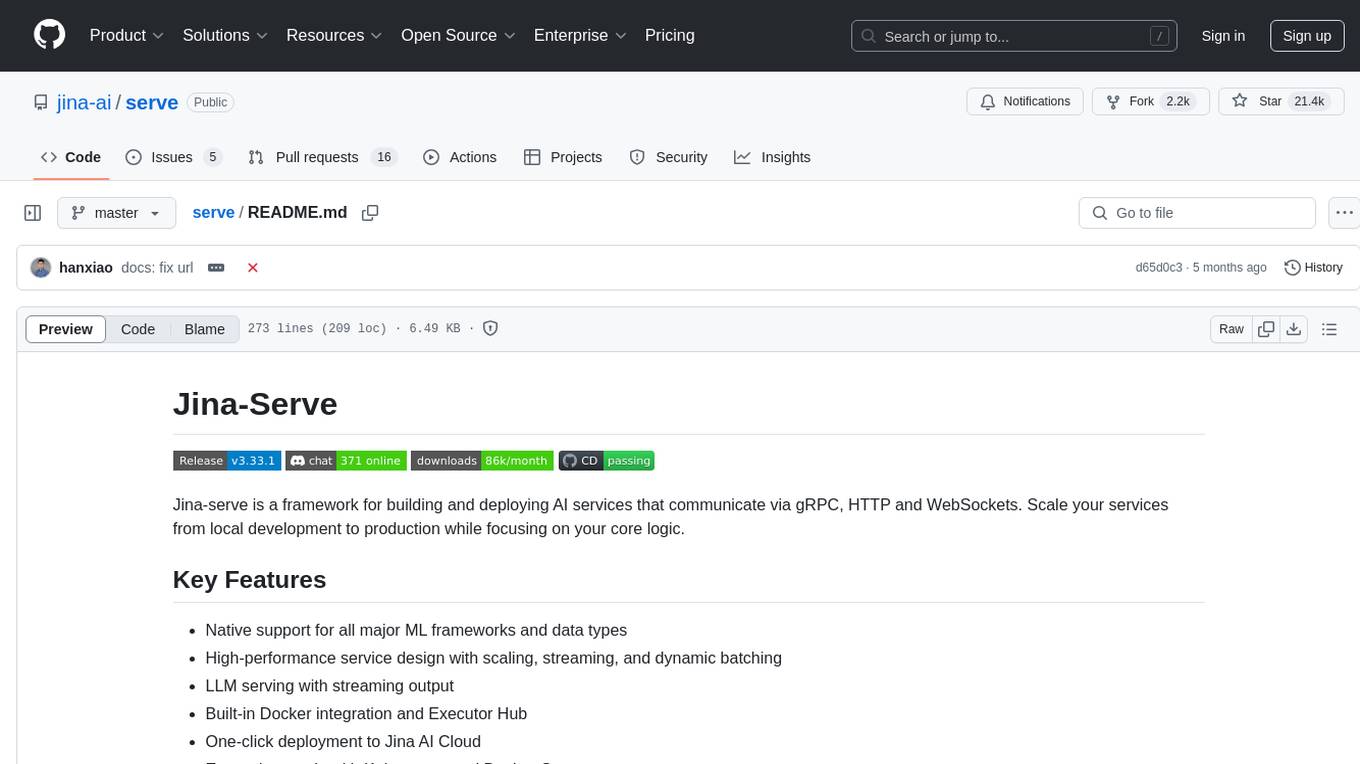

serve

Jina-Serve is a framework for building and deploying AI services that communicate via gRPC, HTTP and WebSockets. It provides native support for major ML frameworks and data types, high-performance service design with scaling and dynamic batching, LLM serving with streaming output, built-in Docker integration and Executor Hub, one-click deployment to Jina AI Cloud, and enterprise-ready features with Kubernetes and Docker Compose support. Users can create gRPC-based AI services, build pipelines, scale services locally with replicas, shards, and dynamic batching, deploy to the cloud using Kubernetes, Docker Compose, or JCloud, and enable token-by-token streaming for responsive LLM applications.

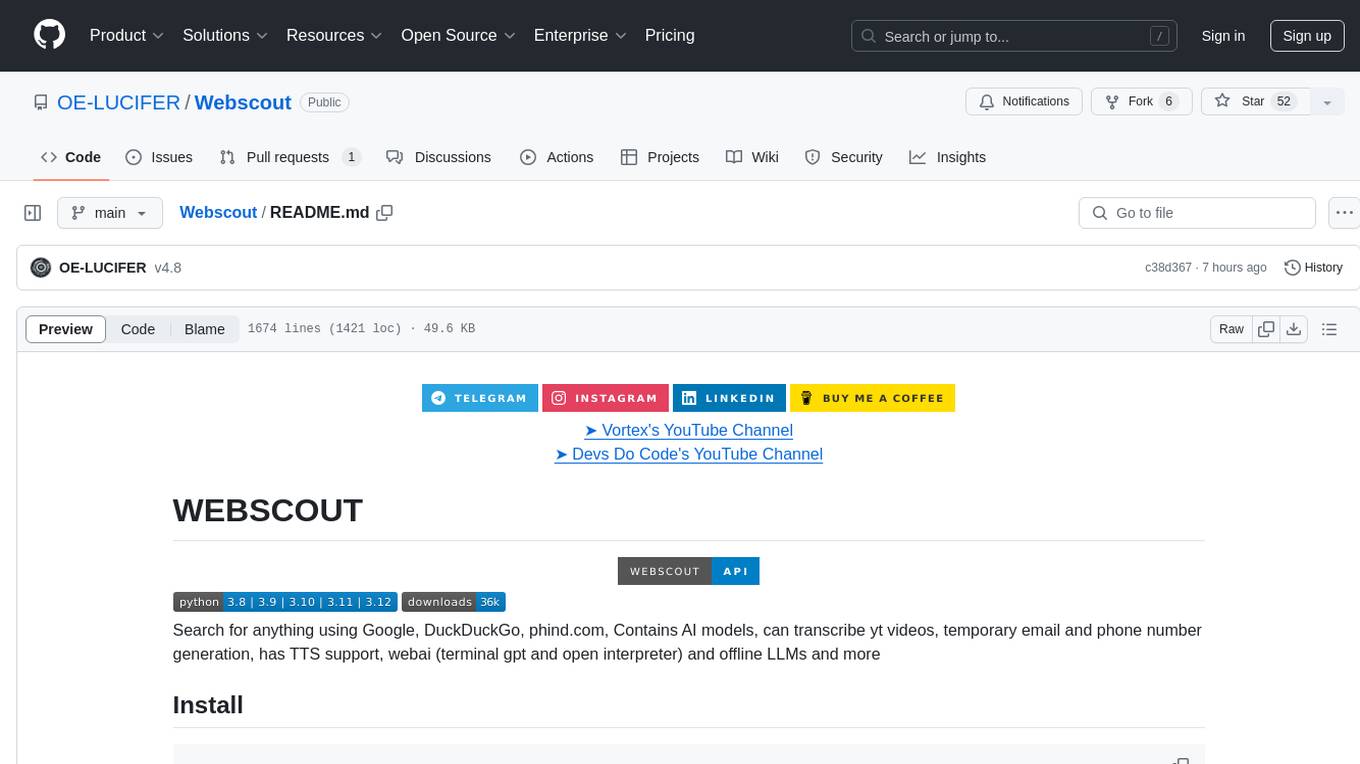

Webscout

WebScout is a versatile tool that allows users to search for anything using Google, DuckDuckGo, and phind.com. It contains AI models, can transcribe YouTube videos, generate temporary email and phone numbers, has TTS support, webai (terminal GPT and open interpreter), and offline LLMs. It also supports features like weather forecasting, YT video downloading, temp mail and number generation, text-to-speech, advanced web searches, and more.

desktop

E2B Desktop Sandbox is a secure virtual desktop environment powered by E2B, allowing users to create isolated sandboxes with customizable dependencies. It provides features such as streaming the desktop screen, mouse and keyboard control, taking screenshots, opening files, and running bash commands. The environment is based on Linux and Xfce, offering a fast and lightweight experience that can be fully customized to create unique desktop environments.

For similar tasks


serverless-rag-demo

The serverless-rag-demo repository showcases a solution for building a Retrieval Augmented Generation (RAG) system using Amazon Opensearch Serverless Vector DB, Amazon Bedrock, Llama2 LLM, and Falcon LLM. The solution leverages generative AI powered by large language models to generate domain-specific text outputs by incorporating external data sources. Users can augment prompts with relevant context from documents within a knowledge library, enabling the creation of AI applications without managing vector database infrastructure. The repository provides detailed instructions on deploying the RAG-based solution, including prerequisites, architecture, and step-by-step deployment process using AWS Cloudshell.

june

june-va is a local voice chatbot that combines Ollama for language model capabilities, Hugging Face Transformers for speech recognition, and the Coqui TTS Toolkit for text-to-speech synthesis. It provides a flexible, privacy-focused solution for voice-assisted interactions on your local machine, ensuring that no data is sent to external servers. The tool supports various interaction modes including text input/output, voice input/text output, text input/audio output, and voice input/audio output. Users can customize the tool's behavior with a JSON configuration file and utilize voice conversion features for voice cloning. The application can be further customized using a configuration file with attributes for language model, speech-to-text model, and text-to-speech model configurations.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

parakeet

Parakeet is a Go library for creating GenAI apps with Ollama. It enables the creation of generative AI applications that can generate text-based content. The library provides tools for simple completion, completion with context, chat completion, and more. It also supports function calling with tools and Wasm plugins. Parakeet allows users to interact with language models and create AI-powered applications easily.

sparkle

Sparkle is a tool that streamlines the process of building AI-driven features in applications using Large Language Models (LLMs). It guides users through creating and managing agents, defining tools, and interacting with LLM providers like OpenAI. Sparkle allows customization of LLM provider settings, model configurations, and provides a seamless integration with Sparkle Server for exposing agents via an OpenAI-compatible chat API endpoint.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. Demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. Model learns basic world knowledge and conversational patterns. Features custom tokenization, greedy decoding, gradient clipping, modular layer system, and comprehensive test coverage. Ideal for understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. Follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

llm-cookbook

LLM Cookbook is a developer-oriented comprehensive guide focusing on LLM for Chinese developers. It covers various aspects from Prompt Engineering to RAG development and model fine-tuning, providing guidance on how to learn and get started with LLM projects in a way suitable for Chinese learners. The project translates and reproduces 11 courses from Professor Andrew Ng's large model series, categorizing them for beginners to systematically learn essential skills and concepts before exploring specific interests. It encourages developers to contribute by replicating unreproduced courses following the format and submitting PRs for review and merging. The project aims to help developers grasp a wide range of skills and concepts related to LLM development, offering both online reading and PDF versions for easy access and learning.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.