llm-cookbook

面向开发者的 LLM 入门教程,吴恩达大模型系列课程中文版

Stars: 11367

LLM Cookbook is a developer-oriented comprehensive guide focusing on LLM for Chinese developers. It covers various aspects from Prompt Engineering to RAG development and model fine-tuning, providing guidance on how to learn and get started with LLM projects in a way suitable for Chinese learners. The project translates and reproduces 11 courses from Professor Andrew Ng's large model series, categorizing them for beginners to systematically learn essential skills and concepts before exploring specific interests. It encourages developers to contribute by replicating unreproduced courses following the format and submitting PRs for review and merging. The project aims to help developers grasp a wide range of skills and concepts related to LLM development, offering both online reading and PDF versions for easy access and learning.

README:

本项目是一个面向开发者的大模型手册,针对国内开发者的实际需求,主打 LLM 全方位入门实践。本项目基于吴恩达老师大模型系列课程内容,对原课程内容进行筛选、翻译、复现和调优,覆盖从 Prompt Engineering 到 RAG 开发、模型微调的全部流程,用最适合国内学习者的方式,指导国内开发者如何学习、入门 LLM 相关项目。

针对不同内容的特点,我们对共计 11 门吴恩达老师的大模型课程进行了翻译复现,并结合国内学习者的实际情况,对不同课程进行了分级和排序,初学者可以先系统学习我们的必修类课程,掌握入门 LLM 所有方向都需要掌握的基础技能和概念,再选择性地学习我们的选修类课程,在自己感兴趣的方向上不断探索和学习。

如果有你非常喜欢但我们还没有进行复现的吴恩达老师大模型课程,我们欢迎每一位开发者参考我们已有课程的格式和写法来对课程进行复现并提交 PR,在 PR 审核通过后,我们会根据课程内容将课程进行分级合并。欢迎每一位开发者的贡献!

在线阅读地址:面向开发者的 LLM 入门课程-在线阅读

PDF下载地址:面向开发者的 LLM 入门教程-PDF

英文原版地址:吴恩达关于大模型的系列课程

LLM 正在逐步改变人们的生活,而对于开发者,如何基于 LLM 提供的 API 快速、便捷地开发一些具备更强能力、集成LLM 的应用,来便捷地实现一些更新颖、更实用的能力,是一个急需学习的重要能力。

由吴恩达老师与 OpenAI 合作推出的大模型系列教程,从大模型时代开发者的基础技能出发,深入浅出地介绍了如何基于大模型 API、LangChain 架构快速开发结合大模型强大能力的应用。其中,《Prompt Engineering for Developers》教程面向入门 LLM 的开发者,深入浅出地介绍了对于开发者,如何构造 Prompt 并基于 OpenAI 提供的 API 实现包括总结、推断、转换等多种常用功能,是入门 LLM 开发的经典教程;《Building Systems with the ChatGPT API》教程面向想要基于 LLM 开发应用程序的开发者,简洁有效而又系统全面地介绍了如何基于 ChatGPT API 打造完整的对话系统;《LangChain for LLM Application Development》教程结合经典大模型开源框架 LangChain,介绍了如何基于 LangChain 框架开发具备实用功能、能力全面的应用程序,《LangChain Chat With Your Data》教程则在此基础上进一步介绍了如何使用 LangChain 架构结合个人私有数据开发个性化大模型应用;《Building Generative AI Applications with Gradio》、《Evaluating and Debugging Generative AI》教程分别介绍了两个实用工具 Gradio 与 W&B,指导开发者如何结合这两个工具来打造、评估生成式 AI 应用。

上述教程非常适用于开发者学习以开启基于 LLM 实际搭建应用程序之路。因此,我们将该系列课程翻译为中文,并复现其范例代码,也为其中一个视频增加了中文字幕,支持国内中文学习者直接使用,以帮助中文学习者更好地学习 LLM 开发;我们也同时实现了效果大致相当的中文 Prompt,支持学习者感受中文语境下 LLM 的学习使用,对比掌握多语言语境下的 Prompt 设计与 LLM 开发。未来,我们也将加入更多 Prompt 高级技巧,以丰富本课程内容,帮助开发者掌握更多、更巧妙的 Prompt 技能。

所有具备基础 Python 能力,想要入门 LLM 的开发者。

《ChatGPT Prompt Engineering for Developers》、《Building Systems with the ChatGPT API》等教程作为由吴恩达老师与 OpenAI 联合推出的官方教程,在可预见的未来会成为 LLM 的重要入门教程,但是目前还只支持英文版且国内访问受限,打造中文版且国内流畅访问的教程具有重要意义;同时,GPT 对中文、英文具有不同的理解能力,本教程在多次对比、实验之后确定了效果大致相当的中文 Prompt,支持学习者研究如何提升 ChatGPT 在中文语境下的理解与生成能力。

本教程适用于所有具备基础 Python 能力,想要入门 LLM 的开发者。

如果你想要开始学习本教程,你需要提前具备:

- 至少一个 LLM API(最好是 OpenAI,如果是其他 API,你可能需要参考其他教程对 API 调用代码进行修改)

- 能够使用 Python Jupyter Notebook

本教程共包括 11 门课程,分为必修类、选修类两个类别。必修类课程是我们认为最适合初学者学习以入门 LLM 的课程,包括了入门 LLM 所有方向都需要掌握的基础技能和概念,我们也针对必修类课程制作了适合阅读的在线阅读和 PDF 版本,在学习必修类课程时,我们建议学习者按照我们列出的顺序进行学习;选修类课程是在必修类课程上的拓展延伸,包括了 RAG 开发、模型微调、模型评估等多个方面,适合学习者在掌握了必修类课程之后选择自己感兴趣的方向和课程进行学习。

必修类课程包括:

- 面向开发者的 Prompt Engineering。基于吴恩达老师《ChatGPT Prompt Engineering for Developers》课程打造,面向入门 LLM 的开发者,深入浅出地介绍了对于开发者,如何构造 Prompt 并基于 OpenAI 提供的 API 实现包括总结、推断、转换等多种常用功能,是入门 LLM 开发的第一步。

- 搭建基于 ChatGPT 的问答系统。基于吴恩达老师《Building Systems with the ChatGPT API》课程打造,指导开发者如何基于 ChatGPT 提供的 API 开发一个完整的、全面的智能问答系统。通过代码实践,实现了基于 ChatGPT 开发问答系统的全流程,介绍了基于大模型开发的新范式,是大模型开发的实践基础。

- 使用 LangChain 开发应用程序。基于吴恩达老师《LangChain for LLM Application Development》课程打造,对 LangChain 展开深入介绍,帮助学习者了解如何使用 LangChain,并基于 LangChain 开发完整的、具备强大能力的应用程序。

- 使用 LangChain 访问个人数据。基于吴恩达老师《LangChain Chat with Your Data》课程打造,深入拓展 LangChain 提供的个人数据访问能力,指导开发者如何使用 LangChain 开发能够访问用户个人数据、提供个性化服务的大模型应用。

选修类课程包括:

- 使用 Gradio 搭建生成式 AI 应用。基于吴恩达老师《Building Generative AI Applications with Gradio》课程打造,指导开发者如何使用 Gradio 通过 Python 接口程序快速、高效地为生成式 AI 构建用户界面。

- 评估改进生成式 AI。基于吴恩达老师《Evaluating and Debugging Generative AI》课程打造,结合 wandb,提供一套系统化的方法和工具,帮助开发者有效地跟踪和调试生成式 AI 模型。

- 微调大语言模型。基于吴恩达老师《Finetuning Large Language Model》课程打造,结合 lamini 框架,讲述如何便捷高效地在本地基于个人数据微调开源大语言模型。

- 大模型与语义检索。基于吴恩达老师《Large Language Models with Semantic Search》课程打造,针对检索增强生成,讲述了多种高级检索技巧以实现更准确、高效的检索增强 LLM 生成效果。

- 基于 Chroma 的高级检索。基于吴恩达老师《Advanced Retrieval for AI with Chroma》课程打造,旨在介绍基于 Chroma 的高级检索技术,提升检索结果的准确性。

- 搭建和评估高级 RAG 应用。基于吴恩达老师《Building and Evaluating Advanced RAG Applications》课程打造,介绍构建和实现高质量RAG系统所需的关键技术和评估框架。

- LangChain 的 Functions、Tools 和 Agents。基于吴恩达老师《Functions, Tools and Agents with LangChain》课程打造,介绍如何基于 LangChain 的新语法构建 Agent。

- Prompt 高级技巧。包括 CoT、自我一致性等多种 Prompt 高级技巧的基础理论与代码实现。

其他资料包括:

双语字幕视频地址:吴恩达 x OpenAI的Prompt Engineering课程专业翻译版

中英双语字幕下载:《ChatGPT提示工程》非官方版中英双语字幕

视频讲解:面向开发者的 Prompt Engineering 讲解(数字游民大会)

目录结构说明:

content:基于原课程复现的双语版代码,可运行的 Notebook,更新频率最高,更新速度最快。

docs:必修类课程文字教程版在线阅读源码,适合阅读的 md。

figures:图片文件。

核心贡献者

- 邹雨衡-项目负责人(Datawhale成员-对外经济贸易大学研究生)

- 长琴-项目发起人(内容创作者-Datawhale成员-AI算法工程师)

- 玉琳-项目发起人(内容创作者-Datawhale成员)

- 徐虎-教程编撰者(内容创作者-Datawhale成员)

- 刘伟鸿-教程编撰者(内容创作者-江南大学非全研究生)

- Joye-教程编撰者(内容创作者-数据科学家)

- 高立业(内容创作者-DataWhale成员-算法工程师)

- 邓宇文(内容创作者-Datawhale成员)

- 魂兮(内容创作者-前端工程师)

- 宋志学(内容创作者-Datawhale成员)

- 韩颐堃(内容创作者-Datawhale成员)

- 陈逸涵 (内容创作者-Datawhale意向成员-AI爱好者)

- 仲泰(内容创作者-Datawhale成员)

- 万礼行(内容创作者-视频翻译者)

- 王熠明(内容创作者-Datawhale成员)

- 曾浩龙(内容创作者-Datawhale 意向成员-JLU AI 研究生)

- 小饭同学(内容创作者)

- 孙韩玉(内容创作者-算法量化部署工程师)

- 张银晗(内容创作者-Datawhale成员)

- 左春生(内容创作者-Datawhale成员)

- 张晋(内容创作者-Datawhale成员)

- 李娇娇(内容创作者-Datawhale成员)

- 邓恺俊(内容创作者-Datawhale成员)

- 范致远(内容创作者-Datawhale成员)

- 周景林(内容创作者-Datawhale成员)

- 诸世纪(内容创作者-算法工程师)

- Zhang Yixin(内容创作者-IT爱好者)

- Sarai(内容创作者-AI应用爱好者)

其他

- 特别感谢 @Sm1les、@LSGOMYP 对本项目的帮助与支持;

- 感谢 GithubDaily 提供的双语字幕;

- 如果有任何想法可以联系我们 DataWhale 也欢迎大家多多提出 issue;

- 特别感谢以下为教程做出贡献的同学!

Made with contrib.rocks.

Datawhale 是一个专注于数据科学与 AI 领域的开源组织,汇集了众多领域院校和知名企业的优秀学习者,聚合了一群有开源精神和探索精神的团队成员。微信搜索公众号Datawhale可以加入我们。

本作品采用知识共享署名-非商业性使用-相同方式共享 4.0 国际许可协议进行许可。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llm-cookbook

Similar Open Source Tools

llm-cookbook

LLM Cookbook is a developer-oriented comprehensive guide focusing on LLM for Chinese developers. It covers various aspects from Prompt Engineering to RAG development and model fine-tuning, providing guidance on how to learn and get started with LLM projects in a way suitable for Chinese learners. The project translates and reproduces 11 courses from Professor Andrew Ng's large model series, categorizing them for beginners to systematically learn essential skills and concepts before exploring specific interests. It encourages developers to contribute by replicating unreproduced courses following the format and submitting PRs for review and merging. The project aims to help developers grasp a wide range of skills and concepts related to LLM development, offering both online reading and PDF versions for easy access and learning.

hdu-cs-wiki

The HDU Computer Science Lecture Notes is a comprehensive guide designed to help students navigate through various challenges in the field of computer science. It covers topics such as programming languages, artificial intelligence, software development, and more. The notes provide insights on how to effectively utilize university time, balance grades with project experience, and make informed decisions regarding career paths. Created by a collaborative effort involving students, teachers, and industry experts, the lecture notes aim to serve as a guiding tool for individuals seeking guidance in the computer science domain.

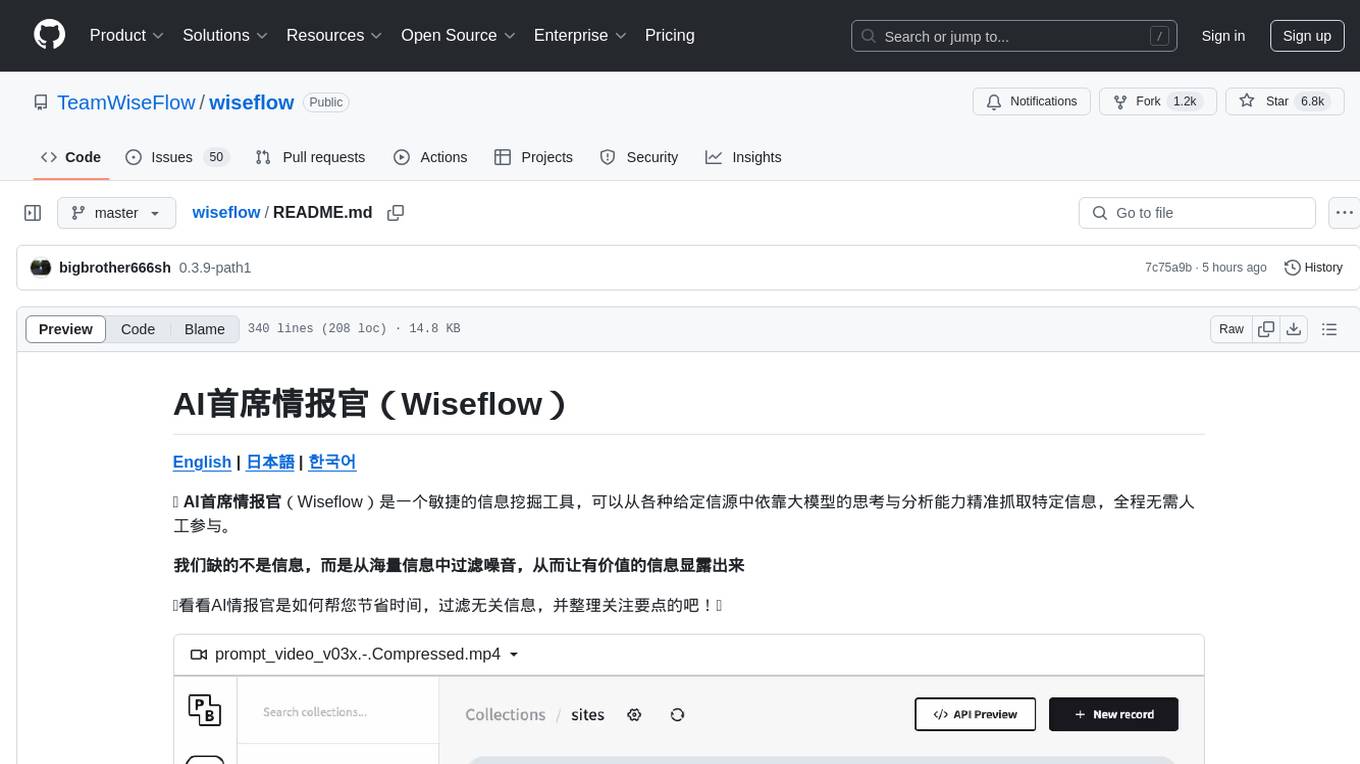

wiseflow

Wiseflow is an agile information mining tool that utilizes the thinking and analysis capabilities of large models to accurately extract specific information from various given sources, without the need for manual intervention. The tool focuses on filtering noise from a vast amount of information to reveal valuable insights. It is recommended to use normal language models for information extraction tasks to optimize speed and cost, rather than complex reasoning models. The tool is designed for continuous information gathering based on specified focus points from various sources.

chitu

Chitu is a high-performance inference framework for large language models, focusing on efficiency, flexibility, and availability. It supports various mainstream large language models, including DeepSeek, LLaMA series, Mixtral, and more. Chitu integrates latest optimizations for large language models, provides efficient operators with online FP8 to BF16 conversion, and is deployed for real-world production. The framework is versatile, supporting various hardware environments beyond NVIDIA GPUs. Chitu aims to enhance output speed per unit computing power, especially in decoding processes dependent on memory bandwidth.

jd_scripts

jd_scripts is a repository containing scripts for automating various tasks on the JD platform. The scripts provide instructions for setting up and using the tools to enhance user experience and efficiency in managing JD accounts and assets. Users can automate processes such as receiving notifications, redeeming rewards, participating in group purchases, and monitoring ticket availability. The repository also includes resources for optimizing performance and security measures to safeguard user accounts. With a focus on simplifying interactions with the JD platform, jd_scripts offers a comprehensive solution for maximizing benefits and convenience for JD users.

AI-LLM-ML-CS-Quant-Readings

AI-LLM-ML-CS-Quant-Readings is a repository dedicated to taking notes on Artificial Intelligence, Large Language Models, Machine Learning, Computer Science, and Quantitative Finance. It contains a wide range of resources, including theory, applications, conferences, essentials, foundations, system design, computer systems, finance, and job interview questions. The repository covers topics such as AI systems, multi-agent systems, deep learning theory and applications, system design interviews, C++ design patterns, high-frequency finance, algorithmic trading, stochastic volatility modeling, and quantitative investing. It is a comprehensive collection of materials for individuals interested in these fields.

AI-LLM-ML-CS-Quant-Overview

AI-LLM-ML-CS-Quant-Overview is a repository providing overview notes on AI, Large Language Models (LLM), Machine Learning (ML), Computer Science (CS), and Quantitative Finance. It covers various topics such as LangGraph & Cursor AI, DeepSeek, MoE (Mixture of Experts), NVIDIA GTC, LLM Essentials, System Design, Computer Systems, Big Data and AI in Finance, Econometrics and Statistics Conference, C++ Design Patterns and Derivatives Pricing, High-Frequency Finance, Machine Learning for Algorithmic Trading, Stochastic Volatility Modeling, Quant Job Interview Questions, Distributed Systems, Language Models, Designing Machine Learning Systems, Designing Data-Intensive Applications (DDIA), Distributed Machine Learning, and The Elements of Quantitative Investing.

PyTorch-Tutorial-2nd

The second edition of "PyTorch Practical Tutorial" was completed after 5 years, 4 years, and 2 years. On the basis of the essence of the first edition, rich and detailed deep learning application cases and reasoning deployment frameworks have been added, so that this book can more systematically cover the knowledge involved in deep learning engineers. As the development of artificial intelligence technology continues to emerge, the second edition of "PyTorch Practical Tutorial" is not the end, but the beginning, opening up new technologies, new fields, and new chapters. I hope to continue learning and making progress in artificial intelligence technology with you in the future.

HTFramework

HTFramework is a rapid development framework based on Unity, integrating modular requirements, code reusability, practicality, high cohesion, unified coding standards, extensibility, maintainability, generality, and pluggability. It provides continuous maintenance and upgrades. The framework includes modules for aspect-oriented program code tracking, audio management, controller simplification, coroutine scheduling, custom modules, custom datasets, debugging, entity-component-system, entity management, event handling, exception handling, finite state machines, hotfixing, input management, instruction system, main module access, network client, object pooling, procedures, reference pooling, resource loading, step editing, task editing, UI management, utility tools, web requests, and optional AI, ILRuntime-based hotfixing, XLua integration, and game component modules.

cc-sdd

The cc-sdd repository provides a tool for AI-Driven Development Life Cycle with Spec-Driven Development workflows for Claude Code and Gemini CLI. It includes powerful slash commands, Project Memory for AI learning, structured AI-DLC workflow, Spec-Driven Development methodology, and Kiro IDE compatibility. Ideal for feature development, code reviews, technical planning, and maintaining development standards. The tool supports multiple coding agents, offers an AI-DLC workflow with quality gates, and allows for advanced options like language and OS selection, preview changes, safe updates, and custom specs directory. It integrates AI-Driven Development Life Cycle, Project Memory, Spec-Driven Development, supports cross-platform usage, multi-language support, and safe updates with backup options.

LLM-Navigation

LLM-Navigation is a repository dedicated to documenting learning records related to large models, including basic knowledge, prompt engineering, building effective agents, model expansion capabilities, security measures against prompt injection, and applications in various fields such as AI agent control, browser automation, financial analysis, 3D modeling, and tool navigation using MCP servers. The repository aims to organize and collect information for personal learning and self-improvement through AI exploration.

writing-helper

A Next.js-based AI writing assistant that helps users organize writing style prompts and sends them to large language models (LLMs) to generate content. The tool aims to help writers, content creators, and copywriters improve writing efficiency and quality through AI technology. It features rich writing style customization, support for multiple LLM APIs, flexible API settings, user-friendly interface, real-time content editing, export function, detailed debugging information, dark/light mode support, and more.

AI-GAL

AI-GAL is a tool that offers a visual GUI for easier configuration file editing, branch selection mode for content generation, and bug fixes. Users can configure settings in config.ini, utilize cloud-based AI drawing and voice modes, set themes for script generation, and enjoy a wallpaper. Prior to usage, ensure a 4GB+ GPU, chatgpt key or local LLM deployment, and installation of stable diffusion, gpt-sovits, and rembg. To start, fill out the config.ini file and run necessary APIs. Restart a storyline by clearing story.txt in the game directory. Encounter errors? Copy the log.txt details and send them for assistance.

vocabulary-book-by-deepseek

Vocabulary Book by DeepSeek is a manual for CET-4, postgraduate entrance examination, and TOEFL vocabulary, providing word meanings, roots, example sentences, mnemonic aids, and mnemonic images. The project uses Cline + DeepSeek-R1-16b for over 80% of the code to automatically encode the vocabulary manual. The generated manual includes vocabulary from A to Z for CET-4, CET-6, postgraduate entrance examination, and TOEFL, along with features to generate Anki cards and PDFs. The tool also allows for the creation of mnemonic images for each word and articles.

ChatMemOllama

ChatMemOllama is a personal WeChat public account chatbot that combines a local AI model (provided by Ollama) and mem0 memory management functionality. The project aims to provide an intelligent, personalized chat experience. It features a local AI model for conversation, memory management through mem0 for a coherent dialogue experience, support for multiple users simultaneously (with logic issues in the test version), and quick responses within 5 seconds to users with timeout prompts. It allows or prohibits other users from calling AI, with ongoing development tasks including debugging multiple user handling logic and keyword replies, and completed tasks such as basic conversation and tool calling. The ultimate goal is to wait for pre-task testing completion.

gen-ai-experiments

Gen-AI-Experiments is a structured collection of Jupyter notebooks and AI experiments designed to guide users through various AI tools, frameworks, and models. It offers valuable resources for both beginners and experienced practitioners, covering topics such as AI agents, model testing, RAG systems, real-world applications, and open-source tools. The repository includes folders with curated libraries, AI agents, experiments, LLM testing, open-source libraries, RAG experiments, and educhain experiments, each focusing on different aspects of AI development and application.

For similar tasks

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

awesome-langchain-zh

The awesome-langchain-zh repository is a collection of resources related to LangChain, a framework for building AI applications using large language models (LLMs). The repository includes sections on the LangChain framework itself, other language ports of LangChain, tools for low-code development, services, agents, templates, platforms, open-source projects related to knowledge management and chatbots, as well as learning resources such as notebooks, videos, and articles. It also covers other LLM frameworks and provides additional resources for exploring and working with LLMs. The repository serves as a comprehensive guide for developers and AI enthusiasts interested in leveraging LangChain and LLMs for various applications.

Large-Language-Model-Notebooks-Course

This practical free hands-on course focuses on Large Language models and their applications, providing a hands-on experience using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

awesome-local-llms

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open weights LLMs. The repository categorizes these tools into LLM inference backend engines, LLM front end UIs, and all-in-one desktop applications. It collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged, and users can suggest additional open-source repositories through the Issues section or by running a provided script to update the README and make a pull request. The repository aims to provide a comprehensive resource for exploring and utilizing local LLM tools.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.