cosmos-predict1

Cosmos-Predict1 is a collection of general-purpose world foundation models for Physical AI that can be fine-tuned into customized world models for downstream applications.

Stars: 115

Cosmos-Predict1 is a specialized branch of Cosmos World Foundation Models (WFMs) focused on future state prediction, offering diffusion-based and autoregressive-based world foundation models for Text2World and Video2World generation. It includes image and video tokenizers for efficient tokenization, along with post-training and pre-training scripts for Physical AI builders. The tool provides various models for different tasks such as text to visual world generation, video-based future visual world generation, and tokenization of images and videos.

README:

Cosmos-Predict1 is a key branch of Cosmos World Foundation Models (WFMs) specialized for future state prediction, often referred to as world models. The three main branches of Cosmos WFMs are cosmos-predict, cosmos-transfer, and cosmos-reason. We visualize the architecture of Cosmos-Predict1 in the following figure.

Cosmos-Predict1 includes the following:

- Diffusion-based world foundation models for Text2World and Video2World generation, where a user can generate visual simulation based on text prompts and video prompts.

- Autoregressive-based world foundation models for Video2World generation, where a user can generate visual simulation based on video prompts and optional text prompts.

- Image and video tokenizers for tokenizing videos into continuous tokens (latent vectors) and discrete tokens (integers) efficiently and effectively.

- Post-training scripts that help Physical AI builders post-train the pre-trained Cosmos-Predict1 models for their applications.

- Pre-training scripts for helping Physical AI builders train their WFMs from scratch.

We provide a comprehensive set of examples that illustrate how to perform inference, post-training, and other tasks with Cosmos-Predict1. Click a relevant example below and start your Cosmos journey.

Please refer to INSTALL.md for general instructions on environment setup.

- Inference with diffusion-based Text2World models [with multi-GPU support]

- Inference with diffusion-based Video2World models [with multi-GPU support]

- Inference with autoregressive-based base models [with multi-GPU support]

- Inference with autoregressive-based Video2World models [with multi-GPU support]

- Inference with tokenizer models

- Post-train diffusion-based Text2World models using custom datasets [with multi-node support]

- Post-train diffusion-based Video2World models using custom datasets [with multi-node support]

- Post-train diffusion-based Text2World models using custom multi-view datasets [with multi-node support]

- Post-train diffusion-based Video2World models using custom multi-view datasets [with multi-node support]

- Post-train autoregressive-based base models using custom datasets [with multi-node support]

- Post-train tokenizers using custom datasets [with multi-node support]

- Inference with post-trained multi-view diffusion-based Text2World models [with multi-GPU support]

- Inference with post-trained multi-view diffusion-based Video2World models [with multi-GPU support]

Cosmos-Predict1 includes the following models:

Diffusion models

- Cosmos-Predict1-7B-Text2World: Text to visual world generation

- Cosmos-Predict1-14B-Text2World: Text to visual world generation

- Cosmos-Predict1-7B-Video2World: Video + Text based future visual world generation

- Cosmos-Predict1-14B-Video2World: Video + Text based future visual world generation

Autoregressive models

- Cosmos-Predict1-4B: Future visual world generation

- Cosmos-Predict1-12B: Future visual world generation

- Cosmos-Predict1-5B-Video2World: Video + Text based future visual world generation

- Cosmos-Predict1-13B-Video2World: Video + Text based future visual world generation

Tokenizers

- Cosmos-Tokenize1-CV8×8×8-720p: Continuous Video Tokenizer with 8x8x8 spatio-temporal compression and a 121-frame context

- Cosmos-Tokenize1-DV8×16×16-720p: Discrete Video Tokenizer with 8x16x16 spatio-temporal compression and a 49-frame context

- Cosmos-Tokenize1-CI8×8-360p: Continuous Image Tokenizer with 8x8 spatial compression and low-resolution support

- Cosmos-Tokenize1-CI16x16-360p: Continuous Image Tokenizer with 16x16 spatial compression and low-resolution support

- Cosmos-Tokenize1-CV4×8×8-360p: Continuous Video Tokenizer with 4x8x8 spatio-temporal compression and low-resolution support

- Cosmos-Tokenize1-DI8×8-360p: Discrete Image Tokenizer with 8x8 spatial compression and low-resolution support

- Cosmos-Tokenize1-DI16x16-360p: Discrete Image Tokenizer with 16x16 spatial compression and low-resolution support

- Cosmos-Tokenize1-DV4×8×8-360p: Discrete Video Tokenizer with 4x8x8 spatio-temporal compression and low-resolution support
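The tokenizer names above encode the token type (C for continuous, D for discrete), the modality (I for image, V for video), and the compression factors. As a rough illustration of what those factors mean for the size of the token grid, here is a minimal Python sketch. It is not part of the Cosmos code base: `TokenizerSpec`, `parse_tokenizer_name`, and `latent_grid` are hypothetical helpers, the 1280×720 frame size for "720p" is an assumption, and the temporally causal rule `1 + (frames - 1) // temporal` is an assumption chosen because it fits the 121-frame and 49-frame contexts listed above.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenizerSpec:
    """Hypothetical container for the fields encoded in a tokenizer name."""
    continuous: bool  # True for "C" (latent vectors), False for "D" (integers)
    video: bool       # True for "V" (video), False for "I" (image)
    temporal: int     # temporal compression factor (1 for image tokenizers)
    spatial: int      # spatial compression factor along each axis
    resolution: str   # resolution tag from the name, e.g. "720p"


# Accept both "x" and "×" as separators, since the list above uses both.
_NAME = re.compile(r"^Cosmos-Tokenize1-([CD])([IV])(\d+(?:[x×]\d+)+)-(\d+p)$")


def parse_tokenizer_name(name: str) -> TokenizerSpec:
    """Split a name such as 'Cosmos-Tokenize1-CV8×8×8-720p' into its parts."""
    match = _NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized tokenizer name: {name!r}")
    kind, modality, factors, resolution = match.groups()
    dims = [int(f) for f in re.split(r"[x×]", factors)]
    is_video = modality == "V"
    if len(dims) != (3 if is_video else 2):
        raise ValueError(f"unexpected number of factors in {name!r}")
    temporal = dims[0] if is_video else 1
    return TokenizerSpec(kind == "C", is_video, temporal, dims[-1], resolution)


def latent_grid(spec: TokenizerSpec, frames: int, height: int, width: int):
    """Return (latent_frames, latent_height, latent_width).

    Assumes a temporally causal tokenizer: the first frame is encoded on
    its own and every following group of `temporal` frames adds one
    latent frame.
    """
    latent_frames = 1 + (frames - 1) // spec.temporal
    return latent_frames, height // spec.spatial, width // spec.spatial


if __name__ == "__main__":
    cv = parse_tokenizer_name("Cosmos-Tokenize1-CV8×8×8-720p")
    print(latent_grid(cv, frames=121, height=720, width=1280))  # (16, 90, 160)

    dv = parse_tokenizer_name("Cosmos-Tokenize1-DV8×16×16-720p")
    print(latent_grid(dv, frames=49, height=720, width=1280))   # (7, 45, 80)
```

Under these assumptions, a 121-frame 720p clip shrinks to a 16×90×160 grid of latent vectors with the CV8×8×8 tokenizer, which is why tokenization makes training and inference on video tractable. Check the official tokenizer documentation for the exact shapes before relying on these numbers.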

This project will download and install additional third-party open source software projects. Review the license terms of these open source projects before use.

NVIDIA Cosmos source code is released under the Apache 2 License.

NVIDIA Cosmos models are released under the NVIDIA Open Model License. For a custom license (such as exemption of guardrail), please contact [email protected].

Alternative AI tools for cosmos-predict1

Similar Open Source Tools

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

DecryptPrompt

This repository does not provide a tool, but rather a collection of resources and strategies for academics in the field of artificial intelligence who are feeling depressed or overwhelmed by the rapid advancements in the field. The resources include articles, blog posts, and other materials that offer advice on how to cope with the challenges of working in a fast-paced and competitive environment.

RAG-Retrieval

RAG-Retrieval is an end-to-end code repository that provides training, inference, and distillation capabilities for the RAG retrieval model. It supports fine-tuning of various open-source RAG retrieval models, including embedding models, late interactive models, and reranker models. The repository offers a lightweight Python library for calling different RAG ranking models and allows distillation of LLM-based reranker models into bert-based reranker models. It includes features such as support for end-to-end fine-tuning, distillation of large models, advanced algorithms like MRL, multi-GPU training strategy, and a simple code structure for easy modifications.

llm-awq

AWQ (Activation-aware Weight Quantization) is a tool designed for efficient and accurate low-bit weight quantization (INT3/4) for Large Language Models (LLMs). It supports instruction-tuned models and multi-modal LMs, providing features such as AWQ search for accurate quantization, pre-computed AWQ model zoo for various LLMs, memory-efficient 4-bit linear in PyTorch, and efficient CUDA kernel implementation for fast inference. The tool enables users to run large models on resource-constrained edge platforms, delivering more efficient responses with LLM/VLM chatbots through 4-bit inference.

RAG-Retrieval

RAG-Retrieval provides full-chain RAG retrieval fine-tuning and inference code. It supports fine-tuning any open-source RAG retrieval models, including vector (embedding, graph a), delayed interactive models (ColBERT, graph d), interactive models (cross encoder, graph c). For inference, RAG-Retrieval focuses on ranking (reranker) and has developed a lightweight Python library rag-retrieval, providing a unified way to call any different RAG ranking models.

rlhf_thinking_model

This repository is a collection of research notes and resources focusing on training large language models (LLMs) and Reinforcement Learning from Human Feedback (RLHF). It includes methodologies, techniques, and state-of-the-art approaches for optimizing preferences and model alignment in LLM training. The purpose is to serve as a reference for researchers and engineers interested in reinforcement learning, large language models, model alignment, and alternative RL-based methods.

veScale

veScale is a PyTorch Native LLM Training Framework. It provides a set of tools and components to facilitate the training of large language models (LLMs) using PyTorch. veScale includes features such as 4D parallelism, fast checkpointing, and a CUDA event monitor. It is designed to be scalable and efficient, and it can be used to train LLMs on a variety of hardware platforms.

kornia

Kornia is a differentiable computer vision library for PyTorch. It consists of a set of routines and differentiable modules to solve generic computer vision problems. At its core, the package uses PyTorch as its main backend both for efficiency and to take advantage of the reverse-mode auto-differentiation to define and compute the gradient of complex functions.

Awesome-local-LLM

Awesome-local-LLM is a curated list of platforms, tools, practices, and resources that help run Large Language Models (LLMs) locally. It includes sections on inference platforms, engines, user interfaces, specific models for general purpose, coding, vision, audio, and miscellaneous tasks. The repository also covers tools for coding agents, agent frameworks, retrieval-augmented generation, computer use, browser automation, memory management, testing, evaluation, research, training, and fine-tuning. Additionally, there are tutorials on models, prompt engineering, context engineering, inference, agents, retrieval-augmented generation, and miscellaneous topics, along with a section on communities for LLM enthusiasts.

CogVideo

CogVideo is an open-source repository that provides pretrained text-to-video models for generating videos based on input text. It includes models like CogVideoX-2B and CogVideo, offering powerful video generation capabilities. The repository offers tools for inference, fine-tuning, and model conversion, along with demos showcasing the model's capabilities through CLI, web UI, and online experiences. CogVideo aims to facilitate the creation of high-quality videos from textual descriptions, catering to a wide range of applications.

long-llms-learning

This repository surveys the methodology literature on Transformer architecture upgrades that let Large Language Models handle extended context windows, and it is updated continuously with newly published work. It includes a survey on advancing Transformer architecture in long-context large language models, a flash-ReRoPE implementation, the latest news on data engineering, lightning attention, the Kimi AI assistant, chatglm-6b-128k, and gpt-4-turbo-preview, benchmarks such as InfiniteBench and LongBench, long-LLMs-evals for evaluating methods that enhance long-context capabilities, and LLMs-learning for learning the technologies and applied tasks of Large Language Models.

ERNIE

ERNIE 4.5 is a family of large-scale multimodal models with 10 distinct variants, including Mixture-of-Experts (MoE) models with 47B and 3B active parameters. The models feature a novel heterogeneous modality structure supporting parameter sharing across modalities while allowing dedicated parameters for each individual modality. Trained with optimal efficiency using PaddlePaddle deep learning framework, ERNIE 4.5 models achieve state-of-the-art performance across text and multimodal benchmarks, enhancing multimodal understanding without compromising performance on text-related tasks. The open-source development toolkits for ERNIE 4.5 offer industrial-grade capabilities, resource-efficient training and inference workflows, and multi-hardware compatibility.

FuseAI

FuseAI is a repository that focuses on knowledge fusion of large language models. It includes FuseChat, a state-of-the-art 7B LLM on MT-Bench, and FuseLLM, which surpasses Llama-2-7B by fusing three open-source foundation LLMs. The repository provides tech reports, releases, and datasets for FuseChat and FuseLLM, showcasing their performance and advancements in the field of chat models and large language models.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

Awesome-LLMs-on-device

Welcome to the ultimate hub for on-device Large Language Models (LLMs)! This repository is your go-to resource for all things related to LLMs designed for on-device deployment. Whether you're a seasoned researcher, an innovative developer, or an enthusiastic learner, this comprehensive collection of cutting-edge knowledge is your gateway to understanding, leveraging, and contributing to the exciting world of on-device LLMs.

For similar tasks

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

MathCoder

MathCoder is a repository focused on enhancing mathematical reasoning by fine-tuning open-source language models to use code for modeling and deriving math equations. It introduces MathCodeInstruct dataset with solutions interleaving natural language, code, and execution results. The repository provides MathCoder models capable of generating code-based solutions for challenging math problems, achieving state-of-the-art scores on MATH and GSM8K datasets. It offers tools for model deployment, inference, and evaluation, along with a citation for referencing the work.

llm_qlora

LLM_QLoRA is a repository for fine-tuning Large Language Models (LLMs) using QLoRA methodology. It provides scripts for training LLMs on custom datasets, pushing models to HuggingFace Hub, and performing inference. Additionally, it includes models trained on HuggingFace Hub, a blog post detailing the QLoRA fine-tuning process, and instructions for converting and quantizing models. The repository also addresses troubleshooting issues related to Python versions and dependencies.

instruct-ner

Instruct NER is a solution for complex Named Entity Recognition tasks, including Nested NER, based on modern Large Language Models (LLMs). It provides tools for dataset creation, training, automatic metric calculation, inference, error analysis, and model implementation. Users can create instructions for LLM, build dictionaries with labels, and generate model input templates. The tool supports various entity types and datasets, such as RuDReC, NEREL-BIO, CoNLL-2003, and MultiCoNER II. It offers training scripts for LLMs and metric calculation functions. Instruct NER models like Llama, Mistral, T5, and RWKV are implemented, with HuggingFace models available for adaptation and merging.

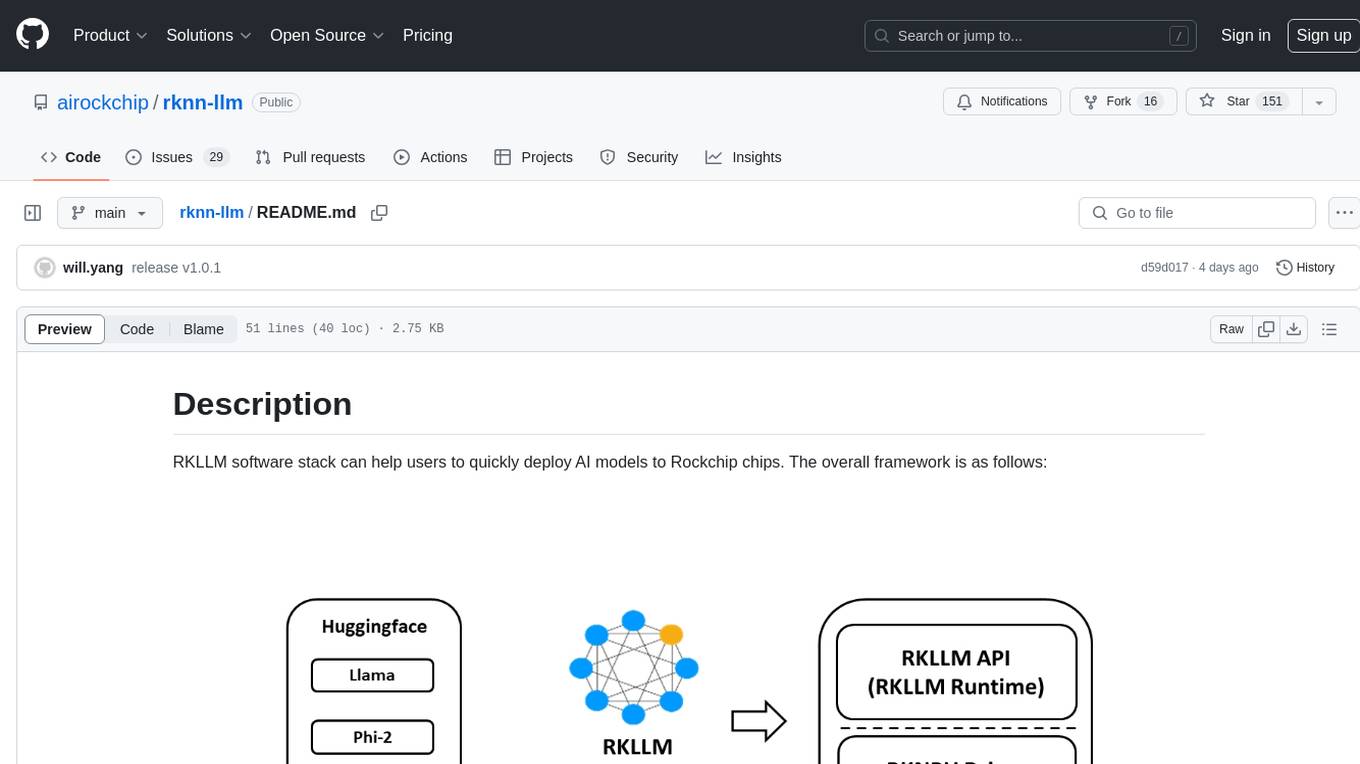

rknn-llm

RKLLM software stack is a toolkit designed to help users quickly deploy AI models to Rockchip chips. It consists of RKLLM-Toolkit for model conversion and quantization, RKLLM Runtime for deploying models on Rockchip NPU platform, and RKNPU kernel driver for hardware interaction. The toolkit supports RK3588 and RK3576 series chips and various models like TinyLLAMA, Qwen, Phi, ChatGLM3, Gemma, InternLM2, and MiniCPM. Users can download packages, docker images, examples, and docs from RKLLM_SDK. Additionally, RKNN-Toolkit2 SDK is available for deploying additional AI models.

booster

Booster is a powerful inference accelerator designed for scaling large language models within production environments or for experimental purposes. It is built with performance and scaling in mind, supporting various CPUs and GPUs, including Nvidia CUDA, Apple Metal, and OpenCL cards. The tool can split large models across multiple GPUs, offering fast inference on machines with beefy GPUs. It supports both regular FP16/FP32 models and quantised versions, along with popular LLM architectures. Additionally, Booster features proprietary Janus Sampling for code generation and non-English languages.

For similar jobs

Detection-and-Classification-of-Alzheimers-Disease

This tool is designed to detect and classify Alzheimer's Disease at an early stage using Deep Learning and Machine Learning algorithms, further optimized with the Crow Search Algorithm (CSA). Alzheimer's is a fatal disease, and early detection is crucial for patients to predetermine their condition and prevent its progression. By analyzing MRI scanned images using Artificial Intelligence technology, this tool can classify patients who may or may not develop AD in the future. The CSA algorithm, combined with ML algorithms, has proven to be the most effective approach for this purpose.

Co-LLM-Agents

This repository contains code for building cooperative embodied agents modularly with large language models. The agents are trained to perform tasks in two different environments: ThreeDWorld Multi-Agent Transport (TDW-MAT) and Communicative Watch-And-Help (C-WAH). TDW-MAT is a multi-agent environment where agents must transport objects to a goal position using containers. C-WAH is an extension of the Watch-And-Help challenge, which enables agents to send messages to each other. The code in this repository can be used to train agents to perform tasks in both of these environments.

awesome-synthetic-datasets

This repository focuses on organizing resources for building synthetic datasets using large language models. It covers important datasets, libraries, tools, tutorials, and papers related to synthetic data generation. The goal is to provide pragmatic and practical resources for individuals interested in creating synthetic datasets for machine learning applications.

ai-devices

AI Devices Template is a project that serves as an AI-powered voice assistant utilizing various AI models and services to provide intelligent responses to user queries. It supports voice input, transcription, text-to-speech, image processing, and function calling with conditionally rendered UI components. The project includes customizable UI settings, optional rate limiting using Upstash, and optional tracing with Langchain's LangSmith for function execution. Users can clone the repository, install dependencies, add API keys, start the development server, and deploy the application. Configuration settings can be modified in `app/config.tsx` to adjust settings and configurations for the AI-powered voice assistant.

ROSGPT_Vision

ROSGPT_Vision is a new robotic framework designed to command robots using only two prompts: a Visual Prompt for visual semantic features and an LLM Prompt to regulate robotic reactions. It is based on the Prompting Robotic Modalities (PRM) design pattern and is used to develop CarMate, a robotic application for monitoring driver distractions and providing real-time vocal notifications. The framework leverages state-of-the-art language models to facilitate advanced reasoning about image data and offers a unified platform for robots to perceive, interpret, and interact with visual data through natural language. LangChain is used for easy customization of prompts, and the implementation includes the CarMate application for driver monitoring and assistance.

AIBotPublic

AIBotPublic is an open-source version of AIBotPro, a comprehensive AI tool that provides various features such as knowledge base construction, AI drawing, API hosting, and more. It supports custom plugins and parallel processing of multiple files. The tool is built using bootstrap4 for the frontend, .NET6.0 for the backend, and utilizes technologies like SqlServer, Redis, and Milvus for database and vector database functionalities. It integrates third-party dependencies like Baidu AI OCR, Milvus C# SDK, Google Search, and more to enhance its capabilities.

LLMGA

LLMGA (Multimodal Large Language Model-based Generation Assistant) is a tool that leverages Large Language Models (LLMs) to assist users in image generation and editing. It provides detailed language generation prompts for precise control over Stable Diffusion (SD), resulting in more intricate and precise content in generated images. The tool curates a dataset for prompt refinement, similar image generation, inpainting & outpainting, and visual question answering. It offers a two-stage training scheme to optimize SD alignment and a reference-based restoration network to alleviate texture, brightness, and contrast disparities in image editing. LLMGA shows promising generative capabilities and enables wider applications in an interactive manner.

MetaAgent

MetaAgent is a multi-agent collaboration platform designed to build, manage, and deploy multi-modal AI agents without the need for coding. Users can easily create AI agents by editing a yml file or using the provided UI. The platform supports features such as building LLM-based AI agents, multi-modal interactions with users using texts, audios, images, and videos, creating a company of agents for complex tasks like drawing comics, vector database and knowledge embeddings, and upcoming features like UI for creating and using AI agents, fine-tuning, and RLHF. The tool simplifies the process of creating and deploying AI agents for various tasks.