OpenManus-RL

A live-streamed development of RL tuning for LLM agents

Stars: 3456

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

README:

OpenManus-RL is an open-source initiative collaboratively led by Ulab-UIUC and MetaGPT.

This project is an extended version of the original @OpenManus initiative. Inspired by successful RL tuning of reasoning LLMs such as DeepSeek-R1 and QwQ-32B, we will explore new paradigms for RL-based LLM agent tuning, building on those foundations.

We are committed to regularly updating our exploration directions and results in a dynamic, live-streaming fashion. All progress, including rigorous testing on agent benchmarks such as GAIA, AgentBench, WebShop, and OSWorld, and tuned models, will be openly shared and continuously updated.

We warmly welcome contributions from the broader community—join us in pushing the boundaries of agent reasoning and tool integration!

Code and dataset are now available! The verl submodule has been integrated for enhanced RL training capabilities.

- OpenManus-RL

- Running

- Related Work

- Acknowledgement

- Community Group

- Citation

- Documentation

- [2025-03-09] 🍺 We collected and open-sourced our Agent SFT dataset on Hugging Face, go try it!

- [2025-03-08] 🎉 We are collaborating with @OpenManus from MetaGPT to work on this project together!

- [2025-03-06] 🥳 We (UIUC-Ulab) are announcing our live-streaming project, OpenManus-RL.

@Kunlun Zhu (Ulab-UIUC), @Muxin Tian, @Zijia Liu (Ulab-UIUC), @Yingxuan Yang, @Jiayi Zhang (MetaGPT), @Xinbing Liang, @Weijia Zhang, @Haofei Yu (Ulab-UIUC), @Cheng Qian, @Bowen Jin

We wholeheartedly welcome suggestions, feedback, and contributions from the community, including fine-tuning code, tuning datasets, environment setup, and computing resources. Feel free to:

- Create issues for feature requests, bug reports, or ideas.

- Submit pull requests to help improve OpenManus-RL.

- Reach out to us for direct collaboration.

Important contributors will be listed as co-authors on our paper.

- Agent Environment Support: Setting up LLM agent environments for online RL tuning.

- Agent Trajectories Data Collection: Connect to specialized reasoning models such as DeepSeek-R1 and QwQ-32B for more complex inference tasks to collect comprehensive agent trajectories.

- RL-Tuning Model Paradigm: Provide an RL fine-tuning approach for customizing the agent's behavior in our agent environment.

- Test on Agent Benchmarks: Evaluate our framework on agentic benchmarks such as WebShop, GAIA, OSWorld, and AgentBench.

Our method proposes an advanced reinforcement learning (RL)-based agent tuning framework designed to significantly enhance reasoning and decision-making capabilities of large language models (LLMs). Drawing inspiration from RAGEN's Reasoning-Interaction Chain Optimization (RICO), our approach further explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments.

To benchmark the reasoning capabilities effectively, we evaluate multiple state-of-the-art reasoning models:

- GPT-O1

- Deepseek-R1

- QwQ-32B

Each model provides unique reasoning capabilities that inform downstream optimization and training strategies.

We experiment with a variety of rollout strategies to enhance agent planning efficiency and reasoning robustness, including:

- Tree-of-Thoughts (ToT): Employs tree-based reasoning paths, enabling agents to explore branching possibilities systematically.

- Graph-of-Thoughts (GoT): Utilizes graph structures to represent complex reasoning dependencies effectively.

- DFSDT (Depth-First Search-based Decision Tree): Optimizes action selection through depth-first search, enhancing long-horizon planning.

- Monte Carlo Tree Search (MCTS): Explores reasoning and decision paths probabilistically, balancing exploration and exploitation effectively.

These methods help identify optimal rollout techniques for various reasoning tasks.
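To make the exploration/exploitation trade-off in MCTS concrete, here is a minimal sketch of UCT-style child selection. It is not taken from the OpenManus-RL codebase: the `Node` structure, the function names, and the exploration constant `c=1.4` are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One reasoning step or action in the search tree (hypothetical structure)."""
    label: str
    visits: int = 0
    total_reward: float = 0.0
    children: List["Node"] = field(default_factory=list)


def uct_score(child: Node, parent_visits: int, c: float = 1.4) -> float:
    """Mean reward (exploitation) plus a bonus for rarely visited children (exploration)."""
    if child.visits == 0:
        return math.inf  # always try unvisited children first
    exploit = child.total_reward / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def select_child(parent: Node, c: float = 1.4) -> Node:
    """Pick the child with the highest UCT score."""
    return max(parent.children, key=lambda ch: uct_score(ch, parent.visits, c))
```

With `c=0` the selection is purely greedy on mean reward; larger values of `c` shift visits toward less explored branches.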

We specifically analyze and compare several reasoning output formats, notably:

- ReAct: Integrates reasoning and action explicitly, encouraging structured decision-making.

- Outcome-based Reasoning: Optimizes toward explicit outcome predictions, driving focused goal alignment.

These formats are rigorously compared to derive the most effective reasoning representation for various tasks.
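As an illustration of the ReAct format, the sketch below splits one assistant turn into its thought and its action. It assumes the `Think:` and `Act:` line prefixes used in this README's dataset example; `ReActStep` and `parse_react` are hypothetical names, not part of the project's API.

```python
import re
from typing import NamedTuple, Optional


class ReActStep(NamedTuple):
    thought: Optional[str]
    action: Optional[str]


# Assumed markers: a "Think:" line followed by an "Act:" section.
_THINK = re.compile(r"^Think:\s*(.*)$", re.MULTILINE)
# DOTALL lets the action span several lines (for example an embedded shell snippet).
_ACT = re.compile(r"^Act:\s*(.*)$", re.MULTILINE | re.DOTALL)


def parse_react(text: str) -> ReActStep:
    """Extract the thought line and everything after 'Act:' from one turn."""
    think = _THINK.search(text)
    act = _ACT.search(text)
    return ReActStep(
        thought=think.group(1).strip() if think else None,
        action=act.group(1).strip() if act else None,
    )


if __name__ == "__main__":
    print(parse_react("Think: Verified through execution\nAct: answer(220)"))
```

A turn that lacks either marker yields `None` for that field, which is a simple way to detect malformed outputs.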

We investigate multiple post-training methodologies to fine-tune agent reasoning effectively:

- Supervised Fine-Tuning (SFT): Initializes reasoning capabilities using human-annotated instructions.

- Group Relative Policy Optimization (GRPO): Incorporates:

  - Format-based Rewards: Rewards adherence to specified reasoning structures.

  - Outcome-based Rewards: Rewards accurate task completion and goal attainment.

- Proximal Policy Optimization (PPO): Enhances agent stability through proximal updates.

- Direct Preference Optimization (DPO): Leverages explicit human preferences to optimize agent outputs directly.

- Preference-based Reward Modeling (PRM): Uses learned reward functions derived from human preference data.
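The format-based and outcome-based rewards listed under GRPO can be sketched as follows, together with the group-relative advantage that GRPO computes by normalizing each reward against the other samples for the same prompt. This is a minimal illustration, not the project's implementation: the `Think:`/`Act:` format check, the exact-match outcome check, the `format_weight=0.2` weighting, and the use of the population standard deviation are all assumptions.

```python
from statistics import mean, pstdev
from typing import List


def format_reward(response: str) -> float:
    """1.0 if the response has both a 'Think:' and an 'Act:' line (assumed format)."""
    lines = [ln.strip() for ln in response.splitlines()]
    has_think = any(ln.startswith("Think:") for ln in lines)
    has_act = any(ln.startswith("Act:") for ln in lines)
    return 1.0 if has_think and has_act else 0.0


def outcome_reward(predicted: str, expected: str) -> float:
    """1.0 on an exact match of the final answer (assumed success criterion)."""
    return 1.0 if predicted.strip() == expected.strip() else 0.0


def total_reward(response: str, predicted: str, expected: str,
                 format_weight: float = 0.2) -> float:
    """Weighted sum of the two signals; the weight is a hypothetical choice."""
    return format_weight * format_reward(response) + outcome_reward(predicted, expected)


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize rewards within one group of samples drawn for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]
```

If every sample in a group receives the same reward, all advantages are zero and that group contributes no learning signal.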

We train specialized agent reward models using annotated data to accurately quantify nuanced reward signals. These models are then leveraged to guide agent trajectory selection during both training and evaluation phases.
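One simple way a reward model can guide trajectory selection is best-of-N: score each candidate trajectory and keep the highest-scoring one. The sketch below assumes this strategy for illustration only; `toy_score` is a hypothetical stand-in for a learned reward model, and the trajectory layout (a list of role/content turns) mirrors the dataset example in this README.

```python
from typing import Callable, Dict, List, Sequence

Trajectory = List[Dict[str, str]]  # turns of the form {"role": ..., "content": ...}


def select_best_trajectory(candidates: Sequence[Trajectory],
                           score_fn: Callable[[Trajectory], float]) -> Trajectory:
    """Return the candidate that the scoring function rates highest."""
    if not candidates:
        raise ValueError("need at least one candidate trajectory")
    return max(candidates, key=score_fn)


def toy_score(trajectory: Trajectory) -> float:
    """Hypothetical scorer: rewards a final answer and lightly penalizes length."""
    final = trajectory[-1]["content"] if trajectory else ""
    answered = 1.0 if "answer(" in final else 0.0
    return answered - 0.01 * len(trajectory)
```

In practice `score_fn` would wrap a call to the trained reward model; the selection logic stays the same.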

During the inference phase, trajectory scaling methods are implemented, allowing agents to flexibly adapt to varying task complexities, thus enhancing robustness and performance in real-world scenarios.

Agents are equipped with action-space awareness, employing systematic exploration strategies designed to navigate complex action spaces effectively, ultimately maximizing expected rewards.

We integrate insights and methodologies from leading RL tuning frameworks, including:

- Verl (integrated as a Git submodule): Our primary RL framework, providing advanced training capabilities for agent optimization

- TinyZero

- OpenR1

- Trlx

The verl submodule is fully integrated into OpenManus-RL, providing:

- Advanced RL Algorithms - PPO, DPO, and custom reward modeling

- Efficient Training - Optimized for large language model fine-tuning

- Flexible Configuration - Easy customization of training parameters

- Production Ready - Battle-tested framework from ByteDance

Through these frameworks, agents can effectively balance exploration and exploitation, optimize reasoning processes, and adapt dynamically to novel environments.

In summary, our method systematically integrates advanced reasoning paradigms, diverse rollout strategies, sophisticated reward modeling, and robust RL frameworks, significantly advancing the capability and adaptability of reasoning-enhanced LLM agents.

OpenManusRL-Dataset combines agent trajectories from AgentInstruct, Agent-FLAN, and AgentTraj-L (AgentGym), with the following features:

- 🔍 ReAct Framework - Reasoning-Acting integration

- 🧠 Structured Training - Separate format/reasoning learning

- 🚫 Anti-Hallucination - Negative samples + environment grounding

- 🌐 6 Domains - OS, DB, Web, KG, Household, E-commerce

| Source | Trajectories | Avg Turns | Key Features |
|---|---|---|---|
| AgentInstruct | 1,866 | 5.24 | Multi-task QA, CoT reasoning |
| Agent-FLAN | 34,442 | 3-35 | Error recovery patterns, diverse real-world tasks |
| AgentTraj-L | 14,485 | 3-35 | Interactive environments and tasks |
| Combined | 50,793 | 4-20 | Enhanced generalization, uniform format with broader task coverage |

- text-generation: ReAct-style instruction following

- conversational-ai: Tool-augmented dialogues

Language: English

ReAct Pattern Example:

{
  "id": "os_0",
  "conversations": [
    {"role": "user", "content": "Count files in /etc"},
    {"role": "assistant", "content": "Think: Need reliable counting method\nAct: bash\n```bash\nls -1 /etc | wc -l\n```"},
    {"role": "user", "content": "OS Output: 220"},
    {"role": "assistant", "content": "Think: Verified through execution\nAct: answer(220)"}
  ]
}

A simplified library for Supervised Fine-Tuning (SFT) and GRPO tuning of language models for agentic systems, built on Verl from ByteDance. This part is still under active development, and feedback is welcome.

This project uses git submodules. After cloning the repository, make sure to initialize and update the submodules:

# Clone the repository with submodules

git clone --recursive https://github.com/OpenManus/OpenManus-RL.git

# Or if already cloned, initialize and update submodules

git submodule update --init --recursive

First, create a conda environment and activate it:

# Create a new conda environment

conda create -n openmanus-rl python=3.10 -y

conda activate openmanus-rl

Then, install the required dependencies:

# Install PyTorch with CUDA support

pip3 install torch torchvision

# Install vllm for efficient inference

# Install the main package

pip install -e .[vllm]

# flash attention 2

pip3 install flash-attn --no-build-isolation

pip install wandb
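After running the installation commands above, a quick check can confirm that the packages are importable in the active environment. This is a minimal sketch: the import names in `REQUIRED` are assumed to correspond to the pip packages installed above, and `missing_packages` is a hypothetical helper, not part of OpenManus-RL.

```python
from importlib.util import find_spec
from typing import List

# Import names assumed to correspond to the pip packages installed above.
REQUIRED = ["torch", "torchvision", "vllm", "flash_attn", "wandb"]


def missing_packages(names: List[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```

The check only verifies that each module can be located; it does not confirm CUDA availability or version compatibility.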

To set up the WebShop environment for evaluation:

# Change to the agentenv-webshop directory

cd openmanus_rl/environments/env_package/webshop/webshop/

# Create a new conda environment for WebShop

conda create -n agentenv_webshop python==3.10 -y

conda activate agentenv_webshop

# Setup the environment

bash ./setup.sh -d all

To set up the ALFWorld environment:

conda activate openmanus-rl

pip3 install gymnasium==0.29.1

pip3 install stable-baselines3==2.6.0

pip install alfworld

Download PDDL & Game files and the pre-trained MaskRCNN detector (will be stored in ~/.cache/alfworld/):

alfworld-download -f

Use --extra to download pre-trained checkpoints and seq2seq data.

Make sure you have the required environments set up (see Environment Setup section above).

Download the OpenManus-RL dataset from Hugging Face.

conda activate openmanus-rl

bash scripts/ppo_train/train_alfworld.sh

- Offline Training of Language Model Agents with Functions as Learnable Weights. [paper]

- FireAct: Toward Language Agent Fine-tuning. [paper]

- AgentTuning: Enabling Generalized Agent Abilities for LLMs. [paper]

- ReAct Meets ActRe: When Language Agents Enjoy Training Data Autonomy. [paper]

- UI-TARS: Pioneering Automated GUI Interaction with Native Agents. [paper]

- ATLAS: Agent Tuning via Learning Critical Steps. [paper]

- Toolformer: Language Models Can Teach Themselves to Use Tools. [paper]

- ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs. [paper]

- Agent-FLAN: Designing Data and Methods of Effective Agent Tuning for Large Language Models. [paper]

- AgentOhana: Design Unified Data and Training Pipeline for Effective Agent Learning. [paper]

- Training Language Models to Follow Instructions with Human Feedback. [paper]

- DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models. [paper]

- DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. [paper]

- AgentBench: Evaluating LLMs as Agents. [paper]

- WebShop: Towards Scalable Real-World Web Interaction with Autonomous Agents. [paper]

- GAIA: a benchmark for General AI Assistants. [paper]

- ALFWorld: Aligning Text and Embodied Environments for Interactive Learning. [paper]

- D4RL: Datasets for Deep Data-Driven Reinforcement Learning. [paper]

- Offline Reinforcement Learning with Implicit Q-Learning. [paper]

- Behavior Proximal Policy Optimization. [paper]

We extend our thanks to ulab-uiuc (https://ulab-uiuc.github.io/) and the OpenManus (https://github.com/mannaandpoem/OpenManus) team from MetaGPT for their support and shared knowledge. Their mission and community contributions help drive innovations like OpenManus forward.

We also gratefully thank Verl (https://github.com/volcengine/verl) and verl-agent (https://github.com/langfengQ/verl-agent) for their open-source work.

Developers interested in this project are welcome to reach out at [email protected].

Stay tuned for updates and the official release of our repository. Together, let's build a thriving open-source agent ecosystem!

Join our networking group on Feishu and share your experience with other developers!

Please cite the following paper if you find OpenManus helpful!

@misc{OpenManus,
  author = {OpenManus-RL Team},
  title = {OpenManus-RL: Open Platform for Generalist LLM Reasoning Agents with RL optimization},
  year = {2025},
  organization = {GitHub},
  url = {https://github.com/OpenManus/OpenManus-RL},
}

OpenManus-RL/
├── verl/ # Verl RL framework submodule
├── openmanus_rl/ # Main OpenManus-RL library
├── scripts/ # Training and evaluation scripts
├── configs/ # Configuration files
├── environments/ # Agent environment implementations
├── docs/ # Documentation
└── examples/ # Usage examples

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for OpenManus-RL

Similar Open Source Tools

OpenManus-RL

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

ml-engineering

This repository provides a comprehensive collection of methodologies, tools, and step-by-step instructions for successful training of large language models (LLMs) and multi-modal models. It is a technical resource suitable for LLM/VLM training engineers and operators, containing numerous scripts and copy-n-paste commands to facilitate quick problem-solving. The repository is an ongoing compilation of the author's experiences training BLOOM-176B and IDEFICS-80B models, and currently focuses on the development and training of Retrieval Augmented Generation (RAG) models at Contextual.AI. The content is organized into six parts: Insights, Hardware, Orchestration, Training, Development, and Miscellaneous. It includes key comparison tables for high-end accelerators and networks, as well as shortcuts to frequently needed tools and guides. The repository is open to contributions and discussions, and is licensed under Attribution-ShareAlike 4.0 International.

verl

veRL is a flexible and efficient reinforcement learning training framework designed for large language models (LLMs). It allows easy extension of diverse RL algorithms, seamless integration with existing LLM infrastructures, and flexible device mapping. The framework achieves state-of-the-art throughput and efficient actor model resharding with 3D-HybridEngine. It supports popular HuggingFace models and is suitable for users working with PyTorch FSDP, Megatron-LM, and vLLM backends.

agentic

Agentic is a lightweight and flexible Python library for building multi-agent systems. It provides a simple and intuitive API for creating and managing agents, defining their behaviors, and simulating interactions in a multi-agent environment. With Agentic, users can easily design and implement complex agent-based models to study emergent behaviors, social dynamics, and decentralized decision-making processes. The library supports various agent architectures, communication protocols, and simulation scenarios, making it suitable for a wide range of research and educational applications in the fields of artificial intelligence, machine learning, social sciences, and robotics.

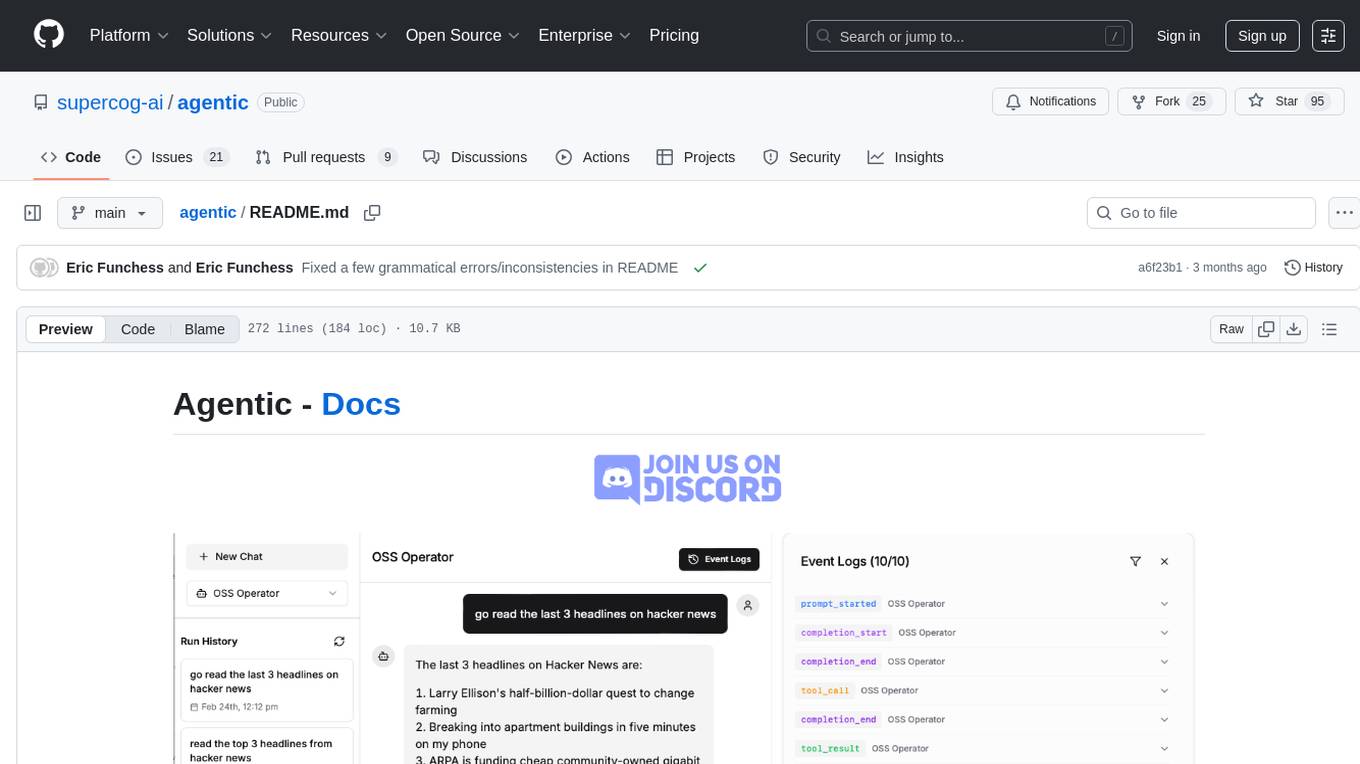

youtu-graphrag

Youtu-GraphRAG is a vertically unified agentic paradigm that connects the entire framework based on graph schema, allowing seamless domain transfer with minimal intervention. It introduces key innovations like schema-guided hierarchical knowledge tree construction, dually-perceived community detection, agentic retrieval, advanced construction and reasoning capabilities, fair anonymous dataset 'AnonyRAG', and unified configuration management. The framework demonstrates robustness with lower token cost and higher accuracy compared to state-of-the-art methods, enabling enterprise-scale deployment with minimal manual intervention for new domains.

transformers

Transformers is a state-of-the-art pretrained models library that acts as the model-definition framework for machine learning models in text, computer vision, audio, video, and multimodal tasks. It centralizes model definition for compatibility across various training frameworks, inference engines, and modeling libraries. The library simplifies the usage of new models by providing simple, customizable, and efficient model definitions. With over 1M+ Transformers model checkpoints available, users can easily find and utilize models for their tasks.

RustGPT

A complete Large Language Model implementation in pure Rust with no external ML frameworks. Demonstrates building a transformer-based language model from scratch, including pre-training, instruction tuning, interactive chat mode, full backpropagation, and modular architecture. Model learns basic world knowledge and conversational patterns. Features custom tokenization, greedy decoding, gradient clipping, modular layer system, and comprehensive test coverage. Ideal for understanding modern LLMs and key ML concepts. Dependencies include ndarray for matrix operations and rand for random number generation. Contributions welcome for model persistence, performance optimizations, better sampling, evaluation metrics, advanced architectures, training improvements, data handling, and model analysis. Follows standard Rust conventions and encourages contributions at beginner, intermediate, and advanced levels.

Fast-dLLM

Fast-DLLM is a diffusion-based Large Language Model (LLM) inference acceleration framework that supports efficient inference for models like Dream and LLaDA. It offers fast inference support, multiple optimization strategies, code generation, evaluation capabilities, and an interactive chat interface. Key features include Key-Value Cache for Block-Wise Decoding, Confidence-Aware Parallel Decoding, and overall performance improvements. The project structure includes directories for Dream and LLaDA model-related code, with installation and usage instructions provided for using the LLaDA and Dream models.

flashinfer

FlashInfer is a library for Language Languages Models that provides high-performance implementation of LLM GPU kernels such as FlashAttention, PageAttention and LoRA. FlashInfer focus on LLM serving and inference, and delivers state-the-art performance across diverse scenarios.

ms-agent

MS-Agent is a lightweight framework designed to empower agents with autonomous exploration capabilities. It provides a flexible and extensible architecture for creating agents capable of tasks like code generation, data analysis, and tool calling with MCP support. The framework supports multi-agent interactions, deep research, code generation, and is lightweight and extensible for various applications.

alignment-handbook

The Alignment Handbook provides robust training recipes for continuing pretraining and aligning language models with human and AI preferences. It includes techniques such as continued pretraining, supervised fine-tuning, reward modeling, rejection sampling, and direct preference optimization (DPO). The handbook aims to fill the gap in public resources on training these models, collecting data, and measuring metrics for optimal downstream performance.

chinese-llm-benchmark

The Chinese LLM Benchmark is a continuous evaluation list of large models in CLiB, covering a wide range of commercial and open-source models from various companies and research institutions. It supports multidimensional evaluation of capabilities including classification, information extraction, reading comprehension, data analysis, Chinese encoding efficiency, and Chinese instruction compliance. The benchmark not only provides capability score rankings but also offers the original output results of all models for interested individuals to score and rank themselves.

rag-in-action

rag-in-action is a GitHub repository that provides a practical course structure for developing a RAG system based on DeepSeek. The repository likely contains resources, code samples, and tutorials to guide users through the process of building and implementing a RAG system using DeepSeek technology. Users interested in learning about RAG systems and their development may find this repository helpful in gaining hands-on experience and practical knowledge in this area.

llms-from-scratch-rs

This project provides Rust code that follows the text 'Build An LLM From Scratch' by Sebastian Raschka. It translates PyTorch code into Rust using the Candle crate, aiming to build a GPT-style LLM. Users can clone the repo, run examples/exercises, and access the same datasets as in the book. The project includes chapters on understanding large language models, working with text data, coding attention mechanisms, implementing a GPT model, pretraining unlabeled data, fine-tuning for classification, and fine-tuning to follow instructions.

ai-tutor-rag-system

The AI Tutor RAG System repository contains Jupyter notebooks supporting the RAG course, focusing on enhancing AI models with retrieval-based methods. It covers foundational and advanced concepts in retrieval-augmented generation, including data retrieval techniques, model integration with retrieval systems, and practical applications of RAG in real-world scenarios.

RAGElo

RAGElo is a streamlined toolkit for evaluating Retrieval Augmented Generation (RAG)-powered Large Language Models (LLMs) question answering agents using the Elo rating system. It simplifies the process of comparing different outputs from multiple prompt and pipeline variations to a 'gold standard' by allowing a powerful LLM to judge between pairs of answers and questions. RAGElo conducts tournament-style Elo ranking of LLM outputs, providing insights into the effectiveness of different settings.

For similar tasks

Mortal

Mortal (凡夫) is a free and open source AI for Japanese mahjong, powered by deep reinforcement learning. It provides a comprehensive solution for playing Japanese mahjong with AI assistance. The project focuses on utilizing deep reinforcement learning techniques to enhance gameplay and decision-making in Japanese mahjong. Mortal offers a user-friendly interface and detailed documentation to assist users in understanding and utilizing the AI effectively. The project is actively maintained and welcomes contributions from the community to further improve the AI's capabilities and performance.

Smart-Connections-Visualizer

The Smart Connections Visualizer Plugin is a tool designed to enhance note-taking and information visualization by creating dynamic force-directed graphs that represent connections between notes or excerpts. Users can customize visualization settings, preview notes, and interact with the graph to explore relationships and insights within their notes. The plugin aims to revolutionize communication with AI and improve decision-making processes by visualizing complex information in a more intuitive and context-driven manner.

OpenManus-RL

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

RAG-Driven-Generative-AI

RAG-Driven Generative AI provides a roadmap for building effective LLM, computer vision, and generative AI systems that balance performance and costs. This book offers a detailed exploration of RAG and how to design, manage, and control multimodal AI pipelines. By connecting outputs to traceable source documents, RAG improves output accuracy and contextual relevance, offering a dynamic approach to managing large volumes of information. This AI book also shows you how to build a RAG framework, providing practical knowledge on vector stores, chunking, indexing, and ranking. You'll discover techniques to optimize your project's performance and better understand your data, including using adaptive RAG and human feedback to refine retrieval accuracy, balancing RAG with fine-tuning, implementing dynamic RAG to enhance real-time decision-making, and visualizing complex data with knowledge graphs. You'll be exposed to a hands-on blend of frameworks like LlamaIndex and Deep Lake, vector databases such as Pinecone and Chroma, and models from Hugging Face and OpenAI. By the end of this book, you will have acquired the skills to implement intelligent solutions, keeping you competitive in fields ranging from production to customer service across any project.

RLHF-Reward-Modeling

This repository contains code for training reward models for Deep Reinforcement Learning-based Reward-modulated Hierarchical Fine-tuning (DRL-based RLHF), Iterative Selection Fine-tuning (Rejection sampling fine-tuning), and iterative Decision Policy Optimization (DPO). The reward models are trained using a Bradley-Terry model based on the Gemma and Mistral language models. The resulting reward models achieve state-of-the-art performance on the RewardBench leaderboard for reward models with base models of up to 13B parameters.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

MathCoder

MathCoder is a repository focused on enhancing mathematical reasoning by fine-tuning open-source language models to use code for modeling and deriving math equations. It introduces MathCodeInstruct dataset with solutions interleaving natural language, code, and execution results. The repository provides MathCoder models capable of generating code-based solutions for challenging math problems, achieving state-of-the-art scores on MATH and GSM8K datasets. It offers tools for model deployment, inference, and evaluation, along with a citation for referencing the work.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.