Awesome-Text2SQL

Curated tutorials and resources for Large Language Models, Text2SQL, Text2DSL、Text2API、Text2Vis and more.

Stars: 1502

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

README:

Curated tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis and more.

We warmly welcome contributions from everyone, whether you've found a typo, a bug, have a suggestion, or want to share a resource related to LLM+Text2SQL. For detailed guidelines on how to contribute, please see our CONTRIBUTING.md file.

| WikiSQL |

Spider Exact Match(EM) |

Spider Exact Execution(EX) |

BIRD Valid Efficiency Score (VES) |

BIRD Execution Accuracy (EX) |

|

|---|---|---|---|---|---|

| 🏆1 |

93.0 (2021/05-SeaD+Execution-Guided Decoding) |

81.5 (2023/11-MiniSeek) |

91.2 (2023/11-MiniSeek) |

80.40 (2024/05-ExSL + granite-20b-code) |

71.83 (2024/07-Distillery + GPT-4o) |

| 🥈2 | 92.7 (2021/03-SDSQL+Execution-Guided Decoding) |

74.0 (2022/09-Graphix-3B + PICARD) |

86.6 (2023/08-DAIL-SQL + GPT-4 + Self-Consistency) |

77.74 (2024/07-Distillery + GPT-4o) |

70.37 (2024/05-ExSL + granite-34b-code) |

| 🥉3 | 92.5 (2020/11-IE-SQL+Execution-Guided Decoding) |

73.9 (2022/09-CatSQL + GraPPa) |

86.2 (2023/08-DAIL-SQL + GPT-4) |

76.11 (2024/07-RECAP + Gemini) |

69.03 (2024/07-RECAP + Gemini) |

| 4 | 92.2 (2020/03-HydraNet+Execution-Guided Decoding) |

73.1 (2022/09-SHiP + PICARD) |

85.6 (2023/10-DPG-SQL + GPT-4 + Self-Correction) |

73.24 (2024/07-ByteBrain) |

68.87 (2024/07-ByteBrain) |

| 5 | 91.9 (2020/12-BRIDGE+Execution-Guided Decoding) |

72.9 (2022/05-G³R + LGESQL + ELECTRA) |

85.3 (2023/04-DIN-SQL + GPT-4) |

72.78 (2024/05-ExSL + granite-20b-code) |

67.86 (2024/05-ExSL + granite-20b-code) |

| 6 | 91.8 (2019/08-X-SQL+Execution-Guided Decoding) |

72.4 (2022/08-RESDSQL+T5-1.1-lm100k-xl) |

83.9 (2023/07-Hindsight Chain of Thought with GPT-4) |

72.63 (2024/05-CHESS) ) |

66.69 (2024/05-CHESS) |

| 7 | 91.4 (2021/03-SDSQL) |

72.4 (2022/05-T5-SR) |

82.3 (2023/06-C3 + ChatGPT + Zero-Shot) |

71.35 (2024/01-MCS-SQL + GPT-4) |

65.45 (2024/01-MCS-SQL + GPT-4) |

| 8 | 91.1 (2020/12-BRIDGE) |

72.2 (2022/12-N-best List Rerankers + PICARD) |

80.8 (2023/07-Hindsight Chain of Thought with GPT-4 and Instructions) |

69.56 (2024/04-GRA-SQL) |

65.34 (2024/07-Insights AI) |

| 9 | 91.0 (2021/04-Text2SQLGen + EG) |

72.1 (2021/09-S²SQL + ELECTRA ) |

79.9 (2023/02-RESDSQL-3B + NatSQ) |

68.90 (2024/02-PB-SQL) |

64.95 (2024/04-OpenSearch-SQL,v1 + GPT-4) |

| 10 | 90.5 (2020/11-SeqGenSQL+EG) |

72.0 (2023/02-RESDSQL-3B + NatSQL) |

78.5 (2022/11-SeaD + PQL) |

68.82 (2024/07-Insights AI) |

64.84 (2024/02-PB-SQL v1) |

- Text-to-SQL (or Text2SQL), as the name implies, is to convert text into SQL. A more academic definition is to convert natural language problems in the database field into structured query languages that can be executed in relational databases. Therefore, Text-to-SQL can also be abbreviated as NL2SQL.

- Input: natural language questions, such as "Query the relevant information of the table t_user, and the results are sorted in descending order by id, and only the first 10 data are kept."

- Output: SQL, such as "SELECT * FROM t_user ORDER BY id DESC LIMIT 10"

- (2023-International Conference on Very Large Data Bases, VLDB, CCF-A)A survey on deep learning approaches for text-to-SQL [paper]

- (2022-IEEE Transactions on Knowledge and Data Engineering, TKDE, CCF-A) A Survey on Text-to-SQL Parsing: Concepts, Methods, and Future Directions [paper]

- (2022-International Conference on Computational Linguistics, COLOING, CCF-B) Recent Advances in Text-to-SQL: A Survey of What We Have and What We Expect [paper]

- (2022-arXiv)Deep Learning Driven Natural Languages Text to SQL Query Conversion: A Survey [paper]

-

(2024-arXiv, None) DB-GPT-Hub: Towards Open Benchmarking Text-to-SQL Empowered by Large Language Models [paper] [code]

-

(2023-arXiv, None) MAC-SQL: A Multi-Agent Collaborative Framework for Text-to-SQL [paper] [code]

-

(2023-arXiv, None) DBCᴏᴘɪʟᴏᴛ: Scaling Natural Language Querying to Massive Databases [paper] [code]

-

(2023-arXiv, None) Text-to-SQL Empowered by Large Language Models: A Benchmark Evaluation [paper] [code]

-

(2023-AAAI 2023, CCF-A) RESDSQL: Decoupling Schema Linking and Skeleton Parsing for Text-to-SQL [paper] [code]

-

(2023-arXiv, None) Can LLM Already Serve as A Database Interface? A BIg Bench for Large-Scale Database Grounded Text-to-SQLs [paper] [code]

-

(2023-arXiv, None) DIN-SQL: Decomposed In-Context Learning of Text-to-SQL with Self-Correction [paper] [code]

-

(2023-arXiv, None) A comprehensive evaluation of ChatGPT's zero-shot Text-to-SQL capability [paper] [code]

-

(2023-ICLR, CCF-A) Binding Language Models in Symbolic Languages [paper] [code]

-

(2023-SIGMOD, CCF-A) Few-shot Text-to-SQL Translation using Structure and Content Prompt Learning [paper] [code]

-

(2023-ICASSP, CCF-B) T5-SR: A Unified Seq-to-Seq Decoding Strategy for Semantic Parsing [paper]

-

(2022-ACL, CCF-A) S2SQL: Injecting Syntax to Question-Schema Interaction Graph Encoder for Text-to-SQL Parsers [paper]

-

(2022-NAACL, CCF-B) SeaD: End-to-end Text-to-SQL Generation with Schema-aware Denoising [paper]

-

(2022-EMNLP, CCF-B) STAR: SQL Guided Pre-Training for Context-dependent Text-to-SQL Parsing [paper] [code]

-

(2022-EMNLP, CCF-B) RASAT: Integrating Relational Structures into Pretrained Seq2Seq Model for Text-to-SQL [paper] [code]

-

(2022-EMNLP, CCF-B) CQR-SQL: Conversational Question Reformulation Enhanced Context-Dependent Text-to-SQL Parsers [paper]

-

(2022-ACL, CCF-A) HIE-SQL: History Information Enhanced Network for Context-Dependent Text-to-SQL Semantic Parsing [paper]

-

(2022-arXiv, None) Importance of Synthesizing High-quality Data for Text-to-SQL Parsing [paper]

-

(2021-ACL, CCF-A) Decoupled Dialogue Modeling and Semantic Parsing for Multi-Turn Text-to-SQL [paper]

-

(2021-arXiv, None) Pay More Attention to History: A Context Modelling Strategy for Conversational Text-to-SQL [paper] [code]

-

(2021-ICLR, CCF-A) SCORE: Pre-training for Context Representation in Conversational Semantic Parsing [paper]

-

(2021-DASFAA, CCF-B) An Interactive NL2SQL Approach with Reuse Strategy [paper]

-

(2021-NAACL, CCF-B) Structure-Grounded Pretraining for Text-to-SQL [paper]

-

(2021-EMNLP, CCF-B) PICARD:Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models [paper] [code]

-

(2021-ICLR, CCF-A) GraPPa: Grammar-Augmented Pre-Training for Table Semantic Parsing [paper] [code]

-

(2021-ACL, CCF-A) LGESQL: Line Graph Enhanced Text-to-SQL Model with Mixed Local and Non-Local Relations [paper] [code]

-

(2020-EMNLP, CCF-B) Bridging Textual and Tabular Data for Cross-Domain Text-to-SQL Semantic Parsing [paper] [code]

-

(2020-ACL, CCF-A) TaBERT: Pretraining for Joint Understanding of Textual and Tabular Data [paper] [code]

-

(2020-ACL, CCF-A) RAT-SQL: Relation-Aware Schema Encoding and Linking for Text-to-SQL Parsers [paper] [code]

-

(2020-EMNLP, CCF-B) Mention Extraction and Linking for SQL Query Generation [paper]

-

(2020-EMNLP, CCF-B) IGSQL: Database Schema Interaction Graph Based Neural Model for Context-Dependent Text-to-SQL Generation [paper] [code]

-

(2020-arXiv, None) Hybrid Ranking Network for Text-to-SQL [paper] [code]

-

(2019-arXiv, None) X-SQL: reinforce schema representation with context [paper]

-

(2019-EMNLP, CCF-B) Global Reasoning over Database Structures for Text-to-SQL Parsing [paper] [code]

-

(2019-EMNLP, CCF-B) Editing-Based SQL Query Generation for Cross-Domain Context-Dependent Questions [paper] [code]

-

(2019-ACL, CCF-A) Representing Schema Structure with Graph Neural Networks for Text-to-SQL Parsing [paper] [code]

-

(2019-ACL, CCF-A) Towards Complex Text-to-SQL in Cross-Domain Database with Intermediate Representation [paper] [code]

-

(2018-EMNLP, CCF-B) SyntaxSQLNet: Syntax Tree Networks for Complex and Cross-DomainText-to-SQL Task [paper] [code]

-

(2018-NAACL, CCF-B) TypeSQL: Knowledge-based Type-Aware Neural Text-to-SQL Generation [paper] [code]

-

(2017-arXiv, None) SQLNet: Generating Structured Queries From Natural Language Without Reinforcement Learning [paper] [code]

-

- 2023/02, Meta AI proposes the open source LLM Llama, which has four scales: 7b, 13b, 33b, and 65b.

-

ChatGLM [paper] [code] [model]

- 2023/03, Tsinghua University proposes the open bilingual language model ChatGLM, based on General Language Model framework, with the specification of 7b.

-

- 2023/03, Stanford University proposes Alpaca, an open source LLM fine-tuned based on the Llama 7b model. There are 1 specification of 7b, and the training is simpler and cheaper.

-

- 2023/03, UC Berkeley University, CMU and Stanford University propose Vicuna, an open souce LLM based on the Llama model, has two specifications: 7b and 13b.

-

WizardLM [paper] [code] [model]

- 2023/04, Peking University and Microsoft propose WizardLM, a LLM of evolutionary instructions, with three specifications of 7b, 13b, and 30b. 2023/06, They propose WizardMath, a LLM in the field of mathematics. 2023/08, They propose WizardCoder, a LLM in the field of code.

-

- 2023/06, United Arab Emirates proposes Falcon, an open source LLM trained solely on refinedweb datasets, with four parameter specifications of 1b, 7b, 40b and 180b. It is worth noting that the performance on model 40B exceeds that of 65B LLaMA.

-

ChatGLM2[paper] [code] [model]

- 2023/06, Tsinghua University proposes the second-generation version of ChatGLM,with the specification of 7b, which has stronger performance, longer context, more efficient inference and more open license.

-

- 2023/06, Baichuan Intelligent Technology proposes the Baichuan-7B, an open-source, large-scale pre-trained language model based on Transformer architecture, which contains 7 billion parameters and trained on approximately 1.2 trillion tokens. It supports both Chinese and English languages with a context window length of 4096.

-

- 2023/07, Baichuan Intelligent Technology proposes the Baichuan-13B, an open-source, commercially available large-scale language model, following Baichuan-7B, which has two versions: pre-training (Baichuan-13B-Base) and alignment (Baichuan-13B-Chat).

-

InternLM [paper] [code] [model]

- 2023/07, Shanghai AI Laboratory and SenseTime propose the InternLM, which has open-sourced a 7b and 20b parameter base models and chat models tailored for practical scenarios and the training system.

-

Llama 2 [paper] [code] [model]

- 2023/07, Meta AI proposes the second-generation Llama series open-source LLM Llama 2. Compared with Llama 1, the training data is 40% more, and the context length is doubled. The model has four specifications: 7b, 13b, 34b, and 70b, but 34b is not open source.

-

Code Llama [paper] [code] [model]

- 2023/08, Meta AI proposes Code LLama, based on Llama 2. Code Llama reaches state-of-the-art performance among open models on several code benchmarks. There are foundation models (Code Llama), Python specializations (Code Llama - Python), and instruction-following models, with 7B, 13B and 34B parameters each. 2024/01, Meta AI open sourced CodeLlama-70b, CodeLlama-70b-Python and CodeLlama-70b-Instruct.

-

- 2023/08, Alibaba Cloud proposes the 7b-parameter version of the large language model series Qwen-7B (abbr. Tongyi Qianwen), is pretrained on a large volume of data, including web texts, books, codes, etc, which has open sourced two models with Qwen-7B and Qwen-7B-Chat. 2023/09, Alibaba Cloud updated the Qwen-7B and Qwen-7B-Chat and open sourced Qwen-14B and Qwen-14B-Chat. 2023/11, they open sourced Qwen-1.8B, Qwen-1.8B-Chat, Qwen-72B and Qwen-72B-Chat.

-

Baichuan 2 [paper] [code] [model]

- 2023/09, Baichuan Intelligent Technology proposes the new generation of open-source large language models Baichuan 2, trained on a high-quality corpus with 2.6 trillion tokens, which has base and chat versions for 7B and 13B, and a 4bits quantized version for the chat model.

-

- 2023/09, Microsoft Research proposes the open source language model phi-1.5, a Transformer with 1.3 billion parameters, which was trained using the same data sources as phi-1, augmented with a new data source that consists of various NLP synthetic texts. When assessed against benchmarks testing common sense, language understanding, and logical reasoning, phi-1.5 demonstrates a nearly state-of-the-art performance among models with less than 10 billion parameters. 2023/12, They propose Phi-2, a 2.7 billion-parameter language model that demonstrates outstanding reasoning and language understanding capabilities, showcasing state-of-the-art performance among base language models with less than 13 billion parameters.

-

Mistral-7B [paper] [code] [model]

- 2023/10, Mistral-AI company proposes the open source LLM Mistral 7B, a 7–billion-parameter language model engineered for superior performance and efficiency. Mistral 7B outperforms the best open 13B model (Llama 2) across all evaluated benchmarks, and the best released 34B model (Llama 1) in reasoning, mathematics, and code generation. They also provide a model fine-tuned to follow instructions, Mistral 7B – Instruct, that surpasses Llama 2 13B–chat model both on human and automated benchmarks. 2023/12,They propose the open source LLM Mixtral-8x7B, a pretrained generative Sparse Mixture of Experts, which outperforms Llama 2 70B on most benchmarks.

-

Deepseek [paper] [code] [model]

- 2023/11, DeepSeek-AI company proposes the open source LLM deepseek, which has been trained from scratch on a vast dataset of 2 trillion tokens in both English and Chinese. Similarly, the deepseek LLM mainly has two categories: base and chat, with two parameter formats of 7b and 67b respectively. Data from its paper shows that deepSeek LLM 67b surpasses LLaMA-2 70b across a range of benchmarks, especially in the domains of code, mathematics, and reasoning. Furthermore, DeepSeek LLM 67B Chat exhibits superior performance compared to GPT-3.5.

-

MiniCPM [paper] [code] [model]

- 2024/02, ModelBest Inc. and TsinghuaNLP proposes the open source LLM MiniCPM, which is an End-Side LLM, with only 2.4B parameters excluding embeddings (2.7B in total). It is worth that MiniCPM has very close performance compared with Mistral-7B on open-sourced general benchmarks with better ability on Chinese, Mathematics and Coding after SFT. The overall performance exceeds Llama2-13B, MPT-30B, Falcon-40B, etc.

-

Mixtral-8x22B [paper] [code] [model]

- 2024/04, Mistral AI proposed the latest open model Mixtral 8x22B. It sets a new standard for performance and efficiency within the AI community. It is a sparse Mixture-of-Experts (SMoE) model that uses only 39B active parameters out of 141B, offering unparalleled cost efficiency for its size.

-

- 2024/04, Microsoft proposed the Phi-3 models, which are the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and next size up across a variety of language, reasoning, coding, and math benchmarks. Phi-3-mini is available in two context-length variants—4K and 128K tokens. It is the first model in its class to support a context window of up to 128K tokens, with little impact on quality. Phi-3-small (7B) and Phi-3-medium (14B) will be available in the Azure AI model catalog and other model gardens shortly.

-

Llama 3 [paper] [code] [model]

- 2024/04, Meta AI proposed the third generation Llama series open source large model Llama 3. The model has 2 parameter specifications, 8b and 70b, with base and instruct versions respectively. Excitingly, Llama 3 models are a major leap over Llama 2 and establish a new state-of-the-art for LLM models at those scales.

-

Qwen-1.5-110B [paper] [code] [model]

- 2024/04, Alibaba Cloud proposed the first 100B+ model of the Qwen1.5 series, Qwen1.5-110B, which achieves comparable performance with Meta-Llama3-70B in the base model evaluation, and outstanding performance in the chat evaluation, including MT-Bench and AlpacaEval 2.0. Qwen1.5 is the beta version of Qwen2, which has 9 model sizes, including 0.5B, 1.8B, 4B, 7B, 14B, 32B, 72B, and 110B dense models, and an MoE model of 14B with 2.7B activated.

-

- 2024/06, Alibaba Cloud proposed the evolution from Qwen1.5 to Qwen2, which has 5 model sizes, including Qwen2-0.5B, Qwen2-1.5B, Qwen2-7B, Qwen2-57B-A14B, and Qwen2-72B. Qwen2-72B exhibits superior performance compared to leading models such as Llama-3-70B. Notably, it surpasses the performance of its predecessor, Qwen1.5-110B, despite having fewer parameters.

-

- 2021/03, Tsinghua University and others propose P-Tuning, a fine-tuning method for LLM, which uses trainable continuous prompt word embeddings to reduce the cost of fine-tuning.

-

- 2021/06, Microsoft proposes the Low-Rank Adaptation method for fine-tuning LLM by freezing the pre-training weights.

-

- 2021/10, Tsinghua University proposes P-Tuning V2, an improved version of P-Tuning with better performance.

-

- 2022/12, OpenAI uses the RLHF (Reinforcement Learning from Human Feedback) method to train ChatGPT, and uses human feedback signals to directly optimize the language model, with excellent performance.

-

- 2023/04, Alibaba proposes a novel learning paradigm called RRHF(Rank Responses to Align Language Models with Human Feedback without tears), which can be tuned as easily as fine-tuning and achieve a similar performance as PPO in HH dataset.

-

- 2023/05, Washington University proposes the qlora method, based on the frozen 4bit quantization model, combined with LoRA method training, which further reduces the cost of fine-tuning.

-

- 2023/07, Tencent proposes RLTF(Reinforcement Learning from Unit Test Feedback), a novel online RL framework with unit test feedback of multi-granularity for refining code LLMs.

-

RRTF [paper]

- 2023/07, Huawei proposes RRTF(Rank Responses to align Test&Teacher Feedback). Compared with RLHF, RRHF can efficiently align the output probabilities of a language model with human preferences, with only 1-2 models required during the tuning period, and it is simpler than PPO in terms of implementation, hyperparameter tuning, and training.

-

RLAIF [paper]

- 2023/09, Google proposes RLAIF (RL from AI Feedback), a technique where preferences are labeled by an off-the-shelf LLM in lieu of humans. They find that the RLHF and RLAIF methods achieve the similar results on the task of summarization.

-

WikiSQL [paper] [code] [dataset]

- 2017/09, Salesforce proposes a large Text-to-SQL dataset WikiSQL, the data comes from Wikipedia, which belongs to a single domain, contains 80,654 natural language questions, and 77,840 SQL statements. The form of SQL statements is relatively simple, and does not include sorting, grouping, and subqueries and other complex operations.

-

Spider [paper] [code] [dataset]

- 2018/09, Yale University proposes the Text-to-SQL dataset Spider with multiple databases, multiple tables, and single-round query. It is also recognized as the most difficult large-scale cross-domain evaluation list in the industry. It contains 10,181 natural language questions and 5,693 SQL statements. Involving more than 200 databases in 138 different fields, the difficulty level is divided into: easy, medium, difficult, and extremely difficult. 2024/02, Yale University has open sourced the test collection of Spider 1.0 leaderboard, and they will open source the Spider 2.0 data set in March.

-

SParC [paper] [code] [dataset]

- 2019/06, Yale University proposes a large dataset SParC for complex, cross-domain, and context-dependent(multi-turn) semantic parsing and text-to-SQL task, which consists of 4,298 coherent question sequences (12k+ unique individual questions annotated with SQL queries annotated by 14 Yale students), obtained from user interactions with 200 complex databases over 138 domains.

-

CSpider [paper] [code] [dataset]

- 2019/09, Westlake University propposes a large Chinese dataset CSpider for complex and cross-domain semantic parsing and text-to-SQL task, translated from Spider by 2 NLP researchers and 1 computer science student, which consists of 10,181 questions and 5,693 unique complex SQL queries on 200 databases with multiple tables covering 138 different domains.

-

CoSQL [paper] [code] [dataset]

- 2019/09, Yale University and Salesforce Research propose a cross-domain database CoSQL, which consists of 30k+ turns plus 10k+ annotated SQL queries, obtained from a Wizard-of-Oz (WOZ) collection of 3k dialogues querying 200 complex DBs spanning 138 domains.

-

- 2020/06, Zhuiyi Technology proposes a large-scale cross-domain Natural Language to SQL dataset TableQA in Chinese language consisting 64,891 questions and 20,311 unique SQL queries on over 6,000 tables.

-

- 2020/11, Baidu proposes a larges-scale and pragmatic Chinese dataset DuSQL for the cross-domain text-toSQL task, containing 200 databases, 813 tables, and 23,797 question/SQL pairs.

-

KaggleDBQA [paper] [code] [dataset]

- 2021/06, University of Washington and Microsoft Research propose KaggleDBQA, a cross-domain evaluation dataset of real Web databases with domain-specific data types, original formatting, and unrestricted questions. It includes 272 examples across 8 databases, with an average of 2.25 tables per database. The dataset is notable for its real-world data sources, natural question authoring environments, and database documentation with rich domain knowledge. Key statistics: 8.7% WHERE clauses, 73.5% VAL, 24.6% SELECT, and 6.8% NON-SELECT.

-

CHASE [paper] [code] [dataset]

- 2021/08, Xi'an Jiaotong University and Microsoft propose the first cross-domain, multi-round Text-to-SQL Chinese dataset, which contains a list of 5459 multi-round questions and 17940 <query, SQL> binary groups.

-

BIRD-SQL [paper] [code] [dataset]

- 2023/05, the University of Hong Kong and Alibaba propose a large-scale cross-domain dataset BIRD, which contains over 12,751 unique question-SQL pairs, 95 big databases with a total size of 33.4 GB. It also covers more than 37 professional domains, such as blockchain, hockey, healthcare and education, etc.

-

BIRD-SQL Mini-Dev [paper] [code] [dataset]

- 2024/06, the collaboration between the University of Hong Kong and Alibaba continues with the release of BIRD-SQL Mini-Dev, a lite version of their development dataset designed for efficient and cost-effective SQL model testing. This dataset compiles 500 high-quality text2SQL pairs from 11 distinct databases and supports both MySQL and PostgreSQL formats. It features the introduction of two new evaluation metrics: the Reward-based Valid Efficiency Score (R-VES) and the Soft F1-Score, both currently in beta and specifically developed to enhance the accuracy and efficiency of text-to-SQL models in a development setting.

-

Execution Accuracy (EX) [paper]

- Definition: Calculate the proportion of the correct number of SQL execution results in the data set, and the result may be overestimated.

-

Exact Match (EM) [paper]

- Definition: Calculate the matching degree between the SQL generated by the model and the marked SQL, and the result may be underestimated.

-

- MindSQL is a Python RAG (Retrieval-Augmented Generation) Library designed to streamline the interaction between users and their databases using just a few lines of code. With seamless integration for renowned databases such as PostgreSQL, MySQL, and SQLite, MindSQL also extends its capabilities to major databases like Snowflake and BigQuery by extending the core class. The library utilizes large language models (LLM) like GPT-4, Llama 2, Google Gemini, and supports knowledge bases like ChromaDB and Fais

-

- Prem text2sql is a lightweight library that aims to provide modular and extendible tools for natural language to SQL applications. This would help developers build more controllable RAG solutions based on different databases. It seeks to offer toolings for evaluation, fine-tuning, connectors, and agentic frameworks. We are currently in the building stages, with stable support for evaluating text2sql models and pipelines. Later versions aim to release features for fine-tuning and Agents.

-

- The eosphoros organization proposes an open source project focusing on Text-to-SQL fine-tuning based on LLM, including large-scale model download, dataset preprocessing, fine-tuning technologies such as LoRA and QLoRA, model prediction, model evaluation and other steps.

-

- The Defog organization proposes an advanced Text-to-SQL LLM, which has outstanding performance and is better than GPT3.5, wizardcoder and starcoder, etc., second only to GPT4.

-

- This project is based on the LLaMa 2 7b model for Text-to-SQL fine-tuning, which includes a complete training, fine-tuning, and evaluation process.

-

- Easy-to-use LLM fine-tuning framework (LLaMA-2, BLOOM, Falcon, Baichuan, Qwen, Chat

If you find Text2SQL useful for your research or development, please cite the following paper:

@misc{zhou2024dbgpthub,

title={DB-GPT-Hub: Towards Open Benchmarking Text-to-SQL Empowered by Large Language Models},

author={Fan Zhou and Siqiao Xue and Danrui Qi and Wenhui Shi and Wang Zhao and Ganglin Wei and Hongyang Zhang and Caigai Jiang and Gangwei Jiang and Zhixuan Chu and Faqiang Chen},

year={2024},

eprint={2406.11434},

archivePrefix={arXiv},

primaryClass={id='cs.DB' full_name='Databases' is_active=True alt_name=None in_archive='cs' is_general=False description='Covers database management, datamining, and data processing. Roughly includes material in ACM Subject Classes E.2, E.5, H.0, H.2, and J.1.'}

}-

- They are a team of technology enthusiasts from internet companies and NLP graduate students who are passionate about open source projects. Their focus is on developing solutions that protect the privacy and security of databases and large language models. Their aim is to ensure that the abilities of these models remain absolutely private, secure, and under control.

-

- Awesome AIGC Tutorials houses a curated collection of tutorials and resources spanning across Large Language Models, AI Painting, and related fields. Discover in-depth insights and knowledge catered for both beginners and advanced AI enthusiasts.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-Text2SQL

Similar Open Source Tools

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

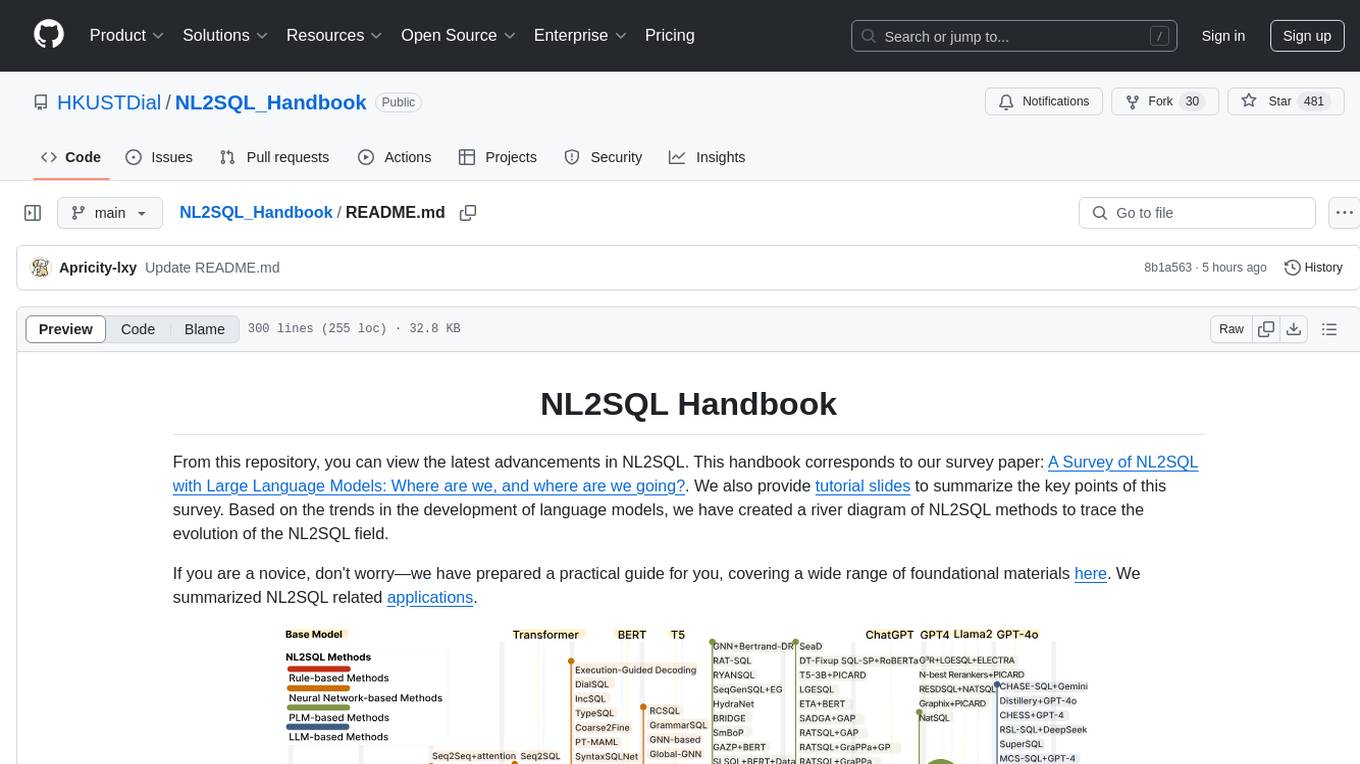

NL2SQL_Handbook

NL2SQL Handbook provides a comprehensive overview of Natural Language to SQL (NL2SQL) advancements, including survey papers, tutorial slides, and a river diagram of NL2SQL methods. It covers the evolution of NL2SQL solutions, module-based methods, benchmark development, and future directions. The repository also offers practical guides for beginners, access to high-performance language models, and evaluation metrics for NL2SQL models.

Awesome-LLM-Post-training

The Awesome-LLM-Post-training repository is a curated collection of influential papers, code implementations, benchmarks, and resources related to Large Language Models (LLMs) Post-Training Methodologies. It covers various aspects of LLMs, including reasoning, decision-making, reinforcement learning, reward learning, policy optimization, explainability, multimodal agents, benchmarks, tutorials, libraries, and implementations. The repository aims to provide a comprehensive overview and resources for researchers and practitioners interested in advancing LLM technologies.

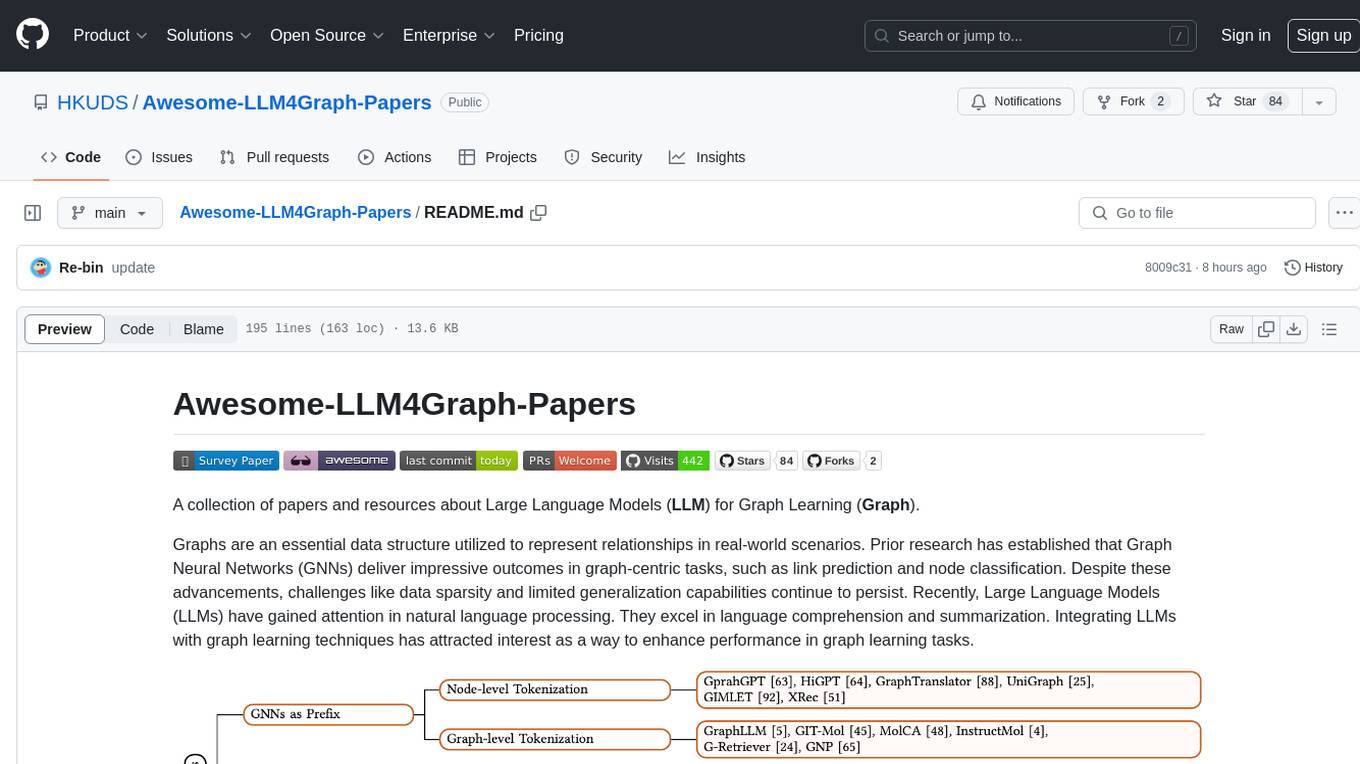

Awesome-LLM4Graph-Papers

A collection of papers and resources about Large Language Models (LLM) for Graph Learning (Graph). Integrating LLMs with graph learning techniques to enhance performance in graph learning tasks. Categorizes approaches based on four primary paradigms and nine secondary-level categories. Valuable for research or practice in self-supervised learning for recommendation systems.

ColossalAI

Colossal-AI is a deep learning system for large-scale parallel training. It provides a unified interface to scale sequential code of model training to distributed environments. Colossal-AI supports parallel training methods such as data, pipeline, tensor, and sequence parallelism and is integrated with heterogeneous training and zero redundancy optimizer.

sglang

SGLang is a structured generation language designed for large language models (LLMs). It makes your interaction with LLMs faster and more controllable by co-designing the frontend language and the runtime system. The core features of SGLang include: - **A Flexible Front-End Language**: This allows for easy programming of LLM applications with multiple chained generation calls, advanced prompting techniques, control flow, multiple modalities, parallelism, and external interaction. - **A High-Performance Runtime with RadixAttention**: This feature significantly accelerates the execution of complex LLM programs by automatic KV cache reuse across multiple calls. It also supports other common techniques like continuous batching and tensor parallelism.

Cyberion-Spark-X

Cyberion-Spark-X is a powerful open-source tool designed for cybersecurity professionals and data analysts. It provides advanced capabilities for analyzing and visualizing large datasets to detect security threats and anomalies. The tool integrates with popular data sources and supports various machine learning algorithms for predictive analytics and anomaly detection. Cyberion-Spark-X is user-friendly and highly customizable, making it suitable for both beginners and experienced professionals in the field of cybersecurity and data analysis.

innoshop

InnoShop is an innovative open-source e-commerce system based on Laravel 12. It supports multiple languages, multiple currencies, and is integrated with OpenAI. The system features plugin mechanisms and theme template development for enhanced user experience and system extensibility. It is globally oriented, user-friendly, and based on the latest technology with deep AI integration.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

EvalAI

EvalAI is an open-source platform for evaluating and comparing machine learning (ML) and artificial intelligence (AI) algorithms at scale. It provides a central leaderboard and submission interface, making it easier for researchers to reproduce results mentioned in papers and perform reliable & accurate quantitative analysis. EvalAI also offers features such as custom evaluation protocols and phases, remote evaluation, evaluation inside environments, CLI support, portability, and faster evaluation.

Awesome-RL-based-LLM-Reasoning

This repository is dedicated to enhancing Language Model (LLM) reasoning with reinforcement learning (RL). It includes a collection of the latest papers, slides, and materials related to RL-based LLM reasoning, aiming to facilitate quick learning and understanding in this field. Starring this repository allows users to stay updated and engaged with the forefront of RL-based LLM reasoning.

note-gen

Note-gen is a simple tool for generating notes automatically based on user input. It uses natural language processing techniques to analyze text and extract key information to create structured notes. The tool is designed to save time and effort for users who need to summarize large amounts of text or generate notes quickly. With note-gen, users can easily create organized and concise notes for study, research, or any other purpose.

FlagEmbedding

FlagEmbedding focuses on retrieval-augmented LLMs, consisting of the following projects currently: * **Long-Context LLM** : Activation Beacon * **Fine-tuning of LM** : LM-Cocktail * **Embedding Model** : Visualized-BGE, BGE-M3, LLM Embedder, BGE Embedding * **Reranker Model** : llm rerankers, BGE Reranker * **Benchmark** : C-MTEB

ST-LLM

ST-LLM is a temporal-sensitive video large language model that incorporates joint spatial-temporal modeling, dynamic masking strategy, and global-local input module for effective video understanding. It has achieved state-of-the-art results on various video benchmarks. The repository provides code and weights for the model, along with demo scripts for easy usage. Users can train, validate, and use the model for tasks like video description, action identification, and reasoning.

PPTAgent

PPTAgent is an innovative system that automatically generates presentations from documents. It employs a two-step process for quality assurance and introduces PPTEval for comprehensive evaluation. With dynamic content generation, smart reference learning, and quality assessment, PPTAgent aims to streamline presentation creation. The tool follows an analysis phase to learn from reference presentations and a generation phase to develop structured outlines and cohesive slides. PPTEval evaluates presentations based on content accuracy, visual appeal, and logical coherence.

pro-chat

ProChat is a components library focused on quickly building large language model chat interfaces. It empowers developers to create rich, dynamic, and intuitive chat interfaces with features like automatic chat caching, streamlined conversations, message editing tools, auto-rendered Markdown, and programmatic controls. The tool also includes design evolution plans such as customized dialogue rendering, enhanced request parameters, personalized error handling, expanded documentation, and atomic component design.

For similar tasks

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still very much early so don't expect 100% stability ^^' In case of problems or question, feel free to open an issue!

Firefly

Firefly is an open-source large model training project that supports pre-training, fine-tuning, and DPO of mainstream large models. It includes models like Llama3, Gemma, Qwen1.5, MiniCPM, Llama, InternLM, Baichuan, ChatGLM, Yi, Deepseek, Qwen, Orion, Ziya, Xverse, Mistral, Mixtral-8x7B, Zephyr, Vicuna, Bloom, etc. The project supports full-parameter training, LoRA, QLoRA efficient training, and various tasks such as pre-training, SFT, and DPO. Suitable for users with limited training resources, QLoRA is recommended for fine-tuning instructions. The project has achieved good results on the Open LLM Leaderboard with QLoRA training process validation. The latest version has significant updates and adaptations for different chat model templates.

create-million-parameter-llm-from-scratch

The 'create-million-parameter-llm-from-scratch' repository provides a detailed guide on creating a Large Language Model (LLM) with 2.3 million parameters from scratch. The blog replicates the LLaMA approach, incorporating concepts like RMSNorm for pre-normalization, SwiGLU activation function, and Rotary Embeddings. The model is trained on a basic dataset to demonstrate the ease of creating a million-parameter LLM without the need for a high-end GPU.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

BetaML.jl

The Beta Machine Learning Toolkit is a package containing various algorithms and utilities for implementing machine learning workflows in multiple languages, including Julia, Python, and R. It offers a range of supervised and unsupervised models, data transformers, and assessment tools. The models are implemented entirely in Julia and are not wrappers for third-party models. Users can easily contribute new models or request implementations. The focus is on user-friendliness rather than computational efficiency, making it suitable for educational and research purposes.

AI-TOD

AI-TOD is a dataset for tiny object detection in aerial images, containing 700,621 object instances across 28,036 images. Objects in AI-TOD are smaller with a mean size of 12.8 pixels compared to other aerial image datasets. To use AI-TOD, download xView training set and AI-TOD_wo_xview, then generate the complete dataset using the provided synthesis tool. The dataset is publicly available for academic and research purposes under CC BY-NC-SA 4.0 license.

UMOE-Scaling-Unified-Multimodal-LLMs

Uni-MoE is a MoE-based unified multimodal model that can handle diverse modalities including audio, speech, image, text, and video. The project focuses on scaling Unified Multimodal LLMs with a Mixture of Experts framework. It offers enhanced functionality for training across multiple nodes and GPUs, as well as parallel processing at both the expert and modality levels. The model architecture involves three training stages: building connectors for multimodal understanding, developing modality-specific experts, and incorporating multiple trained experts into LLMs using the LoRA technique on mixed multimodal data. The tool provides instructions for installation, weights organization, inference, training, and evaluation on various datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.