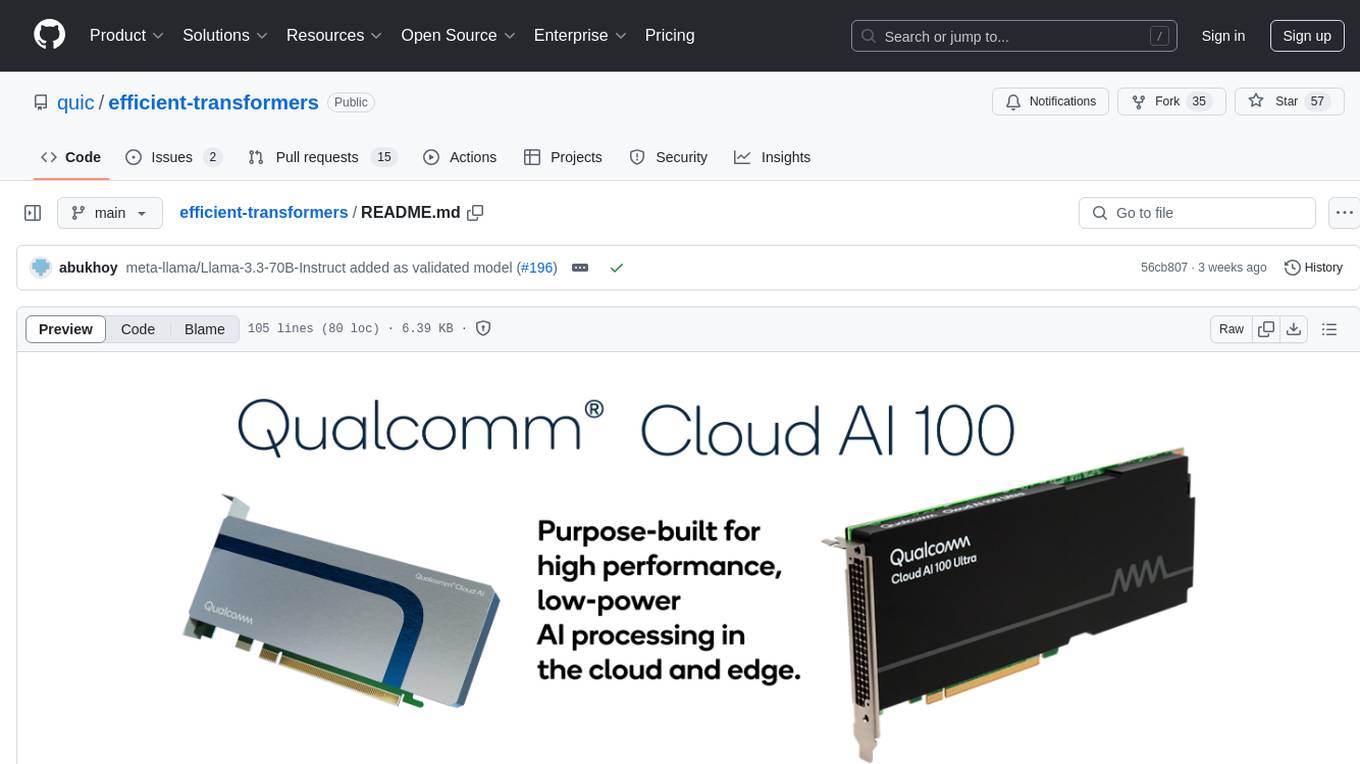

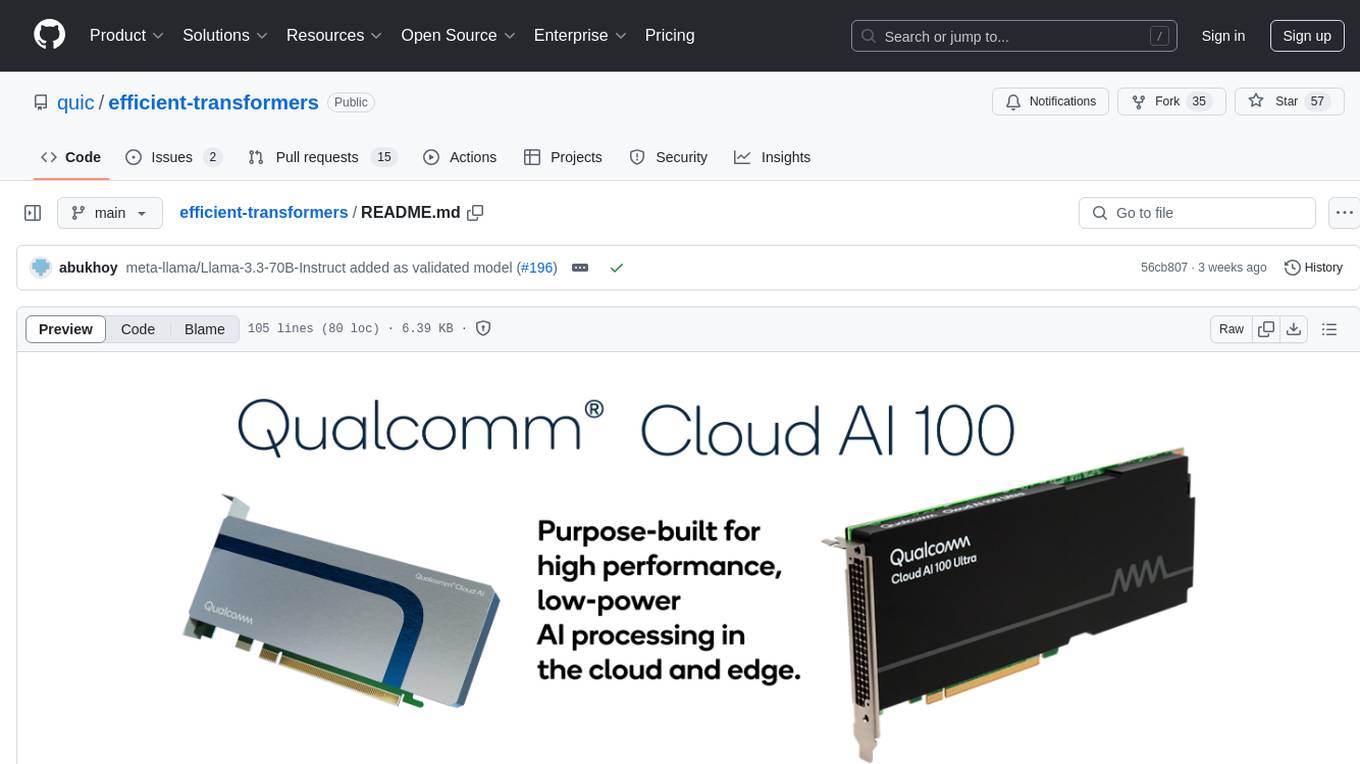

efficient-transformers

This library empowers users to seamlessly port pretrained models and checkpoints on the HuggingFace (HF) hub (developed using HF transformers library) into inference-ready formats that run efficiently on Qualcomm Cloud AI 100 accelerators.

Stars: 85

Efficient Transformers Library provides reimplemented blocks of Large Language Models (LLMs) to make models functional and highly performant on Qualcomm Cloud AI 100. It includes graph transformations, handling for under-flows and overflows, patcher modules, exporter module, sample applications, and unit test templates. The library supports seamless inference on pre-trained LLMs with documentation for model optimization and deployment. Contributions and suggestions are welcome, with a focus on testing changes for model support and common utilities.

README:

Latest news 🔥

- [12/2025] Enabled disaggregated serving for GPT-OSS model

- [12/2025] Added support for wav2vec2 Audio Model facebook/wav2vec2-base-960h

- [12/2025] Added support for diffuser video generation model WAN 2.2 Model Card

- [12/2025] Added support for diffuser image generation model FLUX.1 Model Card

- [12/2025] Added support for openai/gpt-oss-20b

- [12/2025] Added support for OpenGVLab/InternVL3_5-1B

- [12/2025] Added support for Olmo Model allenai/OLMo-2-0425-1B

- [10/2025] Added support for Qwen3 MOE Model Qwen/Qwen3-30B-A3B-Instruct-2507

- [10/2025] Added support for Qwen2.5VL Multi-Model Qwen/Qwen2.5-VL-32B-Instruct

- [10/2025] Added support for Mistral3 Multi-Model mistralai/Mistral-Small-3.1-24B-Instruct-2503

- [10/2025] Added support for Molmo Multi-Model allenai/Molmo-7B-D-0924

- [06/2025] Added support for Llama4 Multi-Model meta-llama/Llama-4-Scout-17B-16E-Instruct

- [06/2025] Added support for Gemma3 Multi-Modal-Model google/gemma-3-4b-it

- [06/2025] Added support for model hpcai-tech/grok-1

- [06/2025] Added support for sentence embedding, which improves efficiency, with flexible/custom pooling configuration and compilation with multiple sequence lengths (Embedding model)

- [04/2025] Support for SpD, multiprojection heads. Implemented post-attention hidden size projections to speculate tokens ahead of the base model

- [04/2025] QNN compilation support for AutoModel classes: QNN compilation capabilities for multi-models, embedding models and causal models.

- [04/2025] Added support for separate prefill and decode compilation for encoder (vision) and language models. This feature will be utilized for disaggregated serving.

- [04/2025] SwiftKV: support for both continuous and non-continuous batching execution.

- [04/2025] Support for GGUF model execution (without quantized weights)

- [04/2025] Enabled FP8 model support on replicate_kv_heads script

- [04/2025] Added support for gradient checkpointing in the finetuning script

- [04/2025] Added support for model ibm-granite/granite-vision-3.2-2b

- [03/2025] Added support for swiftkv model Snowflake/Llama-3.1-SwiftKV-8B-Instruct

- [02/2025] VLMs support added for the models InternVL-1B, Llava and Mllama

- [01/2025] FP8 model support: added support for inference of FP8 models.

- [01/2025] Added support for [Ibm-Granite](https://huggingface.co/ibm-granite/granite-3.1-8b-instruct)

- [11/2024] Finite adapters support allows mixed adapter usage for PEFT models.

- [11/2024] Speculative decoding TLM: the QEFFAutoModelForCausalLM model can be compiled to return more than one logit during decode for TLM.

- [11/2024] Added support for Meta-Llama-3.3-70B-Instruct, Meta-Llama-3.2-1B and Meta-Llama-3.2-3B

- [09/2024] Now we support PEFT models

- [01/2025] Added support for [Ibm-Granite-Guardian](https://huggingface.co/ibm-granite/granite-guardian-3.1-8b)

- [09/2024] Added support for Gemma-2-Family

- [09/2024] Added support for CodeGemma-Family

- [09/2024] Added support for Gemma-Family

- [09/2024] Added support for Meta-Llama-3.1-8B

- [09/2024] Added support for Meta-Llama-3.1-8B-Instruct

- [09/2024] Added support for Meta-Llama-3.1-70B-Instruct

- [09/2024] Added support for granite-20b-code-base

- [09/2024] Added support for granite-20b-code-instruct-8k

- [09/2024] Added support for Starcoder1-15B

- [08/2024] Added support for inference optimization technique continuous batching

- [08/2024] Added support for Jais-adapted-70b

- [08/2024] Added support for Jais-adapted-13b-chat

- [08/2024] Added support for Jais-adapted-7b

- [06/2024] Added support for GPT-J-6B

- [06/2024] Added support for Qwen2-1.5B-Instruct

- [06/2024] Added support for StarCoder2-15B

- [06/2024] Added support for Phi3-Mini-4K-Instruct

- [06/2024] Added support for Codestral-22B-v0.1

- [06/2024] Added support for Vicuna-v1.5

- [05/2024] Added support for Mixtral-8x7B & Mistral-7B-Instruct-v0.1.

- [04/2024] Initial release of efficient transformers for seamless inference on pre-trained LLMs.

This library provides reimplemented blocks of LLMs that make the models functional and highly performant on Qualcomm Cloud AI 100. Several models can be transformed directly from their pre-trained original form into a deployment-ready optimized form. For other models, comprehensive documentation and how-tos describe the changes needed.

- Reimplemented blocks from Transformers which enable efficient on-device retention of intermediate states.

- Graph transformations to enable execution of key operations in lower precision

- Graph transformations to replace some operations with other mathematically equivalent operations

- Handling for underflows and overflows in lower precision

- Patcher modules to map weights of the original model's operations to the updated model's operations

- Exporter module to export the model source into an ONNX Graph.

- Sample example applications and demo notebooks

- Unit test templates.
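To make the overflow and underflow item above concrete, here is a minimal, standard-library-only sketch of what happens when a value is rounded to float16 and how clamping avoids the overflow. It illustrates the general problem only; the function names are hypothetical and this is not the library's implementation.

```python
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 binary16 (float16) value


def round_to_fp16(x: float) -> float:
    """Round a Python float to the nearest float16 value.

    Magnitudes too large for float16 raise OverflowError;
    magnitudes too small silently underflow to 0.0.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def clamp_then_round(x: float) -> float:
    """Clamp to the finite float16 range first, so large values
    saturate at +/-FP16_MAX instead of overflowing."""
    clamped = max(-FP16_MAX, min(FP16_MAX, x))
    return round_to_fp16(clamped)


if __name__ == "__main__":
    # A large negative constant (e.g. a hypothetical attention-mask fill value)
    # does not fit in float16 without clamping.
    try:
        round_to_fp16(-1e9)
    except OverflowError as err:
        print("overflow without clamping:", err)
    print("with clamping:", clamp_then_round(-1e9))
    # Tiny magnitudes are flushed to zero.
    print("underflow:", round_to_fp16(1e-10))
```

Clamping is only one possible strategy; rescaling or keeping selected operations in higher precision are alternatives, and which one applies depends on the operation.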

It is mandatory for each Pull Request to include tests such as:

- If the PR adds support for a model, the tests should include successful execution of the model after the PR's changes on PyTorch and ONNX Runtime (ONNXRT). The success criterion is the MSE between the outputs of the original model and the updated model.

- If the PR modifies any common utilities, it must include tests that run the tests of all models included in the library.
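The MSE criterion above can be sketched as follows. This is an illustrative example only: the helper names and the `tolerance` value are assumptions, not the thresholds or utilities used by the repository's test suite, and real tests would compare tensors from PyTorch and ONNX Runtime rather than plain lists.

```python
from typing import Sequence


def mean_squared_error(reference: Sequence[float], candidate: Sequence[float]) -> float:
    """Mean of squared element-wise differences between two flat outputs."""
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same number of elements")
    if not reference:
        raise ValueError("outputs must not be empty")
    return sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)


def outputs_match(
    reference: Sequence[float],
    candidate: Sequence[float],
    tolerance: float = 1e-5,  # hypothetical threshold, chosen for illustration
) -> bool:
    """Return True when the MSE between the two outputs is within tolerance."""
    return mean_squared_error(reference, candidate) <= tolerance


if __name__ == "__main__":
    original_logits = [0.10, 2.50, -1.30]      # stand-in for the original model's output
    updated_logits = [0.10, 2.501, -1.30]      # stand-in for the updated model's output
    print(outputs_match(original_logits, updated_logits))
```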

# Create Python virtual env and activate it. (Recommended Python 3.10)

sudo apt install python3.10-venv

python3.10 -m venv qeff_env

source qeff_env/bin/activate

pip install -U pip

# Clone and Install the QEfficient repository from the mainline branch

pip install git+https://github.com/quic/efficient-transformers

# Clone and Install the QEfficient repository from a specific branch, tag or commit by appending @ref

# Release branch (e.g., release/v1.20.0):

pip install "git+https://github.com/quic/efficient-transformers@release/v1.20.0"

# Or build wheel package using the below command.

pip install build wheel

python -m build --wheel --outdir dist

pip install dist/qefficient-0.0.1.dev0-py3-none-any.whl
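After installing, a quick check from inside the activated virtual environment can confirm that the package is visible to Python. The sketch below assumes the importable module is named `QEfficient`; adjust the name if your installation differs.

```python
import importlib.util
import sys


def module_available(module_name: str) -> bool:
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None


def environment_report(module_name: str = "QEfficient") -> str:
    """Summarize the interpreter version and whether the module is importable.

    The default module name is an assumption about the installed package.
    """
    version = f"{sys.version_info.major}.{sys.version_info.minor}"
    status = "importable" if module_available(module_name) else "not found"
    return f"Python {version}; {module_name}: {status}"


if __name__ == "__main__":
    print(environment_report())
```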

For more details about using QEfficient via Cloud AI 100 Apps SDK, visit Linux Installation Guide

Note: More details are here: https://quic.github.io/cloud-ai-sdk-pages/latest/Getting-Started/Model-Architecture-Support/Large-Language-Models/llm/

Thanks to:

- HuggingFace transformers for work in LLM GenAI modeling implementation

- ONNX, PyTorch, and ONNX Runtime communities.

If you run into any problems with the code, please file GitHub issues directly in this repo.

This project welcomes contributions and suggestions. Please check the License. Integration with a CLA Bot is underway.

Alternative AI tools for efficient-transformers

Similar Open Source Tools

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

stm32ai-modelzoo

The STM32 AI model zoo is a collection of reference machine learning models optimized to run on STM32 microcontrollers. It provides a large collection of application-oriented models ready for re-training, scripts for easy retraining from user datasets, pre-trained models on reference datasets, and application code examples generated from user AI models. The project offers training scripts for transfer learning or training custom models from scratch. It includes performances on reference STM32 MCU and MPU for float and quantized models. The project is organized by application, providing step-by-step guides for training and deploying models.

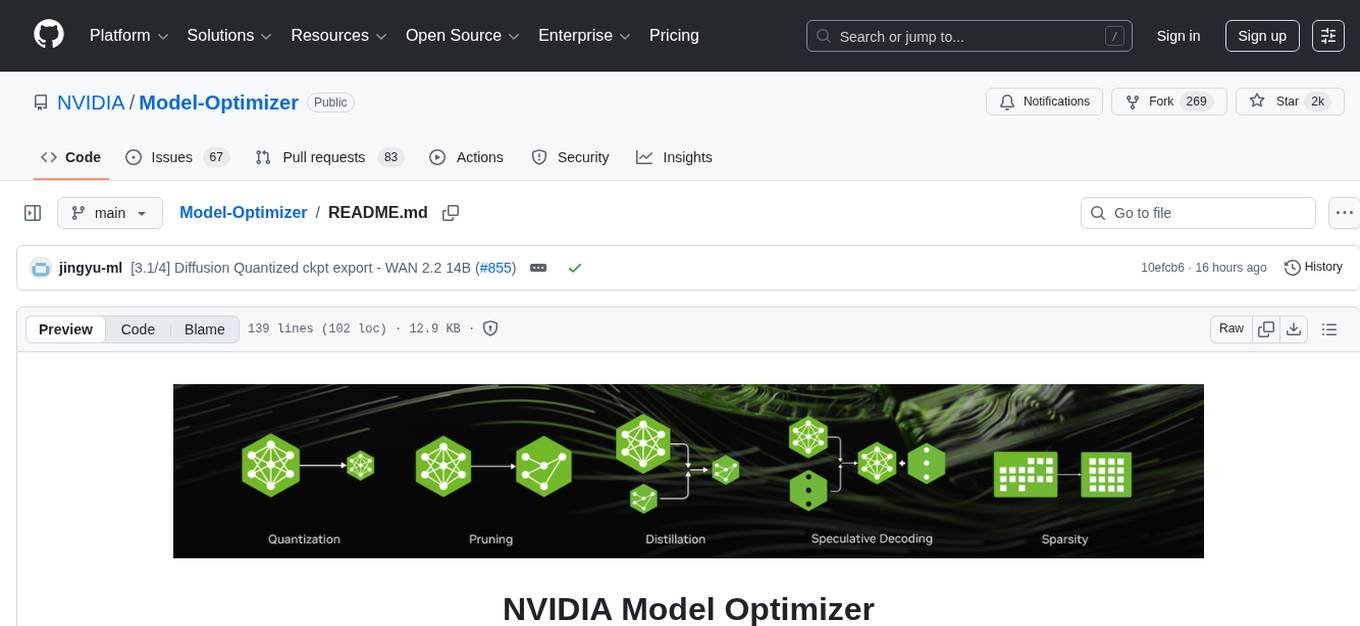

Model-Optimizer

NVIDIA Model Optimizer is a library that offers state-of-the-art model optimization techniques like quantization, distillation, pruning, speculative decoding, and sparsity to accelerate models. It supports inputs of Hugging Face, PyTorch, or ONNX models, provides Python APIs for easy composition of optimization techniques, and exports optimized quantized checkpoints. Integrated with NVIDIA Megatron-Bridge, Megatron-LM, and Hugging Face Accelerate for training required inference optimization techniques. The generated quantized checkpoint is ready for deployment in downstream inference frameworks like SGLang, TensorRT-LLM, TensorRT, or vLLM.

autogen

AutoGen is a framework that enables the development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools.

pro-chat

ProChat is a components library focused on quickly building large language model chat interfaces. It empowers developers to create rich, dynamic, and intuitive chat interfaces with features like automatic chat caching, streamlined conversations, message editing tools, auto-rendered Markdown, and programmatic controls. The tool also includes design evolution plans such as customized dialogue rendering, enhanced request parameters, personalized error handling, expanded documentation, and atomic component design.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

screenpipe

24/7 Screen & Audio Capture Library to build personalized AI powered by what you've seen, said, or heard. Works with Ollama. Alternative to Rewind.ai. Open. Secure. You own your data. Rust. We are shipping daily, make suggestions, post bugs, give feedback. Building a reliable stream of audio and screenshot data, simplifying life for developers by solving non-trivial problems. Multiple installation options available. Experimental tool with various integrations and features for screen and audio capture, OCR, STT, and more. Open source project focused on enabling tooling & infrastructure for a wide range of applications.

ten-framework

TEN is an open-source ecosystem for creating, customizing, and deploying real-time conversational AI agents with multimodal capabilities including voice, vision, and avatar interactions. It includes various components like TEN Framework, TEN Turn Detection, TEN VAD, TEN Agent, TMAN Designer, and TEN Portal. Users can follow the provided guidelines to set up and customize their agents using TMAN Designer, run them locally or in Codespace, and deploy them with Docker or other cloud services. The ecosystem also offers community channels for developers to connect, contribute, and get support.

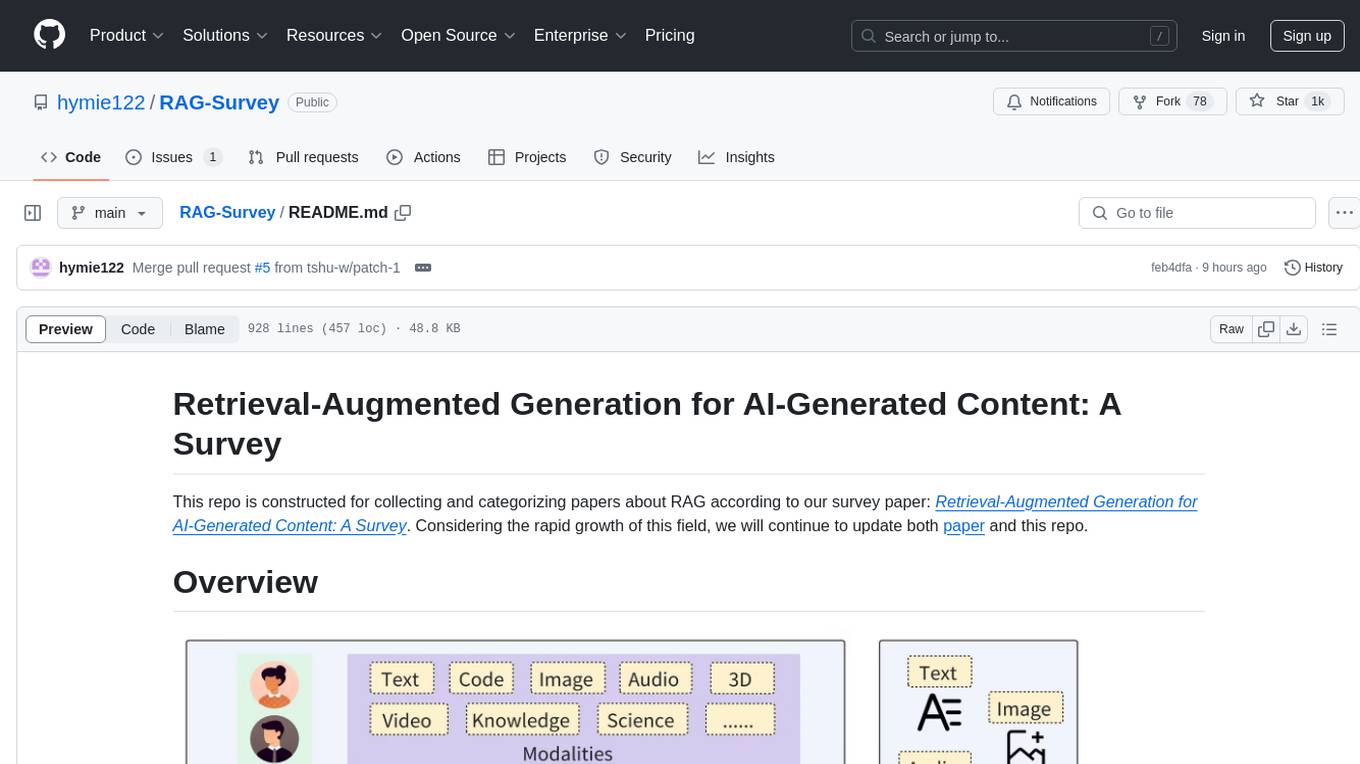

RAG-Survey

This repository is dedicated to collecting and categorizing papers related to Retrieval-Augmented Generation (RAG) for AI-generated content. It serves as a survey repository based on the paper 'Retrieval-Augmented Generation for AI-Generated Content: A Survey'. The repository is continuously updated to keep up with the rapid growth in the field of RAG.

nacos

Nacos is an easy-to-use platform designed for dynamic service discovery and configuration and service management. It helps build cloud native applications and microservices platform easily. Nacos provides functions like service discovery, health check, dynamic configuration management, dynamic DNS service, and service metadata management.

ColossalAI

Colossal-AI is a deep learning system for large-scale parallel training. It provides a unified interface to scale sequential code of model training to distributed environments. Colossal-AI supports parallel training methods such as data, pipeline, tensor, and sequence parallelism and is integrated with heterogeneous training and zero redundancy optimizer.

core

OpenSumi is a framework designed to help users quickly build AI Native IDE products. It provides a set of tools and templates for creating Cloud IDEs, Desktop IDEs based on Electron, the CodeBlitz web IDE framework, a Lite Web IDE in the browser, and Mini-App-like IDEs. The framework also offers documentation for users to refer to and a detailed guide on contributing to the project. OpenSumi encourages contributions from the community and provides a platform for users to report bugs, contribute code, or improve documentation. The project is licensed under the MIT license and contains third-party code under other open source licenses.

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

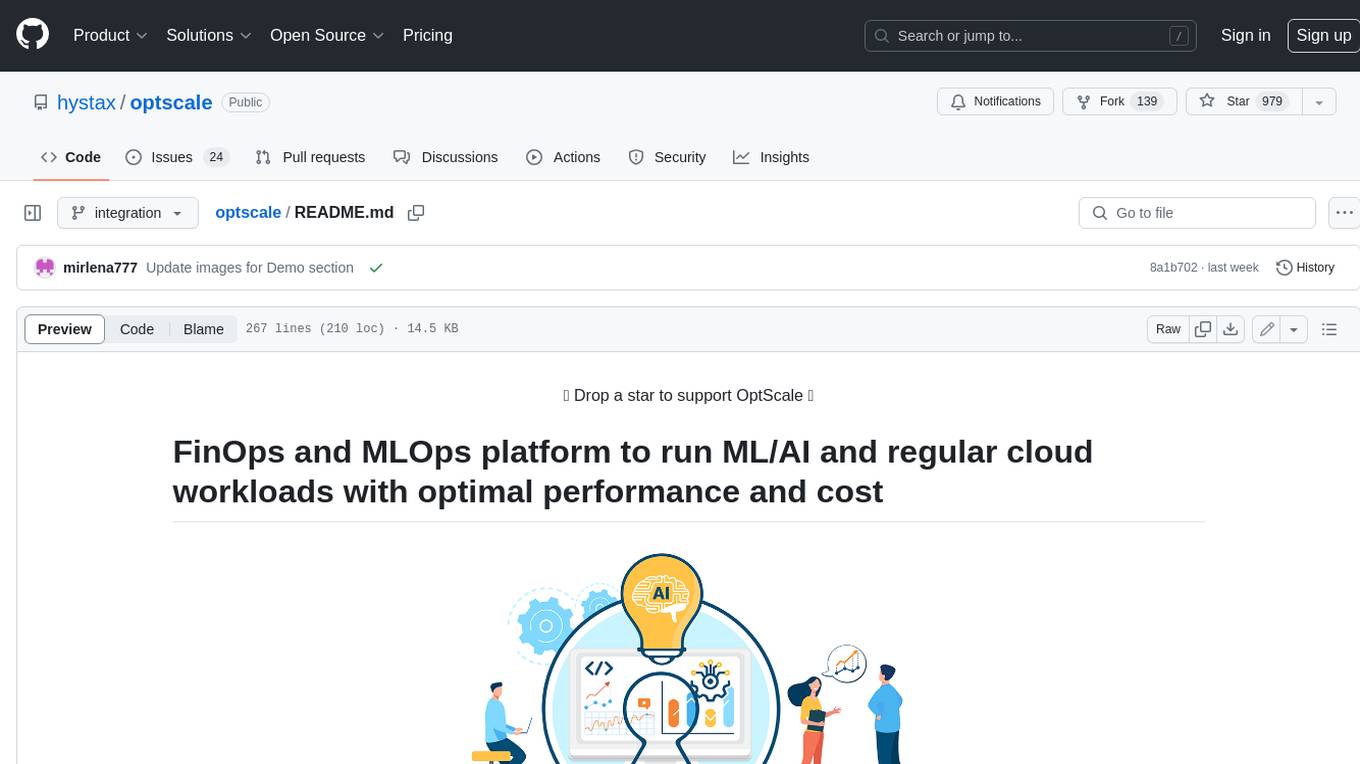

optscale

OptScale is an open-source FinOps and MLOps platform that provides cloud cost optimization for all types of organizations and MLOps capabilities like experiment tracking, model versioning, ML leaderboards.

For similar tasks

leptonai

A Pythonic framework to simplify AI service building. The LeptonAI Python library allows you to build an AI service from Python code with ease. Key features include a Pythonic abstraction Photon, simple abstractions to launch models like those on HuggingFace, prebuilt examples for common models, AI tailored batteries, a client to automatically call your service like native Python functions, and Pythonic configuration specs to be readily shipped in a cloud environment.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale

- Flexible integration with distributed computing and data processing frameworks

- Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc.), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). Its components include MLflow Tracking.

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.